While LLMs such as ChatGPT will give you any text you want and graphics generators such as Stable Diffusion will create an image based on a prompt, text-to-video AI is still an emerging field. Earlier this week, we reported on an AI Pizza Commercial that used a text-to-video tool called Runway Gen-2 for its video. However, at present, Runway Gen-2 is in an invite-only beta. So, unless you were invited, you can't try it out.

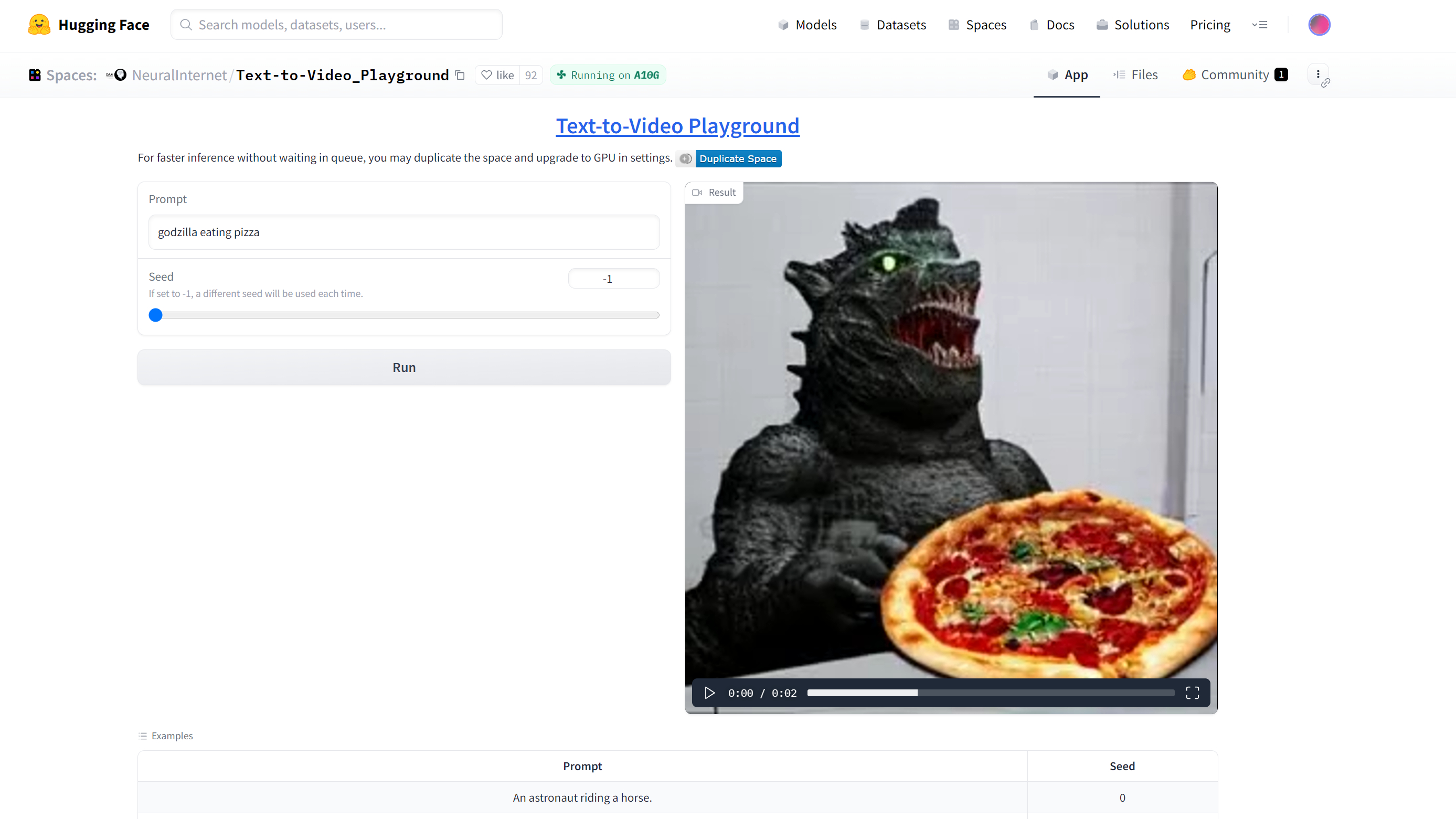

Fortunately, there is a completely free and easy-to-use tool on Hugging Face (the leading AI developer portal) called NeuralInternet Text-to-Video Playground, but it's limited to a mere two seconds, just about enough for an animated GIF. You don't even need to have a Hugging Face account to use it. Here's how.

How to Generate a 2-Second, AI Text-Video Clip

1. Navigate to the Text-to-Video Playground in your browser.

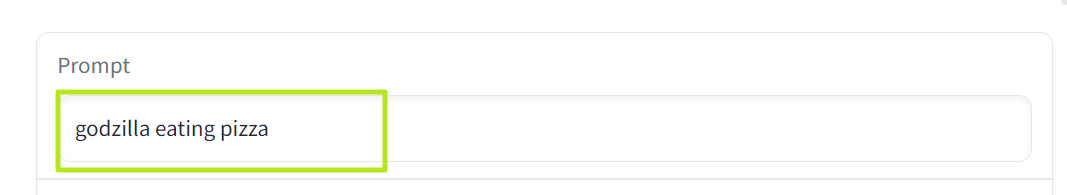

2. Enter a prompt into the prompt box or try one of the Example prompts at the bottom of the page (ex: "An Astronaut riding a horse")

3. Enter your Seed number. The Seed is a number (from -1 to 1,000,000) that the AI uses a starting point for generating the image. This means that if you use a seed of 1, you should get the same output every time with the same prompt. I recommend using a seed of -1, which gives you a random seed number each time.

4. Click Run.

The Text-to-Video Playground will then take a few minutes to generate its result. You can see the progress by looking at the Result window. Depending on the amount of traffic the server has, it may take longer.

5. Click the play button to play your video.

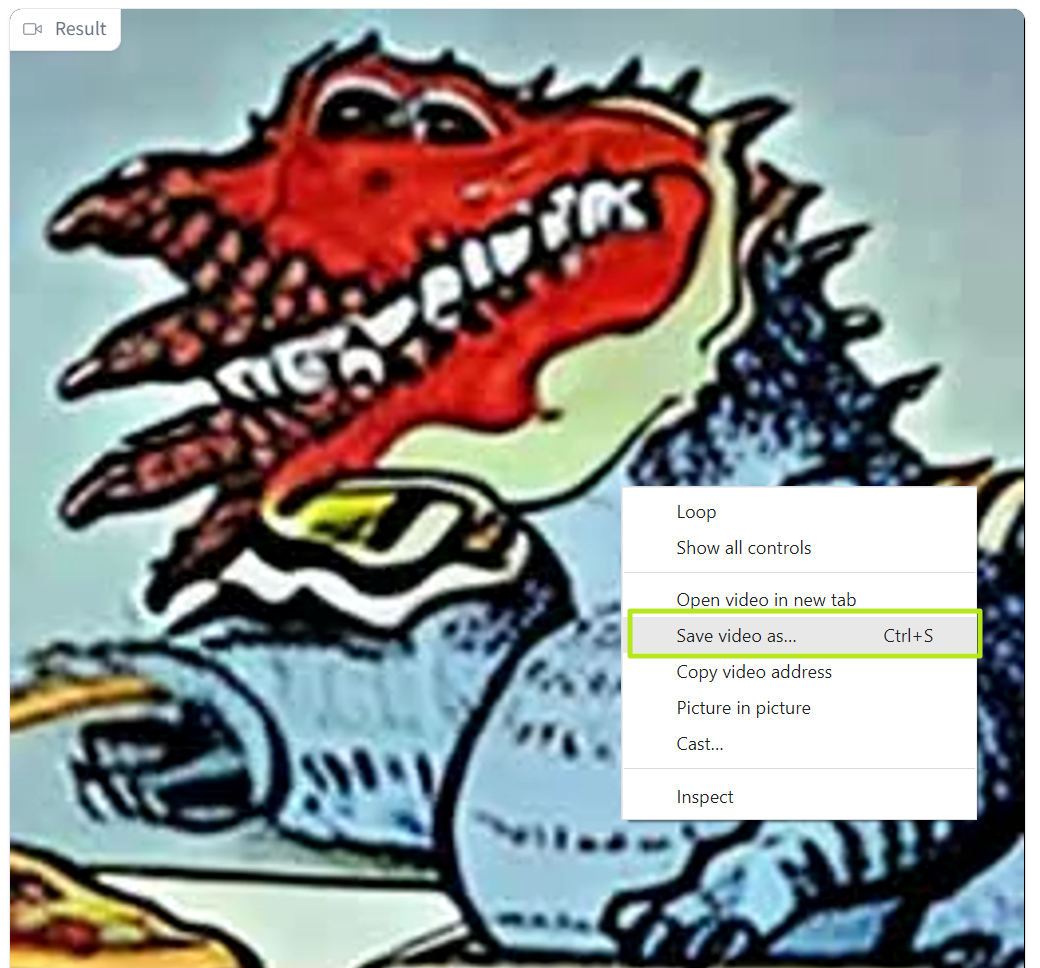

6. Right click your video and select Save Video as to download the video (as an MP4) to your PC.

The Model It's Using and the Results

The Text-to-Video playground is using a text-to-video model from a Chinese company called ModelScope, which claims that its model has 1.7 billion parameters. Like many AI models that deal with imagery, the ModelScope model has some limitations, beyond just the two second run-time.

First of all, it's clear that the training data set takes from a wide variety of web images, including some that are copyrighted and watermarked. In several examples, it showed part of a Shutterstock watermark on objects in the video. Shutterstock is a leading royalty-free image provider that requires a paid membership, but it looks like the training data just grabbed its images without permission.

Also, not everything looks as it should. For example, astute kaiju fans will notice that my Godzilla eating pizza video below shows a monster that is a giant green lizard but does not have any of the distinctive features of everyone's favorite Japanese monster.

Finally, and perhaps this goes without saying but, there's no audio in these videos. The best use for these might be converting them to animated GIFs you can send your friends. The image above is an animated GIF that I made from one of my two-second Godzilla-eating-pizza videos.

If you want to learn more about creating in AI, see our articles on how to use Auto-GPT to make an autonomous agent or how to use BabyAGI.