In the future, we may remember 2022 as the year that AI-generated art came of age.

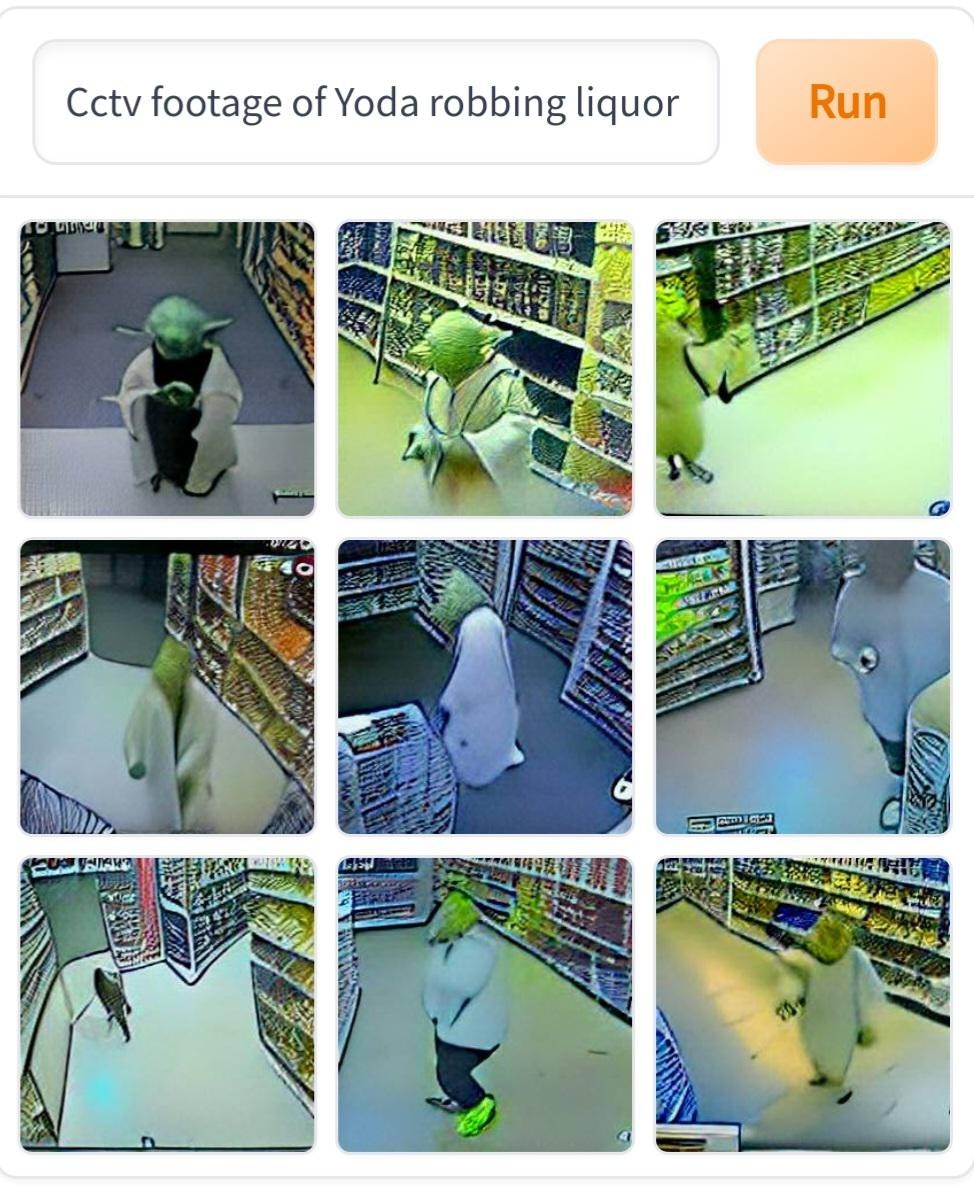

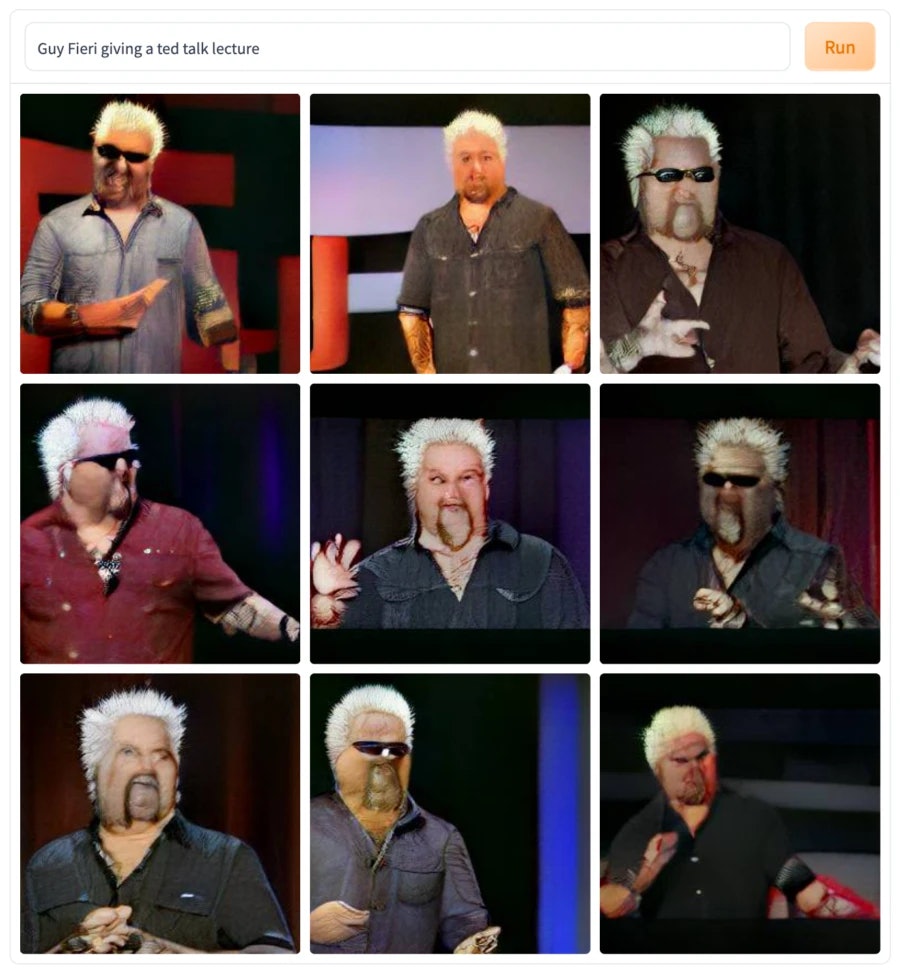

Thanks in part to DALL-E Mini (now known as Craiyon), suddenly, both programmers and regular Joes could turn a brief text prompt into a detailed image out of thin air. Social media buzzed over nine-panel collages of gamer toilets, Yoda robbing a liquor store, and mushroom clouds in the style of Monet.

This hyped-up tool was created by a machine learning engineer named Boris Dayma in July 2021 for a competition held by Google and Hugging Face, a startup that hosts open-source machine learning tools on its website. Months later, DALL-E Mini became the Internet’s beloved toy, largely thanks to its ease of access.

The concept was inspired by an art-making model called DALL-E 1, which was unveiled in 2021 by a machine learning research organization called OpenAI. While OpenAI kept DALL-E 1 under wraps, Dayma’s DALL-E Mini was open to anyone with an Internet connection.

OpenAI was founded in 2015 with an idealistic name and a promise to offer its work to AI researchers for free. The organization has since reneged on that promise, shifting to a for-profit model and inking a $1 billion partnership with Microsoft. This year, it released its more powerful, higher-budget DALL-E 2, which, unlike Dayma’s tool, costs money to use. Dayma has since renamed his project Craiyon to avoid confusion with OpenAI’s models.

Just as OpenAI did with its controversial language model, GPT-3, the company plans to license DALL-E 2 to corporate clients.

But the future of AI art does not necessarily resemble walled gardens with quotas and entrance fees. A few months after DALL-E 2’s debut, a fledgling startup named Stability AI released an open-source model called Stable Diffusion, which is free to use. Anyone can download and run Stable Diffusion themselves; the only (admittedly steep) barrier is a powerful enough computer. Along with Craiyon, Internet users now have a few free options to make the bizarre images of their dreams a reality.

To dive into the origins of this AI meme-making frenzy, we spoke with Boris Dayma, the machine learning engineer who spearheaded DALL-E Mini.

This interview has been edited and condensed for clarity.

Where did you get the idea?

At the beginning of last year, OpenAI published a blog post about DALL-E 1, which was that cool AI model that could draw images from any text prompt. There had already been some other projects along those lines, but that was the first one that looked impressive.

The only problem was that the code was not released. Nobody could play with it.

So, a bunch of people decided that they wanted to try to reproduce it, and I got very interested, and I was like, “I want to try, too. This is one of the coolest AI applications. I want to learn how that works and I want to try to do it myself.” So, when I saw that, I immediately tweeted, “OK, I’m going to build that.”

I didn’t do anything for six months.

What finally changed?

In July of last year, Hugging Face and Google … organized a community event, a kind of competition to develop AI models.

You could choose whatever subject you wanted, and in exchange, you would have access to their computers, which are much better than what people typically have at home. And you would have access to support from Hugging Face engineers and Google engineers. I thought it was a great opportunity to learn and to play with it.

I proposed the project: DALL-E Mini. Let’s try to reproduce DALL-E, or, not necessarily reproduce it, but try to get the same results, even if we build it a bit differently. Let’s see how it works, and learn, and experiment with it.

What was that first version like?

It was not what we have now. Now, the [current] model is much, much more powerful. But it was already impressive.

When it started, after one or two days, you would put “view of the beach by night,” and you would have something kind of dark. “View of the beach during the day” — you would have something clear. You couldn’t necessarily recognize the beach yet, but we were like, “Oh, my God, it’s actually learning something.”

After days of training the model, it was actually able to do landscapes quite nicely, which was very impressive. We put “snowy mountain,” and it worked. That was really exciting. Yeah, actually, we were even surprised that it worked!

But, you know, we did a lot of things very fast [during the competition], and there was still so much to optimize.

Only many months later did it become popular. What do you think caused it to explode in popularity?

I was surprised by how popular it became. But I think it’s because, as we made the model public, some people realized it could make, for example, funny images and memes and things like that. They realized that certain famous personalities were actually recognizable, even though they’re not necessarily drawn perfectly. You can recognize them and put them in funny situations, and the model is able to do that.

It reached a point where it was suddenly able to compose more complex prompts, and also able to recognize more people. I think that made it go viral.

What did you think of the funny pictures?

It was something I didn’t expect. All along the way, when I was developing the model, my test prompts were very basic. My most creative prompt was “the Eiffel Tower on the moon.” Without the broader audience using it, I might never have noticed that it could do such creative things.

People have been using the model in all kinds of situations. … Sometimes, I’m surprised by what it can draw. Recently, for example, people have been using “octopus assembling Ikea furniture.” Or, like, “a store being robbed by teddy bears, view from CCTV camera.” It’s crazy that it works at all.

Does Craiyon still have a place in a world with DALL-E 2?

I think there are a lot of advantages.

The first is that it democratizes, in a way, access to AI technology. Creating images is, I think, a really cool application, whether you do it for work, because it’s useful for you, or just for entertainment. Just having people have fun creating funny memes, I think that has a big value.

Giving access to everybody, rather than only to the people who can afford [DALL-E 2] or the select group of users who have access, lets people benefit equally from the same technology, I think. Having it free is something that’s very important to us as well.

Also, one of the issues when only a few people can access a big model is that there’s a greater danger of deepfakes, et cetera, because only a few people are able to create it and control it.