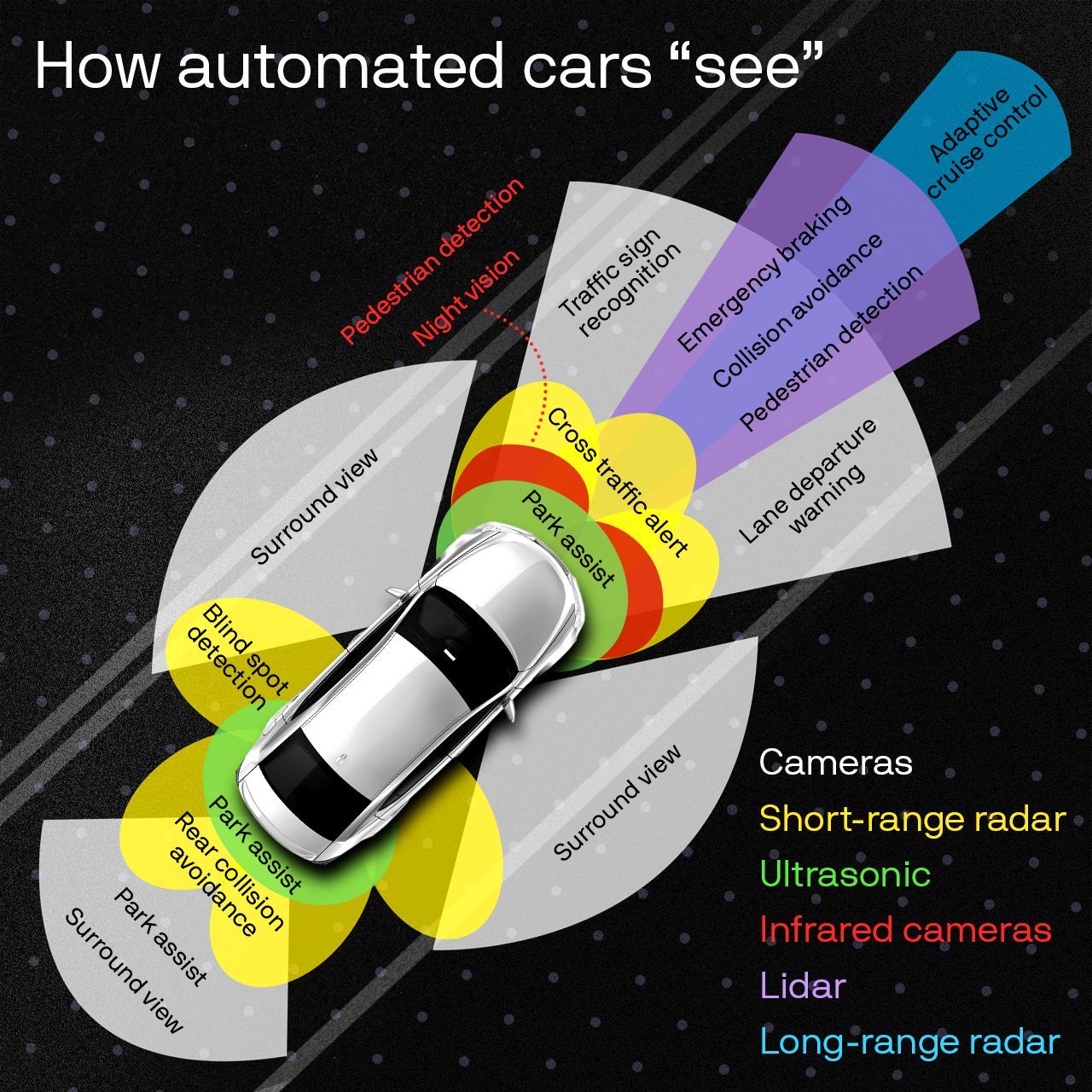

It takes a variety of overlapping sensors working together for self-driving cars to accurately perceive the world around them.

Why it matters: Cars that drive themselves — whether in limited highway settings or in geofenced urban areas, like robotaxis — require superhuman vision, along with sophisticated prediction and decision-making capabilities.

How it works: Each type of sensor has strengths and weaknesses. Putting them together in an AI-driven process called "sensor fusion" helps cars make sense of the constant flow of information.
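The article doesn't say which fusion method any carmaker uses, and production systems rely on Kalman filters and neural networks. Still, the core idea can be shown with a classical statistical stand-in rather than the AI-driven approach described: inverse-variance weighting. Each sensor's reading counts in proportion to how precise it is. The function name and all readings and variances below are hypothetical, chosen only for illustration.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) readings by inverse-variance weighting.

    Each reading is weighted by 1/variance, so more precise sensors count more.
    Returns the fused value and its variance, which is smaller than any input's.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical distance readings (meters) to the same car ahead, with made-up
# variances: the radar reading is coarser, the lidar reading more precise.
radar_reading = (39.5, 1.0)
lidar_reading = (40.1, 0.04)
distance, variance = fuse_estimates([radar_reading, lidar_reading])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
# prints: fused distance: 40.08 m, variance: 0.038
```

The fused estimate leans toward the more precise lidar reading, and its uncertainty is lower than either sensor's alone. That is the payoff of combining overlapping sensors.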

Multiple cameras with different ranges and purposes help cars get a 360-degree view of their environment.

- But cameras can't directly measure distance, and they aren't great in rain and fog. Infrared cameras can help with night vision.

Radar supplements camera views by sending out radio waves that bounce off objects, revealing how far away they are and how fast they're moving.

- Yet radar's resolution is low, so it can't tell what those objects are.
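The two quantities radar reports come from simple physics. Distance comes from how long an echo takes to return, and speed comes from the Doppler shift in the echo's frequency. The sketch below uses a simplified pulse-timing view. Many automotive radars actually use frequency-modulated continuous waves, so treat this as an illustration of the principle rather than how any particular unit works. The 77 GHz carrier default is an assumption, and the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def radar_range(round_trip_s):
    """Distance to a target from the echo's round-trip time.

    The wave travels out and back, so divide the total path by two.
    """
    return SPEED_OF_LIGHT * round_trip_s / 2


def radial_speed(doppler_shift_hz, carrier_hz=77e9):
    """Closing speed (m/s) from the echo's Doppler shift: v = f_d * c / (2 * f0).

    A positive shift means the target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2 * carrier_hz)


print(f"{radar_range(0.4e-6):.1f} m")      # echo back after 0.4 microseconds: 60.0 m
print(f"{radial_speed(5_136.0):.2f} m/s")  # 5,136 Hz shift at 77 GHz: 10.00 m/s
```

Note what the sketch doesn't produce: any notion of *what* the target is. That gap is why radar is paired with cameras.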

Lidar paints a detailed 3D picture of a car's environment by firing rapid laser pulses and timing how long they take to bounce back.

- Once prohibitively expensive, lidar is now being used even in assisted-driving systems with lower levels of autonomy.

Ultrasonic sensors mimic the way bats orient themselves, transmitting high-frequency sound waves to gauge the distance to nearby objects, like parked cars.
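Like a bat's echolocation, an ultrasonic sensor turns an echo's round-trip time into a distance. The same timing arithmetic underlies lidar, only with light instead of sound. Here is a minimal sketch. It assumes the common textbook approximation for the speed of sound in dry air (about 331.3 m/s plus 0.606 m/s per degree Celsius). The function names are hypothetical.

```python
def speed_of_sound(temp_c=20.0):
    """Approximate speed of sound in dry air (m/s) at a temperature in Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_distance(echo_time_s, temp_c=20.0):
    """Distance (m) to an obstacle from an ultrasonic echo's round-trip time.

    Sound travels to the obstacle and back, so halve the total path.
    """
    return speed_of_sound(temp_c) * echo_time_s / 2


# A 6-millisecond echo on a mild day vs. a freezing one.
print(f"{echo_distance(0.006):.3f} m at 20 C")
print(f"{echo_distance(0.006, temp_c=0.0):.3f} m at 0 C")
```

Because sound is slow and fades quickly, this works well only at short range, which is why these sensors handle parking rather than highway driving. The temperature term matters too: the same echo time maps to a distance a few percent shorter in freezing air.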

Editor's note: This story was originally published on March 28.