Fresh off firing up the Memphis Supercluster, billed as "the most powerful AI cluster in the world," Elon Musk has now shared pictures of a supercomputer cluster built on Tesla's homegrown Dojo AI accelerators. On Tesla's earnings call, he also said the company would double down on Dojo development and deployment because of the high price of Nvidia's GPUs.

Besides opening the xAI facility in Tennessee, which aims to put 100,000 Nvidia H100 GPUs on a single fabric, Musk said that Dojo 1 will be up and running by the end of the year. He said it would offer training capacity roughly equivalent to 8,000 Nvidia H100 chips, which he described as "Not massive, but not trivial either."

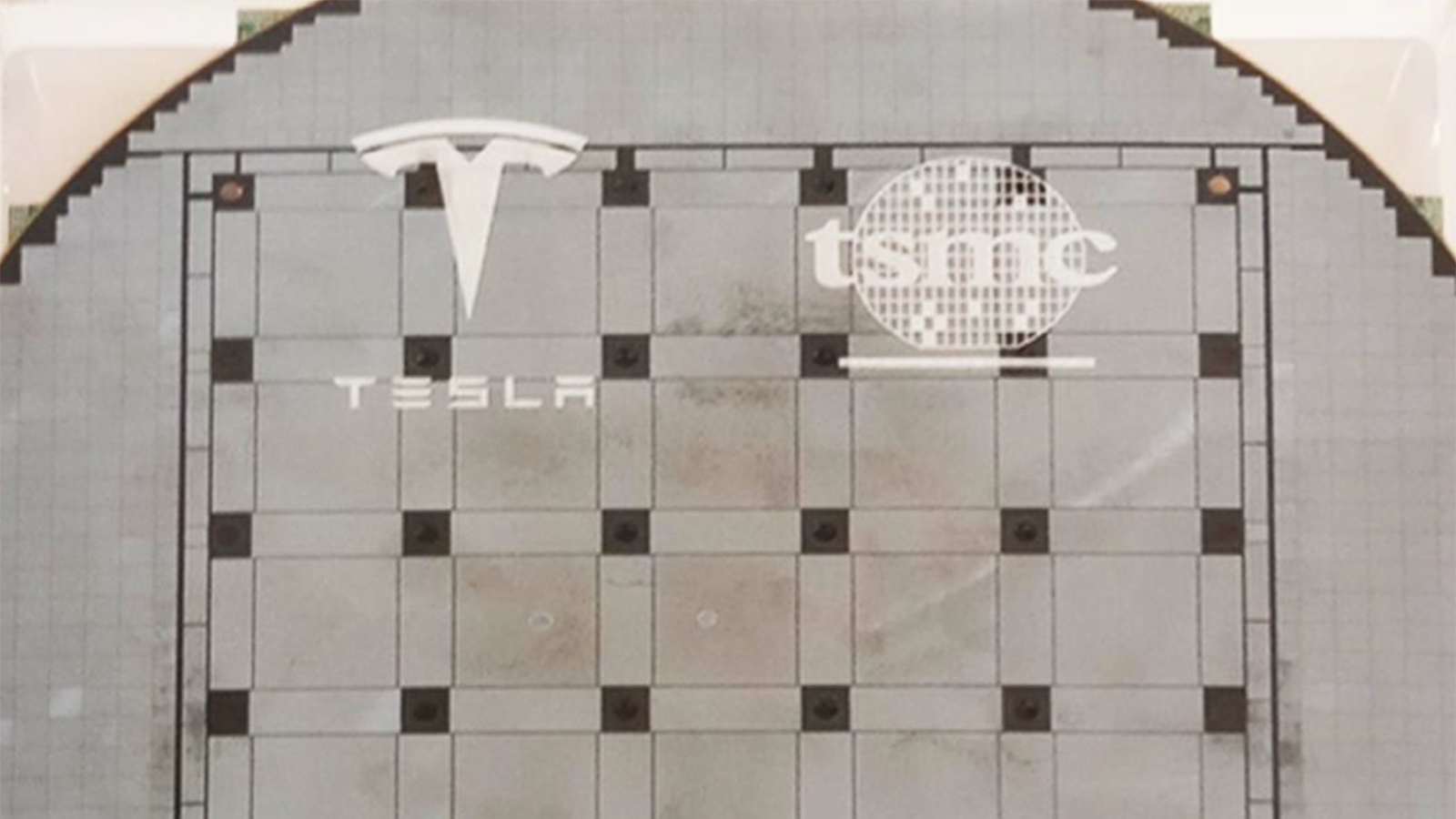

Dojo pics pic.twitter.com/Lu8YiZXo8c (July 23, 2024)

Musk first unveiled the Dojo D1 chip in 2021 with a performance target of 322 teraflops. Then, in August 2023, Tesla was spotted hiring a Senior Engineering Program Manager for Data Centers, typically one of the first hires an organization makes when planning its own data centers. The following month, Tesla doubled its orders for the Dojo D1, which suggests confidence in the chip's performance.

By May 2024, the Dojo processor was reportedly in mass production. The chips now appear to have reached the U.S., and on July 23, Musk shared pictures of the Dojo supercomputer installed in its data center.

And Dojo 1 will have roughly 8k H100-equivalent of training online by end of year. Not massive, but not trivial either. (July 23, 2024)

Dojo is built from system-on-wafer processors, each a 5-by-5 array of 25 high-performance dies. The dies are interconnected using TSMC's integrated fan-out (InFO) technology so they can act as a single processor and run more efficiently than comparable multi-processor machines.

TSMC manufactures the Dojo chips for Tesla, and Musk will run them alongside his Nvidia-powered Memphis Supercluster. The two serve different purposes, however: the Tennessee facility is owned by xAI and is primarily used to train Grok, while the Dojo chips are tuned for machine learning on video, since they will be used to train Tesla's Full Self-Driving technology on video data gathered from Tesla cars.

Tallying all the hardware on hand, Musk said he would have 90,000 Nvidia H100 chips, 40,000 of Tesla's own AI4 chips, and the Dojo D1 wafers running by the end of 2024. That much computing power shows how heavily the billionaire is investing in artificial intelligence.