AWS re:Invent 2023 is a wrap, after a packed few days in Las Vegas for the computing giant's annual event.

The three-day event saw keynotes from AWS CEO Adam Selipsky; Swami Sivasubramanian, Vice President of AWS Data; and Dr. Werner Vogels, Vice President and CTO, Amazon.com, with a huge host of news, announcements and more.

If you missed any of the news or updates from AWS re:Invent 2023 so far, check out everything in our live blog below, or read our coverage from the event:

- AWS CEO - the cloud is for everyone, so don’t stop reinventing

- Amazon Q is the AWS AI-powered chatbot looking to solve all your workplace problems

- Amazon unveils next-generation Graviton4 and Trainium2 chips to power the business AI future

- Amazon wants to help your business create images using AI

- Amazon wants you to ditch keycards, and scan your palm instead to get into the office

- AWS might have just made contact centers less awful for everyone

- AWS reveals a new pocket-sized PC that is strictly for business

Happy re:Invent 2023! After arriving last night and getting through a jet-lagged day, we're ready for one more night's sleep before the main event kicks off tomorrow.

That's not to say AWS has been cruising into the event though - today it announced Amazon WorkSpaces Thin Client, a low-cost, enterprise-focused device that should help businesses everywhere.

Welcome to the official day one of AWS re:Invent 2023! We're (relatively) well-rested and about to get caffeinated, so are nearly ready for the big kick-off shortly.

This morning kicks off with a keynote from AWS CEO Adam Selipsky, who will no doubt be unveiling a host of new products and services, as well as bringing customers and other friends on stage.

The keynote begins at 08:00am PT, so there's just over an hour to go.

In true Vegas tech conference fashion, it's 07:35am and we're being assaulted by full-throttle '80s rock covers (although the band are pretty decent).

Mr Brightside anyone?

Now we have a Back in Black vs Wonderwall mash-up...if this wasn't Vegas, I'd be confused.

20 minutes until the keynote....

With a few minutes to go, it's a packed out keynote theatre full of AWS fans!

The band is closing out with "My Hero" by Foo Fighters - requested by Adam Selipsky himself apparently!

And with that, the band is done, and it's keynote time!

AWS CEO Adam Selipsky takes to the stage to rapturous applause, welcoming us to the 12th re:Invent event - there are apparently 50,000 people here this week.

Selipsky starts with a run-down of the customers AWS is working with, from financial to healthcare, manufacturing and entertainment.

Salesforce gets a special mention, with Selipsky highlighting the newly-announced partnership between the two companies. Salesforce is expanding its "already large use" of AWS, he notes, with Bedrock and Einstein working together to help developers build generative AI apps faster.

Salesforce is also putting its apps on the AWS marketplace.

But start-ups are also choosing AWS, Selipsky notes, with over 80% of unicorns running on the company's platform - from genomics mapping to guitar-making.

"Reinventing is in our DNA," Selipsky notes, adding that's how cloud computing as a whole came about.

This has evolved to making sure companies of all sizes have access to the same technology, no matter who they are.

AWS now extends to 32 regions around the world - no other cloud provider offers that, Selipsky notes.

This extends to multiple Availability Zones (AZs) in each region, meaning regions can remain in operation even in case of emergencies or outages.

"Others would have you believe cloud is all the same - that's not true," he notes.

AWS offers three times the number of data centers compared to the next closest cloud provider, 60% more services, and 40% more features - that's what helps it stand apart from the competition, Selipsky says.

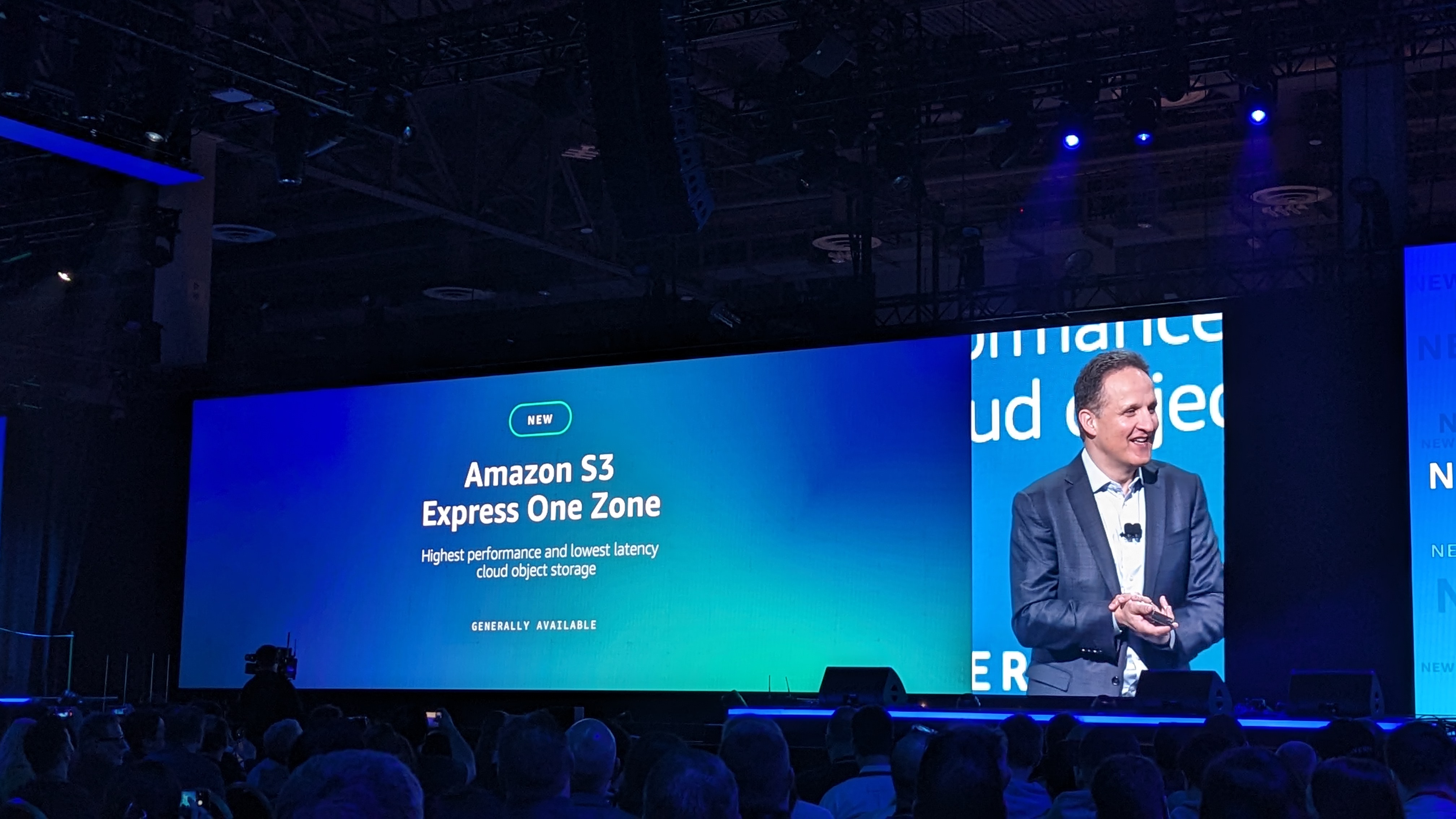

Selipsky runs through some of the history of Amazon storage, looking back at how Amazon S3 has evolved.

Now, it's time for the next step forward in this journey, he says - Amazon S3 Express One Zone.

Designed for your most-accessed data, it supports millions of requests per minute - and is up to 10x faster than the existing S3 storage. It looks like a huge step forward for users everywhere.

Now, we move on to general-purpose computing - it's Graviton time.

Selipsky looks back to the initial launch in 2018, before Graviton2 in 2020 and Graviton3 in 2022.

Now though, it's time for an upgrade, namely AWS Graviton4 - the most powerful and energy-efficient chip the company has ever built, Selipsky says.

The chips are 30% faster than Graviton3, 40% faster for database applications, and 45% faster for large Java applications.

All this adds up to a full suite designed for helping your business, Selipsky says.

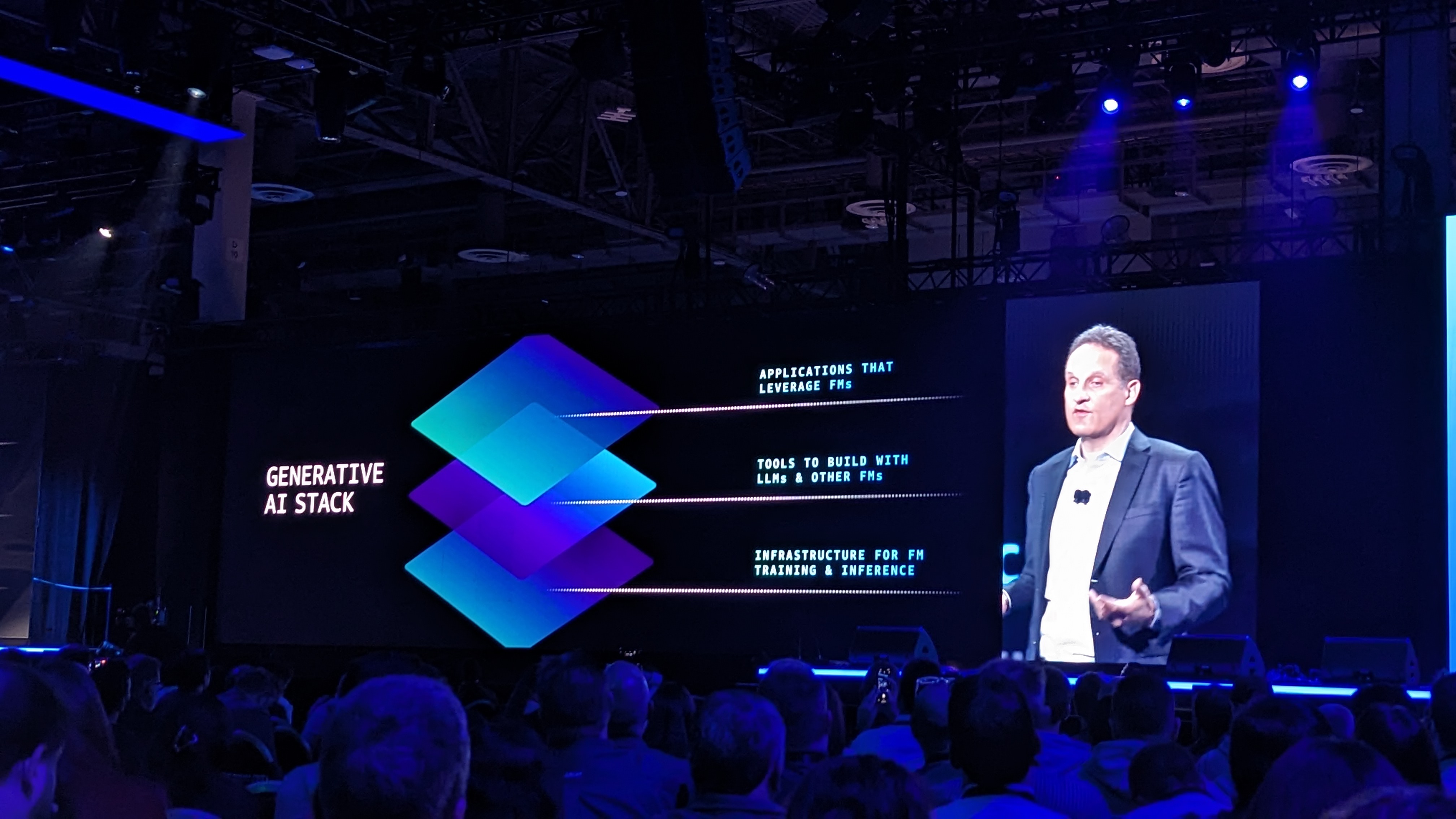

Here we go - it's AI time.

"Generative AI is going to reinvent every application we interact with at work or home," Selipsky says, noting how AWS has been investing in AI for years, using it to build tools such as Alexa.

The Generative AI stack has three layers, he highlights, turning to infrastructure first. AI uses huge compute power, so getting the most out of your stack is vital.

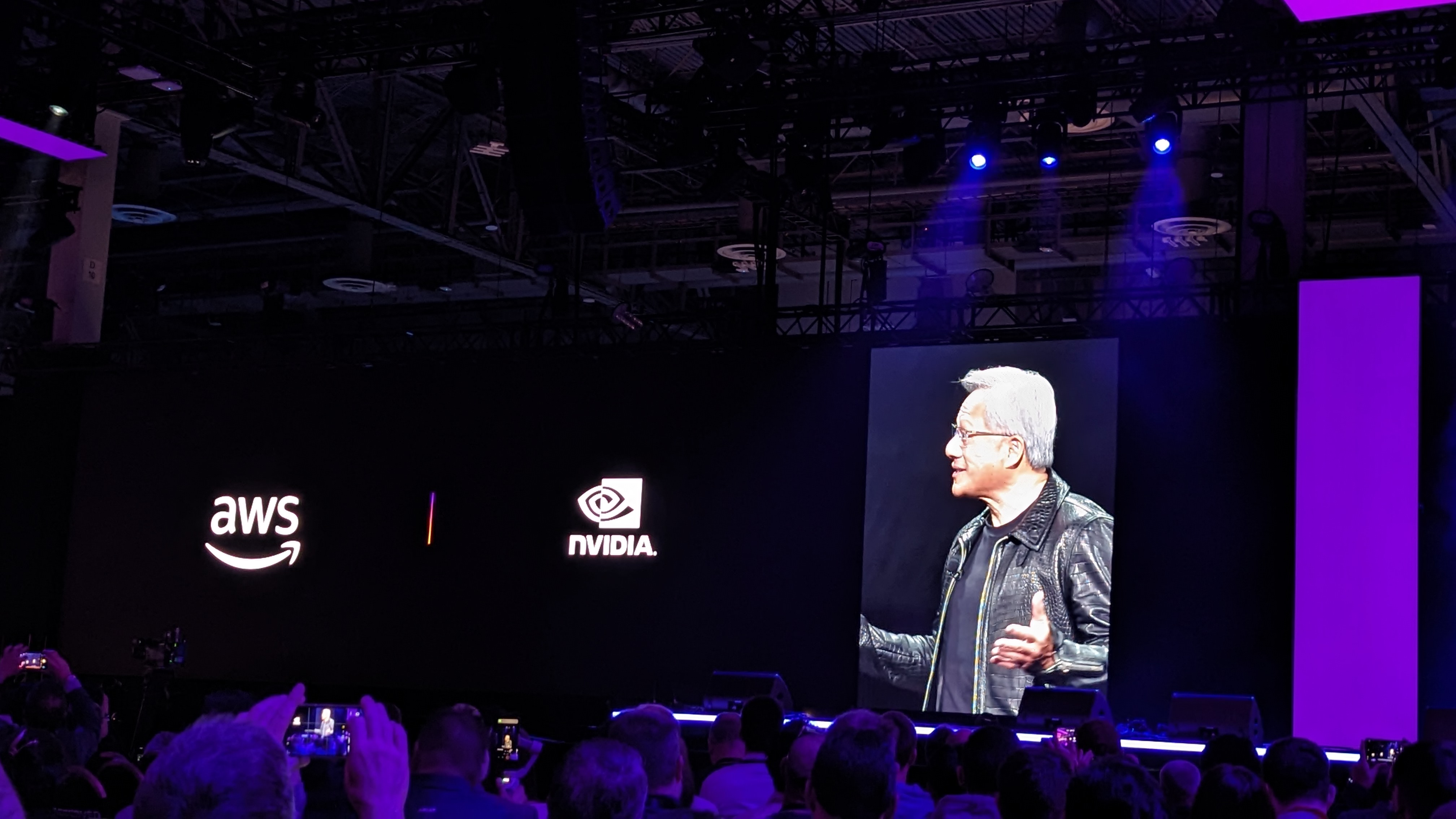

Selipsky highlights AWS' close work with Nvidia to help further the training and development of AI.

But you also need high-performance clusters alongside GPUs, with AWS providing incredibly advanced and flexible offerings that allow customers to scale up when needed.

All of this is built on AWS Nitro, which "reinvented virtualization", allowing for efficiency and productivity gains all round, Selipsky says.

Selipsky reveals an expansion of AWS and Nvidia's relationship, and introduces a special guest - Nvidia CEO Jensen Huang!

Huang announces a deployment of a whole new family of GPUs - including the new Nvidia GH200 Grace Hopper superchips, offering a huge step forward in power and performance when it comes to LLMs and AI.

Nvidia is deploying 1 zettaflop of computing capacity per quarter - a staggering amount.

There's a second big announcement - Nvidia DGX Cloud is coming to AWS as well.

This is Nvidia's AI factory, Huang notes - "this is how we develop AI".

DGX Cloud will be the largest AI factory Nvidia has ever built - including running Project Ceiba, 16,384 GPUs connected into one AI supercomputer delivering a stunning 65 exaflops of power. "It's utterly incredible," Huang notes.

With those two (frankly ridiculous) announcements, Huang departs to huge applause.

Selipsky now moves on to capacity - getting access to all this compute.

Customers often need clustered capacity brought together, but don't need all of it all the time - fluctuating demands call for short-term clustered capacity, but no main cloud provider provides this.

Luckily, the new Amazon EC2 Capacity Blocks for ML will now make this possible, letting customers scale up to hundreds of GPUs in a single offering, meaning they'll have the capacity they need, when they need it.

Moving on to the silicon level, Selipsky focuses on EC2 Inf2 Instances, which now deliver higher throughput and lower latency than ever before.

AWS Trainium is seeing major uptake among companies looking to train generative AI models - but as models get bigger, the needs get greater.

To cope with this, Selipsky reveals AWS Trainium2, four times faster than the original product, making it better for training huge models with hundreds of billions of parameters.

Now it's on to Amazon SageMaker, which has played a huge role in AWS's ML and AI work over the years, and now has tens of thousands of customers across the world, training models with billions of parameters. But there's no update for this platform today...

Now, moving on from infrastructure to models. Selipsky notes that customers often have many pertinent questions about how best to implement and use AI, and AWS wants to help with its Bedrock platform.

Allowing users to build and scale generative AI applications with LLMs and other FMs, Bedrock also allows significant customization and security advantages. Over ten thousand customers are using Bedrock already, and Selipsky sees this only growing and expanding in the future.

"It's still early days," Selipsky notes, highlighting how different models often work better in different use cases - "the ability to adapt is the most important ability you can have."

In order to help deal with this, AWS is looking to expand its offerings for customers - "you need a real choice", Selipsky notes.

To do this, he emphasizes how Bedrock can provide access to a huge range of models - including Claude maker Anthropic.

He welcomes Anthropic CEO and co-founder Dario Amodei to the stage to talk more.

Amodei notes that AWS will be Anthropic's main cloud provider for mission-critical workloads, allowing it to train more advanced versions of Claude for the future.

After a glowing review from Amodei, he heads off, and Selipsky turns to Amazon Titan Models - the AI models Amazon is creating itself, with 25 years of experience with AI and ML.

These models can be used for a variety of use cases, such as text-based tasks, copy editing and writing, and search and personalization tools.

More Titan models will be coming soon - but more on that in tomorrow's keynote, apparently...

Using your own data to create a model that's customized to your business is vital, Selipsky notes, with Amazon Bedrock and new fine-tuning tools allowing just that, meaning the model learns from you and your data to help create tailored responses.

There's also a new Retrieval Augmented Generation (RAG) with Knowledge Bases release on offer, allowing even further customization.

Now, how do you actually use FMs to get stuff done? Selipsky says this can often be a complex process, but fortunately there's the new Agents for Amazon Bedrock tool, which handles multi-step tasks across company systems and data sources, allowing models to be more customized and optimized for specific use cases.

But models need to be secure and private, and Amazon Bedrock can allow that, the same as all other AWS services, Selipsky says.

He emphasises that no customer data will ever be used to train the underlying model, never hits the public web, and stays in the AWS network.

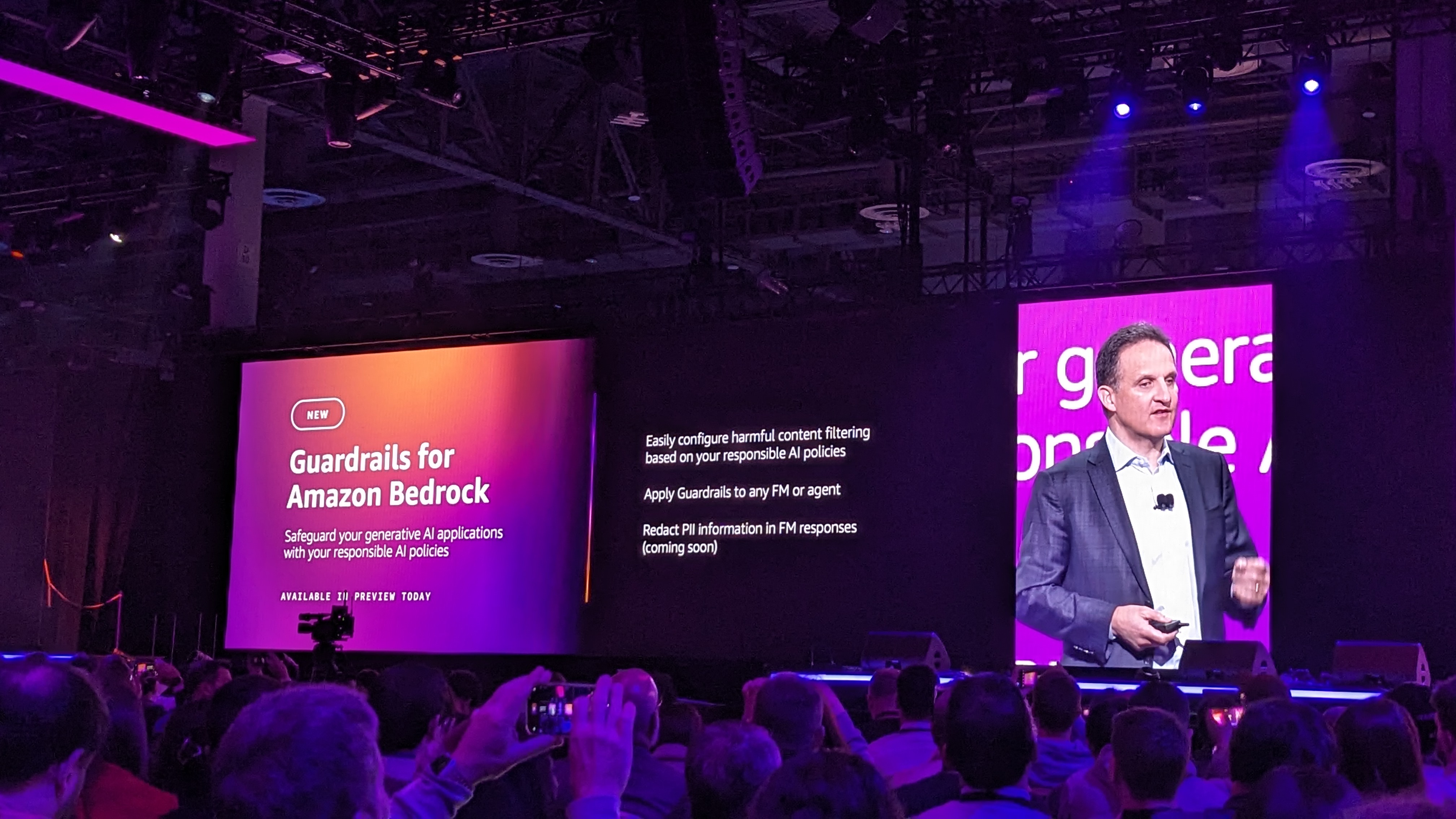

AI also needs to be responsible, Selipsky says, but enforcing this will mean "unprecedented collaboration" among all kinds of stakeholders.

AWS has been at the forefront of this, he notes, highlighting its work with US and UK governments in recent weeks.

To go further, Selipsky reveals Guardrails for Amazon Bedrock, which allows companies to set up responsible AI policies to safeguard users.

Now it's time for another big customer spotlight, with Pfizer's Lidia Fonseca, chief digital and information officer, on stage.

Pfizer is using AWS to launch a specific data cloud platform that gives researchers and scientists much quicker and smoother access to historical medical data, greatly speeding up research.

Now we move on to employees, and helping close the skills gap, especially when it comes to cloud computing.

Selipsky notes that AWS is looking to train 29 million employees by 2025, and has many other initiatives in operation, including over 100 AI and ML courses, as well as AI Ready, its commitment to help millions of students learn more AI skills, and AWS AI and ML scholarships for young entrepreneurs across the world.

Selipsky says there is a huge opportunity from infusing generative AI into the apps you use every day.

"We believe generative AI has the potential to transform every customer interaction we know," he says.

A variety of tasks can be simplified by AI, he notes, including writing code, via Amazon CodeWhisperer, which provides AI-powered code suggestions.

AWS is making CodeWhisperer code suggestions free for individual users, but in order to help developers even further, the company is launching new Amazon CodeWhisperer customization capabilities.

"But we're just getting started," Selipsky notes, highlighting AI chat applications as another major target.

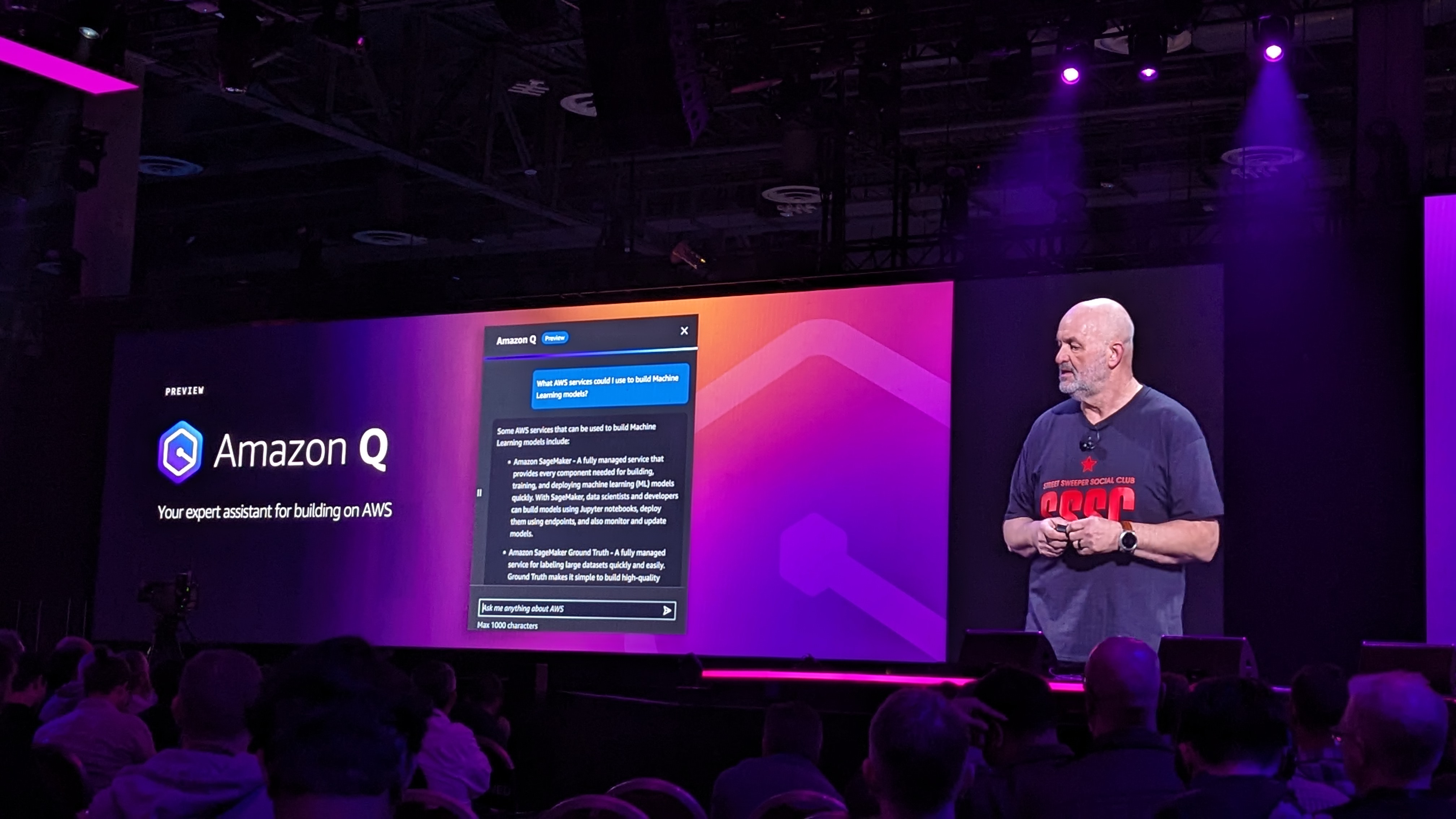

Often, these models aren't smart enough to know about specific companies or workers, your preferences or data sources - so AWS is announcing Amazon Q, a new generative AI-powered assistant that's tailored to your workplace.

Trained on 17 years of AWS knowledge, Amazon Q can be specifically tailored and customized to the precise tasks you encounter at work.

The Q Architect service can also help you research, troubleshoot and analyze issues across your business, saving time and stress, and provide suggestions on optimizing AWS infrastructure queries, such as EC2 instances.

Amazon Q is also going to be in the IDE for developers, working alongside CodeWhisperer for further code suggestions, helping reduce "hours of work...it'll do all the heavy lifting for you," Selipsky says.

Amazon Q will be available today as a preview, but will get a wider release soon.

AWS also wants to help developers who are swamped with upgrades, especially those struggling with migrations and updates when it comes to language versions for platforms such as Java.

To that end, Amazon Q Code Transformation can complete upgrades in just a fraction of the time - not just Java, but also migration from Windows to Linux.

But what about the less technical workers in your company?

Selipsky notes how workers across multiple roles struggle to find the information they need and turn it into the results they need.

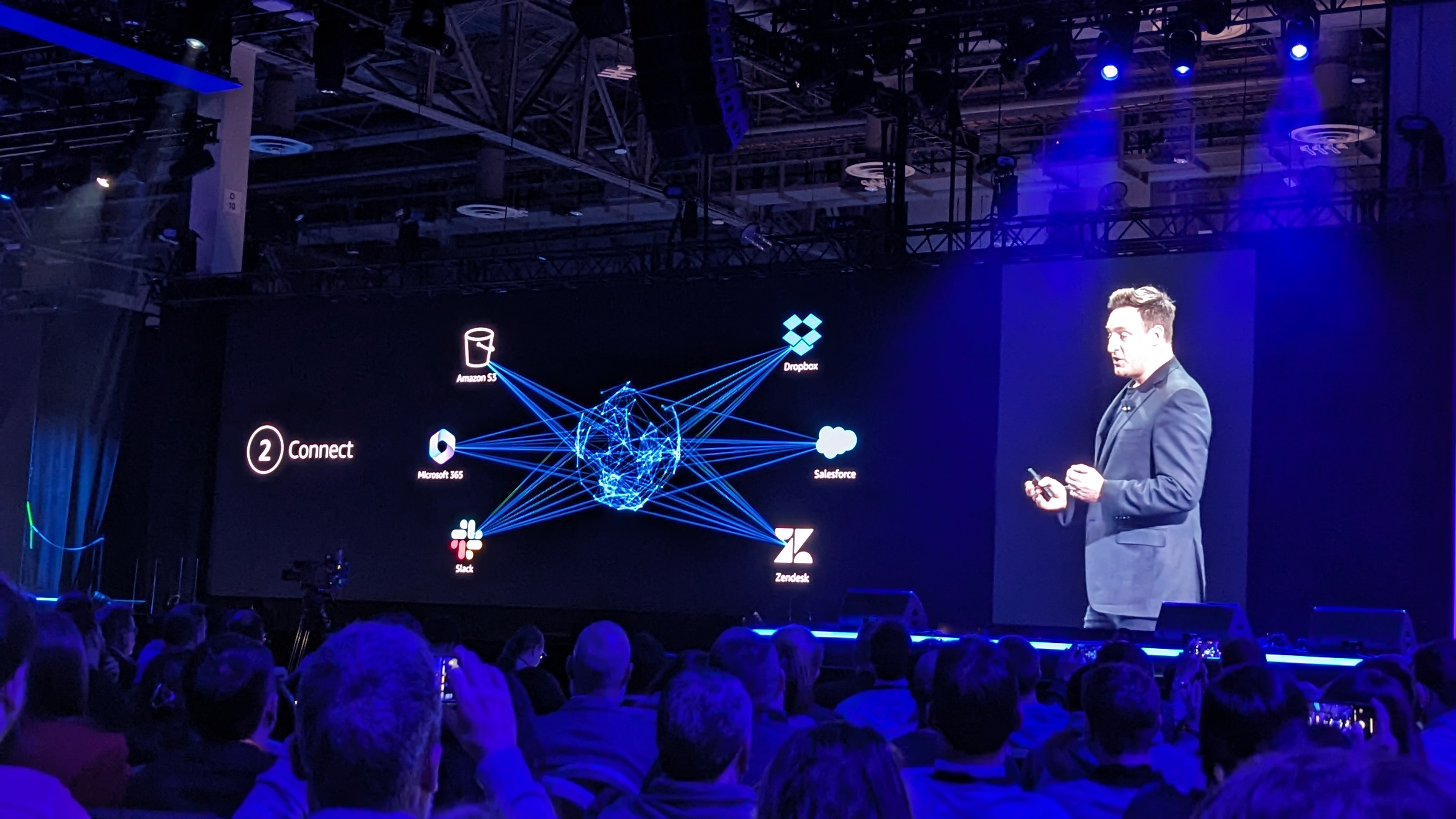

Amazon Q will also be "your business expert," he says, allowing workers to ask complex questions relevant to their precise roles. It connects to over 40 popular tools such as Salesforce, Gmail, Slack, Atlassian and Microsoft 365.

We're then treated to a demo from AWS' head of product Matt Wood, who shows us how Q is able to use generative AI to allow users to ask complex questions based on company (and even role) specific tasks and responsibilities.

It can also take action on your behalf, updating processes and notifying co-workers of any changes or upgrades.

"Amazon Q brings AI into the business world," Wood notes.

Amazon Q can also help workers who may not be as clued-up, allowing natural language queries that will track down the data or information needed much faster, even if your question is just a single word.

The platform will also help create graphs, tables and even reports - and customer service agents will be able to get better help using Q integrations with Amazon Connect, the company's contact center-specific platform, which will provide real-time response generations as the call develops.

"This is just the start of how we're going to revolutionize the future of work," Selipsky notes, saying that it's still early in the game.

He now turns to data itself - which is more important than ever before - and he welcomes Stephan Durach from BMW to talk more about personalization and customization using AWS and AI.

Selipsky is back, and looking at how important data in generative AI really is.

"Your data is your differentiator," he notes, and ensuring it is all up to date and relevant is paramount - as is getting your data in the cloud.

Different organizations across a business will use different kinds of data from different sources - but there's no one tool to help smooth this out.

What you need is a set of tools to allow you to get value and access to your data - if only there was something like that around, hey.

Wouldn't you know it - Selipsky outlines how Amazon has provided the most comprehensive set of data services around, from data lakes to Aurora to analytics to databases via Redshift.

Another new announcement - three new zero-ETL integrations with Amazon Redshift, giving users access to more databases.

ETL is a big pain point for many businesses, and Selipsky notes that AWS is going even further with the launch of Amazon DynamoDB zero-ETL integration with Amazon OpenSearch Service.

To help with governance, there's an update to Amazon DataZone, which now goes further to help users find the right data at the right time with new AI recommendations, which can automatically add business context to your data catalog in just a few clicks.

"The most important reinvention of all is yours," Selipsky notes, emphasizing how AWS is looking to give businesses everywhere the tools they need to experiment and grow.

He notes how Amazon has a history of making big bets, and how its long-term focus is part of its DNA.

Next up is Project Kuiper, the LEO satellite project that looks to deliver fast and reliable internet access for those in need. We're then treated to a little promo video about Kuiper's recent advances, complete with real-life smoke machines!

It's not just consumers who can benefit from Kuiper though, as Selipsky outlines how first-responders, environmental scientists and more can see advances.

Kuiper is also going to provide enterprise-ready data transfer services via Kuiper Network Core, coming next year.

And with that final flourish, that's a wrap! Nearly two and a half hours of pure AWS innovation and revelation - we're off to digest the mountain of press releases, so stay tuned for all the latest news on TechRadar Pro.

We'll be back tomorrow morning with the day two keynote from Swami Sivasubramanian, looking at all things AI and ML, so join us then!

Good morning and welcome to day two of our AWS re:Invent 2023 live blog!

Following yesterday's blockbuster keynote, we'll shortly be heading to hear what Swami Sivasubramanian, Vice President of AWS Data, has to say - our guess is that it'll be majorly AI and ML-flavored.

The keynote kicks off at 08:0am PT, so it's time for us to grab some breakfast and fuel up!

We're in and seated nice and early today - and after yesterday's rock and roll fest, we're being woken up good and loud by Las Vegas tech conference fixture DJ CRYKIT and her fabulous sparkly jacket.

Let's hope the keynote content today is suitably eye-catching...

As always for these kinds of conferences, the bass levels are *way* too high for such a small space - come on guys, it's 8am...

Sadly it seems Dr. Sivasubramanian hasn't put in a last song request like his CEO Adam Selipsky did yesterday, but the music dies down, and away we go...

Swami Sivasubramanian takes to the stage and says he's excited to be here once again.

"This year, we are standing at the edge of another technological era," he says, "an era in which a powerful relationship between humans and technology is unfolding right before us."

"Generative AI is augmenting human productivity in many unexpected ways...this relationship is rife with so many possibilities."

It's a symbiotic relationship - like remoras and rays, and supernova energy, he says, noting how, "The world is captivated by generative AI's potential."

Sivasubramanian looks back over 200 years of technological innovation, harking back to Ada Lovelace and her computational breakthroughs - and how the effects of this are still being felt today.

This new era of generative AI "will shift our attitude towards AI towards Intelligence Augmentation," he says - as data, generative AI and humans are advancing their potential together.

More AI workloads run on AWS than any other provider, Sivasubramanian notes, with the company offering a full stack of everything you need when it comes to building and developing AI.

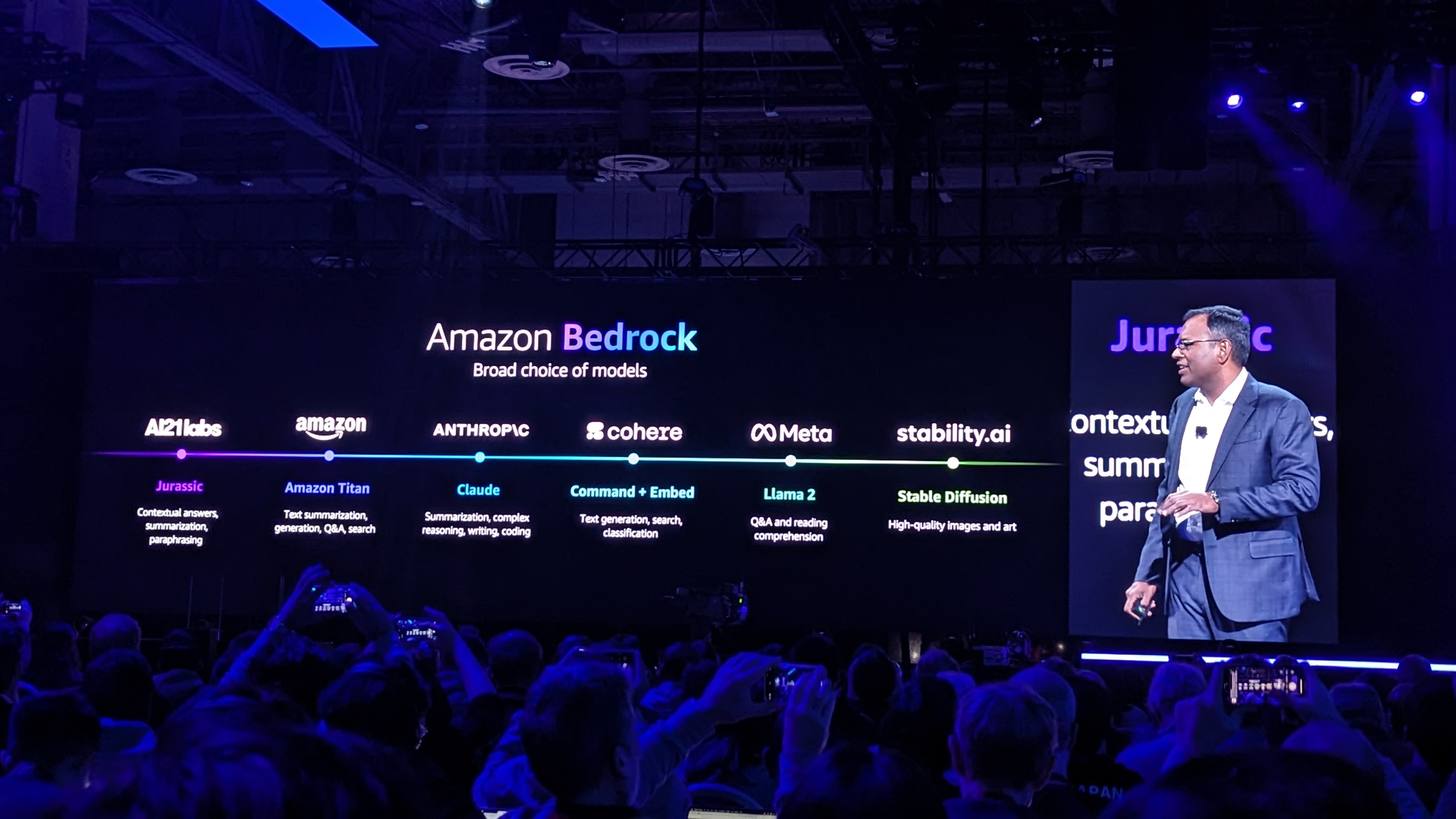

Underpinning all of this is Amazon Bedrock, which allows customers to choose from a wide range of AI models, from Meta to Anthropic to Amazon's own model.

Expanding on this, Sivasubramanian announces that Anthropic's Claude 2.1 and Meta's Llama 2 70B models will now be available on Amazon Bedrock.

"Unlike some of our competitors, AWS is continually building new innovations in this area for our customers," Sivasubramanian notes, highlighting Amazon's 25 years' experience in AI and ML.

He moves on to focus on vector embeddings, which are numerical representations of text, and are critical for customizing generative AI applications, allowing for much more accurate and specific answers for customers.

The technology is already in use across Amazon via its Titan Text Embeddings, but as customers build more models, they need something extra to help with multimodal offerings.

The new Amazon Titan Multimodal Embeddings will allow companies to build richer, smarter search models.
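For readers wondering what search over embeddings looks like in practice, here is a minimal sketch. It assumes each item has already been turned into a vector of numbers; the three-dimensional vectors, the catalog entries and the `search` helper below are all made up for illustration and are not output from any Titan model. Items whose vectors point in a similar direction to the query vector are treated as the closest matches.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical, hand-written embeddings; real models emit hundreds of dimensions.
catalog = {
    "red running shoes": [0.9, 0.1, 0.0],
    "blue trail sneakers": [0.8, 0.2, 0.1],
    "stainless steel kettle": [0.0, 0.1, 0.9],
}


def search(query_vector, items, top_k=2):
    """Rank items by similarity to the query vector and return the best names."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in items.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Hypothetical embedding of a query such as "jogging footwear".
query = [0.9, 0.05, 0.0]
results = search(query, catalog)
print(results)
```

The two footwear items rank ahead of the kettle even though the query shares no words with them, which is the practical appeal of searching by meaning rather than by keyword.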

There's also new Amazon Titan Text Lite and Amazon Titan Text Express, fixed models that allow for greater accuracy and lower cost.

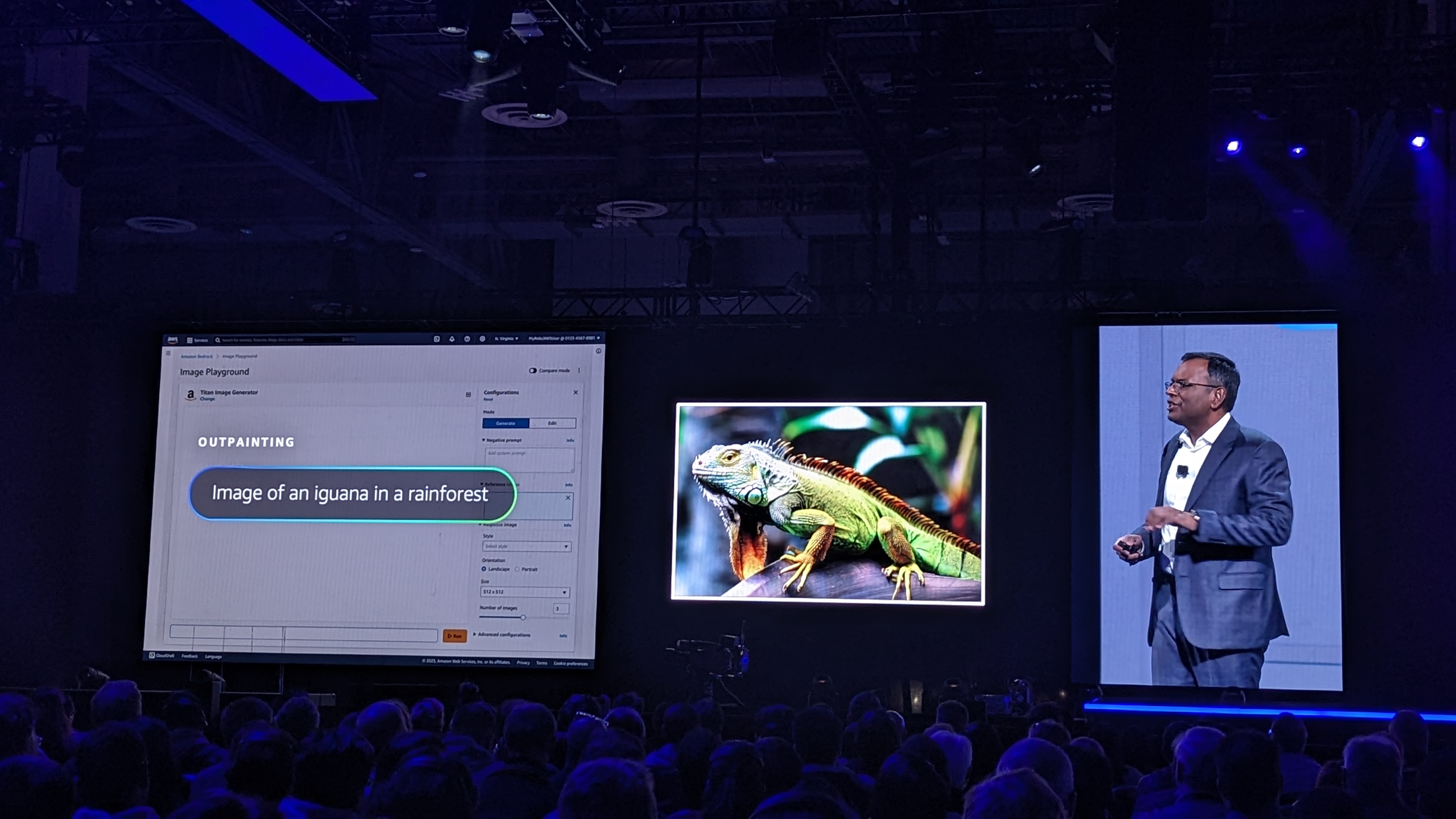

And when it comes to image generation and customization, AWS is also getting involved, launching a new Amazon Titan Image Generator, allowing customers to create high-quality, realistic images using natural language prompts, or evolve your existing imagery to make it even better, using proprietary company data to make sure it stays brand-friendly.

All AI-generated images also come with an invisible watermark built-in as a default, hopefully helping lower misinformation and tampering.

Sivasubramanian shows us a demo of the new image generator, which can swap out backgrounds, but also create variations of an original image, or even flip the orientation completely. This could be great for lifestyle images, or marketing and advertising designers looking for a variety of options, he notes.

More than 10,000 customers are using Amazon Bedrock to create AI-powered applications and assistants, Sivasubramanian notes, including SAP, Georgia-Pacific and United Airlines.

Now it's time for a customer highlight - Nhung Ho, VP of AI at Intuit comes on stage to talk us through her company's work using AWS, leading to major steps forward in productivity and efficiency.

Sivasubramanian returns, and now it's time to talk about data.

Data can be the difference in creating a model that really stands out and is useful - and fine-tuning is the key for this.

Continued pre-training can also help, for example for a medical firm updating its models with new research and findings, with Amazon Titan models doing a lot of the heavy lifting.

We're now looking at Retrieval Augmented Generation, or RAG - a vital part of AI and ML, but something that can be incredibly resource and time-intensive. AWS is looking to help with this via its Knowledge Bases in Amazon Bedrock, which can get your models access to the data they need, as fast as possible.
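As a rough illustration of the idea behind RAG, the sketch below picks the most relevant snippet from a small store of company text and places it in the prompt before any model is called. Everything here is an assumption for illustration: the `knowledge_base` contents, the helper names and the simple word-overlap scoring are invented, production systems typically retrieve using vector embeddings, and no Bedrock API is called.

```python
import re


def tokenize(text):
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Return the documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Combine the retrieved context and the question into one prompt string."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical company knowledge base.
knowledge_base = [
    "Refunds are processed within 14 days of a return.",
    "Support is available by chat from 9am to 5pm.",
]

prompt = build_prompt("How long do refunds take?", knowledge_base)
print(prompt)
```

The resulting prompt would then be sent to a foundation model, which answers from the supplied context rather than relying only on what it learned in training.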

Enabling foundation models to execute tasks is another major pain point, but the new Agents for Amazon Bedrock platform enables generative AI applications to complete tasks in just a few clicks.

Sivasubramanian shows us another demo - this one about DIY. Any kind of project like this has a lot of complicated steps, but he shows how a powerful and knowledgeable generative AI app can help cut out complexity, even when it comes to complicated and multi-step projects.

For those customers looking for a more hands-on response, the AWS Generative AI Innovation Center is the answer - and it's getting new custom model programs for Anthropic Claude. This will let companies customize their models with AWS experts right down to the smallest detail, before deploying them on Amazon Bedrock.

We're now moving on to Amazon SageMaker, which is going to play a huge role in AWS' AI efforts, building, training and deploying ML models with fully managed infrastructure, tools, and workflows.

But even with all this help, building and deploying a model can still be a huge challenge, Sivasubramanian notes.

To help with this, the new Amazon SageMaker HyperPod will help reduce the time to train models by up to 40%, automatically taking checkpoints frequently to help with any issues, meaning downtime is no longer a drastic issue - and leading to shorter training times for models everywhere.

There's also a number of other SageMaker upgrades when it comes to Inference, Training, IDEs and MLOps.

It's time for another customer story - the CEO of Perplexity comes on to tell us about how it wants to revolutionize the search engine game.

Sivasubramanian returns, and tells us how having a strong data foundation is critical to getting the most out of generative AI.

"Gen AI makes this data foundation even more critical," he says, noting how a strong data foundation is comprehensive, integrated and governed.

There's another new announcement - Vector engine for OpenSearch Serverless, leading to more efficient search and processes.

Vector capabilities are also coming to Amazon DocumentDB and Amazon DynamoDB, allowing users to store multiple kinds of data together.

And there's also an upgrade for Amazon MemoryDB for Redis, which is also getting vector search, leading to faster response time and allowing for tens of thousands of queries per second.

There's also a new analytics database - Amazon Neptune Analytics, which allows developers to examine huge amounts of graph data in seconds, and also allows faster vector search.

Having integrated data is also vital to make sure your business gets the best view of its information, allowing you to create better experiences for your customers.

AWS is committed to a zero-ETL future, with seamless integrations across its platforms. This is going a step further with the new Amazon OpenSearch Service zero-ETL integration with Amazon S3, analyzing all your operational data in S3 using a single tool.

Now on to governance, and following the announcement of Amazon DataZone last year, AWS is now moving on to further help protect and safeguard data. Alongside AWS Clean Rooms, where customers are able to examine certain specialised collected data, there's also the new AWS Clean Rooms ML, which allows users to apply machine learning models with customers without sharing underlying data.

It's time for another guest speaker - it's Rob Francis, SVP and CTO at Booking.com, who tells us about how the company is managing its huge tranche of user data with AWS.

Sivasubramanian is back, and turns to how data and generative AI can interact - AI can enhance the data foundation that fuels it, he notes.

"Managing and getting value out of data can still be challenging," he notes, despite the huge advances made recently - but generative AI can help with data management, making it easier, more intuitive and more accessible.

We're now looking at Amazon Q, one of the headline announcements from yesterday's keynote.

Sivasubramanian explains how it can support the developer lifecycle, helping with a variety of tasks - not least when it comes to coding. Amazon Q can help translate natural language to SQL - and that's going to go even further with the new release of Amazon Q natural language SQL query in Redshift.

Amazon Q data integration is also coming to AWS Glue, allowing users to integrate data using natural language.

Now, it's time to think how we as humans actually fit into this new relationship.

Sivasubramanian outlines how the company is helping people around the world with its AI offerings, including improving cancer detection and treatment access in Africa with generative AI and Amazon Bedrock.

"Generative AI will undoubtedly accelerate our productivity, and that's why AWS has continued to invest in tools to allow this," he notes - highlighting Amazon CodeWhisperer as a key example.

Another shout out for Amazon Q, which will offer major productivity increases across the board, Sivasubramanian notes.

Humans also need to play a part, Sivasubramanian notes, as they provide essential inputs.

He highlights a rainforest story that shows how symbiotic relationships are common in nature - much like how humans share their data with generative AI models to create a long-lasting relationship, fuelled by collaboration.

"As humans, we are responsible for creating...a flywheel of success," he notes, as humans lead the development of generative AI apps, as well as providing feedback to help maximise the efficiency of the apps.

To help with this feedback, there's a new Model Evaluation on Amazon Bedrock tool, allowing users to evaluate, compare and select the best foundation model for their use case.

While AI will make some roles obsolete, it will also create new roles and responsibilities, Sivasubramanian notes, leading to a demand for generative AI skills.

This includes new scholarships both within Amazon and in the wider world, as well as over a hundred AI and ML courses - including the aptly-named PartyRock platform.

"We're just getting started", Sivasubramanian notes, with generative AI set to power the next step along a new journey for businesses of all sizes.

"AWS has everything to help you unlock the power of this transformational technology," he adds.

And with that, it's a wrap on the day two keynote!

Sivasubramanian bids us farewell, so it's time to go and reflect on the AI future that's on the way. There's plenty more AWS news coming later today though, so stay tuned to TechRadar Pro for all the latest news and announcements.

Welcome to day three of AWS re:Invent! We're up bright and early one final time, and will be heading to Werner Vogels' keynote soon, kicking off at 08:30am PT, so join us then - I wonder what kind of music we'll have waking us up in the keynote theatre today...

We're in and seated - thankfully this morning's entertainment is a little more sedate, as after yesterday's bass-heavy rave, we're serenaded by a string quartet playing classical covers of pop tunes, easing us into the day...

After that charming musical intro, the lights go down - it's time for Dr. Werner Vogels, Vice President and CTO, Amazon.com.

After his typically "unique" intro video channeling his own spin on The Matrix, and trademark greeting, Dr. Vogels welcomes us all to his talk.

Vogels notes that many of us in the room have never known a work world without cloud - but that "old world" of technology did help nurture creativity.

He harks back to when Amazon.com was a growing enterprise, and how it had to be creative to make sure shoppers got what they wanted, when they wanted it.

"The cloud has removed constraints," he notes, "and when those shackles are off...you can do all these things you've wanted to do, but were never able to do before."

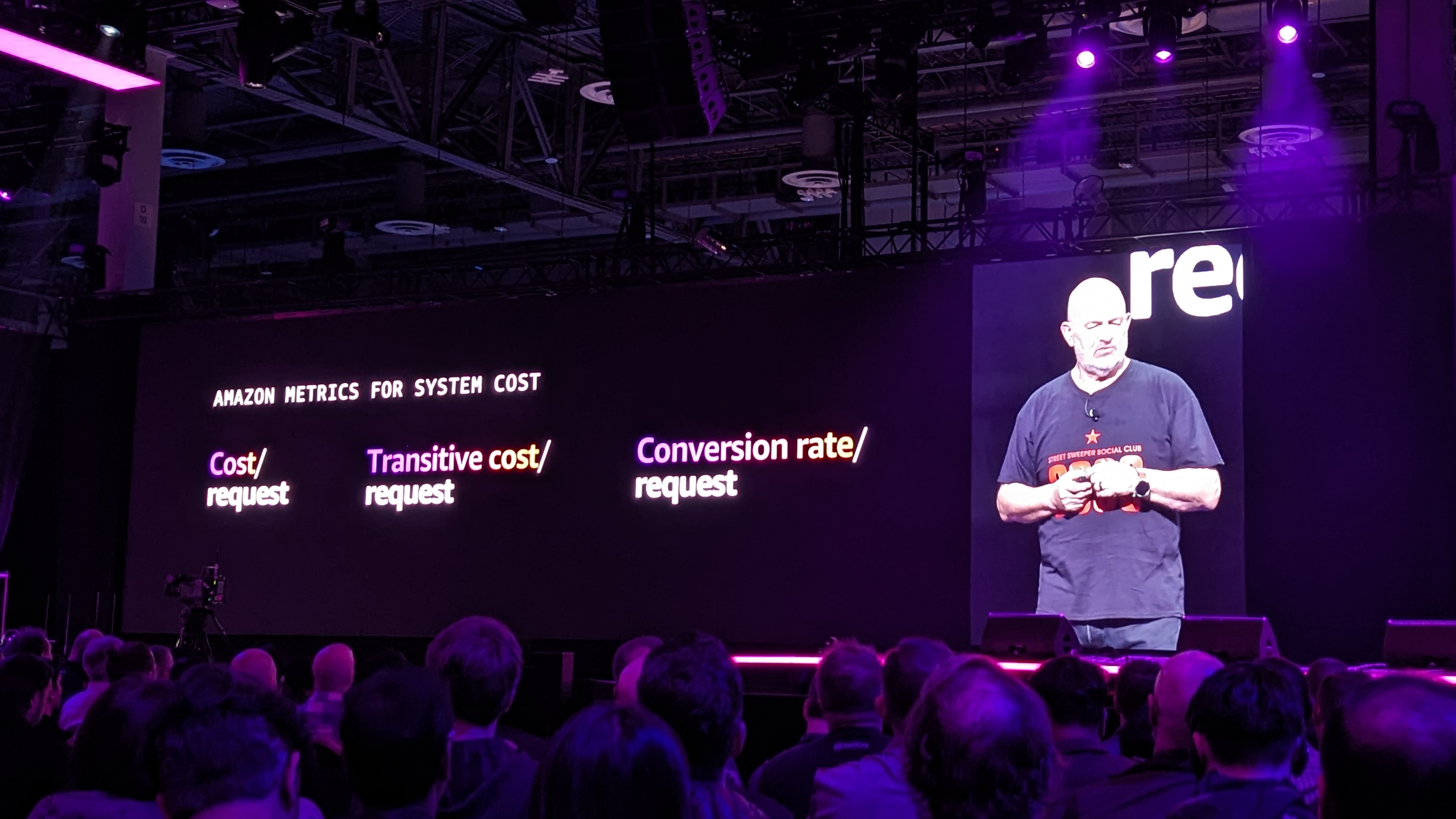

But the "art of architecting for cost" has been lost, he thinks - harking back to the first ever AWS re:Invent in 2012, where the message was architecting with cost in mind.

Many of us don't have to live with technology constraints any more, but some businesses still do, Vogels notes, highlighting customer use cases such as PBS and its on-demand TV streaming service.

Cost "is a freight train coming your way that you cannot escape," he says, but is also a close proxy for sustainability, pointing to AWS' efforts in making its systems as sustainable as possible.

Vogels says that all of us as builders need to think about sustainability, but also being frugal when it comes to costs.

His rules come under three categories - the first being Design, as cost and sustainability need to be non-functional requirements for businesses everywhere, and should be front of mind at all times.

Even when building something new, you need to make sure that you can observe your storage, cost and sustainability needs, Vogels says.

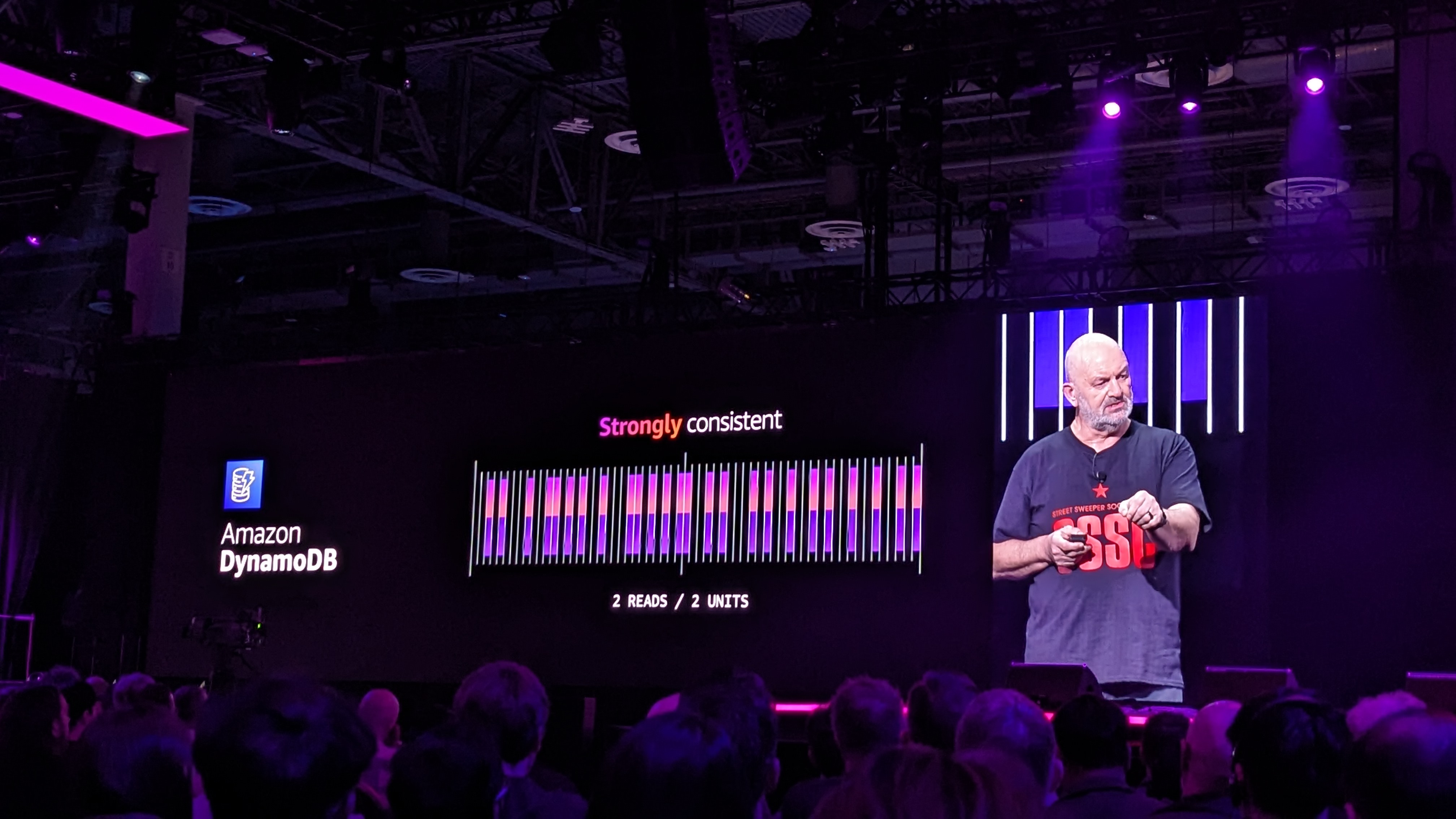

Platforms such as Amazon DynamoDB and Amazon S3 offer customers a balanced and consistent way to ensure their needs are being met when they need to be.

"You have to consider cost at every step of your design," he emphasises.

Aligning cost with revenue is another crucial consideration, Vogels says - using Amazon.com as an obvious example. But in order to ensure you aren't caught short, considering your economies of scale is vital for companies of all sizes.

It's about making sure your business decisions and technology decisions are in harmony with each other, Vogels notes.

He looks back at the launch of AWS Lambda in 2014, which was affected by not always having the right level of technology to make it cost-effective, until AWS paid down the technical and economic debt to make it work.

But you also need to build evolvable architecture, as your needs will change over time, Vogels notes.

But it's always vital to pay off your technical debt as well!

It's time for a customer story - Vogels welcomes Cat Swetel from Nubank onstage to talk about how her company used AWS technology to help smooth financial transactions in Brazil and Latin America.

Vogels returns to the stage, noting that "every engineering decision is a cost decision".

Aligning your priorities with your business goals is vital, he says, and this includes measuring impact, as unobserved systems lead to unknown costs.

Building an evolvable architecture also makes it easier to experiment, Vogels notes, giving you many more opportunities to grow and excel.

But - again - you need to know your costs.

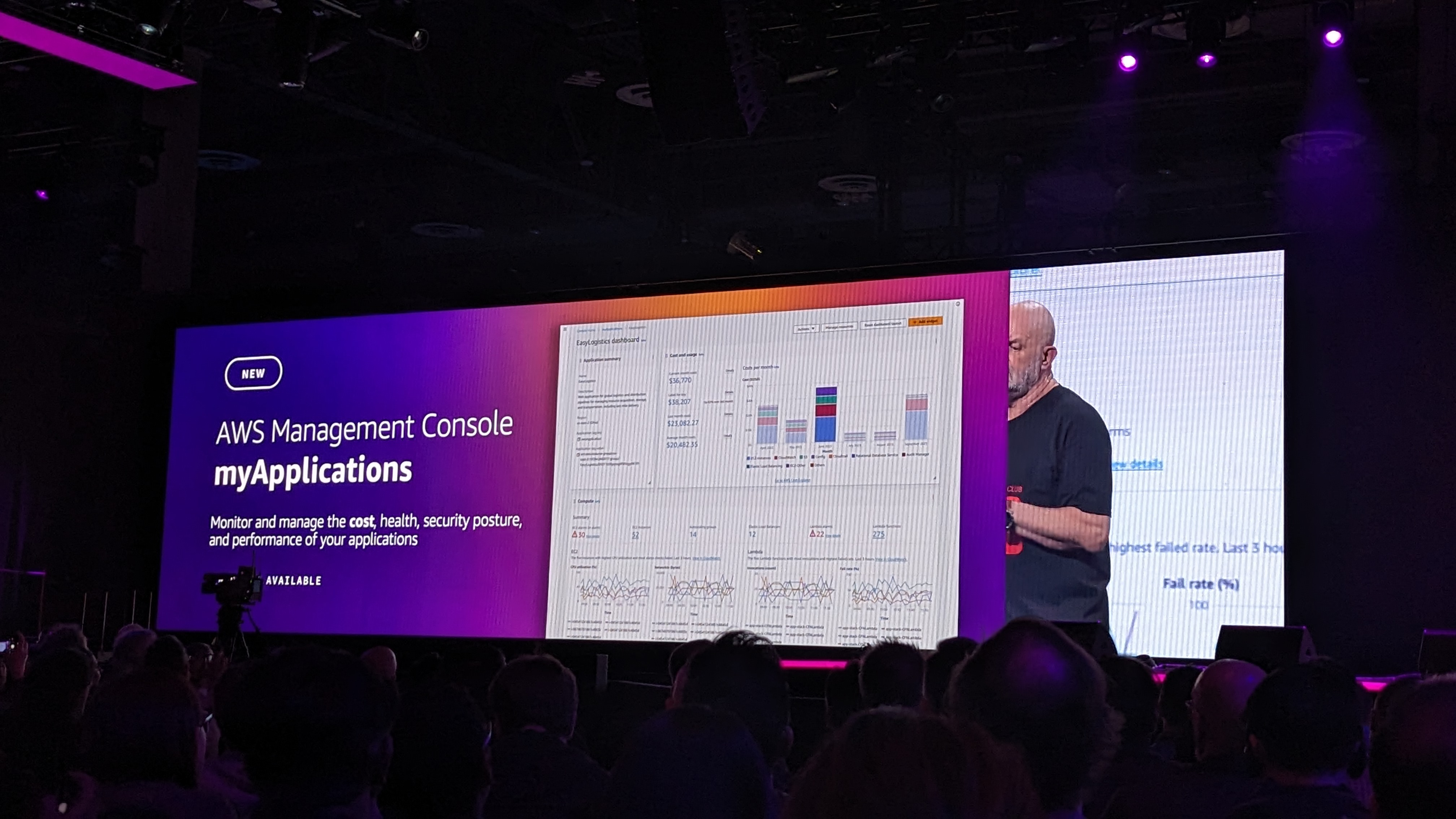

We have a new launch! The new AWS Management Console for Applications gives users more insight into costs and any potential issues throughout the development and deployment of their apps.

There's also the new Amazon CloudWatch Application Signals - giving you one single dashboard showing all the metrics for your applications running on Amazon EKS.

Establishing tiers is also vital to making sure your architecture stays cost-effective, Vogels moves on to say.

Optimization can be a vital tool for your business, boosting efficiency and productivity across all your processes - and eliminating "digital waste" can offer big savings.

AWS Graviton is one of the best opportunities to do this, funnily enough...

Some wise words that Vogels says are pertinent for every business today.

"Cost awareness is a lost art - we need to regain it," Vogels notes.

However, the constraints of the past can be a good thing, as they can help breed creativity when workers look for new solutions or ways around old problems.

We're then treated to another Vogels video special that has a dig at Oracle, and sets him up to talk about the future of technology.

"I'm not an oracle - but observing the present does help us predict the future," he notes.

After looking back at Plato and Aristotle, Alan Turing and others, Vogels turns to the future of Artificial Intelligence.

The shift to bottom-up "embodied AI" marked a major step-change for the industry, he notes, allowing for a much broader look at the technology as a whole - which resulted in the development of deep learning.

Using transformers to help build next-generation LLMs has been the next step, but Vogels says he'd rather talk about "good old-fashioned AI" - or what he calls "AI for now".

"You should keep in mind that not everything needs to be done with LLMs," he notes, highlighting the potential of AI to do good around the world.

This includes AWS customers working to reduce poverty and hunger across the world using AI.

"Without good data, there is no good AI," Vogels emphasizes - so turning mountains of unstructured data into valuable insights is vital.

Using machine learning to create meaning out of data is the key, he says, creating practical solutions to real-world problems.

To hear more about that, he welcomes Dr Rebecca Portnoff from child protection platform Thorn, which is using AI and ML to help strike down child sexual abuse.

"This is the power of technology," Vogels says, returning to the stage, "we have the power to really make a difference as technologists."

"Technology can be a force for good - that's a lesson I learned way before I became a doctor in computer science."

Vogels then tells us about a pet project he's been working on, using AWS AI and machine learning platforms to help medical professionals treat stroke patients faster.

AWS is looking to make these sorts of building experiences more straightforward than ever, with Dr Vogels urging us all to have a go at harnessing the power of AI and ML.

"AI makes predictions - professionals decide," he states, adding that AI is an assistant, but the role of humans will always be important.

"It's always been our mission to help builders be successful."

One final product announcement - Amazon SageMaker is getting a code assistant in the IDE itself, making coding smoother and faster for everyone.

Vogels now highlights the recently-announced Amazon Q enterprise assistant, noting how it can take a huge amount of workload off the shoulders of overworked developers.

The platform is integrated with AWS CodeCatalyst, again helping take lengthy coding processes and tasks off the to-do lists of developers.

"There's never been a better time to be a builder," Vogels says.

And with that, it's a wrap on today's keynote. We're off to explore the show floor, but that definitely isn't the end of our AWS re:Invent 2023 coverage, as we'll have plenty more news, features and interviews coming soon - so stay tuned to TechRadar Pro!