Lvmin Zhang, in collaboration with Maneesh Agrawala at Stanford University, introduced FramePack on GitHub this week. FramePack offers a practical implementation of video diffusion that uses a fixed-length temporal context for more efficient processing, enabling longer and higher-quality videos. A 13-billion-parameter model built on the FramePack architecture can generate a 60-second clip with just 6GB of video memory.

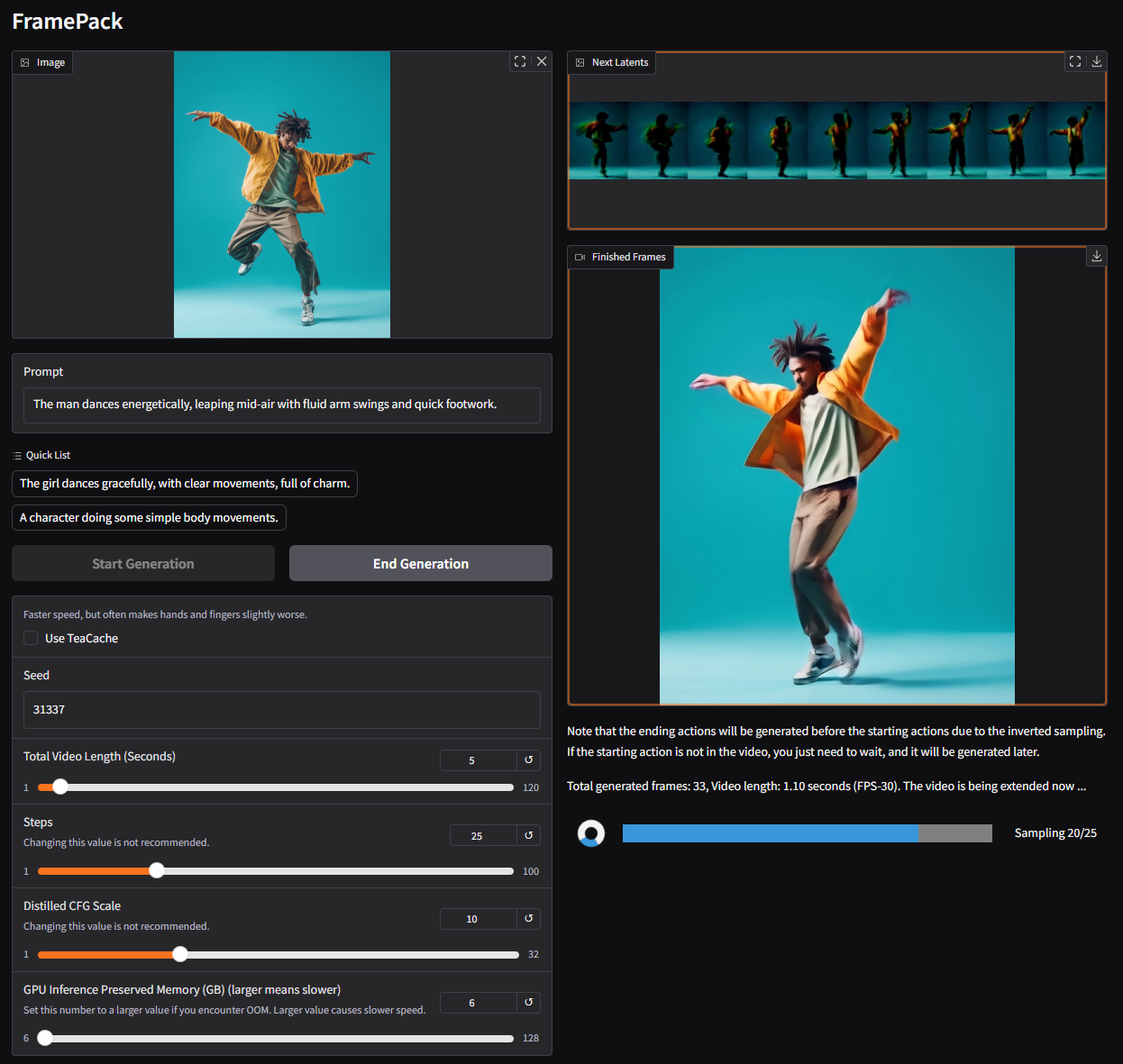

FramePack is a neural network architecture that uses multi-stage optimization techniques to enable local AI video generation. At the time of writing, the FramePack GUI is said to run a custom Hunyuan-based model under the hood, though the research paper mentions that existing pre-trained models can be fine-tuned using FramePack.

Typical video diffusion models process data from previously generated noisy frames to predict the next, slightly less noisy frame. The number of input frames considered for each prediction is called the temporal context length, and it grows with the length of the video. Standard video diffusion models demand a large VRAM pool, with 12GB being a common starting point. Sure, you can get away with less memory, but that comes at the cost of shorter clips, lower quality, and longer processing times.

Enter FramePack: a new architecture that compresses input frames, based on their importance, into a fixed-size context length, drastically reducing GPU memory overhead. All frames must be compressed to converge at a desired upper bound for the context length. The authors describe the computational costs as similar to image diffusion.
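To see why a fixed upper bound is possible at all, here is a minimal sketch in Python. It assumes a geometric schedule in which each step further back in time halves a frame's token budget. The function names, the 1,536-token budget for the newest frame, and the ratio of 2 are illustrative assumptions, not values taken from the FramePack code.

```python
def uncompressed_context(num_frames, tokens_per_frame=1536):
    """Context length when every past frame keeps its full token budget."""
    return num_frames * tokens_per_frame


def compressed_context(num_frames, tokens_per_frame=1536, ratio=2.0):
    """Context length when each older frame gets 1/ratio of the budget
    of the frame after it (hypothetical geometric schedule)."""
    total = 0.0
    budget = float(tokens_per_frame)
    for _ in range(num_frames):
        total += budget
        budget /= ratio  # older frames are compressed more heavily
    return total


def context_upper_bound(tokens_per_frame=1536, ratio=2.0):
    """Limit of the geometric series as the number of frames grows."""
    return tokens_per_frame * ratio / (ratio - 1)


if __name__ == "__main__":
    for frames in (1, 10, 100, 1800):
        print(frames, uncompressed_context(frames), compressed_context(frames))
    print("upper bound:", context_upper_bound())
```

Under these assumptions, the compressed context for 1,800 frames never exceeds 3,072 tokens, while the uncompressed context would grow to 2,764,800 tokens.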

Coupled with techniques to mitigate "drifting," where quality degrades as the video gets longer, FramePack offers longer video generation without significant compromise to fidelity. As it stands, FramePack requires an RTX 30/40/50 series GPU with support for the FP16 and BF16 data formats. Support on Turing and older architectures has not been verified, and there is no mention of AMD or Intel hardware. Linux is also among the supported operating systems.

Aside from the RTX 3050 4GB, most modern RTX GPUs meet or exceed the 6GB requirement. In terms of speed, an RTX 4090 can dish out as many as 0.6 frames per second with the TeaCache optimization enabled. Slower cards will take longer, so your mileage will vary depending on your graphics card. Either way, each frame is displayed as soon as it is generated, providing immediate visual feedback.
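For a sense of scale, the quoted speed can be turned into a rough wall-clock estimate. The sketch below assumes a 30 FPS output and a constant generation rate, both of which are simplifications, and the function name is made up for illustration.

```python
def generation_minutes(clip_seconds, playback_fps, generated_fps):
    """Rough time to render a clip, assuming a constant generation rate."""
    total_frames = clip_seconds * playback_fps
    return total_frames / generated_fps / 60


if __name__ == "__main__":
    # 60-second clip, assumed 30 FPS output, 0.6 generated frames per second
    minutes = generation_minutes(clip_seconds=60, playback_fps=30, generated_fps=0.6)
    print(f"Estimated generation time: {minutes:.0f} minutes")
```

With these inputs, a 60-second clip works out to 1,800 frames and roughly 50 minutes of generation time on an RTX 4090.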

The employed model likely has a 30 FPS cap, which might be limiting for many users. That said, instead of relying on costly third-party services, FramePack is paving the way to make AI video generation more accessible for the average consumer. Even if you're not a content creator, this is an entertaining tool for making GIFs, memes, and whatnot. I know I'll be giving it a go in my free time.
