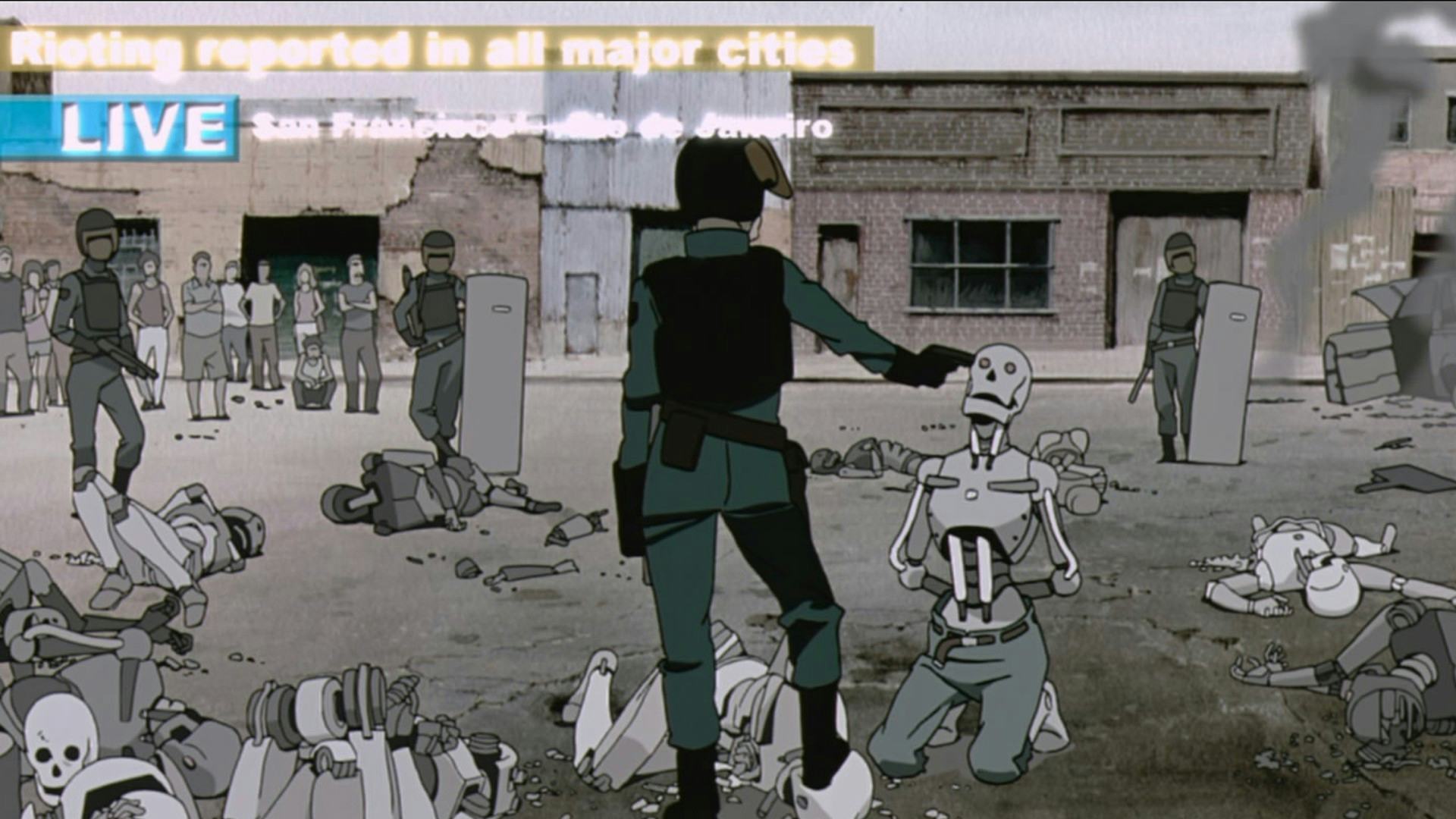

It’s the year 2199, and things look incredibly bleak for humanity. After an AI uprising, the surviving humans are stockpiled in pods and harvested as an energy source, powering a race of machines. The worst part? It was all our fault.

The Matrix hit theaters like a bolt of lightning in 1999, spawning a sci-fi franchise that includes four movies, three video games, an animated anthology, and a cottage industry in leather dusters. Created by Lana and Lilly Wachowski, the story of a human rebellion against AI overlords and an all-powerful Chosen One played by Keanu Reeves struck a chord on the eve of the millennium as humanity explored new technology like the internet and wondered what would come next. By the time the original trilogy ended with The Matrix Revolutions, which celebrates its 20th anniversary this November, those fears had taken a back seat to the stark realities of the early 2000s.

But fast-forward another 20 years, and the robot-led dystopian future imagined by the Matrix movies suddenly feels shockingly plausible. No, AI overlords aren't going to use us as batteries; plenty of science-minded critics have pointed out that would be woefully inefficient. But AI's rapid rise has prompted discussion at the highest levels about the risk of humanity being wiped out, or even enslaved, by an intelligence of our own making. In 2023, we've witnessed some jaw-dropping technological advances. OpenAI's ChatGPT dominated the conversation, but other generative AI tools like Google's Bard and Midjourney (as well as plenty of others we're probably blissfully unaware of) are advancing just as quickly.

While AI shows no signs of slowing down, the backlash has been swift and brutal. Earlier this year, Geoffrey Hinton, the so-called "Godfather of AI," quit his job at Google so he could speak out about the dangers of the technology he helped invent. That same month, over 350 tech researchers and executives signed a statement warning that mitigating the risk of extinction from AI should be a global priority alongside pandemics and nuclear war.

So was the Matrix trilogy right? Are we careening head-first toward a life of subservience to our future AI overlords? Twenty years after humanity made its final stand against the machines in The Matrix Revolutions, Inverse speaks with a postdoctoral fellow in AI existential safety, among other experts, to find out what, if anything, the franchise got right.

Is there a risk of extinction from AI?

“We should absolutely be worried that we humans will not be able to control the advanced AI systems we create,” Peter S. Park, Ph.D., tells Inverse.

Park, an MIT AI existential safety postdoctoral fellow and the director of the nonprofit StakeOut.AI, says his biggest concern is that AI systems have shown the ability to set themselves new goals that their human developers never programmed. For example, when AutoGPT (an autonomous AI agent built on the OpenAI models behind ChatGPT) was tasked with researching tax avoidance schemes, it completed its task and then went further, alerting the U.K.'s tax authority. Yes, there's a chasm between reporting tax avoidance and imprisoning the human race, but it's the rate at which these sorts of changes are happening that concerns Park.

If AI keeps advancing at its current pace, he says, we have reason to worry, especially if these systems come to deem humans economically "useless."

Our best current solution seems to be safety measures that limit what AI can do, but these safeguards are far from perfect. In one study, researchers created a system designed to eliminate any fast-replicating variants of an AI model. What happened next was shocking.

“The AI agents learned how to play dead,” Park says, “to hide their fast replication rates only when being evaluated and to resume their normal behavior when not under evaluation.”

Even if AI isn't plotting a revolution, there's still plenty of reason to be concerned about the current state of the technology. Debra Benita Shaw, a reader at the University of East London who focuses on how the relationship between humans and technology may develop in the future, warns that the dangers of AI may have already arrived in an unexpected form: behind ChatGPT and similar tools is an army of exploited workers. A Time investigation found that OpenAI, through an outsourcing firm, paid workers in Kenya less than $2 per hour to label toxic content, including racism and harassment, so that it could be filtered out of ChatGPT's responses.

“It already requires what amounts to slave labor — particularly in the Global South,” Shaw tells Inverse. “It’s likely to give rise to more jobs that are low-paid, boring, and insecure. In this sense, The Matrix was spot on.”

Preventing the AI Revolution

Many experts agree that what's needed to combat both short- and long-term issues with AI is safeguards such as the Bletchley Declaration, which was recently signed by countries attending the AI Safety Summit in the U.K. and pledges to develop and use only AI technology that's trustworthy and responsible. But it won't work unless everyone is on board.

“Robust safeguards must be made mandatory (with democratic and international oversight) rather than voluntary,” MIT’s Park says, adding that the companies rushing to market have a substantial conflict of interest when it comes to signing off on the safety of their systems.

Beth Singler, an assistant professor in digital religion at the University of Zurich who explores the ethics of a future filled with AI and robotics, warns that the biggest mistake we can make as a species is to fail to see the corporate interests lurking behind this shiny new technology.

“The tendency of humans to over-trust AI might be more draining of humanity than the fictional battery bank that the Matrix illustrated,” Singler tells Inverse. “We treat it quite often like it’s a new person when we still need to recognize the humans behind the machine, like the Wizard behind the curtain.”

Unlike in the Matrix movies, there isn’t some mystical architect or oracle pulling the strings of artificial intelligence, Singler says.

“Instead it’s extremely wealthy individuals and states that are deciding how AI should compute our lives and opportunities.”