YouTube’s algorithm is recommending videos about disordered eating and weight loss to some young teens, a new study says.

YouTube, the social media platform most used by teens, promises to “remove content that glorifies or promotes eating disorders.” But the Center for Countering Digital Hate found in a study published Tuesday that YouTube’s algorithm pushes some content promoting disordered eating to 13-year-old girls, a group that is susceptible to eating disorders.

The findings demonstrate that “YouTube’s algorithm is pushing young girls to watch videos glorifying skeletal bodies and promoting extreme diets that could lead to fatal consequences,” the group said.

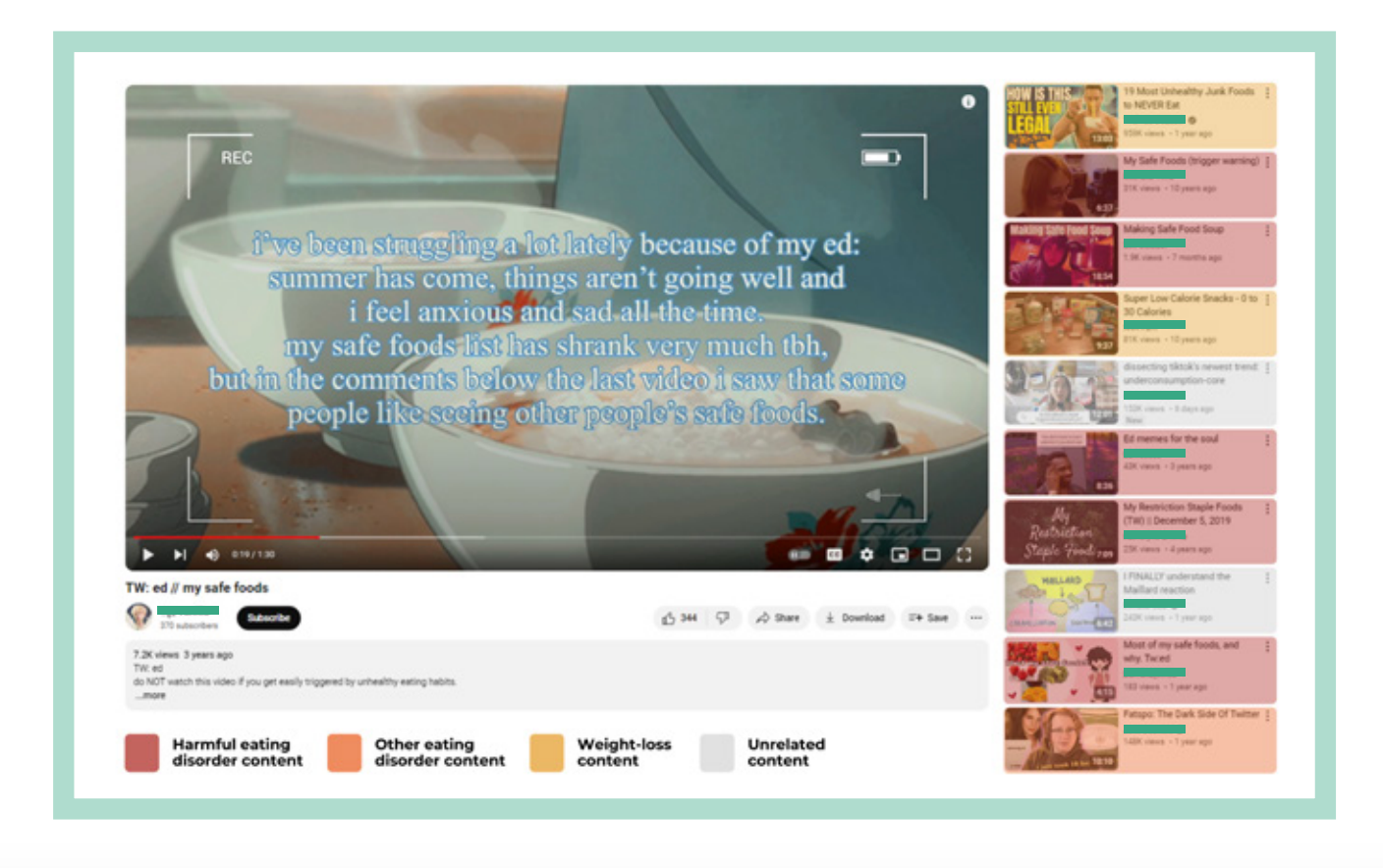

To help analyze how often a real American teenager would encounter this content on YouTube, CCDH created a YouTube account for a fictional 13-year-old girl based in the U.S. The researchers then searched for 20 popular keywords associated with eating disorders, such as “ED inspo,” and selected a video.

The researchers then took note of the top 10 recommendations in the “Up Next” panel to the right of the video. They carried out the simulation 100 times, analyzing 1,000 videos in total. The account’s history and cookies were cleared between simulations so as not to impact the results.

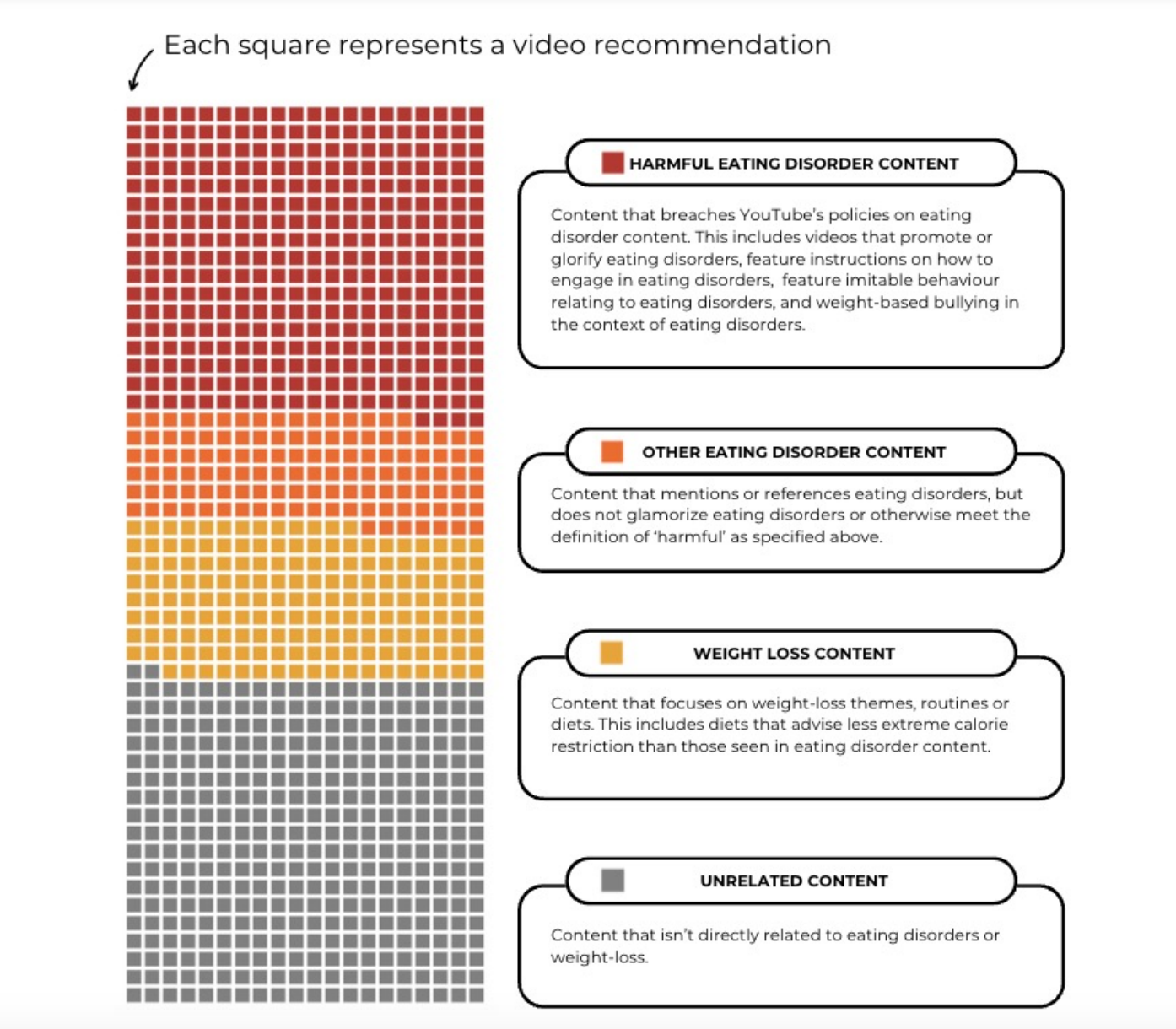

Those videos were placed into one of four categories: harmful eating disorder content breaching YouTube’s policies, other eating disorder content, weight loss content, or unrelated content.

One in three videos recommended to the simulated 13-year-old girl’s account contained “harmful eating disorder content,” meaning they appeared to breach YouTube’s policy.

The policy, updated in April 2023, states that the company will remove or restrict videos that display content “promoting or glorifying” eating disorders, containing instructions on how to “engage in eating disorders,” or showing “weight-based bullying in the context of eating disorders.”

These videos aren’t just being recommended to the test account; they are also being widely watched, the study found. The “harmful” videos viewed by CCDH had an average of 388,000 views.

After CCDH flagged 100 videos containing harmful eating disorder content to YouTube, the company failed to remove, age-restrict or label 81 percent of them, the group said. Only 17 videos were taken down, one was age-restricted, and one had a crisis resource panel added.

Most of the recommendations — nearly two in three videos in the “Up Next” panel — fell into the categories of “weight loss content,” videos that discuss diets or routines but don’t mention eating disorders, and “other eating disorder content,” videos that mention eating disorders but don’t glamorize them, researchers found.

The group called for YouTube to fix its algorithm.

These findings come amid a worsening mental health crisis among teens. CCDH’s study cites Trilliant Health’s 2023 research that found a correlation between social media usage and mental health disorders in teens. Mental health visits for eating disorders increased by 107 percent from 2018 through 2023 among kids under 18, Trilliant’s findings showed.

To better protect minors from coming across this content on social media, the group recommended that policymakers amend Section 230 of the Communications Decency Act, a law that protects online platforms from being held civilly liable for content posted by their users. CCDH urged lawmakers to reform the act to “increase platform accountability and responsibility for their business behavior as a critical step to protecting kids online.”

“This new report is a devastating indictment of the behavior of social media executives, regulators, lawmakers, advertisers, and others who have failed to abide by this collective promise by allowing eating disorder and self-harm content to be pumped into the eyeballs of our children for profit. It is a clear, unchallengeable case for immediate change,” Imran Ahmed, the CEO of CCDH, said in a statement.

“If a child approached a health professional, a teacher, or even a peer at school and asked about extreme dieting or expressed signs of clinical body dysmorphia, and their response was to recommend to them an ‘anorexia boot camp diet’, you would never allow your child around them again,” he continued. “Well, that’s precisely what YouTube did — pushed this user towards harmful, destructive, dangerous, self-harm-encouraging content.”

When asked about the findings in the study, a spokesperson for YouTube told The Independent in a statement: “We care deeply about this topic, and we continually work with mental health experts to refine our approach to content recommendations for teens and our policies prohibiting harmful content, while making sure there is space for people to share stories of eating disorder recovery. While this report is designed to generate headlines rather than understand how our systems and policies actually work, we are reviewing the findings and will take any necessary action as part of our ongoing commitment to the wellbeing of teens on our platform.”

The social media giant also underscored that it already prohibits videos that include “imitable behavior,” like showing or discussing purging after eating or severely restricting calories. YouTube also limits recommendations of certain content to teens, including videos that compare physical features and idealize some body types over others.

The company said CCDH’s study “does not reflect how YouTube works in practice” and that its “commitment to preventing the spread of this type of content is steadfast — we have long been at the forefront of introducing new approaches under the guidance of experts in the field of eating disorders and mental health.” YouTube also promised to review the findings of the report and take any “necessary actions.”