What you need to know

- Xbox has published its latest AI transparency report covering data from January to June 2024.

- Player safety has been boosted with a combination of AI and human moderation, blocking over 19 million pieces of harmful content that violated the Xbox Community Standards.

- Minecraft and Call of Duty are implementing advanced moderation tools and community standards to reduce toxic behavior.

Xbox has just published its latest AI transparency report alongside an Xbox Wire update. The report, which covers data from January to June this year, details how Xbox uses artificial intelligence to enhance player safety, improve moderation processes, and ensure a positive gaming experience for players. AI is being used as an effective tool to block millions of harmful messages in both voice and text.

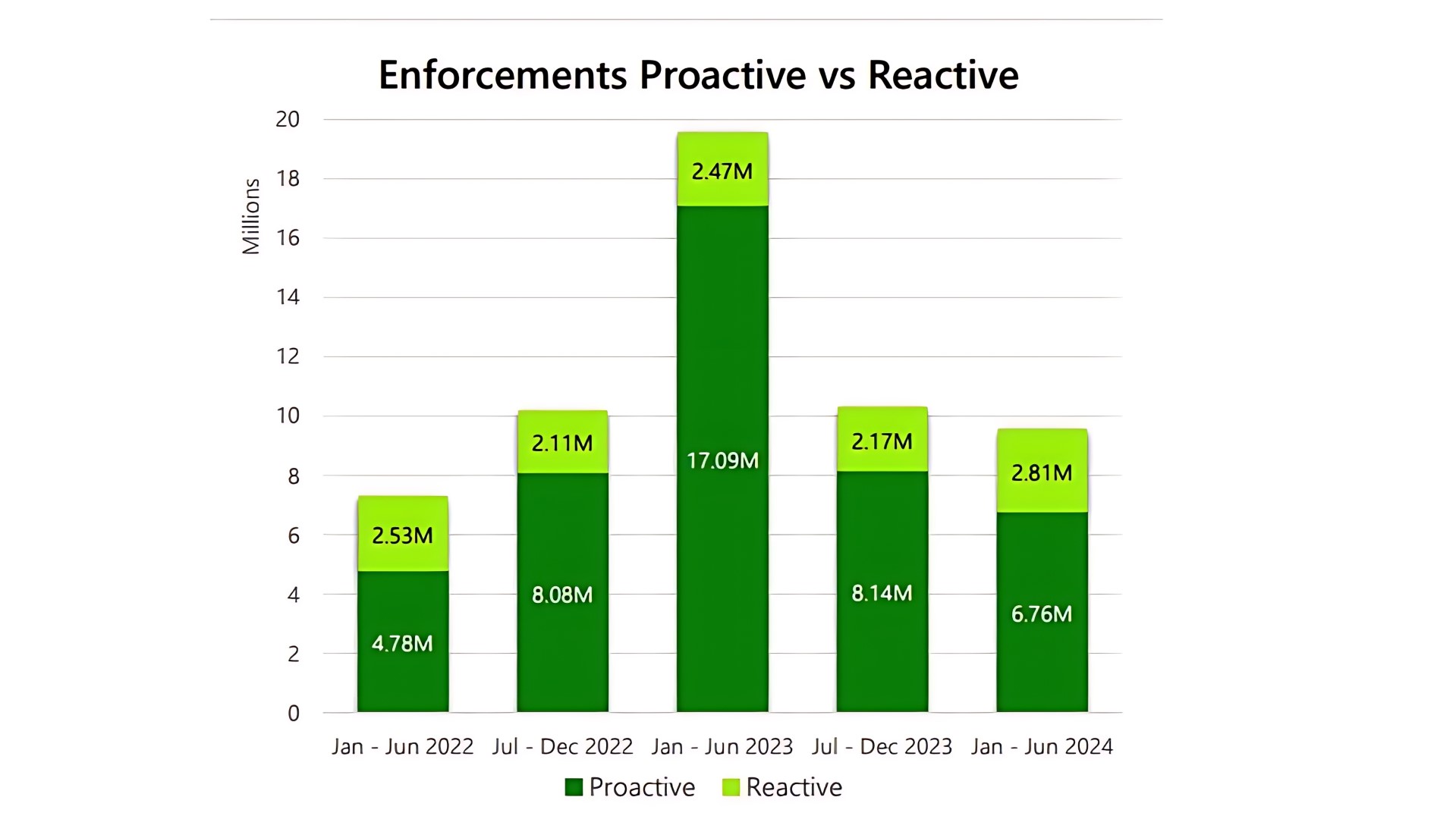

19 million pieces of content violating Xbox Community Standards were prevented from reaching players

Breaking down some of the most interesting statistics from the report, the headline figure of 19 million pieces of content blocked over the six-month period is impressive. Xbox's dual AI approach to moderation has done much of the heavy lifting here, freeing human moderators to examine the more nuanced content. The tools automatically identify and classify harmful messages before they reach players, quickly deciding whether each message violates the community standards. So far, this has significantly improved the speed and efficiency of content moderation on Xbox (and, anecdotally, I've received zero messages from angry teenagers in Call of Duty!)
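To make the AI-then-human handoff a little more concrete, here is a minimal Python sketch. It assumes a hypothetical classifier that has already scored each message with a harm probability between 0 and 1; the `triage` function and both thresholds are illustrative assumptions, not Xbox's actual system, and the sketch does not model the two separate AI layers. Clear-cut messages are blocked or allowed automatically, and everything in between is routed to a person.

```python
from enum import Enum


class Decision(Enum):
    BLOCK = "block"
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"


# Hypothetical thresholds on a harm score in [0, 1]; not Xbox's real values.
BLOCK_THRESHOLD = 0.9
ALLOW_THRESHOLD = 0.2


def triage(harm_score: float) -> Decision:
    """Route a message using a (hypothetical) classifier's harm score."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= BLOCK_THRESHOLD:
        # Confidently harmful: block it before it reaches the recipient.
        return Decision.BLOCK
    if harm_score <= ALLOW_THRESHOLD:
        # Confidently fine: deliver it with no human involvement.
        return Decision.ALLOW
    # Ambiguous or nuanced: queue it for a human moderator.
    return Decision.HUMAN_REVIEW


if __name__ == "__main__":
    for score in (0.97, 0.05, 0.6):
        print(score, triage(score).value)
```

The point of the design is that humans only ever see the middle band, which is how automation can absorb most of the volume while the judgment calls still go to people.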

Other interesting stats from the report show:

- Compared to the previous reporting period, player reports doubled to 2 million for Looking for Group (LFG) messages and rose 8% to 30 million across all content types.

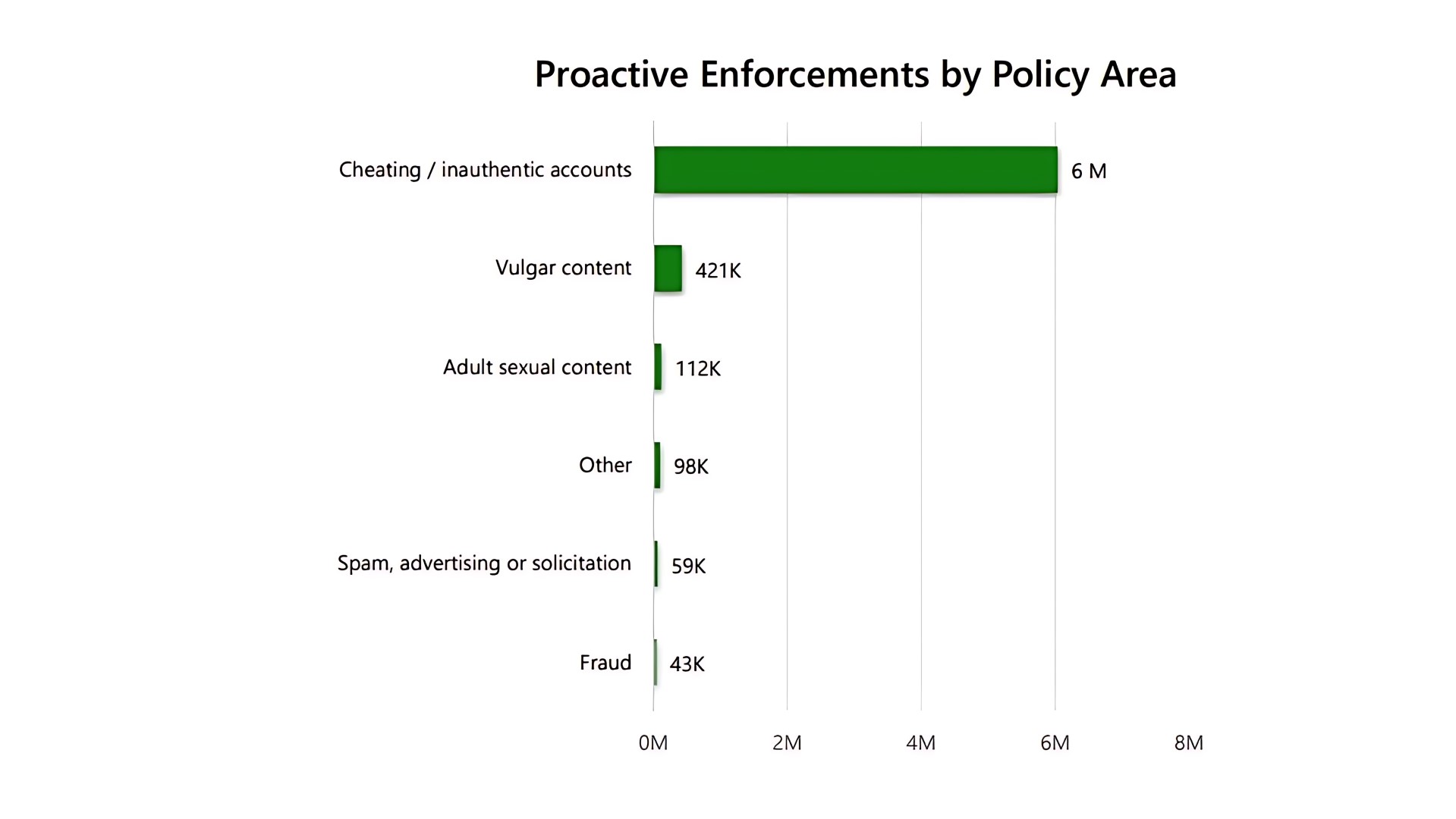

- Xbox took action on 1.7 million inauthentic accounts in April 2024, a significant increase from 320,000 in January.

- The Xbox AutoMod system handled 1.2 million cases and enabled the moderation team to remove harmful content 88% faster.

- Proactive enforcement accounted for 99.8% of actions in areas like phishing, and 100% in areas such as account tampering and piracy.

Community tools for Minecraft and Call of Duty: Black Ops 6

Since the end of 2023, Xbox has blocked millions of offensive messages in multiple languages and seen a significant drop in voice-related toxicity in Call of Duty. Recently, voice moderation was expanded to cover more languages in Black Ops 6. That's a smart move, since Black Ops 6 also added a feature that lets you whisper sweet nothings to an enemy while using them as a human body shield.

Minecraft has added a feature in its Bedrock Edition that reminds players of the game's Community Standards when they may be using inappropriate language or behaving inappropriately in chat. This is meant to give players a chance to reflect and adjust before more serious actions, like suspensions, are taken. In addition, Minecraft's partnership with GamerSafer has helped server owners create safer environments, giving players and parents better options for finding secure Minecraft servers.

What actually happens when a player is offensive on Xbox?

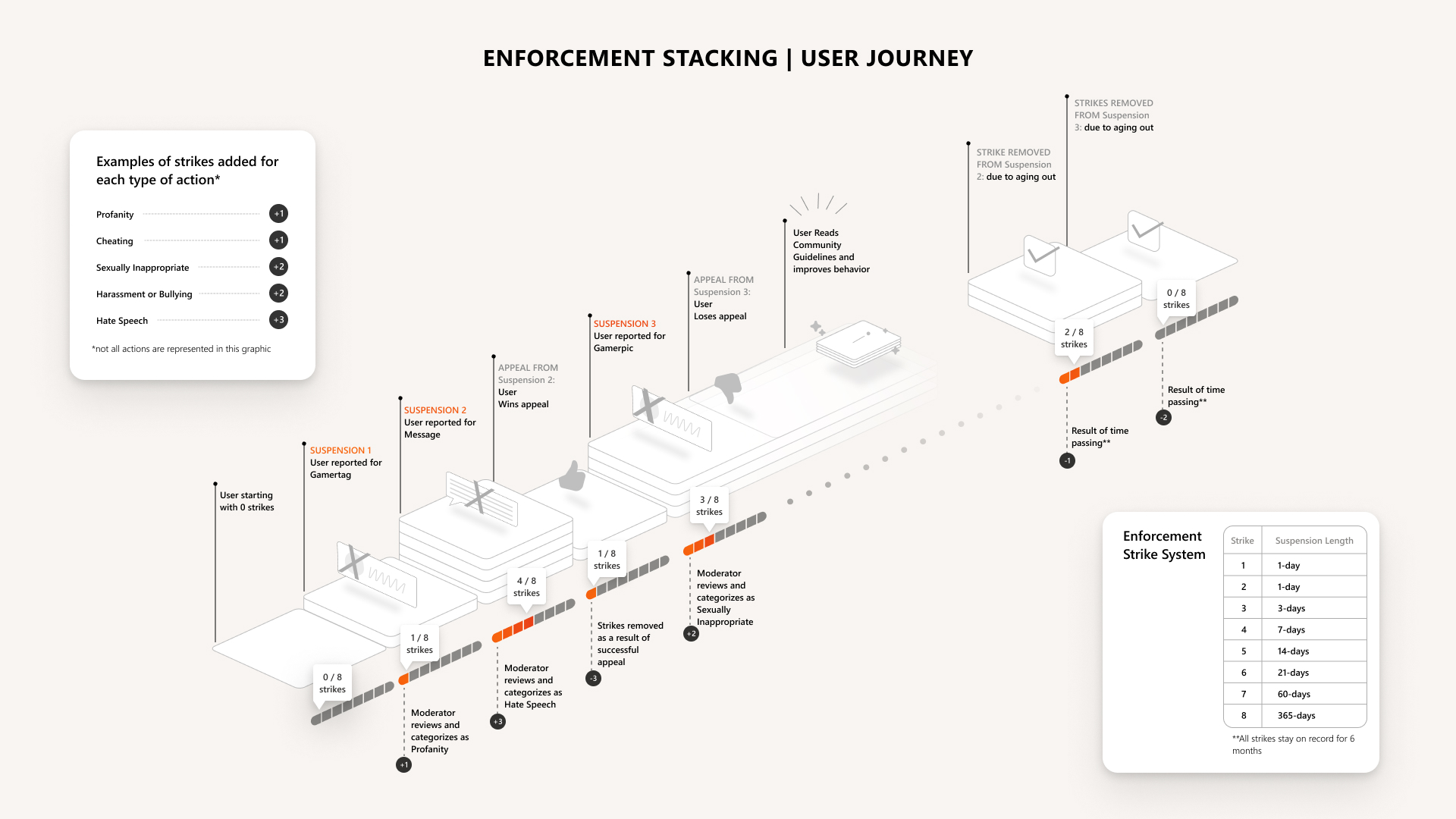

The report details a new 'strike system' implemented by Xbox to help players understand and learn from any rule violations. Each time a player breaks a rule, they get a 'strike' on their account, which stays on record for six months. Players can receive up to eight strikes, and the system is designed to discourage them from repeating the same mistakes by showing the impact of their actions.

The system uses a progressive approach, meaning that the more strikes a player gets, the more serious the consequences become. For example, the first offense might result in a short suspension, but repeated violations can lead to longer suspensions or even a permanent ban from social features.

For severe violations, such as threats or anything involving child safety, Xbox investigates immediately, and these cases can lead to a permanent suspension or even a device ban, no matter how many strikes the player has.
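For readers curious how a progressive strike ladder like this might be modeled, here is a minimal Python sketch. The six-month expiry (approximated here as 182 days), the eight-strike cap and the bypass for severe violations follow the report as described above; the suspension lengths in `PENALTY_DAYS` and the `Account` class are hypothetical, chosen purely for illustration rather than taken from Xbox.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Assumption: "six months" approximated as 182 days.
STRIKE_LIFETIME = timedelta(days=182)
MAX_STRIKES = 8  # per the report, players can hold up to eight strikes

# Hypothetical ladder: active strike count -> suspension length in days.
# Illustrative values only, not Xbox's actual penalties.
PENALTY_DAYS = {1: 1, 2: 1, 3: 3, 4: 7, 5: 7, 6: 14, 7: 30, 8: 365}


@dataclass
class Account:
    strikes: list[datetime] = field(default_factory=list)
    escalated: bool = False

    def active_strikes(self, now: datetime) -> int:
        # Only strikes issued within the lifetime window still count.
        return sum(1 for t in self.strikes if now - t < STRIKE_LIFETIME)

    def record_violation(self, now: datetime, severe: bool = False) -> str:
        if severe:
            # Severe violations skip the ladder, whatever the strike count.
            self.escalated = True
            return "immediate investigation (possible permanent suspension or device ban)"
        # Drop expired strikes, then add the new one, capped at MAX_STRIKES.
        self.strikes = [t for t in self.strikes if now - t < STRIKE_LIFETIME]
        if len(self.strikes) < MAX_STRIKES:
            self.strikes.append(now)
        days = PENALTY_DAYS[len(self.strikes)]
        return f"{days}-day suspension from social features"


if __name__ == "__main__":
    acct = Account()
    t0 = datetime(2024, 1, 1)
    for day in range(4):
        print(acct.record_violation(t0 + timedelta(days=day)))
    # A year later every earlier strike has expired, so the ladder restarts.
    print(acct.record_violation(t0 + timedelta(days=365)))
```

Because penalties are looked up from the count of strikes still on record, old mistakes age out on their own, while a run of repeat offenses climbs the ladder quickly.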

AI in gaming isn't all bad news

While the role of AI in gaming can be controversial, especially around issues like algorithmic bias, generative AI, and the potential for AI to replace human jobs, it has its positives too, and it was a hot buzzword at this year's GDC.

This latest report from Xbox highlights how AI can be an effective tool for ensuring player safety. In areas like content moderation, AI can make millions of decisions quickly, blocking harmful messages and identifying toxic behavior in real time. Using AI to ease the workload on human moderators and create a more respectful environment for players can only be a good thing.