WWDC, Apple’s annual developer conference where it shows off its latest and greatest software, kicks off on June 10. If the rumours (and a tweet from Apple exec Greg Joswiak, who promises the conference will be “Absolutely Incredible!”) are anything to go by, WWDC 2024’s main focus will be AI. That could mean big changes in the way we use our best iPhones, MacBooks, iPads and, of course, Apple Watches.

The Apple Watch Ultra 2 and Apple Watch SE 2 rank first and second in our best Apple Watches guide for a few reasons, and one of those is the excellence of the watchOS operating system. Another is how Apple uses your health information, both on the watch and across your other devices, to help you make positive changes and to put safeguards in place.

For example, you can load the Health app with your medical records and share them with your doctor or pharmacy. You can use your phone or watch to set up a Medical ID, so first responders can see critical medical information without needing to unlock your phone. Crash Detection can alert emergency services (and your emergency contacts) if you’re in a severe car crash, while Fall Detection can do the same if you take a hard fall, including a fall from your bike, which is especially valuable if you’re older or more vulnerable.

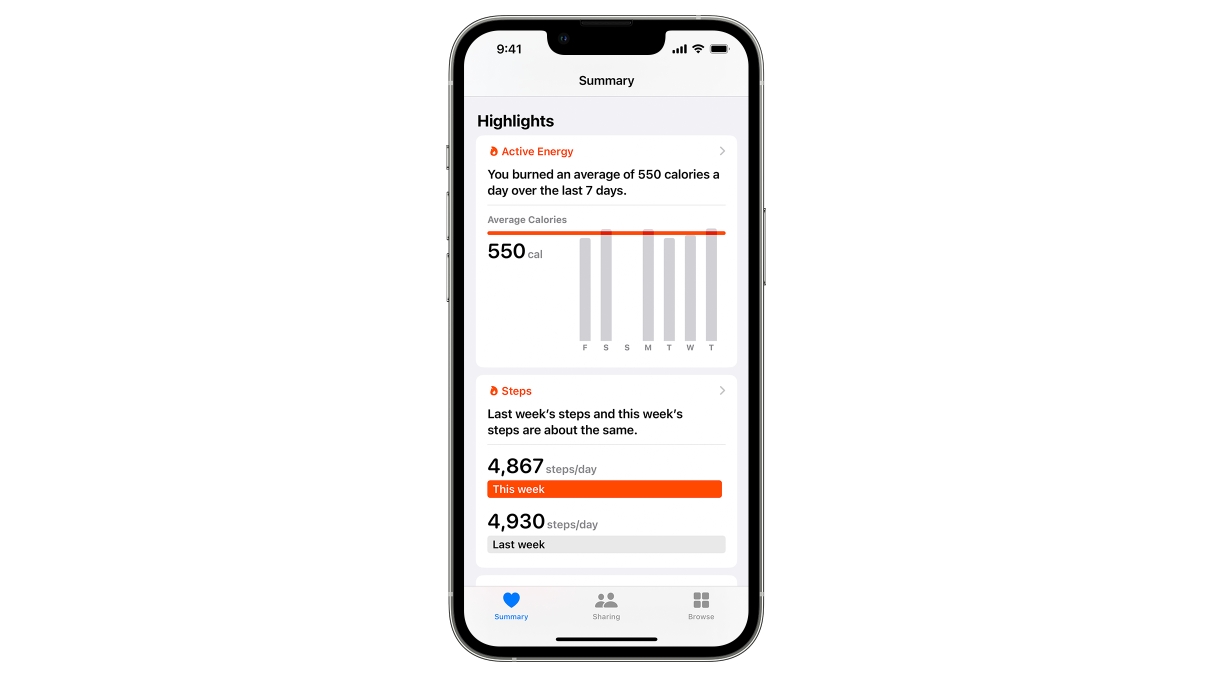

All the while, Apple is collecting the same kinds of health information that the best fitness trackers collect, such as heart rate, sleep, blood oxygen, menstrual cycles, location, step count, exercise statistics and much more, all of which feeds back into the main Health app. Like our medical records, this is potentially sensitive information, especially when it comes to metrics like cycle tracking. Thankfully, Apple takes its privacy obligations seriously and emphasizes its commitment to encrypting this information end-to-end, so even Apple can’t read it.

However, Apple must take the same care when artificial intelligence enters the mix. AI chatbots like ChatGPT are built on large language models, or LLMs, which are trained on vast amounts of content scraped from the internet so they can generate relevant answers to your prompts. These models constantly need more data to become more sophisticated, and that includes health data, which is big business.

Health data is extremely valuable to tech companies, which can use it to train AI for healthcare markets and to build advertising profiles with unprecedented accuracy. A 2020 research paper from the University of Essex, UK, on the commercialization of health data puts it this way:

“…the increasing production of digitized health data through the widespread use of electronic patient records, new health applications, and wearable technologies—coupled with advancements in computational power—have enabled the development of novel algorithmic and machine learning tools to improve diagnostics, treatment, and administration in health care.

“Training these algorithmic technologies requires access to huge datasets, resulting in increased demand for health data and fueling the emergence of a burgeoning global health data economy. With the booming AI health care market set to be worth US$6.6 billion by 2021, health data is no longer simply a source of information but a valuable asset used to generate intellectual property and economic profit.”

As that 2021 projection shows, the figures are a little out of date, but the point is even more pertinent four years on. Tech companies are hungry to get their hands on your health data and train their LLMs with it. Is Apple, one of the world’s most successful tech companies and one that is actively developing medical technology, any different?

Apple is likely to take privacy concerns seriously - for now

AI is already being used to improve “personalization” in many health apps, learning from the information you provide to better spot when your data falls outside your established norms. For example, if you spend weeks feeding your on-device AI your usual heart rate and temperature during sleep via a wearable, it’s better placed to detect when you have a fever coming on.
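To make the idea of a personal baseline concrete, here is a minimal sketch of one simple way it could work: compare tonight’s readings with your own history using z-scores, and flag a possible fever only when both heart rate and temperature sit well above your norm. This is a generic illustration, not Apple’s (or any vendor’s) actual method; the function names and the 2.0 threshold are hypothetical assumptions, and it is in no way a clinical tool.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Return (mean, standard deviation) for a list of nightly readings."""
    return mean(history), stdev(history)


def z_score(value, baseline):
    """How many standard deviations `value` sits above the wearer's mean."""
    mu, sigma = baseline
    if sigma == 0:
        raise ValueError("baseline history has no variation")
    return (value - mu) / sigma


def looks_feverish(hr_history, temp_history, tonight_hr, tonight_temp, threshold=2.0):
    """Hypothetical check: flag a night only when BOTH sleeping heart rate
    and temperature are well above this wearer's own baseline.
    The 2.0 threshold is an illustrative assumption, not a medical cut-off."""
    hr_z = z_score(tonight_hr, build_baseline(hr_history))
    temp_z = z_score(tonight_temp, build_baseline(temp_history))
    return hr_z > threshold and temp_z > threshold
```

Requiring both signals to rise is a deliberate choice in this sketch: a raised heart rate alone might just mean a late workout, so pairing it with temperature cuts down on false alarms.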

AI services that tap into existing health apps could also offer the kind of quick recall Google showed off at this year’s Google I/O. You could, for example, ask whether this week’s sleep was better than your average, and the AI would pull up that data in seconds.

Even if an AI chatbot has access to all your health information, it doesn’t immediately use that information to train itself. According to the UK’s National Cyber Security Centre, LLMs are trained, a model is generated, and then you query that model. So if you allow an LLM to access your medical information, you’re not instantly feeding it into the model’s training data, which is a relief.

However, according to the National Cyber Security Centre, “the query will be visible to the organization providing the LLM.” So in the case of ChatGPT, your query would be visible to OpenAI, while in the case of whatever form Apple’s AI takes, your query would be visible to Apple.

On the web page linked above, the Centre writes: “Those queries are stored and will almost certainly be used for developing the LLM service or model at some point. This could mean that the LLM provider (or its partners/contractors) are able to read queries, and may incorporate them in some way into future versions. As such, the terms of use and privacy policy need to be thoroughly understood before asking sensitive questions.”

Apple is very good at communicating with its users about data privacy. It published a white paper on its health data privacy policies last year and has, in some cases, refused government requests to unlock phones and Apple Watches. If Apple does reveal AI integration with Apple Watches and the Health app, we expect it to put safeguards in place that prevent your sensitive health data from being accessed by Apple itself, or by third-party apps and software built on Apple’s platform.

I just hope the temptation to use this information and technology for monetary purposes doesn’t result in a rollback of security guarantees in a few years’ time.