Meta is far closer to realizing mainstream augmented reality success than I think anyone expected. And it’s largely doing so by having its smart glasses do less, rather than more.

So far, the whole “computer on your face” field has been defined by extremes: pulling out all the stops to fake AR with the VR we have now; tirelessly developing the displays and lenses to make real AR possible, while still getting bogged down by stubborn limitations; and selling a whole bunch of headphones and external monitors shaped like glasses to gesture at something bigger. None of them have fully satisfied, except for the Ray-Ban Meta smart glasses.

I always assumed Meta’s partnership with EssilorLuxottica (the European megacorp that owns Ray-Ban) would be the ultimate source of its success. It’s hard to beat a pair of smart glasses that look like glasses people already like, connected to some of the most popular social media platforms in the world. The partnership has certainly helped Meta in its push for public acceptance of AR glasses, but how its glasses are succeeding where others, like Snap’s Spectacles, have failed comes down to a more complicated recipe than hiding tech in iconic frames.

Love it or hate it, the success of Ray-Ban Meta smart glasses has less to do with capturing POV photos and videos and more to do with the latest tech trend. Yep, AI is fueling the smart glasses’ success — and it might not be a gimmick.

Smart Glasses That Are Actually Cool

At first blush, the Ray-Ban Meta smart glasses might have a clunkier name than the first-generation Ray-Ban Stories, but they’re definitely better glasses.

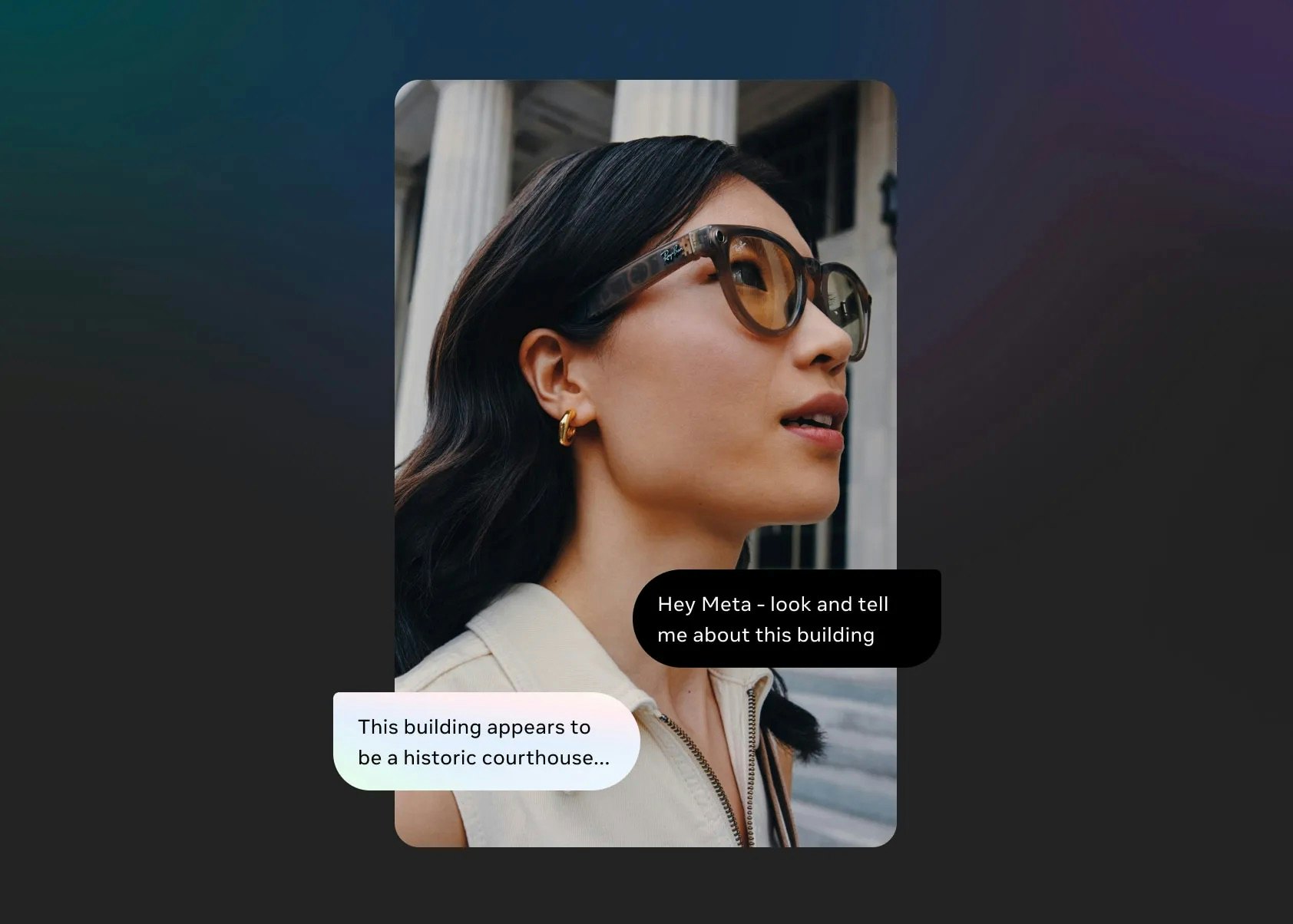

The frames themselves look thinner and closer to normal Ray-Bans in size. There are also a lot more style options to choose from, including multiple frame silhouettes, colors, and lenses. Critically, these improved looks have been paired with actually better specs. In this case, that means more and better microphones and speakers, a higher-quality 12-megapixel ultra-wide camera that’s closer to what you can get from a cheap smartphone, and easy access to Meta AI’s vision capabilities, which opens up even more possibilities.

Reviews when the glasses first came out were generally positive, complimenting everything from the design to their much better handle on the things the first-generation Stories could only claim to do. The Ray-Ban Meta smart glasses “can easily take the place of in-ear buds,” according to Wired, and the numerous mics make the glasses ideal for hands-free phone calls. The smart glasses aren’t perfect by any means, but The Verge writes that they “set a new bar for what smart glasses can and should be able to do.”

Other companies have tried this before, of course. Snap built a lot of buzz around its Spectacles camera glasses initially, mostly by making them look fun to wear, and by making the process of getting them in person entertaining too (the company had custom vending machines pop up in random locations). Snap’s efforts to sustain that virality with follow-ups failed, though, and even an experimental pair of proper AR glasses for developers hasn’t gone anywhere. Before Snap, you could say Bose got the other half of the Ray-Ban Meta smart glasses equation with its Bluetooth audio sunglasses. They didn’t look as cool or do much other than act as headphones, though. Meta’s ability to combine the two, and do it well, is what’s making the Ray-Ban Meta smart glasses a winner.

Meta doesn’t share sales numbers, but CEO Mark Zuckerberg has told investors that the smart glasses have been more popular than expected, prompting EssilorLuxottica to increase production to meet the high demand. Having the smart glasses available to look at and try on at thousands of retail stores probably made a big difference.

The Ray-Ban Meta smart glasses have been successful enough that Meta is responding internally, too. Reality Labs, the division of Meta responsible for its current hardware ambitions, reorganized into new “Metaverse” and “Wearables” divisions to better build the next version of the Ray-Ban Meta smart glasses, The Verge reports. Considering we know the company’s roadmap at one point included releasing smart glasses with an actual built-in display, Meta is not exactly playing it safe.

A Reason to Not Pull Out Your Phone

The Ray-Ban brand and look add a cool factor, and a few years of polish means the second-generation model works better, but I think it’s really the glasses’ newest feature, multimodal AI, that’s made them resonate with new users.

It’s useful to think about smart glasses, particularly in Meta’s case, the way you’d think about a smartwatch (not coincidentally, the company was working on its own). The purpose of the smart glasses, besides protecting your eyes from the sun and helping you see, is to stop you from taking out your phone, both for convenience and because Meta wants to own more of your time. And actually own it this time, not rent it from Apple or Google via a popular app like Instagram.

Having a feature that lets you look at something and ask questions about it is immediately compelling, regardless of how much you know about AI. And that’s not even the only thing Meta AI can do. Add that to a built-in camera that’s a substitute for your smartphone’s camera, microphones and speakers that can replace your wireless earbuds, and the ability to dictate texts and receive notifications, and the Ray-Ban Meta smart glasses keep your phone in your pocket for longer stretches of the day.

A lot of this potential hinges on Meta AI, which doesn’t compare to the flagship AI experiences from OpenAI or Google (not that any of them have proven to be consistently reliable), but that’s why you can fall back on the Meta Ray-Bans as just a pair of headphones or a camera. It’s those three sides working together, listening, capturing, and AI, that make the glasses a device you want to keep coming back to. Build on that, and smart glasses could be the hardware platform Meta has been looking to own.

The Next AirPods?

It’s hard to know what success for smart glasses looks like before the technology for them to fully replace your phone exists, but I think it could look something like the AirPods.

Almost everyone uses headphones at some point in their day, and Apple has been able to leverage the popularity of the iPhone and the removal of the headphone jack to build a whole AirPods business that is larger, revenue-wise, than many tech companies. If the Ray-Ban Meta smart glasses are a good enough replacement for Bluetooth headphones, particularly for calls, on top of all their extra features, I think they have a shot at becoming as ubiquitous as AirPods. Not everyone wears glasses, but everyone could benefit from wearing sunglasses, so why not throw in some computing?

The real unknown is where the Ray-Ban Meta smart glasses go next. Has Meta effectively proven that less is more when it comes to smart glasses? Will the addition of yet another screen (when Meta offers smart glasses with a display) scare people off? Whatever happens, Meta’s success seems like an important lesson. If you’re going to sell a platform of the future, it needs to be good enough to replace multiple parts of the ones we already have.