ChatGPT maker OpenAI unveiled Sora, its artificial intelligence engine for converting text prompts into video, way back in February 2024, but we had to wait until December 2024 for the full release, and even then it was only available in the US. So far, the results we've seen from it have been stunning.

Sora works exactly as you'd expect. You simply type in what kind of video you'd like to see and Sora does the rest. There are some limits on how long the video clips can be and how many you can generate. A ChatGPT Pro subscription gives you more credits and enables you to create longer clips without a watermark.

Here, we'll explain everything you need to know about OpenAI Sora: what it's capable of, how it works, and how to get it. The era of AI text-prompt filmmaking has finally arrived.

OpenAI Sora release date and price

In December 2024, Sora got its first public release as part of OpenAI's '12 Days of OpenAI' event. Using Sora requires a ChatGPT Plus, Team or Pro subscription, and it's currently only available in the US, though we expect a worldwide release at some point in 2025.

With a ChatGPT Plus ($20/£16/AU$32 p/month) or Team ($25/£20/AU$40 p/month per user) subscription, you get all the usual ChatGPT Plus benefits, as well as up to 50 priority Sora videos per month (equivalent to 1,000 credits). That figure is for 480p clips; you can also generate at 720p, but higher-resolution videos use up your credits faster. Clips can be up to 5 seconds long and come with a watermark.

With a ChatGPT Pro subscription ($200/£165/AU$325 p/month), you get unlimited access to GPT-4o and o1, as well as limited access to the latest o1 pro mode. In addition, you get up to 500 priority videos a month (equivalent to 10,000 credits), plus unlimited 'relaxed' videos, which take longer to generate than priority ones. Videos can be up to 1080p resolution and up to 20 seconds long, you can generate five videos concurrently, and you can download them without the watermark.
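The allowances above imply a baseline cost per priority video. Here's a quick back-of-the-envelope sketch using only the US prices and allowances quoted in this article. It assumes every priority video in a plan's allowance costs the same number of credits, and it ignores Pro's unlimited relaxed videos and OpenAI's separate pricing for longer or higher-resolution clips; the `TIERS` table and `per_video` helper are purely illustrative.

```python
import math

# US monthly price, priority-video allowance and equivalent credits, as quoted above.
# Assumption: every priority video in an allowance costs the same number of credits.
TIERS = {
    "Plus": {"usd": 20, "videos": 50, "credits": 1_000},
    "Team": {"usd": 25, "videos": 50, "credits": 1_000},  # price is per user
    "Pro": {"usd": 200, "videos": 500, "credits": 10_000},
}


def per_video(tier: dict) -> tuple[float, float]:
    """Return (credits, US dollars) per priority video: monthly totals / monthly videos."""
    return tier["credits"] / tier["videos"], tier["usd"] / tier["videos"]


for name, tier in TIERS.items():
    credits, usd = per_video(tier)
    print(f"{name}: {credits:.0f} credits, ${usd:.2f} per priority video")
```

On these figures, Plus and Pro both work out at 20 credits and $0.40 per priority video, so what the extra money buys is volume, resolution, clip length and watermark-free downloads rather than a cheaper per-video rate.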

With these limitations, it's pretty clear that you'll need the more expensive Pro subscription if you want to use Sora in any professional capacity.

What is OpenAI Sora?

You may well be familiar with generative AI models – such as Google Gemini for text and DALL-E for images – which can produce new content based on vast amounts of training data. If you ask ChatGPT to write you a poem, for example, what you get back will be based on lots and lots of poems that the AI has already absorbed and analyzed.

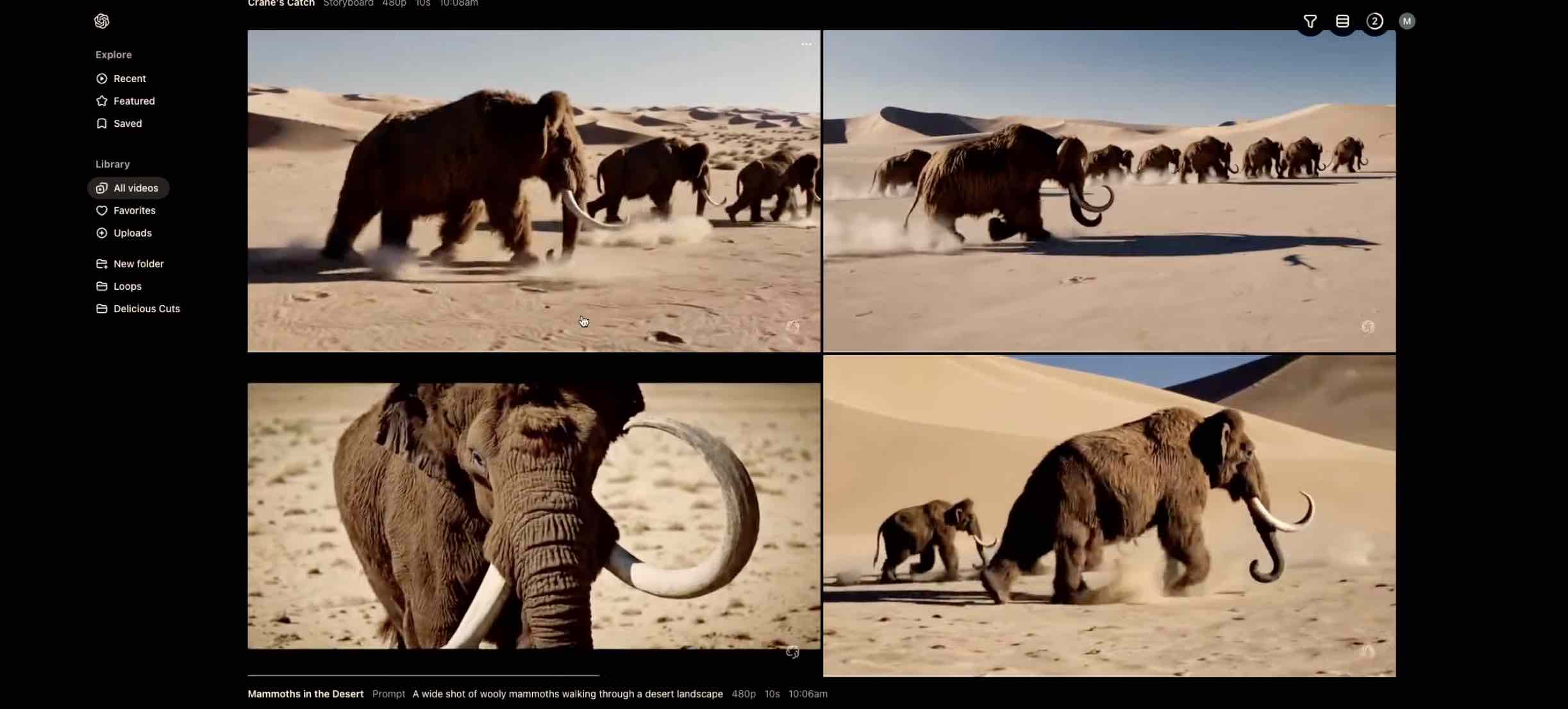

OpenAI Sora is a similar idea, but for video clips. You give it a text prompt, like "woman walking down a city street at night" or "car driving through a forest" and you get back a video. As with AI image models, you can get very specific when it comes to saying what should be included in the clip and the style of the footage you want to see.

To get a better idea of how this works, check out some of the example videos posted by OpenAI CEO Sam Altman – not long after Sora was unveiled to the world, Altman responded to prompts put forward on social media, returning videos based on text like "a wizard wearing a pointed hat and a blue robe with white stars casting a spell that shoots lightning from his hand and holding an old tome in his other hand".

How does OpenAI Sora work?

On a simplified level, the technology behind Sora is the same as that which lets you search for pictures of a dog or a cat on the web. Show an AI enough photos of a dog or cat, and it'll be able to spot the same patterns in new images; in the same way, if you train an AI on a million videos of a sunset or a waterfall, it'll be able to generate its own.

Of course there's a lot of complexity underneath that, and OpenAI has provided a deep dive into how its AI model works. It's trained on "internet-scale data" to know what realistic videos look like, first analyzing the clips to know what it's looking at, then learning how to produce its own versions when asked.

So, ask Sora to produce a clip of a fish tank, and it'll come back with an approximation based on all the fish tank videos it's seen. It makes use of what are known as visual patches, smaller building blocks that help the AI to understand what should go where and how different elements of a video should interact and progress, frame by frame.
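OpenAI's technical report on Sora describes compressing video into a lower-dimensional representation and then cutting it into 'spacetime patches', which play roughly the role that word tokens play in a text model. The sketch below skips the compression step and simply cuts a raw grayscale video, stored as nested lists of frames, rows and columns, into non-overlapping blocks that each span a few frames and a small square of pixels. The function name and patch sizes are hypothetical and chosen for illustration, not Sora's actual settings.

```python
# A video as frames x rows x columns of grayscale pixel values (one channel for simplicity).
Video = list[list[list[float]]]


def to_spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> list[list[float]]:
    """Cut a video into non-overlapping pt-frame x ph-row x pw-column blocks,
    flattening each block into one 'token' (a flat list of pixel values)."""
    t_len, h_len, w_len = len(video), len(video[0]), len(video[0][0])
    if t_len % pt or h_len % ph or w_len % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, t_len, pt):          # step through time
        for y0 in range(0, h_len, ph):      # then down the frame
            for x0 in range(0, w_len, pw):  # then across it
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches


# A 4-frame, 8x8-pixel toy video whose pixel values encode their own (t, y, x) position.
video = [[[float(t * 100 + y * 10 + x) for x in range(8)] for y in range(8)] for t in range(4)]
tokens = to_spacetime_patches(video, pt=2, ph=4, pw=4)
print(len(tokens), "patches of", len(tokens[0]), "values each")
```

Because each patch spans several frames as well as a region of the image, a single token carries information about how that part of the scene changes over time, which is what lets a model reason about motion rather than isolated still frames.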

Sora is based on a diffusion model: the AI starts with a 'noisy' output that looks like random static and cleans it up over a series of steps, at each stage predicting what the clean video should look like and removing a little more of the noise. And like many other generative AI models, Sora uses transformer technology (the last T in ChatGPT stands for Transformer). Transformers use a technique called attention to process heaps of data: they can work out which parts of what's being analyzed matter most and least, and figure out the surrounding context and relationships between these data chunks.
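To make the step-by-step denoising idea concrete, here's a minimal sketch of deterministic, DDIM-style diffusion sampling on a tiny list of numbers standing in for a video. It assumes a cosine noise schedule, and the hypothetical `toy_denoiser` simply returns a known target: in a real model, a trained network (a transformer, in Sora's case) would predict the clean signal from the noisy input and the text prompt. Only the structure of the loop is meant to be illustrative; this is not Sora's actual sampler.

```python
import math
import random


def alpha_bar(t: int, steps: int) -> float:
    """Cosine schedule: fraction of clean signal left at step t.
    1.0 at t=0 (clean), effectively 0.0 at t=steps (pure noise)."""
    return math.cos(0.5 * math.pi * t / steps) ** 2


def toy_denoiser(x_t: list[float], t: int, target: list[float]) -> list[float]:
    """Hypothetical stand-in for the trained network's 'clean signal' prediction.
    A real model infers this from x_t, t and the prompt; this toy just knows the answer."""
    return list(target)


def sample(target: list[float], steps: int = 50, seed: int = 0) -> list[list[float]]:
    """Start from Gaussian noise and denoise step by step (deterministic DDIM update).
    Returns every intermediate sample so the progression can be inspected."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise at step `steps`
    history = [x]
    for t in range(steps, 0, -1):
        a_t, a_prev = alpha_bar(t, steps), alpha_bar(t - 1, steps)
        x0_hat = toy_denoiser(x, t, target)
        # Noise implied by the current sample and the predicted clean signal.
        eps_hat = [(xi - math.sqrt(a_t) * ci) / math.sqrt(1.0 - a_t) for xi, ci in zip(x, x0_hat)]
        # Re-mix prediction and noise at the slightly lower noise level of step t-1.
        x = [math.sqrt(a_prev) * ci + math.sqrt(1.0 - a_prev) * ei for ci, ei in zip(x0_hat, eps_hat)]
        history.append(x)
    return history


def distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two samples."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


target = [0.5, -0.2, 0.9, 0.1]  # stands in for a (very tiny) clean video
history = sample(target)
for step in (0, 25, 50):
    print(f"step {step}: distance to target {distance(history[step], target):.3f}")
```

Each pass predicts the clean signal, works out the noise that must be mixed in with it, and then re-mixes the two at a slightly lower noise level; at the final step the noise weight reaches zero, so only the prediction remains.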

What we don't fully know is where OpenAI found its training data – it hasn't said which video libraries have been used to power Sora, though we do know it has partnerships with content databases such as Shutterstock. In some cases, you can see the similarities between the training data and the output Sora is producing.

What can you do with OpenAI Sora?

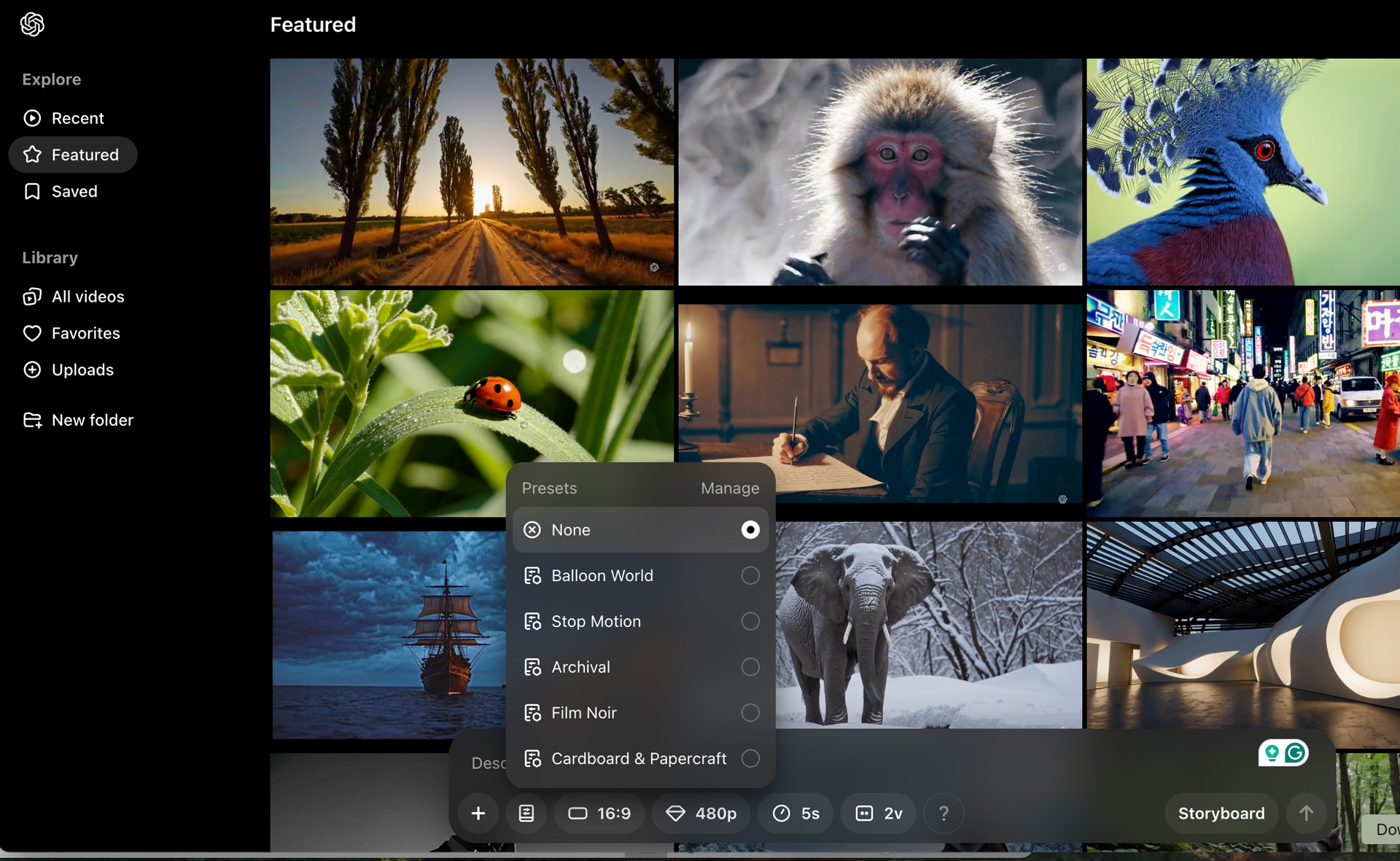

When you open Sora, you see a landing page showing a grid of other users' AI-generated videos. It's a great place to start if you're looking for inspiration, because you can use any of those videos as a starting point for your own creations via what OpenAI calls a 'remix'.

When remixing, you can choose a subtle, mild or strong remix, or a custom one. Alternatively, you can generate an original video from a prompt such as "Man dances on the moon wearing a Stetson hat".

Sora also has a Storyboard tool that helps you create and direct the kind of video you want: you can use it to specify exactly what should happen at particular frames along a timeline. There are also style presets, such as Film Noir and Stop Motion.

OpenAI does acknowledge some of Sora's current limitations. The physics doesn't always make sense, with people disappearing, transforming or blending into other objects. That's because Sora isn't mapping out a scene with individual actors and props; it's making an enormous number of calculations about where pixels should go from frame to frame.

In Sora videos people might move in ways that defy the laws of physics, or details – such as a bite being taken out of a cookie – might not be remembered from one frame to the next. OpenAI is aware of these issues and is working to fix them, and you can check out some of the examples on the OpenAI Sora website to see what we mean.

Despite those bugs, OpenAI hopes that further down the line Sora could evolve into a realistic simulator of physical and digital worlds. In the years to come, the Sora tech could be used to generate imaginary virtual worlds for us to explore, or let us fully explore real places that have been replicated in AI.

How can you access OpenAI Sora?

For now, you can't use Sora without a ChatGPT Plus, Team or Pro subscription, and you access it from sora.com. Sora is also only available in the US at the moment, so if you try to access it from another country, you'll see a message saying it's not available.

OpenAI has a history of eventually bringing its paid-for products to its free tier in some form, so we'd expect a version of Sora to arrive on the free tier of ChatGPT at some point, but that looks a long way off right now.

Is there anything else like Sora?

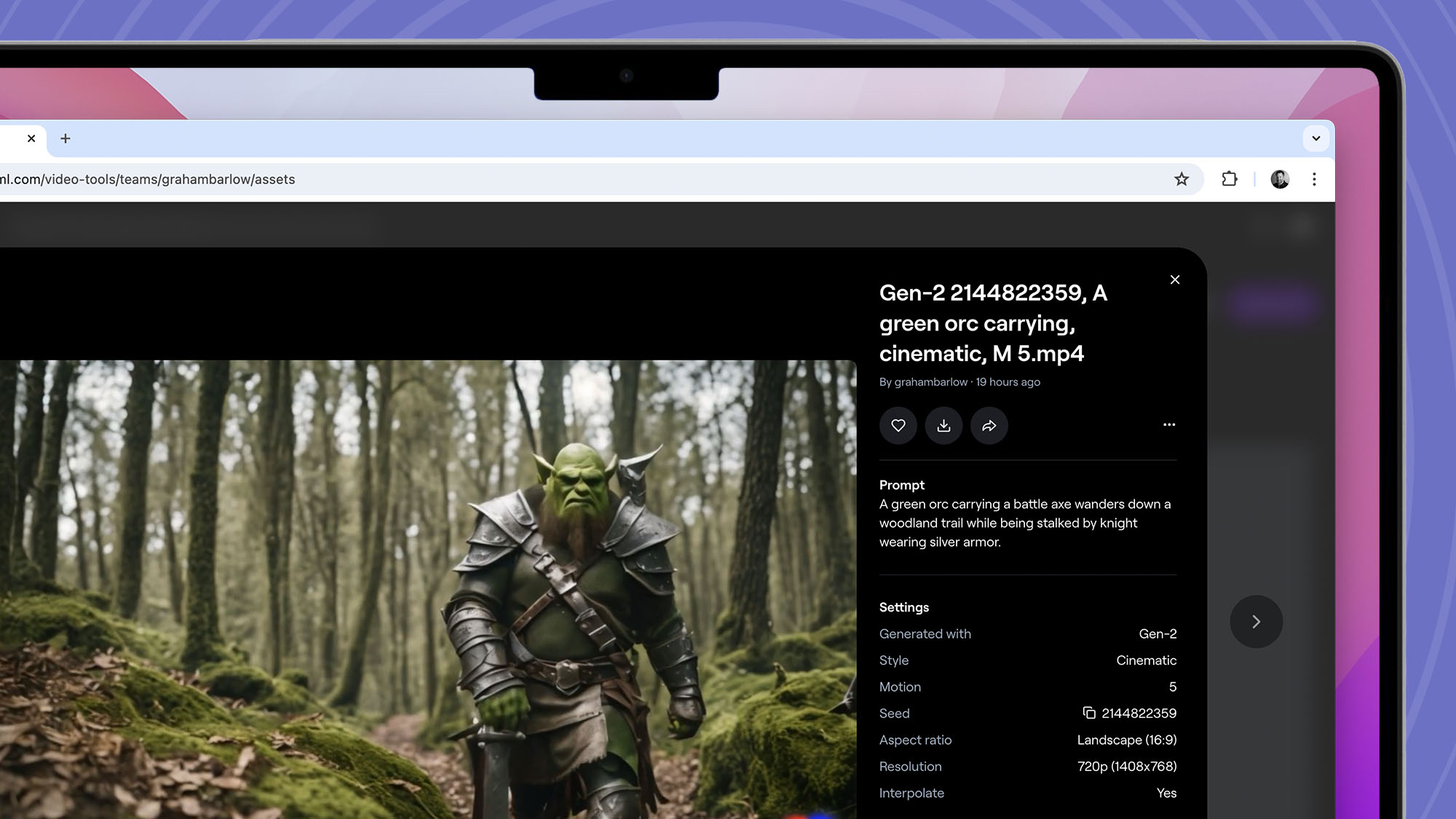

Since Sora was announced, several alternatives have been released. Runway is one of the highest-profile 'video from prompt' generators. Its Gen-3 Alpha model is only available to paying subscribers ($144 a year, or about £111 or AU$214), but its Gen-2 model is available to try for free. You get 525 credits a month, but you're limited to 4-second clips.

Google has its own prompt-to-video generator called Veo, which is expected to launch later this year. It certainly looks impressive but you need to join a waitlist to try it.

Another contender for the AI video generation throne is Pika. It's aimed at individuals, and its new Scene Ingredients feature lets you upload and customize characters, objects and settings.

Finally, there's Luma AI's Dream Machine. Again, you simply type in a text prompt and it generates a video. You can sign up for a free account, but due to high demand the free tier is limited to 20 generations a day. Luma also offers paid tiers all the way up to $399.99 (about £309 or AU$595) a month for 2,000 generations and the highest priority in the queue.