Humanoid robots could soon move in a far more realistic manner — and even dance just like us — thanks to a new software framework for tracking human motion.

Developed by researchers at UC San Diego, UC Berkeley, MIT, and Nvidia, "ExBody2" is a new technology that enables humanoid robots to perform realistic movements based on detailed scans and motion-tracked visualizations of humans.

The researchers hope that future humanoid robots could perform a much wider range of tasks by mimicking human movements more accurately. For example, the teaching method could help robots operate in roles requiring fine movements, such as retrieving items from shelves, or move with care around humans or other machines.

ExBody2 works by taking simulated movements based on motion-capture scans of humans and translating them into usable motion data for the robot to replicate. The framework lets the robot reproduce complex movements, which would allow robots to move less rigidly and adapt to different tasks without needing extensive retraining.

This is all taught using reinforcement learning, a subset of machine learning in which a model learns by trial and error to take the best action in any given situation. Outputs, simulated by researchers, are assigned positive or negative scores to "reward" the model for desirable outcomes, which here meant replicating motions precisely without compromising the bot's stability.
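
To make the scoring idea concrete, here is a minimal Python sketch of a reward that favors precise motion tracking and penalizes instability. It is an illustration only, not the reward used in ExBody2: the function name `tracking_reward`, the exponential error kernel, the torso-tilt check and all parameter values are assumptions chosen for the example.

```python
import math

def tracking_reward(robot_joints, reference_joints, tilt_rad,
                    sigma=0.5, tilt_limit=0.6, fall_penalty=1.0):
    """Score one simulated step (hypothetical reward, not the paper's).

    High when the robot's joint angles match the reference pose,
    reduced when the torso tilts past a chosen limit.
    """
    if len(robot_joints) != len(reference_joints):
        raise ValueError("joint vectors must have the same length")
    # Mean squared joint-angle error between robot and reference pose.
    err = sum((r - q) ** 2 for r, q in zip(robot_joints, reference_joints))
    err /= len(robot_joints)
    # Exponential kernel: zero error gives 1.0, large error approaches 0.
    imitation = math.exp(-err / sigma ** 2)
    # Assumed stability term: fixed penalty once tilt exceeds the limit.
    penalty = fall_penalty if abs(tilt_rad) > tilt_limit else 0.0
    return imitation - penalty

# A perfectly tracked, upright pose scores highest.
print(tracking_reward([0.1, 0.2], [0.1, 0.2], tilt_rad=0.0))
# The same pose while tipping over is scored lower.
print(tracking_reward([0.1, 0.2], [0.1, 0.2], tilt_rad=1.0))
```

In this sketch, a policy that copies the motion exactly but topples is scored no better than one that barely moves, which is the trade-off between precision and stability described above.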

The framework can also take short motion clips, such as a few seconds of dancing, and synthesize new frames of movement for reference, to enable robots to complete longer-duration movements.

Dancing with robots

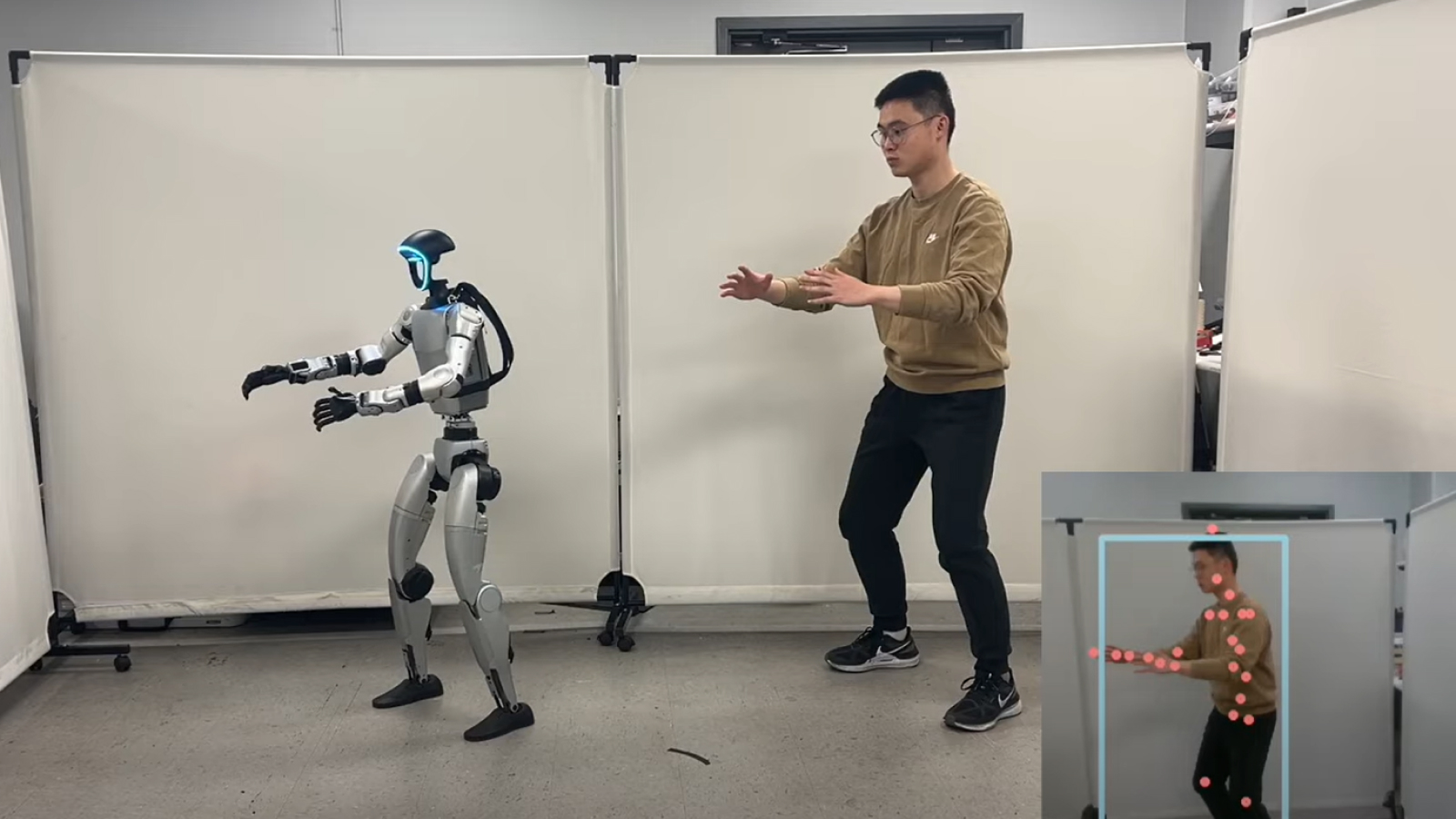

In a video posted to YouTube, a robot trained through ExBody2 dances, spars and exercises alongside a human subject. Additionally, the robot mimics a researcher's movement in real time, using additional code titled "HybrIK: Hybrid Analytical-Neural Inverse Kinematics for Body Mesh Recovery" developed by the Machine Vision and Intelligence Group at Shanghai Jiao Tong University.

At present, ExBody2's dataset is largely focused on upper-body movements. In a study uploaded Dec. 17, 2024, to the preprint server arXiv, the researchers behind the framework explained that this is due to concerns that introducing too much movement in the lower half of the robot would cause instability.

"Overly simplistic tasks could limit the training policy's ability to generalize to new situations, while overly complex tasks might exceed the robot's operational capabilities, leading to ineffective learning outcomes," they wrote. "Part of our dataset preparation, therefore, includes the exclusion or modification of entries that featured complex lower body movements beyond the robot's capabilities."
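
The exclusion step the researchers describe can be pictured with a short Python sketch. This is a hypothetical illustration, not the team's curation code: the clip format (a dict with a `lower_body_frames` list of joint angles in radians), the function name `filter_clips` and the 1.2-radian limit are all assumptions, and the sketch only excludes clips, whereas the study also mentions modifying them.

```python
def filter_clips(clips, lower_body_limit_rad=1.2):
    """Keep clips whose lower-body joint angles stay within the limit.

    Hypothetical format: each clip is a dict with a "name" and a
    "lower_body_frames" list, one list of joint angles per frame.
    """
    kept = []
    for clip in clips:
        # Largest absolute lower-body joint angle across all frames.
        peak = max(
            (abs(a) for frame in clip["lower_body_frames"] for a in frame),
            default=0.0,
        )
        if peak <= lower_body_limit_rad:
            kept.append(clip)
    return kept

clips = [
    {"name": "wave", "lower_body_frames": [[0.1, -0.2], [0.15, -0.1]]},
    {"name": "high_kick", "lower_body_frames": [[0.3, 0.2], [2.1, 0.4]]},
]
print([c["name"] for c in filter_clips(clips)])  # ['wave']
```

A single threshold on joint angles is a deliberately crude stand-in for "beyond the robot's capabilities"; a real filter would likely also consider speed, balance and contact with the ground.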

The researchers' dataset contains more than 2,800 movements, with 1,919 of these coming from the Archive of Motion Capture As Surface Shapes (AMASS) dataset. This is a large dataset of human motions, including more than 11,000 individual human movements and 40 hours of detailed motion data, intended for non-commercial deep learning, in which a neural network is trained on vast amounts of data to identify or reproduce patterns.

Having proven ExBody2's effectiveness at replicating human-like movement in humanoid robots, the team will now turn to the problem of achieving these results without having to manually curate datasets to ensure only suitable information is available to the framework. The researchers suggest that, in the future, automated dataset collection will help smooth this process.