Toxicity runs rampant in many gaming communities. It's an accepted joke in many circles that Call of Duty voice chat is a place for people to bully each other. In team-based shooters such as Valorant, vitriolic voice chat is unavoidable for anyone who wants to play effectively. Riot Games, the publisher behind Valorant, is hoping to combat voice chat toxicity with a new tool that evaluates voice audio data tied to user-generated reports. What could possibly go wrong?

The intended goal is to make the process of reporting and penalizing players easier and more accurate. While combating toxicity within a game’s community is a worthwhile endeavor, Riot’s voice evaluation update is just the latest attempt from the games industry to solve a nuanced cultural issue with a single piece of tech.

The solution — In a June 24, 2022, blog post from Riot, the company announced the implementation of the Valorant voice evaluation update. Beginning on July 13, a background launch of the system will start in North America “to help train our language models and get the tech in a good enough place for a beta launch later this year.”

The hope is that the new system will “provide a way to collect clear evidence that could verify any violations of behavioral policies.” In other words, the system could theoretically confirm or refute claims of abusive chat. “This would also help us share back to players why a particular action resulted in a penalty,” the description reads.

The current system to deal with comms-related toxicity relies on player feedback. The system focuses on players who are repeatedly reported and how frequently these reports happen. A Riot blog post from February 2022 explains that “voice chat abuse is significantly harder to detect compared to text (and often involves a more manual process).”

This difficulty is what led to the new voice evaluation system. It is being implemented to cut down on the manual process, making action against toxic behavior quicker and more widespread. While this sounds good in theory, it doesn’t account for the inherent flaws in building a tool to regulate behavior.

The problem — Systems built to categorize and regulate people’s behavior are often created with bias, be it intentional or not. Facial recognition systems, for example, have repeatedly shown racial bias. The push to implement better regulation through technology comes from an admirable place, but it runs into problems if the systems themselves are not regulated (oh the irony).

Riot seems to understand there will be some growing pains with the system, hence the slow rollout: “We know that before we can even think of expanding this tool, we’ll have to be confident it’s effective, and if mistakes happen, we have systems in place to make sure we can correct any false positives (or negatives for that matter),” reads the initial blog post.

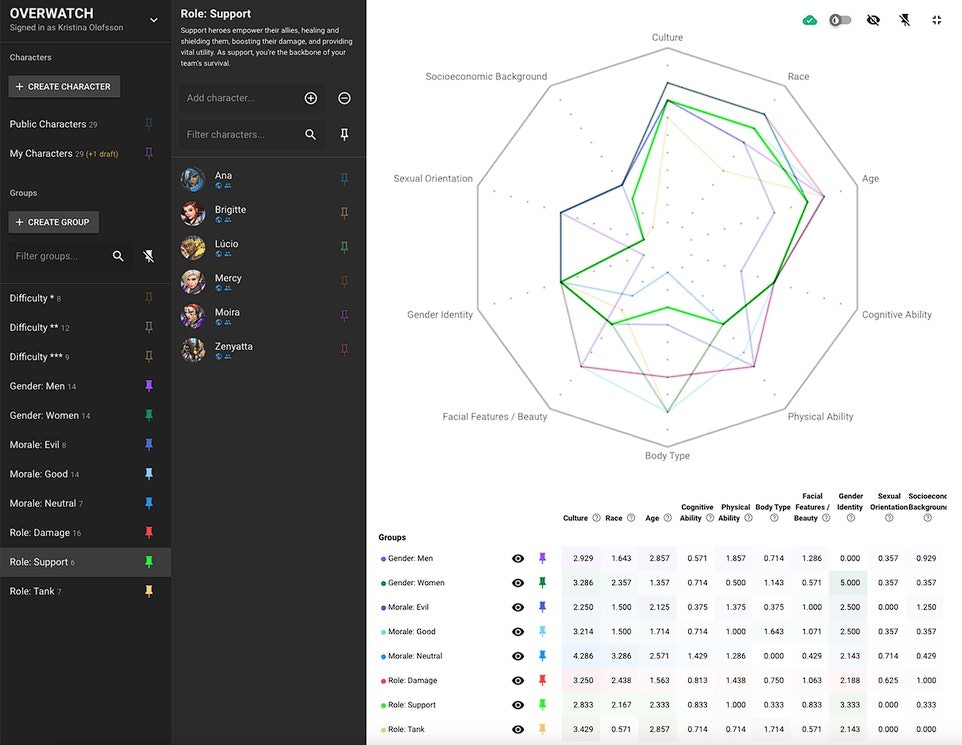

Perceived problems in the games industry are often met with a technological solution. Activision Blizzard has faced many criticisms in recent years, one of which concerns the lack of diversity within the company. To counter that criticism, the company announced that it was using a “diversity space tool” to create more diverse characters for future games. The initial post said the tool was being used in Call of Duty and Overwatch 2 and featured pictures showing how the tool measured things like sexual orientation, culture, and body type.

Activision Blizzard later updated the post with an introduction stating the tool is not being used in any active game development. Pictures of the tool and its data were also removed. A company known for its poor treatment of marginalized workers attempted to solve its diversity image problem by creating a tool instead of listening to diverse developers.

When talking to Inverse, Xbox’s Director of Accessibility Anita Mortaloni said, “There’s no one tool out there that is going to tell you everything and magically going to make your game accessible.” The same logic applies to any problem in game dev. There isn’t a tool that can solve problems like this unless devs work with the community itself.

Technological fixes may help in communities as large as Valorant’s, but they are not a solution on their own.

They are tools. And tools need someone to use them, to guide them. When game developers turn to tools alone to solve their problems, they ignore the human element of the community, and, in turn, such solutions wind up feeling a bit hollow and inhumane.