Tech companies should move ahead with controversial technology that scans for child abuse imagery on users’ phones, the technical heads of GCHQ and the UK’s National Cyber Security Centre (NCSC) have said.

So-called “client-side scanning” would involve service providers such as Facebook or Apple building software that monitors communications for suspicious activity without needing to share the contents of messages with a centralised server.

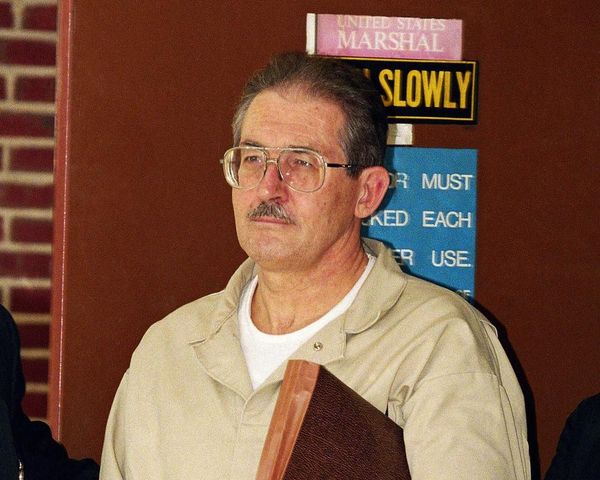

Ian Levy, the NCSC’s technical director, and Crispin Robinson, the technical director of cryptanalysis – codebreaking – at GCHQ, said the technology could protect children and privacy at the same time.

“We’ve found no reason why client-side scanning techniques cannot be implemented safely in many of the situations one will encounter,” they wrote in a discussion paper published on Thursday, which the pair said was “not government policy”.

They argued that opposition to proposals for client-side scanning – most famously a plan from Apple, now paused indefinitely, to scan photos before they are uploaded to the company’s image-sharing service – rested on specific flaws, which were fixable in practice.

They suggested, for instance, requiring the involvement of multiple child protection NGOs, to guard against any individual government using the scanning apparatus to spy on civilians. They also proposed using encryption so that the platform itself never sees images passed to humans for moderation; those images would be reviewed only by the same NGOs.
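To illustrate how the multi-NGO safeguard might work, here is a minimal sketch. It assumes that each NGO supplies a list of fingerprints of known abuse images, and that only fingerprints vouched for by at least two independent NGOs reach the on-device list. The threshold, the NGO names and the function names are hypothetical and are not taken from the Levy and Robinson paper. The sketch also swaps in an exact SHA-256 digest for simplicity, whereas deployed systems use perceptual hashes so that resized or re-encoded copies still match.

```python
import hashlib
from collections import Counter


def fingerprint(image_bytes: bytes) -> str:
    # Simplification (assumption): exact SHA-256 digest rather than a perceptual hash.
    return hashlib.sha256(image_bytes).hexdigest()


def build_match_list(ngo_lists: dict[str, set[str]], min_sources: int = 2) -> set[str]:
    """Keep only fingerprints supplied by at least `min_sources` distinct NGOs.

    Each NGO's list is a set, so a fingerprint is counted once per NGO.
    A single source (for example, one pressured by a government) cannot
    add a target on its own. The threshold of 2 is a hypothetical choice.
    """
    counts = Counter(fp for fps in ngo_lists.values() for fp in fps)
    return {fp for fp, n in counts.items() if n >= min_sources}


def scan_before_send(image_bytes: bytes, match_list: set[str]) -> bool:
    # Runs on the user's device before the message is end-to-end encrypted.
    # In this sketch only a yes/no result is produced; the image itself is not shared.
    return fingerprint(image_bytes) in match_list


if __name__ == "__main__":
    known = fingerprint(b"known-image")
    injected = fingerprint(b"political-leaflet")
    lists = {
        "ngo_a": {known},            # hypothetical NGO
        "ngo_b": {known, injected},  # one source tries to slip in an extra target
    }
    match_list = build_match_list(lists)
    print(scan_before_send(b"known-image", match_list))        # True
    print(scan_before_send(b"political-leaflet", match_list))  # False
```

Because the injected fingerprint appears on only one NGO's list, it never reaches the device, and matching against it is impossible. Raising `min_sources` trades coverage for stronger protection against any single list being tampered with.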

“Details matter when talking about this subject,” Levy and Robinson wrote. “Discussing the subject in generalities, using ambiguous language or hyperbole, will almost certainly lead to the wrong outcome.”

The paper was welcomed by child protection groups. Andy Burrows, the head of child safety online policy at the NSPCC, said it was an “important and highly credible intervention” that “breaks through the false binary that children’s fundamental right to safety online can only be achieved at the expense of adult privacy”.

“It’s clear that legislation can incentivise companies to develop technical solutions and deliver safer and more private online services,” he added.

But critics say the proposals undercut the benefits of end-to-end encryption, and that the focus should instead be on non-technical solutions to child abuse. Alec Muffett, a cryptography expert who led Facebook’s efforts to encrypt Messenger, said the paper “entirely ignores the risks of their proposals endangering the privacy of billions of people worldwide”.

Muffett said: “It’s weird that they frame abuse as a ‘societal problem’ yet demand only technological solutions for it. Perhaps it would be more effective to use their funding to adopt harm-reduction approaches, hiring more social workers to implement them?”

The discussion paper from Levy and Robinson is not the first time the pair have waded into controversial policy areas. In 2018 they argued for a so-called “ghost protocol” solution to encryption, where GCHQ would be able to silently add itself as another recipient of messages sent to and from a target device.

“It’s relatively easy for a service provider to silently add a law enforcement participant to a group chat or call,” they wrote. “This sort of solution seems to be no more intrusive than the virtual crocodile clips that our democratically elected representatives and judiciary authorise today.”