Update: On September 20, 2024, following publication, the UK Information Commissioner's Office (ICO) confirmed that LinkedIn has also halted AI training for UK users.

If you are on LinkedIn, you might have come across users complaining about the platform using their data to train a generative AI tool without their consent.

People began noticing this change in the settings on Wednesday, September 18, when the Microsoft-owned social media platform started training its AI on user data before updating its terms and conditions.

LinkedIn certainly isn't the first social media platform to begin scraping user data to feed an AI tool without asking for consent beforehand. What's curious about the LinkedIn AI saga is the decision to exclude the EU, EEA (Iceland, Liechtenstein, and Norway), and Switzerland – the UK was added to that list on Friday, after the Information Commissioner's Office (ICO) raised concerns. Is this a sign that only EU-like privacy laws can fully protect our privacy?

The EU backlash against AI training

Before LinkedIn, both Meta (the parent company behind Facebook, Instagram, and WhatsApp) and X (formerly known as Twitter) started to use their users' data to train their newly launched AI models. While these social media giants initially extended the plan to European countries as well, they had to halt their AI training after encountering strong backlash from EU privacy institutions.

Let's go in order. The first to test the waters were Facebook and Instagram back in June. According to their new privacy policy – which came into force on June 26, 2024 – the company can now use years of personal posts, private images, or online tracking data to train its Meta AI.

Last week, Meta admitted to having used people's public posts to train AI models as far back as 2007.

After Austria's digital rights advocacy group Noyb filed 11 privacy complaints with various Data Protection Authorities (DPAs) in Europe, the Irish DPA requested that the company pause its plans to use EU/EEA users' data.

Meta was said to be disappointed about the decision, dubbing it a "step backward for European innovation" in AI, and decided to cancel the launch of Meta AI in Europe, not wanting to offer "a second-rate experience."

Something similar occurred at the end of July when X automatically enabled the training of its Grok AI on all its users' public information – European accounts included.

Just a few days after the launch, on August 5, consumer organizations filed a formal privacy complaint with the Irish Data Protection Commission (DPC), arguing that X's AI tool violated GDPR rules. The Irish Court has now dropped the privacy case against X as the platform agreed to permanently halt the collection of EU users' personal data to train its AI model.

While tech companies have often criticized the EU's strong regulatory approach toward AI – a group of organizations even recently signed an open letter asking for better regulatory certainty on AI to foster innovation – privacy experts have welcomed the proactive approach.

The message is strong – Europe isn't willing to sacrifice its strong privacy framework.

"So, LinkedIn joined other predatory platform intermediaries in grabbing everyone's user-generated content for generative AI training by default – except in GDPR land. Seems like the GDPR and European data protection regulators are really the only effective antidote here globally." (pic.twitter.com/8shCd5AWRU, September 18, 2024)

Although LinkedIn has now updated its terms of service, the silent move attracted strong criticism around privacy and transparency outside Europe. In fact, it is up to you to actively opt out if you don't want your information and posts to be used to train the new AI tool.

As mentioned earlier, both X and Meta used similar tactics when feeding their own AI models with users' personal information, photos, videos, and public posts.

Nonetheless, according to some experts, the fact that other companies in the industry act without transparency doesn't make it right to do so.

"We shouldn't have to take a bunch of steps to undo a choice that a company made for all of us," tweeted Rachel Tobac, ethical hacker and CEO of SocialProof Security. "Organizations think they can get away with auto opt-in because 'everyone does it'. If we come together and demand that organizations allow us to CHOOSE to opt-in, things will hopefully change one day."

How to opt out of LinkedIn AI training

As explained in the LinkedIn FAQs (which, at the time of writing, were updated one week ago): "Opting out means that LinkedIn and its affiliates won’t use your personal data or content on LinkedIn to train models going forward, but does not affect training that has already taken place."

In other words, the data already scraped cannot be recovered, but you can still prevent the social media giant from using more of your content in the future.

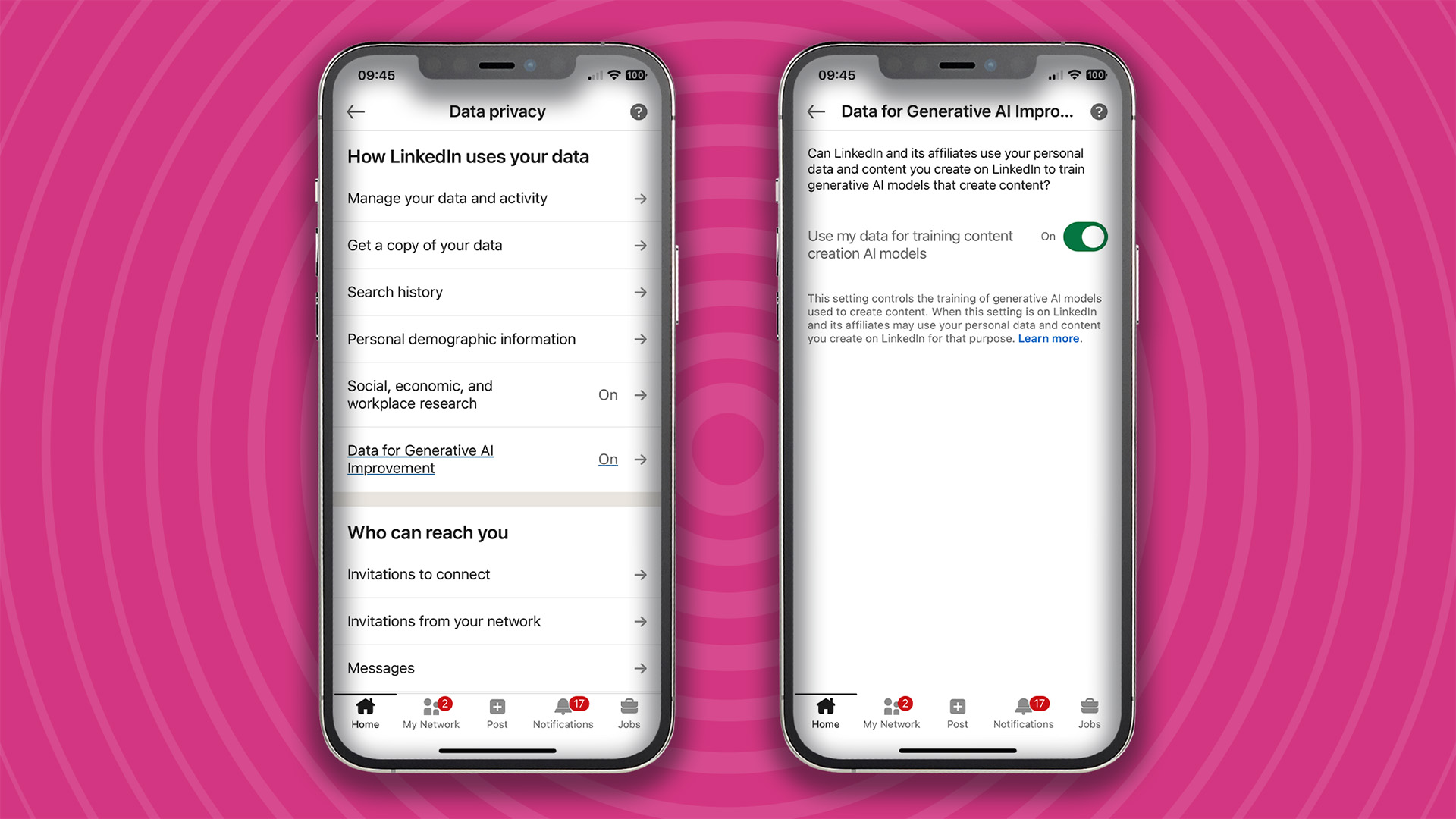

Doing so is simple. All you need to do is head to the Settings menu and select the Data Privacy tab. As the screenshot shows, once there you'll see that the Data for Generative AI improvement feature is On by default. At this point, you need to click on it and switch off the toggle button on the right.