To me, the 16GB Nvidia RTX 4060 Ti feels like a truly cynical graphics card, an almost petulant one, even. The green team never wanted to make it, doesn't want people to review it, and has already gone to great lengths to tell journalists that it performs exactly the same as the $100 cheaper RTX 4060 Ti 8GB.

Yet this unwanted graphics card is reportedly launching today… with precisely zero fanfare. As of right now, the expected day of launch, there are but a handful listed on Best Buy and Newegg, but none actually available. Not even Nvidia's own store lists any to buy.

Unlike every other GPU in the RTX 40-series family, Nvidia isn't sending cards out to reviewers, or even helping seed manufacturers' cards. For their part manufacturers themselves don't seem to want to sample cards, either. Nvidia has likened the situation to the 12GB update to the RTX 3080, where it similarly had little interest in having the cards actually tested.

In the end Nvidia doesn't seem hugely bothered whether you buy its new card or not. It was never supposed to be on the Ada agenda, but if you want to spend another $100 on an extra 8GB of GDDR6 for your mid-range card it's more than happy to facilitate.

The 16GB RTX 4060 Ti then feels purely like a reaction to a PC gaming community railing against Nvidia's initial decision to launch the RTX 4060 Ti with 8GB, a community contending that such a spec will restrict the longevity of the mid-range GPU in the near future. Hell, even AMD joined in.

The specific contention is that launching a $400 graphics card with 8GB of VRAM in 2023 will see the GPU's performance limited by near-future games that swallow up more than that amount of graphics memory. We've already seen some examples launching with horrible performance on existing 8GB cards, such as The Last of Us, Jedi: Survivor, and Hogwarts Legacy, even at 1080p, so the expectation is that this situation will only become more common as time and system requirements move on.

Whether it's as inevitable as some folk expect, I'm not sure, especially given that the VRAM capacities of the consoles aren't going to change, and developers still need to code for their install bases, not fantasy system specs.

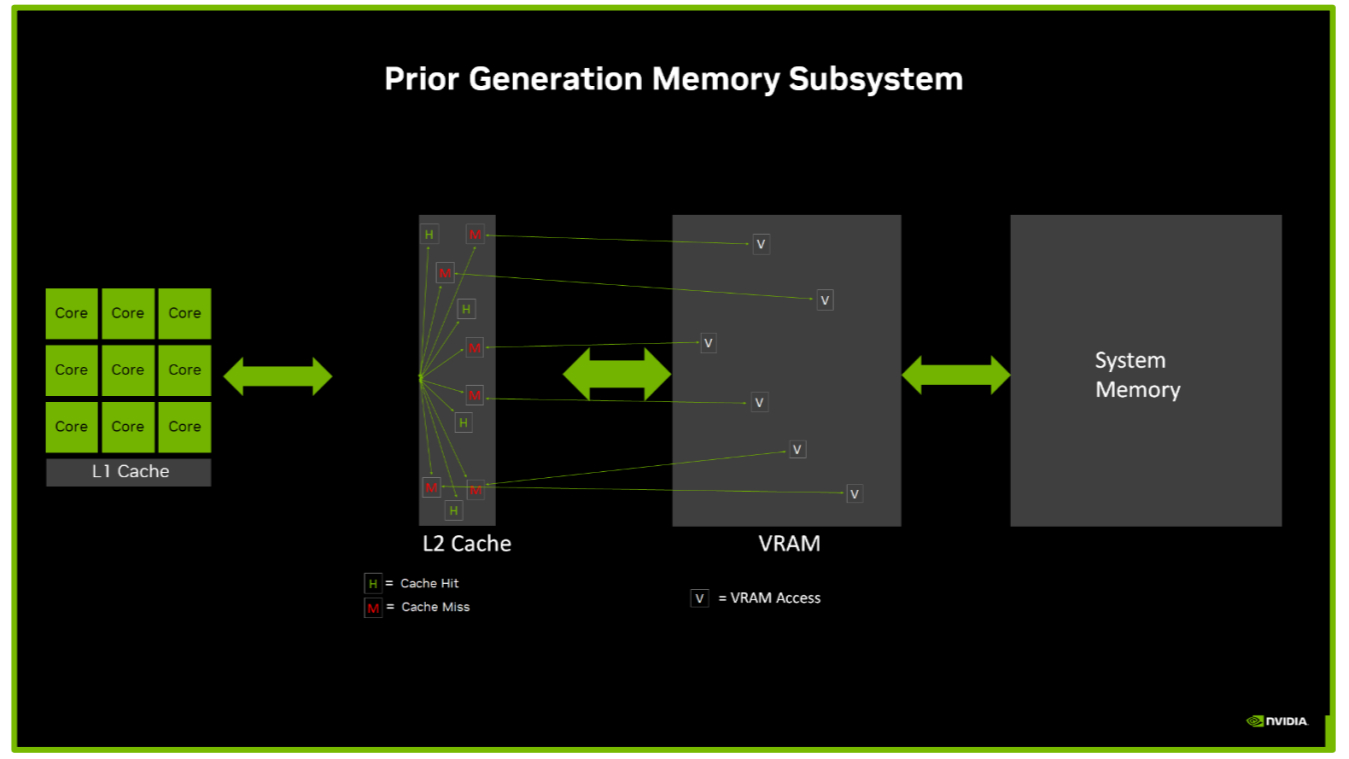

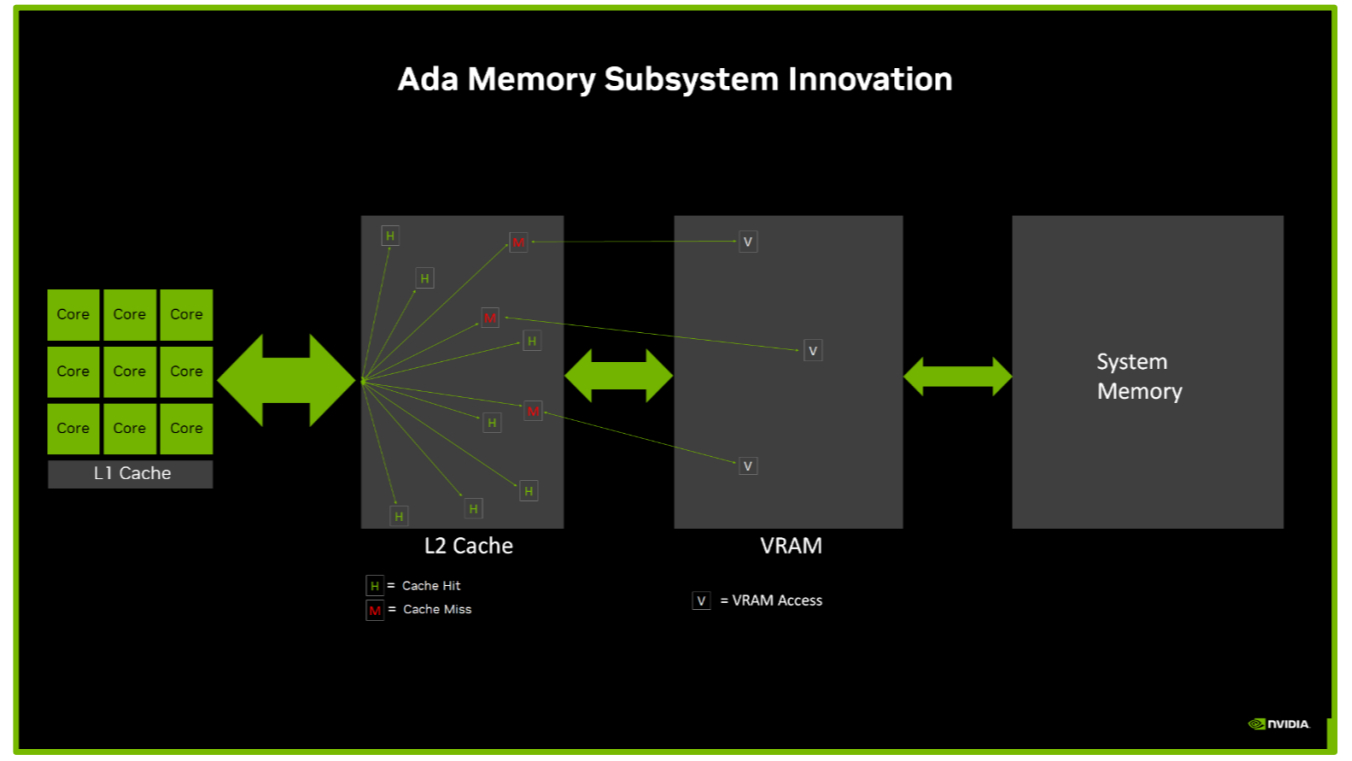

Nvidia itself suggests the problem is one born of poor game optimisation on PC, and that later patches have ironed out these particular performance issues in the past. It's also claiming the work it's done to boost the L2 cache on its GPUs reduces the number of times they have to dip into the VRAM, which is why they don't need as large a memory bus as previous generations.

That's not necessarily going to help out when you hit on game data that exceeds 8GB in scale—and the GPU then has to dip into super slow system memory, dragging performance way down—but at least it addresses one of the memory issues people have had with the recent RTX 40-series cards.

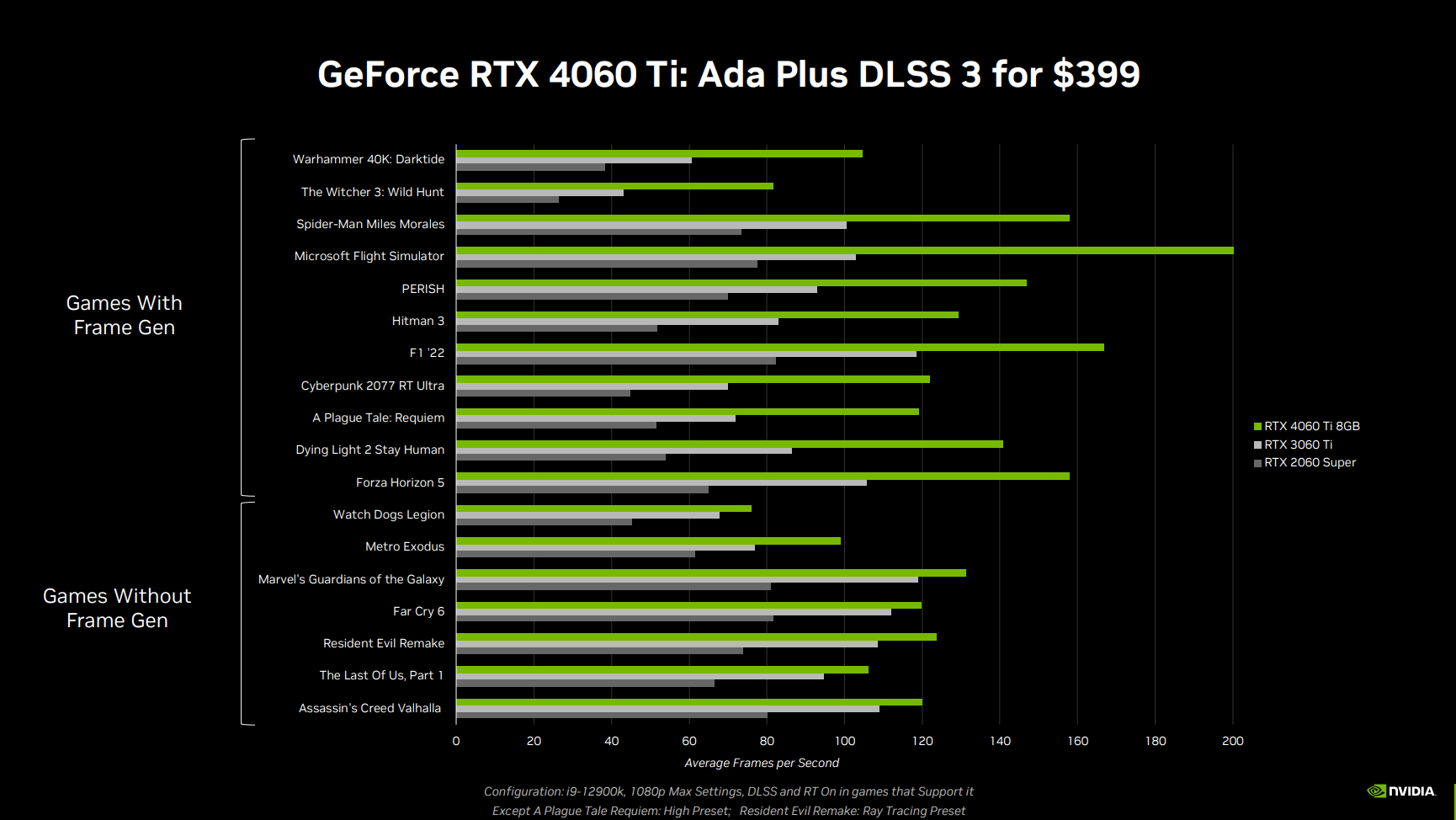

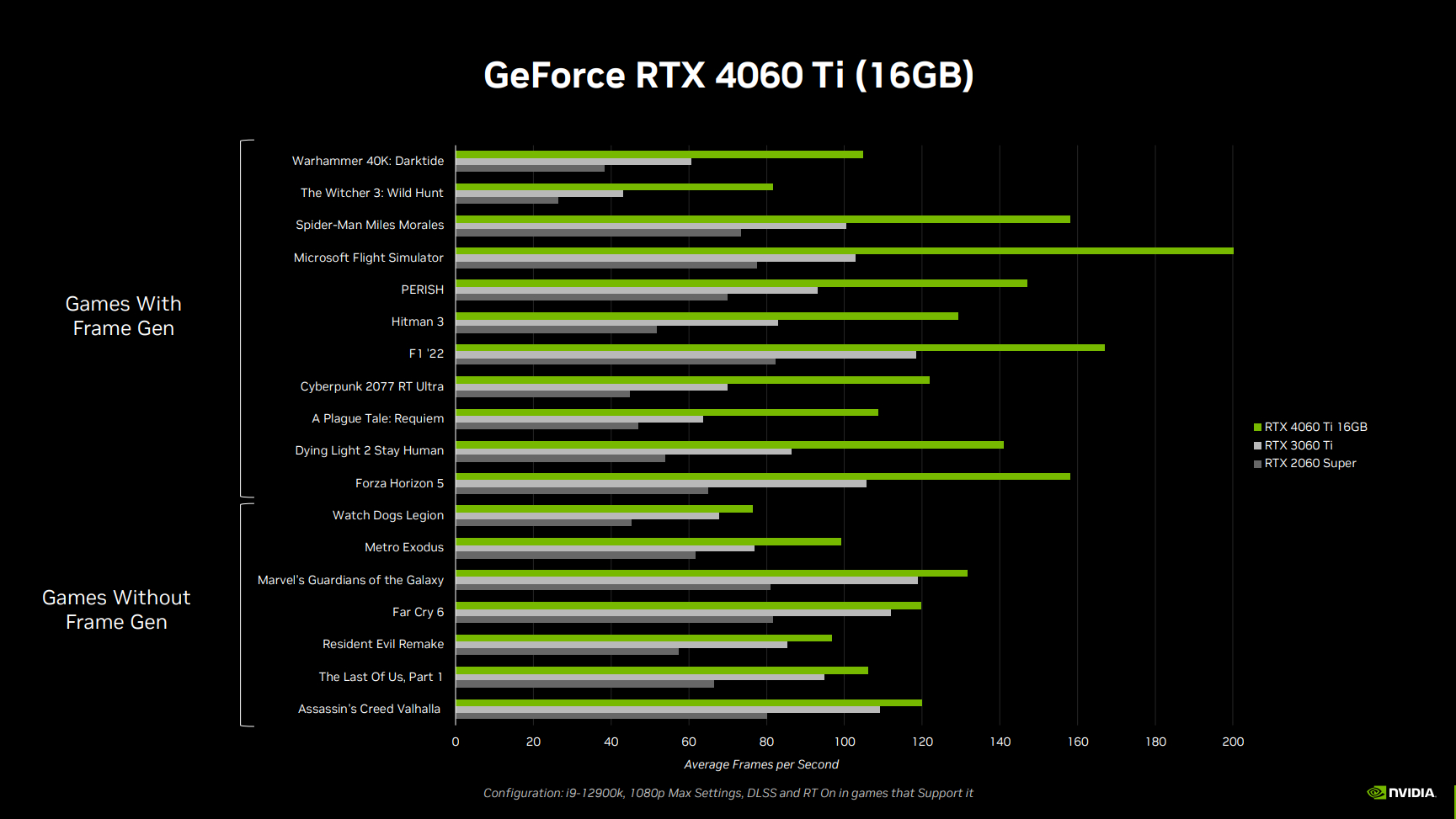

Nvidia also has the magic of upscaling and Frame Generation to salve potential performance woes. Nvidia might also have a point on the optimisation front, and about subsequent updates. During a 1:1 briefing with Nvidia around the launch of the 8GB RTX 4060 Ti I was taken through a bunch of benchmarks the green team ran the new Ada card through; pretty much all high settings and modern games.

The Last of Us was a title my attention was explicitly drawn to given that it was, to that point, the poster child for the argument against 8GB VRAM in a modern card.

And it performed fine on the RTX 4060 Ti 8GB with the latest game patches installed. Admittedly, it was not far ahead of the RTX 3060 Ti with DLSS and ray tracing enabled, but that's more about the GPU itself than the memory capacity. Still, it was shown batting above 100 fps at 1080p. With the 16GB card, however, the performance in The Last of Us was shown as identical.

And this is Nvidia highlighting that parity, after it has already pointed out that it's charging another $100 for the higher capacity card because it now has to stick GDDR6 on both sides of the PCB.

Nvidia is confident the extra VRAM does nothing for gaming performance and seemingly also confident that releasing a 16GB version via its manufacturing partners—it's not wasting a Founders Edition on this li'l stepchild—will only highlight it was right to go with just 8GB all along.

History, however, will be the judge of that, because we just don't know how this is all going to play out over this generation. What we can be pretty confident of is that, however the game optimisation argument shakes out, there will be more games that launch in a state where your 8GB card struggles at higher settings, and how long you have to wait for them to be fixed will be different each time.

But Nvidia never had plans to go for a 16GB card at this level, yet the complaints have been listened to, and the response is kinda 'well, screw you. If you want it, you pay for it.'

Sure, it sucks for the board partners spending money on making a 16GB GPU that's far too close to the excellent RTX 4070 in price and too far off in terms of performance, but there's a part of me that can't help but admire the sheer cynical bloodymindedness of this approach. The card is like the embodiment of an exasperated 'FINE, here you go!'

It sure feels like a lot of effort to go to in order to prove a point, but I'm not a multi-billion-dollar (temporarily trillion-dollar) company with AI money to burn. Maybe if I were, I wouldn't be beyond sticking a silicon middle finger up to the naysayers, either.