After years of promises, Tesla’s Robotaxi is finally here. Well, sort of. During last night’s event in Hollywood, CEO Elon Musk boasted about the automaker’s new two-seater EV as being able to drive autonomously wherever regulators allow it, starting with California and Texas. But before that happens "by the end of 2027," the company's current portfolio of passenger vehicles will allegedly be capable of unsupervised Full Self-Driving "starting next year."

This wouldn't be the first time Musk said something would happen "next year." So we might have to wait six months until we see the first driverless Tesla on the road, or a few years might pass. With Musk and Tesla, we just don’t know.

That was the theme of the whole Cybercab event. It left a lot of questions unanswered and people’s imaginations running wild. Things like charging speed, battery size and driving range were conveniently left out of the presentation. Instead, Musk focused on the attractive but yet-to-be-true sub-$30,000 price and the "optimistic" timeline, as he put it.


What we do know is that there are at least two other big companies out there that have been playing the autonomous taxi game for a lot longer than Tesla. General Motors’ Cruise was established in 2013 and offered driverless rides in several cities around the United States before being forced off the road because of a freak accident where a pedestrian was hit by a human-driven car and then dragged by a driverless Chevrolet Bolt EV operated by Cruise.

Meanwhile, Waymo’s history goes back even further. It debuted in 2009 as Google's self-driving car project, using a goofy, bubble-shaped EV. Waymo then became a fully-fledged separate corporate entity that used Chrysler Pacifica minivans as its go-to vehicle. Now, the discontinued Jaguar I-Pace is Waymo’s weapon of choice, but the company has already inked deals with Hyundai and Zeekr to bring other vehicles to its fleet in the future. In the meantime, Waymo already operates an autonomous ride-hailing service in some test markets.

Tesla and its leader need to put on their big-boy pants if they want to compete. Musk needs to prove he can deliver on all of his broken promises. That means he has to make run-of-the-mill Tesla EVs like the Model 3 and Model Y into self-driving taxis via a software update. The idea sounds cool—who doesn’t want to send their car off to make money on its own—but can it really be done?

Some, including rival companies Waymo and Cruise, believe that Tesla’s vision-only approach cannot become anything more than a Level 2 system. That’s how the so-called Autopilot and Full Self-Driving (Supervised) features are categorized at the moment.

Meanwhile, outspoken CEO Elon Musk has said the opposite–that Cruise and Waymo’s approach cannot be scaled efficiently and will forever be confined to a handful of cities. But who is right? Only time will tell, but one thing is clear: Tesla is yet to put truly self-driving vehicles on the road, while the others have already made money out of it.

Leaving all the fanboy rhetoric behind, one thing is certain. Both Waymo and Cruise have developed systems that are capable of ferrying strangers from point A to point B without anyone behind the steering wheel. They’re not perfect though, as proven by the dozens of reports showing robotaxis from both entities stuck, honking at each other, impeding traffic and even creating traffic jams of their own.

So let’s dive a little deeper into each of these systems, and maybe at the end we’ll at least know what to expect. Who knows, maybe your next taxi ride will be without a driver behind the wheel.

Mapping

One of the biggest differences between Tesla’s approach and that of Waymo and GM’s Cruise is that Tesla claims its system can be deployed anywhere in the world because it doesn’t need pre-mapped information. Instead, it relies completely on the camera suite to “see” the world around it and make decisions on the fly. It also uses the navigation system’s two-dimensional map to know in which direction to go.

That said, there are clues that Tesla is currently more reliant on mapping than the company would have you believe. According to a report from Bloomberg, which was later seconded by an opinion from famous Tesla hacker Green The Only, several test vehicles gathered extensive amounts of data on the roads where the Robotaxi event was held.

We don’t know what information was collected, but one can’t help but think it was precise, high-definition map data used so everything went according to plan. That also suggests that Tesla’s self-driving taxi isn’t yet capable of delivering on previous promises, but Tesla still has time to work on refining the Robotaxi before it goes into service.

By contrast, both Waymo and Cruise have a multi-step approach, the first of which is to deploy a handful of their sensor-equipped vehicles in the real world with a driver behind the wheel. During this procedure, which may take months, each car records high-definition imaging and mapping data that will later be used as the base for all the autonomous vehicles’ decisions.

Here’s how Cruise describes what’s going on during this first phase:

“The first step is identifying high-fidelity location data for road features and map information like speed limits, stop signs, traffic lights, lane paint, right turn-only lanes and more. Having current and accurate information will help an autonomous vehicle understand where it is and the location of certain road features.”

And here’s what Waymo says about it:

“Before our Waymo Driver begins operating in a new area, we first map the territory with incredible detail, from lane markers to stop signs to curbs and crosswalks. Then, instead of relying solely on external data such as GPS which can lose signal strength, the Waymo Driver uses these highly detailed custom maps, matched with real-time sensor data and artificial intelligence (AI) to determine its exact road location at all times.”

This is an extremely important step to make sure that the car knows exactly where it is, even if it loses its GPS signal during a foggy or cloud-heavy day. It’s also possibly the biggest roadblock to these two companies’ ability to expand worldwide. Well, at least from a technical point of view, because there’s also the issue of regulatory approval. I’ll get to that later.

Both Cruise and Waymo claim their systems can detect changes in the layout of a street in real time while their vehicles are out on customer rides. If a sidewalk is five inches narrower or a new street sign has been installed since the last time a car went through there, the changes are automatically recorded and sent out to the whole fleet.
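To make that idea concrete, here is a minimal Python sketch of how a car might compare what it sees against a stored map and build the list of changes to share with the fleet. It is an illustration only: the `MapFeature` type, the `diff_map` function, the feature IDs and the 0.1-meter tolerance are all hypothetical and are not taken from Cruise's or Waymo's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MapFeature:
    """A hypothetical mapped item, e.g. a stop sign or a curb edge."""
    feature_id: str
    kind: str
    x_m: float  # position east of a local origin, in meters
    y_m: float  # position north of a local origin, in meters


def diff_map(stored, observed, tolerance_m=0.1):
    """Return (added, moved, missing) feature lists for a fleet update."""
    stored_by_id = {f.feature_id: f for f in stored}
    observed_by_id = {f.feature_id: f for f in observed}

    added = [f for fid, f in observed_by_id.items() if fid not in stored_by_id]
    missing = [f for fid, f in stored_by_id.items() if fid not in observed_by_id]
    moved = []
    for fid, new in observed_by_id.items():
        old = stored_by_id.get(fid)
        if old is None:
            continue  # already counted as "added"
        shift = ((new.x_m - old.x_m) ** 2 + (new.y_m - old.y_m) ** 2) ** 0.5
        if shift > tolerance_m:
            moved.append(new)
    return added, moved, missing


stored = [
    MapFeature("curb-17", "curb", 10.0, 5.0),
    MapFeature("sign-3", "stop_sign", 42.0, 8.0),
]
observed = [
    MapFeature("curb-17", "curb", 10.0, 4.87),       # edge shifted about 5 inches
    MapFeature("sign-3", "stop_sign", 42.0, 8.0),    # unchanged
    MapFeature("sign-9", "street_sign", 55.0, 8.5),  # newly installed sign
]
added, moved, missing = diff_map(stored, observed)
```

In this toy example the shifted curb lands in `moved` and the new sign in `added`. A real system would also have to match features without stable IDs and filter out sensor noise, which this sketch ignores.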

As for Tesla, we know there are (or were) people at data centers reviewing video footage from people’s cars that have the so-called Full Self-Driving (Supervised) feature enabled. But that review process happens after the fact, not in real-time, so if the system misbehaves in one way or another, reviewers need to go through the footage and then change something in the driving behavior.

Tesla doesn’t say how its Robotaxi fleet would function if something needs to be changed on the fly. In theory (and according to Musk’s online hype machine), things should sort themselves out, thanks in no small part to the Cortex supercomputer that’s being built at the company’s headquarters in Austin, Texas.

It features hundreds of thousands of Nvidia graphics processing units (GPUs) that will be used to “train real-world AI,” according to Musk. The company is also spending massive amounts of money–over $10 billion–on things like video storage, data pipelines and training compute units in the hopes that it will finally offer real autonomous driving.

Furthermore, all Tesla EVs on the road today that are fitted with at least Hardware version 2 are actively collecting data and sending it back to Tesla–which is why the company needs those massive servers–to train its self-driving efforts.

“Everyone’s training the network all the time,” Musk said in 2019. “Whether Autopilot is on or off, the network is being trained. Every mile that’s driven for the car that’s hardware 2 or above is training the network,” he added. But there’s no guarantee that training data and computing power alone can solve self-driving everywhere. For now, we’re once again taking Musk’s word for it.

Sensors

The second biggest difference between Tesla and its competitors has to do with sensors. Tesla has famously removed ultrasonic sensors and radar units from its passenger vehicles and is working exclusively with video cameras for everything from parking visualizations to advanced driving assistance systems like Autopilot and FSD. It's the same with the recently revealed Cybercab, which uses video cameras and what Tesla calls AI 5, the latest hardware version that comes after HW 4.

“Lidar is a fool’s errand,” Elon Musk said. “Anyone relying on lidar is doomed. Doomed! [They are] expensive sensors that are unnecessary. It’s like having a whole bunch of expensive appendices. Like, one appendix is bad, well now you have a whole bunch of them, it’s ridiculous, you’ll see.”

Musk believes the world is designed to be perceived with vision, the way humans perceive it, and that with help from massive neural networks (i.e. artificial brains), Tesla’s system will be capable of driving like a human.

By contrast, both Waymo and Cruise use several sensors on their cars to “see” the world that surrounds them. That’s in addition to the high-definition maps created in the first phase of deployment in a new city.

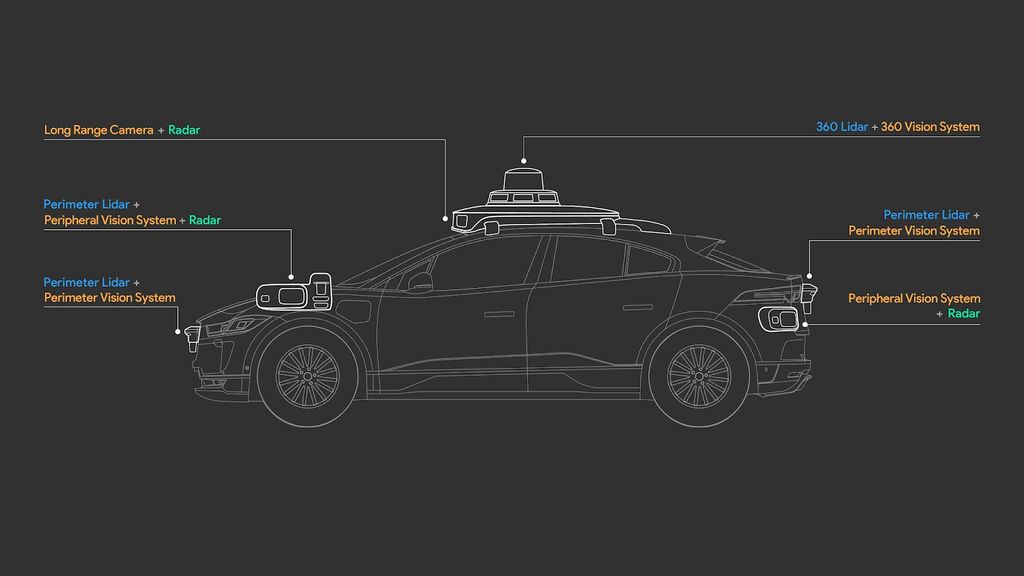

Waymo’s fifth-generation sensor suite, which is currently deployed on its fleet of Jaguar I-Pace EVs, has no fewer than 13 sensors, including a 360-degree lidar, three perimeter lidars, three radars and a bunch of video cameras. Cruise’s Chevy Bolt EVs use something similar.

All these gizmos create a 360-degree view of the area surrounding the vehicle and make it so that the car can drive even when it’s foggy, sunny or pouring rain outside. The cameras are also automatically cleaned if they get dirty.

The lidar suite creates a high-resolution, 360-degree field of view with a range of approximately 1,000 feet. Meanwhile, the long-range cameras can “see” stop signs as far as 1,600 feet. The radars complement the lidars and cameras with their ability to detect objects and their speed irrespective of weather conditions.
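Those ranges are easier to judge as time than as distance. The short Python sketch below converts the two figures quoted above into seconds of lookahead at a constant speed. The speeds are illustrative assumptions, and the `lookahead_seconds` helper is made up for this example.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def lookahead_seconds(range_ft, speed_mph):
    """Seconds until the car reaches a point range_ft ahead at constant speed."""
    speed_ft_per_s = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return range_ft / speed_ft_per_s


# Ranges are the figures quoted in the article; speeds are assumed examples.
for label, range_ft in [("lidar", 1000), ("long-range camera", 1600)]:
    for speed_mph in (25, 45, 65):
        t = lookahead_seconds(range_ft, speed_mph)
        print(f"{label}: {range_ft} ft at {speed_mph} mph = {t:.1f} s")
```

At 45 mph, for example, 1,000 feet works out to roughly 15 seconds of travel and 1,600 feet to roughly 24 seconds.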

Tesla just has its cameras to rely on, which, granted, is a much simpler setup and there’s not much to go wrong. But what if something does go wrong? What if a camera gets covered with rainwater and the Robotaxi is on its way to pick up a customer? I don’t know how much AI and all those massive server rooms will help in this scenario, as Musk didn't say anything about a camera cleaning setup.

And there’s another thing to consider. Waymo, for instance, is testing every single vehicle before it lets it loose on its own. It’s a safety measure to make sure the sensor suite works as it should.

Tesla has shown a less careful approach to new product releases and quality control. What happens if a camera is misaligned from the factory? The millions of miles of training data should tell it what to do, but if the reading is incorrect, the response will also be incorrect. This is why many experts say the lack of redundancy in Tesla’s system could be a problem. Sure, you can intuit the world using cameras, but if you can also get more information using lidar and radar, why wouldn’t you?
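A toy example shows why a second and third opinion matter. The Python sketch below takes the median of several independent distance estimates, which tolerates one bad reading out of three. It is not how Tesla, Waymo or Cruise actually fuse sensor data; the `fused_distance_m` function and the numbers are hypothetical.

```python
from statistics import median


def fused_distance_m(readings_m):
    """Median of independent distance estimates, in meters.

    With three inputs, one wildly wrong reading cannot move the result
    outside the range spanned by the other two.
    """
    if not readings_m:
        raise ValueError("at least one reading is required")
    return median(readings_m)


# Assumed scenario: a misaligned camera reports 48 m to the car ahead,
# while lidar and radar agree on roughly 30 m.
camera_only = fused_distance_m([48.0])
with_redundancy = fused_distance_m([48.0, 30.2, 29.8])
```

With the camera alone, the wrong 48-meter figure passes straight through. With all three readings, the result is 30.2 meters.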

Cost

Musk’s “appendices” remark aside, there is a case to be made for skipping lidar altogether and going with a simpler setup that relies more on software than hardware to do the job. This has the potential to significantly reduce costs and bring the technology to more people, as lidar units tend to be more expensive than cameras or radars.

Artificial intelligence

Leaving aside the fact that everything is “AI” nowadays, even though most of what we’re seeing is actually something called machine learning, Tesla has long claimed that it will be able to achieve true self-driving thanks to AI.

After years of collecting data from all of its vehicles, Tesla now has sufficient data fed into its artificial brains to offer Autopilot and FSD, but those systems are notorious for their unexpected disengagements. In a truly driverless vehicle, that’s not acceptable, and it will be interesting to see how Tesla manages this.

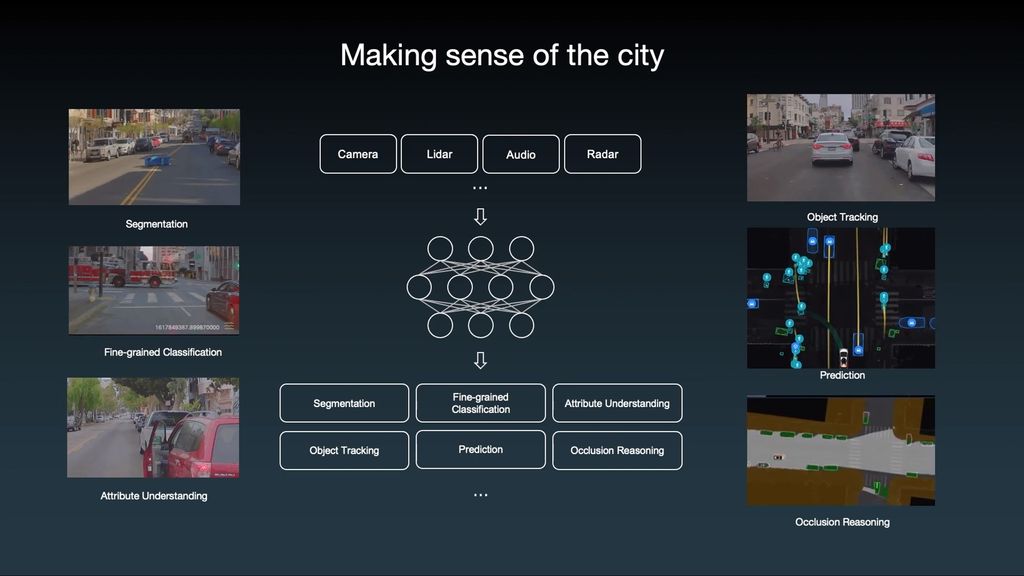

But with all this talk of AI, it’s easy to forget that Waymo and Cruise use AI, too, for their operations. Their cars constantly make decisions on the fly after analyzing every bit of data coming from those sensors. With over 15 years of experience, Waymo seems to be getting good at it. The company has so far avoided the sort of high-profile “self-driving” incidents that have hurt the image of Tesla, Uber and Cruise.

Redundancies

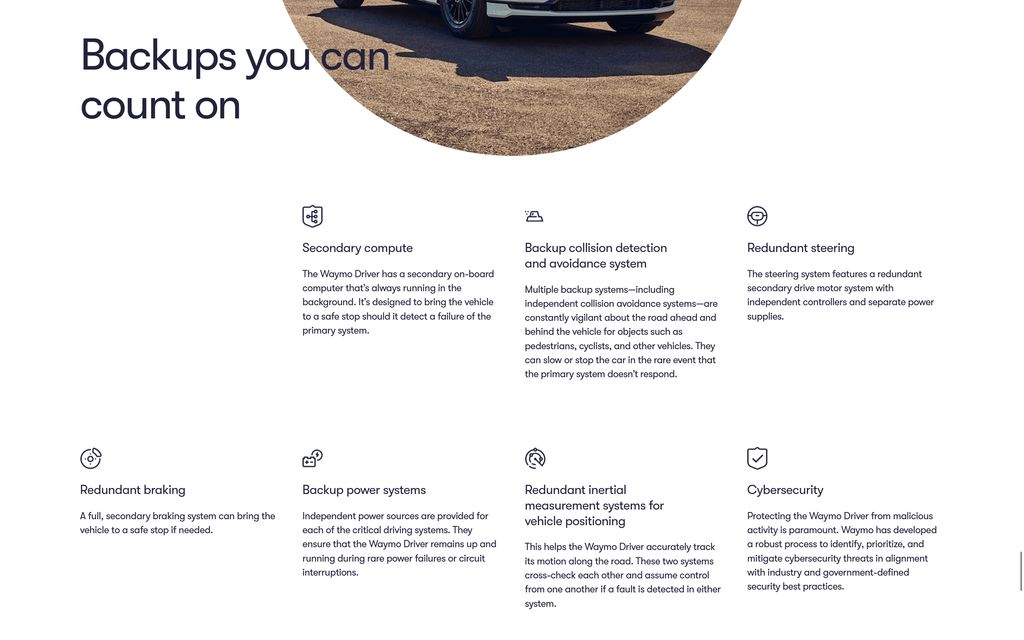

Redundancy is really important when dealing with cars that drive themselves on real roads. There’s no one behind the steering wheel to take emergency action, so the car needs to have systems in place to be able to stop safely in case something breaks.

Waymo has a secondary onboard computer that’s always running in the background and is designed to bring the vehicle to a safe stop if it detects a failure with the primary system. Then, there’s a backup collision detection and avoidance system, a secondary steering drive motor with independent controllers and a separate power supply, a secondary braking system, backup power systems and–last but not least–redundant inertial measurement systems for vehicle positioning.
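The backup-computer idea resembles a classic watchdog pattern, which can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Waymo's implementation: the `FallbackMonitor` class, the 0.2-second timeout and the command names are all hypothetical.

```python
class FallbackMonitor:
    """Hypothetical secondary computer watching the primary's heartbeat."""

    def __init__(self, timeout_s=0.2):
        self.timeout_s = timeout_s
        self.last_heartbeat_s = None

    def heartbeat(self, now_s):
        """Called whenever the primary computer reports that it is healthy."""
        self.last_heartbeat_s = now_s

    def command(self, now_s):
        """Return 'follow_primary' or 'safe_stop' for the current time."""
        if self.last_heartbeat_s is None:
            return "safe_stop"  # never heard from the primary at all
        if now_s - self.last_heartbeat_s > self.timeout_s:
            return "safe_stop"  # primary has gone quiet for too long
        return "follow_primary"


monitor = FallbackMonitor(timeout_s=0.2)
monitor.heartbeat(now_s=10.00)
normal = monitor.command(now_s=10.10)  # heartbeat is about 0.10 s old
failed = monitor.command(now_s=10.50)  # heartbeat is about 0.50 s old
```

Here `normal` is `"follow_primary"` and `failed` is `"safe_stop"`. The key design choice is that the fallback defaults to stopping whenever it cannot confirm the primary system is healthy.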

Does Tesla’s Robotaxi have any sort of hardware backup solution? I don’t know, Tesla didn’t say. But in order to offer a compelling self-driving car, it should have at least some sort of redundancies in place. The fact that Tesla didn’t mention anything about this is concerning, to say the least.

Supervision

Both Waymo and Cruise autonomous taxis are constantly monitored by humans from afar, making sure they don’t do stupid things on the road. Sometimes, even this sensor-heavy approach backed by human supervisors isn’t enough to avoid incidents like the one that sidelined Cruise’s operations.

So, how will Tesla’s Robotaxi be supervised? Again, there’s no official answer. Musk said that the Cybercab would be available to buy as a regular car and that customers could manage their personal fleet from the comfort of their homes. But could they intervene if a Cybercab hops onto a curb? If one person has 10 vehicles, how could they monitor everything at once?

Granted, if the technology is advanced enough to go for millions of miles without any disengagement, human supervision will be unnecessary, but as it stands today, that hasn’t happened yet.

Legal responsibility

Tesla is currently not responsible for any accident that occurs when Autopilot or FSD is enabled. Multiple disclaimers on the company’s website and in its vehicles say that the driver is always responsible for what the car is doing and that the car is not autonomous. Tesla hasn't said a word about who will be responsible if a Cybercab crashes. According to the SAE, people in vehicles that are considered Level 3-capable or above are not in fact driving while the automated driving features are engaged, even if they're in the driver's seat.

Seeing how the Cybercab doesn't have a steering wheel or pedals, and that Musk hinted it would be capable of driving anywhere in the world as long as regulators greenlight it, it would be considered a Level 5 vehicle, so the people inside it would not be responsible for its actions.

Similarly, Waymo and Cruise are responsible for their driverless vehicles. There’s no other way around it, seeing how there is no driver behind the wheel. It will be interesting to see what that entails for Tesla, because Musk laughed at one point when asked if Tesla would take responsibility for the actions of its passenger vehicles.

Are we ready for autonomous cars?

However ambitious and technologically advanced Tesla, Waymo and Cruise are, they’re still currently at the mercy of regulators. And, as it stands today, there are just a handful of cities and regions in the world that allow localized testing of fully autonomous vehicles.

But technology is advancing at lightning speed and more cities, regions and countries will be willing to open up their roads to Level 3 and above cars, either to attract some much-needed investment or just for kicks.

Let’s not forget, though, that even with the multiple layers of security, redundancies and remote human supervision, accidents have happened and people have been injured in this quest to create a safer vehicle. Let’s also not forget that human drivers kill other human drivers, so going down the computer-aided route might solve a problem some might not even know existed in the first place.

So, are we really ready for cars that don’t have steering wheels? I’m not sure that we are, at least not now. I know there’s great potential and that things will get better, but for now, I’d like to be at the helm of the 4,000-pound hunk of metal I’m sitting in. If you disagree, I’m sure you’ll let me know in the comments.

Correction 10/11 at 1:03 P.M. E.T.: An earlier version of this article listed an incorrect price for Lidar units. The story has been updated to be accurate. We regret the error.