Updated at 2:00 p.m. ET on January 8, 2019.

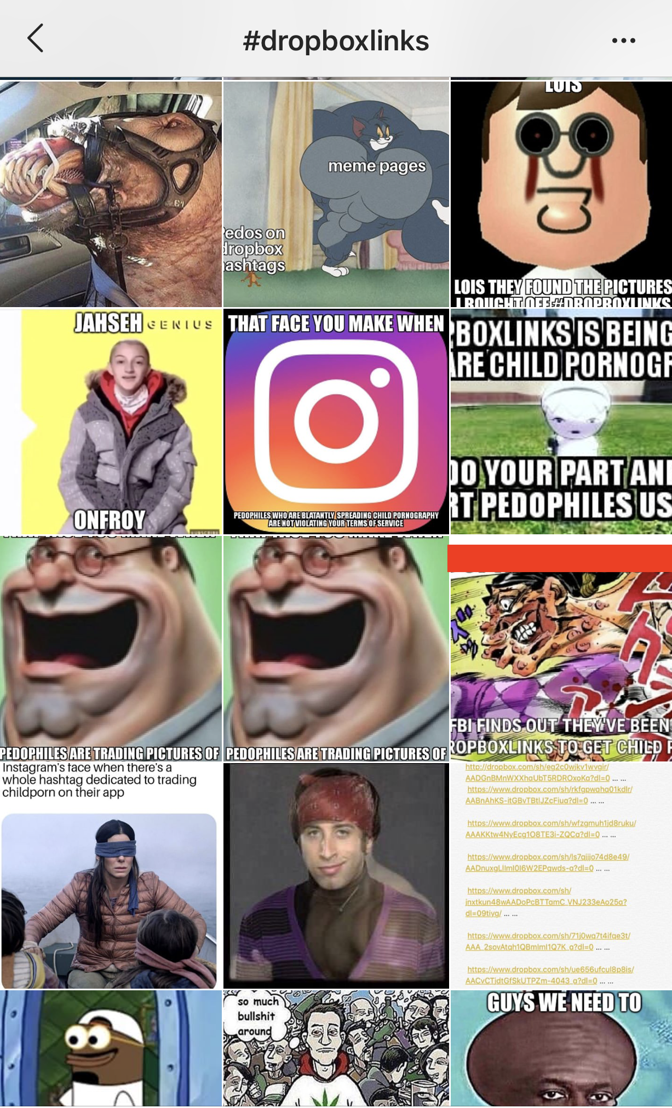

A hashtag war has been brewing on Instagram between users who appear to be trading child pornography and the memers intent on stopping them.

A network of users on the platform has allegedly been using the hashtag #dropboxlinks to find and share explicit photos of underage children. Once these users connect, they are thought to trade the illicit material via links shared through Instagram direct messages.

After uncovering this network over the weekend, hundreds of meme accounts launched a callout campaign on Monday in an effort to root out alleged child pornography on the platform. They shared PSAs about the phenomenon and flooded the hashtag with memes in order to make it harder for those looking to trade links to find one another.

The viral campaign started when the Instagram meme account @ZZtails uploaded a video to YouTube on Monday morning. “This is going to be an actual serious video,” says Jack, the 16-year-old founder and admin of @ZZtails, who, like all minors in this piece, is referred to by his first name only because of privacy concerns. Jack claims that he saw a call for Instagram users to report an account that “posted very sexually explicit photos of boys” over the weekend. As he investigated the account, he discovered that it was following the hashtag #dropboxlinks.

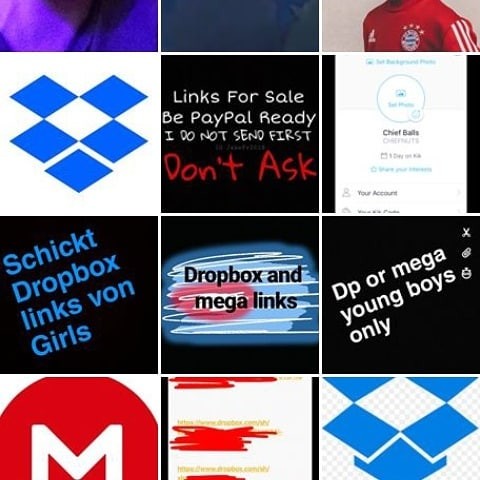

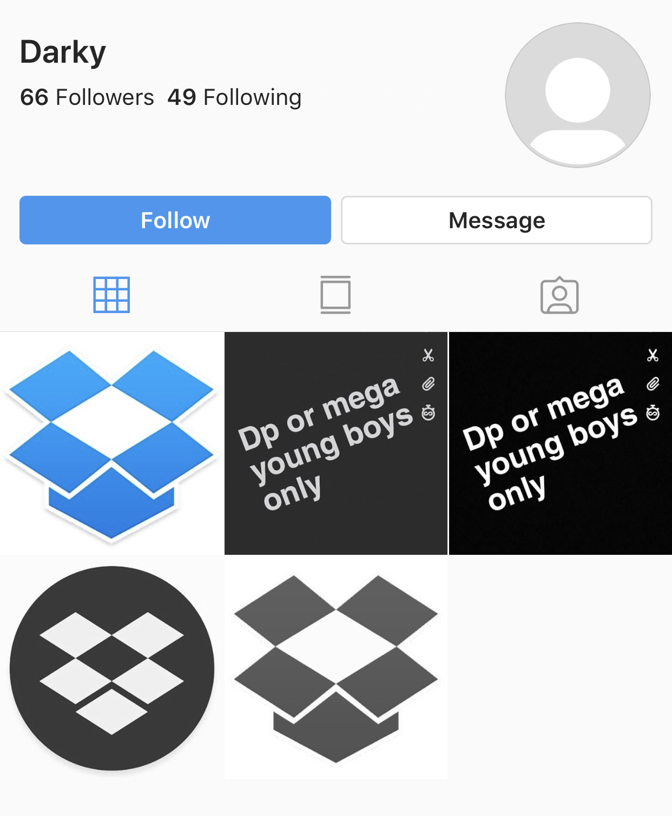

The alleged child-porn-trading users set up anonymous accounts with throwaway usernames or handles such as @dropbox_nudes_4_real (which has since been removed). The accounts that @ZZtails and other memers surfaced, which can also be found on the hashtag, contain blank posts with captions asking users to DM them for Dropbox links, which allegedly contain child porn or nudes.

“I’ll trade, have all nude girl videos,” one user commented in September 2018. “DM young girl dropbox,” said another. “DM me slaves,” said someone else. Many others commented “HMU to trade dropbox links” on various throwaway accounts. “Young boys only,” another user posted several times. On Saturday, an account with the username @trade_dropbox_linkz posted “DM if u want young girl links.”

According to screenshots shared with The Atlantic, several memers who reported the accounts received messages from Instagram claiming that the platform’s terms had not been violated. While they waited for Instagram to take action, meme-account holders banded together to spam related hashtags with memes. One memer who posted using the hashtag received a message from another user with links to illicit material, asking if he wanted to “trade boys.”

Late Monday night, after an inquiry from The Atlantic, Instagram restricted the hashtags #dropboxlinks and #tradedropbox. “Keeping children and young people safe on Instagram is hugely important to us,” an Instagram spokesperson said. “We do not allow content that endangers children, and we have blocked the hashtags in question.” The platform also said it is “developing technology which proactively finds child nudity and child exploitative content when it’s uploaded so we can act quickly.”

When reached for comment on Tuesday, a Dropbox spokesperson said, “Child exploitation is a horrific crime and we condemn in the strongest possible terms anyone who abuses our platform to share it. We work with Instagram and other sites to ensure this type of content is taken down as soon as possible.”

“It’s just disgusting,” says Jacob, a 16-year-old who runs the meme account @Cucksilver. After searching the hashtag #dropboxlinks himself, Jacob said he was shocked at what it revealed. He posted several memes about it to his page, and reported the offending accounts.

Jackson Weimer, a college student and the founder of several meme pages, says he wasn’t surprised that meme accounts discovered the alleged problem before moderators from the platform itself. Memers usually follow a broad range of accounts and are intimately familiar with the platform’s dark corners. “Meme accounts do a good job of … bringing awareness to stuff that’s happening in the community. They do a good job of raising issues,” he says.

Part of the reason this particular issue spread so fast and so far is that many meme-account admins are teenagers themselves. “They could be the people at risk for this type of stuff,” Weimer said. “The fact that they’re so young makes them connect with those kids who are in danger and being taken advantage of via those hashtags.”

Many high schoolers without meme accounts spun up old pages or dedicated their main page to spamming the hashtag. “Kids my age shouldn’t be able to see [child-porn-related content], even if it’s just people offering or trying to sell it,” Jack says. “No one of any age should have access to that stuff.”

Facebook, which owns Instagram, has highlighted its quest to expand its content-moderation efforts over the past year, but some moderators say its efforts fall short. A team of 15,000 content moderators is tasked with moderating content from Facebook and Instagram’s collective 3 billion monthly users. And while Instagram is notorious for its strict ban on female nipples, a post from a user showing breasts with the nipples covered above the caption “Dm to trade young girl pics or links” was allowed to stay up until Monday night.

“I think that Instagram has to take some sort of fault here,” says Daniel, the 15-year-old admin of the meme account @bulk.bogan. “They need a better way to report tags or accounts like this.”

Meanwhile, child pornography has proved to be a pervasive problem online. In 2017, Tumblr users engaged in a campaign known as the “Woody Collective” to reclaim formerly problematic blogs. In November, Tumblr was temporarily kicked off Apple’s App Store after its filtering technology failed to catch the illegal material. By December, Tumblr had banned all adult content on the platform in an effort to tamp down on illegal photos. TechCrunch reported last month that Facebook-owned WhatsApp was being used to spread child pornography. In December, Google was also forced to remove some third-party apps that led users to child-pornography-sharing groups.

Despite Instagram’s attempt at addressing the problem, many memers were still posting about #dropboxlinks on Tuesday morning. “I’m sure that all these people, if nothing more is done, they’ll just flock to the next hashtag and keep doing what they’re doing,” Jack says.

Weimer agreed. “Meme accounts joke about a lot of random stuff, and a lot of times it’s not all clean humor,” he says. “But when it comes down to it, this isn’t a joke. People need to take this stuff seriously.”