As GPUs for AI and HPC applications get more power-hungry, they require better cooling. For example, a rack with 72 Nvidia B200 GPUs is projected to consume around 120 kW, roughly 10 times the power draw of a typical rack. As a result, Blackwell-based servers will require liquid cooling. On Monday, Supermicro introduced its datacenter-scale liquid cooling solution to prepare for these next-generation servers.
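To put those figures in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted above (120 kW, 72 GPUs, a 10x ratio). The function and variable names are illustrative, and the per-GPU figure is simply rack power divided by GPU count, so it also covers CPUs, networking, and everything else in the rack rather than the GPU alone.

```python
# Figures quoted in the article.
RACK_POWER_KW = 120.0     # projected draw of a rack with 72 B200 GPUs
GPUS_PER_RACK = 72
RATIO_TO_TYPICAL = 10.0   # "10 times" a typical rack


def implied_typical_rack_kw(rack_kw: float, ratio: float) -> float:
    """Typical rack power implied by the quoted ratio."""
    return rack_kw / ratio


def rack_power_per_gpu_kw(rack_kw: float, gpus: int) -> float:
    """Rack power divided evenly across GPUs (includes non-GPU components)."""
    return rack_kw / gpus


typical_kw = implied_typical_rack_kw(RACK_POWER_KW, RATIO_TO_TYPICAL)
per_gpu_kw = rack_power_per_gpu_kw(RACK_POWER_KW, GPUS_PER_RACK)

print(f"Implied typical rack: {typical_kw:.0f} kW")
print(f"Rack power per GPU:   {per_gpu_kw:.2f} kW")
```

Under these assumptions, the implied typical rack draws about 12 kW, and each GPU's share of the 120 kW rack comes to roughly 1.67 kW.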

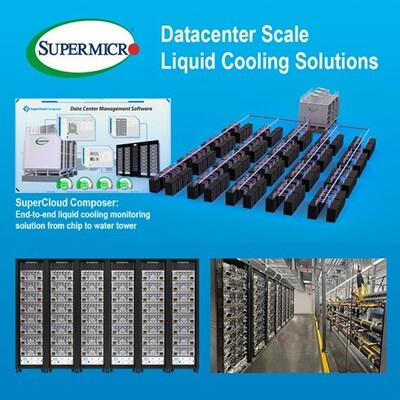

Supermicro's new complete system includes advanced cooling components, software, and management tools to optimize performance and sustainability. In particular, the solution incorporates Coolant Distribution Units (CDUs), cold plates, Coolant Distribution Manifolds (CDMs), cooling towers, and SuperCloud Composer software for end-to-end management. This setup is intended to lower initial investment costs and the total cost of ownership (TCO) by making datacenters more energy-efficient.

Supermicro's cooling technology is aimed at ultra-dense AI servers, each capable of housing two top-tier CPUs and up to eight Nvidia HGX GPUs in a 4U chassis, and it supports up to 96 B200 GPUs per rack. This enables four times the computing density of traditional setups.

One of the key benefits of Supermicro's liquid cooling solution is its rapid deployment capability. Supermicro's modular design cuts the time required to get datacenters up and running from months to weeks, which is crucial for companies looking to upgrade or build new facilities quickly.

The solution’s liquid cooling capabilities are engineered to handle extreme heat levels, efficiently cooling servers that demand up to 12 kW of power and AI racks generating over 100 kW of heat, exactly what Blackwell-based NVL72 servers need.
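For a rough sense of what removing that much heat involves, the sketch below applies the standard heat-transfer relation Q = ṁ · c_p · ΔT to estimate the coolant flow needed. Everything beyond the 12 kW and 100 kW figures is an assumption made for illustration, not a Supermicro specification: the coolant is treated as plain water, all heat is assumed to go into the liquid loop, and the 10 K temperature rise across the loop is a hypothetical value.

```python
# Approximate properties of liquid water (assumption: plain water coolant).
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 1.0


def coolant_flow_l_per_min(heat_kw: float, delta_t_k: float) -> float:
    """Estimate the water flow needed to carry away heat_kw of heat.

    Rearranges Q = m_dot * c_p * delta_T for the mass flow m_dot,
    then converts kg/s to litres per minute.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = (heat_kw * 1000.0) / (
        WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k
    )
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical 10 K coolant temperature rise (assumed, not from the article).
ASSUMED_DELTA_T_K = 10.0

for label, heat_kw in [("12 kW server", 12.0), ("100 kW rack", 100.0)]:
    flow = coolant_flow_l_per_min(heat_kw, ASSUMED_DELTA_T_K)
    print(f"{label}: about {flow:.1f} L/min")
```

With these assumed values, a 100 kW rack works out to a bit over 140 litres of water per minute. A smaller allowed temperature rise would require proportionally more flow.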

Supermicro's technology also supports warm water cooling, with water temperatures of up to 113°F (45°C). This feature not only boosts cooling efficiency but also makes it possible to repurpose the heat for applications like district heating or greenhouse warming.

The SuperCloud Composer software included in the package offers real-time monitoring and control over all components. It helps datacenter operators manage costs and maintain system reliability.

Supermicro said that it had recently deployed over 100,000 GPUs with its direct liquid cooling (DLC) solution for some of the largest AI facilities ever built, as well as for other cloud service providers (CSPs).

"Supermicro continues to innovate, delivering full datacenter plug-and-play rack scale liquid cooling solutions," said Charles Liang, CEO and president of Supermicro. "Our complete liquid cooling solutions, including SuperCloud Composer for the entire life-cycle management of all components, are now cooling massive, state-of-the-art AI factories, reducing costs and improving performance. The combination of Supermicro deployment experience and delivering innovative technology is resulting in data center operators coming to Supermicro to meet their technical and financial goals for both the construction of greenfield sites and the modernization of existing data centers. Since Supermicro supplies all the components, the time to deployment and online are measured in weeks, not months."