Networked devices are all around us. Some of them enable autonomy of things or AoT™. Increasingly, these devices are also providing a path for creating smarter and safer cities - where people, vehicles (horse carriages, cars, bicycles, scooters, pets, robots) and infrastructure interact in highly dynamic situations to achieve multiple objectives - smooth traffic flow, infrastructure management, pedestrian safety, security, emergency management and crime prevention. Cameras and video analytics are the typical tools of choice. Road sensors of various types are also used to sense traffic flow and enable dynamic traffic signal management. Increasingly, LiDAR is being tested as a beneficial addition - primarily to improve the fidelity of the information, but also to add a new sensing modality that provides 3D information and superior situational awareness. The goal is to fuse dynamic information (and possibly static background information like city maps) to make decisions, with or without humans in the loop. A recent market study projects the TAM (Total Available Market) for LiDAR in Smart Infrastructure (which includes crowd analytics, perimeter security and road traffic management) to increase from $1.5B in 2025 to $14B in 2030. A note of caution though - Smart Cities implementations are still in the evaluation phase, and LiDAR is likely to capture just a portion of this TAM if and when these initiatives translate into actual deployments.
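To put the projected TAM growth in perspective, the two figures quoted above imply a compound annual growth rate of roughly 56%. The short sketch below works through that arithmetic. The function name `cagr` is purely illustrative, and the calculation assumes smooth year-over-year compounding between 2025 and 2030, which the market study itself may not assume.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between a start and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# TAM figures quoted in the article, in billions of USD.
tam_growth = cagr(1.5, 14.0, 2030 - 2025)
print(f"Implied CAGR: {tam_growth:.1%}")
```

Sustaining growth at that pace for five years is a demanding assumption, which is consistent with the note of caution above.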

Funding for Smart City implementations will need to come from government, public and municipal agencies, or commercial entities entrusted with installing and maintaining these systems. Public organizations are fiscally conservative. Adding an expensive new functionality like LiDAR needs to be justified clearly, especially since there are no obvious monetary benefits that can be easily quantified or exploited as investments. Public agencies also generally have a poor track record of testing and deploying new technologies, whether due to a lack of internal talent, a lack of clear customer-focused metrics, or bureaucratic processes infused with political and public relations constraints. For AoT™ applications in the commercial arena, the case for LiDAR is clear (although it took a while to get there!). For applications like smart cities and security, the case for LiDAR must be made more clearly, given that the sensors are expensive and currently too immature to operate in a plug-and-play environment.

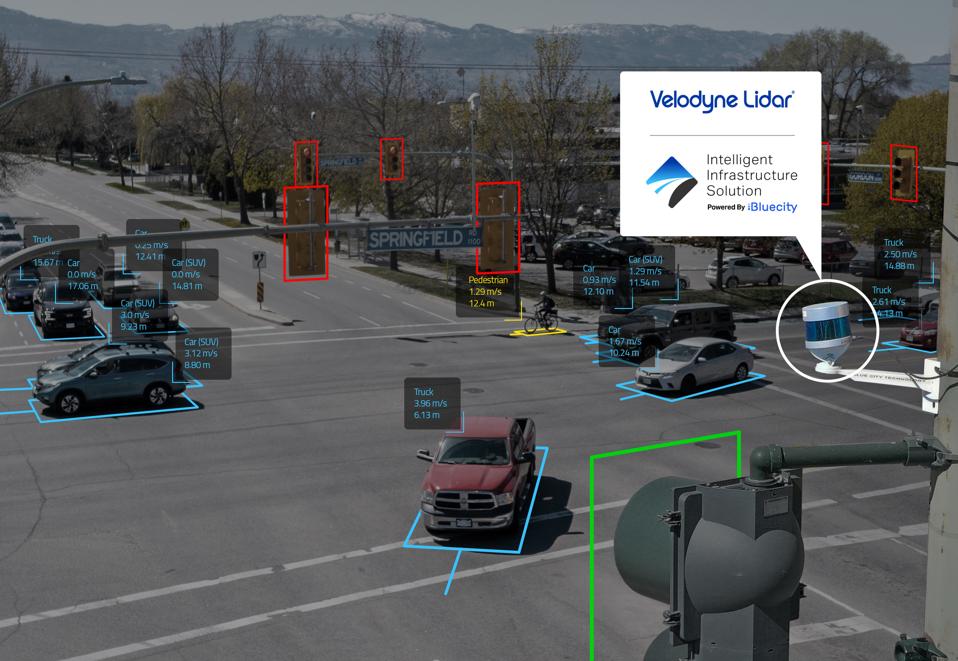

Jon Barad is the Vice President of Business Development at Velodyne, which essentially pioneered LiDAR as a must-have technology for vehicle autonomy and 3D mapping in the mid-2000s. Velodyne was the first of many LiDAR companies to go public via a SPAC merger in 2020. It has been going through significant management upheaval recently, with the exit of founder and chairman David Hall in early 2021 and the recent departures of its CEO and board chairman. Mr. Barad indicates that in spite of this, the team is energized, customers are excited and the company's penetration into non-AoT™ applications is accelerating. Velodyne’s IIS (Intelligent Infrastructure Solution) is geared towards traffic and parking management, speed enforcement and crowd analytics, with applications in the U.S. (Nevada, Florida, New Jersey, Texas), Canada, Finland and China.

Mr. Barad highlights some of the reasons he believes LiDAR is an integral component for Smart City applications:

1) Privacy: unlike camera images, LiDAR point clouds can be used to recognize objects (car vs. pedestrian) but do not have the resolution to identify individual people (“this is Elon Musk”). As concerns about “Big Brother” surveillance grow, data privacy becomes an important consideration in advanced democracies, and LiDAR can gain public acceptance more easily than classical high-resolution cameras.

2) Coverage: A surround-view LiDAR can cover a large range and Field of View (FoV). Cameras have limited range and FoV, especially in bad lighting conditions and weather. Covering the same area therefore requires multiple cameras, which incur significant “stitching” software, hardware and installation costs.

3) 3D Information and Situational Awareness: data from cameras in fixed locations can be used to extract 3D information (with reference to street maps and known landmarks). However, this is time consuming and may not be accurate because of obsolete maps and changing infrastructure. LiDAR, by contrast, measures 3D positions directly.

Blue City is a Montreal based software company established in 2018, with a focus on intelligent road traffic management solutions. Velodyne and Blue City have an exclusive relationship for delivering these solutions in North America. According to CEO Asad Lesani, traditional traffic intersection management solutions focus on cars. A multi-modal perspective that takes into account other road users like pedestrians, cyclists, scooters, etc. is vital going forward. Other aspects like vehicle parking and pollution control through effective traffic management are also crucial. Blue City uses AI and machine learning on point cloud data from different Velodyne LiDARs to extract object lists and traffic flow patterns. This information is made available to city planners to identify conflicts and safety issues, and develop strategies to resolve these. Surround view LiDAR seems to be ideal because of its large FoV and range, and more importantly, consistent performance across lighting conditions and weather variations. One of the cities that is currently piloting this system is Kelowna, British Columbia (about 200 miles from Vancouver, Canada), with a population of ~150K. Typically, the population doubles during peak tourist seasons.

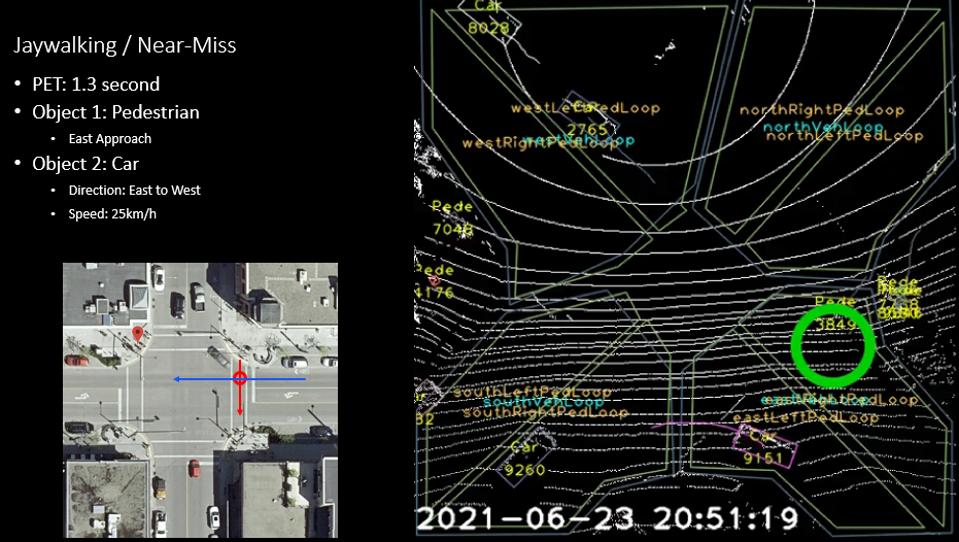

The Smart City initiative started in Canada about 4 years ago, with the federal government encouraging local groups to respond to a “challenge competition” in which quality of life improvements could be leveraged through judicious use of technology. Kelowna participated in this challenge in 2017/2018 along with over 200 other communities across Canada. From this experience it created an Intelligent Cities program in concert with Rogers (the predominant cellular/cable provider in Canada) and Blue City on a transportation focused use case. Resident workshops were conducted in 2018 to identify customer needs (residents), and provided city officials a roadmap/vision for future development, with traffic infrastructure and public transit as a priority. This helped frame the initiative with Rogers and Blue City. Andreas Boehm is the Manager, Intelligent City at Kelowna: “This initiative is not about leveraging technology for technologies’ sake. It is not a top down deployment by large tech companies without considering the concerns of the community. In addressing their concerns, we find that LiDAR has provided us with consistent data that we previously did not have - especially in low light and bad weather conditions. This is proving to be a great tool as we proceed forward.” Figure 3 shows an example of traffic conflict data that the city was able to obtain through the pilot initiative, relating to pedestrian jaywalking and near misses with speeding traffic.
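To make the idea of a "traffic conflict" concrete, here is a minimal sketch of how a near miss could be flagged from a LiDAR-derived object list. It is not Blue City's method, which is not described here. The `TrackedObject` fields, the constant-velocity assumption and the 2-second / 1.5-metre thresholds are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedObject:
    kind: str   # e.g. "pedestrian" or "car" (hypothetical labels)
    x: float    # position in metres
    y: float
    vx: float   # velocity in metres per second
    vy: float


def closest_approach(a: TrackedObject, b: TrackedObject) -> tuple[float, float]:
    """Return (time_s, distance_m) of closest approach, assuming constant velocities."""
    rx, ry = b.x - a.x, b.y - a.y          # relative position
    vx, vy = b.vx - a.vx, b.vy - a.vy      # relative velocity
    speed_sq = vx * vx + vy * vy
    # With zero relative velocity the gap never changes; otherwise only look forward in time.
    t = 0.0 if speed_sq == 0 else max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def is_near_miss(a: TrackedObject, b: TrackedObject,
                 max_time_s: float = 2.0, max_gap_m: float = 1.5) -> bool:
    """Flag a conflict if the two objects come within max_gap_m within max_time_s."""
    t, d = closest_approach(a, b)
    return t <= max_time_s and d <= max_gap_m


# A pedestrian stepping into the road while a car approaches at about 50 km/h.
pedestrian = TrackedObject("pedestrian", x=0.0, y=0.0, vx=0.0, vy=1.5)
car = TrackedObject("car", x=-20.0, y=2.0, vx=14.0, vy=0.0)
t, d = closest_approach(pedestrian, car)
print(f"closest approach in {t:.2f} s at {d:.2f} m, near miss: {is_near_miss(pedestrian, car)}")
```

Counting events like this per intersection and per time of day is one plausible way to arrive at the kind of conflict summary city planners would review.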

The pilot program is enabling the city to analyze some of the issues that exist today and possible solutions. It also helps development of the business case to justify broader deployment - in terms of reduced overall infrastructure costs, increased tourism, and engagement by insurance companies interested in safety and accident prevention. Mr. Boehm envisions “broader deployment in the next 2-3 years and expanding the use of the Intelligent City initiative to other applications like emergency response and flood mapping”.

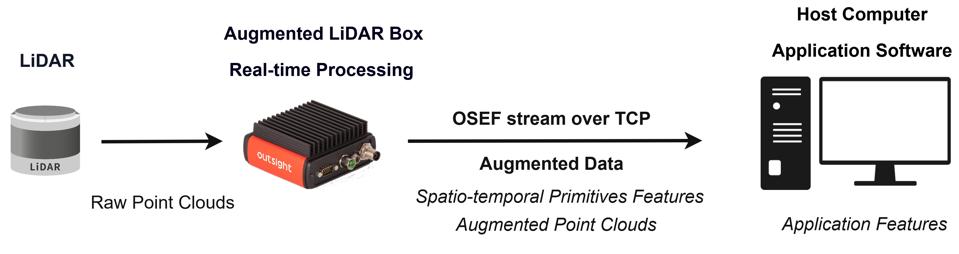

Outsight is a company based in Paris, France, that works with a majority of LiDAR providers to make LiDAR sensing a seamless experience for end customers.

According to Raul Bravo, President of Outsight, customers outside of automotive applications do not have the expertise, resources or willingness to invest in the technology required to interact directly with LiDAR hardware and data. They need a seamless plug-and-play solution that allows them to access the salient information coming out of the LiDAR. The Augmented LiDAR box (Figure 4) is an edge device with embedded real-time software that interfaces to most commercial LiDARs (Velodyne, Ouster, Robosense, Hesai, Livox among others) and provides a universal interface for power, control and data to the end user’s application. It also provides various processed data outputs like object lists and types, as well as SLAM (Simultaneous Localization and Mapping), which enables real-time situational tracking of the different objects.

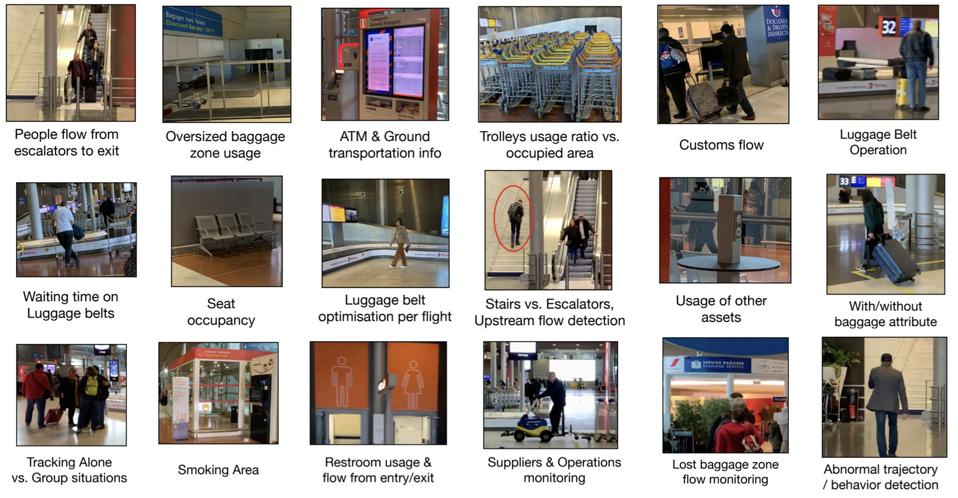

An example of an application in production today is the monitoring of people and luggage flow in airports (see Figure 5).

The processed LiDAR data can be used by human operators to improve various operational parameters in an airport (see Figure 5). It can be displayed in the form of a dashboard to enable real time decisions (time to clean the bathroom!) or stored for later analysis to optimize infrastructure improvements and make automated decisions (Figure 6 displays potential application areas).

Mr. Bravo indicates that 3.5B data points (anonymous, and therefore, private) have been collected to date and are available for analysis. Traffic monitoring at busy road intersections presents challenges similar to those of the airport example, and Outsight is also active in such applications. LiDAR manufacturers co-sell their hardware with Outsight’s Augmented LiDAR box and embedded software. They view the collaboration as an opportunity to expand the sale of their products into non-automotive markets (while automotive markets develop). The solution is also appealing to end customers because it allows them to select and/or combine different LiDAR suppliers based on price, performance and other supplier considerations.

Oyla.ai is a LiDAR-based fusion company that has a strategic partnership with In-Q-Tel, a nonprofit (imagine!) strategic investor that U.S. government intelligence and national security agencies have relied upon for more than two decades to anticipate their technology needs and deliver solutions. In-Q-Tel sources, invests in, and accelerates cutting-edge technologies from the commercial startup community to strengthen the security of the U.S. and its allies. Typical investments are in the $500K-$3M range, and are structured as work programs with specific delivery milestones for their customers. In-Q-Tel currently has ~500 companies in its portfolio, and Oyla is one of their investments.

The company is focused on delivering a LiDAR-video fusion solution for surveillance and perimeter security applications (Disclosure: I am an advisor to Oyla). Video from RGB cameras is, by far, the sensor of choice in these applications today, complemented by AI and machine learning software. In many cases, solutions based on video alone are less reliable. According to Srinath Kolluri, CEO of Oyla, “while video is effective for many surveillance and detection applications, it does not work well in the dark or with highly complex environments. These environments are diverse and include, for example, outdoor public spaces, parking lots, railway stations and crossings, city streets and intersections, airports, casinos, electrical utilities and gas pipelines, government and military facilities, warehouses and many others”. Oyla’s strategy is to enhance traditional video analytics by fusing 3D data into traditional video data processing and visualization workflows. The solution is low cost, leveraging off-the-shelf components for the proprietary LiDAR design, with pricing of the overall video+LiDAR fusion solution at a ~$500 premium to a video only solution (typically $1000-$2500 depending on the features). This pricing is palatable to public agencies like municipalities and national security agencies. An example of how video can be enhanced by LiDAR fusion is shown in Figure 7.

The left side shows traditional RGB data, whereas the right side is a fusion of RGB and LiDAR data (eRGB or enhanced RGB). Essentially, the fused image maintains the inherent x-y resolution of the video data and incorporates a depth dimension into every pixel (at a lower resolution). The key point is that in light starved conditions (or bad weather), the eRGB image picks out objects missed by the RGB video alone. This enables the AI to work more effectively in various types of surveillance and security applications. The fusion solution also makes the data visualization human friendly (LiDAR point clouds are generally meaningless to people other than LiDAR experts). This capability is also being deployed by Oyla customers at traffic intersections to identify safety conflicts between cars and pedestrians, by railway customers for platform safety, and by critical infrastructure customers for facility protection.
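The idea of keeping the video's x-y resolution while attaching a coarser depth value to every pixel can be sketched in a few lines. This is not Oyla's algorithm, which is proprietary and not described here. It simply assumes the depth grid is already aligned with the camera image and uses nearest-neighbour upsampling, and the function names `upsample_depth` and `fuse_rgbd` are made up for this illustration.

```python
def upsample_depth(depth, out_h, out_w):
    """Nearest-neighbour upsample of a low-resolution depth grid to out_h x out_w."""
    in_h, in_w = len(depth), len(depth[0])
    return [
        [depth[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def fuse_rgbd(rgb, depth):
    """Attach a depth value to every RGB pixel: (r, g, b) becomes (r, g, b, depth_m)."""
    h, w = len(rgb), len(rgb[0])
    dense = upsample_depth(depth, h, w)
    return [
        [(*rgb[r][c], dense[r][c]) for c in range(w)]
        for r in range(h)
    ]


# A uniformly dark 4x4 video frame: the RGB values alone reveal nothing.
rgb = [[(10, 10, 10) for _ in range(4)] for _ in range(4)]
# A 2x2 depth grid in metres: background at 5 m, one object at 1.2 m.
depth = [[5.0, 5.0],
         [5.0, 1.2]]

fused = fuse_rgbd(rgb, depth)
print(fused[0][0])  # background pixel
print(fused[3][3])  # pixel covered by the nearby object
```

In this toy frame every pixel has the same color, yet the fused pixels in the lower-right corner carry a much smaller depth, which is the kind of cue that lets downstream analytics pick out an object the video alone would miss.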

It is clear that LiDAR imaging is penetrating various applications in the AoT™ and non-AoT™ domains. The keys for successful larger scale deployments in the latter case are ease of use, graceful integration with AI and visualization software, performance consistency over lighting and weather conditions and competitive costs of ownership (installation, training, capital, operational, lifetime). Over time, smart cities are likely to get smarter and better focused on real customer needs of safety, convenience, security and economics.