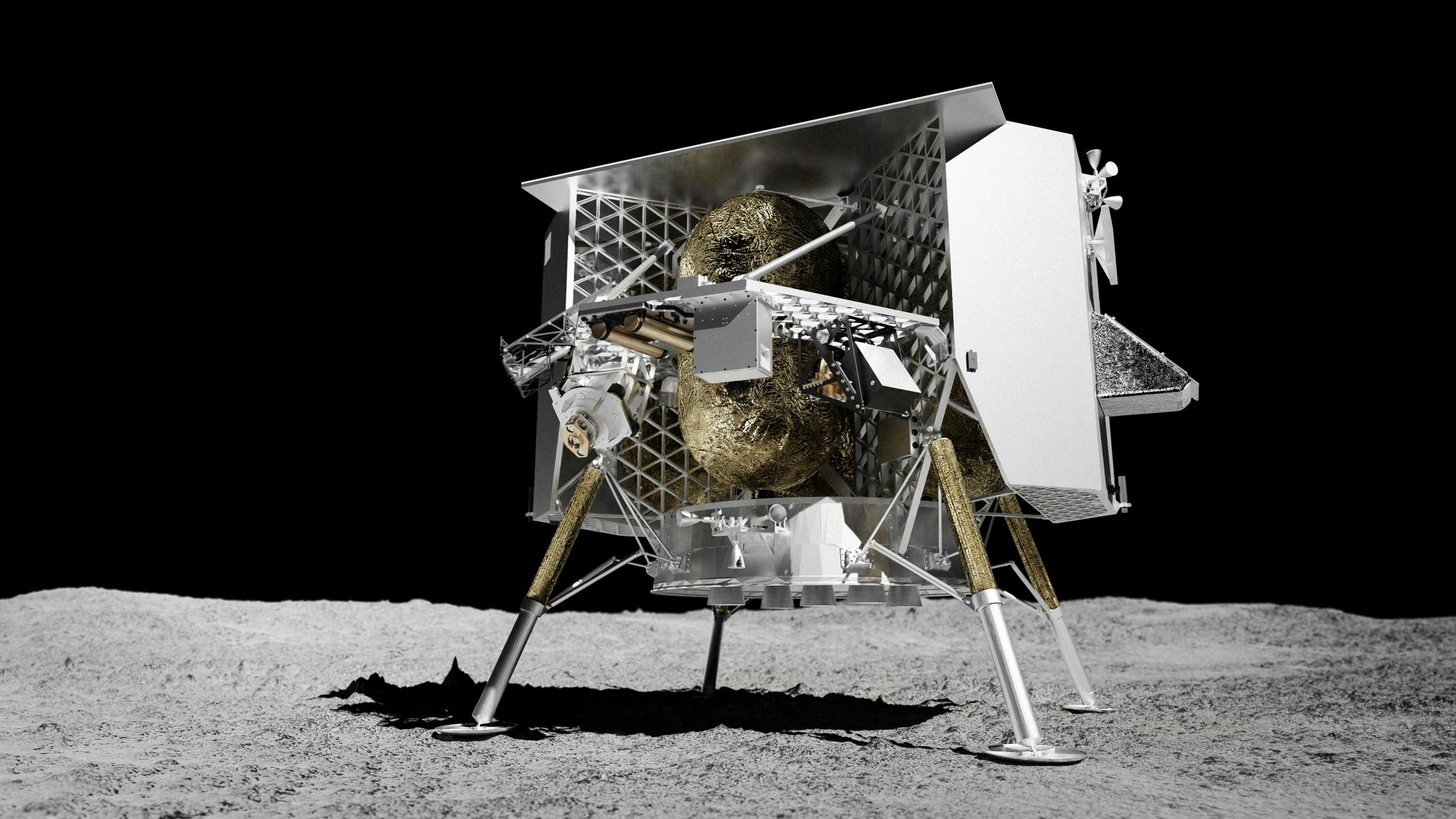

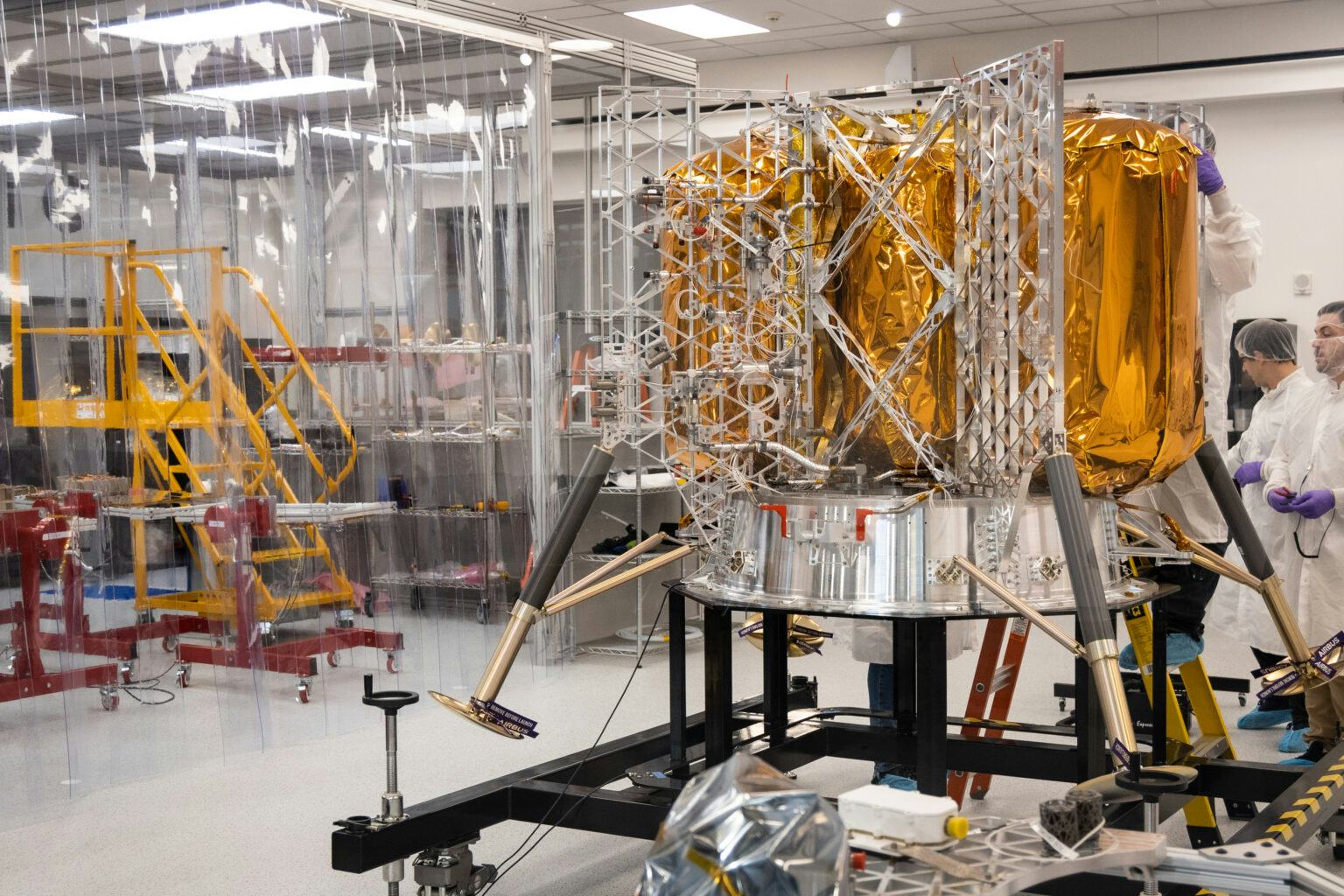

After a 50-year break, humans are finally returning to the Moon. At least, that’s the plan. The soon-to-be-completed Artemis 1 mission has kicked off our lunar reconciliation, and the upcoming Peregrine 1 mission could mark the first vehicle to touch down on the Moon since the Apollo program (if it beats two other companies to the punch).

Things will look a lot different this time than in 1969, when astronaut Neil Armstrong relied on maps to carefully navigate the Lunar Module Eagle onto the Moon’s surface.

Peregrine 1, which is set to take off in early 2023, could mark an entirely new era of lunar landings: People no longer need to do the high-stakes maneuvering on their own.

Under a NASA contract, the Pittsburgh-based space robotics company Astrobotic will launch the uncrewed Peregrine. The landing will rely on tools like the Deep Space Network (NASA’s array of giant radio antennas), motorized telescopes called star trackers, and navigation Doppler lidar to get most of the job done autonomously.

Lidar technology (the name comes from “light detection and ranging”) uses lasers to measure the distance between objects based on the time it takes for light to return to the receiver. Doppler lidar goes a step further with a more complex pulse that can detect the movement and relative speed of those objects.
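For the curious, the time-of-flight idea fits in a few lines of Python. This is only a sketch of the basic arithmetic, not Astrobotic's or NASA's software: the function name and the 10-microsecond example are made up for illustration, and it assumes the pulse travels through a vacuum.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds):
    """Distance to a target, given how long a laser pulse took to return."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so the range is half the total path.
    return SPEED_OF_LIGHT_M_S * round_trip_seconds / 2.0


# Hypothetical example: an echo that comes back after 10 microseconds
# corresponds to a target roughly 1.5 kilometres away.
example_range_m = range_from_round_trip(10e-6)
print(f"{example_range_m:.1f} m")
```

The division by two is the whole trick: the clock measures the trip there and back, but the distance of interest is one way.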

NASA’s Jet Propulsion Laboratory has landed vehicles on Mars several times successfully using radar systems, which rely on radio waves instead of lasers to track speed and location. But for its advantages in terms of cost, size, and power, “we think lidar systems are ideal for these commercial, lower-cost robotic missions to the Moon,” says Andrew Horchler, chief research scientist at Astrobotic.

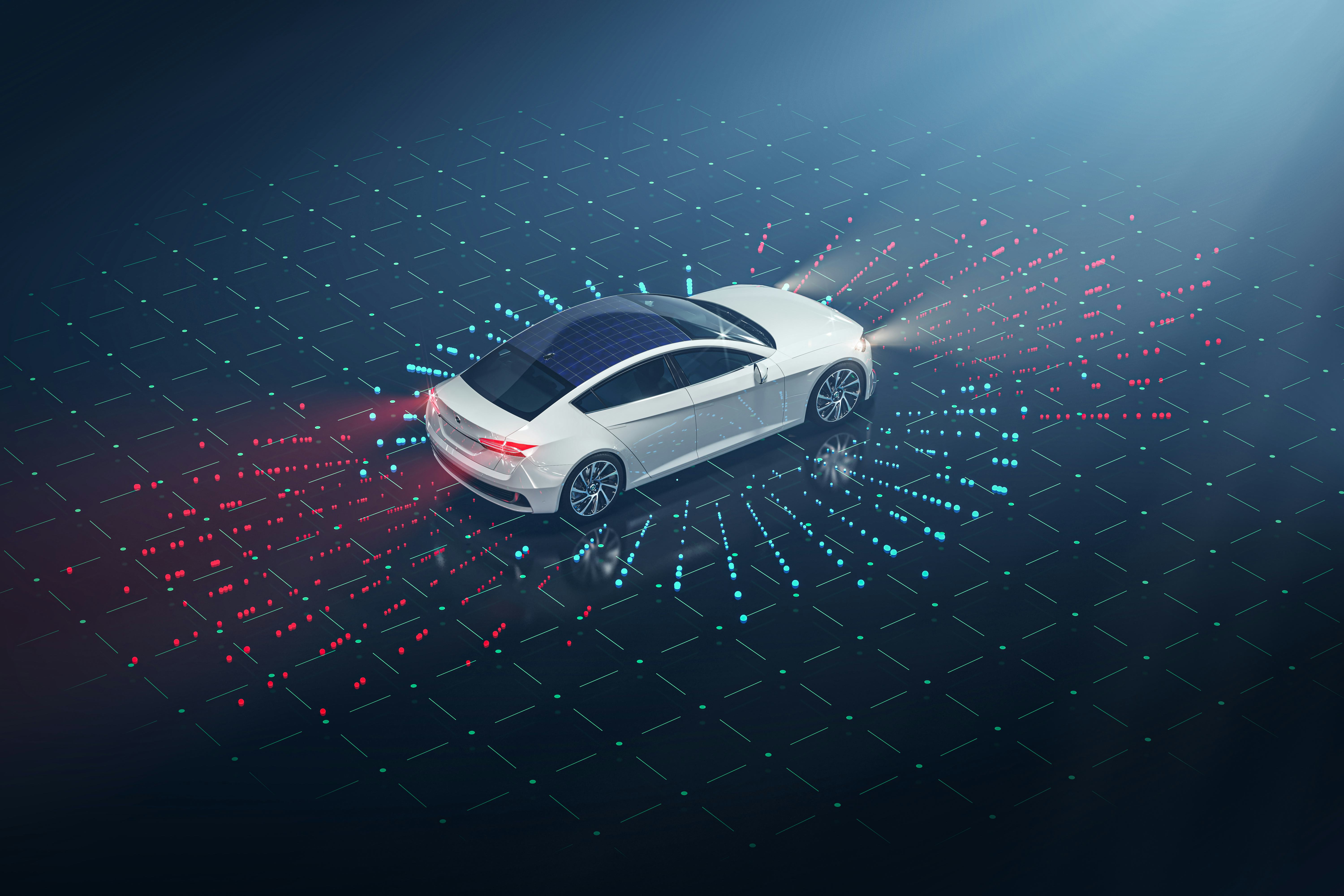

Doppler lidar is also being developed to guide today’s futuristic tech like self-driving cars and drones — now, it will bring a makeover to space navigation that has been decades in the making.

And if Moon missions succeed over the next few years, NASA and companies like Boeing, Lockheed Martin, and SpaceX hope to land crews on Mars. “Exploration of the Moon and Mars is intertwined,” notes the NASA site for the initiative, because the equipment used on lunar missions can be tested and adapted for the Martian surface.

If all goes according to plan, Doppler lidar could support the growing commercialization of space. Unlike in the Apollo days, private companies are beginning to make their mark on the extraterrestrial realm. “It’s really a new space out there,” says Tom Garvey, Astrobotic’s mission operations manager.

Lunar landings of yore

The history of Doppler lidar can be traced back to the early 1960s — soon after the laser was invented — when the Hughes Aircraft Co. created the first lidar instrument. For decades, lidar’s main use was in meteorology, where it tracked cloud size and speed for more precise forecasts.

But even then, researchers speculated it could be useful for studying the Moon. The technology even played a role on Apollo 15 in 1971: The crew used a lidar altitude meter to map the lunar surface. But the ability to bring Doppler lidar to space travel emerged only in the past few years, long after the Apollo program shuttered.

Here’s how things went down on that famous day in July 1969: To start things off, Apollo vehicles fired their engines to enter a lunar orbit.

Apollo used a combined human-computer team to get the lander into orbit position. The onboard crew worked a telescope and a navigational instrument called an optical sextant to measure the angles between heavenly bodies; the computer calculated those angles and processed the resulting directions for the astronauts.

Next, Houston-based staff worked on a massive Apollo Guidance Computer, which helped them navigate and control the spacecraft, to feed the crew’s semi-automated landing process.

But five minutes into Apollo 11’s descent, the computer struggled to complete all its crucial tasks. Armstrong and Buzz Aldrin watched lunar features appear a few seconds earlier than expected. That meant the lander was coming in too fast.

Aldrin called out navigation details as Armstrong guided the Eagle down with a control stick. At just 250 feet above the surface, he changed course as he saw boulders looming in the site they’d been targeting — their maps had missed these crucial details.

As we all know, the astronauts did in fact safely touch down and make “one giant leap for mankind.” Space technology has come a long way since — now, it can take more human action out of the equation.

Getting autonomous

To replace Neil Armstrong’s role in plopping the lander onto the surface, Astrobotic will deploy Doppler lidar. The sensor measures changes in the phase of light beams that bounce off the Moon’s surface. Three optical heads will shoot laser beams at the Moon and measure their return to track the lander’s speed and position during the journey; the navigation software uses that information to ensure that the lander doesn’t run off course or smack too hard onto the surface.
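Why three beams? One plausible reason is geometry: a single beam can only sense motion along its own line of sight, while three beams pointing in different directions give enough information to recover speed in all three dimensions. The sketch below illustrates that idea under stated assumptions. The 22.5-degree tilt, the even 120-degree spacing, and every function name are hypothetical, and the article does not describe how Peregrine's software actually combines its measurements.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def beam_directions(tilt_deg=22.5):
    """Unit vectors for three beams tilted away from straight down.

    The tilt angle and the even 120-degree spacing are assumptions.
    Axes: x and y are horizontal, z points down toward the surface.
    """
    tilt = math.radians(tilt_deg)
    rows = []
    for k in range(3):
        azimuth = math.radians(120.0 * k)
        rows.append((
            math.sin(tilt) * math.cos(azimuth),
            math.sin(tilt) * math.sin(azimuth),
            math.cos(tilt),
        ))
    return rows


def velocity_from_beams(directions, los_speeds):
    """Solve (direction . velocity) = line-of-sight speed for all three beams.

    Uses Cramer's rule, which is fine for a single 3x3 system.
    """
    base = det3(directions)
    if abs(base) < 1e-12:
        raise ValueError("beam directions must not lie in one plane")
    velocity = []
    for col in range(3):
        # Swap one column of the direction matrix for the measured speeds.
        replaced = [
            tuple(los_speeds[r] if c == col else directions[r][c]
                  for c in range(3))
            for r in range(3)
        ]
        velocity.append(det3(replaced) / base)
    return tuple(velocity)


# Hypothetical check: invent a velocity, simulate what each beam would
# measure, then confirm the solver recovers the original numbers.
true_velocity = (1.0, -2.0, 30.0)  # metres per second; z is downward
dirs = beam_directions()
speeds = [sum(u * v for u, v in zip(d, true_velocity)) for d in dirs]
recovered = velocity_from_beams(dirs, speeds)
print([round(x, 6) for x in recovered])
```

If the three beams all lay in one plane, the equations would have no unique answer, which is why the sketch rejects that case.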

More specifically, the sensor can pick up on speed by determining the “phase shift” of the reflected beam. Redshift and blueshift describe how the frequency of the returning light changes with relative motion. When the surface is moving away from the sensor, the light it reflects drops to a lower frequency, which is called redshift; when the surface is approaching, the light rises to a higher frequency, which is called blueshift.
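The link between that shift and speed can be written down directly. For a beam that bounces off a surface and returns to the same sensor, the standard low-speed approximation is that the frequency shift equals twice the closing speed divided by the laser's wavelength. The sketch below applies that formula; the 1,550-nanometer wavelength and the 40-megahertz shift are illustrative assumptions, not figures from the mission.

```python
def closing_speed_from_doppler(frequency_shift_hz, wavelength_m):
    """Closing speed along the beam, from the Doppler shift of the echo.

    Uses the approximation shift = 2 * speed / wavelength, which holds
    when the speed is tiny compared with the speed of light.
    Positive result: approaching. Negative result: moving away.
    """
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return wavelength_m * frequency_shift_hz / 2.0


def describe_shift(frequency_shift_hz):
    """Name the shift: higher frequency is blue, lower frequency is red."""
    if frequency_shift_hz > 0:
        return "blueshift"
    if frequency_shift_hz < 0:
        return "redshift"
    return "no shift"


# Hypothetical numbers: a 1,550 nm laser whose echo returns 40 MHz higher.
wavelength_m = 1.55e-6
shift_hz = 40e6
speed = closing_speed_from_doppler(shift_hz, wavelength_m)
print(describe_shift(shift_hz), f"{speed:.1f} m/s")
```

The factor of two appears because the light is shifted once on the way to the moving surface and again on the way back.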

When it’s 9 miles from the Moon’s surface, the lander is also supported by what’s called a terrain-relative navigation system. At this point, cameras capture a stream of images and the system compares landscape features with a map pre-loaded in the system.

“Very similar to what Neil Armstrong could have done,” Horchler says. In 1969, Armstrong compared the view out the window with the map in his lap to determine the craft’s position relative to the target landing site.

“Our system does that with computer vision,” Horchler explains. “And it will try to line up the pictures it takes and their features with a map we have on board.”

At this point, the system measures the spacecraft’s position relative to coordinates on the Moon, somewhat like a GPS. “It allows us to get down to 100 meters or better accuracy of landing, in the end.”
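A toy version of that map-matching step might look like the following. It is a deliberately simple stand-in, assuming the map and the camera view are small grids of brightness values and that the view is not rotated or scaled; real terrain-relative navigation has to cope with lighting, perspective, and much larger images. The function name and the sample numbers are invented for illustration.

```python
def locate_patch(map_grid, patch):
    """Find where a small camera patch best fits inside a larger map.

    Slides the patch over every possible position and scores each one
    by the sum of squared brightness differences (lower is better).
    Returns the (row, column) of the best-fitting top-left corner.
    """
    map_rows, map_cols = len(map_grid), len(map_grid[0])
    patch_rows, patch_cols = len(patch), len(patch[0])
    best_offset, best_score = None, float("inf")
    for r in range(map_rows - patch_rows + 1):
        for c in range(map_cols - patch_cols + 1):
            score = sum(
                (map_grid[r + i][c + j] - patch[i][j]) ** 2
                for i in range(patch_rows)
                for j in range(patch_cols)
            )
            if score < best_score:
                best_offset, best_score = (r, c), score
    return best_offset


# Hypothetical 5x5 "map" of brightness values stored on board.
onboard_map = [
    [1, 2, 3, 4, 5],
    [2, 9, 1, 0, 3],
    [4, 7, 8, 2, 1],
    [3, 5, 6, 9, 0],
    [0, 1, 4, 2, 7],
]

# A slightly noisy camera view of the region whose corner is at row 2, column 1.
camera_view = [
    [7, 8],
    [5, 7],
]

print(locate_patch(onboard_map, camera_view))
```

Once the best-fitting position is known, the offset between where the patch was found and where it was expected tells the navigation software how far off course the craft is.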

The lander combines all this data from the craft’s navigation system with readings from other onboard instruments, including a star tracker that examines celestial bodies and determines the craft’s bearing in space based on their pattern.

The spacecraft’s navigation software also determines when to fire its engines to maintain or change its path, when to stop, and when to gently touch down and deploy its precious cargo.

Non-lunar Lidar

Down here on Earth, Doppler lidar is used to create detailed maps and guide drones, among other high-tech uses. Recently, the tech has drawn attention for its utility in self-driving cars — it can perceive the speed and distance of nearby vehicles.

These terrestrial uses wouldn’t be possible without the technology’s space origins. Doppler lidar developed for precise NASA landings yielded the precision needed for self-driving cars to navigate traffic and the ability to blend data from multiple sensors. NASA credits its engineers for the path from the Moon to Main Street. Now, the technology is returning to its roots.

There could be a downside to this development. As Doppler lidar makes its way into extraterrestrial landings, it could worsen space communications traffic. After all, Astrobotic will be one of many clients using NASA’s network of deep space satellite dishes located around the world to determine its position and communicate with the ground team. Another user of that network taking up plenty of bandwidth at the moment: the James Webb telescope.

“They have a lot of NASA missions using their antenna network, as well as now a few commercial missions like ourselves,” Garvey says. “It’s certainly getting crowded.” So far, the bandwidth needed for Peregrine’s pathfinding to lunar orbit is not at risk.

While it seems promising, Astrobotic won’t know how well the new Doppler lidar system works until it’s actually deployed up in space. When the time comes, Horchler estimates that the landing process will take 10 to 15 minutes. That is, if everything works as planned.

“It’s going to either end well or it’s going to end badly,” says Horchler with a laugh. “We will land one way or another.” A spacecraft can’t just hover over the Moon’s surface forever. “The data we gain each step of the way is invaluable.”