Comparing the RTX 3050 and Arc A750 continues from our previous look at the RTX 3050 vs RX 6600, this time swapping out AMD for Intel in the popular $200 price bracket. Nvidia failed to take down AMD, but does it fare any better against Intel? These are slightly older GPUs, as no company has seen fit to offer anything more recent in the budget sector, so let's find out who wins this matchup.

The RTX 3050 debuted at the start of 2022 and represents Nvidia's latest xx50-class desktop graphics card, with no successor in the pipeline for the RTX 40-series (at least that we know of). The card nominally launched at $249, though we were still living in an Ethereum crypto mining world so most cards ended up selling well above that mark. Two and a half years later, it's one of the few RTX 30-series Ampere GPUs that remains relatively available, and it's now going for around $199, the rest having been supplanted by the newer Ada Lovelace GPUs.

The Arc A750 is Intel's mainstream / budget offering based on the Arc Alchemist GPU architecture. It debuted two years ago and has been one of the most promising offerings for Intel's first lineup of discrete graphics cards in over 20 years. Driver support was a big concern at launch, but Intel has made excellent progress on that front, which could sway things in favor of the Intel GPU in this face-off. Maybe. Similar to the RTX 3050, the A750 started out at a higher price ($289), then saw an official price cut to $249, and now it's often selling at or below $199 in the wake of new graphics card releases from Nvidia and AMD.

While we're now a couple of years past the 3050 and A750 launch dates, they remain relevant due to the lack of new sub-$200 options. So, let's take an updated look at these budget-friendly GPUs in light of today's market and see how they stack up. We'll discuss performance, value, features, technology, software, and power efficiency — and as usual, those are listed in order of generally decreasing importance.

RTX 3050 vs Arc A750: Performance

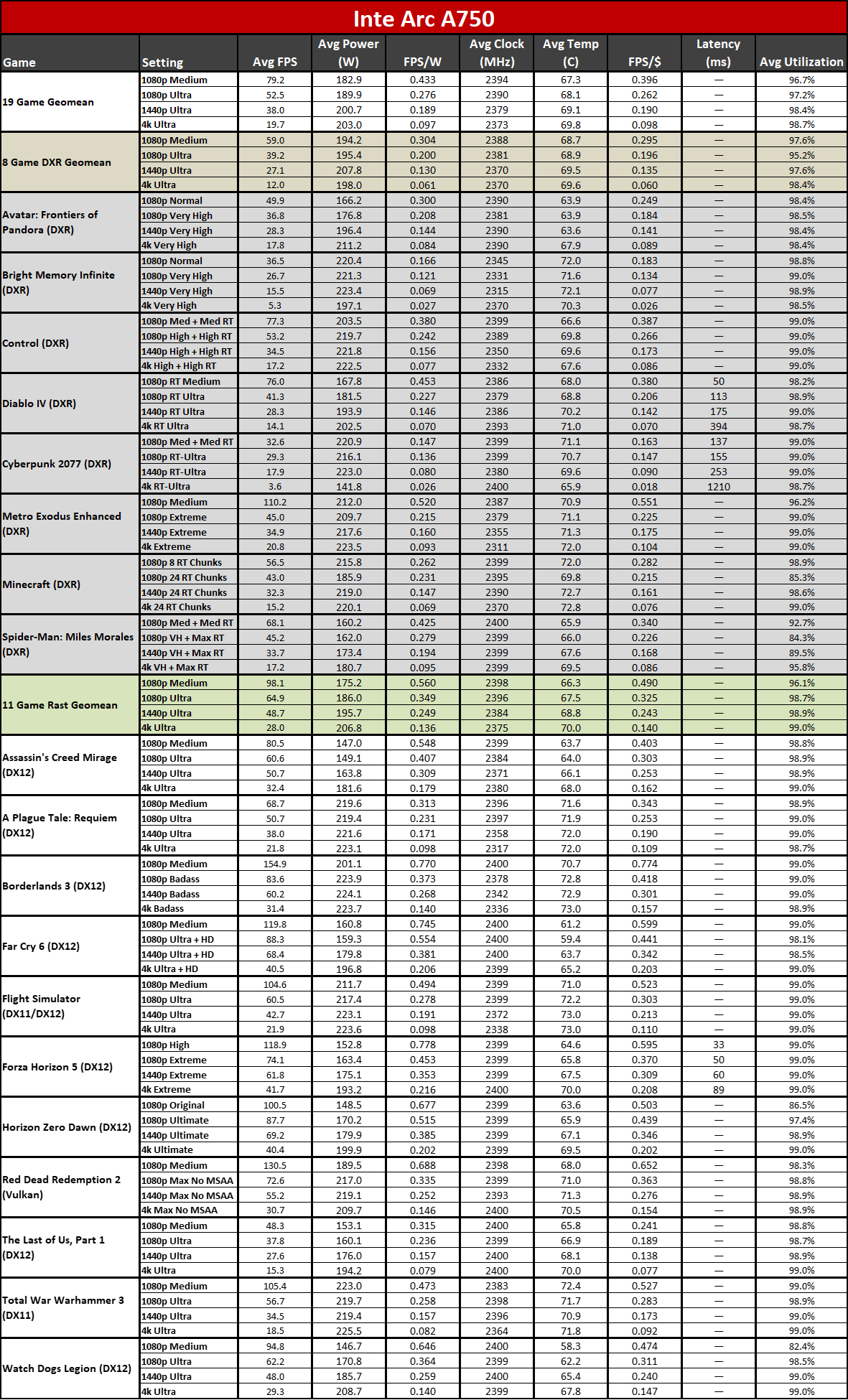

Intel made a big deal about the Arc A750 targeting the RTX 3060 when it launched, so dropping down to the RTX 3050 should give the A750 an easy victory... and it does. The A750 shows impressive performance compared to the 3050, with both rasterization and ray tracing performance generally favoring the Intel GPU by a significant margin.

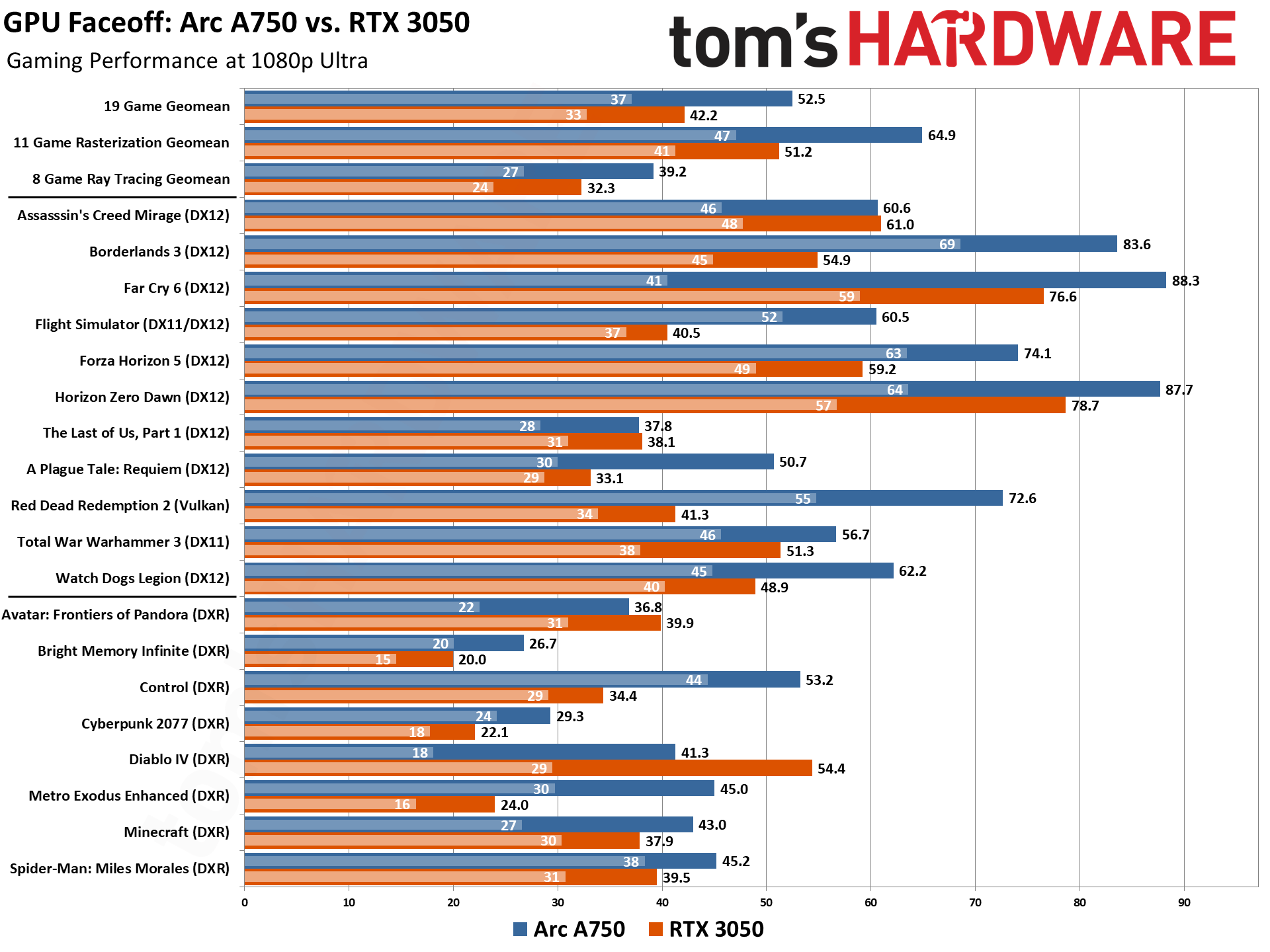

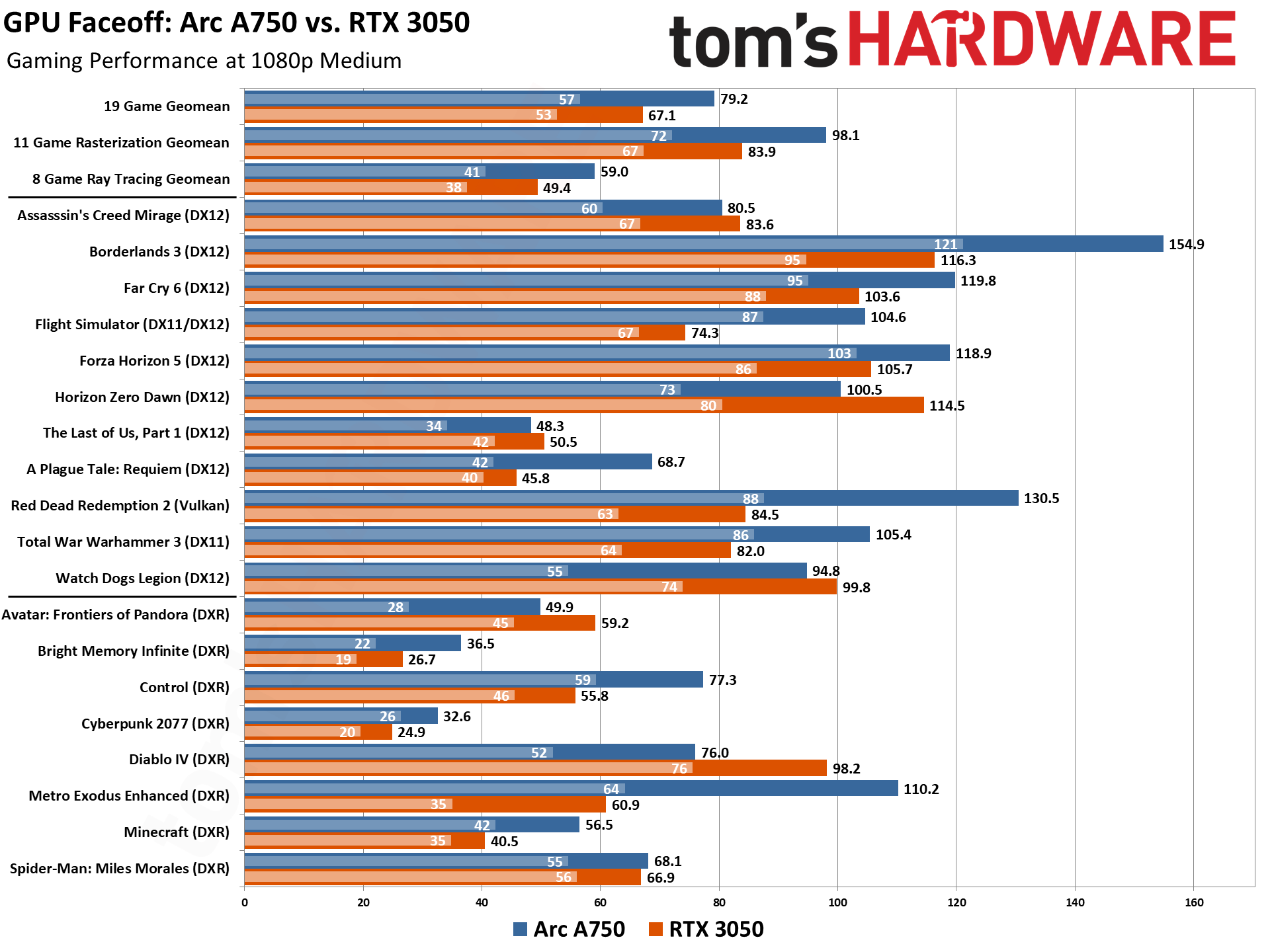

At 1080p ultra the Arc card boasts an impressive 25% performance lead over the RTX 3050 in our 19 game geomean. That grows to 27% when looking just at rasterization performance, and conversely shrinks to 21% in the ray tracing metric. Regardless, it's an easy win for Intel. The RTX 3050 does a bit better at 1080p medium, but the Arc A750 still wins by a considerable 18% across our test suite.
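For readers unfamiliar with the term, a geomean (geometric mean) averages per-game results so that no single high-FPS game dominates the overall number. Here is a minimal Python sketch of how such a figure could be computed. The function name and the per-game FPS values are illustrative placeholders, not measurements from our test suite.

```python
import math

def geomean(values):
    """Geometric mean: n-th root of the product, computed via logs for stability."""
    if not values:
        raise ValueError("need at least one value")
    if any(v <= 0 for v in values):
        raise ValueError("all values must be positive")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical per-game average FPS (placeholders, NOT benchmark data).
fps_a750 = [90.0, 60.0, 45.0]
fps_3050 = [60.0, 50.0, 45.0]

# Relative lead of one card over the other across the whole suite.
lead = geomean(fps_a750) / geomean(fps_3050) - 1.0
print(f"Geomean lead: {lead:.1%}")
```

One useful property: the ratio of two geomeans equals the geomean of the per-game ratios, so a game running at 300 fps counts no more than one running at 30 fps.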

That's not to say that Intel wins in every single game of our test suite, however, and the exceptions likely come down to the same old issue of drivers. Diablo IV (with ray tracing; it runs much better in rasterization mode) is clearly not doing well on Arc. Avatar, another more recent release, also performs somewhat worse. The Last of Us and Assassin's Creed Mirage, the other two newer releases, are at best tied (ultra settings) and at worst slightly slower on the A750. You might be noticing a pattern here. So while Intel drivers have gotten better, they're still not at the same level as Nvidia. Most of the other games favor the A750 by a very wide margin of roughly 25–55 percent.

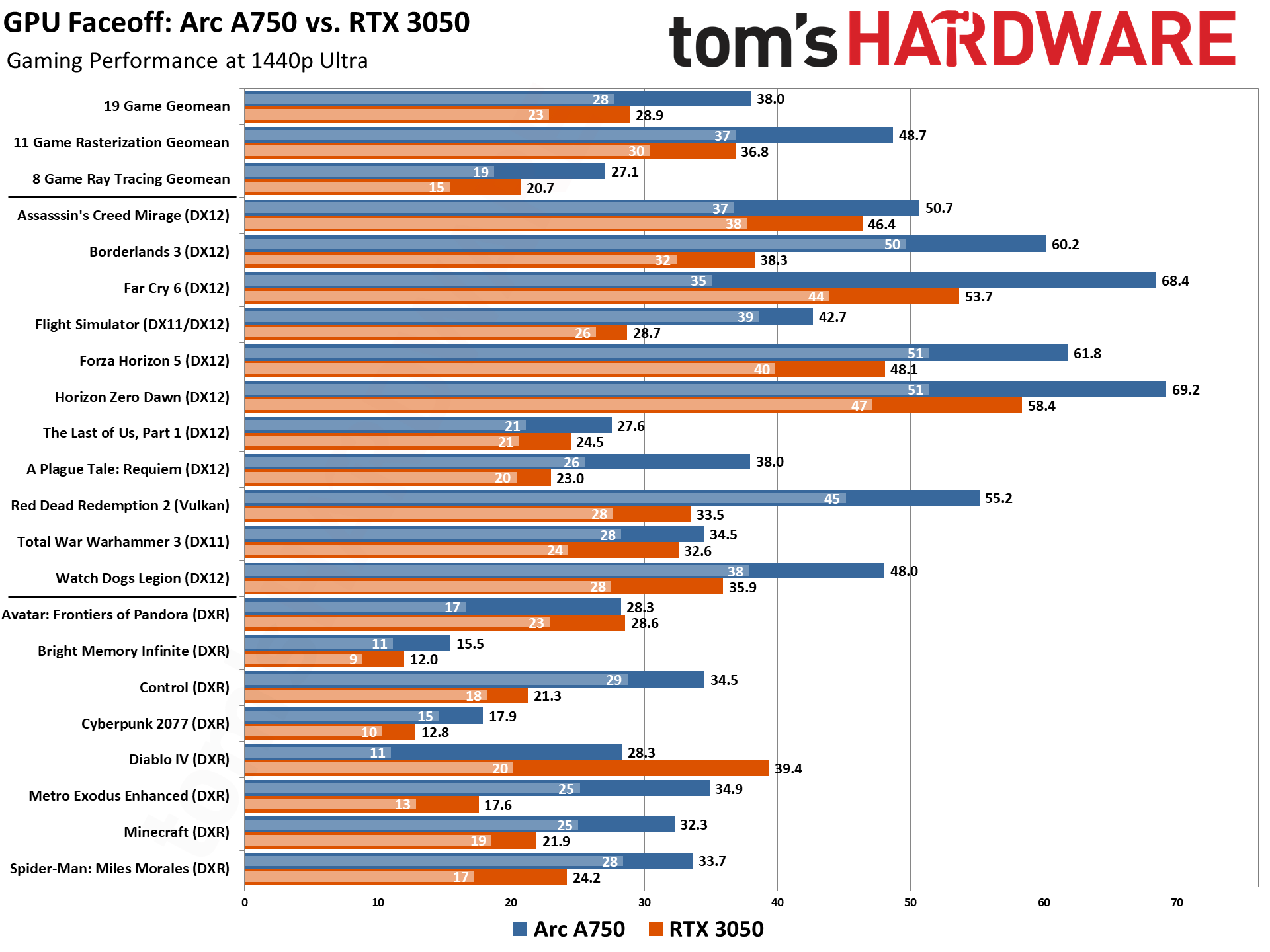

The lead gets more favorable for the Arc A750 as we increase resolution. At 1440p, the Intel GPU is roughly 32% ahead of the RTX 3050, in all three of our geomeans. The only game where the RTX 3050 still leads is Diablo IV, again likely a driver issue with the ray tracing update that came out earlier this year. (Our advice would be to turn off RT as it doesn't add much in the game, but we do like to use it as a GPU stress test.)

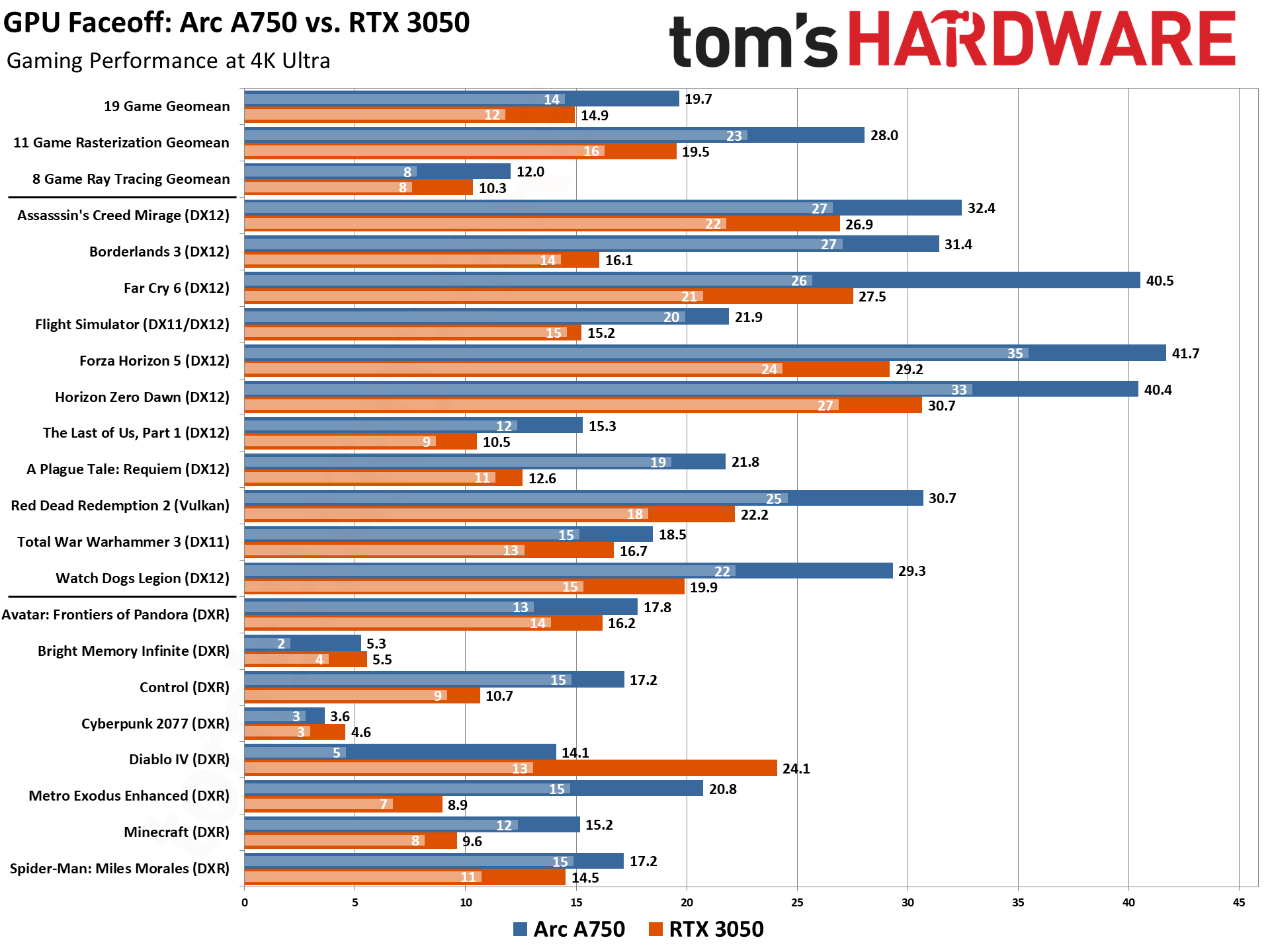

Things get a little bumpy for the A750 at 4K ultra, with good rasterization performance (even better than the 1440p results, with a whopping 43.6% lead) but several issues in ray tracing games. The Arc card loses a decent chunk of its lead in RT, sliding down to 17% overall, with three games (Diablo IV again, Cyberpunk 2077, and Bright Memory Infinite) all performing better on the 3050. Diablo IV routinely dips into the single digits for FPS, and it generally needs more than 8GB of VRAM to run properly at 4K with all the RT effects enabled.

Performance Winner: Intel

It's a slam dunk for the Arc A750 in this round. The Intel GPU dominates the RTX 3050 even in ray tracing games, an area where Nvidia often holds a strong lead over rival AMD's competing GPUs. Intel may still have driver issues to work out, but the underlying architecture delivers good performance when properly utilized, at least relative to Nvidia's previous generation budget / mainstream GPUs.

RTX 3050 vs Arc A750: Price

Pricing has fluctuated quite a bit in recent days, what with Prime Day sales. We saw the Arc A750 drop as low as $170 at one point, but over a longer period it's been hanging out at the $200 mark. RTX 3050 8GB cards also cost around $200 — and again, note that there are 3050 6GB cards that cut performance quite a bit while dropping the price $20–$30; we're not looking at those cards.

If you want to be fully precise, the RTX 3050 often ends up being the higher-priced card. The cheapest variant, an MSI Aero with a single fan, costs $189 right now. $5 more gets you a dual-fan MSI Ventus 2X. Arc A750 meanwhile has sales going right now that drop the least expensive model to $174 for an ASRock card, or $179 for a Sparkle card. Both are "normally" priced at $199, however, and we expect them to return to that price shortly.

But RTX 3050 inventory isn't that great, and before you know it, you start seeing 3050 listings for $250, $300, or more. That's way more than you should pay for Nvidia's entry-level GPU. Arc A750 listings are far more consistent, with most models priced at exactly $199.99. There may be fewer A750 models overall (only seven exist that we know of), but most are priced to move.

Pricing: Tie

While we could call the Arc A750 the winner based on current sales, in general we've seen the two GPUs separated by just $5–$10. Prices will continue to fluctuate, and inventory of the RTX 3050 could also disappear. But for the here and now, we're declaring the price a tie because there's usually at least one inexpensive card for either GPU priced around $190–$200. If there's not, wait around for the next sale.

RTX 3050 vs Arc A750: Features, Technology, and Software

Unlike our recent RX 6600 vs. Arc A750 comparison, Intel's Arc A750 and Alchemist architecture look a lot more like Nvidia's RTX 3050 and its Ampere architecture. Both the Arc A750 and RTX 3050 sport hardware-based acceleration for AI tasks. The RTX 3050 boasts third-generation tensor cores, each of which is capable of up to 512 FP16 operations per cycle. The Arc A750 has Xe Matrix eXtensions (XMX), with each XMX core able to do 128 operations per cycle. Each XMX core does less work per cycle, but the A750 has far more of them than the 3050 has tensor cores, and it runs at higher clocks, which ends up giving the A750 a relatively large theoretical advantage. Both GPUs also come with good ray tracing hardware.

But let's start with the memory configurations. The RTX 3050 uses a mainstream 128-bit wide interface accompanied by 8GB of GDDR6 operating at 14 Gbps. The Arc A750 has a 256-bit interface, still with only 8GB of capacity, but the memory runs at 16 Gbps. The bus width and memory speed advantage on the A750 gives the Intel GPU a massive advantage in memory bandwidth, with roughly 2.3X as much bandwidth as the RTX 3050: 512 GB/s vs 224 GB/s. And there's no large L2/L3 cache in either of these architectures to further boost the effective bandwidth.
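The bandwidth figures follow directly from bus width and per-pin data rate. As a quick sanity check, here is a small Python sketch (the function name is ours, purely for illustration) that reproduces the numbers from the specs quoted above.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

# Specs as quoted in the text above.
rtx_3050_bw = memory_bandwidth_gbs(128, 14)  # 128-bit bus, 14 Gbps GDDR6
arc_a750_bw = memory_bandwidth_gbs(256, 16)  # 256-bit bus, 16 Gbps GDDR6

ratio = arc_a750_bw / rtx_3050_bw
print(f"RTX 3050: {rtx_3050_bw:.0f} GB/s")
print(f"Arc A750: {arc_a750_bw:.0f} GB/s")
print(f"A750 advantage: {ratio:.2f}x")
```

Doubling the bus width accounts for most of the gap, with the faster 16 Gbps memory adding the rest.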

It's worth mentioning that Nvidia GPUs generally have less physical memory bandwidth than their similarly priced competition. Nvidia compensates for this fact with its really good memory compression, which reduces the memory load. We aren't sure if Intel is using memory compression or how good it might be, but regardless, it's obvious from the performance results that the A750's robust memory subsystem has more than enough bandwidth to defeat the RTX 3050.

The physical dimensions of the GPUs are also quite different. The Arc A750's ACM-G10 uses a colossal 406 mm^2 die, while the RTX 3050 uses a significantly smaller die that's only 276 mm^2. Of course, Ampere GPUs are made on Samsung's 8N node while Intel uses TSMC's newer and more advanced N6 node. Transistor count scales with size and process technology, with the A750 packing 21.7 billion transistors compared to the RTX 3050's comparatively tiny 12 billion.

These differences can be seen as both a pro and con for the Arc A750. Its bigger GPU with more transistors obviously helps performance compared to the RTX 3050. However, this also represents a weakness of Intel's architecture. The fact that Intel has 80% more transistors and a chip that's nearly 50% larger, on a more advanced node, means that the ACM-G10 has to cost quite a bit more to manufacture. It also shows Intel's relative inexperience in building discrete gaming GPUs, and we'd expect to see some noteworthy improvements with the upcoming Battlemage architecture.
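To put the die comparison in concrete terms, the ratios can be worked out from the die sizes and transistor counts quoted above. The following Python sketch does the arithmetic. The density figures it prints are derived values, not official specifications, and the variable names are only illustrative.

```python
# Figures as quoted in the text: die area in mm^2, transistors in billions.
a750 = {"die_mm2": 406.0, "transistors_b": 21.7}
rtx_3050 = {"die_mm2": 276.0, "transistors_b": 12.0}

# Relative differences (A750 versus RTX 3050).
extra_transistors = a750["transistors_b"] / rtx_3050["transistors_b"] - 1.0
extra_area = a750["die_mm2"] / rtx_3050["die_mm2"] - 1.0

def density_mtr_per_mm2(chip):
    """Derived transistor density in millions of transistors per mm^2."""
    return chip["transistors_b"] * 1000.0 / chip["die_mm2"]

print(f"Extra transistors: {extra_transistors:.0%}")
print(f"Extra die area:    {extra_area:.0%}")
print(f"A750 density:      {density_mtr_per_mm2(a750):.1f} MTr/mm^2")
print(f"RTX 3050 density:  {density_mtr_per_mm2(rtx_3050):.1f} MTr/mm^2")
```

The higher derived density on the Intel chip is what you'd expect from the newer process node, which is part of why the larger die is likely the more expensive one to make.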

Put another way, if we use transistor count as a measuring stick, the Arc A750 on paper should be performing more on par with the RTX 3070 Ti. That's assuming similarly capable underlying architectures, meaning Intel's design uses a lot more transistors to ultimately deliver less performance. Yes, the A750 beats up on the 3050 quite handily, but the RTX 3070 Ti wipes the floor with roughly 60% higher performance overall, and it does so with fewer transistors and a smaller chip on an older process node.

We can make a similar point when it comes to raw compute performance. On paper, the A750 delivers 17.2 teraflops of FP32 compute for graphics, and 138 teraflops of FP16 for AI work. That's 89% more than the RTX 3050, so when combined with the bandwidth advantage, you'd expect the A750 to demolish Nvidia's GPU. It wins in performance, but even a 25% advantage is a far cry from the theoretical performance.
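The gap between paper specs and delivered performance can be quantified with the figures above. This Python sketch is a rough back-of-the-envelope estimate: the "relative utilization" metric is our own illustrative construct, and the RTX 3050 teraflops value is implied from the 89% figure rather than stated directly.

```python
# Figures quoted in the text.
A750_FP32_TFLOPS = 17.2
THEORETICAL_LEAD = 0.89  # A750 paper advantage over the RTX 3050
OBSERVED_LEAD = 0.25     # overall 1080p ultra performance lead

# RTX 3050 FP32 throughput implied by the 89% figure (derived, not quoted).
implied_3050_tflops = A750_FP32_TFLOPS / (1.0 + THEORETICAL_LEAD)

# How much performance the A750 gets per theoretical teraflop,
# relative to the RTX 3050 (1.0 would mean parity).
relative_utilization = (1.0 + OBSERVED_LEAD) / (1.0 + THEORETICAL_LEAD)

print(f"Implied RTX 3050 FP32: {implied_3050_tflops:.1f} TFLOPS")
print(f"A750 performance per TFLOP vs 3050: {relative_utilization:.0%}")
```

In other words, under these assumptions the A750 extracts roughly two-thirds as much gaming performance from each theoretical teraflop as the RTX 3050 does.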

It's a bit more difficult to make comparisons on the RT hardware side of things. Nvidia tends to be cagey about exactly how its RT cores work, often reporting things like "RT TFLOPS" based on a formula or benchmark that it doesn't share. What we can say is that, based on the gaming performance results, when Arc drivers are properly optimized, the RT throughput looks good. We see this in Minecraft, a demanding "full path tracing" game that can seriously punish weaker RT implementations. Pitting the A750's 28 ray tracing cores against the 3050's 20 cores, Intel comes out roughly 40% faster at 1080p medium as an example. So, 40% more cores, 40% more performance.

While Intel might have the hardware grunt, however, software and drivers heavily favor Nvidia. Nvidia has been doing discrete gaming GPU software/driver development much longer than Intel, and Intel has previously admitted that all of its prior work on integrated graphics drivers didn't translate over to Arc very well. Nvidia's drivers are the gold standard other competitors need to strive to match, and while AMD tends to be relatively close these days, Intel still has some clear lapses in optimizations. As shown earlier, check out some of the newer games (Diablo IV, Avatar, The Last of Us, and Assassin's Creed Mirage) and it's obvious those aren't running as well on the A750 as some of the older games in our test suite.

Intel has come a long way since the initial Arc launch, especially with older DX11 games, but its drivers still can't match AMD or Nvidia. Intel has a good cadence going with driver updates, often releasing one or more new drivers each month, but it still feels like a work in progress at times. Arc generally doesn't play as well as its competitors in day one releases, and we've seen quite a few bugs over the past year where you'll need to wait a week or two before Intel gets a working driver out for a new game.

Features are good for Intel, but again nowhere near as complete as what Nvidia offers. Nvidia has DLSS, Intel has XeSS (and can also run AMD FSR 2/3 when XeSS isn't directly supported). As far as upscaling image quality goes, DLSS still holds the crown, but XeSS 1.3 in particular looks better than FSR 2 in almost all cases that we've seen. Frame generation isn't something we're particularly worried about, but Nvidia has a bunch of other AI tools like Broadcast, VSR, and Chat RTX. It also has Reflex, and DLSS support is in far more games and applications than XeSS.

The driver interface also feels somewhat lacking on Intel. There's no way to force V-sync off as an example, which can sometimes impact performance. (You have to manually edit a configuration file to remove vsync from Minecraft, for example — if you don't, performance suffers quite badly.) Maybe for the uninitiated the lack of a ton of options to play with in the drivers could feel like a good thing, but overall it's clearly a more basic interface than what Nvidia offers.

Features, Technology, and Software Winner: Nvidia

This one isn't even close, at all. The Intel Arc experience has improved tremendously since 2022, but it still lags behind. Intel isn't throwing in the towel, and we're hoping that Battlemage addresses most of the fundamental issues in both the architecture as well as software and drivers. But right now, running an Arc GPU can still feel like you're beta testing at times. It's fine for playing popular, older games, but newer releases tend to be much more problematic on the whole.

RTX 3050 vs Arc A750: Power Efficiency

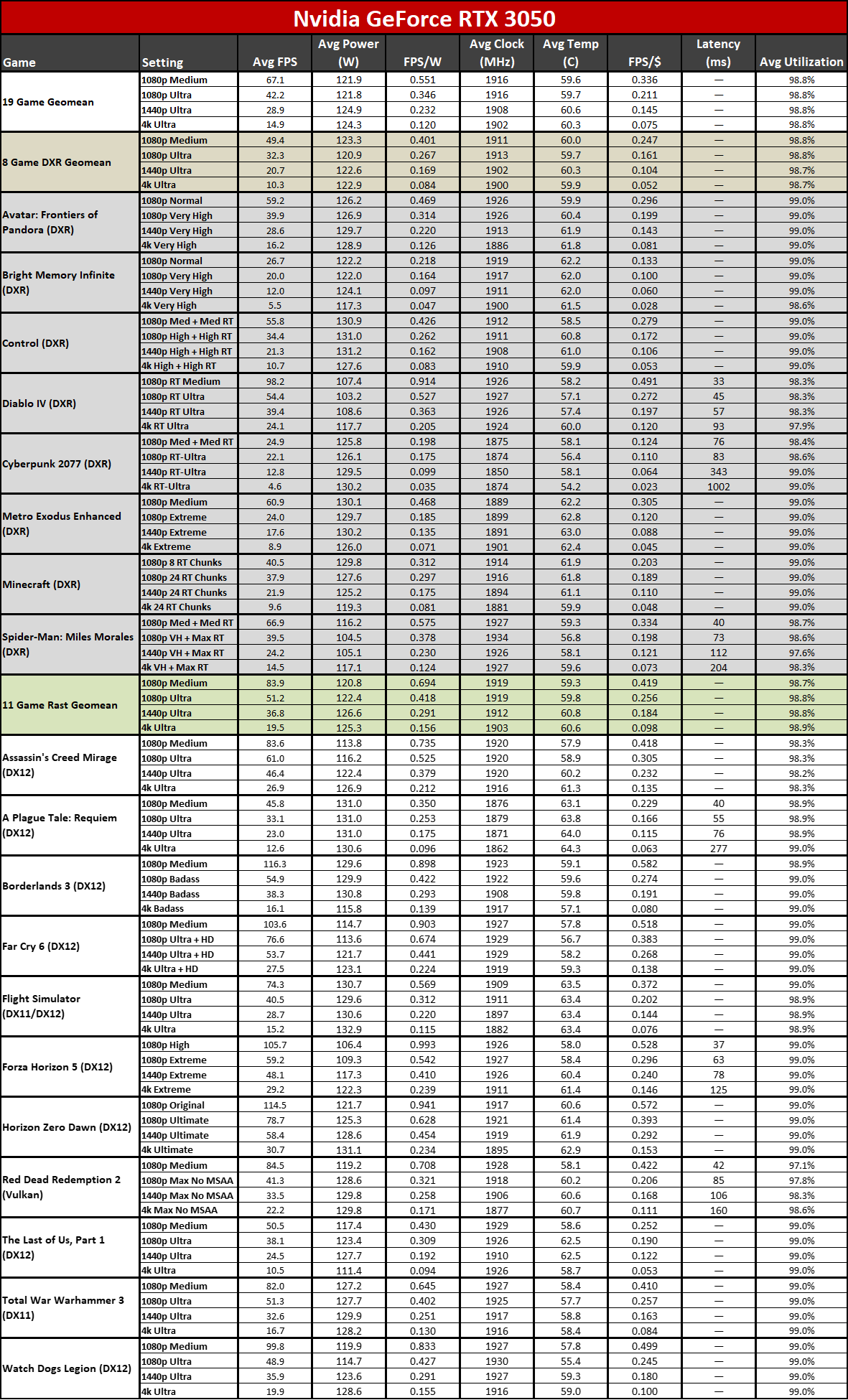

Power consumption and energy efficiency both heavily favor the RTX 3050. Even though the Arc A750 outperforms the RTX 3050 in raw performance, the RTX 3050 gets the upper hand in all of our power-related metrics. And again, this is with a less advanced manufacturing node — Nvidia's newer RTX 40-series GPUs that use TSMC's 4N process blow everything else away on efficiency right now.

The RTX 3050 pulled roughly 123 watts on average across our testing suite. Peak power use topped out at around 131W in a few cases, matching the official 130W TGP (Total Graphics Power) rating. The Nvidia GPU pulled a bit less power at 1080p medium (122W), but basically we're GPU bottlenecked in almost all of our tests, so the card runs flat out.

The Arc A750 officially has a 225W TDP, and actual real-world power use was often 20–30 watts below that. But that's still at least 60W more power use than Nvidia's card, basically matching the power draw of the significantly faster RTX 3060 Ti. 1080p medium proved to be the least demanding, averaging 183W. That increased to 190W at 1080p ultra, 201W for 1440p ultra, and 203W at 4K ultra.

Divide the framerate by power and we get efficiency in FPS/W. Across all resolutions, efficiency clearly favors the RTX 3050. It's about 25% more efficient at each test resolution and setting. For example, the 3050 averaged 0.551 FPS/W at 1080p medium compared to the A750's 0.433 FPS/W.
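The efficiency numbers can be cross-checked against the power figures with a little arithmetic. The Python sketch below assumes the 122W and 183W averages quoted earlier are the 1080p medium results. The implied frame rates are derived from FPS/W multiplied by watts, not read off our charts.

```python
def fps_per_watt(avg_fps: float, avg_watts: float) -> float:
    """Efficiency metric used in the text: average frame rate divided by average power."""
    return avg_fps / avg_watts

# Quoted 1080p medium efficiency (FPS/W) and average power draw (W).
EFF_3050, WATTS_3050 = 0.551, 122.0
EFF_A750, WATTS_A750 = 0.433, 183.0

# Efficiency advantage of the RTX 3050 over the Arc A750.
efficiency_advantage = EFF_3050 / EFF_A750 - 1.0

# Implied average frame rates (derived values: FPS/W x W).
implied_fps_3050 = EFF_3050 * WATTS_3050
implied_fps_a750 = EFF_A750 * WATTS_A750
implied_perf_lead = implied_fps_a750 / implied_fps_3050 - 1.0

print(f"3050 efficiency advantage: {efficiency_advantage:.0%}")
print(f"Implied A750 performance lead: {implied_perf_lead:.0%}")
```

The implied performance lead lands close to the 18% figure from the 1080p medium results, so the power and efficiency numbers are at least self-consistent.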

The RTX 3050's power efficiency speaks to Nvidia's experience in building discrete GPU architectures. Even with a less advanced "8nm" process node (Samsung 8N in actuality is more of a refined 10nm-class node), going up against TSMC's "6nm" node, Nvidia comes out ahead on efficiency. The A750 wins the performance comparison, but only by using 50–65 percent more power. And that's not the end of the world, as even a 200W graphics card isn't terribly difficult to power or cool, but there's a lot of room for improvement.

Power Efficiency Winner: Nvidia

This is another area where the RTX 3050 easily takes the win. Not only does it use 30–40 percent less power, depending on the game, but it's also about 25% more efficient. This isn't the most important metric, but if you live in an area with high electricity costs, that may also be a factor to consider. It's also worth noting that idle power use tends to be around 40W on the A750, compared to 12W on the 3050, with lighter workloads like watching videos also showing a pretty sizeable gap.
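If you want to estimate what the power gap means for your electricity bill, a rough calculation is easy to set up. In the Python sketch below, the wattage gaps come from the figures quoted above (assuming the 183W and 122W averages are the 1080p medium results), while the usage hours and electricity price are purely hypothetical placeholders that you should replace with your own.

```python
def annual_cost_delta(watts_delta: float, hours_per_day: float,
                      price_per_kwh: float, days: int = 365) -> float:
    """Extra yearly cost of drawing `watts_delta` more watts for `hours_per_day`."""
    kwh_per_year = watts_delta / 1000.0 * hours_per_day * days
    return kwh_per_year * price_per_kwh

# Power gaps from the text: gaming (183W vs 122W) and idle (40W vs 12W).
GAMING_GAP_W = 183.0 - 122.0
IDLE_GAP_W = 40.0 - 12.0

# Hypothetical usage pattern and rate (assumptions, adjust to taste).
GAMING_HOURS = 2.0
IDLE_HOURS = 6.0
PRICE_PER_KWH = 0.30

extra_cost = (annual_cost_delta(GAMING_GAP_W, GAMING_HOURS, PRICE_PER_KWH)
              + annual_cost_delta(IDLE_GAP_W, IDLE_HOURS, PRICE_PER_KWH))
print(f"Estimated extra cost per year for the A750: {extra_cost:.2f}")
```

With a usage pattern like this one, the idle gap can matter as much as the gaming gap, simply because a PC usually spends more hours idling than gaming.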

RTX 3050 vs. Arc A750 Verdict

Unfortunately (or fortunately, depending on your viewpoint), we are calling this one a draw. Both GPUs stand up very well against each other but have totally different strengths and weaknesses.

The bulk of the Arc A750's strength lies in its raw performance. It handily beats the RTX 3050 in rasterized games, and generally comes out ahead in ray tracing games as well, except in those cases where drivers hold it back. Thanks to its bigger GPU and higher specs, with more raw compute and memory bandwidth, it comes out with some significant performance leads. If you're willing to risk the occasional driver snafu in new releases, and you only want the most performance per dollar spent, the A750 is the way to go. It gets two points for performance, since that's often the most important category in these faceoffs, and it comes out with a relatively large lead of around 30% (unless you only play at 1080p medium).

But despite the A750's strong performance, the RTX 3050 also has a lot of upsides. Arguably, the biggest strength of the 3050 is the fact that it's an Nvidia GPU. With Nvidia hardware, you generally know you're getting a lot of extras outside of raw performance. Nvidia cards have more features (e.g. DLSS, Reflex, Broadcast, VSR) and arguably the best drivers of any GPU. CUDA apps will run on everything from a lowly RTX 3050 up to the latest RTX 4090 and even data center GPUs, as long as your GPU has sufficient VRAM for the task at hand.

Power consumption and efficiency are less essential advantages, but the gap is more than large enough to be meaningful. PC users looking to pair a budget GPU with lower wattage power supplies will be far better off with the RTX 3050 in general, as it only needs a single 8-pin (or potentially even a 6-pin) power connector and draws 130W or less. The Arc A750 doesn't usually need to use its rated 225W of power, but it's often going to end up in the 200W ballpark, and idle power also tends to be nearly 30W higher. That adds up over time.

For someone willing to deal with some of the potential quirks, the A750 can be enticing. It's also a lot safer if you're not playing a lot of recent releases. People who like to play around with new tech might also find it of interest, simply because it's the first serious non-AMD, non-Nvidia GPU to warrant consideration. And if you can find the A750 on sale for $175, all the better.

People who just want their graphics card to work without a lot of fuss, and to have access to Nvidia's software ecosystem, will find the RTX 3050 a safer bet. It often won't be as fast, but it can catch up in games with DLSS support (that lack FSR/XeSS support), and you're less likely to pull your hair out in frustration. Which isn't to say that anyone should buy the RTX 3050 — we declared the RX 6600 the superior card in that comparison, and nothing has changed there.

What we want now from Intel is to see Battlemage address all these concerns and come out as a superior mainstream option. We'll see what happens with the next generation GPU architectures in the next six to twelve months.