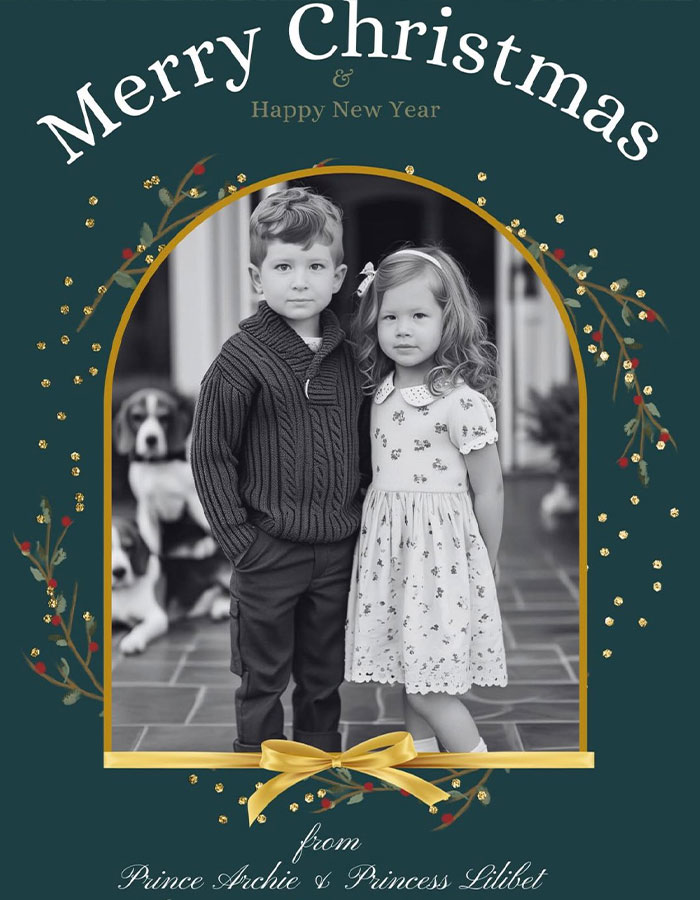

An AI-generated Christmas card featuring Prince Harry and Meghan Markle’s children, Prince Archie and Princess Lilibet, has gone viral after eagle-eyed netizens pointed out that it had been assembled with software from old photos of the children.

“Pathetic fakery,” one user wrote, noting that the AI program had failed to render details such as hands and feet properly and had placed the brother and sister against a heavily blurred background that made little sense on close inspection.

“Crap photoshop. The trouser seam isn’t joined together,” another user said. “That’s not Archie! That’s AI.”

Fans found the card even more insulting as it copied the borders, style, and overall layout of the official holiday card shared earlier in the month by Harry and Meghan.

AI-generated image of Prince Harry and Meghan Markle’s children gets roasted online after users rightfully call it out

“On behalf of the office of Prince Harry & Meghan, the Duke and Duchess of Sussex, Archewell Productions, and Archewell Foundation, we wish you a very happy holiday season and a joyful new year,” the official card read.

The post came with six photos highlighting the couple’s philanthropic work and travels, including their visit to Colombia in August and a picture of Harry meeting a hospital patient.

While the image appears to have been shared by a fan of the couple, it was not well received by users who felt the post was trying to mislead them into believing the photo was real.

Many netizens seem to have grown tired of AI-generated images and had probably encountered plenty of them before seeing the picture of Archie and Lilibet. According to researchers at Google, image-based misinformation skyrocketed in the spring of 2023.

“The sudden prominence of AI-generated content in fact checked misinformation claims suggests a rapidly changing landscape,” the researchers wrote in a paper released in May 2024.

The fake photo is part of an unprecedented surge in misinformation driven by AI-generated video and images on social media

The surge, the researchers argue, is partly due to how widely available AI photo and video generation software has become online, with dozens of sites offering free, easy ways to create doctored content with few restrictions.

“We go through waves of technological advancements that shock us in their capacity to manipulate and alter reality, and we are going through one now,” Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell Tech, told NBC.

“The question is, how quickly can we adapt? And then, what safeguards can we put in place to avoid their harm?”

The paper went on to report that about 80% of the fact-checked misinformation claims it studied involved media such as images and video, with video becoming increasingly prevalent.

“We were surprised to note that such cases comprise the majority of context manipulations,” the paper continued.

“These images are highly shareable on social media platforms, as they don’t require that the individual sharing them replicate the false context claim themselves: they’re embedded in the image.”

“Creepy.” Users were put off by the ease with which fake images can be created and made to pass as the real thing online