OpenAI hosted its Spring Update event live today and it lived up to the "magic" prediction, launching a new GPT-4o model for both the free and paid versions of ChatGPT, a natural and emotional-sounding voice assistant, and vision capabilities.

There are still many updates OpenAI hasn't revealed including the next generation GPT-5 model, which could power the paid version when it launches. We also haven't had an update on the release of the AI video model Sora or Voice Engine.

However, there was more than enough to get the AI-hungry audience excited during the live event, including the fully multimodal GPT-4o, which can take in and understand speech, images and video content and respond in speech or text.

ChatGPT-4o Cheat Sheet: What You Need to Know

- Big upgrade for free ChatGPT users: OpenAI is opening up many of the features previously reserved for paying customers. This includes access to image and document analysis, data analytics and custom GPT chatbots.

- Why ChatGPT GPT-4o is a big deal: Multimodal by design, GPT-4o was rebuilt and retrained from scratch by OpenAI to handle speech-to-speech as well as other forms of input and output without first converting them to text.

- How to get access to GPT-4o: You can already get access to GPT-4o if you are a Plus subscriber, with access gradually rolling out to all ChatGPT users on mobile, desktop and the web over the coming weeks.

- ChatGPT-4o vs Google Gemini Live: At its I/O event Google announced Project Astra and Gemini Live, a voice and video assistant designed to directly compete with the new ChatGPT Voice feature powered by GPT-4o, but how do they stack up?

- GPT-4 vs GPT-4o: While it might be a completely new type of AI model, GPT-4o didn't actually outperform GPT-4 on standard text-based tasks. Its strengths lie in live speech and video analysis, and it is also more conversational.

- 5 top new features in GPT-4o: GPT-4o comes with some impressive new features and functionality not previously possible. This includes conversational speech and live translation across multiple languages — but those features aren't live yet.

- Putting Siri on notice: OpenAI's GPT-4o, and specifically the new voice assistant it powers in ChatGPT, makes "Siri look downright primitive," declares Tom's Guide global editor in chief Mark Spoonauer.

OPENAI SPRING UPDATE EVENT LIVESTREAM

So what can we expect from today’s special event? On the eve of Google I/O, the confirmed details are very thin on the ground, but we have some leaks and rumors that point to two big things.

To go into more detail, check out Ryan Morrison’s write up on what is being heavily speculated right now. But to summarize the key points:

- A new kind of voice assistant: We’re predicting a chunky upgrade to OpenAI’s Whisper transcription model for a true end-to-end conversational AI that you can talk to.

- AI agent behavior: Expanding beyond conversation and image recognition-based AI, OpenAI could announce agent-like behavior that means it can perform actions across the web for you.

And just to clarify, OpenAI is not going to bring its search engine or GPT-5 to the party, as Altman himself confirmed in a post on X.

One key question that is being asked in anticipation of this event is a simple one: what does this mean for Apple?

It's being reported that the Cupertino crew is close to a deal with OpenAI, which will allow for "ChatGPT features in Apple's iOS 18." In terms of how this is executed, we're not sure. It could be anything from keeping ChatGPT as a separate third-party app and giving it more access to the iOS backend, to actually replacing Siri with it.

Based on rumors and leaks, we're expecting AI to be a huge part of WWDC — including the use of on-device and cloud-powered large language models (LLMs) to seriously improve the intelligence of your on-board assistant. On top of that, iOS 18 could see new AI-driven capabilities like being able to transcribe and summarize voice recordings.

Will we see a sneak preview of how OpenAI and Apple plan to work together? We're not sure it'll be that blatant, but we'll be reading between the lines for sure.

Don't expect ChatGPT-5 to be announced at this event. CEO Sam Altman has said so himself, but that doesn't mean there hasn't already been a ton of speculation around this new version — reportedly set to debut by the end of the year.

While concrete facts are very thin on the ground, we understand that GPT-5 has been in training since late last year. It's looking likely that the new model will be multimodal too, allowing it to take input from more than just text.

Alongside this, rumors are pointing towards GPT-5 shifting from a chatbot to an agent. This would make it an actual assistant to you, as it will be able to connect to different services and perform real-world actions.

Oh, and let's not forget how important generative AI has been for giving humanoid robots a brain. GPT-5 could include spatial awareness data as part of its training, to be even more cognizant of its location, and understand how humans interact with the world.

OpenAI is not going after Google search just yet. But leaks are pointing to an AI-fuelled search engine coming from the company soon.

Little is known, but we do know it's in the later stages of testing, with the possible plan being to pair ChatGPT with web-crawler-based search. Ryan Morrison provides some great insight into what OpenAI will need to do to beat Google at its own game, including making it available as part of the free plan.

New model codenames: gpt-4l, gpt-4l-auto, gpt-4-auto pic.twitter.com/NBUtBDVQXf May 9, 2024

What are gpt-4l and gpt-4-auto? Could these be what we see at the event today? Time will tell, but we've got some educated guesses as to what these could mean, based on the features already present and the direction OpenAI has taken.

- GPT-4l = ChatGPT-4 Lite. We wouldn't be surprised to see GPT-3.5 retired and replaced by a slimmer version of GPT-4.

- GPT-4-auto is an interesting one. There are no initials to decode in this codename, but it could still mean one of two things. Either it's a reference to agent AI, able to complete tasks on your behalf by visiting websites, or it could be a faster, smarter version of the current dynamic model switching, intelligently flipping between models based on the nature of your query.

not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me. monday 10am PT. https://t.co/nqftf6lRL1 May 10, 2024

OpenAI CEO Sam Altman made it clear there will not be a search engine launched this week. This was reiterated by the company's PR team after I pushed them on the topic. However, just because they’re not launching a Google competitor doesn’t mean search won’t appear.

One suggestion I've seen floating around X and other platforms is that this could be the end of the knowledge cutoff problem. This is where AI models only have information up to the end of their training, usually 3-6 months before launch.

OpenAI recently published a model rule book and spec. Among the suggested prompts are those offering up real information, including phone numbers and email addresses for politicians. This would benefit from live access obtained through web scraping, similar to the way Google works.

So while we might not see a search engine, OpenAI may integrate search-like technology into ChatGPT to offer live data and even sourcing for information shared by the chatbot.

ChatGPT phone home

OpenAI seems to be working on having phone calls inside of chatGPT. This is probably going to be a small part of the event announced on Monday. (1/n) pic.twitter.com/KT8Hb54DwA May 11, 2024

One of the weirder rumors is that OpenAI might soon allow you to make calls within ChatGPT, or at least offer some degree of real-time communication beyond just text.

This comes from leaked code files revealing various call notification strings. The company has also set up WebRTC servers linked to ChatGPT. WebRTC is an open-source project for providing real-time communication inside an application, such as voice and video conferencing.

This could be part of the agent behavior also rumored for ChatGPT. With this, you'd be able to give the AI an instruction and have it go off and perform the action on your behalf — giving it call access could allow it to phone for an appointment or handle incoming calls without you getting involved.

However, as OpenAI looks to further enhance the productivity potential of ChatGPT they could have Slack and Microsoft Teams in their sights — offering meeting and conferencing capabilities inside the chat interface, allowing greater collaboration on a shared chat thread.

Will ChatGPT be able to make music?

OpenAI has started its live stream an hour early, and in the background we can hear birds chirping, leaves rustling and a musical composition that bears the hallmarks of an AI-generated tune.

At its "Spring Update" the company is expected to announce something "magic," but very little is known about what we might actually see. Speculation suggests a voice assistant, which would require a new AI voice model from the ChatGPT maker.

Current leading AI voice platform ElevenLabs recently revealed a new music model, complete with backing tracks and vocals. Could OpenAI be heading in a similar direction? Could you ask ChatGPT to "make me a love song" and have it go away and produce it? Probably not yet, but it's fun to speculate.

Will there be a 'one more thing'?

One question I’m pondering as we’re minutes away from OpenAI’s first mainstream live event is whether we’ll see hints of future products alongside the new updates or even a Steve Jobs style "one more thing" at the end. Something unexpected.

Sora has probably been the most high-profile product announcement since ChatGPT itself but it remains restricted to a handful of selected users outside of OpenAI.

The company also has an ElevenLabs competitor, Voice Engine, which is capable of cloning a voice in seconds but is also held back by ongoing safety research.

Rumors also point to a 3D model and an improved image model, so the question is whether, in addition to the updates to GPT-4 and ChatGPT, we’ll get a look at Sora, Voice Engine and more.

My bet would be on us seeing a new Sora video, potentially the Shy Kids balloon head video posted on Friday to the OpenAI YouTube channel. We may even see Figure, the AI robotics company OpenAI has invested in, bring out one of the GPT-4-powered robots to talk to Altman.

See you soon: https://t.co/yM7TCDoQpF pic.twitter.com/jWDQSXJwQe May 13, 2024

It's show time! This is the first mainstream live event from OpenAI about new product updates. The question is: which products?

Dubbed a "spring update", the company says it will just be a demo of some ChatGPT and GPT-4 updates but company insiders have been hyping it up on X, with co-founder Greg Brockman describing it as a "launch".

The hype is real and there are nearly 40,000 people watching the live stream on YouTube — so hopefully we get something interesting.

OpenAI CTO Mira Murati opens the event with a discussion of making a product that is easier to use "wherever you are." This includes a desktop app with a refreshed UI.

OpenAI is also launching a new model called GPT-4o that brings GPT-4-level intelligence to all users, including those on the free version of ChatGPT. These features are rolling out over the next few weeks.

"An important part of our mission is being able to make our advanced AI tools available to everyone for free." That includes removing the need to sign up for ChatGPT.

During a demo they showed off a Mac desktop app that includes the Voice mode currently only available on mobile. This isn't a voice assistant as rumored.

Free users are getting a big upgrade

GPT-4o is shifting the paradigm of interaction between human and machine. "When we interact with one another there is a lot we take for granted," said CTO Mira Murati.

She said GPT-4o is able to reason across voice, text and vision. "This allows us to bring the GPT-4-class intelligence to our free users," something OpenAI has been working on for months.

More than 100 million people use ChatGPT regularly, and GPT-4o is significantly more efficient than previous versions of GPT-4. This means OpenAI can bring GPTs (custom chatbots) to the free version of ChatGPT.

You'll also be able to use data, code and vision tools, allowing you to analyze images without paying for an account.

Fewer benefits to paying for ChatGPT

With the free version of ChatGPT getting a major upgrade and gaining the big features previously exclusive to ChatGPT Plus, it raises questions over whether Plus is worth the $20 per month.

OpenAI CTO Mira Murati says the biggest benefit for paid users will be five times more requests per day to GPT-4o than on the free plan.

Talking to AI just got real!

One of the biggest upgrades with GPT-4o is live speech. The model is capable of working end-to-end with speech-to-speech. It listens to the audio rather than transcribing the speech first.

In the demo of this feature, an OpenAI staffer breathed heavily into the voice assistant and it was able to offer advice on improving his breathing technique.

It even warned him "you're not a vacuum cleaner". You don't have to wait for it to finish talking either, you can just interrupt in real time. It even picks up on emotion.

AI gets emotional

In another demo of the ChatGPT Voice upgrade, they demonstrated the ability to make the OpenAI voice sound not just natural but dramatic and emotional.

They started by asking it to create a story and had it attempt different voices, including a robotic voice, a singing voice and an intensely dramatic one.

The singing voice was impressive and could be used to provide vocals for songs as part of an AI music model in the future.

Will ChatGPT do homework for you?

One of the features of the new ChatGPT is native vision capabilities. This is essentially the ability for it to "see" through the camera on your phone.

In a demo the team showed ChatGPT an equation they'd just written on a piece of paper and asked the AI to help solve the problem. Rather than giving the answer, it offered advice and talked them through it step by step.

The AI is able to see the changes you make. In another example they opened the camera to show the letters "I heart ChatGPT" on a piece of paper. The AI sounded very emotional at the idea of being told "I love you".

There was a weird moment at the end where the camera was still active and ChatGPT saw the outfit the presenter was wearing and said "wow, I love the outfit you have on."

OpenAI created the perfect tutor

Using an incredibly natural voice, running on a Mac, ChatGPT was able to view code being written and analyze the code. It was also able to describe what it is seeing, including spotting any potential issues.

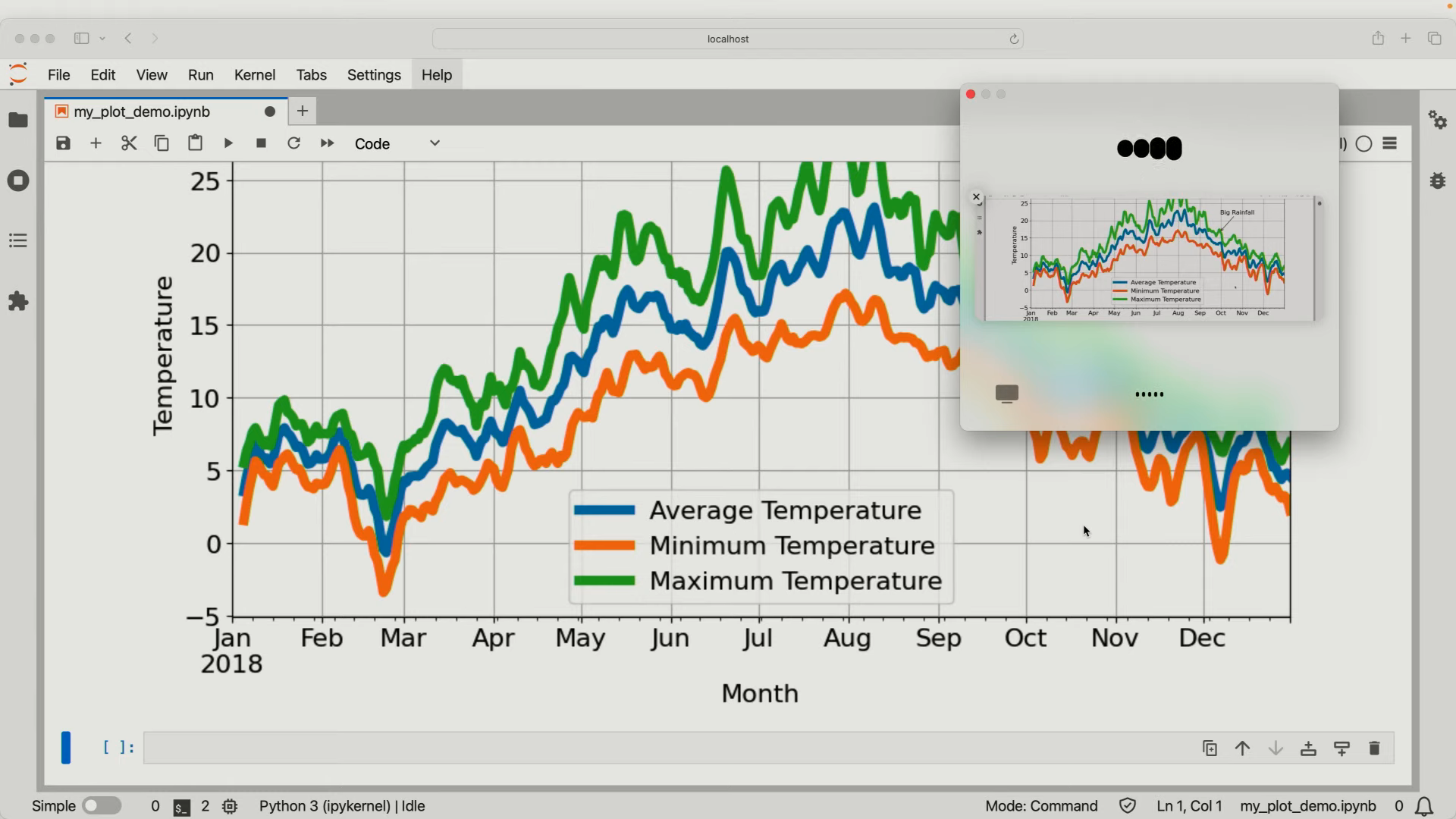

The vision capabilities of the ChatGPT Desktop app seem to include the ability to view the desktop. During the demo it was able to look at a graph and provide real feedback and information.

A real-time translation tool

During a demo the OpenAI team showed off ChatGPT Voice's ability to act as a live translation tool. It took words in Italian from Mira Murati and converted them into English, then took replies in English and translated them into Italian.

This is going to be a big deal for travellers.

Not launching today

OpenAI's ChatGPT is now capable of detecting emotion by looking at a face through the camera. During the demo they showed a smiling face and the AI asked "want to share the reason for your good vibes?"

I completely understand why Sam Altman described this as magical! The voice assistant is incredible and if it is even close to as good as the demo this will be a new way to interact with AI, replacing text.

It will be rolling out over the coming few weeks.

That's all folks. A short and sweet presentation that genuinely felt like a ChatGPT moment. The voice interface sounds natural, emotional and genuinely useful.

It is only a rumor, but if this is what is going to replace Siri on the iPhone thanks to a deal with OpenAI — I'm all for it.

Bringing the power of GPT-4 to the free version of ChatGPT, along with voice, GPTs and other core functionality is also a bold move on the eve of the Google I/O announcement and will likely cement OpenAI's dominance in this space.

Our global editor in chief, Mark Spoonauer, has weighed in on OpenAI's announcements and demos and he says that the new GPT-4o voice assistant puts Siri on notice. And he has a point.

This assistant is fast, is more conversational than anything Apple has done (yet), and has Google Lens-like vision. It can even do real-time translations. In other words, the new Siri had better be good. Because this just raised the bar.

One more day until #GoogleIO! We’re feeling 🤩. See you tomorrow for the latest news about AI, Search and more. pic.twitter.com/QiS1G8GBf9 May 13, 2024

It looks like Google is nervous. During OpenAI's event Google previewed a Gemini feature that leverages the camera to describe what's going on in the frame and to offer spoken feedback in real time, just like what OpenAI showed off today. We'll find out tomorrow at Google I/O 2024 how advanced this feature is.

GPT-4o as an accessibility device?

OpenAI has been releasing a series of product demo videos showing off the vision and voice capabilities of its impressive new GPT-4o model.

Among them are videos of the AI singing, playing games and helping someone "see" what is happening and describe what they are seeing.

A video filmed in London shows a man using GPT-4o in ChatGPT to get information on Buckingham Palace, ducks in a lake and someone getting into a taxi. These are all impressive accessibility features that could prove invaluable to someone with poor sight or even sight loss.

It also highlights something I've previously said — the best form factor for AI is smart glasses, with cameras at eye level and sound into your ears.

A new way to interact with data

ChatGPT with GPT-4o voice and video makes other voice assistants like Siri, Alexa and even Google's Gemini on Android look like out-of-date antiques.

Its launch felt like a defining moment in technology, equal to Steve Jobs revealing the iPhone, the rise and rule of Google in search or, going further back, Johannes Gutenberg's printing press. It will change how we engage with data.

Our AI Editor Ryan Morrison described it as the "most excited he's been for a new product ever," declaring it a paradigm shift in the human-computer interface.

"The small black dot you talk to and that talks back is as big of a paradigm shift in accessing information as the first printing press, the typewriter, the personal computer, the internet or even the smartphone," he wrote.

Hands on with GPT-4o

Some users already have access to the text features of GPT-4o in ChatGPT, including our AI Editor Ryan Morrison, who found it significantly faster than GPT-4 but not necessarily a significant improvement in reasoning.

He created five prompts that are designed to challenge an AI's reasoning abilities and used them on both GPT-4 and GPT-4o, comparing the results.

One of the tests asked each model to write a haiku comparing the fleeting nature of human life to the longevity of nature itself.

GPT-4:

Autumn leaves whisper,
Mountains outlive fleeting breath—
Silent stone endures.

Omni:

Ephemeral bloom,
Whispers fade in timeless breeze—
Dust upon the dawn.

GPT-4o as a live translation device?

OpenAI demonstrated a feature of GPT-4o that could be a game changer for the global travel industry — live voice translation.

Working in a similar way to human translators at global summits, ChatGPT acts as the middleman between two people speaking completely different languages.

During the demo, OpenAI CTO Mira Murati spoke in Italian, and ChatGPT repeated what she said in English and relayed it to her colleague Mark. He then spoke in English, and ChatGPT translated that into Italian and spoke the words, in a questionable accent, back to Murati.

It isn't perfect, and likely won't be available for several weeks and even then on a limited rollout, but its ability to allow interruptions and live voice-to-voice communication is a major step-up in this space. I just wish it was ready for my trip to Paris next week.

ChatGPT-4o vs Google Gemini Live

Google isn't standing still in the face of GPT-4o. It just unveiled its own Gemini Live AI assistant, which is multimodal with impressive voice and video capabilities. Check out our GPT-4o vs Gemini Live preview to see how these supercharged AI helpers stack up.