In a bid to help businesses of all sizes embrace the new AI-driven world, Nvidia has taken the wraps off a new approach for software and microservice access that it says could change everything.

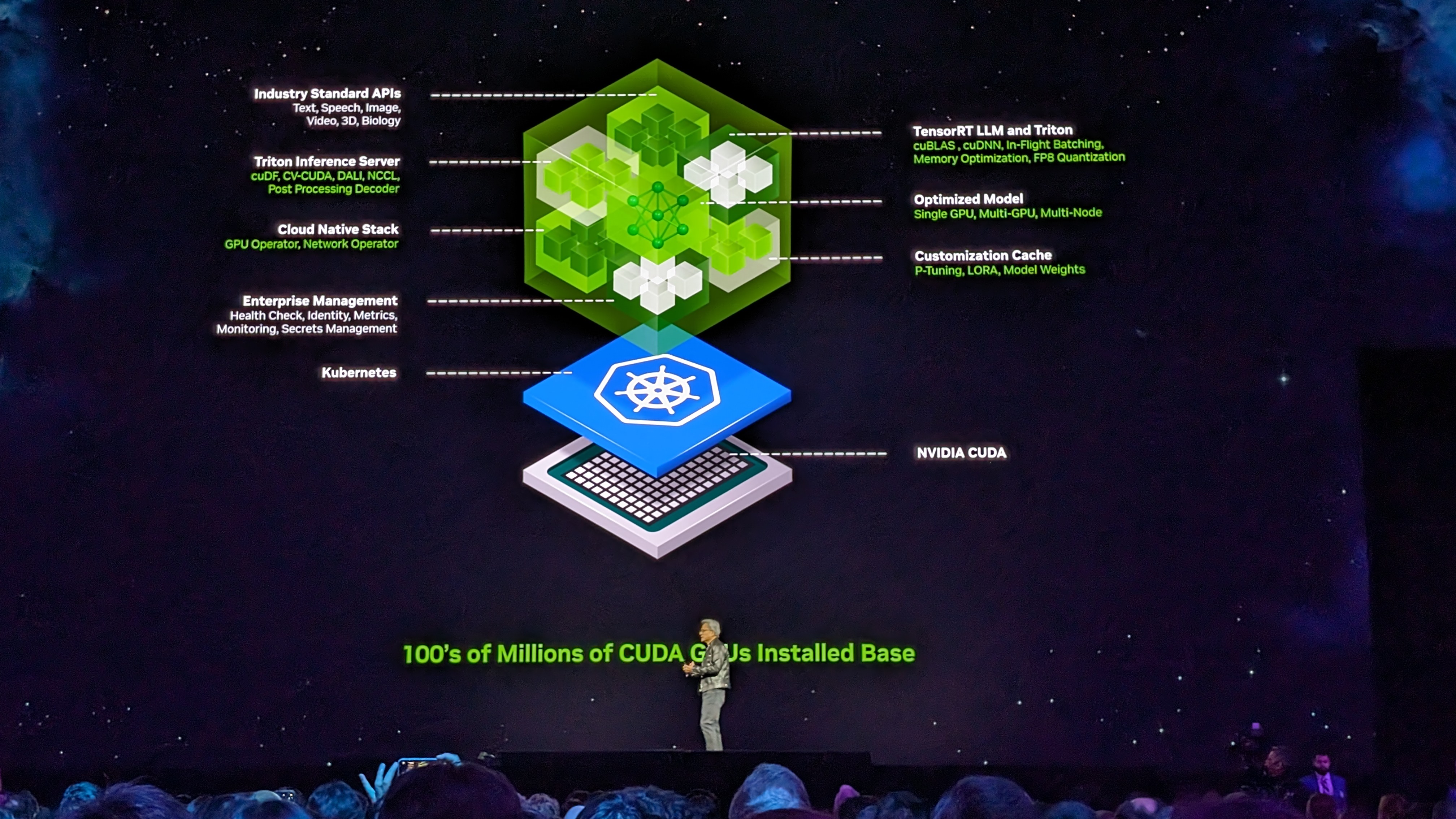

The company's Nvidia Inference Microservices (NIM) offerings aim to replace the myriad code and services currently needed to create or run software.

Instead, a NIM will bundle containerized models and their dependencies into a single package, which can then be distributed and deployed wherever needed.

NIM-ble

In his keynote speech at the recent Nvidia GTC 2024 event, company CEO Jensen Huang said that the new approach signals a sea change for businesses everywhere.

"It is unlikely that you'll write it from scratch or write a whole bunch of Python code or anything like that," Huang said. "It is very likely that you assemble a team of AI."

"This is how we're going to write software in the future."

Huang noted that AI tools and LLMs will likely be a common sight in NIM deployments as companies across the world look to embrace the latest technologies. He gave one example of how Nvidia itself is using one such NIM to create an internal chatbot designed to solve common problems encountered when building chips, helping improve knowledge and capabilities across the board.

Nvidia adds that NIMs are built for portability and control, and can be deployed not only in the cloud, but also in on-premises data centers and even on local workstations, including its RTX workstations and PCs as well as its DGX and DGX Cloud services.

Developers can access AI models through APIs that adhere to the industry standards for each domain, simplifying application development.

NIM will be available as part of Nvidia AI Enterprise, the company's new platform and hub for AI services, which offers a one-stop shop for businesses to understand and access new tools. NIM use cases span LLMs, VLMs, drug discovery, medical imaging and more.
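To give a sense of what calling a model through a standards-based API might look like for an LLM, here is a minimal Python sketch. Nvidia's announcement, as reported here, does not name the standards involved, so the sketch assumes an OpenAI-style chat-completions route, a common convention for LLM endpoints. The helper `build_chat_request`, the `localhost` address and the model name `example-llm` are all hypothetical and used for illustration only.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt):
    """Build (but do not send) a chat-completion request for an LLM endpoint.

    Assumption: the endpoint follows the OpenAI-style /v1/chat/completions
    convention. The article itself does not specify the route or schema.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical local deployment and model name, for illustration only.
request = build_chat_request(
    "http://localhost:8000",
    "example-llm",
    "Summarize this chip design bug report.",
)

# Sending the request would require a running service at that address:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Because the request is only built and not sent, the snippet runs without any service present. Pointing the same code at a cloud, data center or workstation deployment would, under these assumptions, mean changing only the base URL.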
