Nvidia is reportedly teaming up with memory manufacturers SK hynix, Micron and Samsung to create a new memory standard that's both small in size and big on performance, according to a report from SEDaily.

The new standard, named System On Chip Advanced Memory Module (SOCAMM), is in the works with all three large-scale memory makers. The report claims (via machine translation): 'Nvidia and memory companies are currently exchanging SOCAMM prototypes to conduct performance tests'. We could also be seeing the new standard sooner rather than later, as the report adds that 'mass production could be possible as early as later this year'.

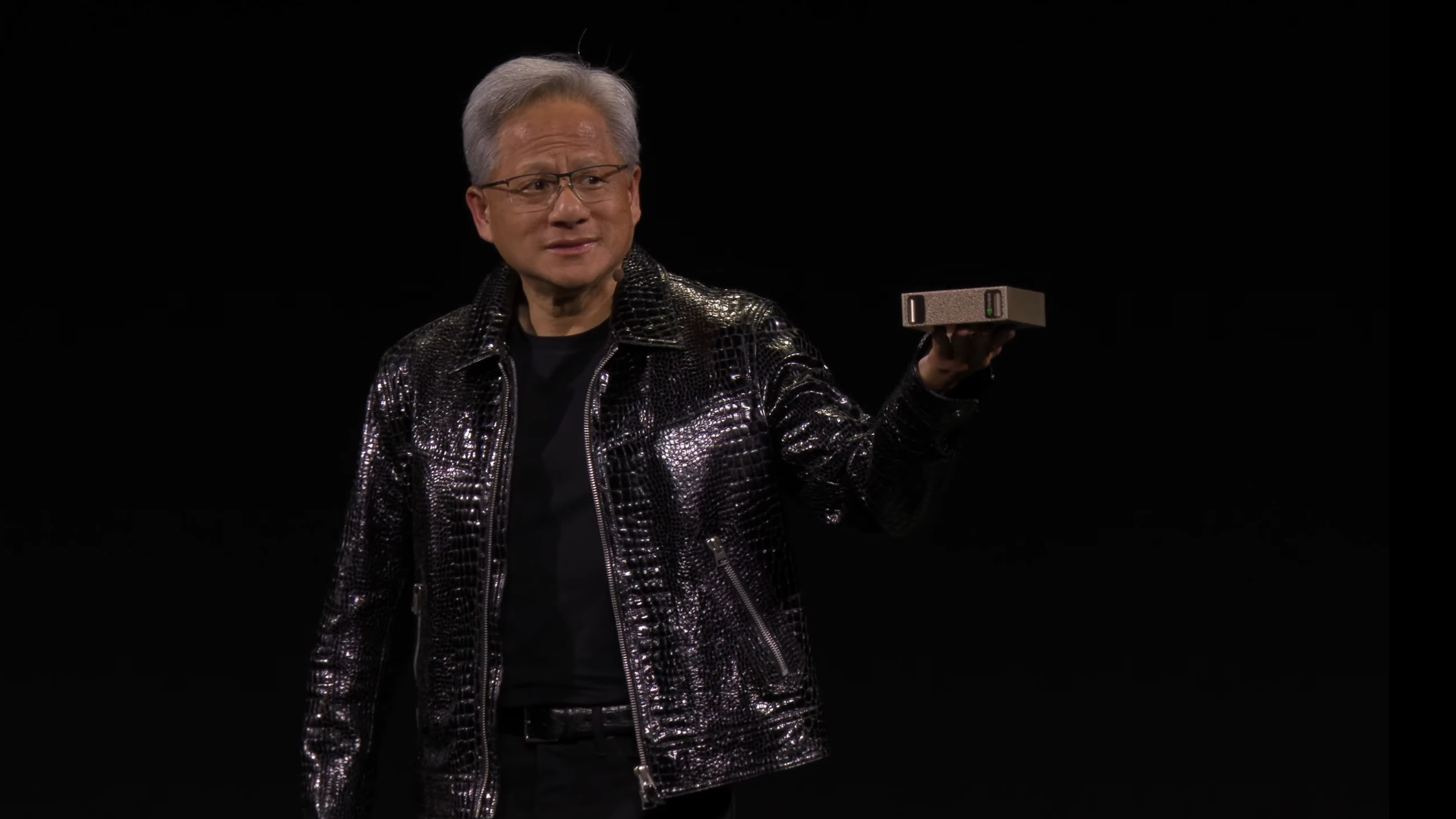

SOCAMM is thought to be destined for the next-generation successor to Nvidia's Project Digits AI computers, which were announced at CES 2025. It is expected to be a sizable upgrade over Low-Power Compression Attached Memory Modules (LPCAMM) and traditional DRAM, thanks to several factors.

The first is that SOCAMM is set to be more cost-effective than traditional DRAM in the SO-DIMM form factor. The report further details that SOCAMM may place LPDDR5X memory directly onto the substrate, offering further power efficiency.

Secondly, SOCAMM is reported to feature significantly more I/O ports than LPCAMM and traditional DRAM modules. SOCAMM has up to 694 I/O ports, outshining LPCAMM's 644 and traditional DRAM's 260.
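To put those figures in perspective, here is a minimal sketch that works out the relative increase in I/O count. It uses only the three numbers quoted in the report; the `percent_more` helper and the dictionary layout are illustrative assumptions, and a higher I/O count does not by itself translate into a proportional bandwidth gain.

```python
# I/O counts as quoted in the SEDaily report (per module).
io_counts = {"SOCAMM": 694, "LPCAMM": 644, "traditional DRAM": 260}


def percent_more(new: int, old: int) -> float:
    """Return how much larger `new` is than `old`, as a percentage."""
    return (new - old) / old * 100


for name in ("LPCAMM", "traditional DRAM"):
    gain = percent_more(io_counts["SOCAMM"], io_counts[name])
    print(f"SOCAMM vs {name}: {gain:.1f}% more I/O")
```

By these numbers, SOCAMM offers roughly 8% more I/O than LPCAMM and about 167% more than a traditional DRAM module.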

The new standard is also reported to use a 'detachable' module, which may offer easy upgradability further down the line. Its small hardware footprint (which SEDaily reports is around the size of an adult's middle finger) makes it smaller than traditional DRAM modules, which could potentially allow for more total capacity.

Finer details about SOCAMM are still firmly shrouded in mystery, as Nvidia appears to be developing the standard without any input from the Joint Electron Device Engineering Council (JEDEC).

This could end up being a big deal, as Nvidia is seemingly venturing out on its own to create and refine a new standard, with the key memory makers in tow. That said, SO-DIMM's days have been numbered for a while, as the CAMM Common Spec was set to supersede it.

The reason Nvidia is going its own way likely comes down to the company's focus on AI workloads. Running local AI models demands a large amount of DRAM, and Nvidia would be wise to push for more I/O, more capacity and more configurability.

Jensen Huang made it clear at CES 2025 that making AI mainstream is a big focus for the company, though that push has not gone without criticism. While Nvidia's initial Project Digits AI computers go on sale in May this year, the advent of SOCAMM may be a good reason for many to wait.