Nvidia is prepping three new GPUs for artificial intelligence (AI) and high-performance computing (HPC) applications that are tailored for the Chinese market and comply with U.S. export requirements, according to ChinaStarMarket.cn. Per the leaked information, the new units will be based on the Ada Lovelace and Hopper architectures.

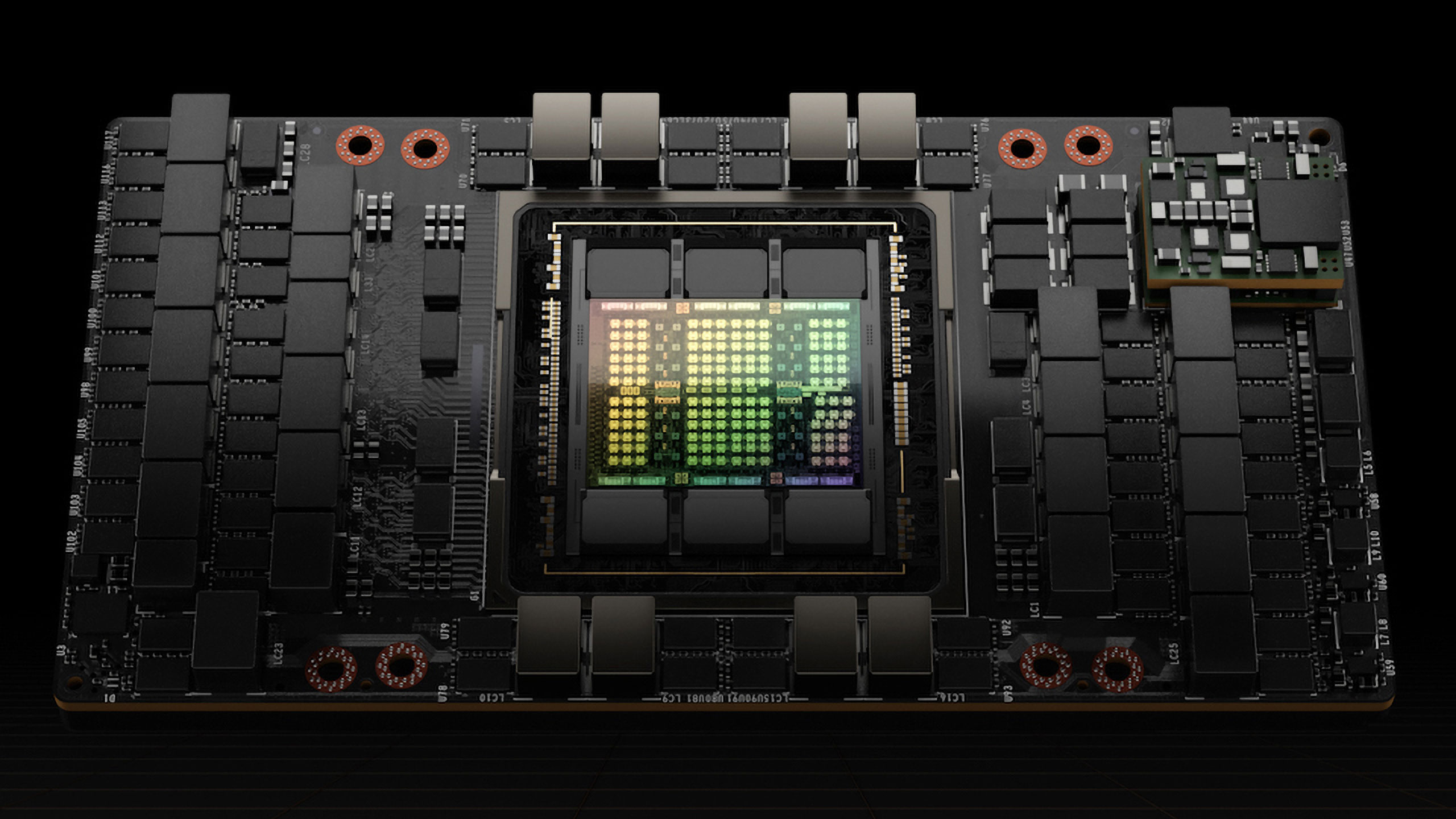

The AI and HPC products in question are the HGX H20, L20 PCIe, and L2 PCIe GPUs, and all of them are already heading to Chinese server makers, the report claims. Meanwhile, HKEPC has published a slide claiming that the new HGX H20, with 96 GB of HBM3 memory, is based on the Hopper architecture and uses either a severely cut-down version of the flagship H100 silicon or a new Hopper-based AI and HPC GPU design. Since this information is unofficial, take it with a pinch of salt.

When it comes to performance, the HGX H20 offers 1 FP64 TFLOPS for HPC (vs. 34 TFLOPS on H100) and 148 FP16/BF16 TFLOPS (vs. 1,979 TFLOPS on H100, a figure that includes sparsity; the dense rate is roughly 990 TFLOPS). It should be noted that Nvidia's flagship compute GPU is far too expensive to cut down and sell at a discount, but Nvidia may have no choice at this point. The company already has a lower-end A30 AI and HPC GPU with 24 GB of HBM2 that is based on the Ampere architecture and is cheaper than the A100. In fact, the A30 is faster than the HGX H20 in both FP64 and FP16/BF16 formats.

As for the L20 and L2 PCIe AI and HPC GPUs, these appear to be based on cut-down versions of Nvidia's AD102 and AD104 GPUs and will address the same markets as the L40 and L40S products.

Over the last couple of years, the U.S. has imposed stiff restrictions on exports of high-performance hardware to China. The U.S. controls on the Chinese supercomputer sector imposed in October 2022 are focused on preventing Chinese entities from building supercomputers with performance of over 100 FP64 PetaFLOPS within 41,600 cubic feet (1,178 cubic meters). To comply with U.S. export rules, Nvidia had to cut inter-GPU connectivity and GPU processing performance for its A800 and H800 GPUs.

The limitations that took effect in November 2023 require export licenses for all hardware that exceeds a certain total processing performance and/or performance density, regardless of whether the part can efficiently connect to other processors (using NVLink in Nvidia's case). As a result, Nvidia can no longer sell the A100, A800, H100, H800, L40, L40S, and GeForce RTX 4090 to Chinese entities without an export license from the U.S. government. To comply with the new rules, the HGX H20, L20 PCIe, and L2 PCIe GPUs for AI and HPC compute will come not only with crippled NVLink connectivity, but also with crippled performance.
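To give a rough sense of how a "total processing performance" (TPP) cap works, here is a minimal Python sketch. It assumes the definition commonly cited for these rules (TPP = 2 × MacTOPS × bit length of the operation, which works out to dense TOPS or TFLOPS multiplied by bit length) and an assumed license threshold of 4,800 TPP. The function names are hypothetical, performance density is ignored entirely, and the inputs are the dense FP16/BF16 rates discussed in this article, so treat the result as an illustration rather than a compliance check.

```python
# Hedged sketch of a TPP calculation.
# ASSUMPTIONS: TPP = 2 x MacTOPS x bit length, and a license threshold of 4,800 TPP.
# Performance density, the other criterion in the rules, is not modeled here.

TPP_THRESHOLD = 4800  # assumed threshold, not taken from the article


def tpp(dense_tflops: float, bit_length: int) -> float:
    """Return TPP from a dense (non-sparse) throughput figure in TFLOPS/TOPS."""
    mac_tops = dense_tflops / 2  # one multiply-accumulate counts as two operations
    return 2 * mac_tops * bit_length


def exceeds_tpp_threshold(dense_tflops: float, bit_length: int) -> bool:
    """Hypothetical helper: True if TPP alone reaches the assumed threshold."""
    return tpp(dense_tflops, bit_length) >= TPP_THRESHOLD


# Dense FP16/BF16 tensor throughput. H100's quoted 1,979 TFLOPS includes
# sparsity, so half of it is used as the dense rate.
gpus = {
    "HGX H20": 148.0,
    "H100 SXM": 1979.0 / 2,
}

for name, tflops in gpus.items():
    print(f"{name}: TPP = {tpp(tflops, 16):,.0f}, "
          f"over threshold: {exceeds_tpp_threshold(tflops, 16)}")
# HGX H20: TPP = 2,368, over threshold: False
# H100 SXM: TPP = 15,832, over threshold: True
```

Under these assumptions, the HGX H20 lands at roughly half the assumed threshold, while a full H100 exceeds it by more than three times.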

Interestingly, Nvidia recently launched its A800 and H800 AI and HPC GPUs in the U.S., formally targeting small-scale enterprise AI deployments and workstations. Since the company can no longer sell these units to companies in China, Saudi Arabia, the United Arab Emirates, and Vietnam, this is a good way to clear inventory that is unlikely to interest large cloud service providers in the U.S. and Europe.