Nvidia is preparing to release yet another China-focused GPU SKU, aimed at complying with U.S. export regulations. According to sources cited by Reuters, Nvidia's latest GPU will be an offshoot of the Blackwell B200, Nvidia's fastest AI GPU to date. The GPU is expected to launch next year, but specifications remain an open question.

Tentatively named the "B20", the new chip will be distributed throughout China by Inspur, one of Nvidia's major partners in the region. The B20 will reportedly make its official debut in Q2 of 2025.

Specs for the neutered Blackwell GPU are completely unknown at this time, though it seems inevitable that the B20 will be an entry-level part, a stark contrast to the B200 with its industry-leading AI performance. The U.S. has strict performance regulations for GPU exports to China, using a metric dubbed "Total Processing Power" (TPP), which takes into account both the TFLOPS and the precision of a GPU's compute capabilities. Specifically, multiply the TFLOPS (without sparsity) by the precision in bits to get TPP.

The current limit is set at 4,800 TPP. For reference, the Hopper H100 and H200 far exceed that mark, at roughly 16,000 TPP each. The metric doesn't directly account for memory bandwidth or capacity, which are the chief improvements that the H200 brings to the table. Even the RTX 4090 exceeds the limit with its 660.6 TFLOPS of FP8 compute, which works out to about 5,285 TPP. The most powerful Nvidia desktop GPU that stays within the 4,800 TPP limit is the RTX 4090D, which was built specifically to comply with export restrictions.

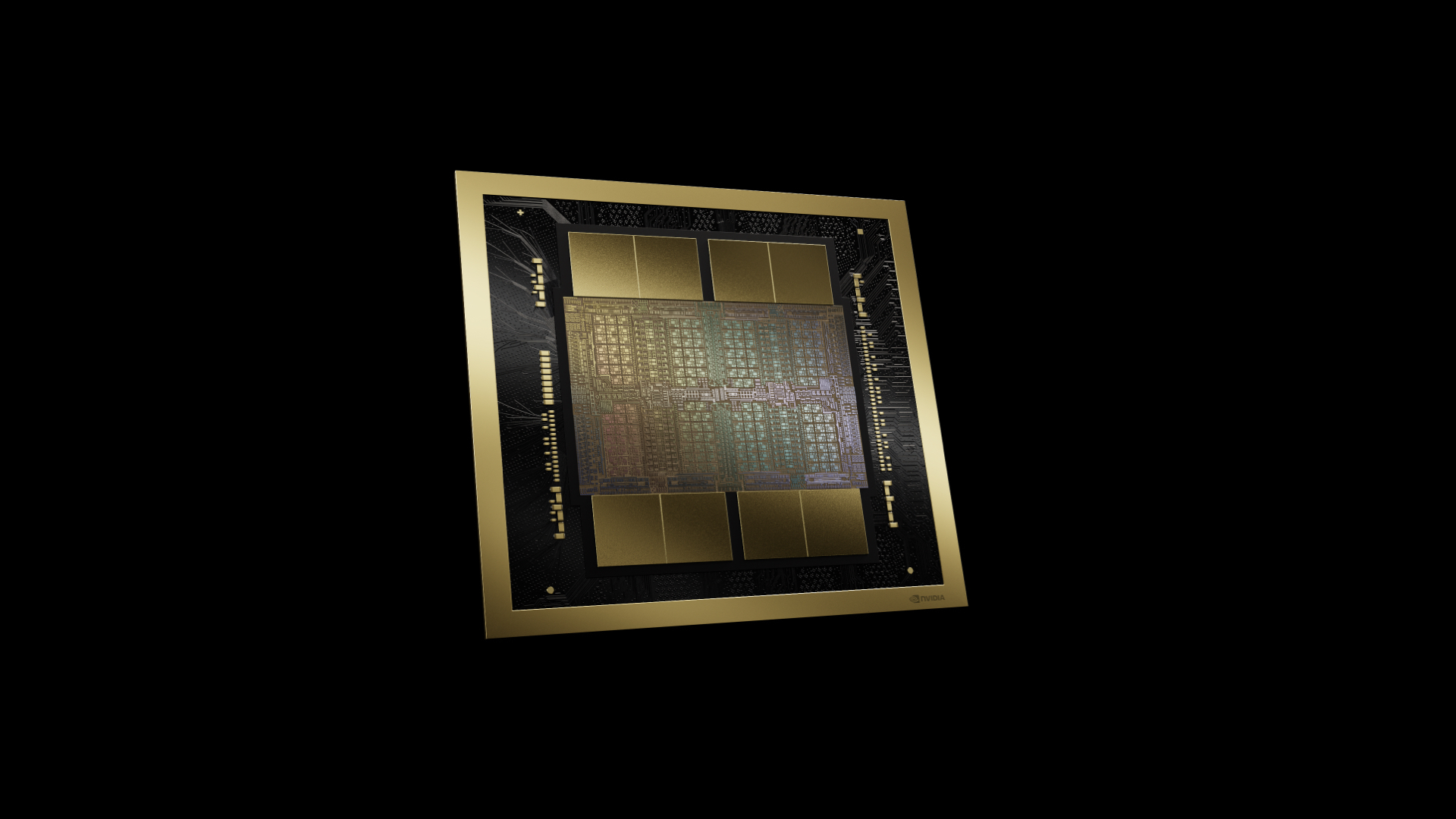

Blackwell raises the bar on compute performance, with a dual-die solution potentially delivering around 4,500 TFLOPS of FP8 compute. That works out to 36,000 TPP, or 7.5 times the allowed limit. Even the lesser B100 will deliver 3.5 PFLOPS of dense FP8 compute, or 28,000 TPP.
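To make the arithmetic concrete, here is a minimal Python sketch of the TPP calculation as the article describes it (dense TFLOPS multiplied by precision in bits). The function name `tpp` and the constant `TPP_LIMIT` are illustrative names, and the inputs are simply the FP8 figures quoted above, not official regulatory data.

```python
# Export threshold as quoted in the article.
TPP_LIMIT = 4_800


def tpp(dense_tflops: float, precision_bits: int) -> float:
    """Total Processing Power: dense TFLOPS (no sparsity) times bit width."""
    return dense_tflops * precision_bits


# (name, dense TFLOPS, precision in bits), using the figures quoted in the article.
gpus = [
    ("RTX 4090 (FP8)", 660.6, 8),
    ("B100 (FP8)", 3_500, 8),
    ("Dual-die Blackwell (FP8, estimate)", 4_500, 8),
]

for name, tflops, bits in gpus:
    score = tpp(tflops, bits)
    ratio = score / TPP_LIMIT
    print(f"{name}: TPP {score:,.0f} ({ratio:.2f}x the limit)")
```

Every part in this list lands above 4,800, which is why none of them can ship to China unmodified.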

The B20 faces additional restrictions, since the U.S. also enforces a "performance density" (PD) restriction targeted specifically at data center GPUs (consumer GPUs are exempt from this restriction). Take the TPP score and divide it by the die size in square millimeters to get the PD metric; anything above 6.0 gets restricted. Using that metric, every RTX 40-series GPU would be restricted for data center use, and Blackwell should improve over the density and performance of Ada Lovelace. So, Nvidia will need to severely curtail the B20's performance and/or use a proportionately larger die in order to comply with the regulations. (We still don't know the exact die size of the already announced B200.)

We expect the B20 to be a successor to Nvidia's A30 and H20 entry-level AI GPUs. The H20, for example, offers just 296 TFLOPS of FP8, compared to 1,979 TFLOPS on the H100/H200. That works out to a TPP of 2,368, a figure kept that low to hold PD below 6.0; the H20 has a PD rating of just 2.90. The A30 meanwhile has a TPP rating of 2,640 and a PD score of 3.20. So there's room for Nvidia to create a faster AI GPU for China... just not too much faster.
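The PD figures can be sanity-checked with a short Python sketch. It assumes PD is simply TPP divided by die area in mm², as described above, and backs out the die area implied by each quoted TPP and PD pair. The helper names are hypothetical, and because the quoted PD values are rounded, the implied areas are only approximate.

```python
# PD threshold as quoted in the article.
PD_LIMIT = 6.0


def performance_density(tpp: float, die_area_mm2: float) -> float:
    """Performance density: TPP divided by die area in square millimeters."""
    return tpp / die_area_mm2


def implied_die_area(tpp: float, pd: float) -> float:
    """Invert the PD formula to estimate die area (mm^2) from TPP and PD."""
    return tpp / pd


# (name, TPP, PD) exactly as quoted in the article.
parts = [("H20", 2_368, 2.90), ("A30", 2_640, 3.20)]

for name, score, pd in parts:
    area = implied_die_area(score, pd)
    headroom = PD_LIMIT - pd
    print(f"{name}: implied die area ~{area:.0f} mm^2, PD headroom {headroom:.2f}")
```

Both parts imply a die a little over 800 mm², which is how they sit so far below the 6.0 threshold.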

We can't help but think the B20 will be a difficult chip to sell. Both Ampere and Hopper are already beyond the performance limit, so Nvidia created China-specific SKUs to comply with regulations. The maximum TPP hasn't changed, so all of the advancements in the Blackwell architecture push it even further into non-compliance, which means rolling back performance to stay compliant. Best-case? Nvidia would be looking to create a GPU with perhaps 4,000–4,500 TPP and an 800 mm² die size, which would put PD somewhere between 5.0 and roughly 5.6.
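As a rough illustration of how the two limits combine, the sketch below computes the highest TPP a data center GPU could reach for a given die area. It assumes the 4,800 TPP and 6.0 PD thresholds quoted in this article and treats values exactly at a threshold as compliant, which is a simplification and may not match the regulatory text. The function names are hypothetical.

```python
def max_compliant_tpp(die_area_mm2: float,
                      tpp_limit: float = 4_800,
                      pd_limit: float = 6.0) -> float:
    """Highest TPP allowed under both the TPP cap and the PD cap."""
    return min(tpp_limit, pd_limit * die_area_mm2)


def is_compliant(tpp: float, die_area_mm2: float,
                 tpp_limit: float = 4_800,
                 pd_limit: float = 6.0) -> bool:
    """True if a part is at or under both thresholds (boundary handling assumed)."""
    return tpp <= tpp_limit and tpp / die_area_mm2 <= pd_limit


die_area = 800  # mm^2, the best-case die size suggested in the article
ceiling = max_compliant_tpp(die_area)
print(f"Max TPP at {die_area} mm^2: {ceiling:,.0f}")
print(f"Equivalent dense FP8 TFLOPS: {ceiling / 8:,.0f}")

for candidate in (4_000, 4_500):
    pd = candidate / die_area
    print(f"TPP {candidate:,}: PD {pd:.3f}, compliant={is_compliant(candidate, die_area)}")
```

At 800 mm² the two caps coincide at 4,800 TPP, so a smaller die would make PD the binding constraint, while a larger one would not buy any extra performance.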