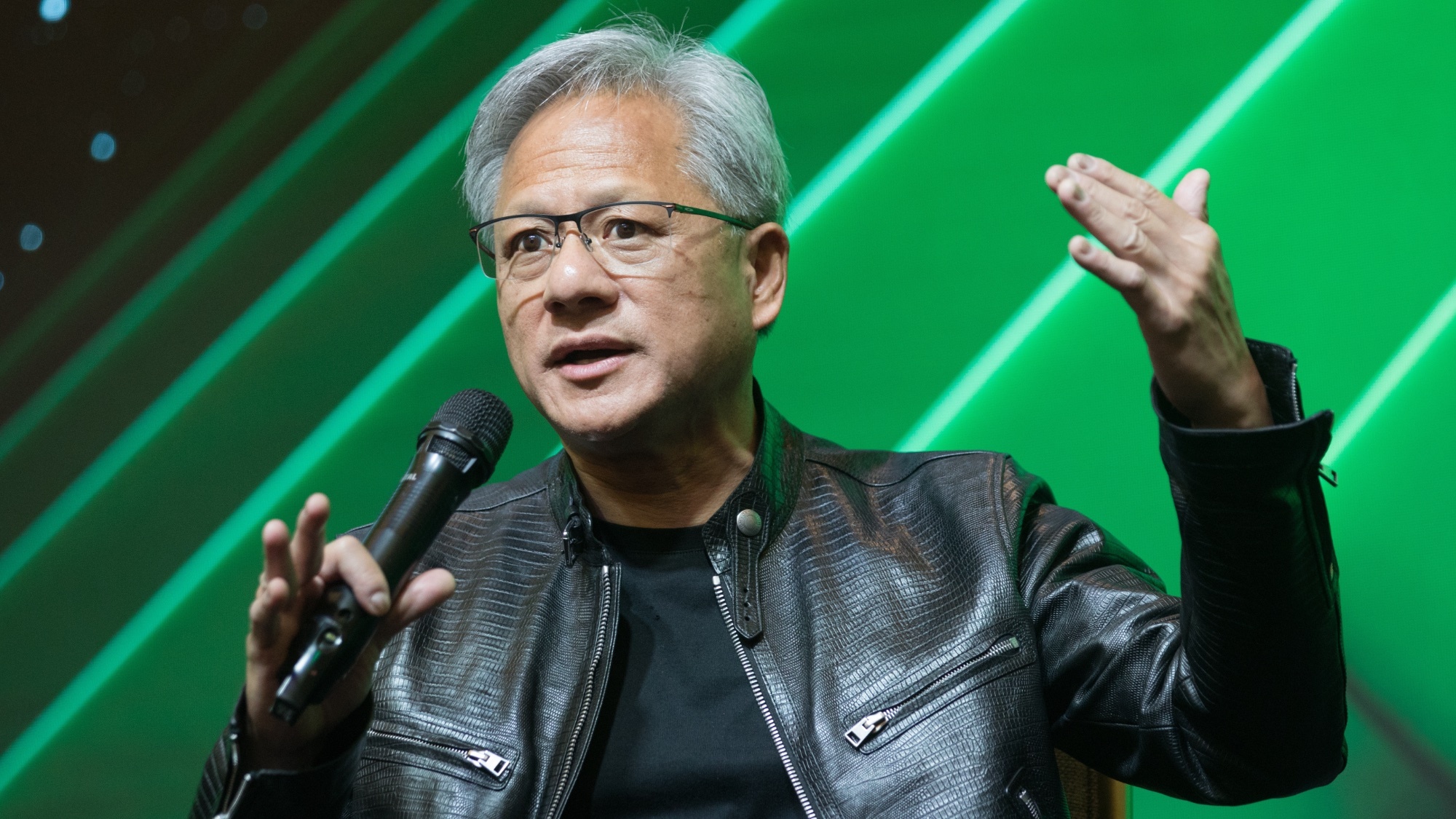

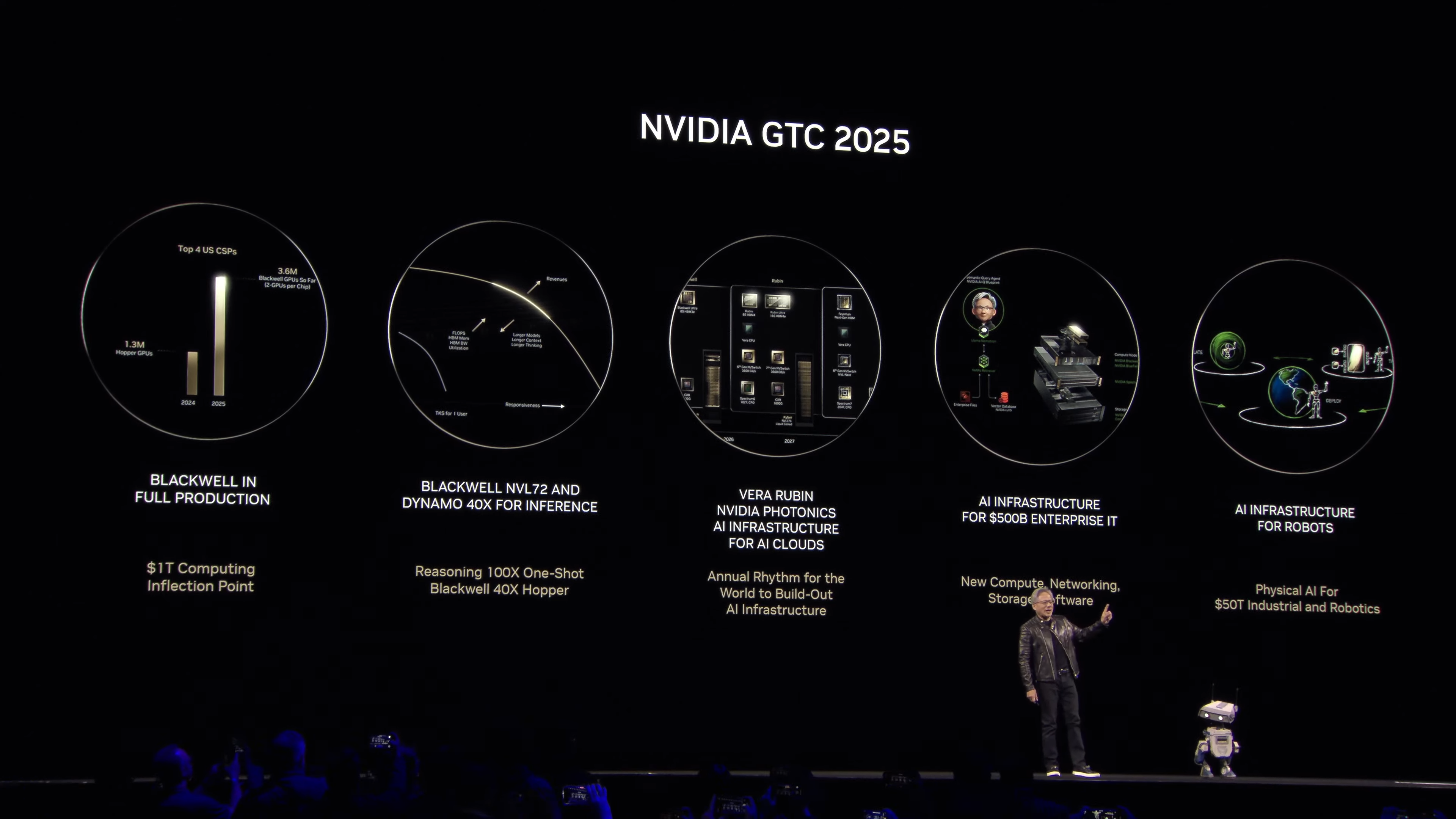

The Nvidia GTC 2025 event was jam-packed with news from CEO Jensen Huang, touching on everything from autonomous car development and a roadmap for AI data centers to robotic AI and (of course) Nvidia computers for business.

We even got a look at Blackwell Ultra AI chips for next-level computational AI, the new Nvidia Groot N1 AI model for robotics, and a fun little robot on stage called Blue, powered by Newton in collaboration with Google DeepMind and Disney.

For a full rundown of the keynote's announcements, check out all you need to know below.

Nvidia GTC 2025 biggest news

- Dell Pro Max announced: Dell announced its new AI PC portfolio, the Dell Pro Max, packing Nvidia's most powerful professional graphics. The lineup includes the Nvidia GB10 and GB300 Grace Blackwell Superchips, along with RTX Pro GPUs.

- Nvidia Isaac Groot N1: A new model for robotics, Groot N1 is set to be "the world’s first open Humanoid Robot foundation model."

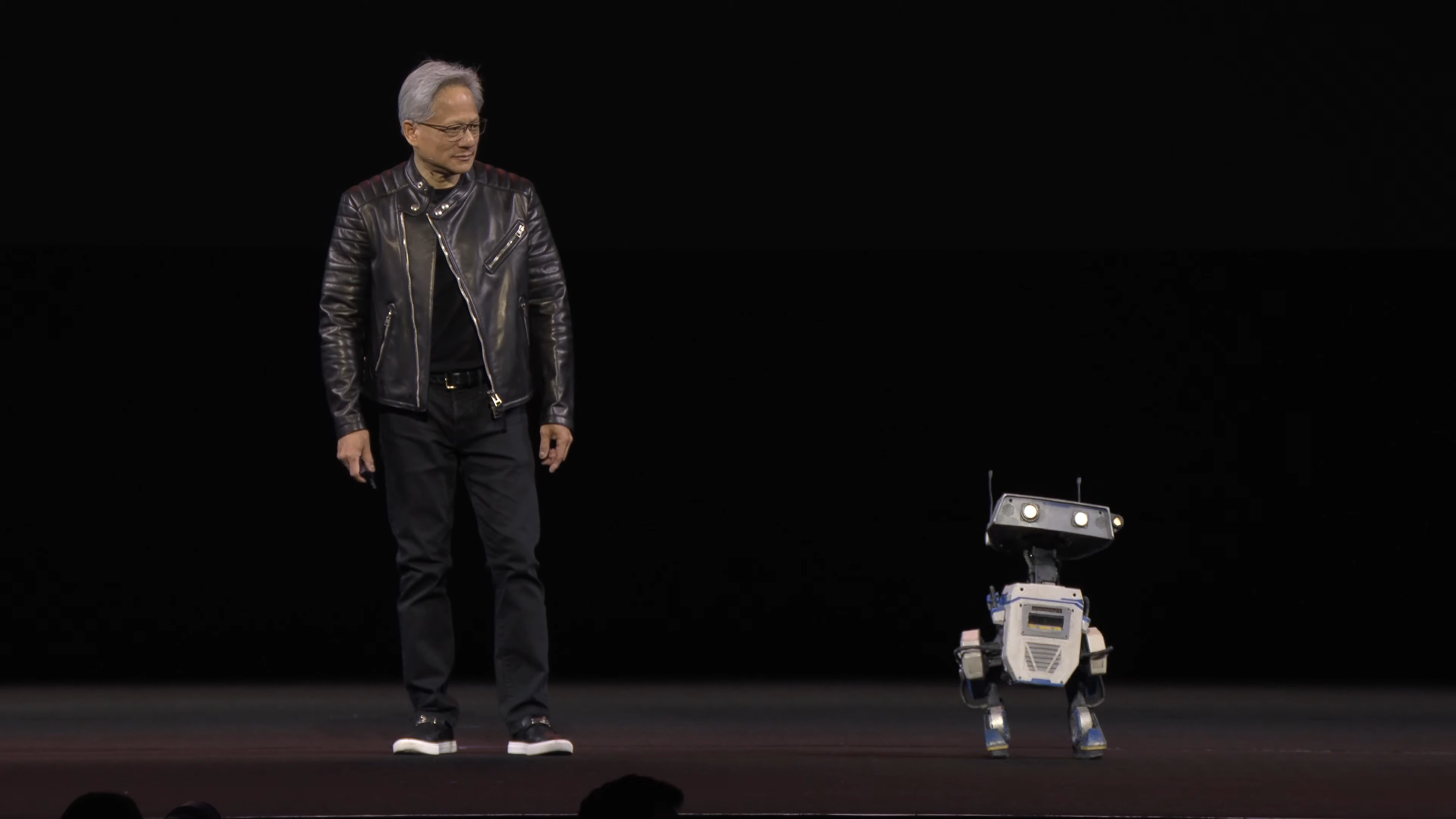

- "Blue," Robotics and AI collaboration: Nvidia introduced Newton, a new physics engine for simulating robotic movements, and showcased it with a little robot named Blue. It's a collaborative robotics project with Disney Research and Google DeepMind. An open-source version of Newton will arrive later in 2025.

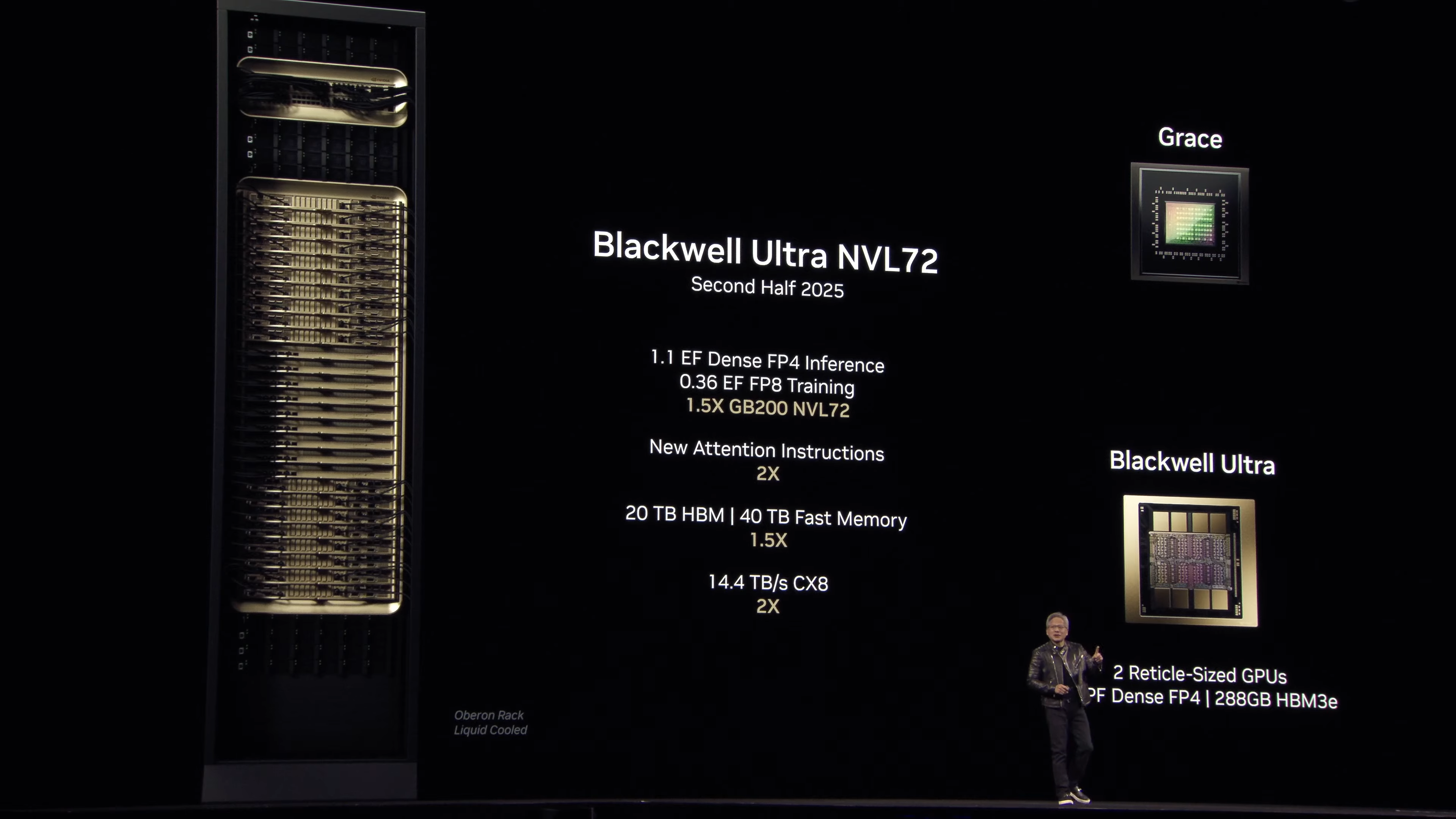

- Blackwell Ultra AI chips: New chips to be released later this year, aimed at meeting the growing demand for computational power in AI.

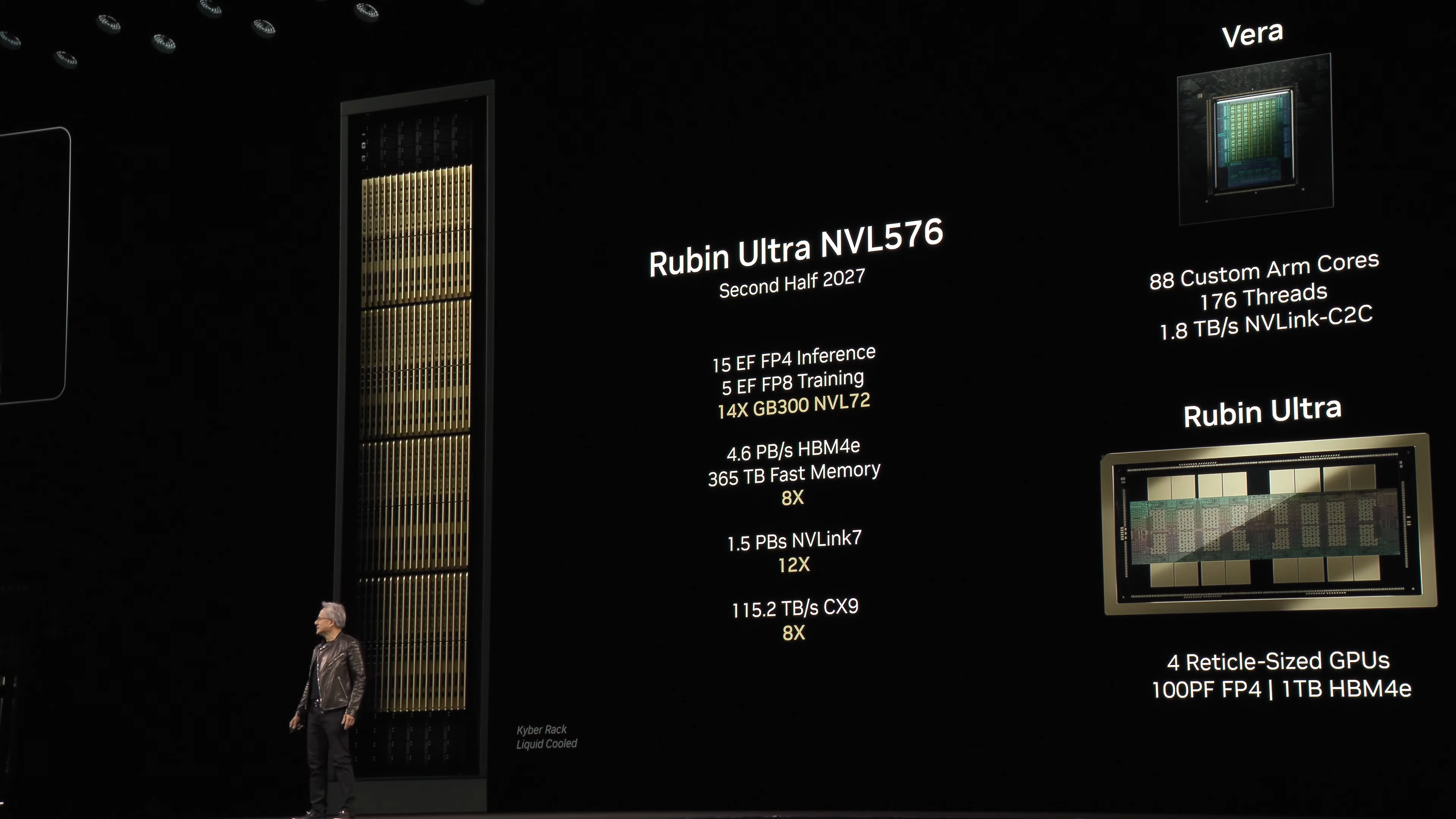

- Vera Rubin Architecture: The next step beyond Blackwell, Vera Rubin will increase bandwidth and perform even faster. It's set for release in late 2026, with Vera Rubin Ultra expected in 2027.

- Nvidia Dynamo: Nvidia's new open-source software system, designed to scale AI models efficiently and customize a data center to suit different workloads.

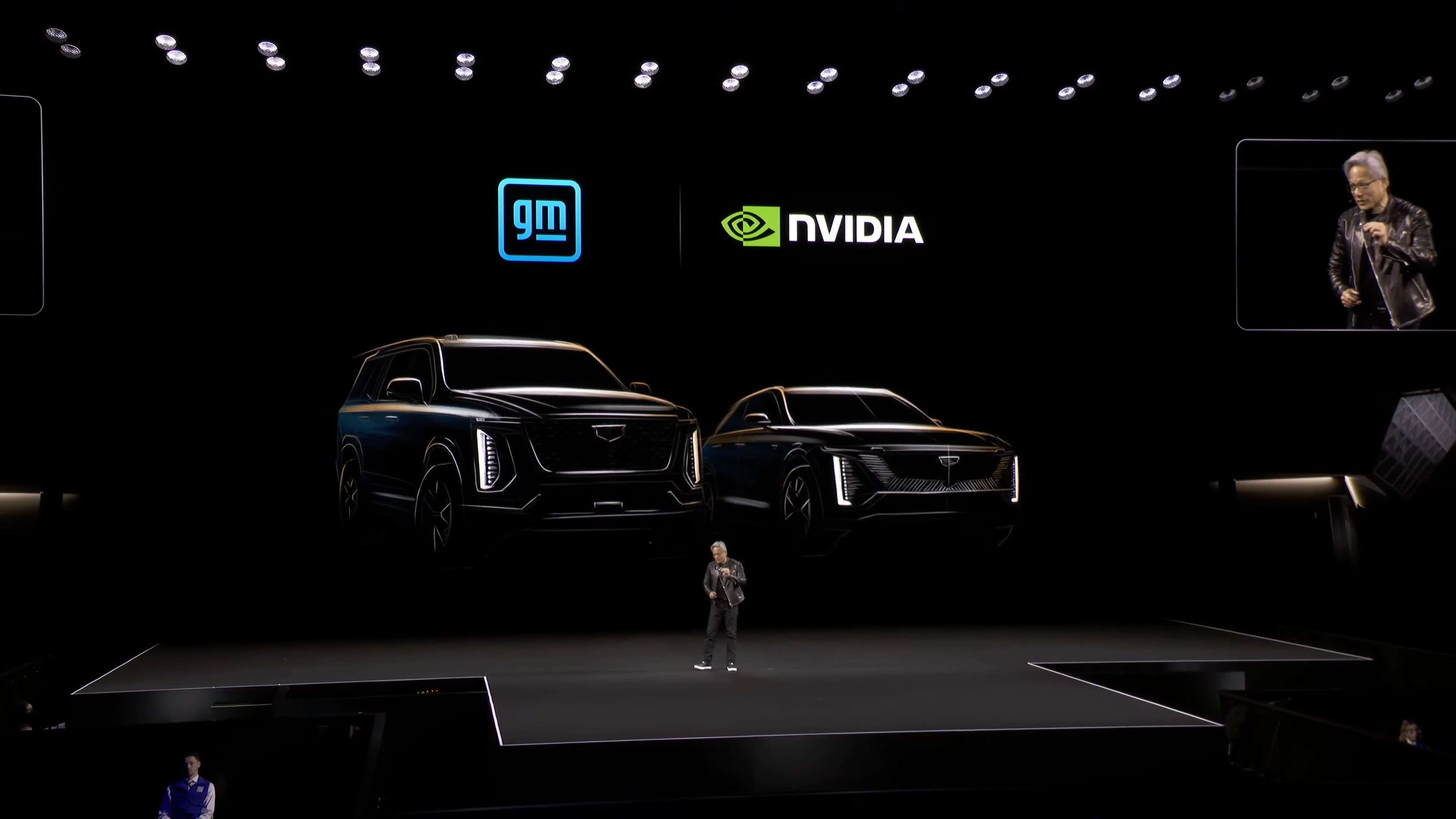

- Self-driving cars: Nvidia is teaming up with GM to develop custom AI systems for autonomous vehicles, putting "AI in the car."

How to watch Nvidia GTC 2025

In case you missed it, check out the full Nvidia GTC keynote from CEO Jensen Huang below.

Nvidia GTC 2025: Latest updates

Prepare for next-level AI

Welcome! Nvidia GTC 2025 is kicking off with Jensen Huang spilling the beans on what we can expect the future of AI to look like, and we know it will cover everything from robotics to accelerated computing.

With the launch of the RTX 50-series earlier this year, we got a look at how AI is changing next-gen gaming, with DLSS 4 and Multi Frame Generation boosting frame rates in supported PC games.

Nvidia is known to have cool tricks and surprises up its sleeve, so we're sure Huang will have plenty to talk about in the upcoming keynote.

How to watch Nvidia's event

Nvidia is preparing its big event for our viewing pleasure. The company will hit the stage on Tuesday, March 18, at 10 a.m. PT / 1 p.m. ET / 5 p.m. GMT. We don't have an end time, so be prepared to hang out for a while if you're going to watch.

And if watching the keynote doesn't sound like how you want to spend your afternoon, follow along with our live blog.

To watch, you can head to Nvidia's YouTube channel or aim your gaze slightly up, as we've embedded the stream right here.

It seriously is that easy. After all, Nvidia wants you to watch its event, so it's in the company's best interest to make watching as painless as possible.

The company's YouTube description sounds exciting: "Watch NVIDIA CEO Jensen Huang’s GTC keynote to catch all the announcements on AI advances that are shaping our future."

What Nvidia might announce

This is primarily a developer-focused event, so many of the announcements will target developers. However, it still affects you, as developers will use agentic AI (AI that can do work for you autonomously) and robotics to bring apps and products to the rest of us.

Even if you've never written a line of code, something at this keynote should excite you.

Not only could the AI and robotics announcements eventually find their way into consumer-facing products, but the company could have some surprises to go along with the presentation.

What we found out at CES 2025

As is typical for Nvidia, the company had a busy show at CES 2025. Like today, it had a big keynote. However, the company was more focused on hardware at CES, announcing the Blackwell-based GeForce RTX 50 Series GPUs. We tested the RTX 50 GPUs in depth and were impressed with what we saw.

The company also announced a partnership with Toyota, along with the NVIDIA Cosmos platform, AI foundation models for RTX PCs and, of course, NVIDIA Project DIGITS.

“GeForce enabled AI to reach the masses, and now AI is coming home to GeForce,” Huang said at CES.

We will learn more about Nvidia's AI push at 1 p.m. ET today when the keynote kicks off.

More on Project DIGITS

Project DIGITS might be the most exciting thing announced at CES 2025, and we can't wait to hear more about it at Nvidia GTC 2025.

This tiny computer, targeted at developers, packs a superchip for AI. Sure, you won't be buying one of these for your home anytime soon, but you will see the exciting stuff developers can do with it.

We can only hope that Nvidia shows off more of its capabilities today, though we'll have to wait until the keynote starts in just over an hour and a half to learn more.

What are AI Foundation Models?

We expect Nvidia to talk about AI foundation models (delivered as Nvidia NIM microservices) during its keynote today. If you're not sure what that is, you're not alone. Give our glossary of AI terms a quick read to learn about these and other AI phrases. The quick definition is "huge data stores which have been pre-trained with a neural network and vast amounts of data to cope with a wide variety of tasks."

AI foundation models are essentially the backbone of agentic AI, which we expect to be a fundamental part of Nvidia's presentation today. They're a big part of RTX AI PCs, so they're sure to figure into the company's plans going forward.

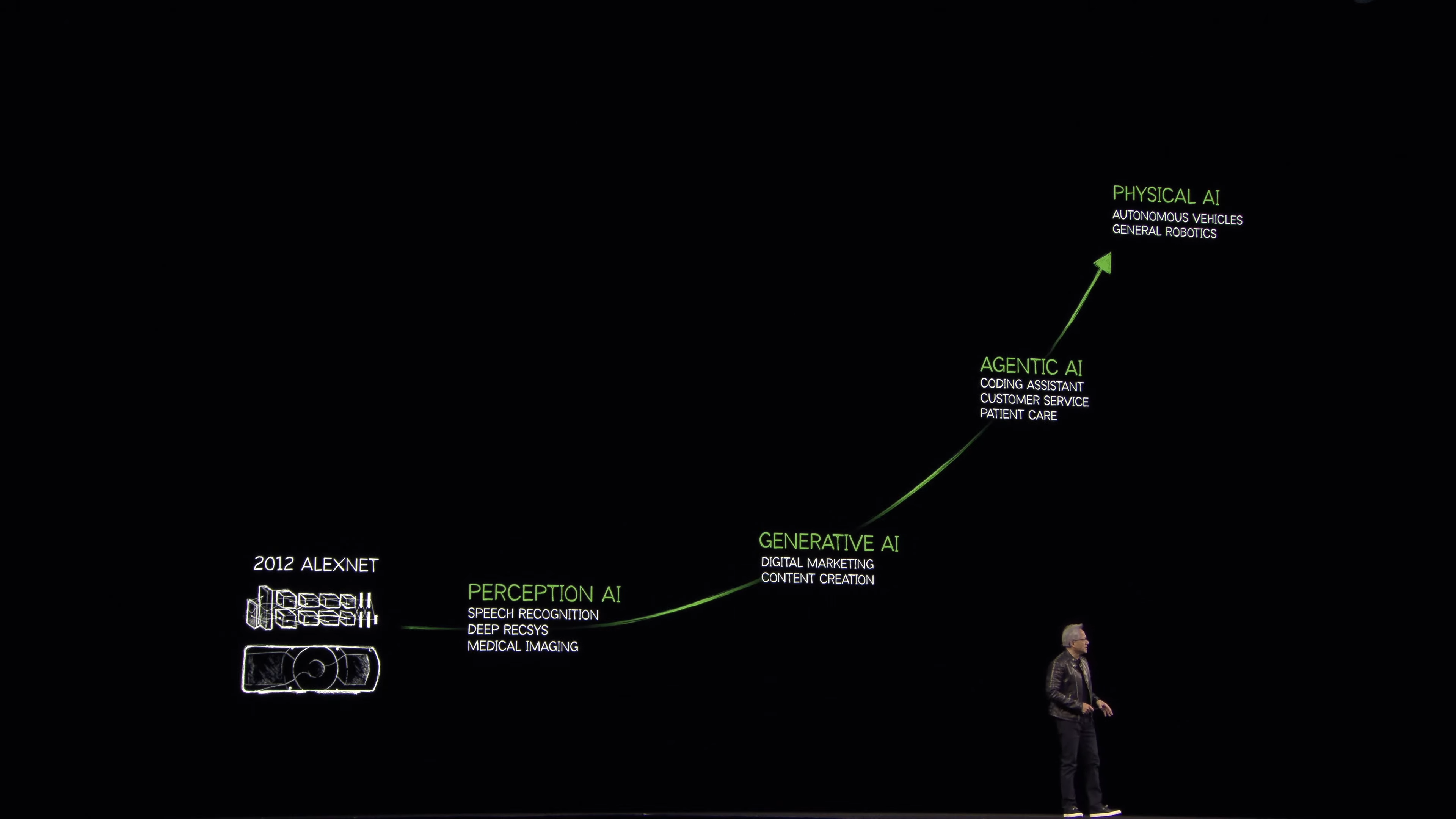

“AI is advancing at light speed, from perception AI to generative AI and now agentic AI,” said Jensen Huang, founder and CEO of NVIDIA, during CES 2025. “NIM microservices and AI Blueprints give PC developers and enthusiasts the building blocks to explore the magic of AI.”

If you want to know more, check out Nvidia's blog post from January.

Nvidia Cosmos

Let's get to the really exciting stuff: robots in AI!

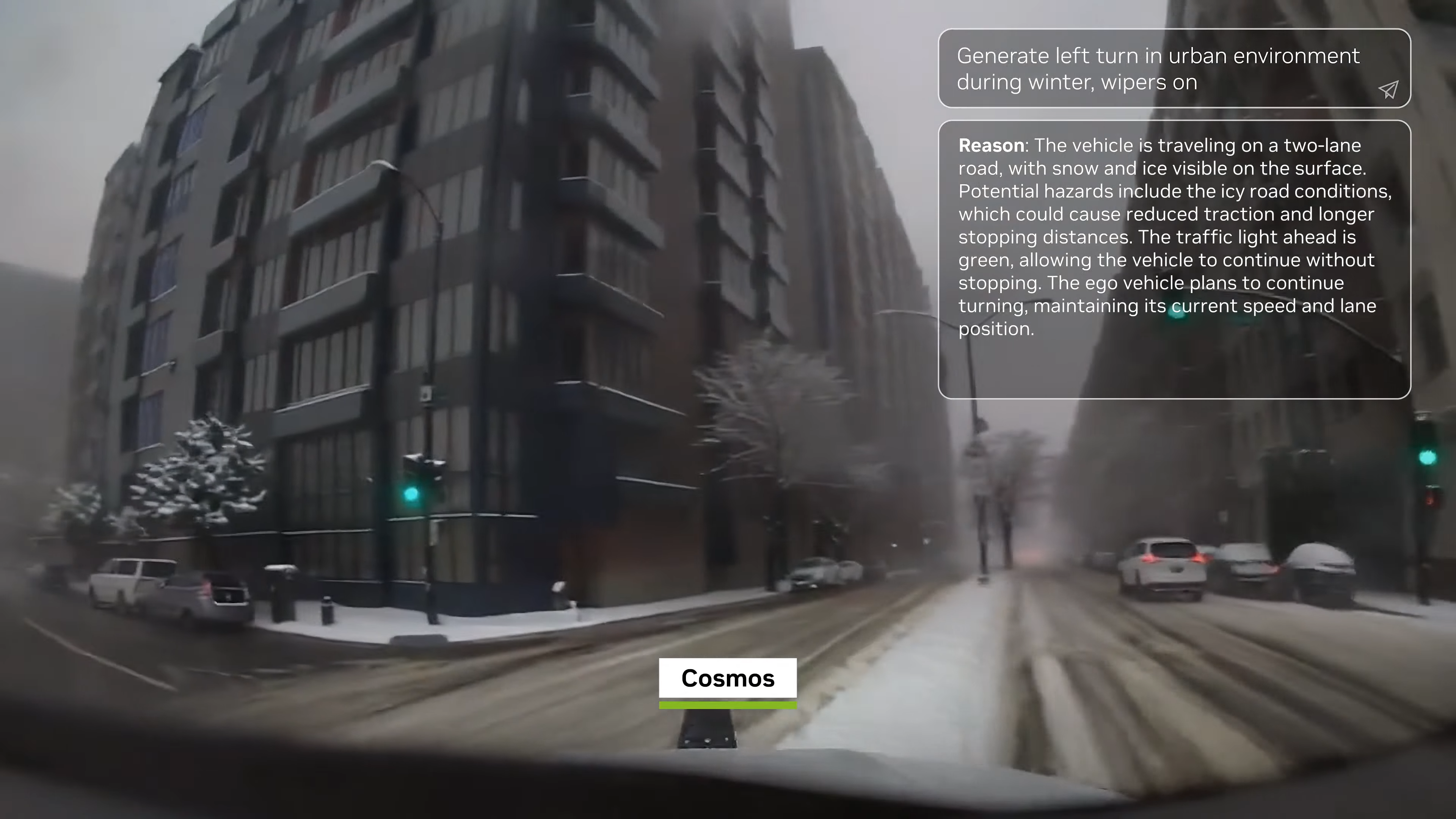

In a press release, the company describes Nvidia Cosmos as "A platform comprising state-of-the-art generative world foundation models, advanced tokenizers, guardrails and an accelerated video processing pipeline built to advance the development of physical AI systems such as autonomous vehicles (AVs) and robots."

The company acknowledges how expensive physical AI models are to develop, but it says, "Cosmos models will be available under an open model license to accelerate the work of the robotics and AV community."

That means we could see many more developers building AI-powered robots. Jensen Huang, founder and CEO of NVIDIA, said, "The ChatGPT moment for robotics is coming."

Will it come today during Nvidia's keynote? Quite frankly, that's up to the company to reveal something that truly entices the masses (and hopefully, doesn't terrify everyone). Of all the things Nvidia is showing off today, Cosmos could be the most game-changing, but we'll have to wait and see.

15 minutes to go...

While we're counting down to seeing how Nvidia's Blackwell GPU technology can power the future of robotics and agentic AI, let's talk about the gaming side of things.

I got to check out the Half-Life 2 RTX demo, which features all of the new DLSS 4 and neural rendering techniques on top of a loving remaster of the original game. And when heading to Ravenholm, I felt that same level of terror I did playing the original when I was a kid!

Read more about my hands-on and in-depth look at the tech running it!

One of the Nvidia robots we could see today

Nvidia Isaac GROOT is the platform announced back at CES 2025, which will drive forward the humanoid robot developer program. And trust me, it looks insanely cool!

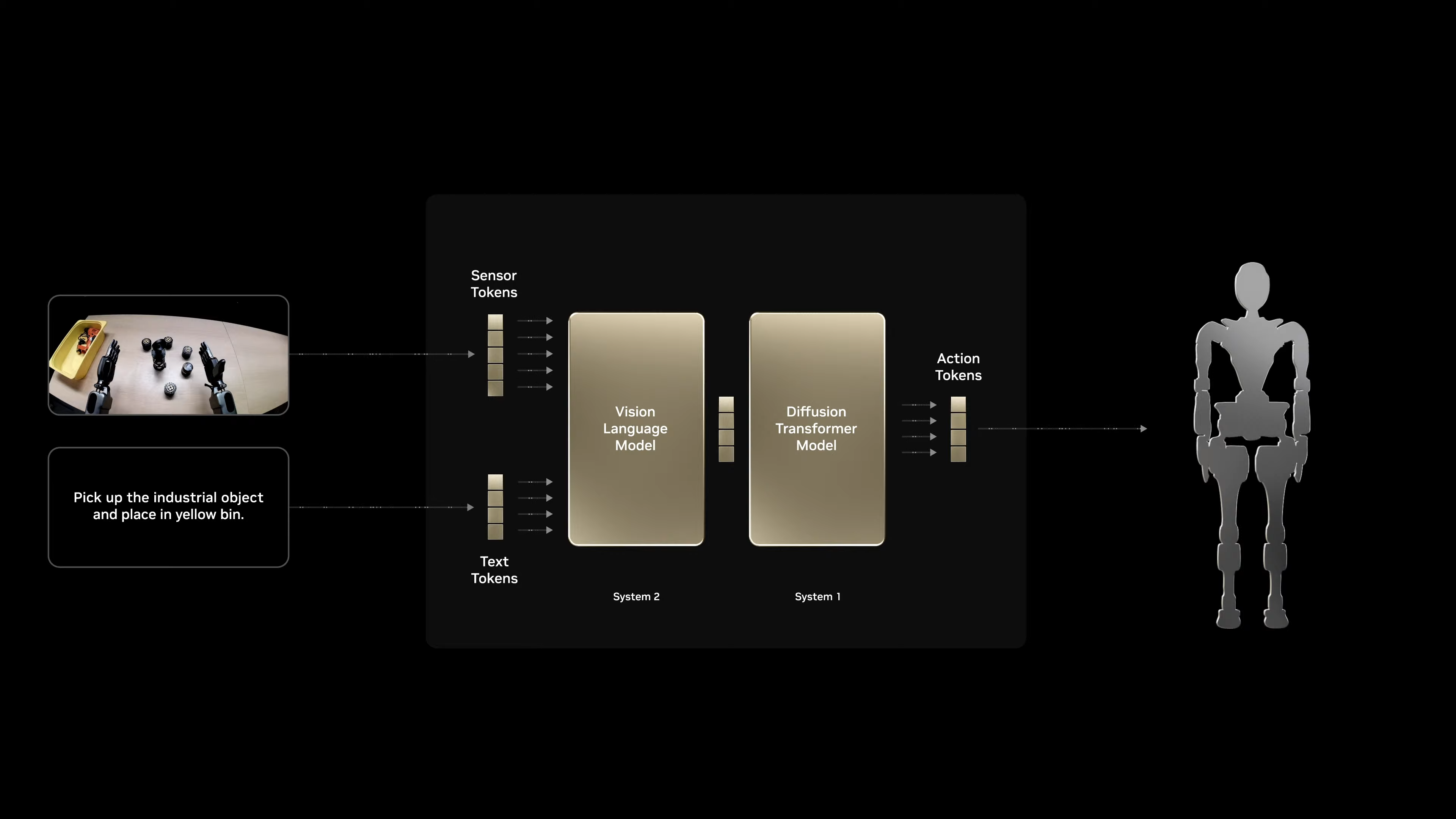

It works by essentially programming in a set of rules (or workflows) for it to stick to. From there, it can learn to adapt to situations, be teleoperated by a human, work with vision language models and LLMs to talk to you, and even be dexterous enough to solve a Rubik's Cube.

If there's something I'm expecting to see, it's definitely this.

The stream has started! Of course, we're counting down to the main event, so we're just seeing some pretty graphics... I wonder if this is running with RTX neural rendering?

Also, given what happened when DeepSeek launched (spoiler alert: the US stock market did not like that), this has quickly become Jensen's big chance to show Nvidia is still at the forefront of this AI gold rush, offering the shovels and sieves (Blackwell GPUs) to the companies trying to win.

And the stock price shows investors are a little nervous about what this will entail.

Two minutes left!

By the way, if Project DIGITS isn't a sneak peek at what kind of CPU Nvidia could make on Arm, I'll eat my hat.

Of course, this is a supercomputer, but let them cook.

We're a little late starting...

Must be some hiccup or final touches to make things perfect. Maybe it's to find just the right leather jacket for Jensen!

Let the show begin!

We're getting quite the cinematic intro showing how AI tokens can help with healthcare, transportation and more — all from this Her-esque AI avatar.

AI PROSTHETICS!? Count me in.

Jensen's on stage, and it's time to learn what Nvidia has in store. Strap in — the leather jacket fit is good and Huang has no teleprompter!

A lot of sponsors have joined the GTC party across retail, healthcare and transportation! It'll be interesting to see how Nvidia's AI tech can impact these areas.

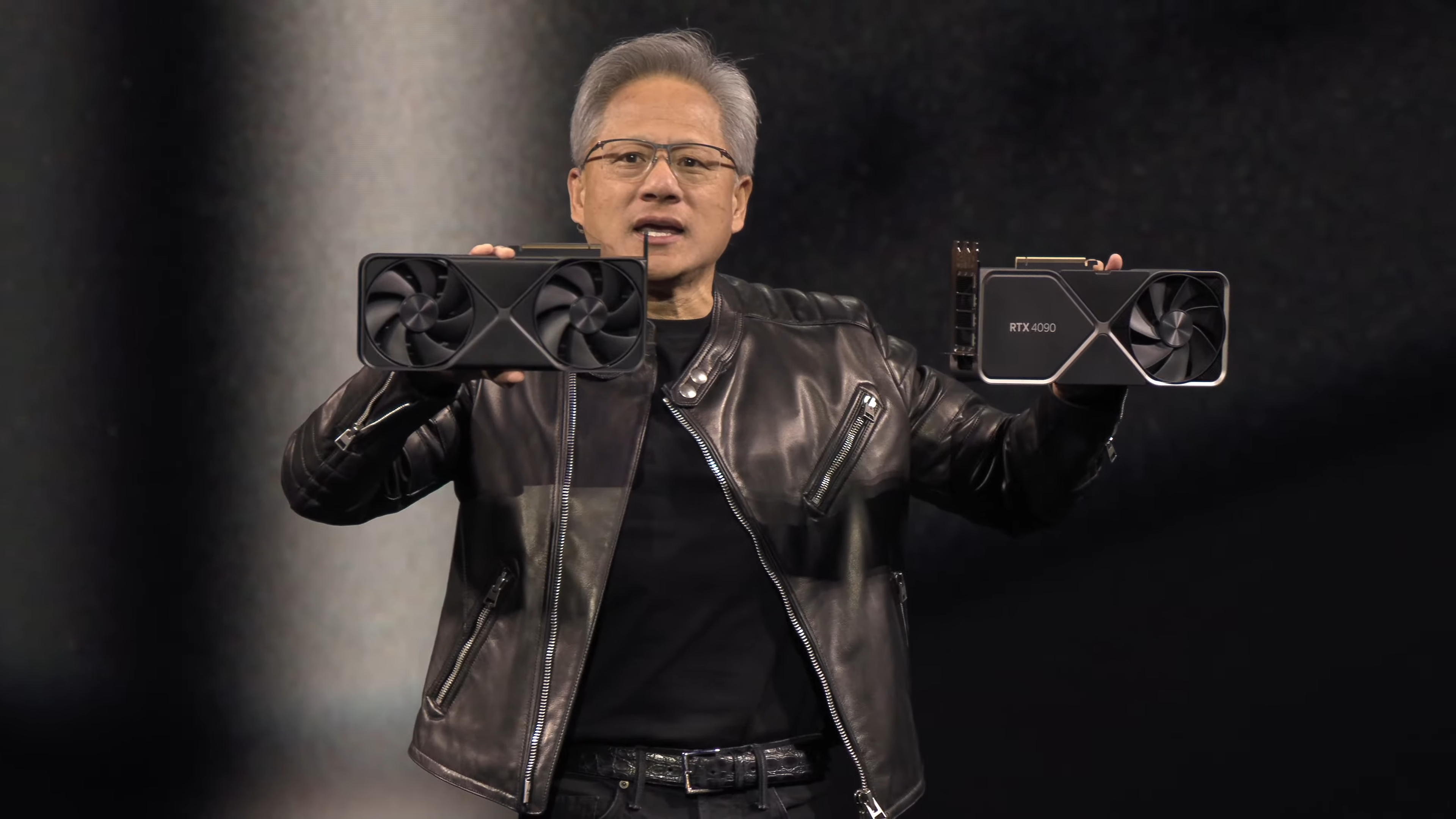

Jensen just took a second to hold what's probably the one remaining RTX 5090 that hasn't been bought or scalped yet. Fingers crossed he puts it on the stock list for some lucky gamer to buy.

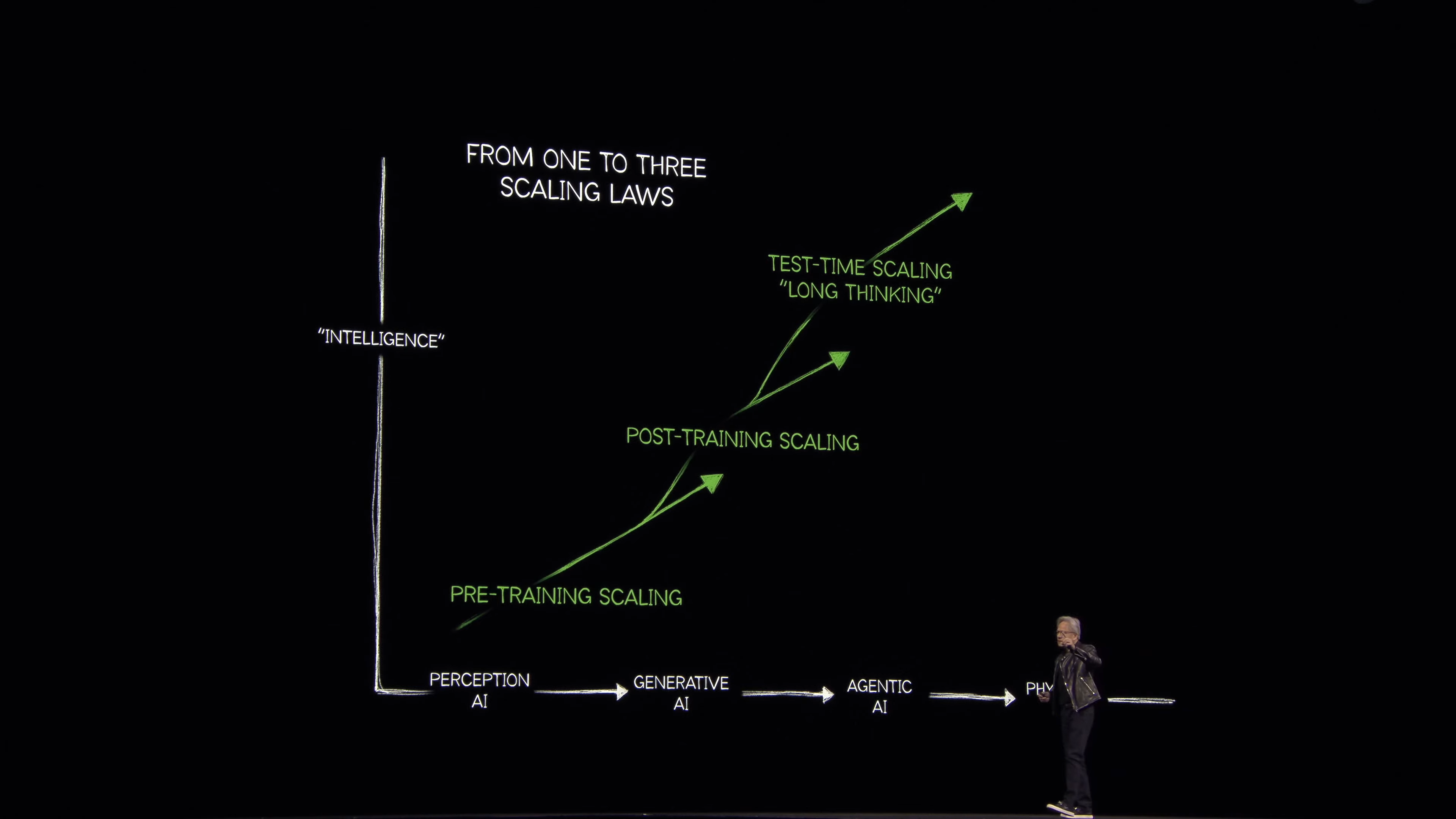

This graph looks familiar... We saw something similar at Nvidia's CES 2025 keynote. Currently, we're heading into the agentic AI phase, and Jensen is sure to tell us a bit about physical AI too.

Nvidia wants...to grow San Jose?

At the moment, Jensen's talking about the ever-growing crowds at GTC, which led him to say that to fit more people, Nvidia will have to "grow San Jose." He says the company is working on it. It's obviously a joke, but hopefully there isn't some robot demolition force making room for a bigger stadium!

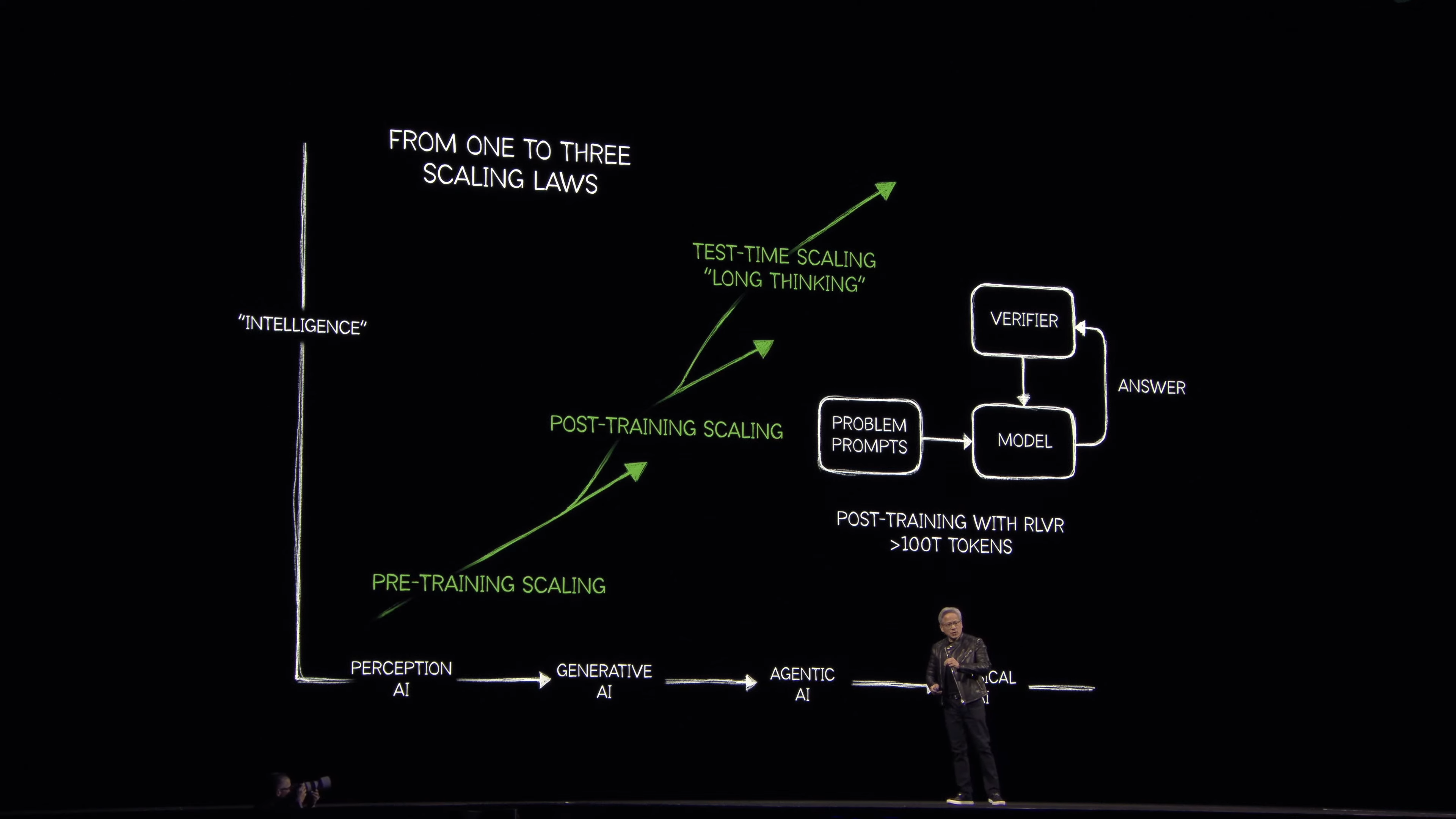

Here is the key question that Nvidia is looking to answer: how does AI scale and learn at "super human" rates?

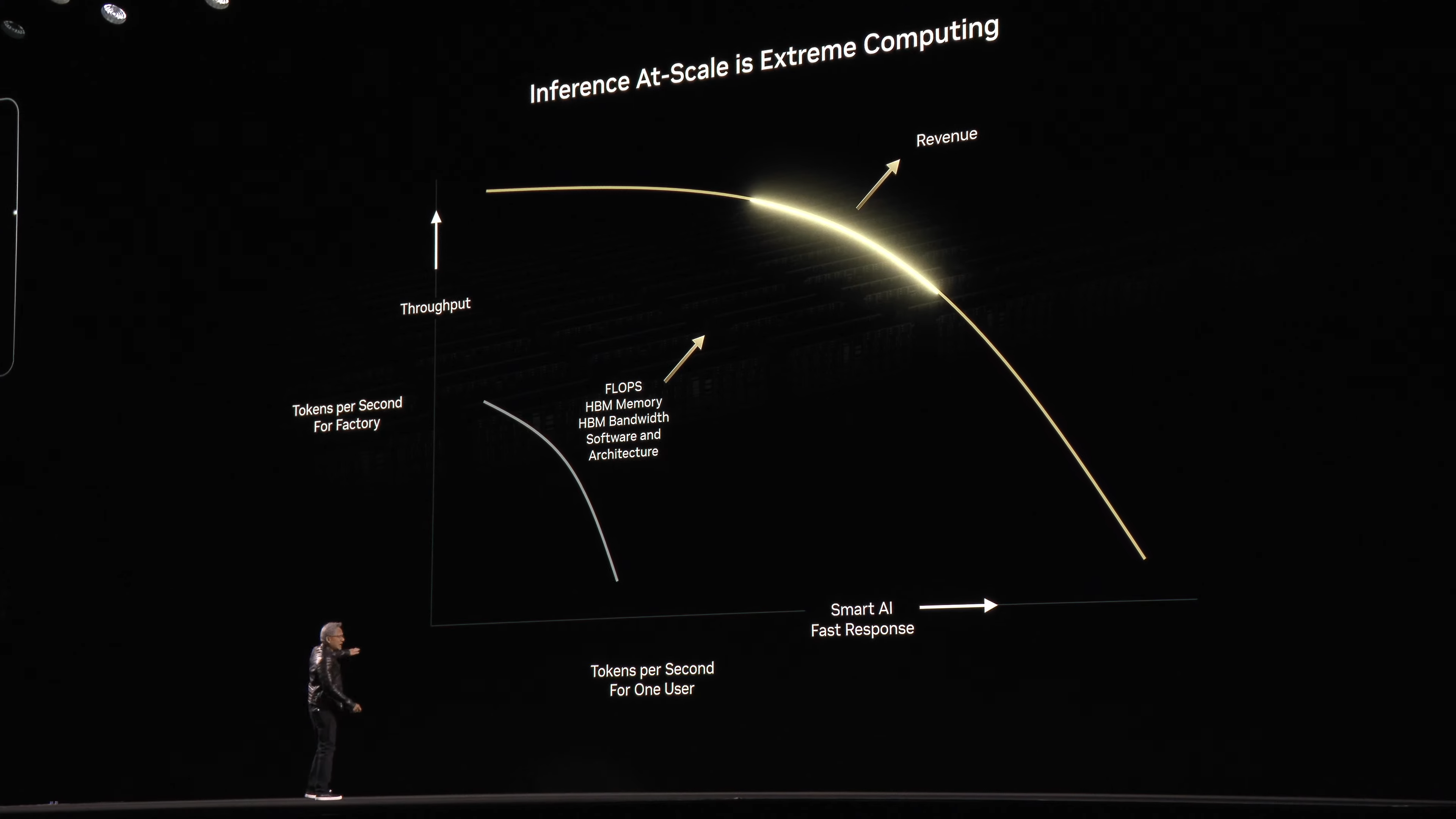

The demands of agentic AI and reasoning have been far greater than any company expected.

Reasoning models require a whole lot more AI inference compute than a one-shot answer based on pre-trained data: they run and re-run their thinking through a chain of reasoning, do some planning, and give you a step-by-step answer.

This results in a whole lot more tokens being used — easily 100x more tokens.

And to keep it running at the pace people are used to from the simpler AI chatbots of the past, AI inference computing needs to be 10x faster.

How is this done? Well, there has to be a way to run reinforcement learning without a human in the loop, using verifiable results to check the reasoned answers.

And to solve the problem, we're now at a point where robots are able to teach the AI reasoning model, taking a "robotic approach" that removes the human from the loop. This, of course, has drastically increased the amount of computing power needed.

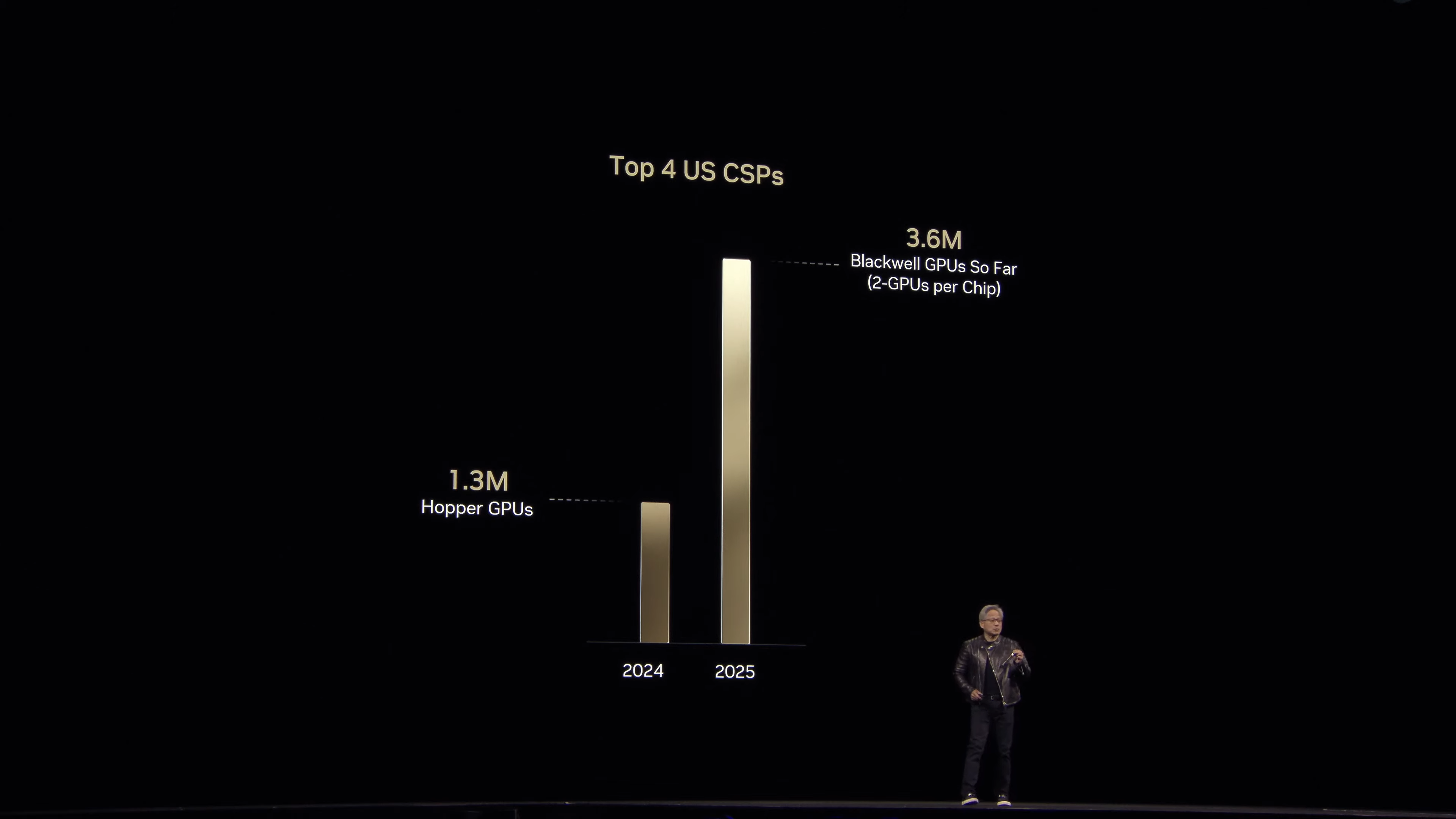

To demonstrate that Nvidia is selling the shovels and pickaxes in the AI gold rush, we're now seeing just how many of its GPUs are being sold.

Jensen's shooting his shot and projecting that data center capital expenditure will reach $1 trillion by 2028.

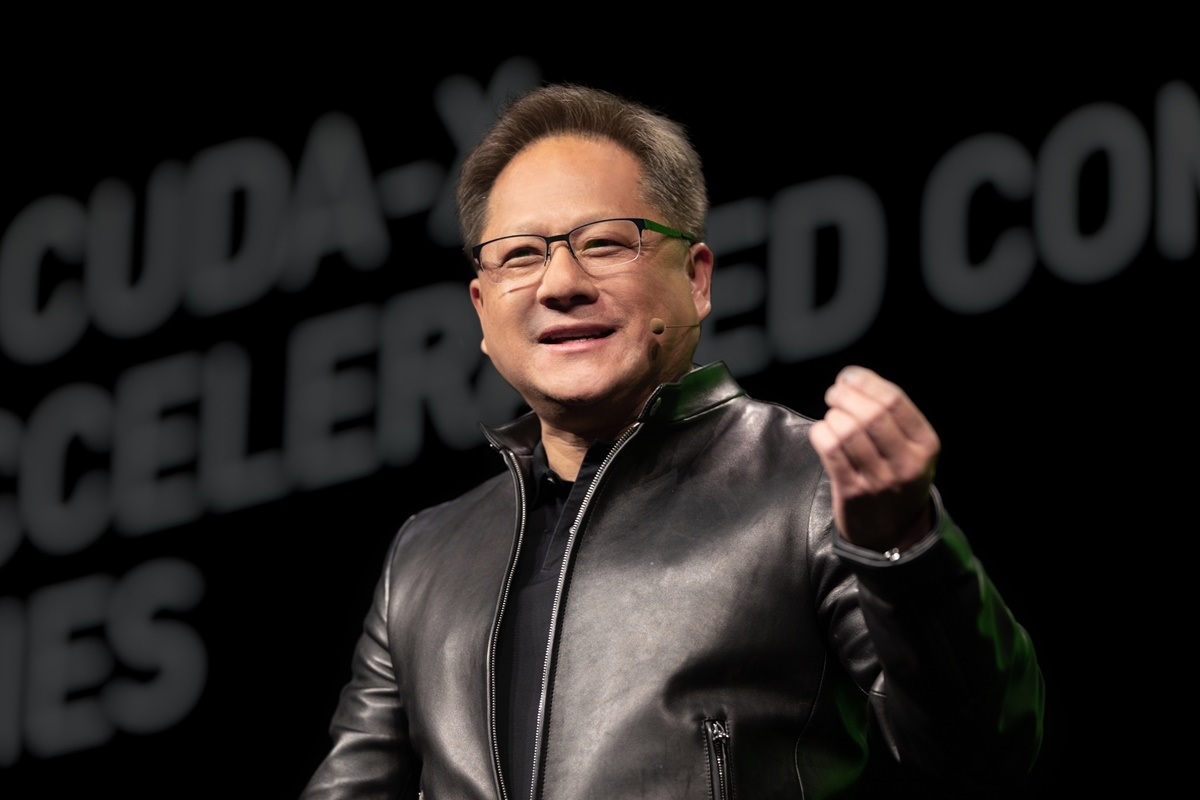

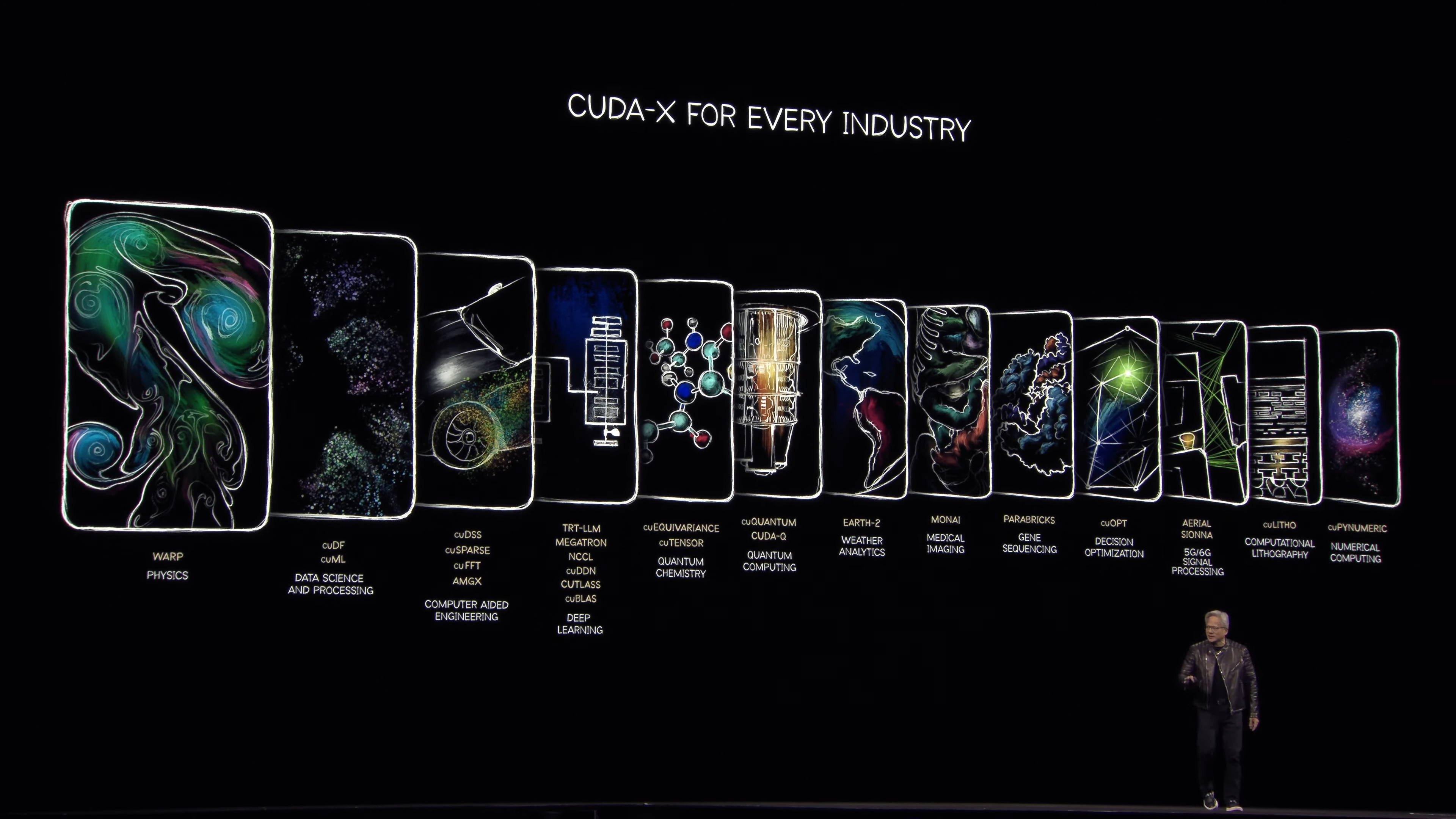

And here's where those AI frameworks can apply. Nvidia's CUDA-X libraries are helping across DNA gene sequencing, 5G/6G signal processing, quantum computing, medical imaging, physics and more.

Well, I never knew a GPU could be used as a 5G radio. But the signal processing capabilities of CUDA cores are there!*

*don't expect your iPhone to have RTX in it next year. This is for companies!

"We've now reached the tipping point of accelerated computing"

Previously, Nvidia had been using slow traditional computers to build faster computers for everyone else. Now, with Blackwell being 50,000x faster than the first CUDA GPU, Jensen claims that we've hit an inflection point.

Time for AI

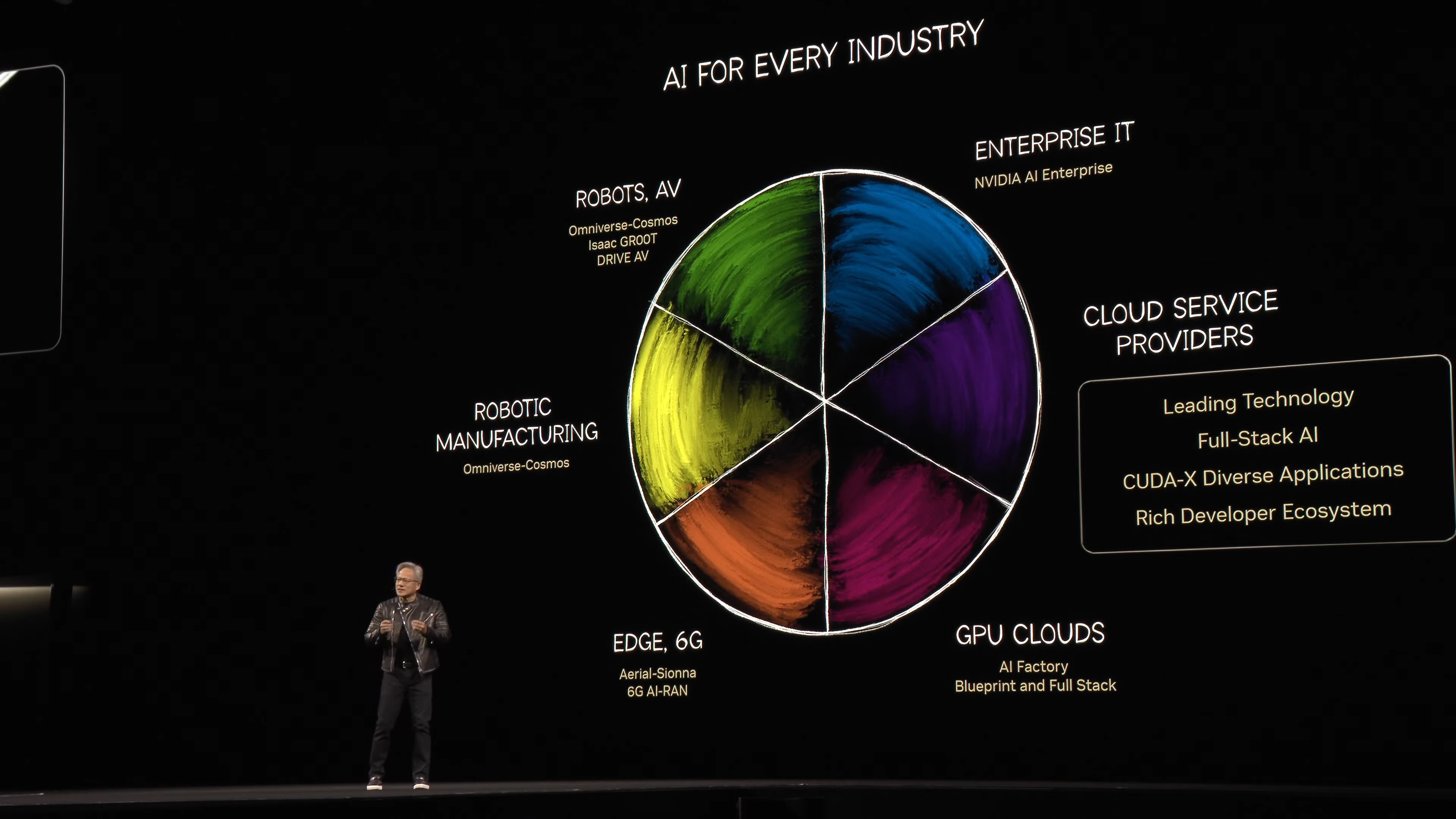

Jensen has now turned his attention to the AI pie — I predict he's about to show how Nvidia is able to help each of these stacks.

Full self driving?

After announcing a full AI-driven radio stack with T-Mobile, Cisco and more, we're now moving over to autonomous cars.

Nvidia is teaming up with GM to help create autonomous cars. This AI partnership will reach into factories and infrastructure, and also put "AI in the car."

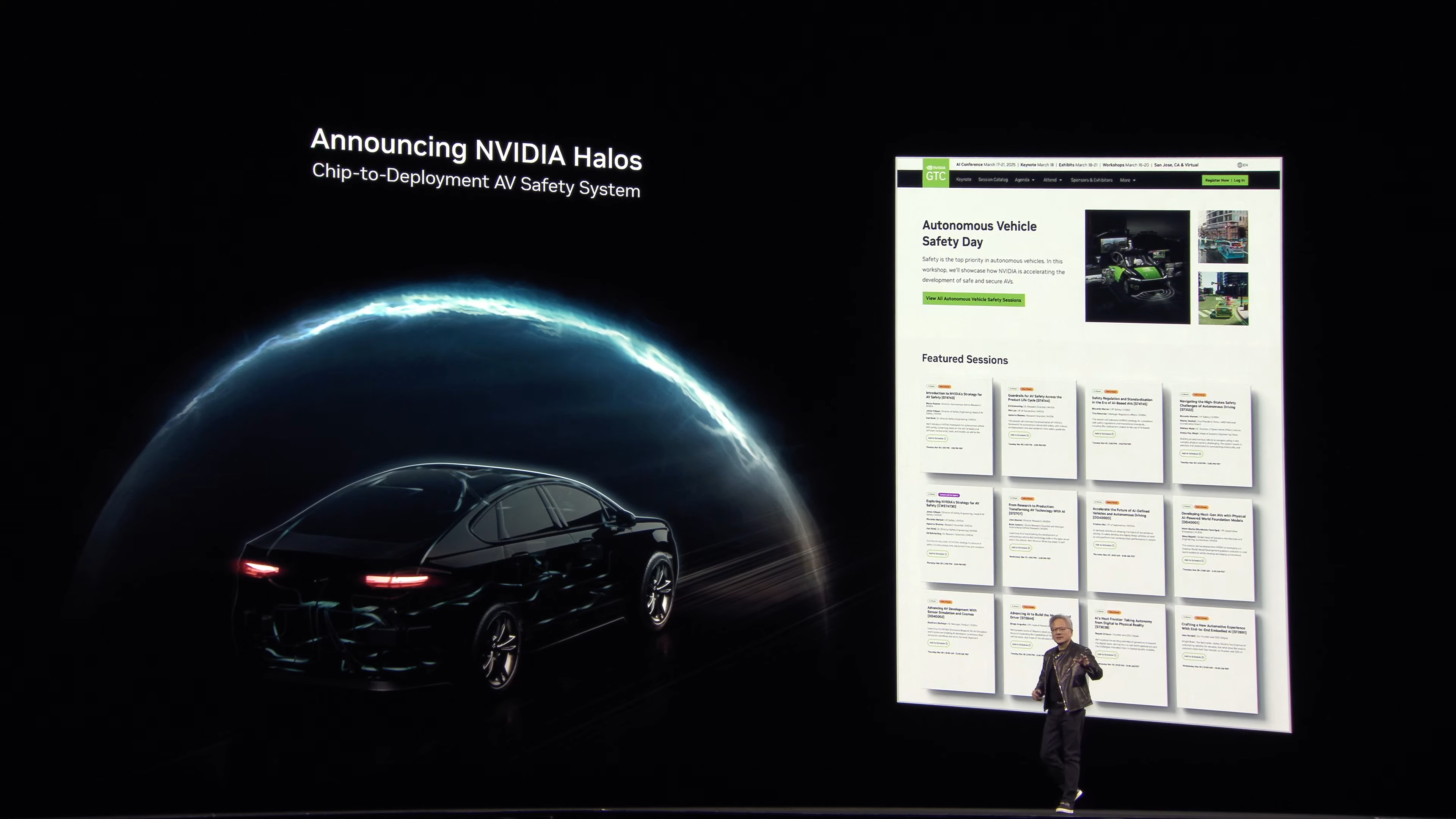

Nvidia Halos is a commitment to safety and transparency in self-driving cars. Every line of code in the self-driving software Nvidia is cooking up will be safety-assessed by a third party. This could be huge for Waymo!

Now we're seeing the reasoning model in action for self-driving! With Omniverse and Cosmos, the model can train itself, learn from variations and improve by evaluating its surroundings.

On top of that, driving environments are recreated as digital twins so training can continue across a diverse range of scenarios. This is a crazy huge step forward!

Time for data centers

This is probably the part that a lot of investors have been waiting for...

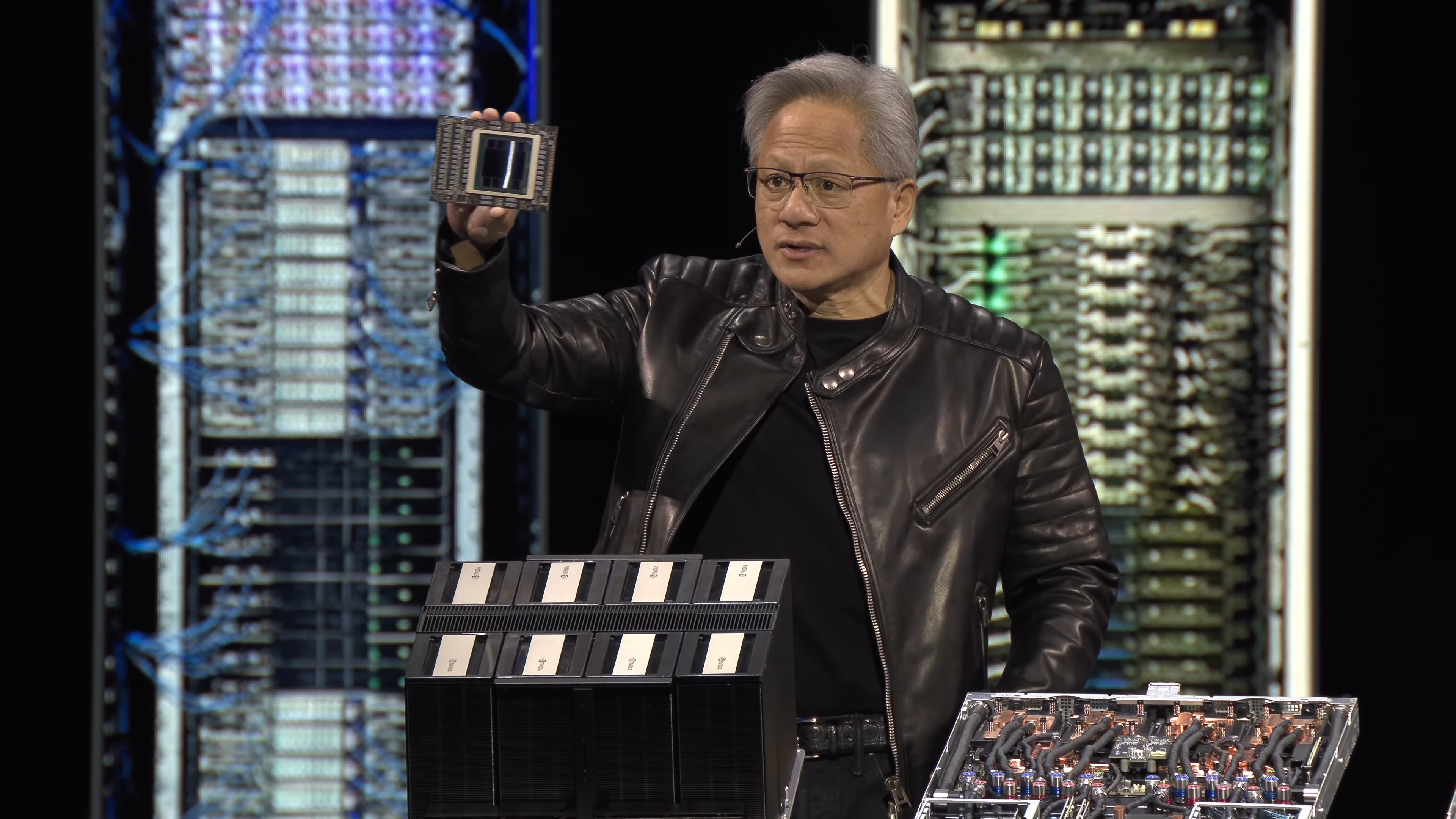

Each of these racks has 8 Blackwell GPUs in it... I know this is all for AI supercomputers in server arrays, but I just really want to play Cyberpunk on it!

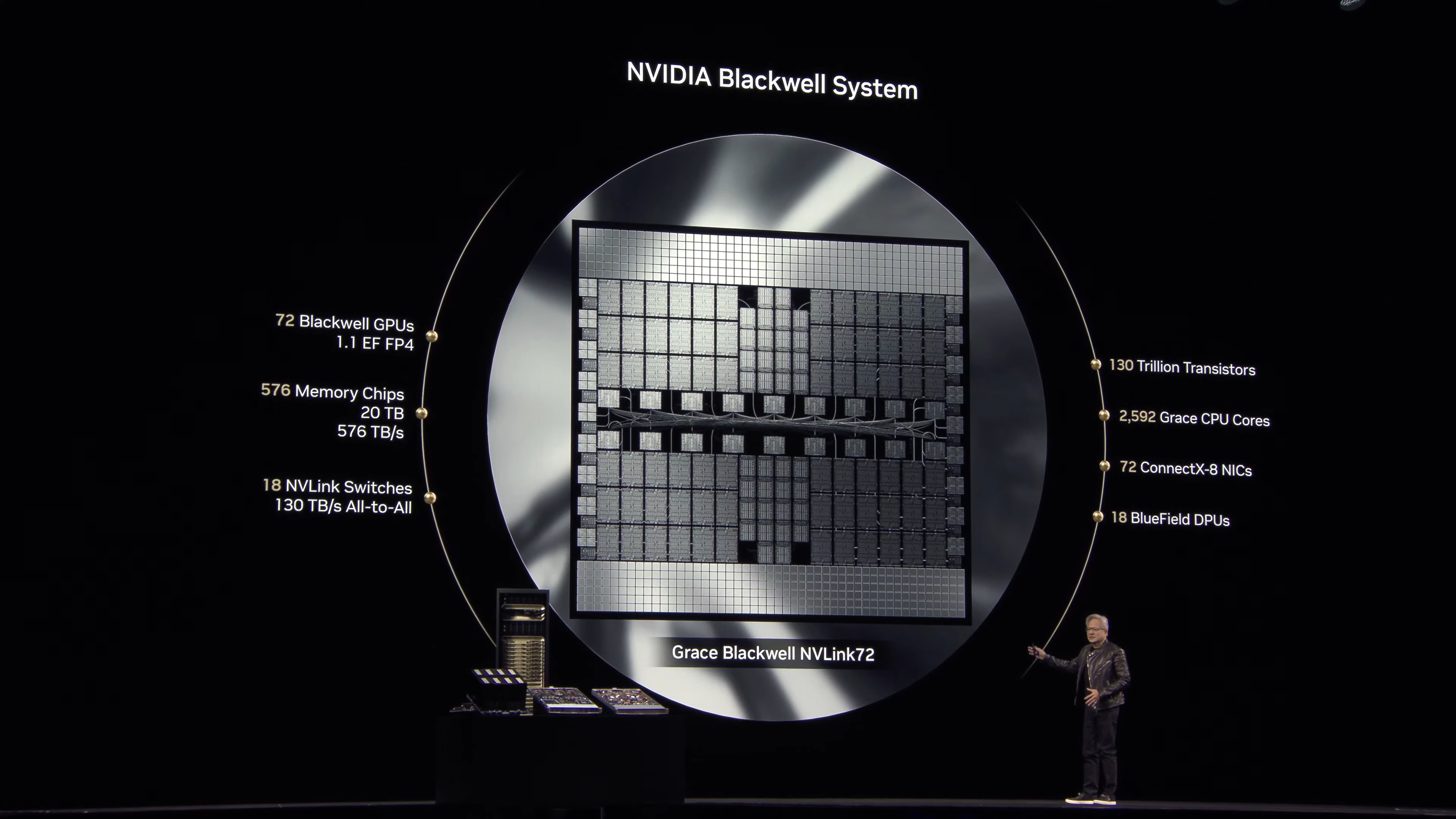

This is the chip Nvidia would want to make, but it's theoretically impossible to build (it would need 1.7 trillion transistors). That's why scaling up to these racks is important for the future of AI computation.

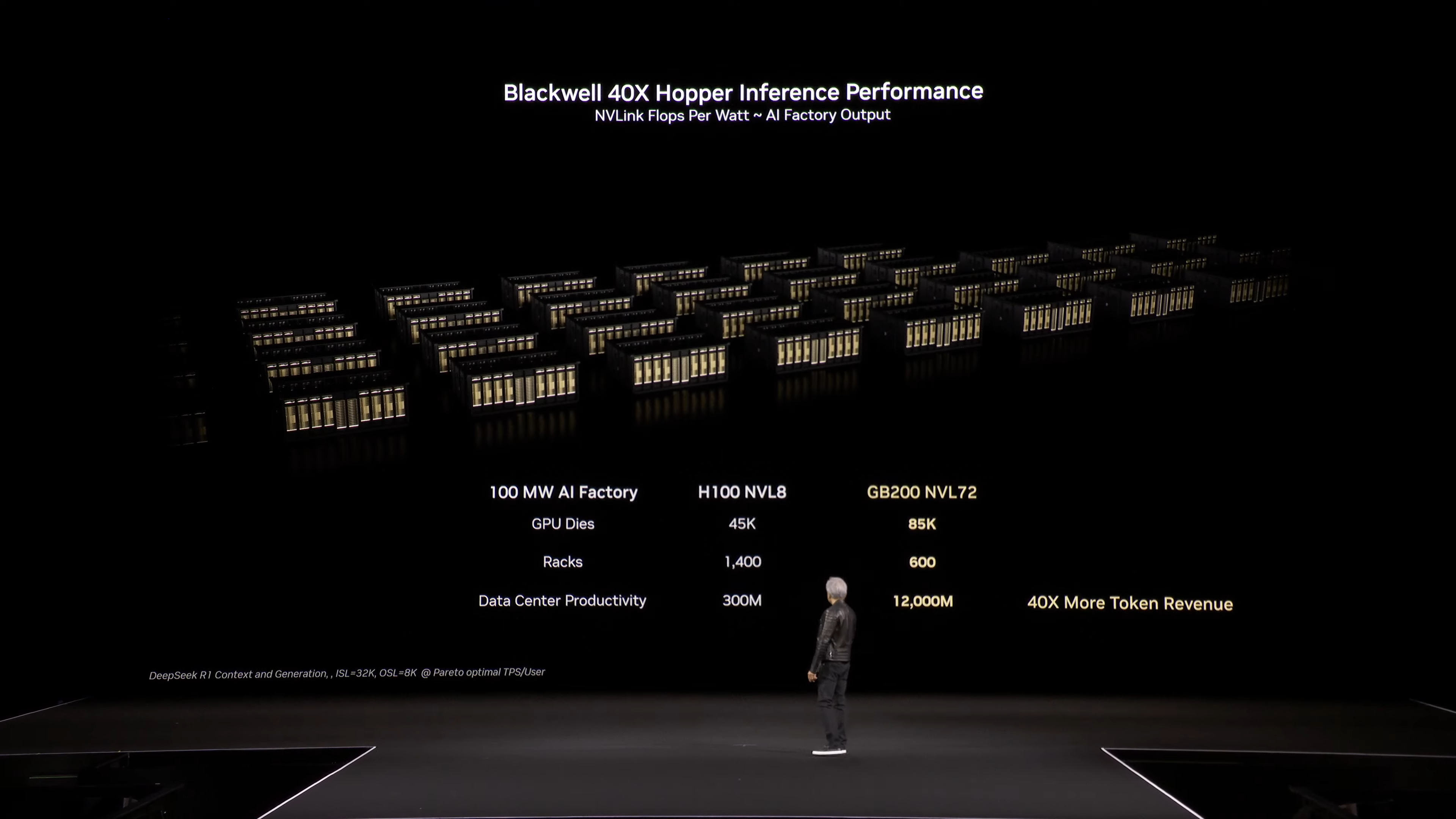

And for the business of AI, extreme efficiency is important here. Since everything AI-based runs on tokens, there needs to be a balance between accuracy and speed, while also generating token-based responses in a way that doesn't impact the company's bottom line.

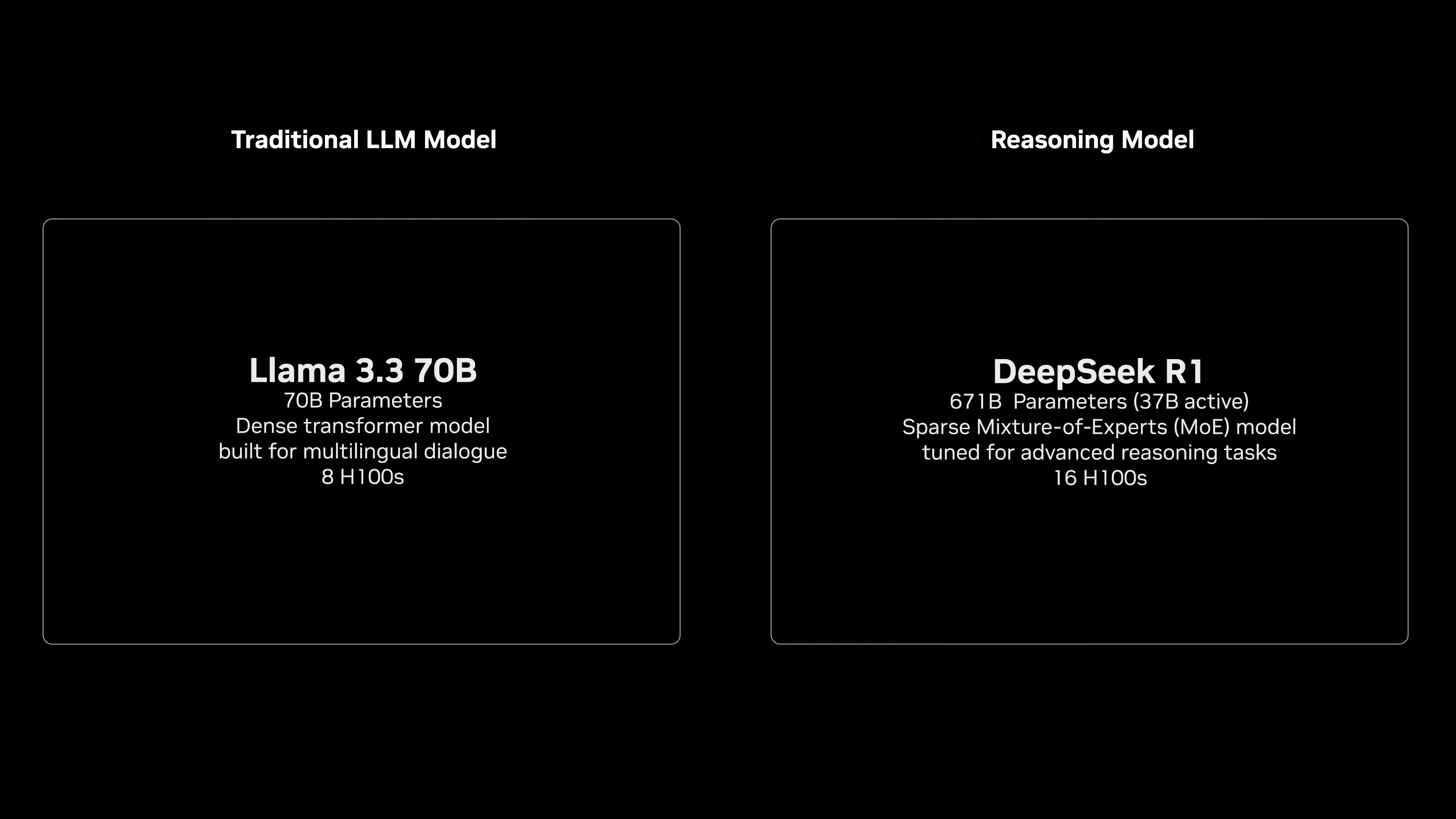

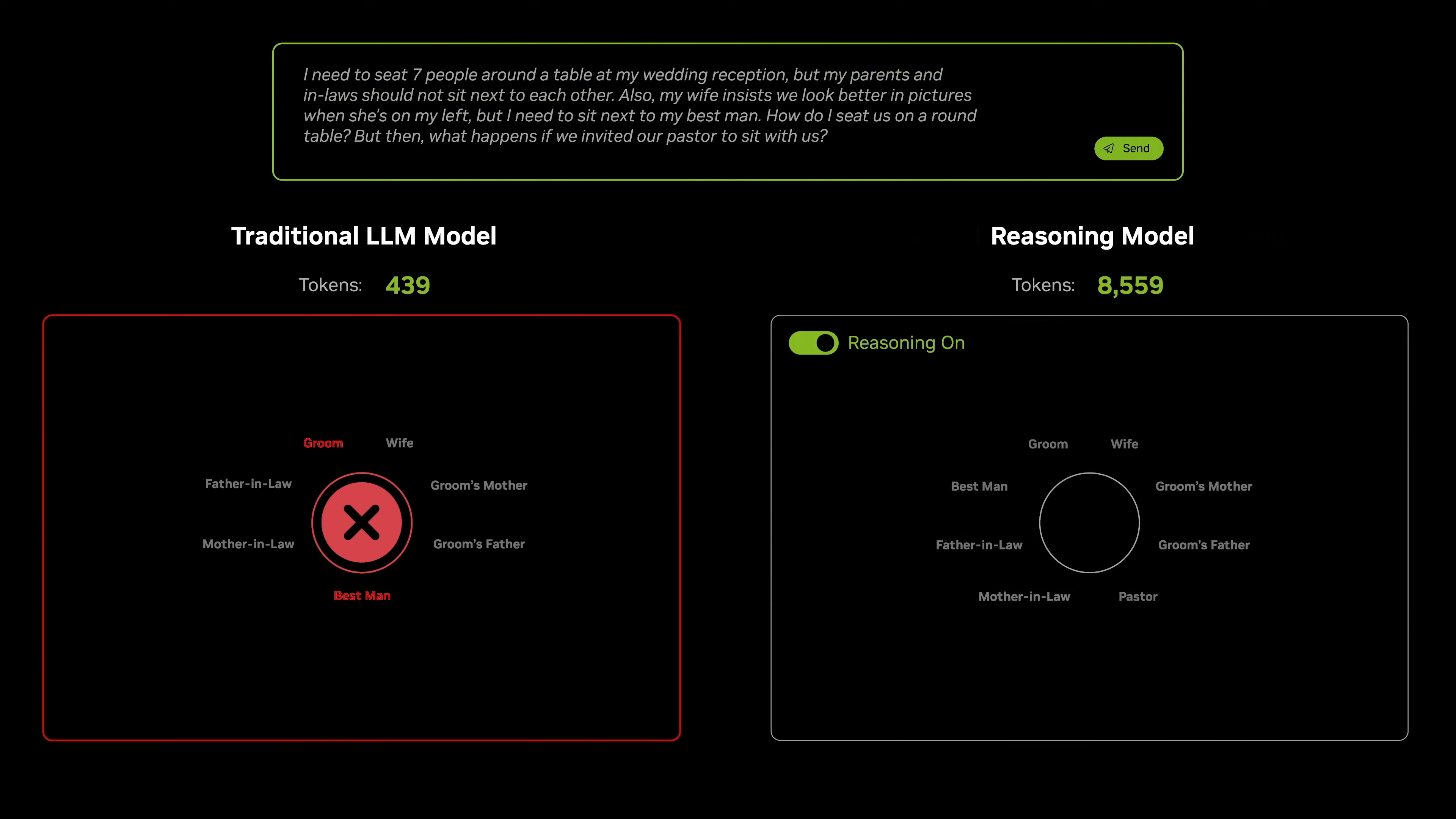

To demonstrate this, Nvidia pitted a traditional Llama model against DeepSeek R1's reasoning model. The one-shot Llama answer took just 439 tokens and little time, but got the answer wrong. Meanwhile, DeepSeek took almost 9,000 tokens, with a lot more computation and time, to get it right.

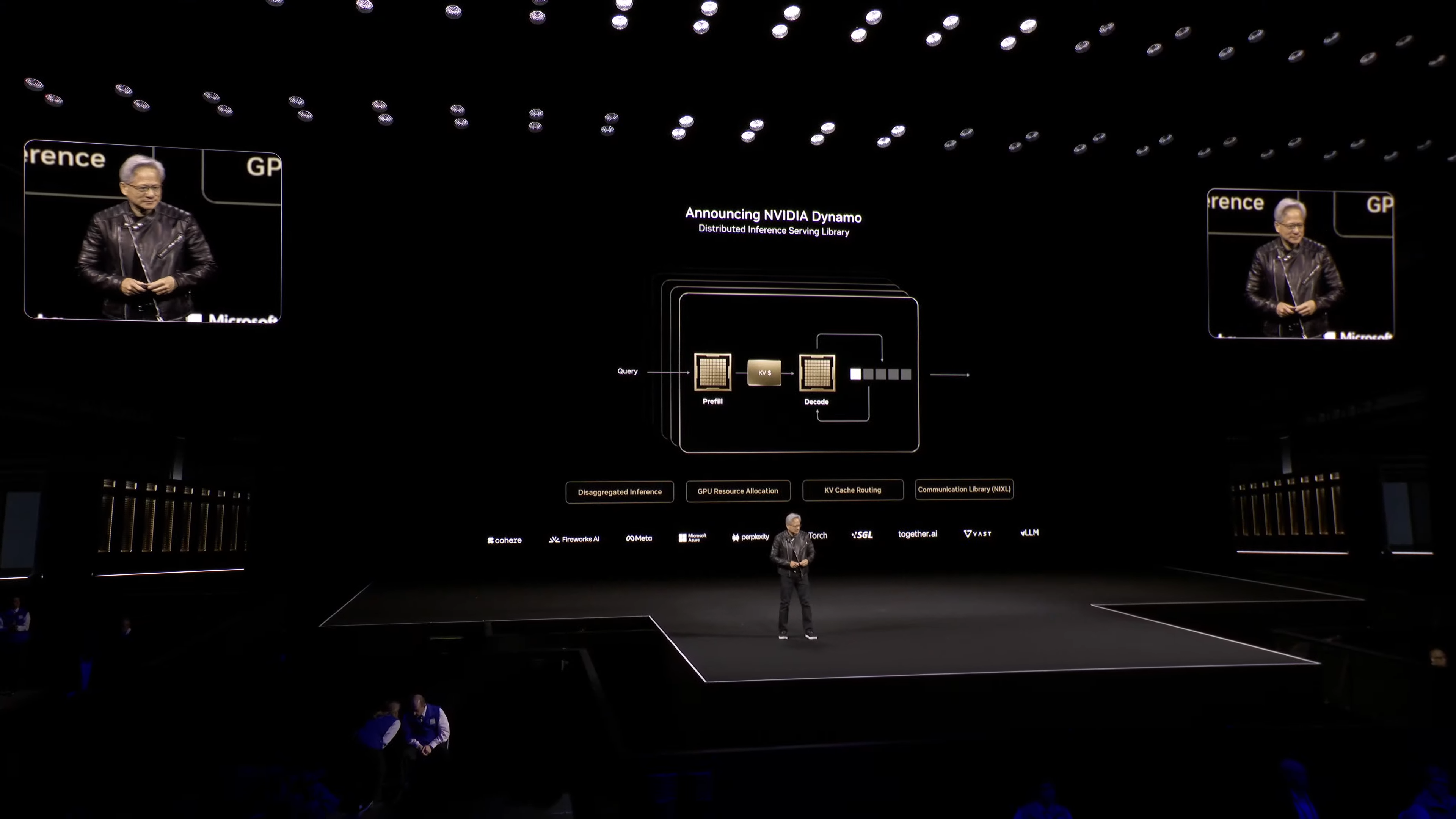

Introducing Nvidia Dynamo

To do AI inference computing, companies have to process trillions of bytes of information with GPUs to generate a single token. And that pipeline includes prefill of information and decoding of said information (have I said "information" enough?).

On top of that, the emphasis on either side of the pipeline can vary based on your use case: e.g., chatbots need to decode a lot of information, whereas another company may lean more heavily on prefill.
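For the curious, here's a toy Python sketch of those two phases, commonly called prefill and decode. It's a hypothetical illustration, not Dynamo's code or API: the `ToyCache` class, the function names and the dummy next-token rule are all made up, standing in for a real model's key/value cache and neural network. Prefill handles the whole prompt in one pass; decode then produces output one token at a time, reusing what prefill stored.

```python
# A toy, hypothetical sketch of the two phases of LLM inference.
# This is NOT Nvidia Dynamo's API; the names and the "model" are made up.
from dataclasses import dataclass, field


@dataclass
class ToyCache:
    """Stands in for the key/value cache a real transformer builds."""
    tokens: list = field(default_factory=list)


def prefill(prompt: str) -> ToyCache:
    """Prefill: process every prompt token in a single pass and cache the result."""
    return ToyCache(tokens=prompt.split())


def decode_step(cache: ToyCache) -> str:
    """Decode: produce ONE new token from the cache (a dummy rule here)."""
    next_token = f"tok{len(cache.tokens)}"
    cache.tokens.append(next_token)  # each new token extends the cache
    return next_token


def generate(prompt: str, max_new_tokens: int) -> list:
    cache = prefill(prompt)  # one batched pass over the whole prompt
    # Decode runs step by step, once per output token.
    return [decode_step(cache) for _ in range(max_new_tokens)]


print(generate("why is the sky blue", 3))  # ['tok5', 'tok6', 'tok7']
```

Because a long prompt makes prefill heavy while a long answer makes decode heavy, the two phases can be tuned separately; Nvidia says Dynamo can split them across different GPUs.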

Well, the OS to back that up hasn't quite been up to scratch, and that's where Dynamo comes in: the OS of an AI factory. It's able to customize a data center to suit your uses. Perplexity has also partnered up for this!

With Dynamo alongside Blackwell GPUs, and NVLink tying all the GPUs together, you can see just how many more tokens can be generated every second.

It's a massive 25x uplift over Hopper, which is what many companies are currently using.

RIP Hopper. Blackwell just annihilated Hopper with 40X more tokens generated for the same revenue. This will be significant for AI companies!

Turns out the investors may have liked those past 20 minutes, as there's a bit of an uptick.

Blackwell Ultra is set to come in the second half of this year, and Jensen is moving on to telling us what's next after this...

Going forward into the back half of 2026, Vera Rubin is the next step beyond Blackwell with faster speeds across the board. And then with an extreme scale up in 2027, Rubin Ultra is going to vastly increase bandwidth and speeds again.

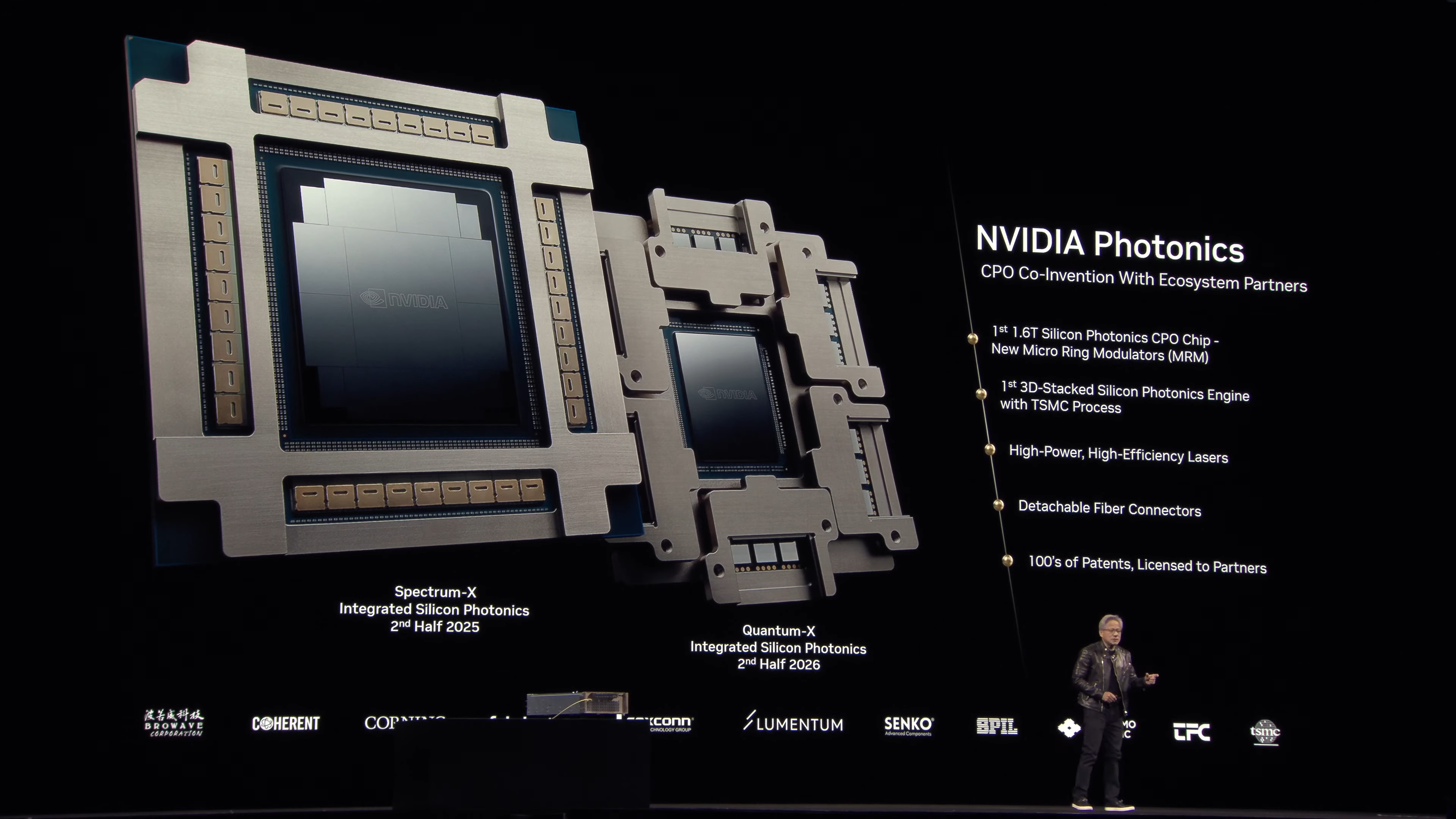

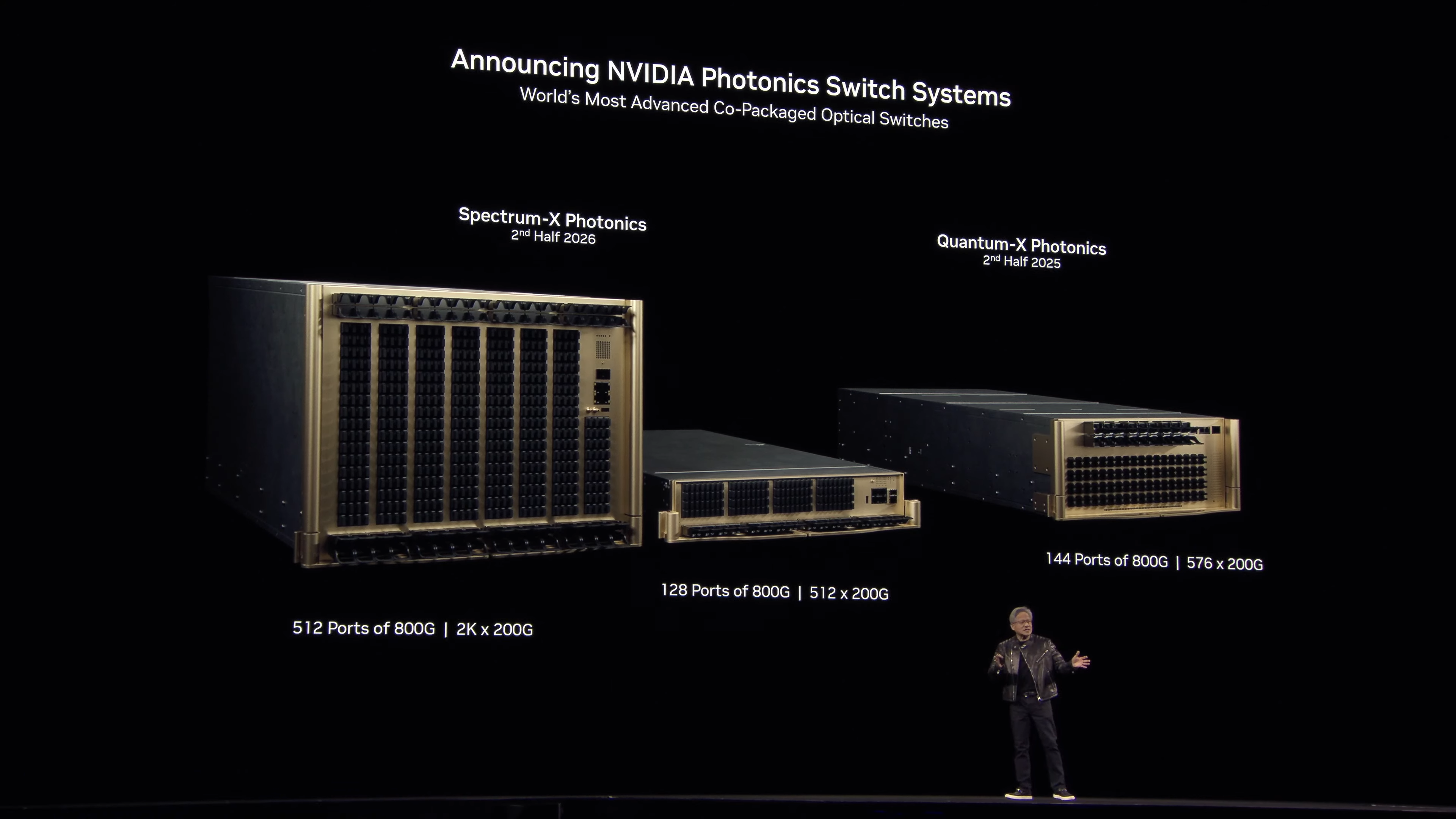

Time for some photonics to improve bandwidth and speed up data transfer. We've seen some of this happening in the background, as Nvidia did announce a partnership with TSMC.

To help manage power and open up bandwidth, there is a new photonic system packed with mirrors and micro lenses to control the flow of light carrying data.

Nvidia's upcoming Photonics Switch Systems use lasers to transmit data across a data center, which could save many megawatts of power that businesses can redeploy into more racks. Here are the upcoming releases.

Wait... did the stream just go down? Can anyone else verify?

Right at the moment Nvidia looked like it was about to talk about Project DIGITS, the stream went down!

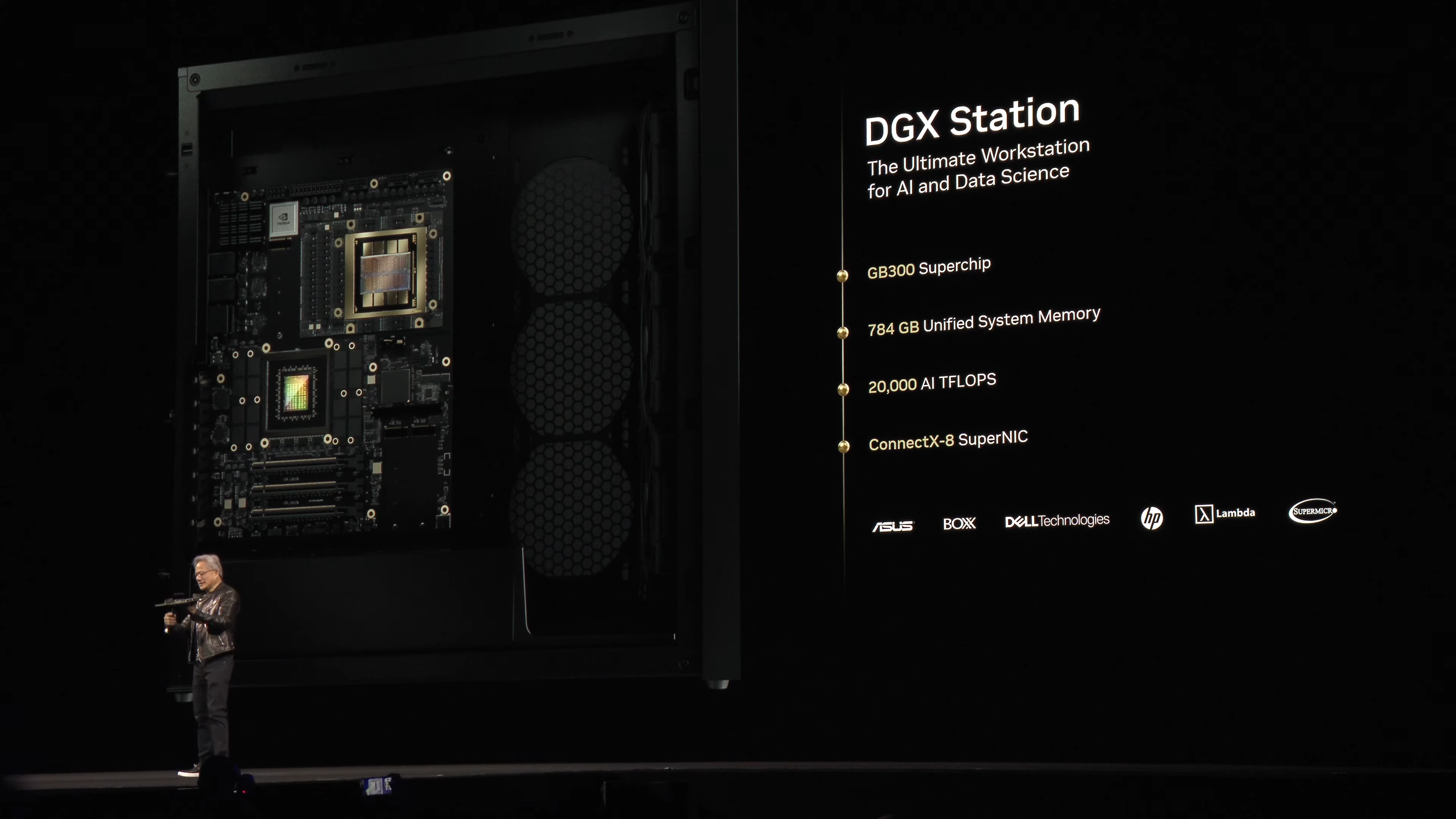

OK so we only got the back end of this, but it looks as if we just got news on the DGX Spark and a DGX Station for AI and data scientists. That Spark looks mighty DIGITS-ish.

Companies like Asus, Dell, HP and Lenovo are jumping in to build these for enterprise.

Let's talk about robots!

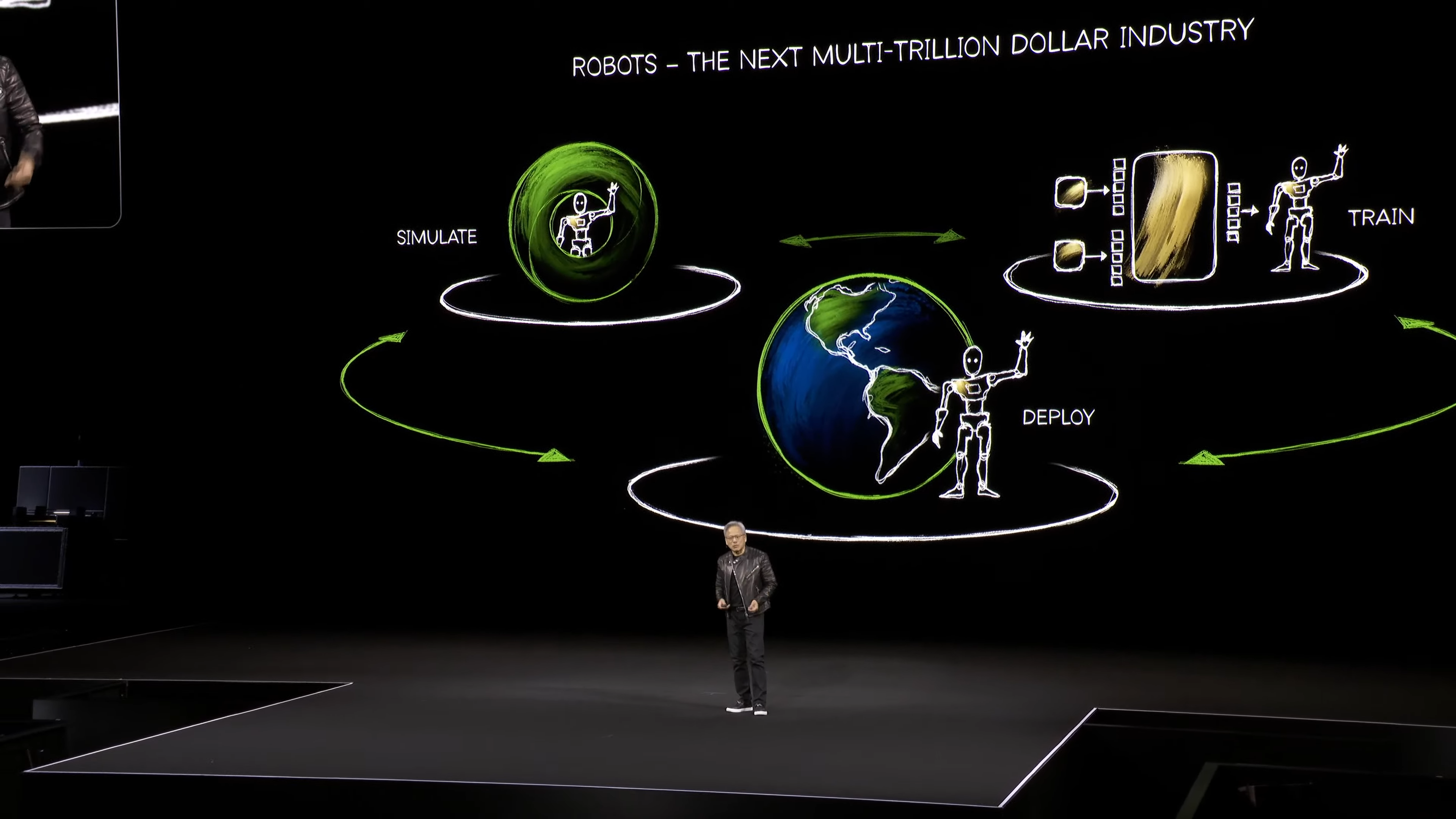

To help solve a labor shortage, Nvidia's bringing general robots to the party. Time to find out more about physical AI!

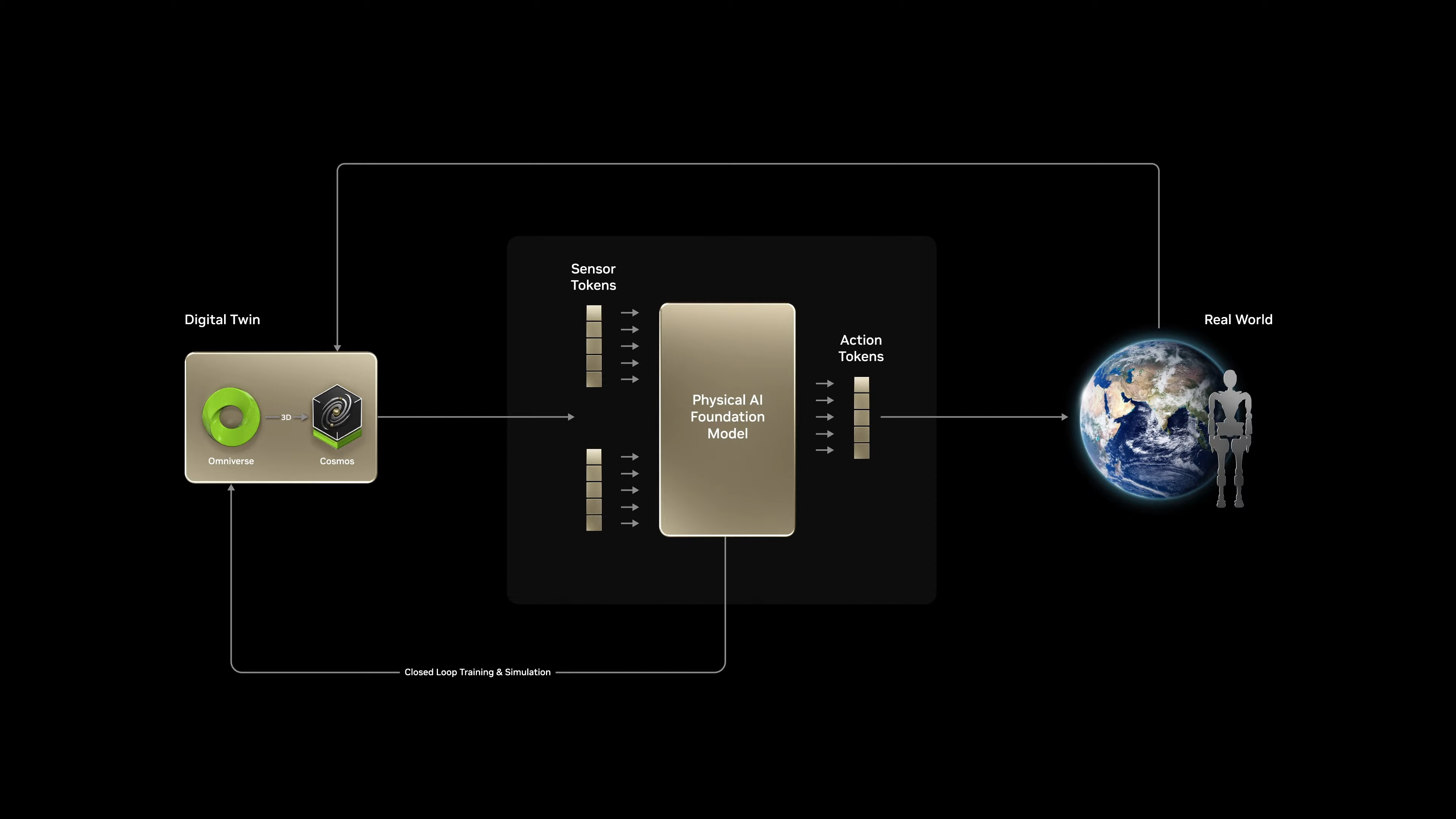

The approach is very similar to self-driving cars: models are trained on data from computer-generated simulations, with reinforcement learning providing the feedback. Policies are tested in a digital twin to establish how they will work in the real world, and then they're deployed.

Nvidia Groot N1 is the AI foundation model that will power these robots! We're finding out how they can work collaboratively, learn independently and optimize across many environments.

Damn, this robot has better drip than me.

Omniverse with Cosmos brings it all together

We heard about these a little at CES! This is what will be used to create a virtually infinite number of simulations to train a robot to do what it does.

Coming with this is a fascinating new physics engine to train robots on tactile touch and dexterity with incredible granularity. It's called Newton, and Nvidia has partnered with Google DeepMind and Disney on it!

Oh, and that Groot N1 foundation model for robots? It's going to be completely open source! Expect to see faster development in this area than you think!

And that's GTC!

As predicted, very data-center focused, but we got a lot to glean from that too! Namely, huge developments in autonomous cars, new Nvidia computers for enterprise, a huge roadmap for AI data centers, and robotic AI.

Something tells me this is going to be a very good few years for Nvidia, but we'll wait until we see it!

Dell Pro Max is here with Nvidia Blackwell RTX Pro GPUs

Along with Nvidia's big AI announcements, Dell is sharing a whole lineup of PCs fitted with Team Green's latest — aiming to set "the standard as the AI developer PC."

The new Dell Pro Max portfolio includes several laptops and workstations housing Nvidia's most powerful professional graphics chips, including the Nvidia GB10 and GB300 Grace Blackwell Superchips, along with Nvidia RTX Pro Blackwell Generation GPUs in laptops.

Just how powerful, you ask? Well, the Dell Pro Max with GB10 offers up to one petaflop (1,000 TFLOPS) of AI computing performance and 128GB of unified memory, while the GB300 model delivers a whopping 20 petaflops and 784GB of unified system memory. Yeah, a lot.

As for the Dell Pro Max laptops, you can also expect them to come with the latest Intel Core Ultra (Series 2) and AMD Ryzen CPUs to power these Copilot+ PCs.

Expect these PCs with RTX power to land this July, with the more powerful GB10 and GB300 coming later this year.

Here's a quick rundown of the newly announced AI PCs from Dell:

- Dell Pro Max 14, 14 Premium and 14 Plus

- Dell Pro Max 16, 16 Premium and 16 Plus

- Dell Pro Max Tower, Slim and Micro (desktops)

- Dell Pro Max with GB10

- Dell Pro Max with GB300

Check out Nvidia Isaac Groot N1 in action!

We heard CEO Jensen Huang talk about Nvidia's new open-source foundation model for humanoid robots, and if you want a closer look, you can see them in action.

What interests me is the way the system takes a small amount of captured data and multiplies it into a massive training dataset, letting robots easily learn to copy precise motions like humans, and to do so cooperatively with other robots!

It's pretty cool to watch, and while I probably won't see a robot help me put away the dishes in my kitchen anytime soon, the advancements are eye-catching nevertheless.

Yes, self-driving cars will be safer thanks to Nvidia Halos

Look, autonomous vehicles (AVs) have already been driving around, but safety has been a key concern for me. With Nvidia's Halos safety system in place, self-driving cars should be far safer on the road.

Using a virtual testing ground called Omniverse, which offers "physically accurate" driving simulations, the system can create variations of different real-life scenarios and work out how to handle them. When anomalies or uncertainties are detected, safety guardrails take effect to make sure each situation is dealt with safely.

That's just an oversimplified version of it. For a deeper dive into it all, Nvidia explains it in more detail in the video!

Quantum computing

Nvidia is building a research center in Boston to spearhead its advancements in quantum computing.

The NVAQC (Nvidia Accelerated Quantum Research Center) will integrate quantum hardware with AI supercomputers to enable accelerated quantum supercomputing. The goal is to solve the biggest challenges facing quantum computing, such as turning experimental quantum processors into real-world devices.

“Quantum computing will augment AI supercomputers to tackle some of the world’s most important problems, from drug discovery to materials development,” said Jensen Huang. “Working with the wider quantum research community to advance CUDA-quantum hybrid computing, the NVIDIA Accelerated Quantum Research Center is where breakthroughs will be made to create large-scale, useful, accelerated quantum supercomputers.”

The NVAQC is expected to begin operations later this year.

Surgical robots

Nvidia detailed its vision for robotic surgeons and fully automated hospitals.

Nvidia Isaac for Healthcare, as the company calls it, is a developer framework for AI healthcare robotics that enables healthcare robotics developers to solve medical challenges. Nvidia says Isaac for Healthcare is a domain-specific framework that leverages Nvidia's three-computer system for enabling physical AI.

The framework offers capabilities like digital prototyping, hardware-in-the-loop (HIL) product development and testing, synthetic data generation for AI training, policy training, and real-time deployment for medical robotics across surgical and interventional robotics; imaging and diagnostic robotics; and rehab, assistive and service robotics.

You can read more via the link above, but if Nvidia's plans pan out, it could revolutionize the medical industry.

How robots learn to be robots

Training robots to perform real-world tasks isn't as simple as they make it seem in your favorite sci-fi stories.

Nvidia aims to address the challenges associated with training robots with Nvidia Cosmos. This platform can speed up synthetic data generation and act as a base for post-training to develop physical AI models to solve these problems.

The video above and associated post go into more detail, but suffice it to say that the future of robotics is more exciting than ever... provided none of these developments conjure images of Skynet in your mind.

DGX Spark and DGX Station AI PCs

Nvidia announced DGX Spark and DGX Station, new personal AI supercomputers powered by the Nvidia Grace Blackwell platform. DGX Station is a high-performance Grace Blackwell desktop supercomputer powered by the Nvidia Blackwell Ultra platform.

The company says these computers will allow AI developers, researchers, data scientists and students to run inference on large models right on their desktops. Models can be run locally or deployed on Nvidia DGX Cloud or other cloud or data center infrastructure.

“AI has transformed every layer of the computing stack. It stands to reason a new class of computers would emerge — designed for AI-native developers and to run AI-native applications,” said Jensen Huang. “With these new DGX personal AI computers, AI can span from cloud services to desktop and edge applications.”

If you're in one of the aforementioned fields, DGX Spark could be beneficial.