Nvidia positions its new GeForce RTX 4070 as a great upgrade for GTX 1070 and RTX 2070 users, but that doesn't hide the fact that in many cases, it's effectively tied with the last generation's RTX 3080. The $599 MSRP means it's also replacing the RTX 3070 Ti, with 50% more VRAM and dramatically improved efficiency. Is the RTX 4070 one of the best graphics cards? It's certainly an easier recommendation than cards that cost $1,000 or more, but you'll inevitably trade performance for those saved pennies.

At its core, the RTX 4070 borrows heavily from the RTX 4070 Ti. Both use the AD104 GPU, and both feature a 192-bit memory interface with 12GB of GDDR6X 21Gbps VRAM. The main difference, other than the $200 price cut, is that the RTX 4070 has 5,888 CUDA cores compared to 7,680 on the 4070 Ti. Clock speeds are also theoretically a bit lower, though we'll get into that more in our testing. Ultimately, we're looking at a 25% price cut to go with the 23% reduction in processor cores.
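
For anyone who wants to check those two percentages, here's a minimal Python sketch of the arithmetic. The $799 figure for the RTX 4070 Ti is inferred from the $599 price plus the $200 gap mentioned above, and the function name is purely illustrative.

```python
def percent_reduction(old: float, new: float) -> float:
    """Return how much smaller `new` is than `old`, as a percentage of `old`."""
    return (old - new) / old * 100.0


# $599 for the RTX 4070; adding the $200 gap gives $799 for the RTX 4070 Ti.
price_cut = percent_reduction(799, 599)

# CUDA core counts quoted in the text.
core_cut = percent_reduction(7680, 5888)

print(f"Price reduction: {price_cut:.1f}%")
print(f"Core reduction:  {core_cut:.1f}%")
```

Both values round to the 25% and 23% quoted above.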

We've covered Nvidia's Ada Lovelace architecture already, so start there if you want to know more about what makes the RTX 40-series GPUs tick. The main question here is how the RTX 4070 stacks up against its costlier siblings, not to mention the previous generation RTX 30-series. Here are the official specifications for the reference card.

There's a pretty steep slope going from the RTX 4080 to the 4070 Ti, and from there to the RTX 4070. We're now looking at the same number of GPU shaders — 5888 — as Nvidia used on the previous generation RTX 3070. Of course, there are plenty of other changes that have taken place.

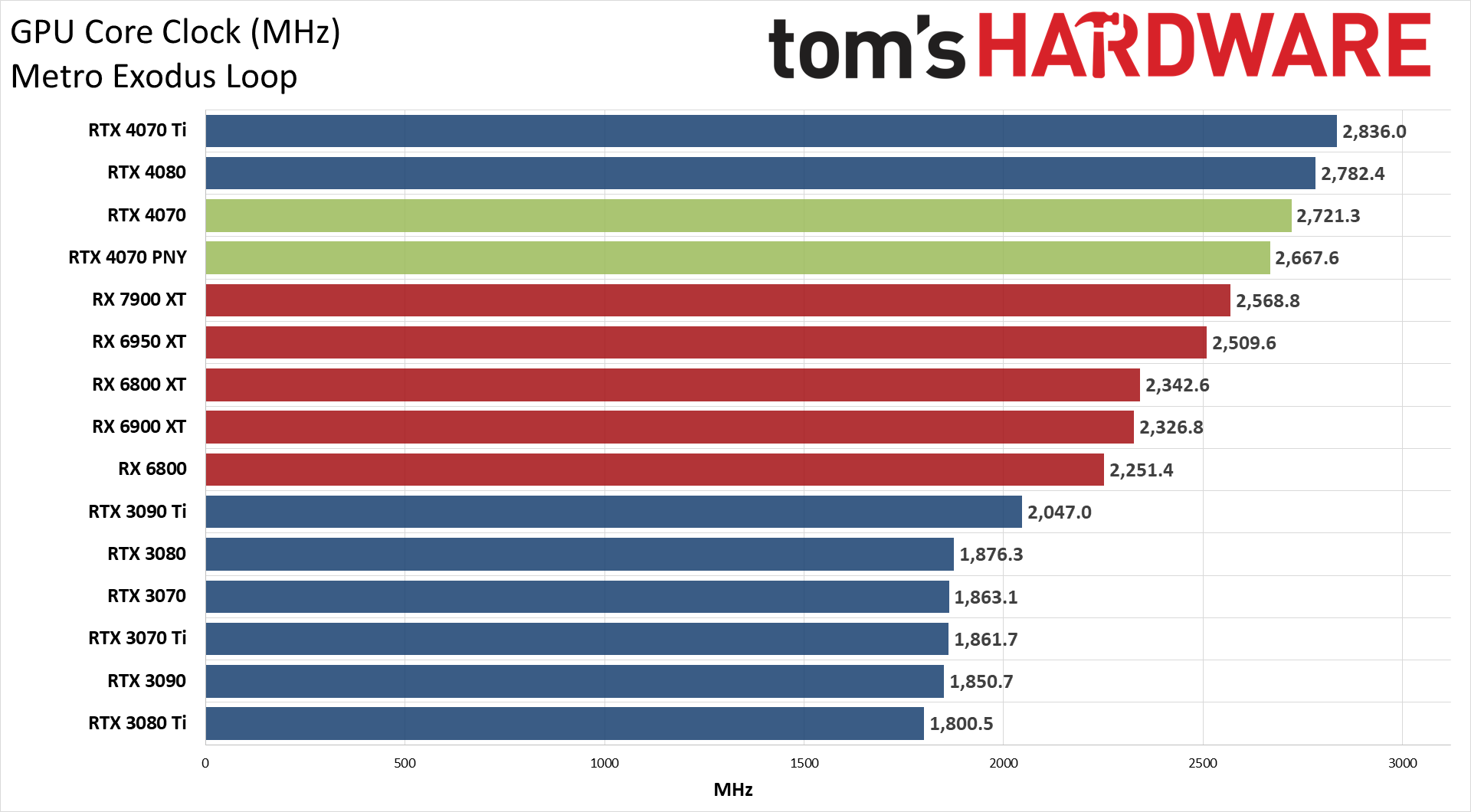

Chief among those is the massive increase in GPU core clocks. 5888 shaders running at 2.5GHz will deliver a lot more performance than the same number of shaders clocked at 1.7GHz — almost 50% more performance, by the math. Nvidia also likes to be conservative, and real-world gaming clocks are closer to 2.7GHz... though the RTX 3070 also clocked closer to 1.9GHz in our testing.
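
Here's a rough sketch of "the math" in Python. It assumes the usual theoretical-throughput convention of two FP32 operations (one fused multiply-add) per shader per clock, and it uses the rounded clock speeds from the paragraph above rather than exact boost specifications. Treat it as a back-of-the-envelope estimate, not a performance prediction.

```python
def fp32_tflops(shaders: int, clock_ghz: float) -> float:
    """Theoretical FP32 throughput, assuming one FMA (2 FLOPs) per shader per clock."""
    return 2 * shaders * clock_ghz / 1000.0


SHADERS = 5888  # same shader count on the RTX 4070 and RTX 3070

# Rated clocks (rounded values from the text).
rated_4070 = fp32_tflops(SHADERS, 2.5)
rated_3070 = fp32_tflops(SHADERS, 1.7)
rated_gain = (rated_4070 / rated_3070 - 1) * 100

# Clocks observed in testing (also rounded values from the text).
observed_4070 = fp32_tflops(SHADERS, 2.7)
observed_3070 = fp32_tflops(SHADERS, 1.9)
observed_gain = (observed_4070 / observed_3070 - 1) * 100

print(f"Rated:    {rated_4070:.1f} vs {rated_3070:.1f} TFLOPS (+{rated_gain:.0f}%)")
print(f"Observed: {observed_4070:.1f} vs {observed_3070:.1f} TFLOPS (+{observed_gain:.0f}%)")
```

Because the shader counts match, the ratio collapses to the ratio of the clocks: roughly 47% on paper, and a bit less (about 42%) with the clocks we actually saw.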

The memory bandwidth ends up being slightly higher than the 3070 as well, but the significantly larger L2 cache will inevitably mean it performs much better than the raw bandwidth might suggest. Moving to a 192-bit interface instead of the 256-bit interface on the GA104 does present some interesting compromises, but we're glad to at least have 12GB of VRAM this round — the 3060 Ti, 3070, and 3070 Ti with 8GB are all feeling a bit limited these days. But short of using memory chips in "clamshell" mode (two chips per channel, on both sides of the circuit board), 12GB represents the maximum for a 192-bit interface right now.
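
The bandwidth and capacity limits fall out of some simple arithmetic, sketched below in Python. The assumptions here are ours: a 21 Gbps data rate for the RTX 4070's GDDR6X and 14 Gbps for the RTX 3070's GDDR6 (from the cards' published specifications), one memory chip per 32-bit channel, and 2GB as the largest available chip.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) times per-pin rate, over 8."""
    return bus_width_bits * data_rate_gbps / 8


def max_capacity_gb(bus_width_bits: int, chip_gb: int = 2, clamshell: bool = False) -> int:
    """Capacity with one chip per 32-bit channel; clamshell doubles the chip count."""
    chips = bus_width_bits // 32
    if clamshell:
        chips *= 2
    return chips * chip_gb


# Assumed data rates: 21 Gbps GDDR6X (RTX 4070), 14 Gbps GDDR6 (RTX 3070).
print("RTX 4070 bandwidth:", bandwidth_gbs(192, 21), "GB/s")
print("RTX 3070 bandwidth:", bandwidth_gbs(256, 14), "GB/s")

print("192-bit capacity:", max_capacity_gb(192), "GB")
print("192-bit clamshell:", max_capacity_gb(192, clamshell=True), "GB")
print("256-bit capacity:", max_capacity_gb(256), "GB")
```

Under those assumptions, the narrower bus still comes out slightly ahead on raw bandwidth thanks to the faster memory, and 12GB is the ceiling without going to clamshell mode.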

While AMD was throwing shade yesterday about the lack of VRAM on the RTX 4070, it's important to note that AMD has yet to reveal its own "mainstream" 7000-series parts, and it will face similar potential compromises. A 256-bit interface allows for 16GB of VRAM, but it also increases board and component costs. Perhaps we'll get a 16GB RX 7800 XT, but the RX 7700 XT will likely end up at 12GB VRAM as well. As for the previous-gen AMD GPUs having more VRAM, that's certainly true, but capacity is only part of the equation, so we need to see how the RTX 4070 stacks up before declaring a victor.

Another noteworthy item is the 200W TGP (Total Graphics Power), and Nvidia was keen to emphasize that in many cases, the RTX 4070 will use less power than TGP, while competing cards (and previous generation offerings) usually hit or exceeded TGP. We can confirm that's true here, and we'll dig into the particulars more later on.

The good news is that we finally have a latest-gen graphics card starting at $599. There will naturally be third-party overclocked cards that jack up the price, with extras like RGB lighting and beefier cooling, but Nvidia has restricted this pre-launch review to cards that sell at MSRP. We've got a PNY model as well that we'll look at in more detail in a separate review, though we'll include the performance results in our charts. (Spoiler: It's just as fast as the Founders Edition.)

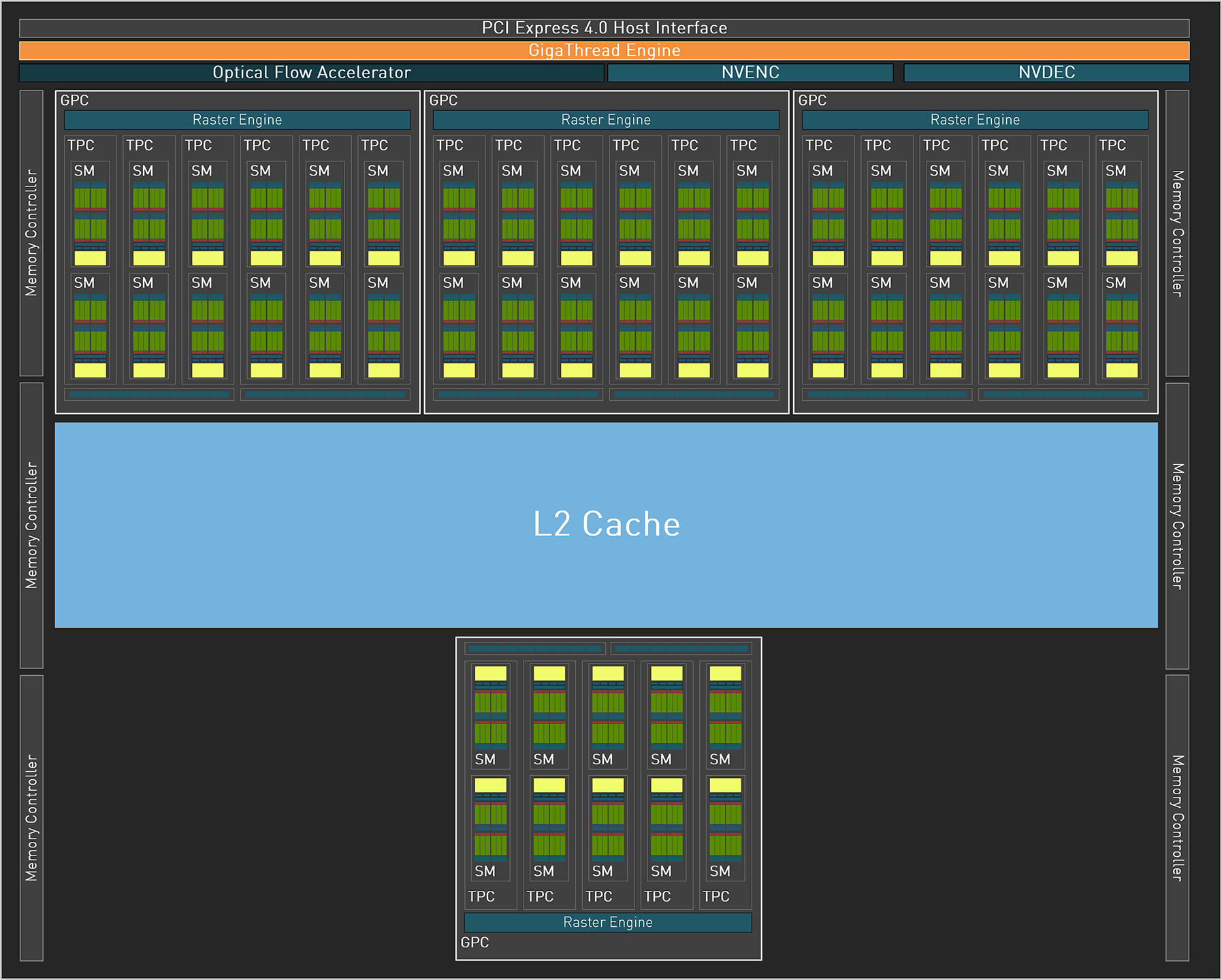

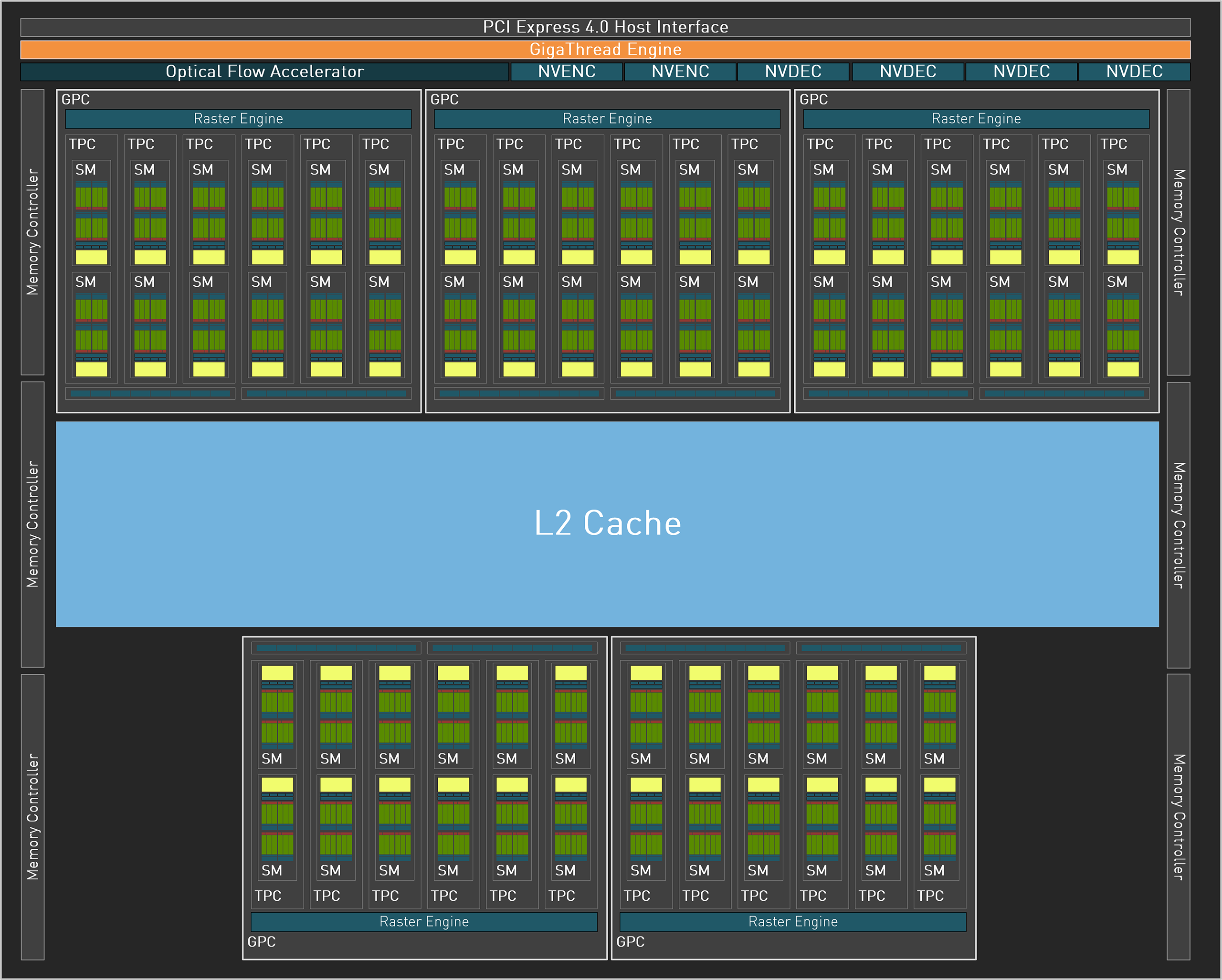

Above are the block diagrams for the RTX 4070 and for the full AD104, and you can see all the extra stuff that's included but turned off on this lower-tier AD104 implementation. None of the blocks in that image are "to scale," and Nvidia didn't provide a die shot of AD104, so we can't determine just how much space is dedicated to the various bits and pieces — not until someone else does the dirty work, anyway (looking at you, Fritzchens Fritz).

As discussed previously, AD104 includes Nvidia's 4th-gen Tensor cores, 3rd-gen RT cores, new and improved NVENC/NVDEC units for video encoding and decoding (now with AV1 support), and a significantly more powerful Optical Flow Accelerator (OFA). The latter is used for DLSS 3, and while it's "theoretically" possible to do Frame Generation with the Ampere OFA (or using some other alternative), so far only RTX 40-series cards can provide that feature.

The Tensor cores meanwhile now support FP8 with sparsity. It's not clear how useful that is in all workloads, but AI and deep learning have certainly leveraged lower precision number formats to boost performance without significantly altering the quality of the results — at least in some workloads. It will ultimately depend on the work being done, and figuring out just what uses FP8 versus FP16, plus sparsity, can be tricky. Basically, it's a problem for software developers, but we'll probably see additional tools that end up leveraging such features (like Stable Diffusion or GPT Text Generation).

Those interested in AI research may find other reasons to pick an RTX 4070 over its competition, and we'll look at performance in some of those tasks as well as gaming and professional workloads. But before the benchmarks, let's take a closer look at the RTX 4070 Founders Edition.

- MORE: Best Graphics Cards

- MORE: GPU Benchmarks and Hierarchy

- MORE: All Graphics Content

Nvidia RTX 4070 Founders Edition Design

We're still more than a little bit curious about the lack of an RTX 4070 Ti Founders Edition. We confirmed with Nvidia that there will not be a 4070 Ti FE, leaving a small hole in the RTX 40-series lineup for those that like reference cards. Perhaps it was Nvidia's way of throwing a bone to its add-in board partners, or maybe it's just part of that whole RTX 4080 12GB rebranding nonsense from last year. Regardless, we have the RTX 4070 Founders Edition, which looks like the 30-series parts, only with a few differences.

If you check out the RTX 3070 Founders Edition photos, you'll notice that — unlike the RTX 3080 or RTX 4080 — there's no large "RTX 3070" logo on the card. The RTX 3070 Ti meanwhile looks like the other high-end offerings. Basically, Nvidia created a different design and aesthetic on the RTX 3070 and RTX 3060 Ti Founders Edition cards. Those two are also more compact designs, but we did appreciate the look of the higher tier reference models.

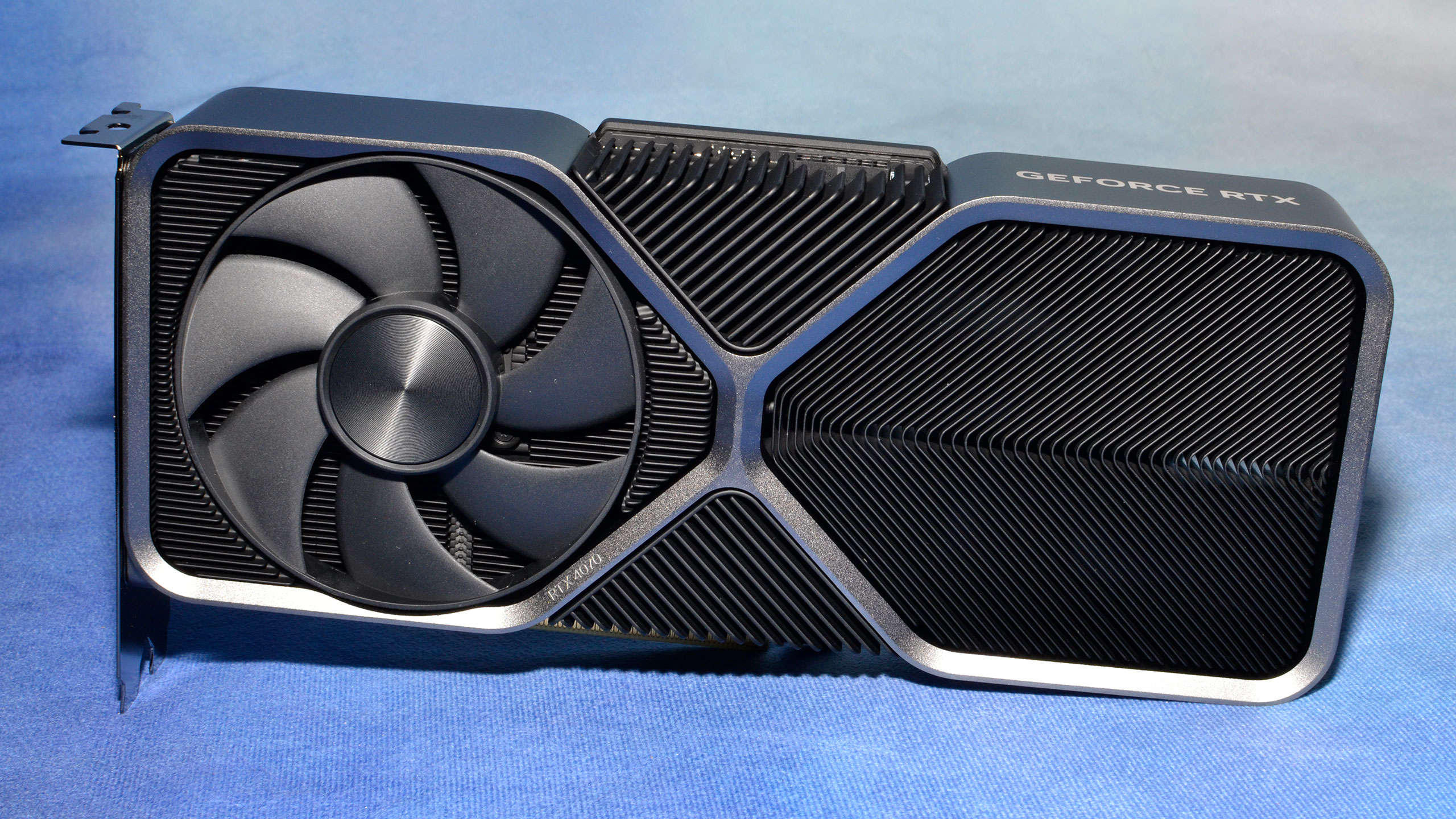

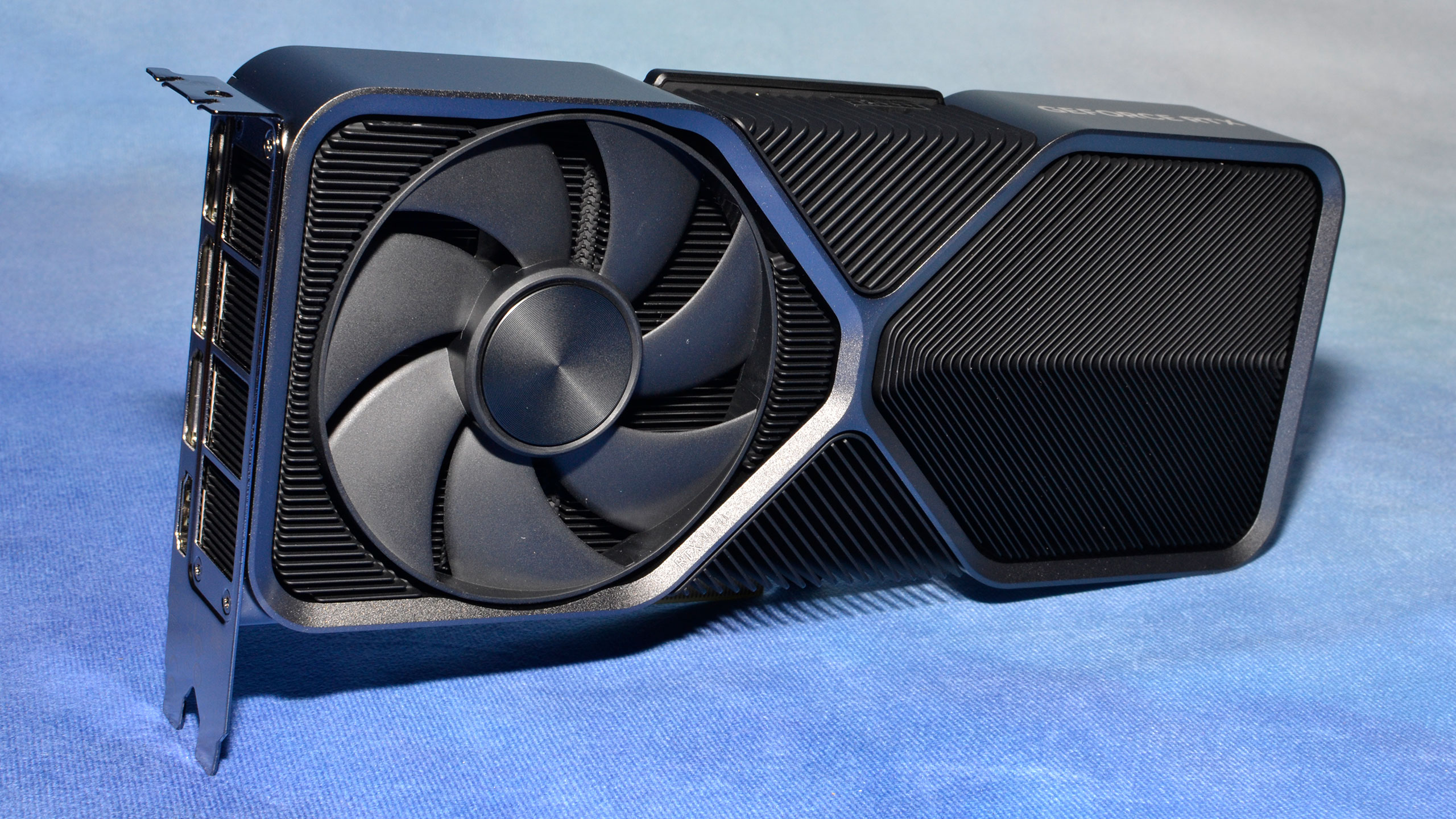

Nvidia has "fixed" this with the RTX 4070 Founders Edition, in that there are now two fans on different sides of the card, with a large blank area sporting the RTX 4070 logo. It's not just the RTX 3070 Ti / 3080 design repurposed, however, as the 4070 Founders Edition includes the larger fan used in the 40-series models and also comes with a smaller form factor than the 3080. It's actually just a touch larger (maybe 1mm) than the RTX 3070 FE, but it's also about 2mm thicker.

Also "fixed" is that the RTX 4070 Founders Edition comes with the same large clam box that you'll get with the 4080 and 4090 Founders Editions. Is that a good thing? Maybe, maybe not — less expensive packaging in exchange for an RGB-lit logo would have been better, we think.

The full dimensions of the RTX 4070 Founders Edition are 244x111x40 mm (compared to 243x111x38 mm on the RTX 3070 FE). It has two custom fans that are 91mm in diameter (versus 85mm on the 3070), and it weighs 1021g (compared to 1030g for the 3070). While we generally prefer the new aesthetic over the 3070 design, there are a few blemishes that are sticking around.

Like that completely pointless 16-pin power connector. Seriously, this is a 200W card, and while it might have required dual 8-pin (or 8-pin plus 6-pin) connectors to ensure there's ample room for overclocking, we'd rather have that than the rather clumsy 16-pin to dual 8-pin adapter. Even if it doesn't melt, it's just one more piece of cabling you'll have to deal with in your build. The PNY RTX 4070 incidentally opts for a single 8-pin connector, though it's also limited in overclocking options, so if you don't want the 16-pin adapter (and/or you don't have a new ATX 3.0 power supply), you might want to give that a look.

Most PCs aren't using much in the way of expansion cards beyond the GPU these days, so a relatively compact dual-slot design will work well in mini-ITX cases all the way up to full-size ATX builds.

I generally like the look of the current and previous generation Founders Edition cards. They're not flashy, particularly on the models that don't have any RGB like the RTX 4070 model. But when they're installed in a typical case with a window, you can see the nice "RTX 4070" (or whatever model) logo facing upward. Also, cooling performance seems to have improved quite a bit with the 40-series, or at least the second generation GDDR6X doesn't run anywhere near as hot as the first generation did on the 30-series.

As with pretty much every Nvidia Founders Edition GPU of the past several generations, you get the standard triple DisplayPort and single HDMI outputs. (Some might still miss the USB type-C VirtualLink from the 20-series, though.) It's an HDMI 2.1 port and three DisplayPort 1.4a outputs. Not to beat a dead horse, but while DP1.4a is technically worse than HDMI 2.1 and DisplayPort 2.1 (54 Gbps), we have tested a bunch of GPUs with a Samsung Odyssey Neo G8 that supports up to 4K and 240 Hz, thanks to Display Stream Compression (DSC). Until we start seeing DisplayPort 2.1 monitors that run at 4K and 480Hz, DisplayPort 1.4a should suffice.

Since this will be our reference RTX 4070, we're skipping disassembly and teardown — we need to guarantee the card keeps working properly, and ideally not change any of the cooling or other factors. We do know there are six GDDR6X chips inside, and during testing we saw peak temperatures of 70C on the memory — a far cry from the 100–104C we often saw on the RTX 30-series cards with GDDR6X!

Nvidia RTX 4070 Founders Edition Overclocking

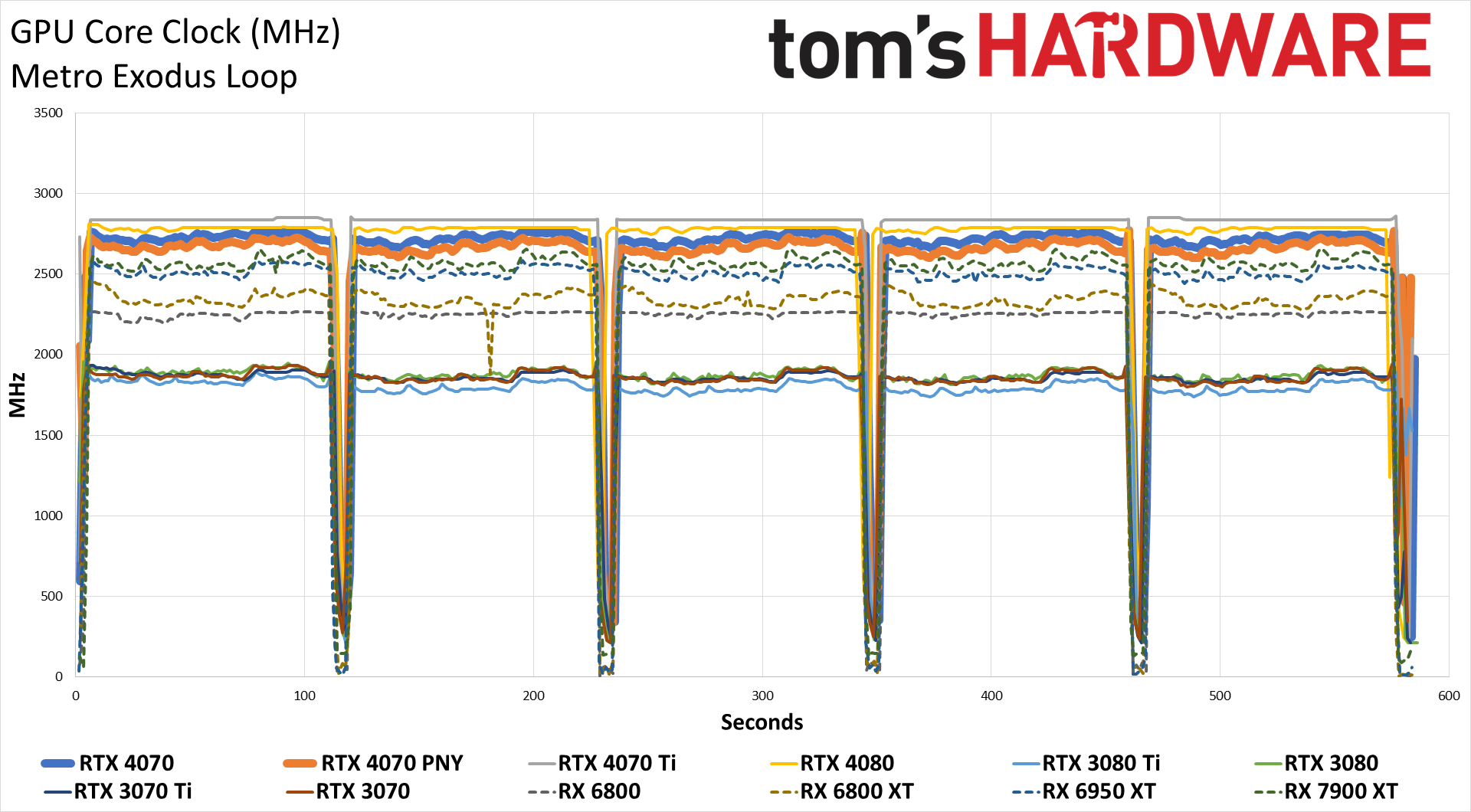

In the above images, you can see the results of our power testing that shows clocks, power, and temperatures. The big takeaway is that the card ran quite cool on both the GPU and VRAM, and you can see the clocks were hitting well over 2.7GHz in the gaming test. As for overclocking, there's trial and error as usual, and our results may not be representative of other cards — each card behaves slightly differently. We attempt to dial in stable settings while running some stress tests, but what at first appears to work just fine may crash once we start running through our gaming suite.

We start by maxing out the power limit, which in this case was 110% — and again, that can vary by card manufacturer and model. Most of the RTX 40-series cards we've tested can't do more than about +150 MHz on the cores, but we were able to hit up to +250 MHz on the RTX 4070. We backed off slightly to a +225 MHz overclock on the GPU to keep things fully stable.

The GDDR6X memory was able to reach +1450 MHz before showing some graphical corruption at +1500 MHz. Because Nvidia has error detection and retry, you generally don't want to fully max out the memory speed, so we backed off to +1350 MHz. With both the GPU and VRAM overclocks and a fan curve set to ramp from 30% at 30C up to 100% at 80C, we were able to run our full suite of gaming tests at 1080p and 1440p ultra without any issues.
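
To illustrate the fan curve, here's a minimal Python sketch. It assumes a straight-line ramp between the two endpoints and a flat response outside them. Real tuning utilities typically let you set multiple points, so this is only an approximation of what we dialed in.

```python
def fan_speed_percent(temp_c: float) -> float:
    """Linear ramp from 30% at 30C to 100% at 80C, clamped outside that range."""
    low_temp, low_speed = 30.0, 30.0
    high_temp, high_speed = 80.0, 100.0

    if temp_c <= low_temp:
        return low_speed
    if temp_c >= high_temp:
        return high_speed

    # Interpolate between the two endpoints (assumed linear).
    slope = (high_speed - low_speed) / (high_temp - low_temp)
    return low_speed + (temp_c - low_temp) * slope


for temp in (25, 30, 55, 70, 80, 90):
    print(f"{temp}C -> {fan_speed_percent(temp):.0f}% fan speed")
```

With that ramp, every degree above 30C adds 1.4 percentage points of fan speed until the fans max out at 80C.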

As with other RTX 40-series cards, there's no way to increase the GPU voltage short of doing a voltage mod (not something we wanted to do), and that seems to be a limiting factor. GPU clocks did break the 3 GHz mark at times, and we'll have the overclocking results in our charts for reference.

Nvidia RTX 4070 Test Setup

We updated our GPU test PC at the end of last year with a Core i9-13900K, though we continue to also test reference GPUs on our 2022 system that includes a Core i9-12900K for our GPU benchmarks hierarchy. (We'll be updating that later today, once the embargo has passed.) For the RTX 4070, our review will focus on the 13900K performance, which ensures (as much as possible) that we're not CPU limited.

TOM'S HARDWARE INTEL 13TH GEN PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

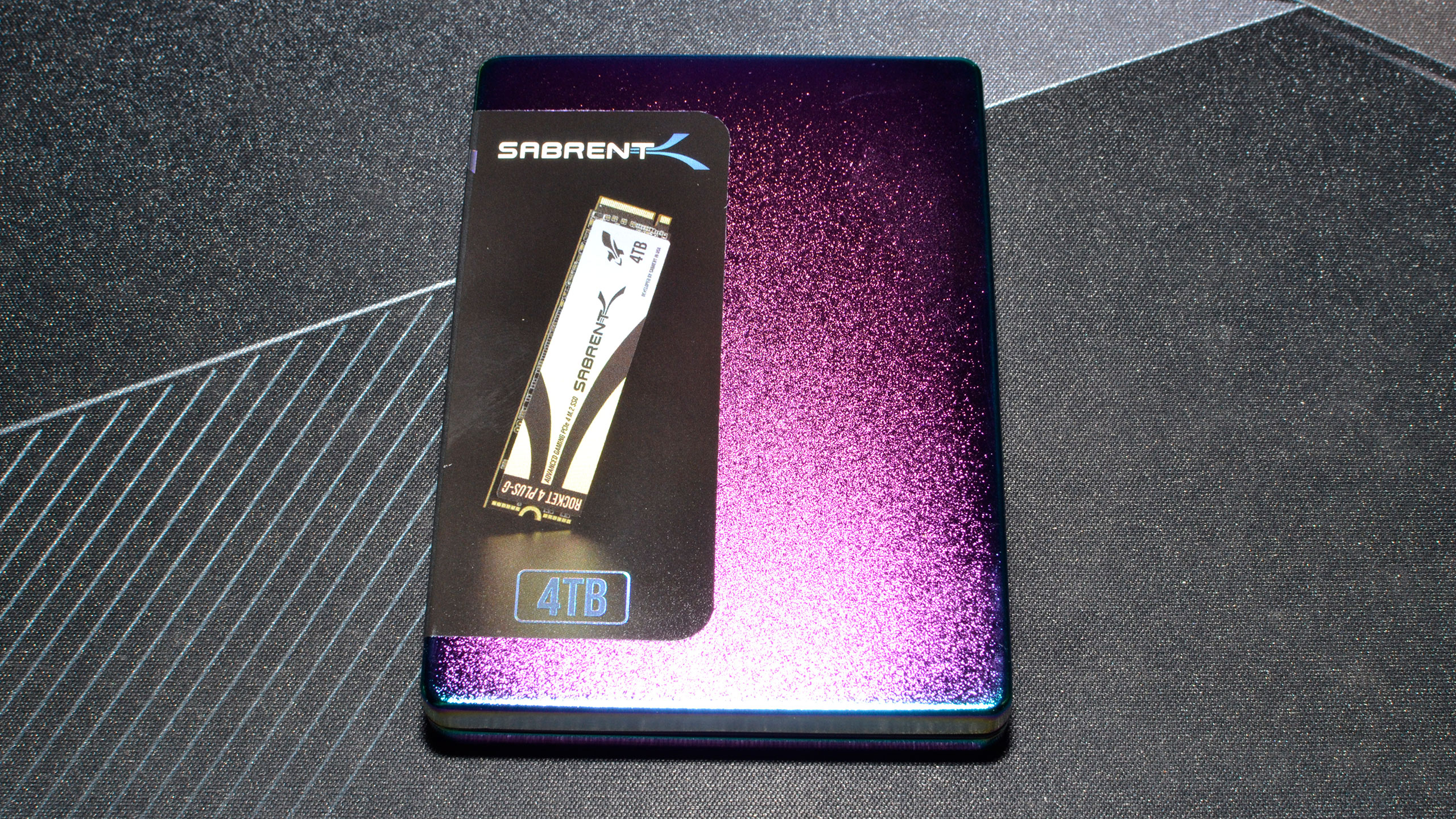

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

TOM'S HARDWARE 2022 PC

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Corsair H150i Elite Capellix

Cooler Master HAF500

Windows 11 Pro 64-bit

OTHER GRAPHICS CARDS

AMD RX 7900 XT

AMD RX 6950 XT

AMD RX 6900 XT

AMD RX 6800 XT

AMD RX 6800

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 3080 Ti

Nvidia RTX 3080 (10GB)

Nvidia RTX 3070 Ti

Nvidia RTX 3070

Multiple games have been updated over the past few months, so we retested all of the cards for this review (though some of the tests were done with the previous Nvidia drivers, as we've only had the review drivers for about a week). We're running Nvidia 531.41 and 531.42 drivers, and AMD 23.3.2. Our professional and AI workloads were also tested on the 12900K PC, since that allowed better multitasking on the part of our testing regimen.

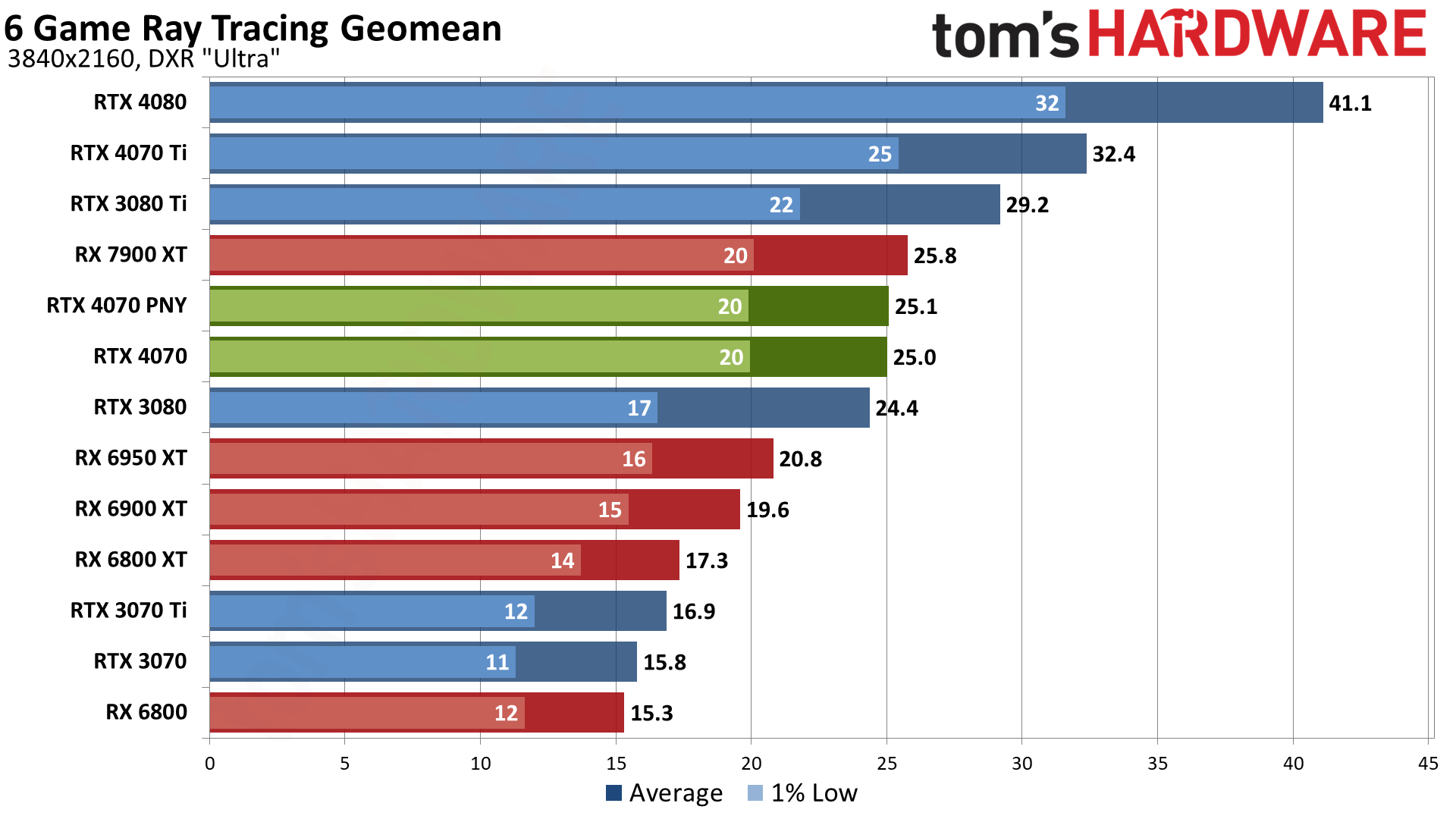

Our current test suite consists of 15 games. Of these, nine support DirectX Raytracing (DXR), but we only enable the DXR features in six of the games. At the time of testing, 12 of the games support DLSS 2, five support DLSS 3, and five support FSR 2. We'll cover performance with the various upscaling modes enabled in a separate gaming section.

We tested all of the GPUs at 4K, 1440p, and 1080p using "ultra" settings — basically the highest supported preset if there is one, and in some cases maxing out all the other settings for good measure (except for MSAA or super sampling). We also tested at 1080p "medium" to show what sort of higher FPS games can hit. Our PC is hooked up to a Samsung Odyssey Neo G8 32, one of the best gaming monitors around, just so we could fully experience some of the higher frame rates that might be available — G-Sync and FreeSync were enabled, as appropriate.

When we assembled the new test PC, we installed all of the then-latest Windows 11 updates. We're running Windows 11 22H2, but we've used InControl to lock our test PC to that major release for the foreseeable future (security updates still get installed on occasion).

Our new test PC includes Nvidia's PCAT v2 (Power Capture and Analysis Tool) hardware, which means we can grab real power use, GPU clocks, and more during all of our gaming benchmarks. We'll cover those results in our page on power use.

Finally, because GPUs aren't purely for gaming these days, we've run some professional application tests, and we also ran some Stable Diffusion benchmarks to see how AI workloads scale on the various GPUs.

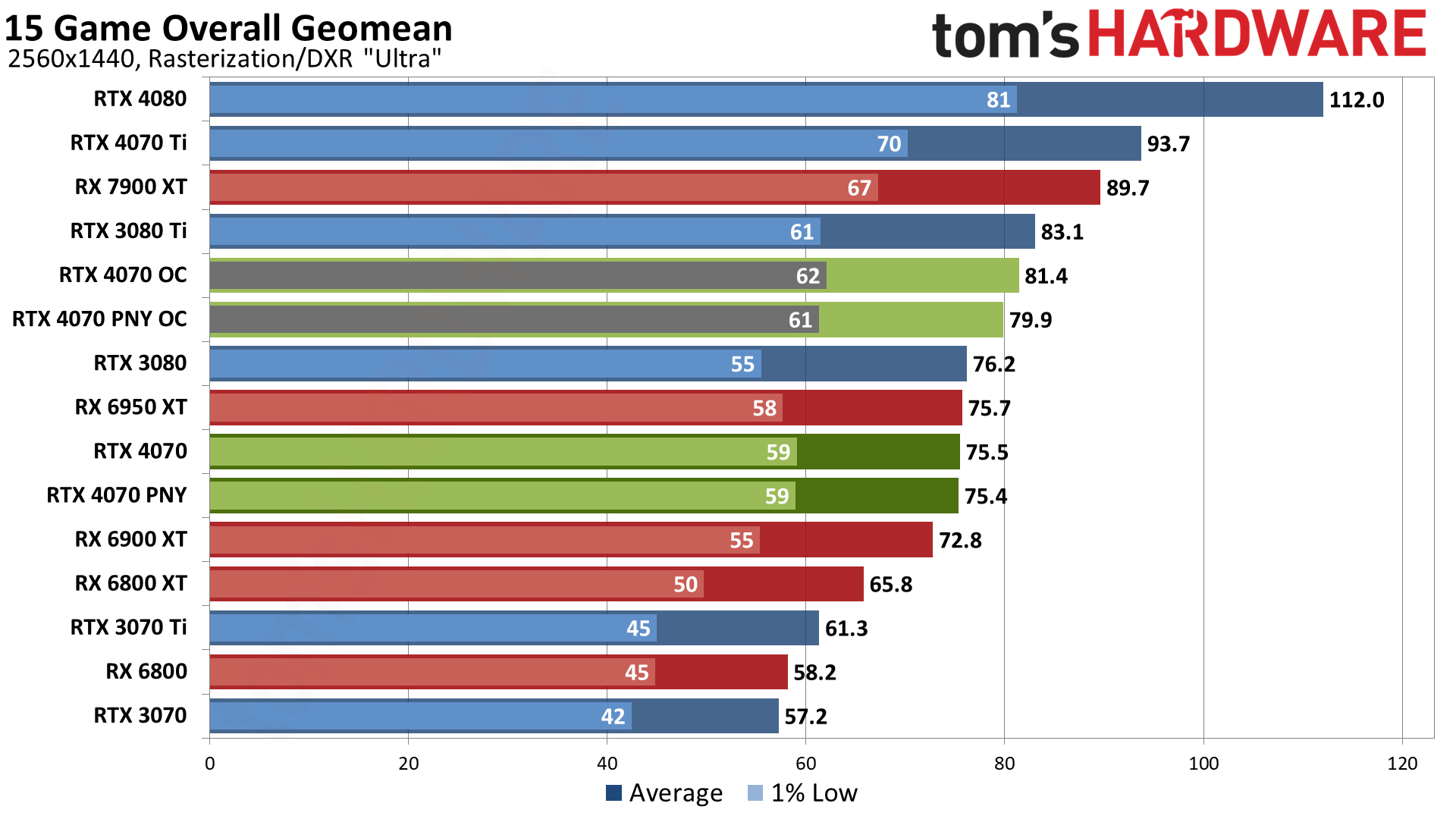

The RTX 4070 is either the bottom of the high-end GPU range, or the top of the mainstream segment, depending on how you want to classify things. We're going to start with 1440p gaming, as that makes the most sense for this level of hardware. Then we'll move on to the 1080p results and finish up with 4K testing. We'll have a section on upscaled 1440p and 4K gaming as well, separate from the main benchmarks.

We're going to break things down a bit differently this time as well. We have a global geometric mean of performance across all 15 games that we tested, including both the ray tracing and rasterization suites. Then we've got separate charts for only the rasterization and ray tracing suites, plus charts for the individual games. If you don't like the "overall performance" chart, the other two are the same view that we've previously presented.
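
If you're wondering why we use a geometric mean rather than a simple average, the short Python sketch below shows the difference. The game names and frame rates are made-up placeholders, not our benchmark results.

```python
from statistics import geometric_mean, mean

# Hypothetical per-game average fps for a single GPU (illustrative only).
sample_fps = {
    "Game A": 50.0,
    "Game B": 200.0,
    "Game C": 100.0,
}

values = list(sample_fps.values())
overall_geo = geometric_mean(values)  # nth root of the product of n values
overall_avg = mean(values)            # plain arithmetic average

print(f"Geometric mean:  {overall_geo:.1f} fps")
print(f"Arithmetic mean: {overall_avg:.1f} fps")
```

The arithmetic average gets pulled upward by the one very high frame rate, while the geometric mean gives each game equal weight in relative terms, so a 10% gain in a 40 fps game counts the same as a 10% gain in a 200 fps game.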

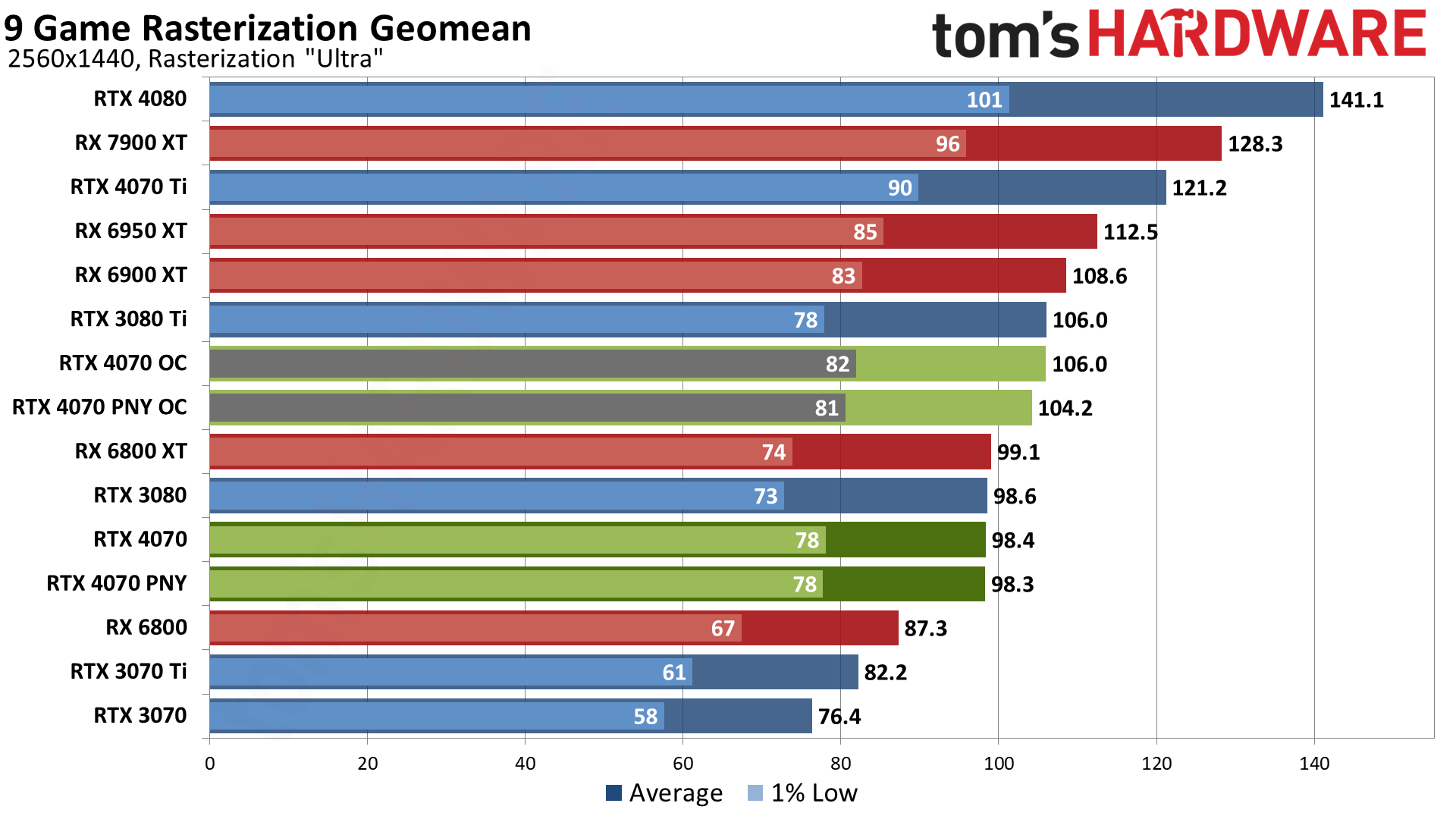

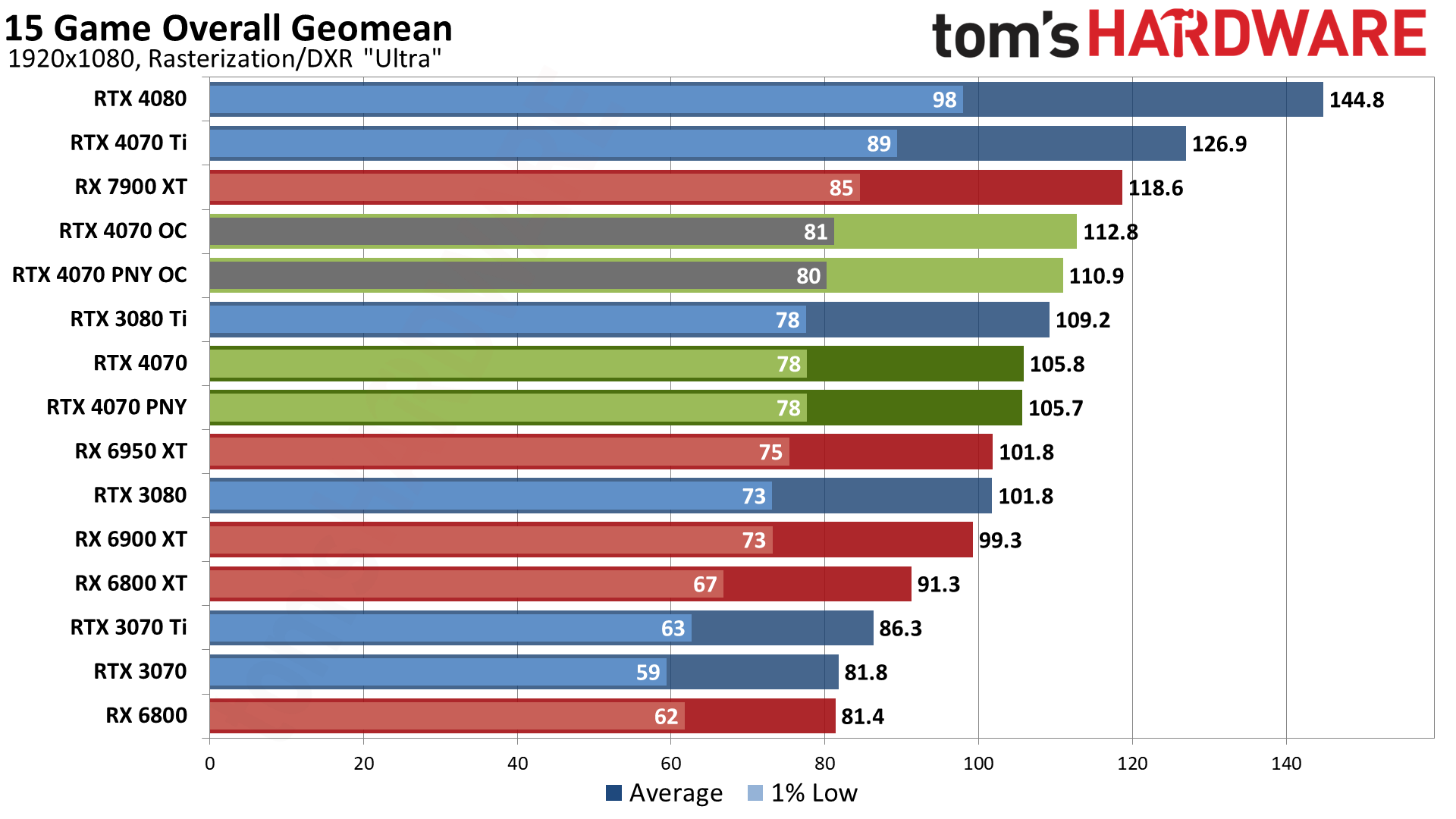

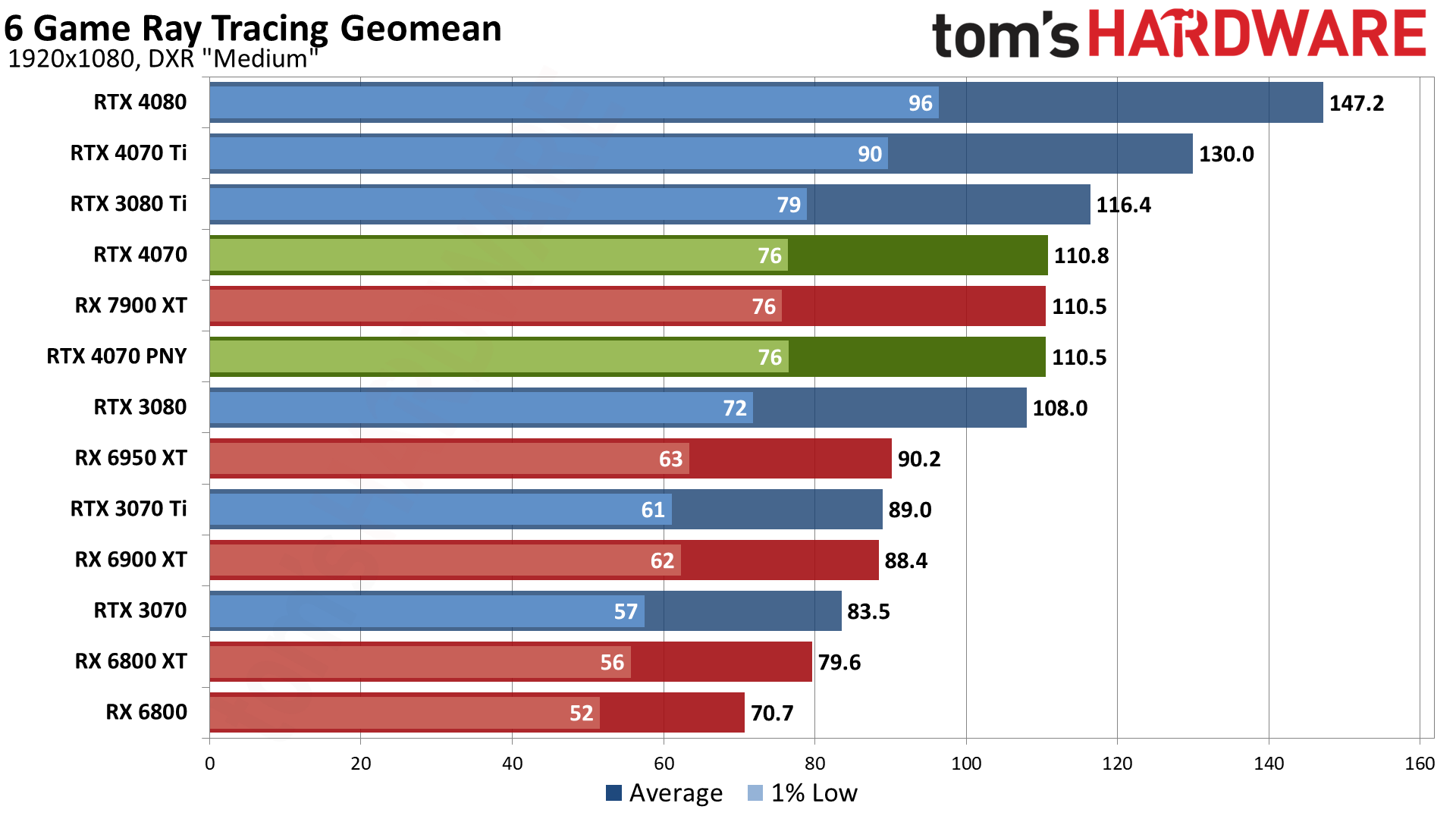

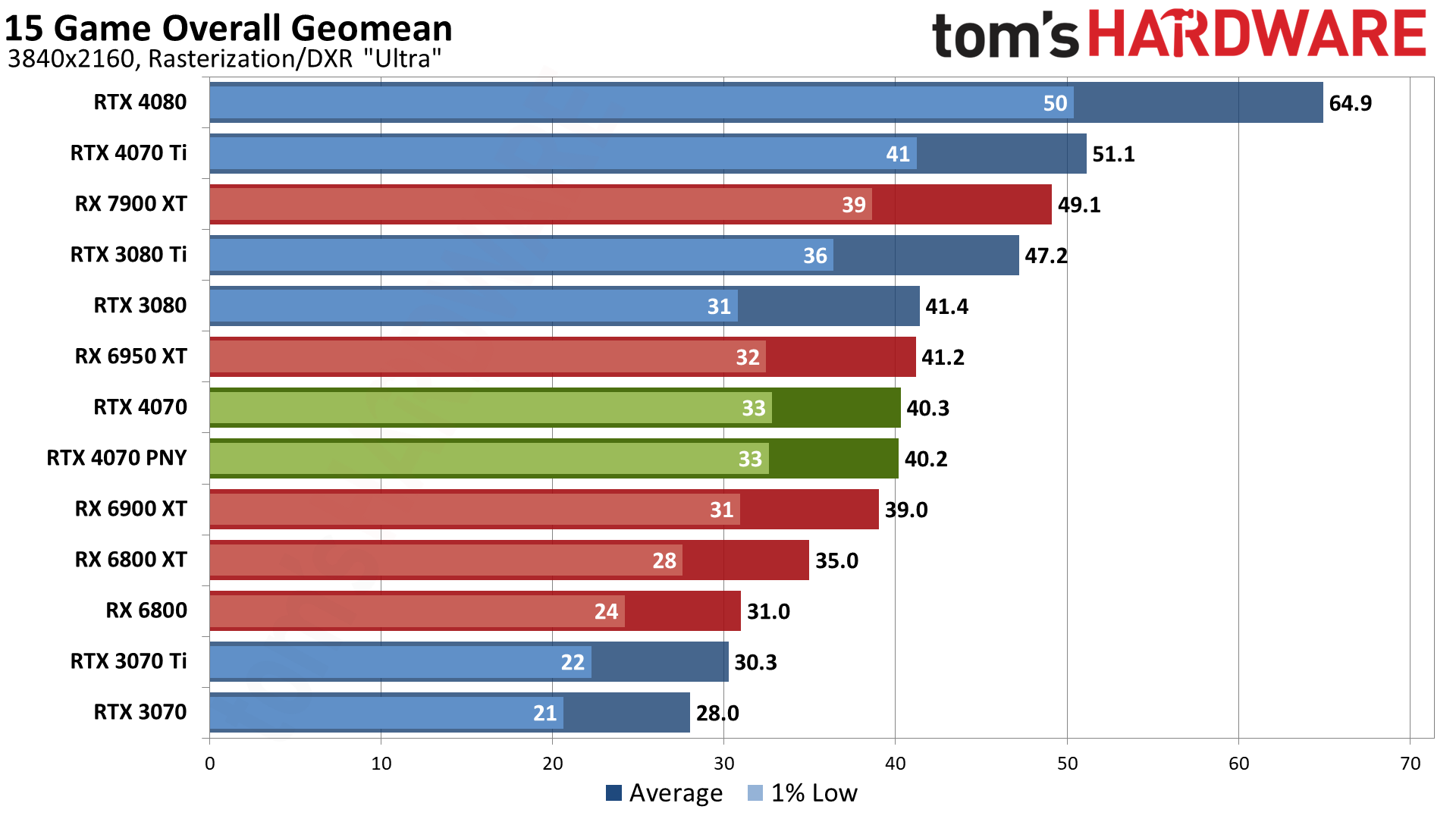

Our first look at performance gives a good overview of our general impressions of the RTX 4070. Factoring in both ray tracing and rasterization games — but not including upscaling technologies — the new GPU ends up roughly on par with the previous generation RTX 3080, and it also matches AMD's previous generation RX 6950 XT.

Some might take exception to our ranking, but like it or not, the use of ray tracing in games is becoming increasingly common. It's in most major releases these days, including Hogwarts Legacy and Spider-Man: Miles Morales, and you can even push things to their ray traced limits with stuff like Cyberpunk 2077's RT Overdrive mode that skips hybrid rendering and implements a fully path traced lighting model. (Yeah, you'll want an RTX 4090 for that, and no, we didn't use that mode for our tests since it just became public yesterday.)

Even without upscaling, overall performance on the RTX 4070 averages 75 fps, which is definitely playable. There are games where it comes up well short of 60 fps as well as games where it's well over 100 fps, but 1440p gaming at close to maximum settings is definitely within reach.

Overclocking of the Founders Edition and PNY cards provided another 6–8 percent performance, so not a lot and not even close to enough to close the gap between the 4070 and the 4070 Ti. Nvidia continues to build in relatively large performance gaps between its 40-series GPUs, though we'll have to wait and see where future parts like the 4060 and 4050 eventually land.

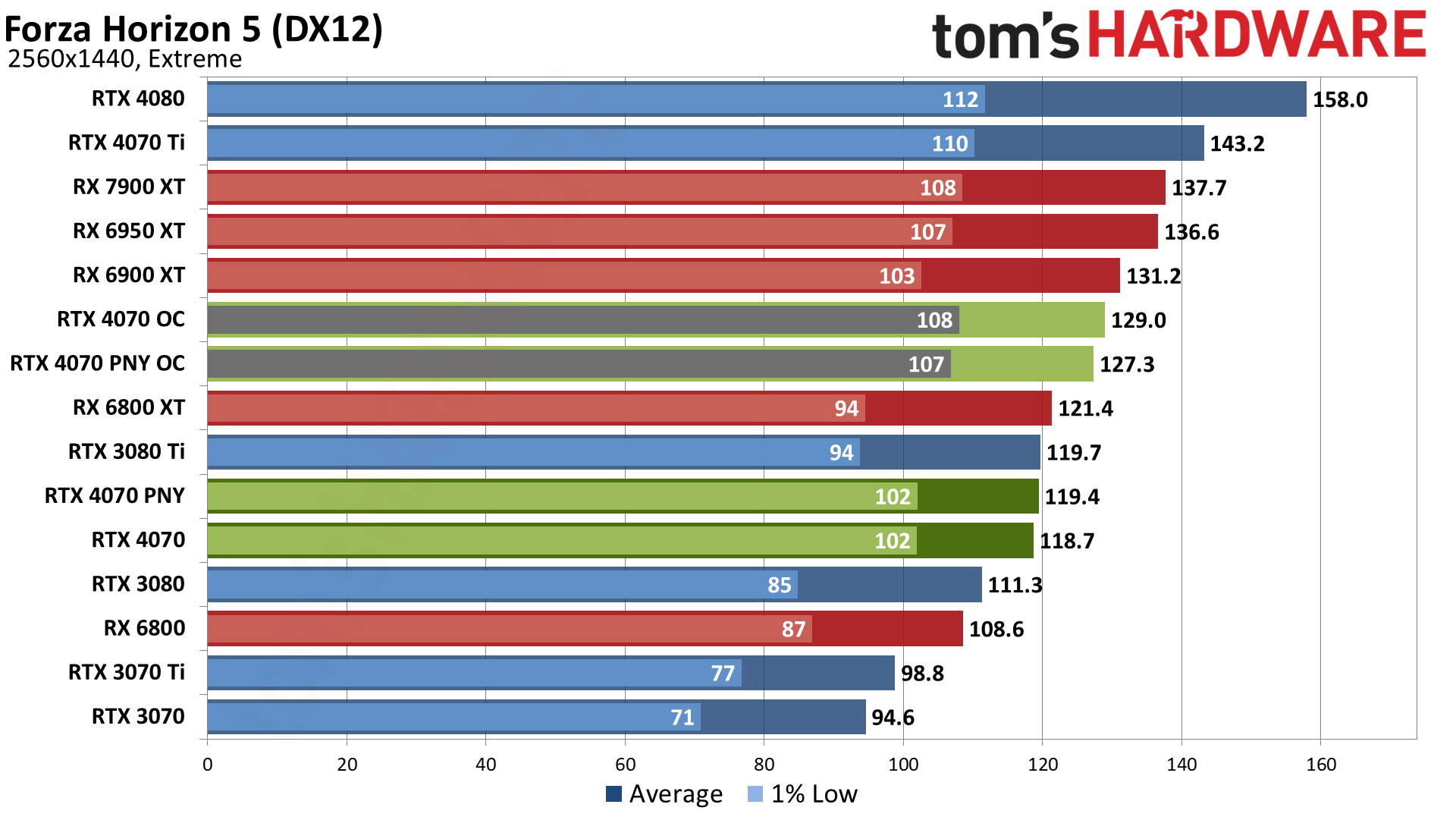

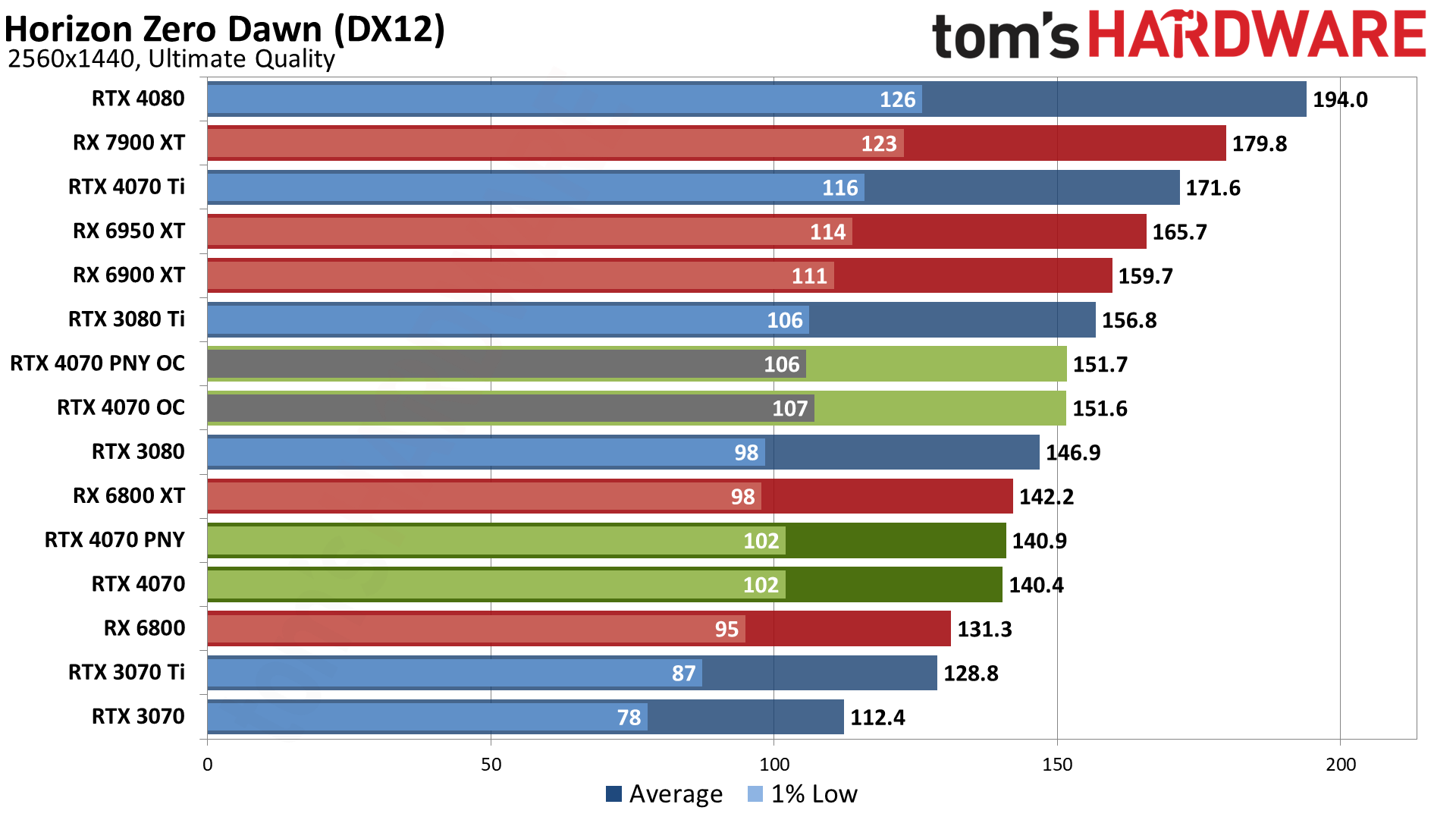

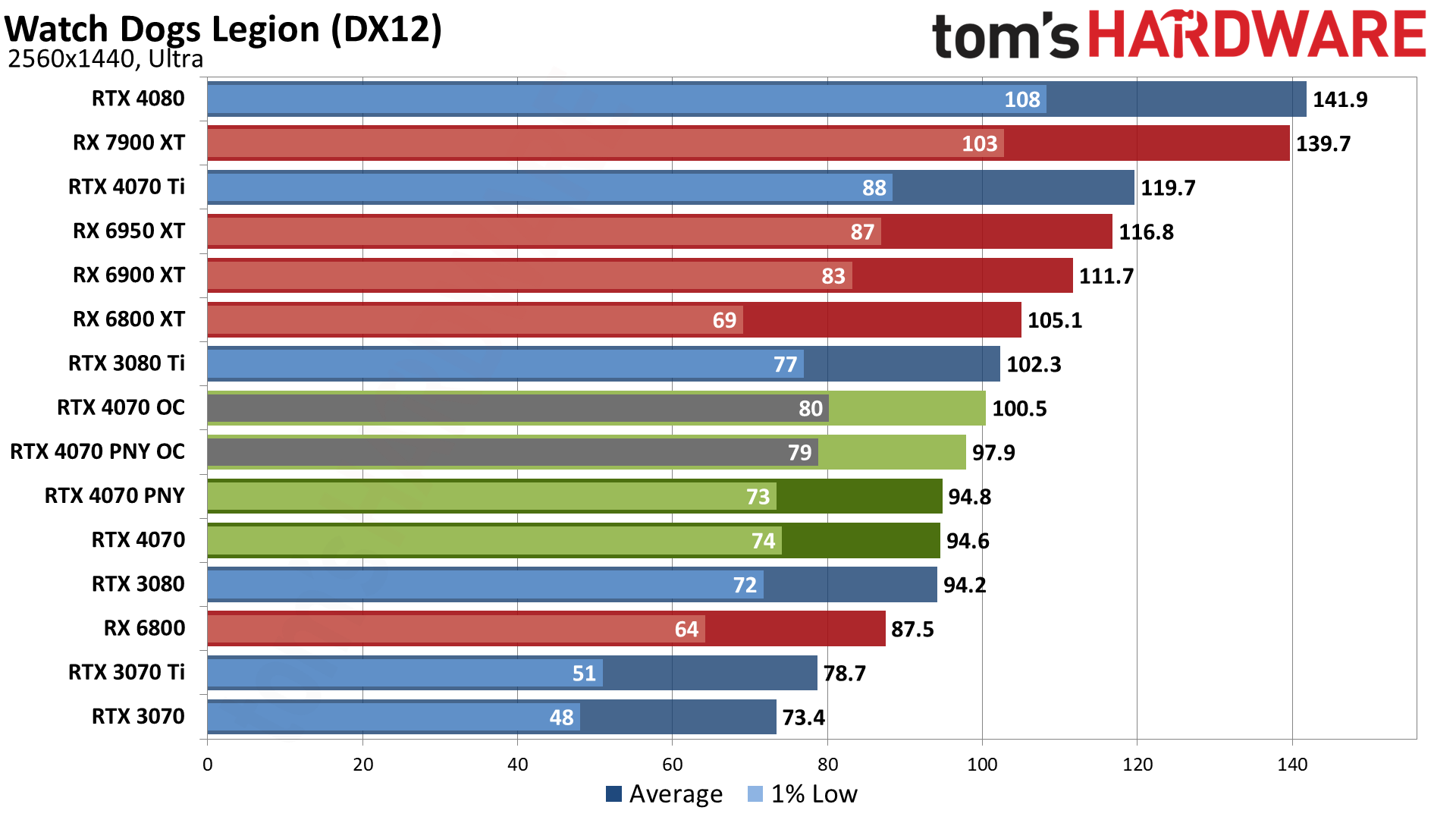

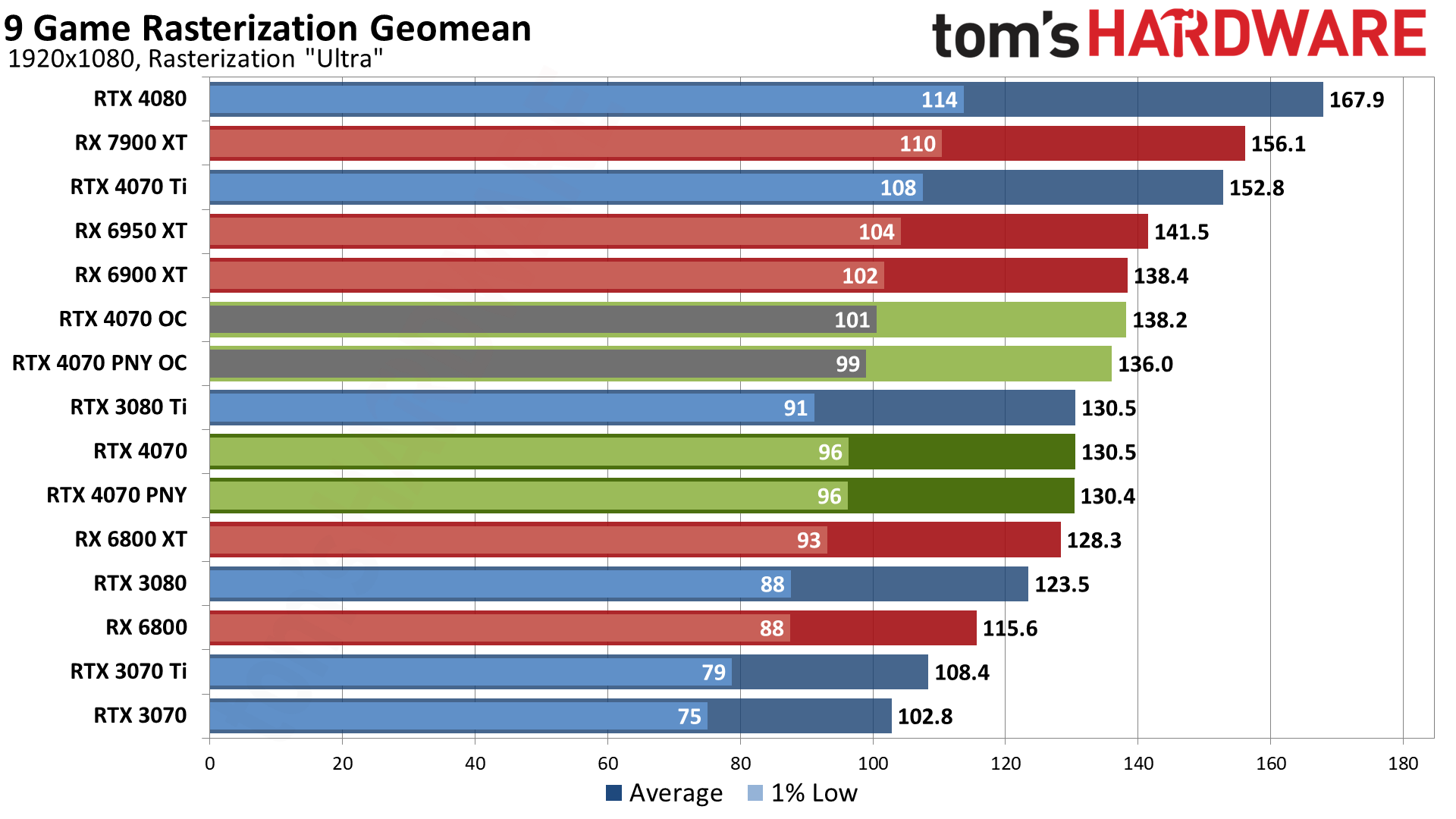

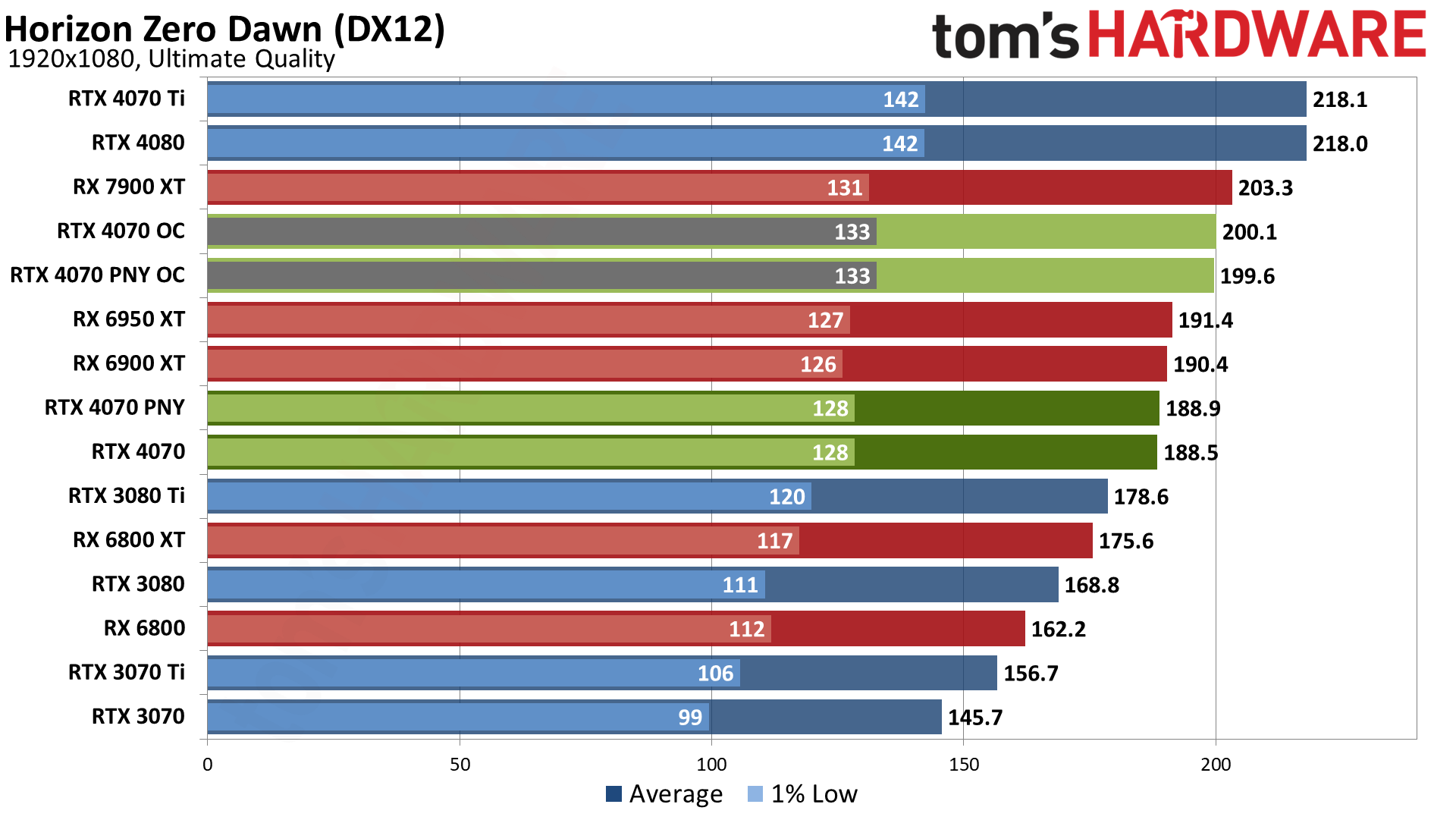

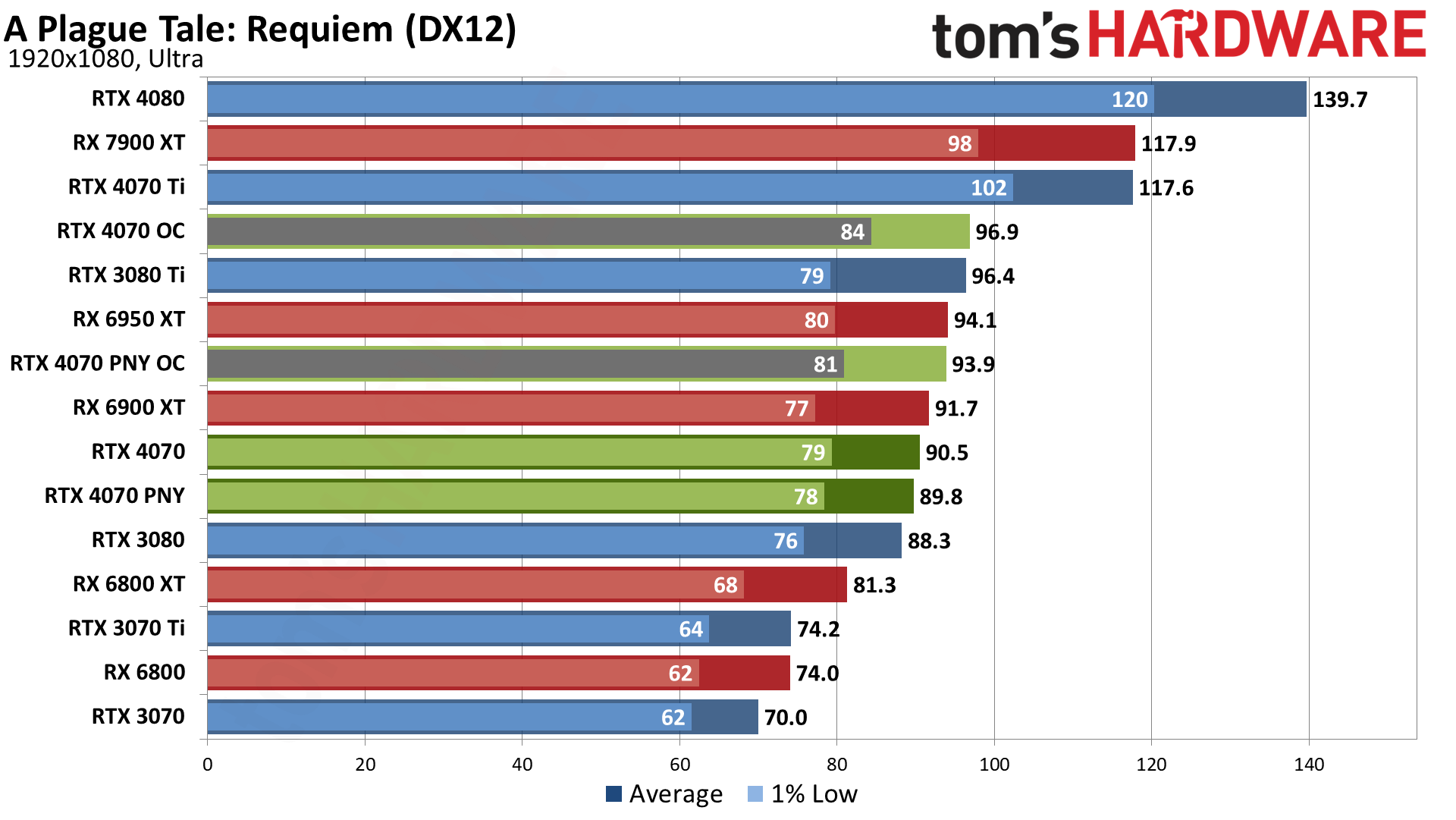

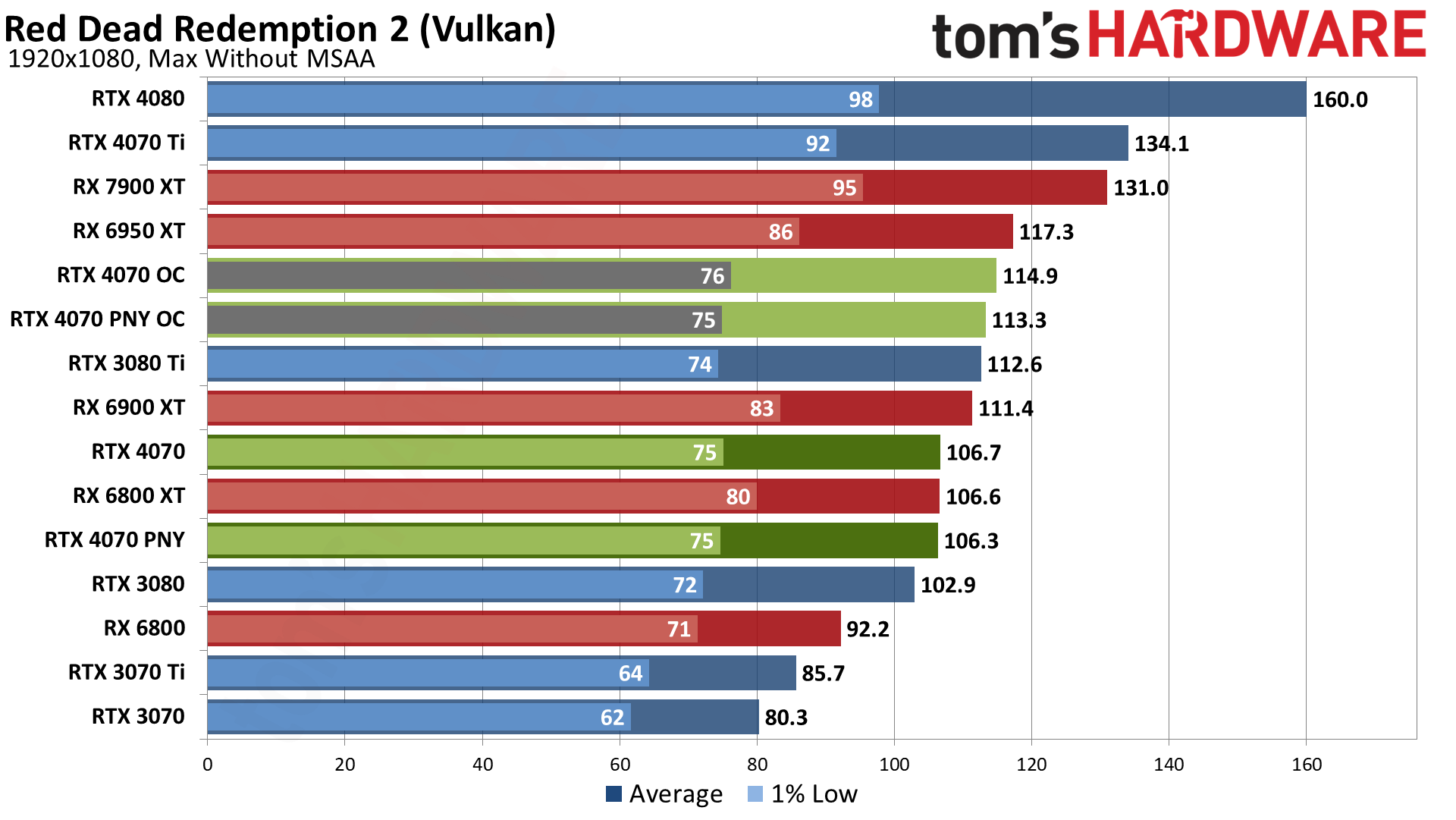

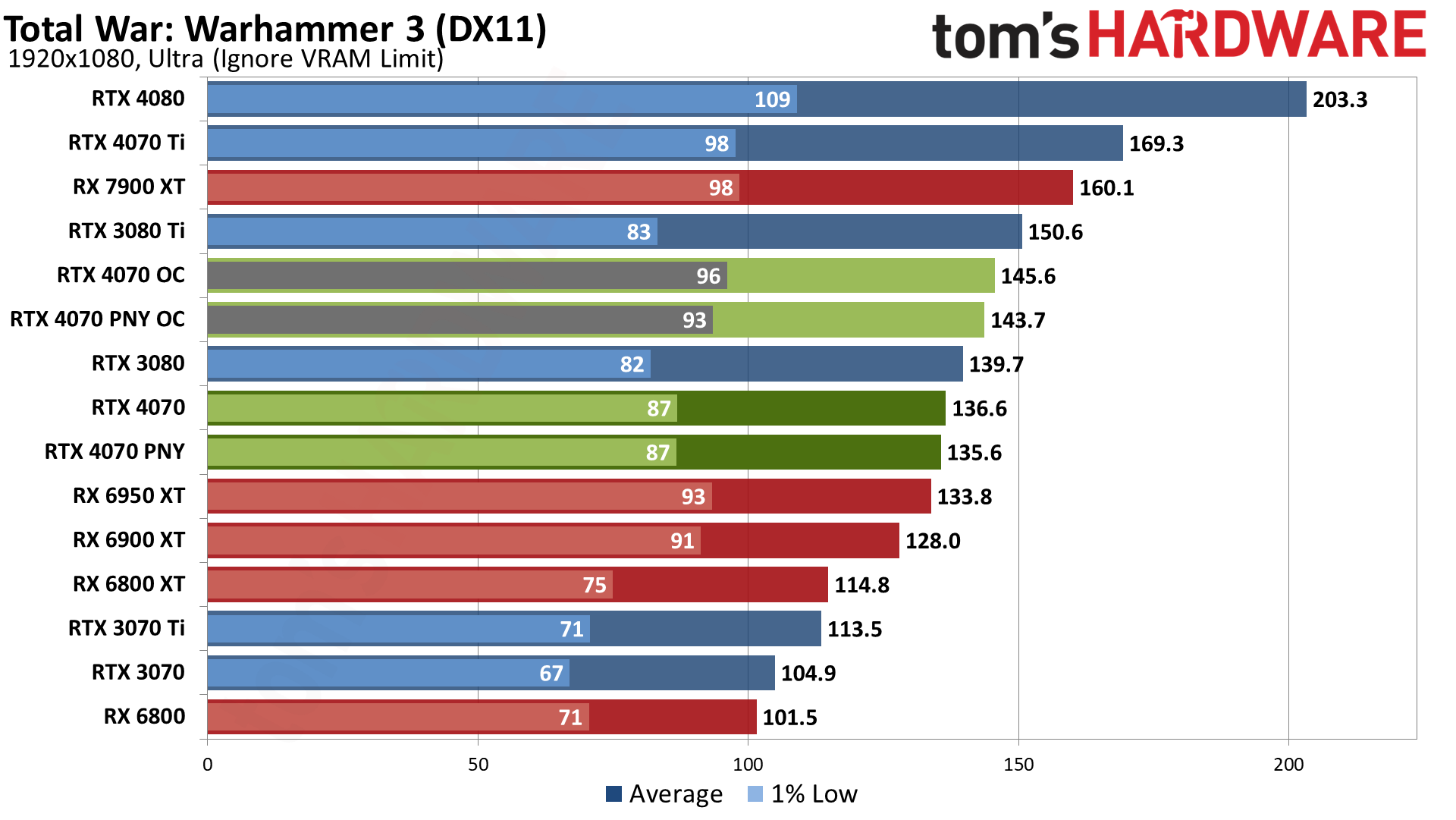

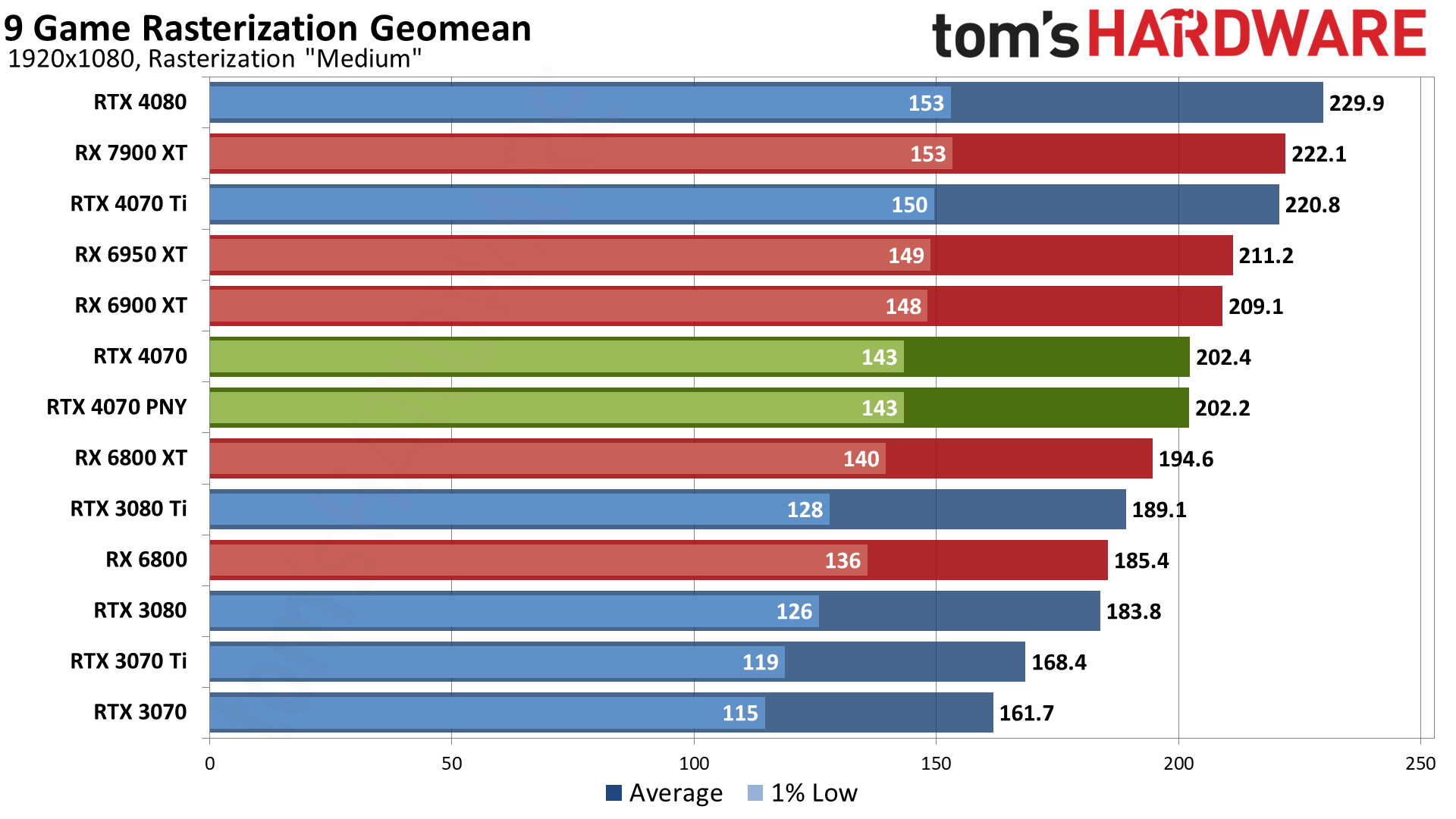

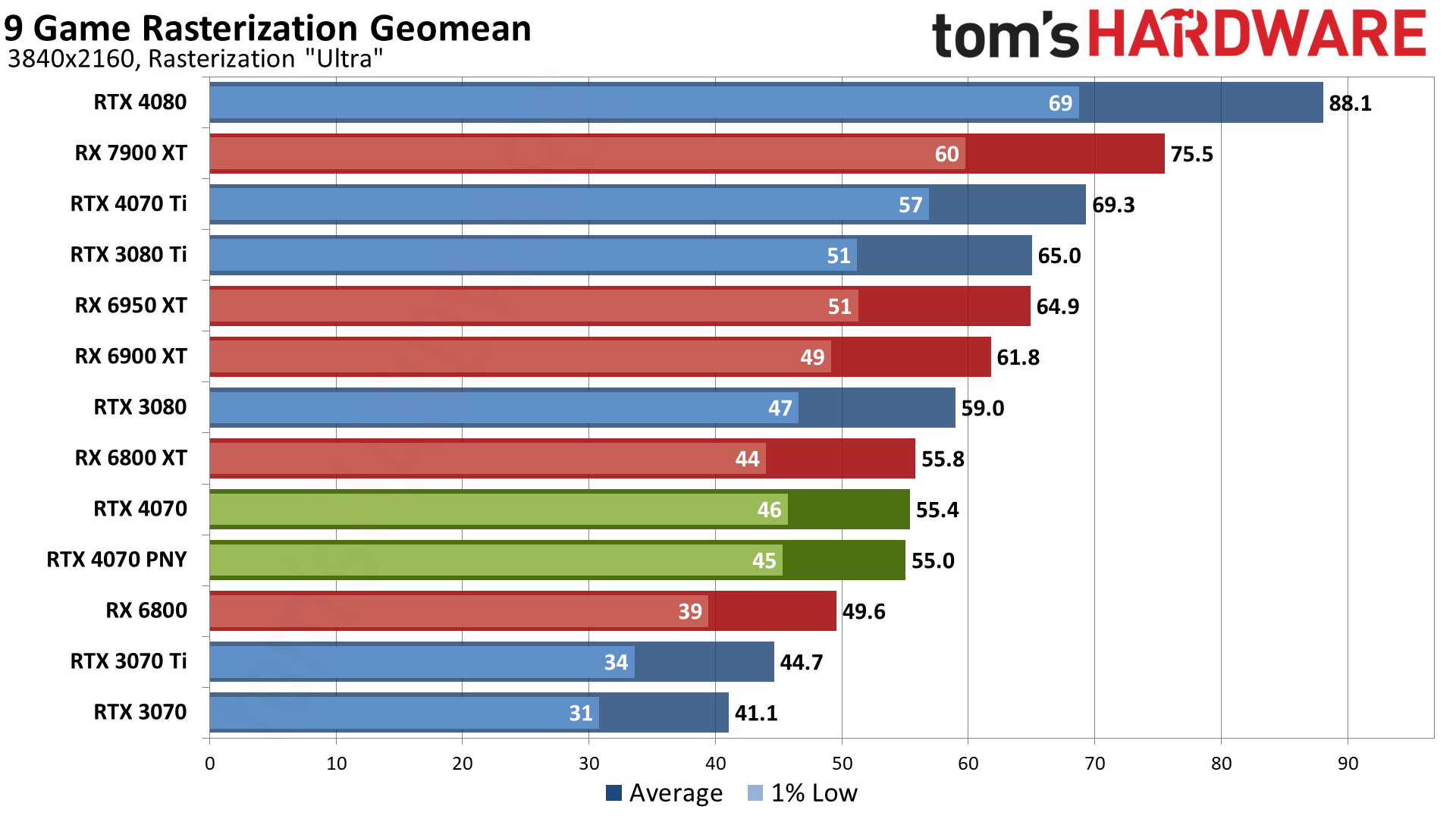

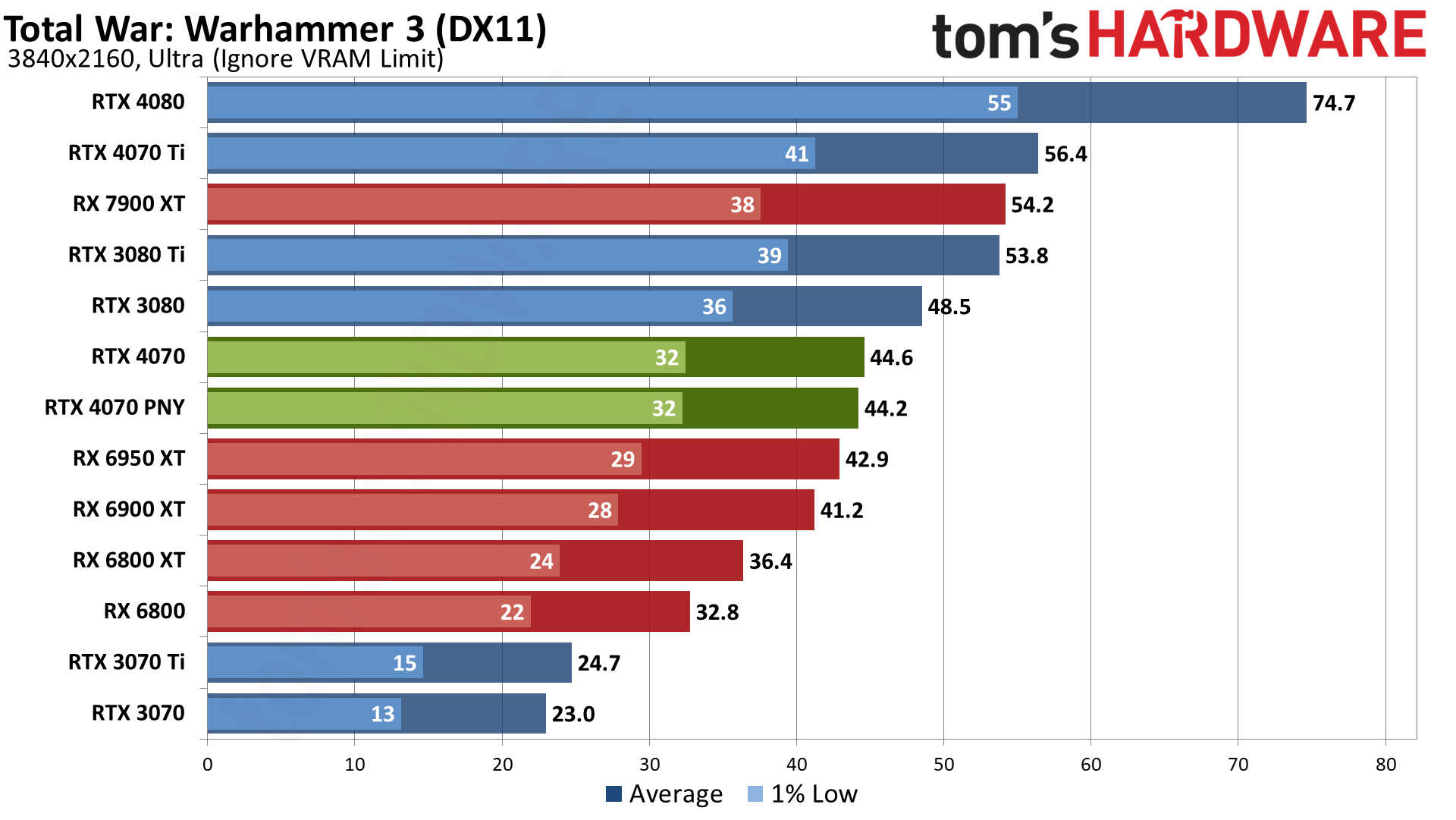

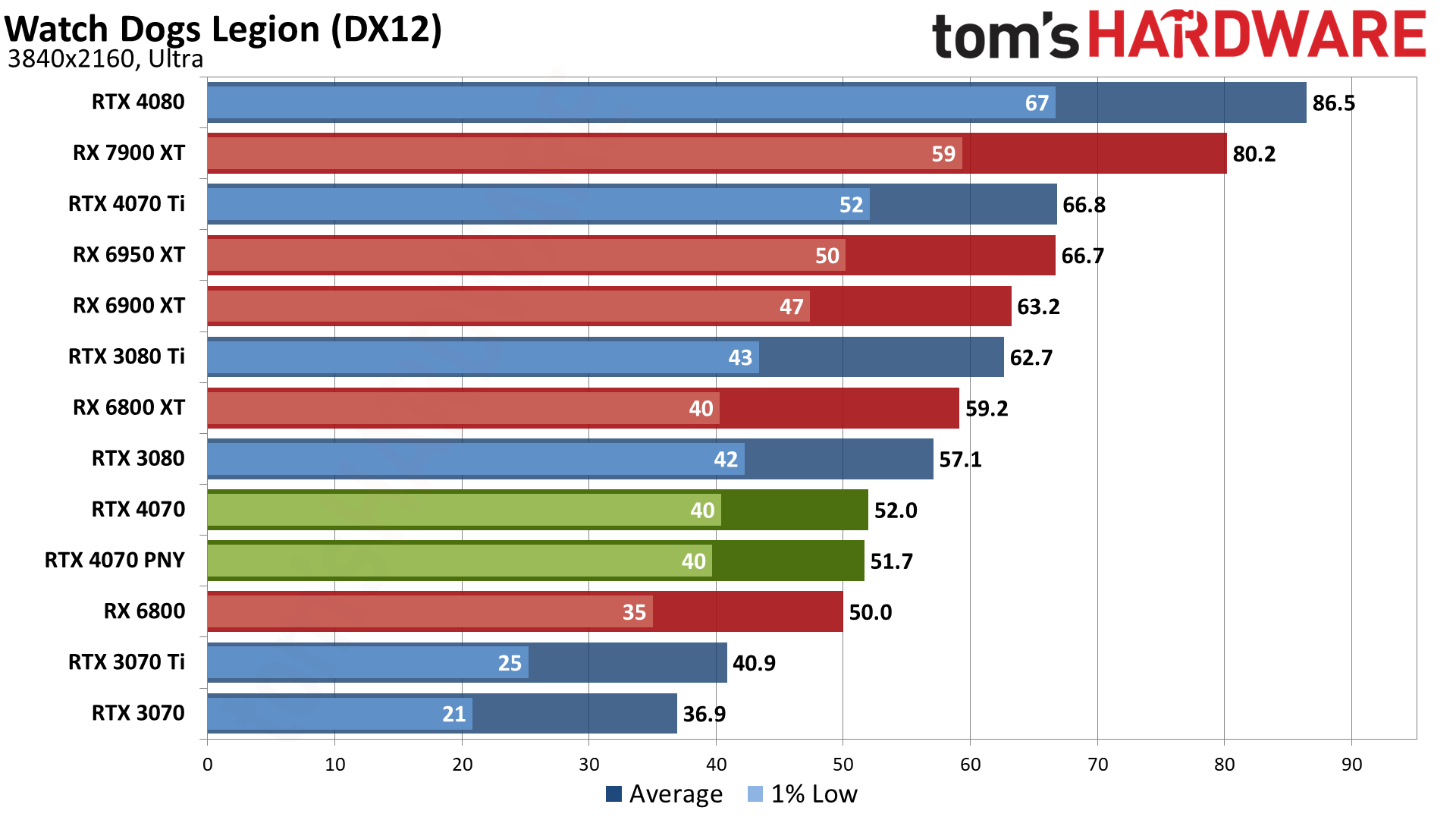

Turning to our traditional rasterization test suite, AMD's GPUs move up the charts quite a bit, but the story for the RTX 4070 remains mostly unchanged: It's effectively a modern equivalent of the RTX 3080 that launched in late 2020, with 20% more memory and a power rating that's less than two-thirds of its predecessor.

Other points of interest: The RTX 4070 delivers nearly 30% more performance than its previous generation namesake, the RTX 3070 — while still using less power. It's also 20% faster than the 3070 Ti, but the 4070 Ti delivers 20% higher performance than the vanilla 4070.

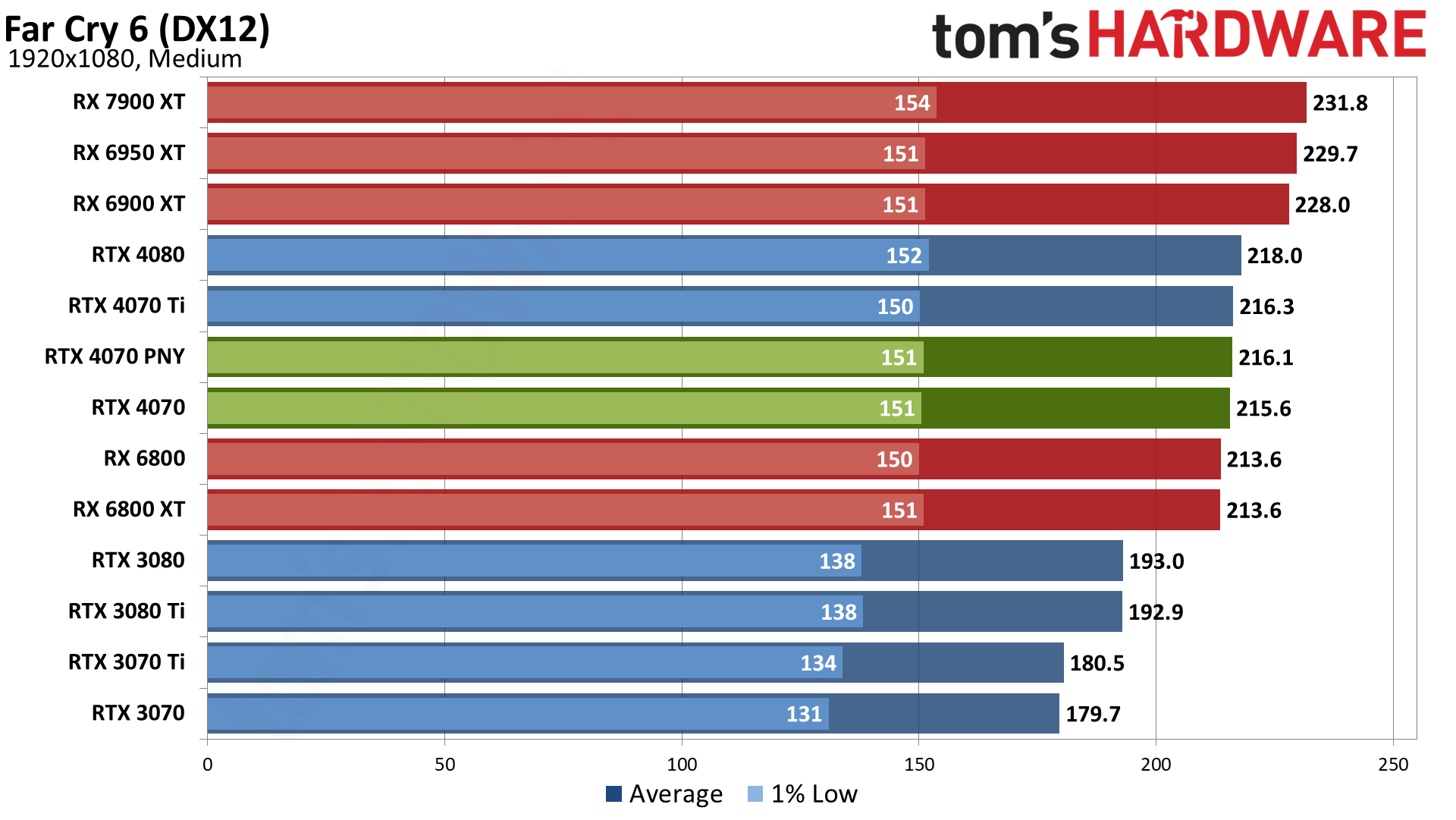

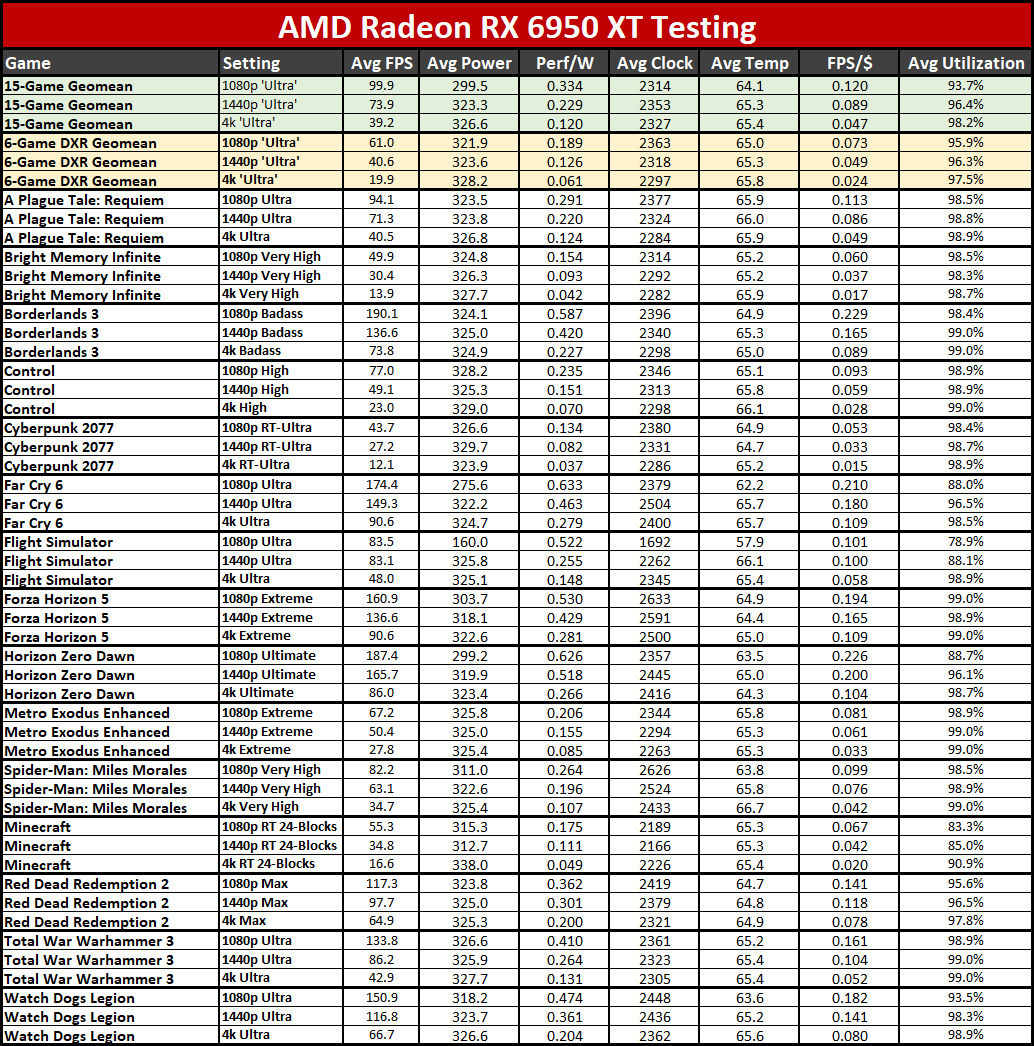

AMD would like potential buyers of the RTX 4070 to consider its RX 69xx and 6800 class GPUs as an alternative, and that's mostly a fair point. If you're not playing ray tracing games, the RX 6950 XT easily delivers better native resolution gaming than the RTX 4070 — it's 14% faster, though that's on a factory overclocked 6950 XT. If we factor in our maximum overclock, the margin shrinks to about 6%.
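
Here's a quick Python sketch of how an overclock eats into that lead. It assumes the RTX 4070 gains somewhere in the 6–8 percent range from overclocking, which is the figure we quoted for overall performance, so the rasterization-only number may differ slightly.

```python
def remaining_lead(stock_lead_pct: float, oc_gain_pct: float) -> float:
    """Lead of card A over card B (in percent) after card B gains oc_gain_pct."""
    card_a = 1 + stock_lead_pct / 100  # card A relative to stock card B
    card_b = 1 + oc_gain_pct / 100     # overclocked card B relative to stock
    return (card_a / card_b - 1) * 100


# RX 6950 XT leads the stock RTX 4070 by 14% in rasterization.
for gain in (6, 7, 8):
    lead = remaining_lead(14, gain)
    print(f"RTX 4070 overclock of +{gain}% leaves a {lead:.1f}% lead for the RX 6950 XT")
```

Note that the percentages don't simply subtract: the leads are ratios, so you divide one by the other.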

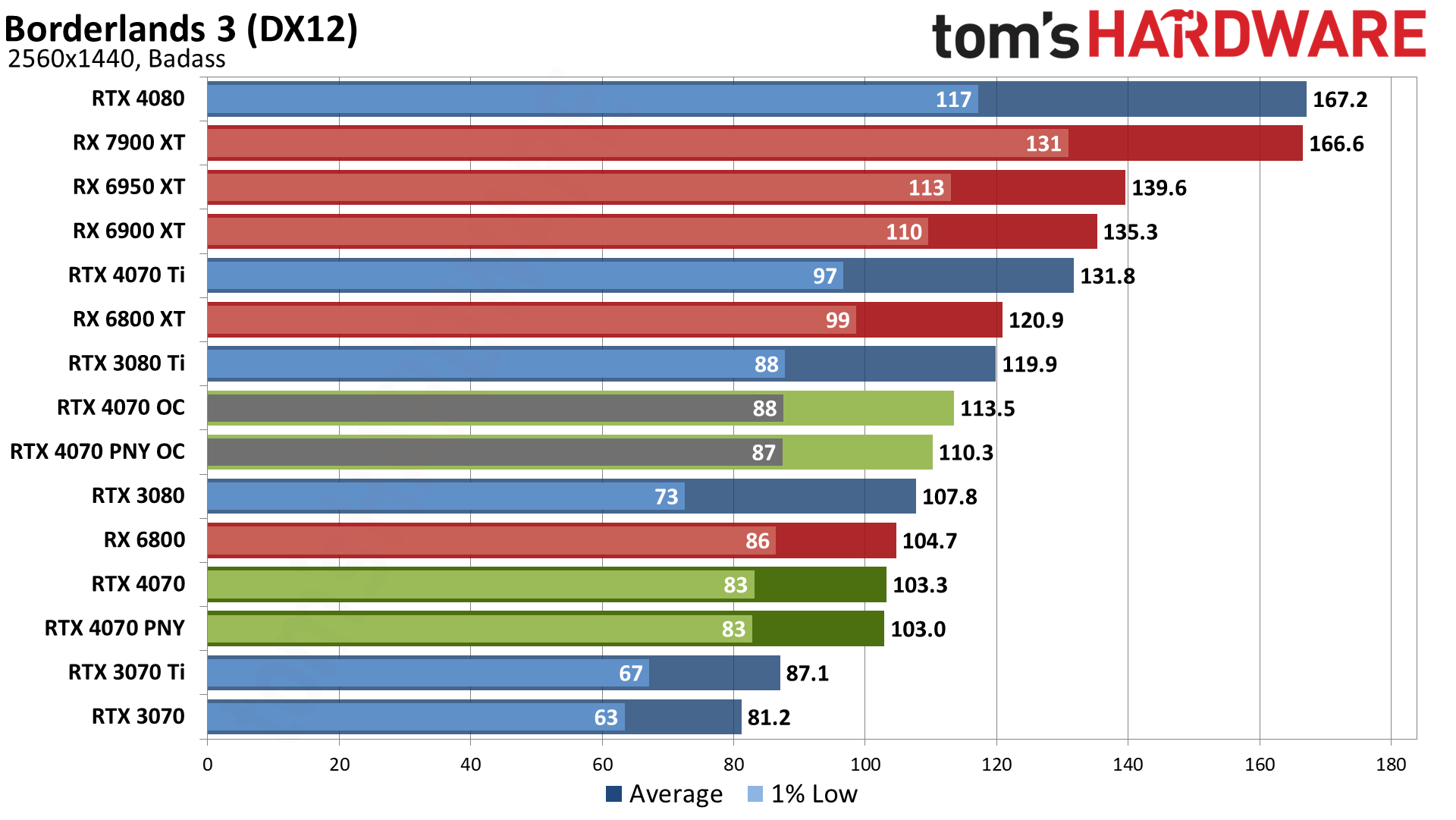

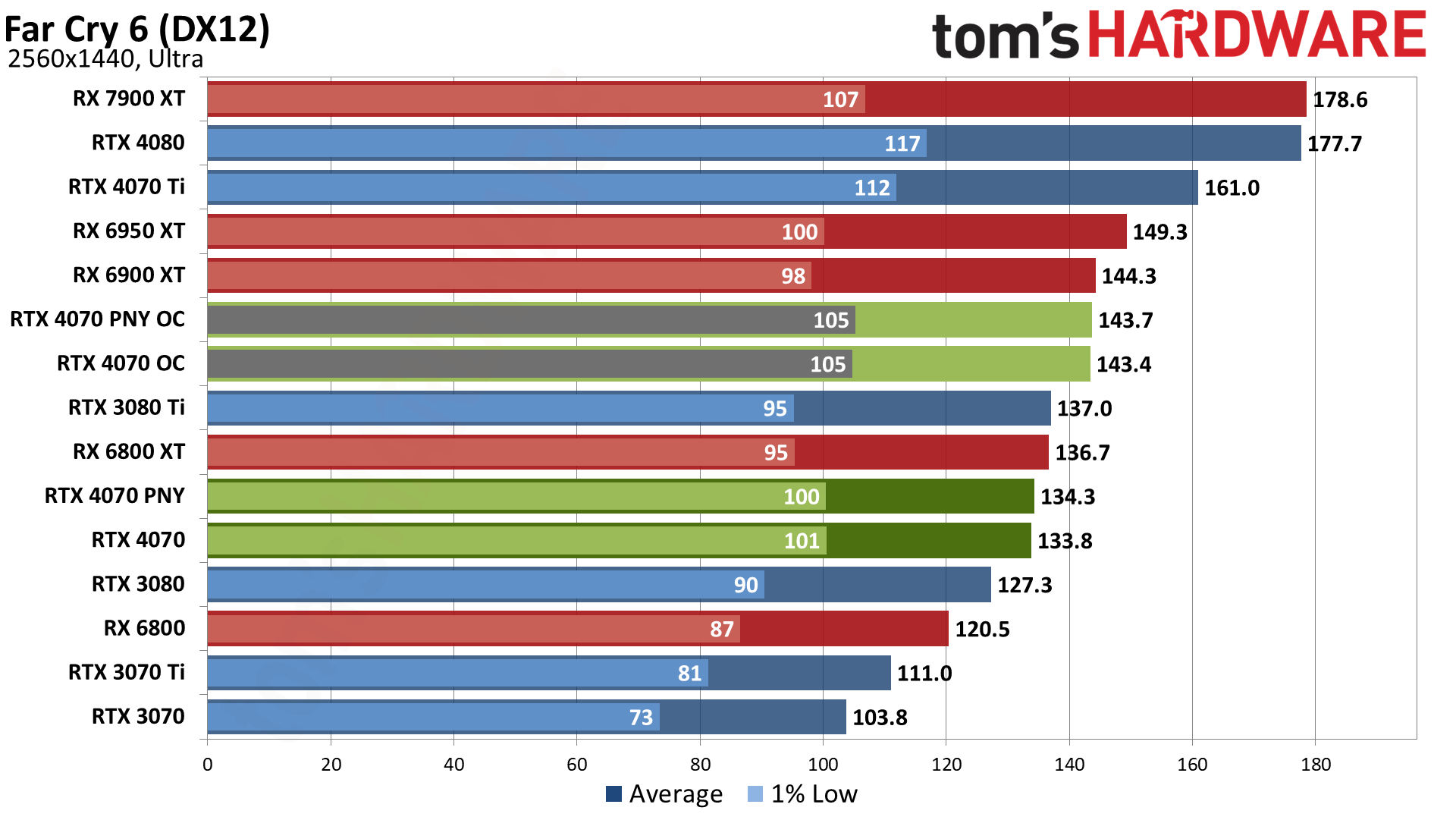

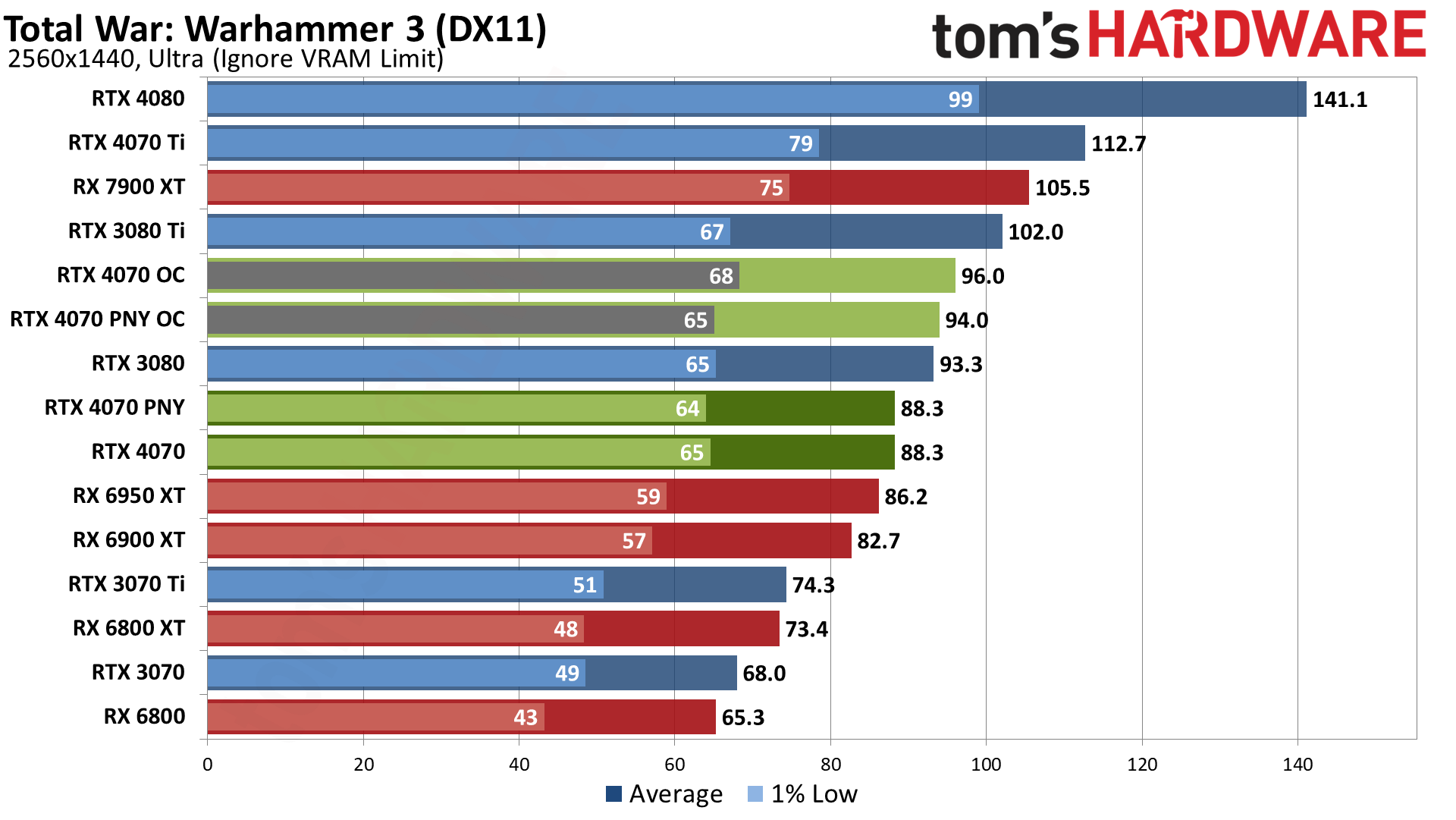

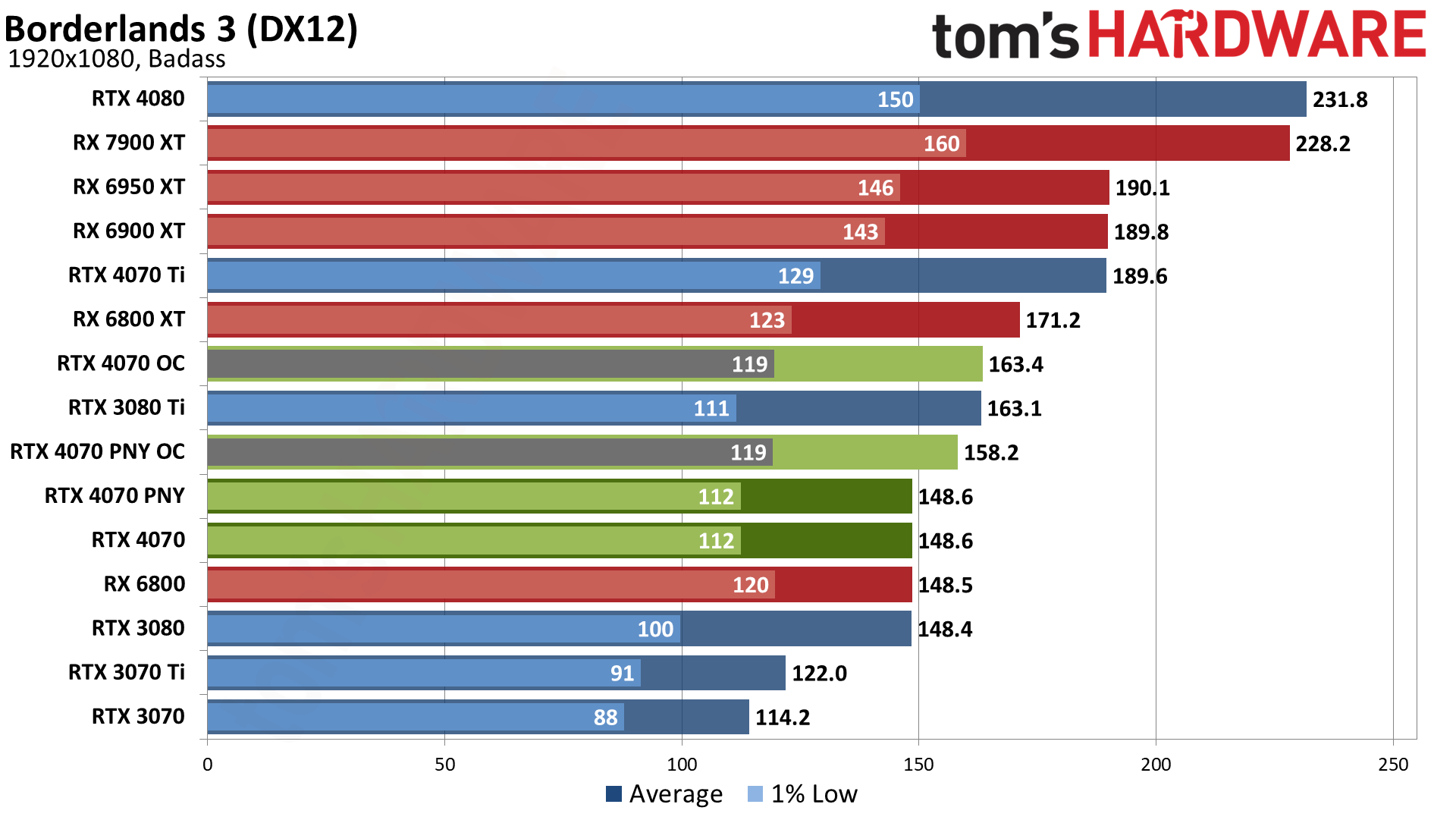

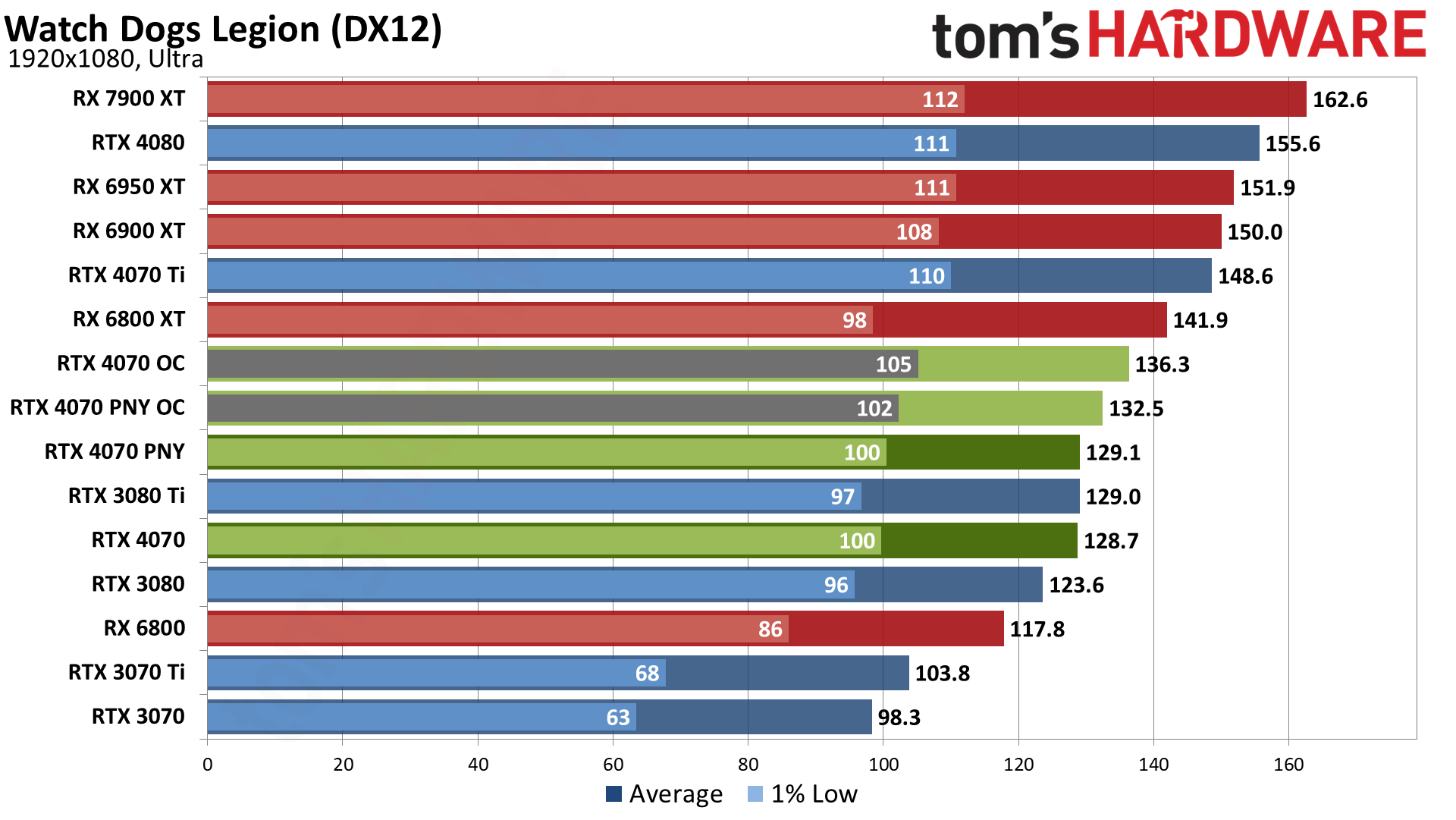

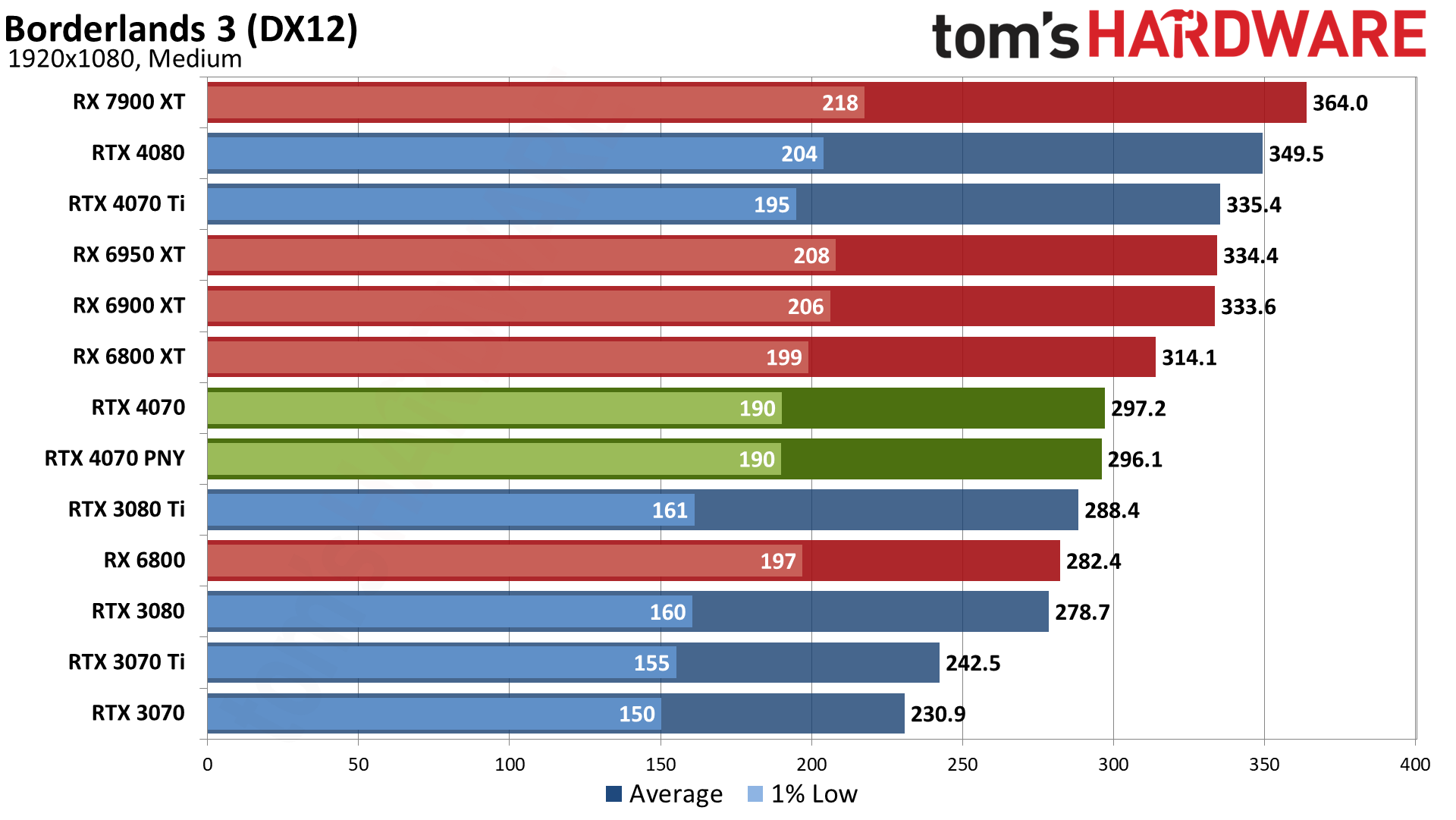

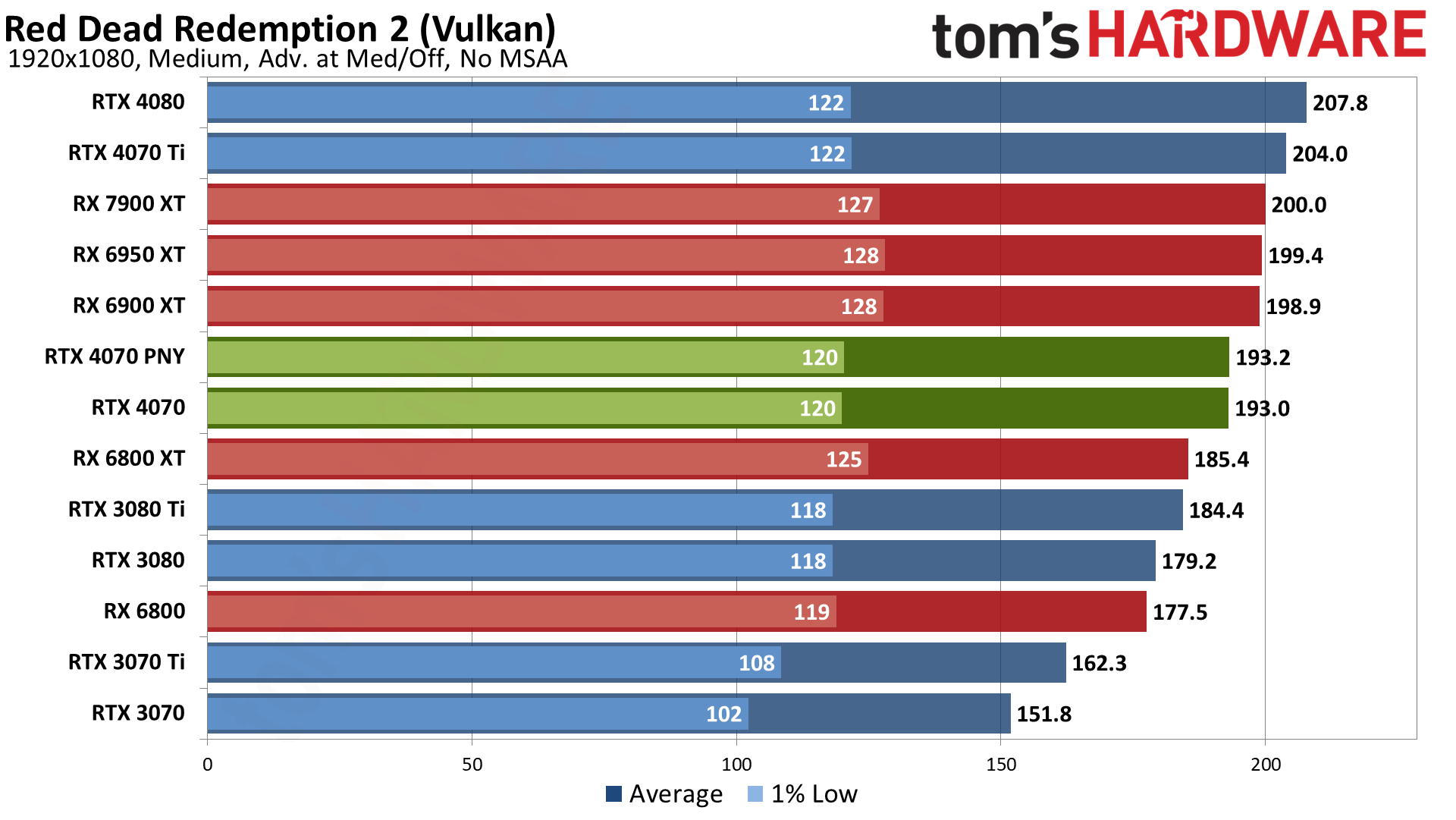

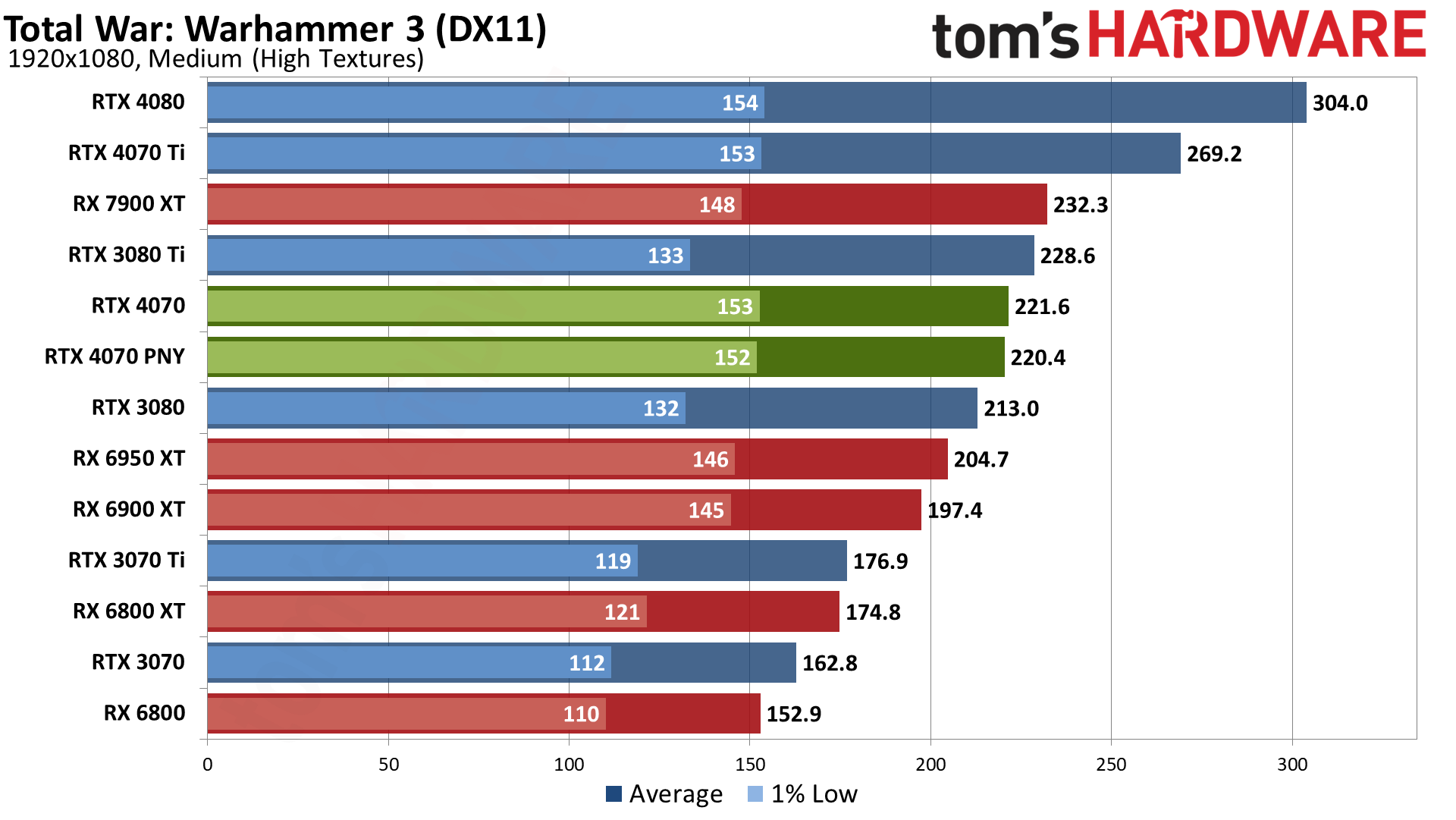

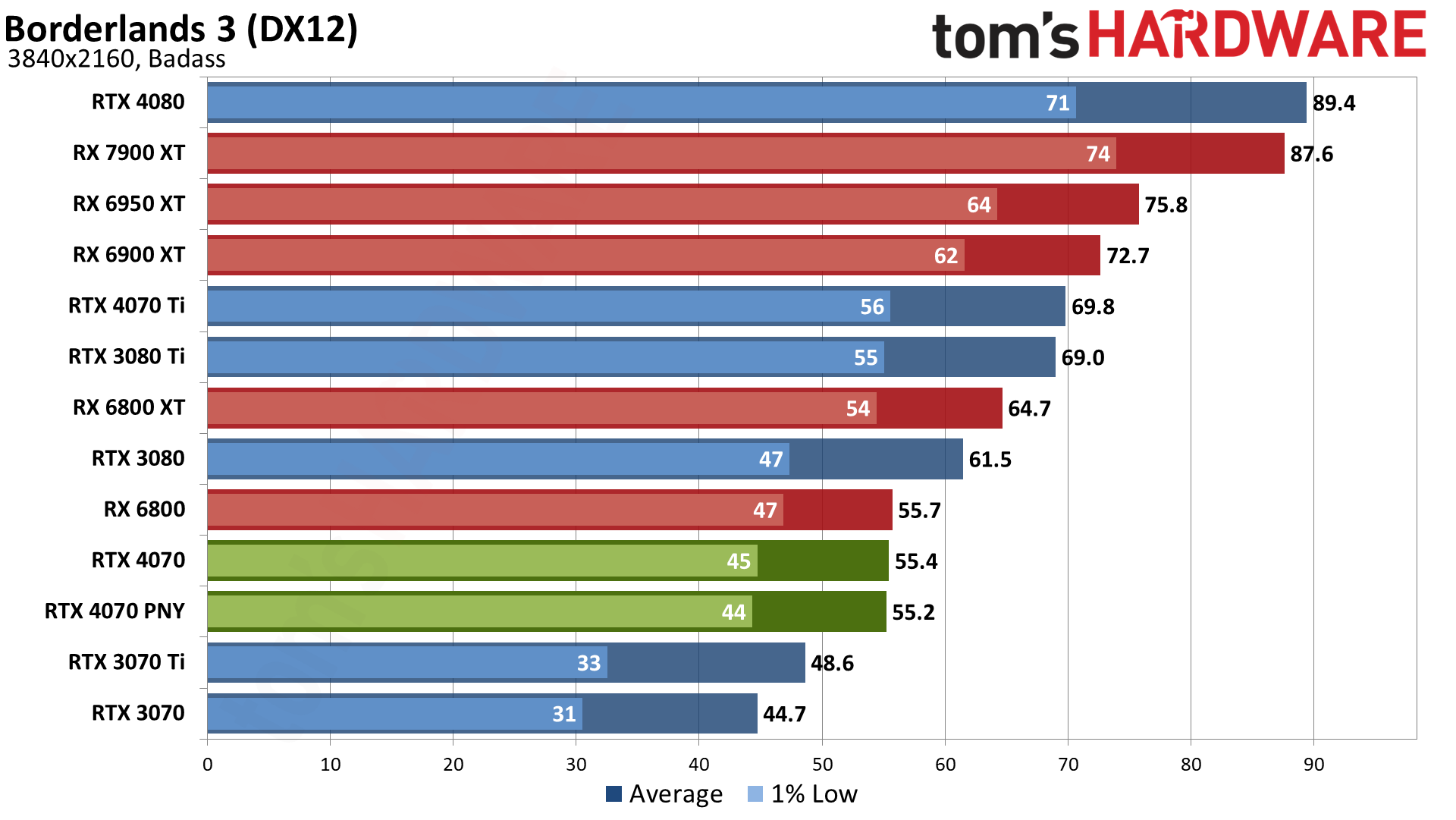

You can flip through the individual gaming charts as well, though mostly they coincide with the geometric mean of the rasterization suite. The RX 6950 XT lead mostly lands in the low double digit percentage points, with Total War: Warhammer 3 being the only game where the RTX 4070 was (barely) faster. Borderlands 3 meanwhile favors the AMD card by 26%.

The issue with the RX 6000-series parts isn't just features, however; it's availability. Right now, there's an ASRock RX 6950 XT on Newegg for $599 after the promo code. That's the same price as the RTX 4070, likely chosen specifically to pit them against each other. AMD's previous generation card will use over 50% more power, but if you're less concerned with being green and more interested in FPS, it's a reasonable option.

That's probably the best option from AMD, as the RX 6900 XT now starts at closer to $1,000, though the RX 6800 XT can be had for $539 and the RX 6800 starts at $484. Neither one is clearly faster than the RTX 4070, however, and Nvidia does offer some additional perks like DLSS support if you're willing to buy into its ecosystem.

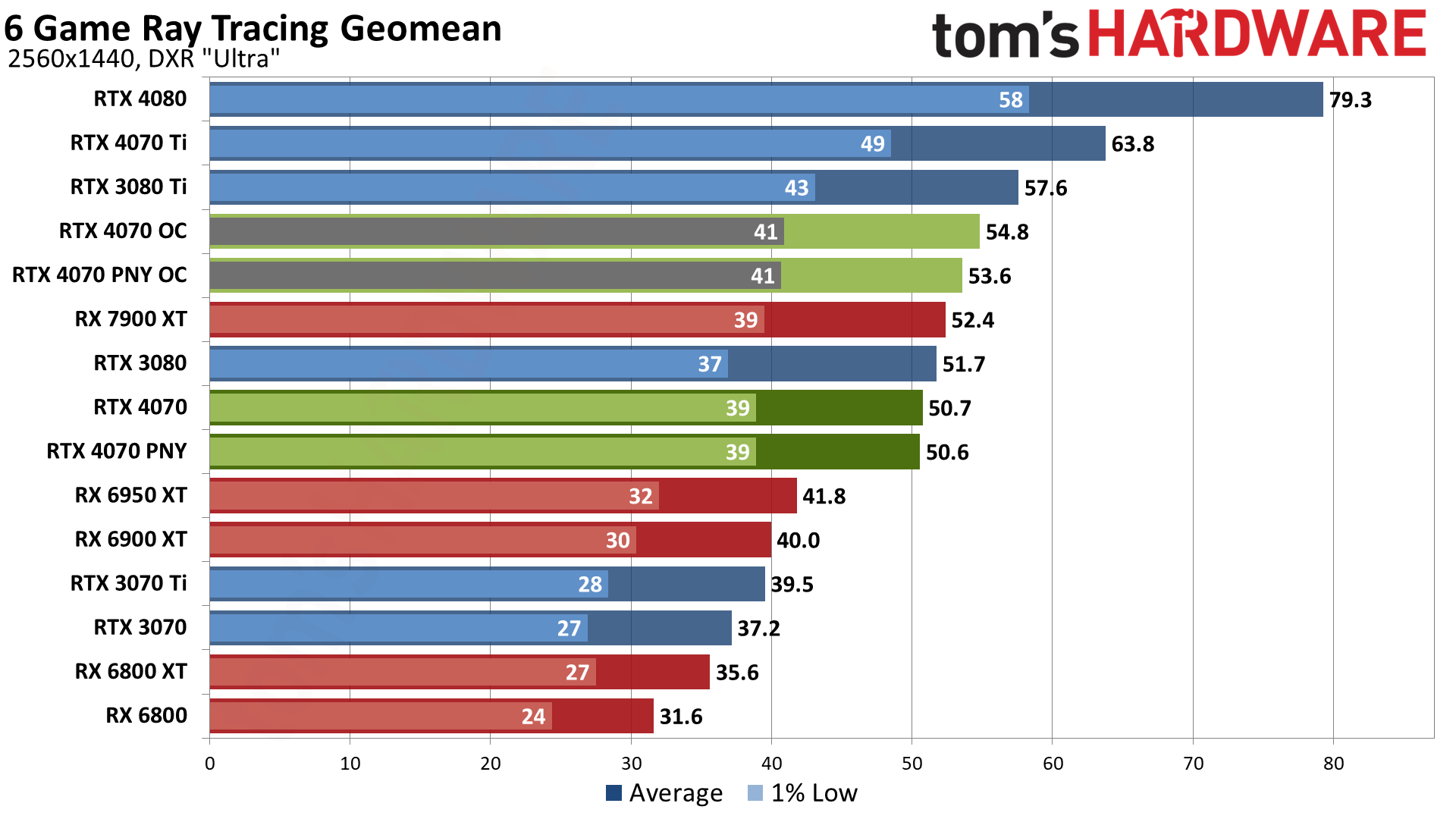

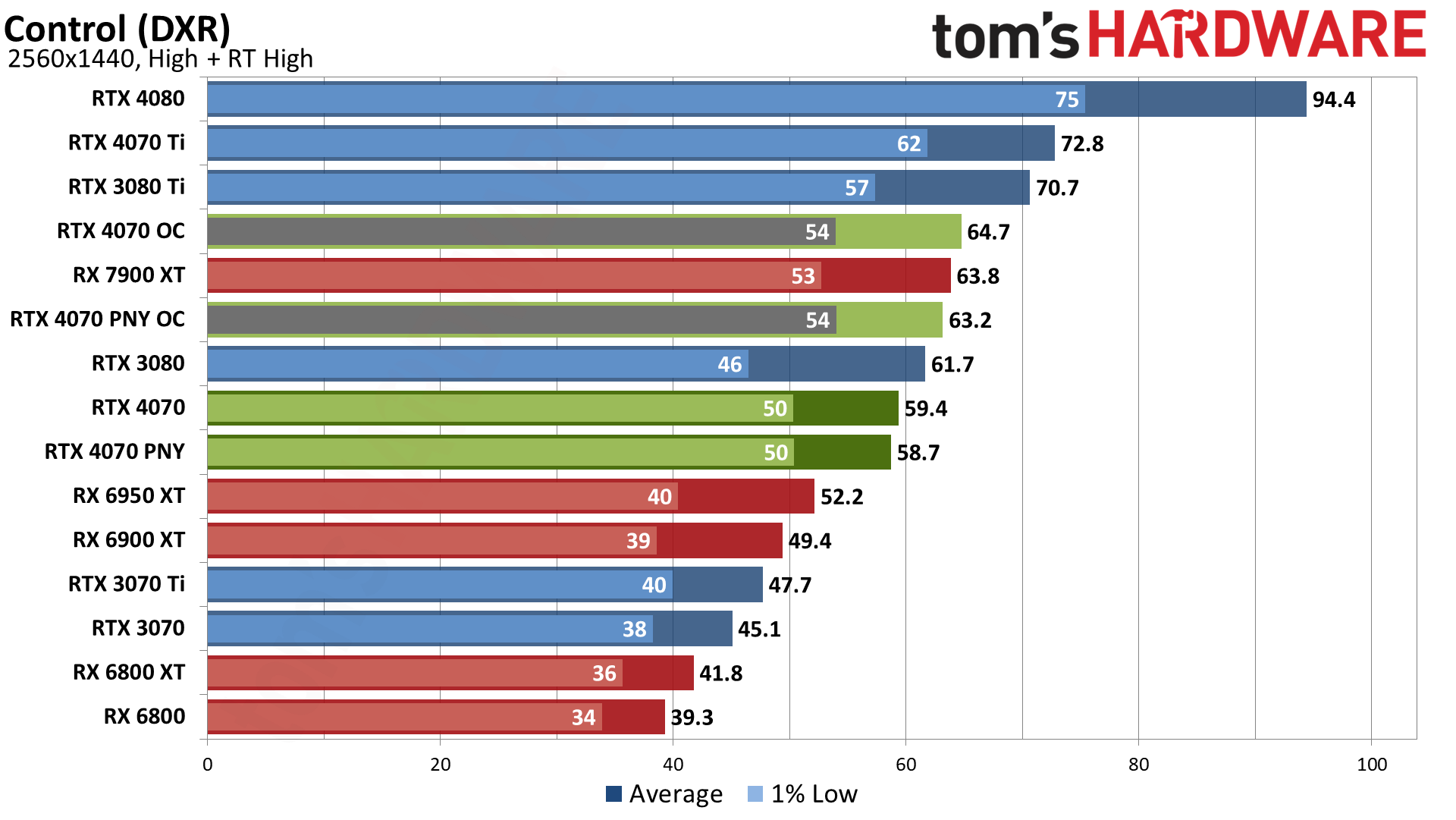

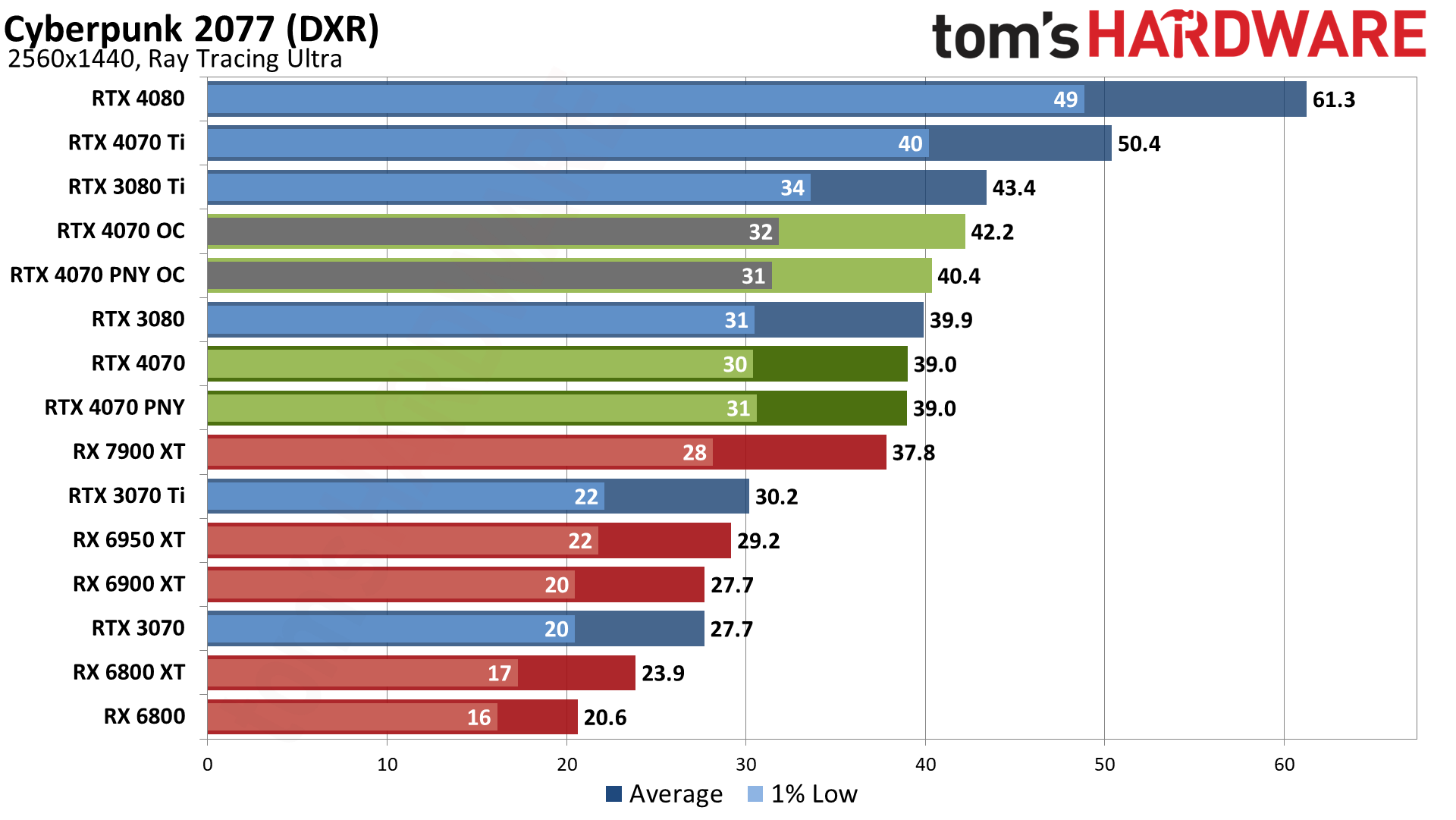

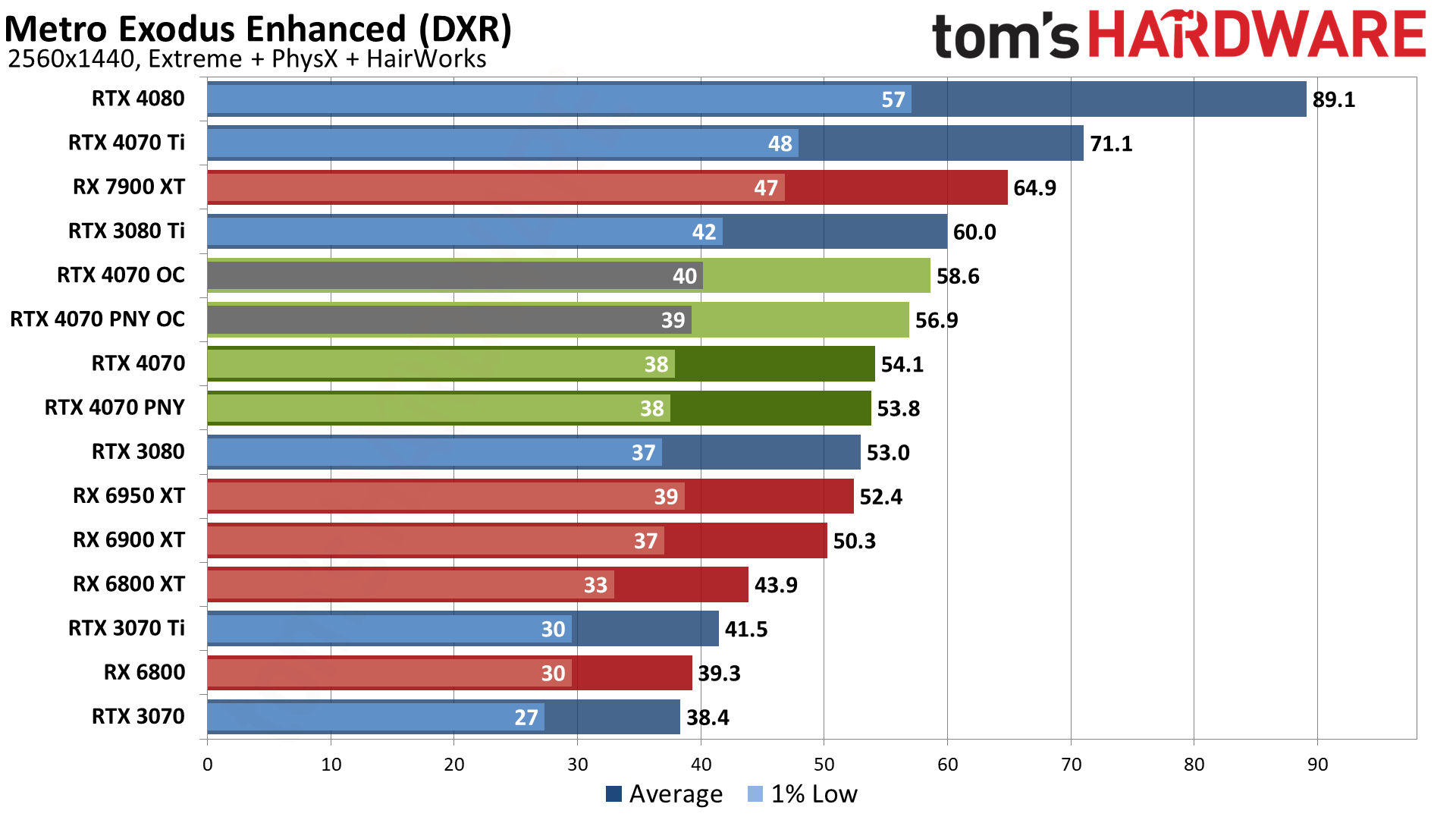

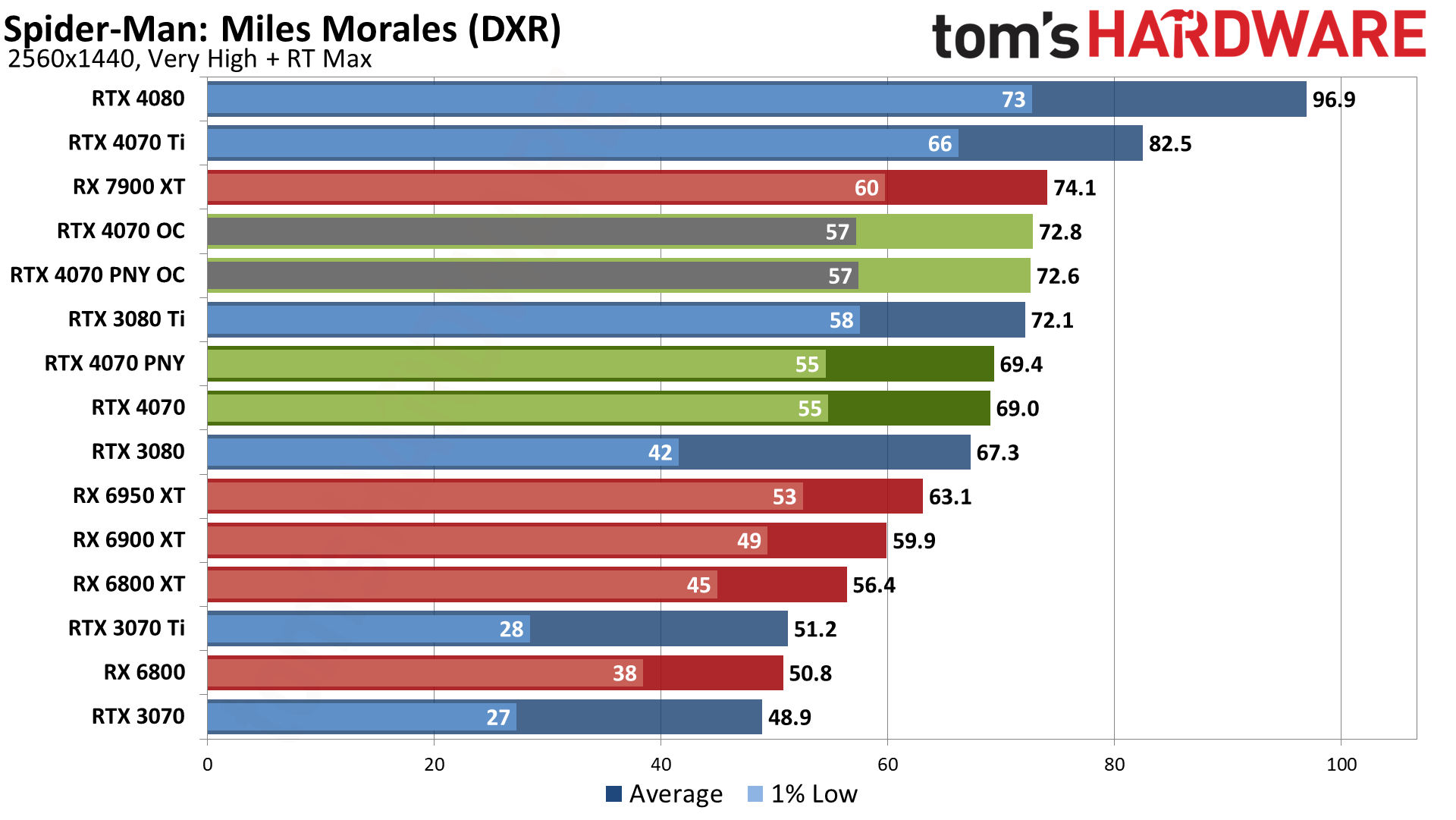

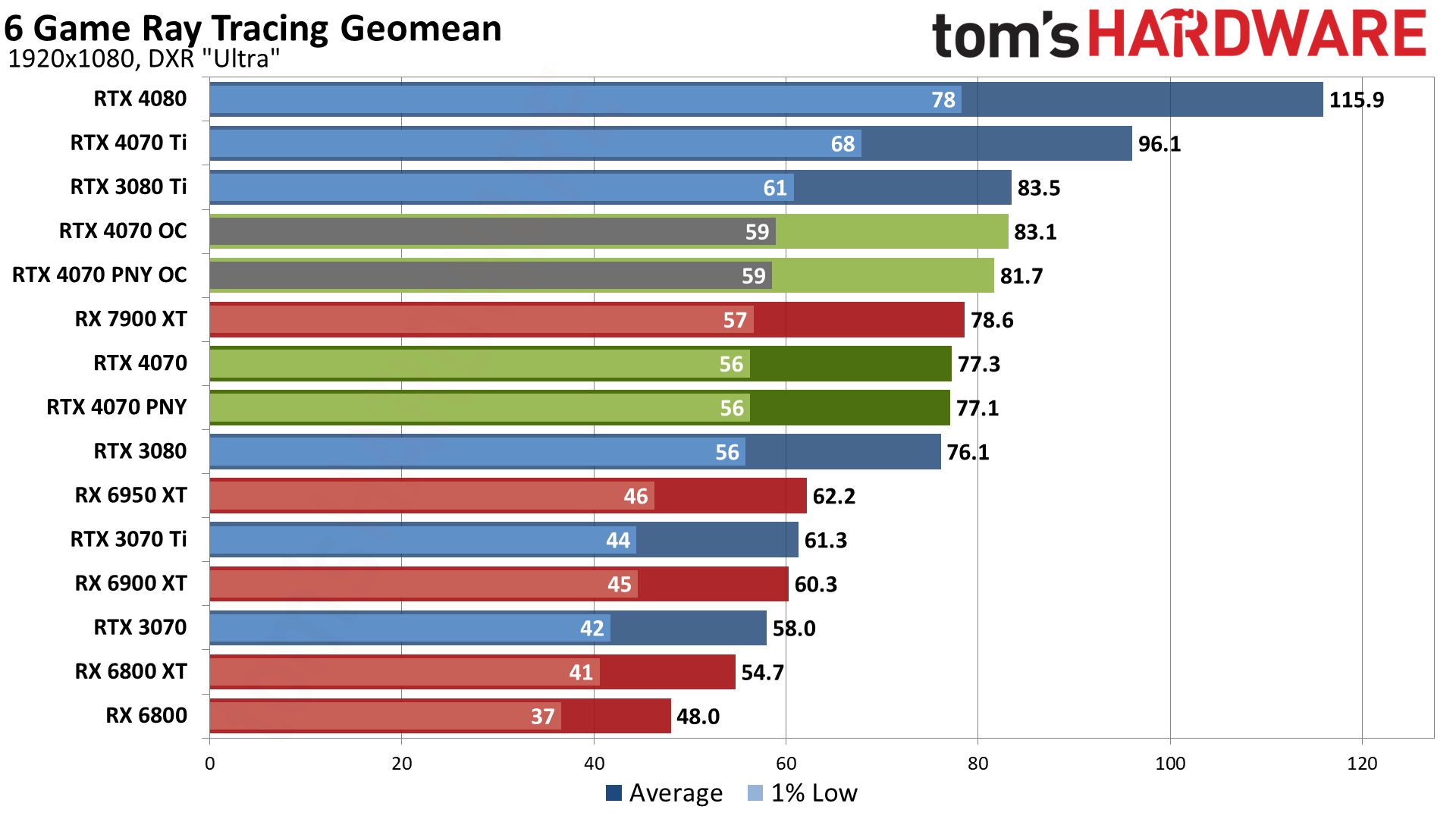

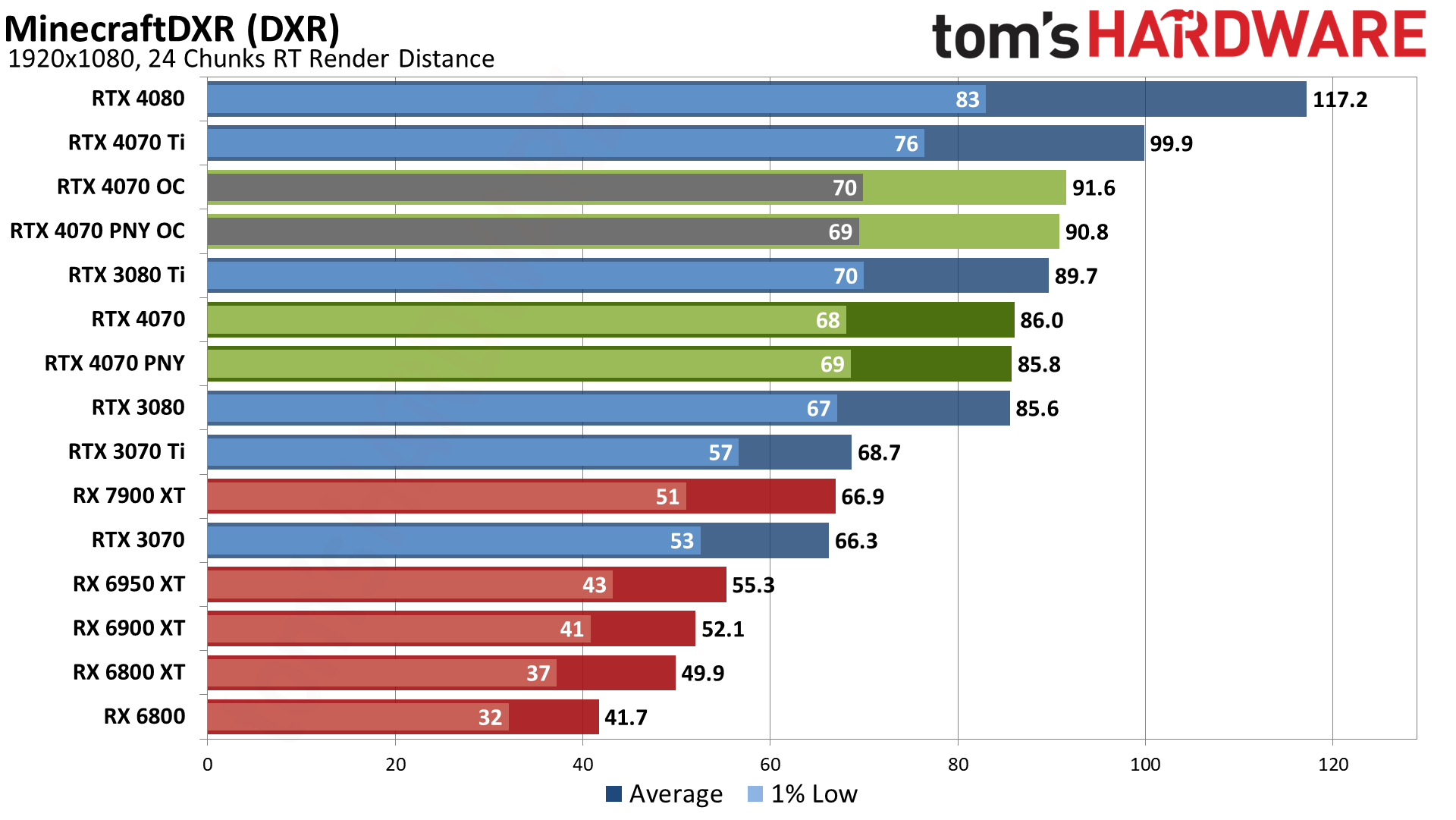

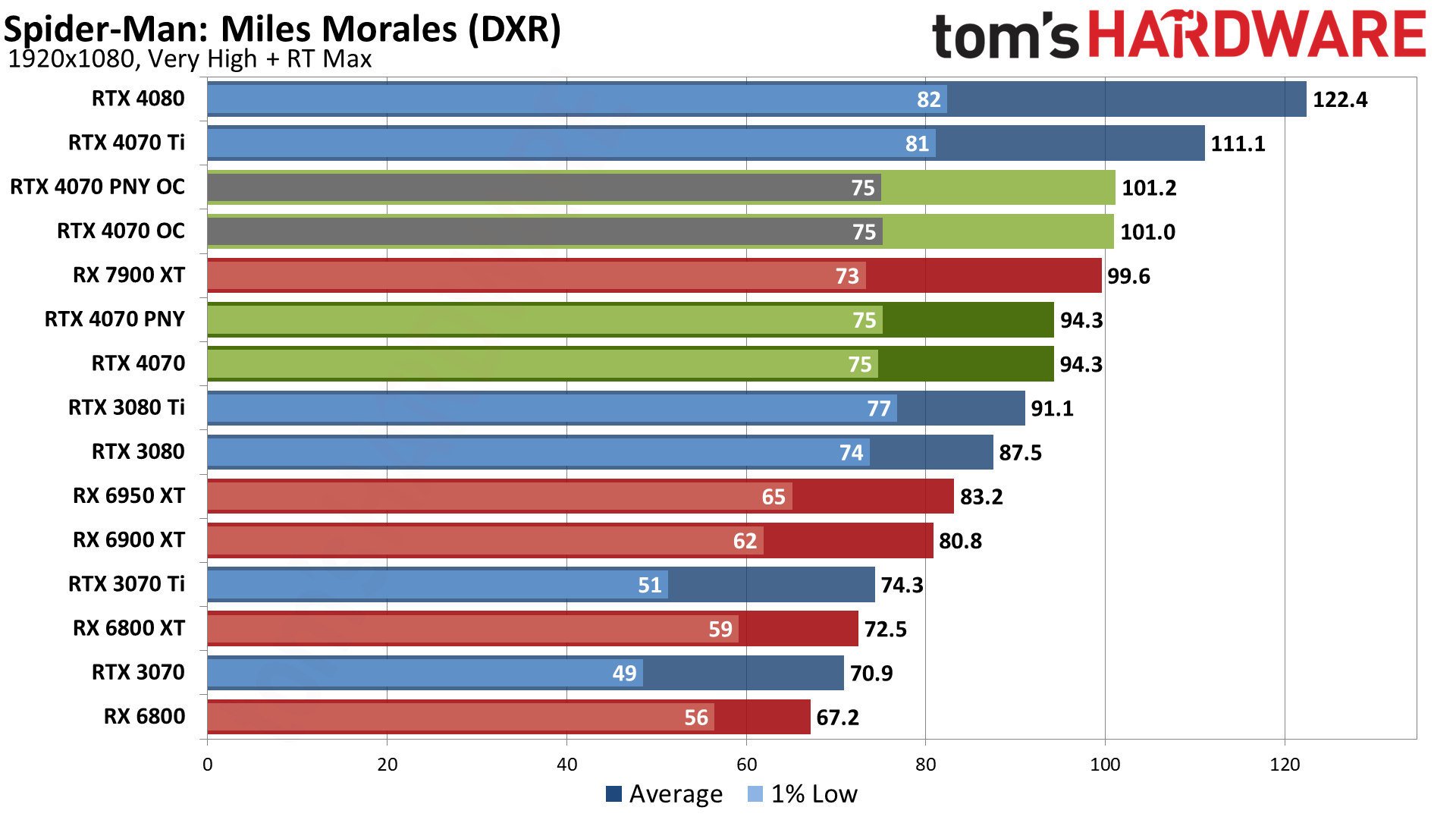

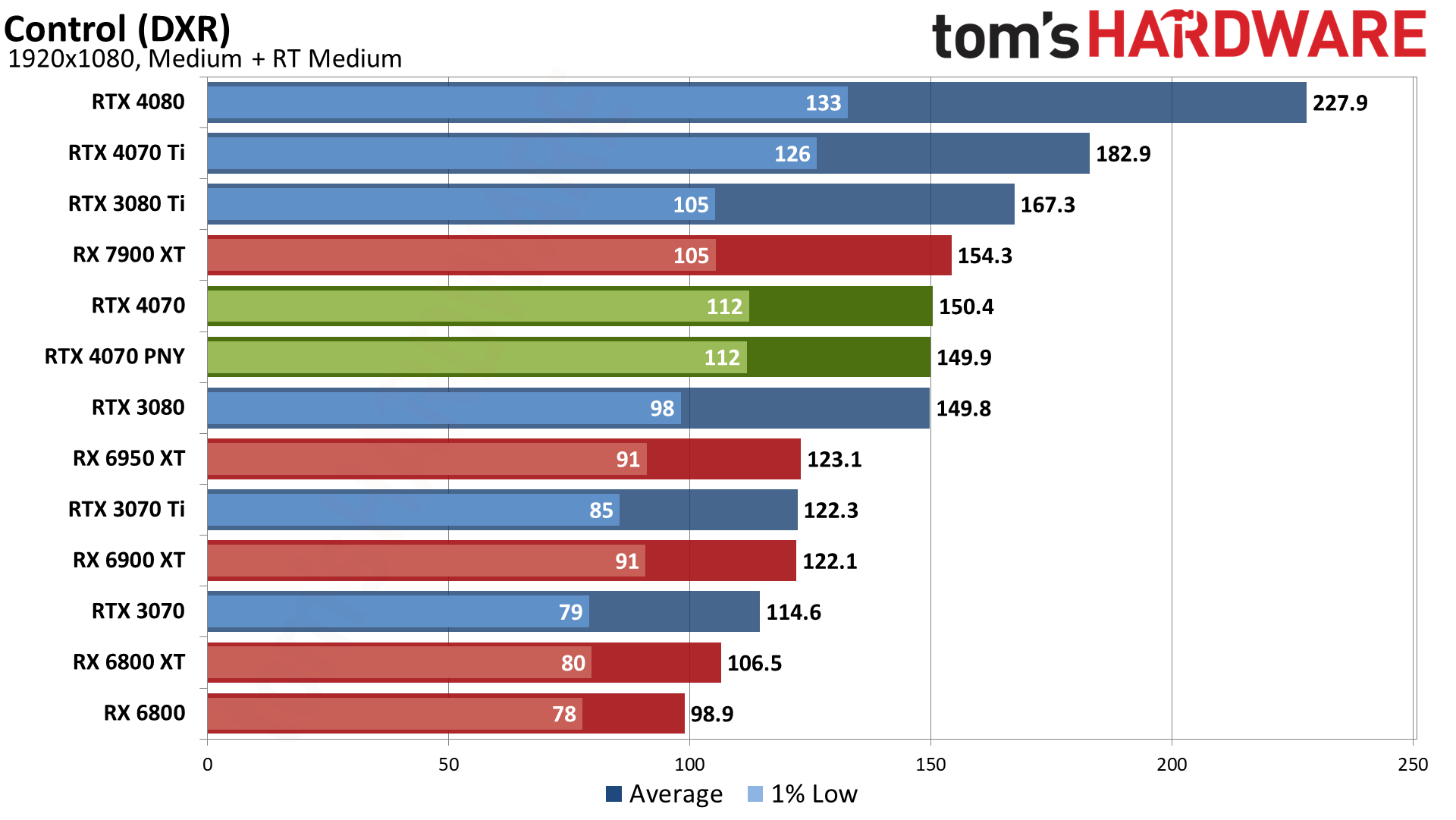

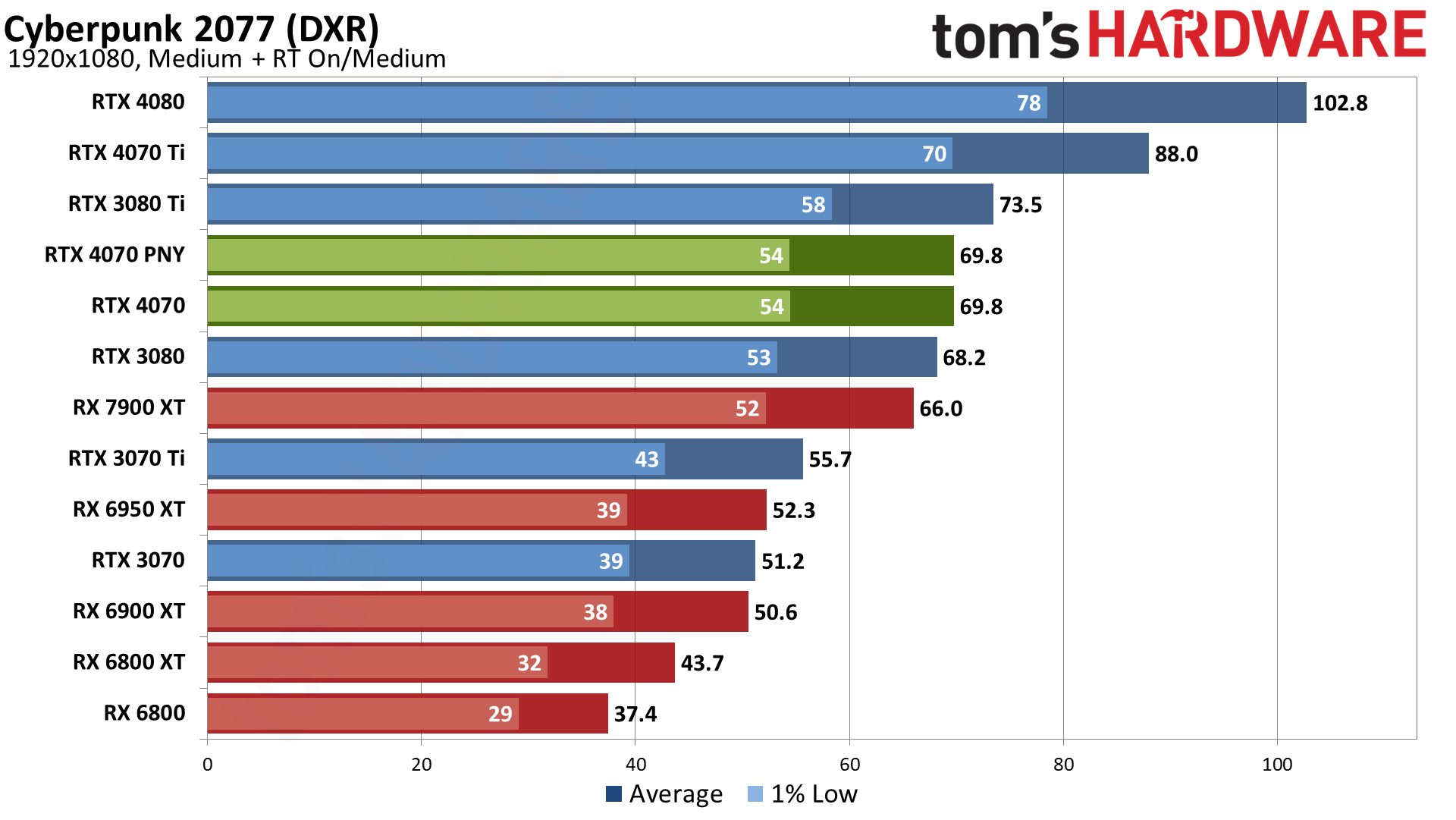

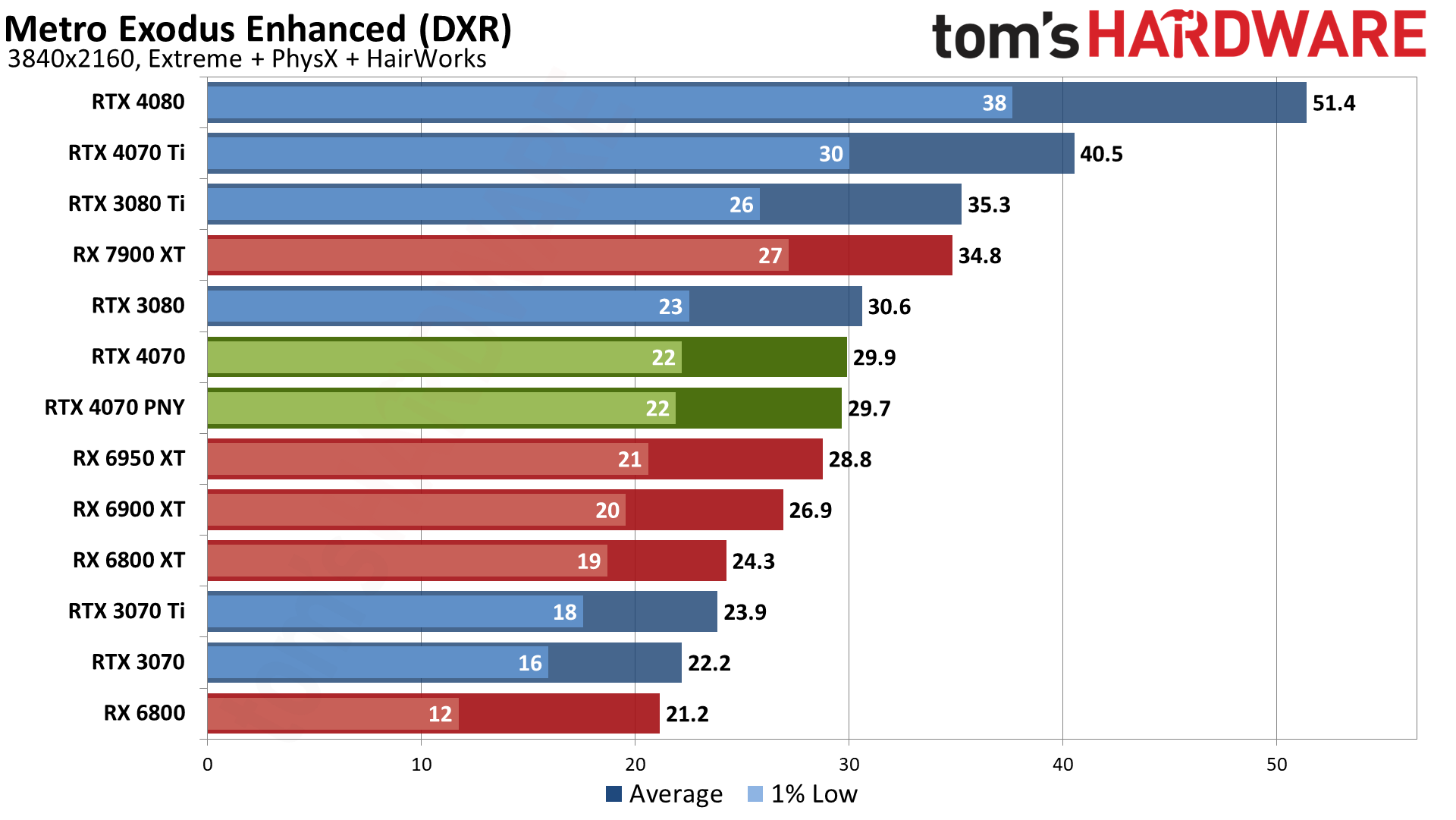

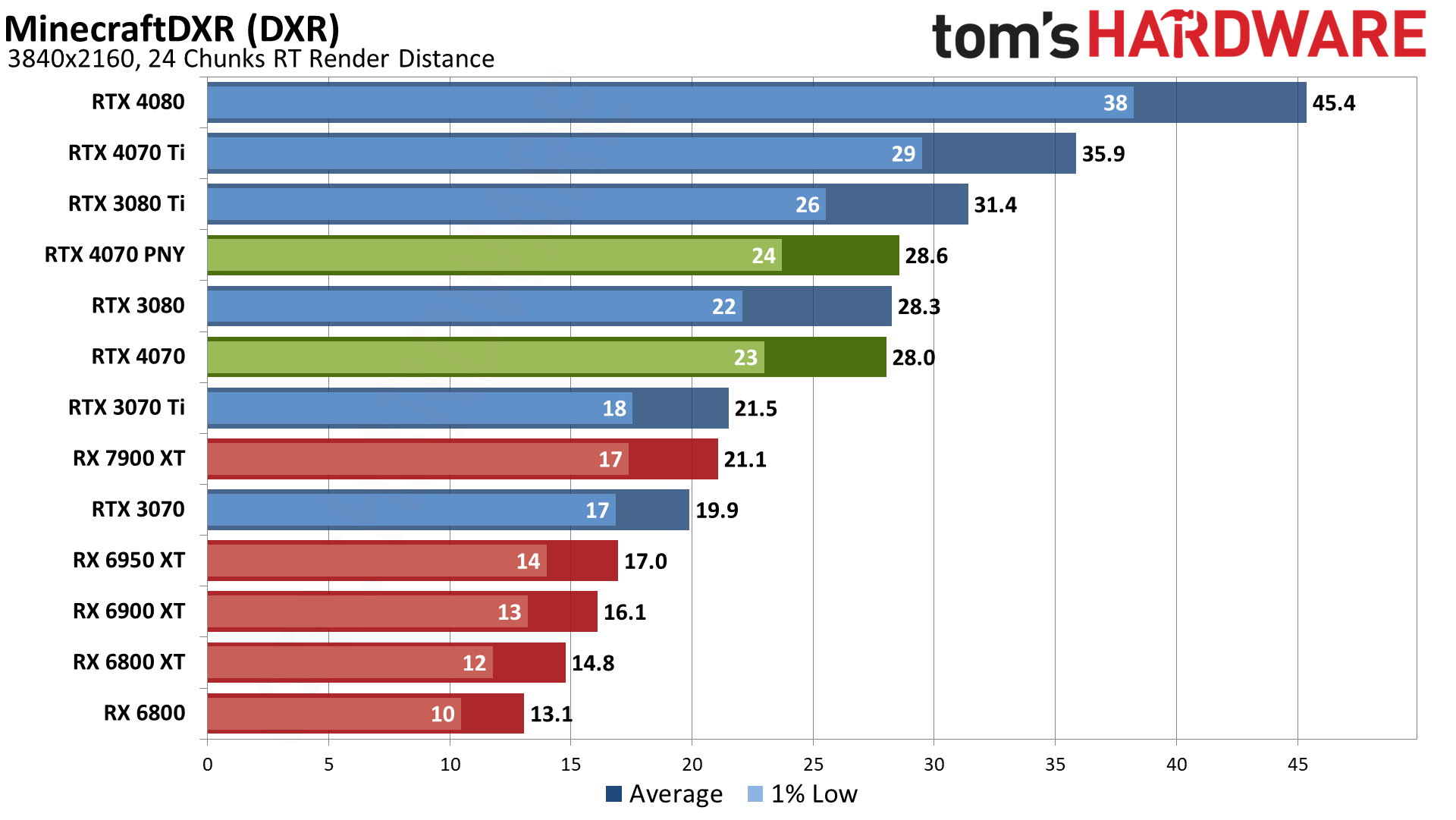

There's also the ray tracing aspect to consider, a continual pain point for AMD's GPUs. Where the RX 6950 XT was 14% faster on average in our rasterization test suite, with demanding DXR games the RTX 4070 claims a 21% lead. In fact, in our DXR suite, the RTX 4070 is very nearly tied with AMD's RX 7900 XT in performance.

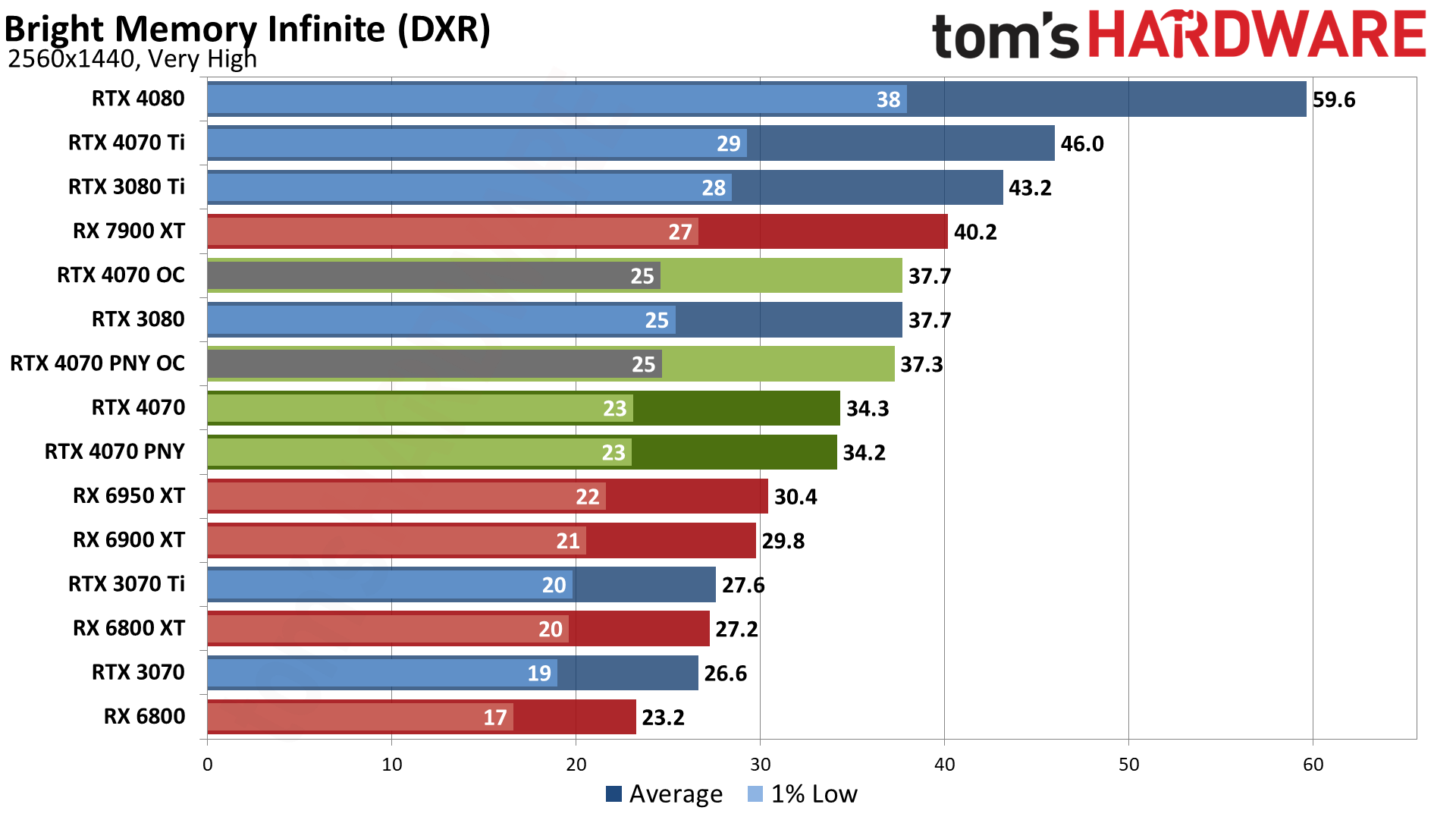

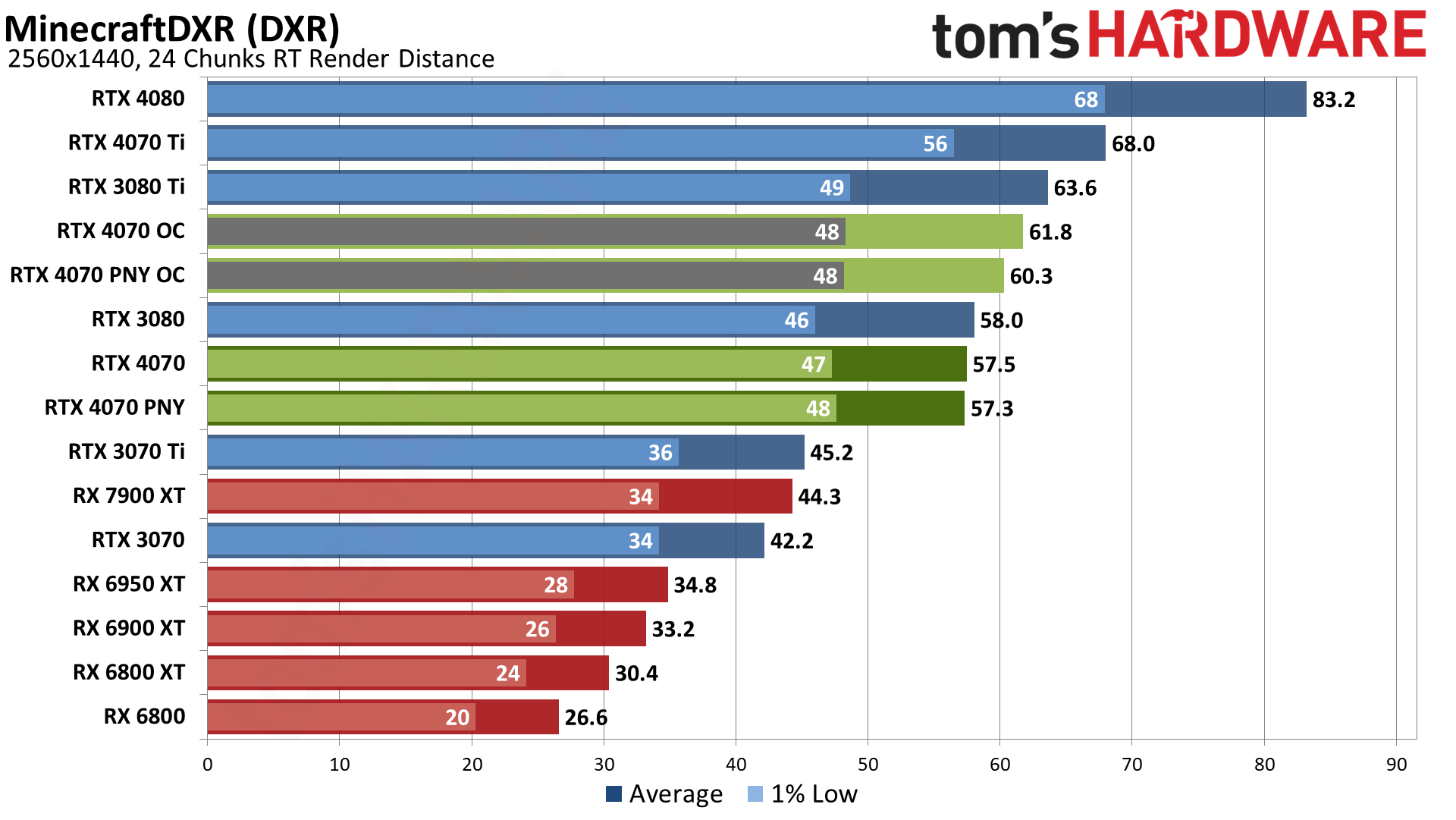

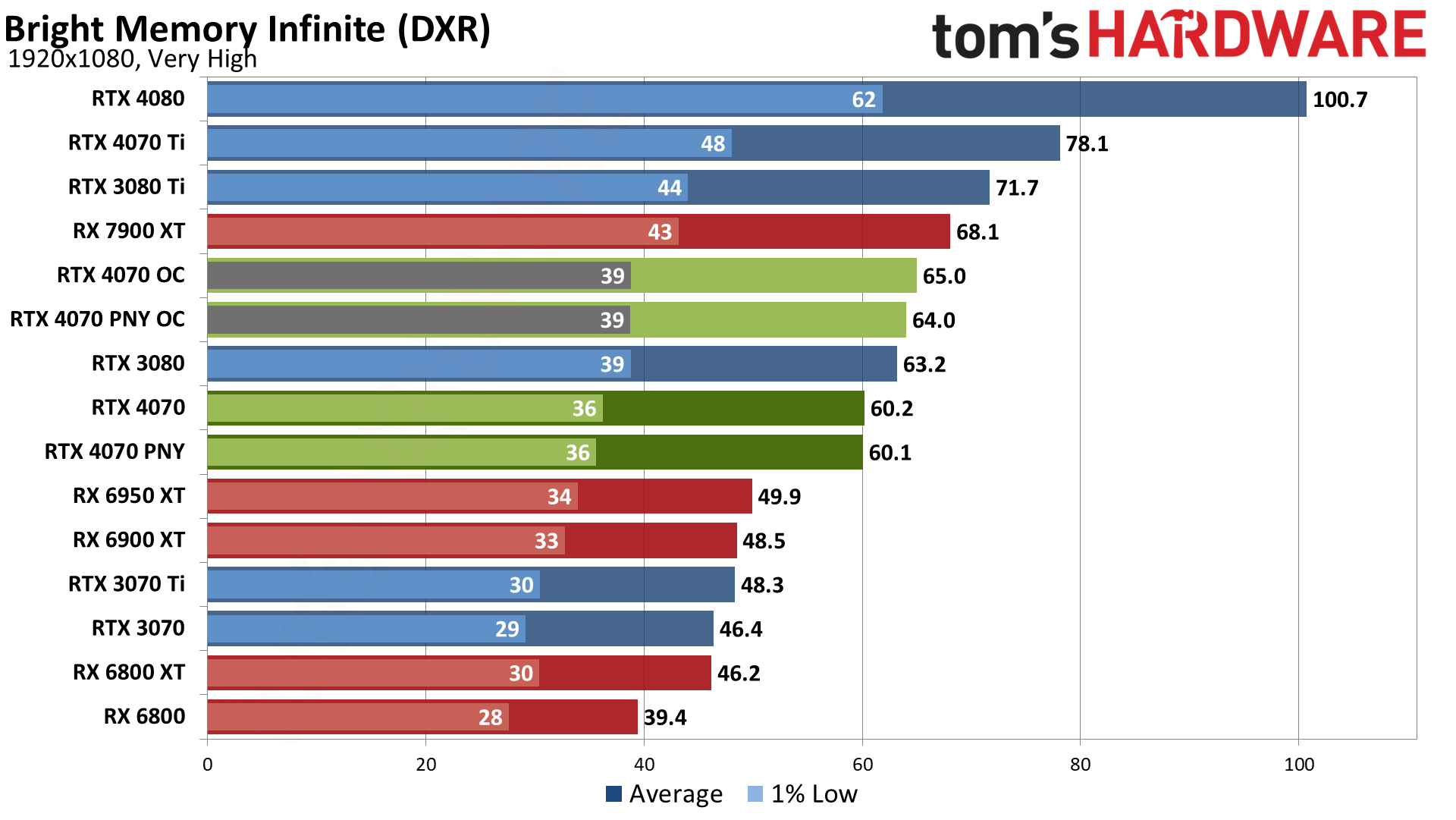

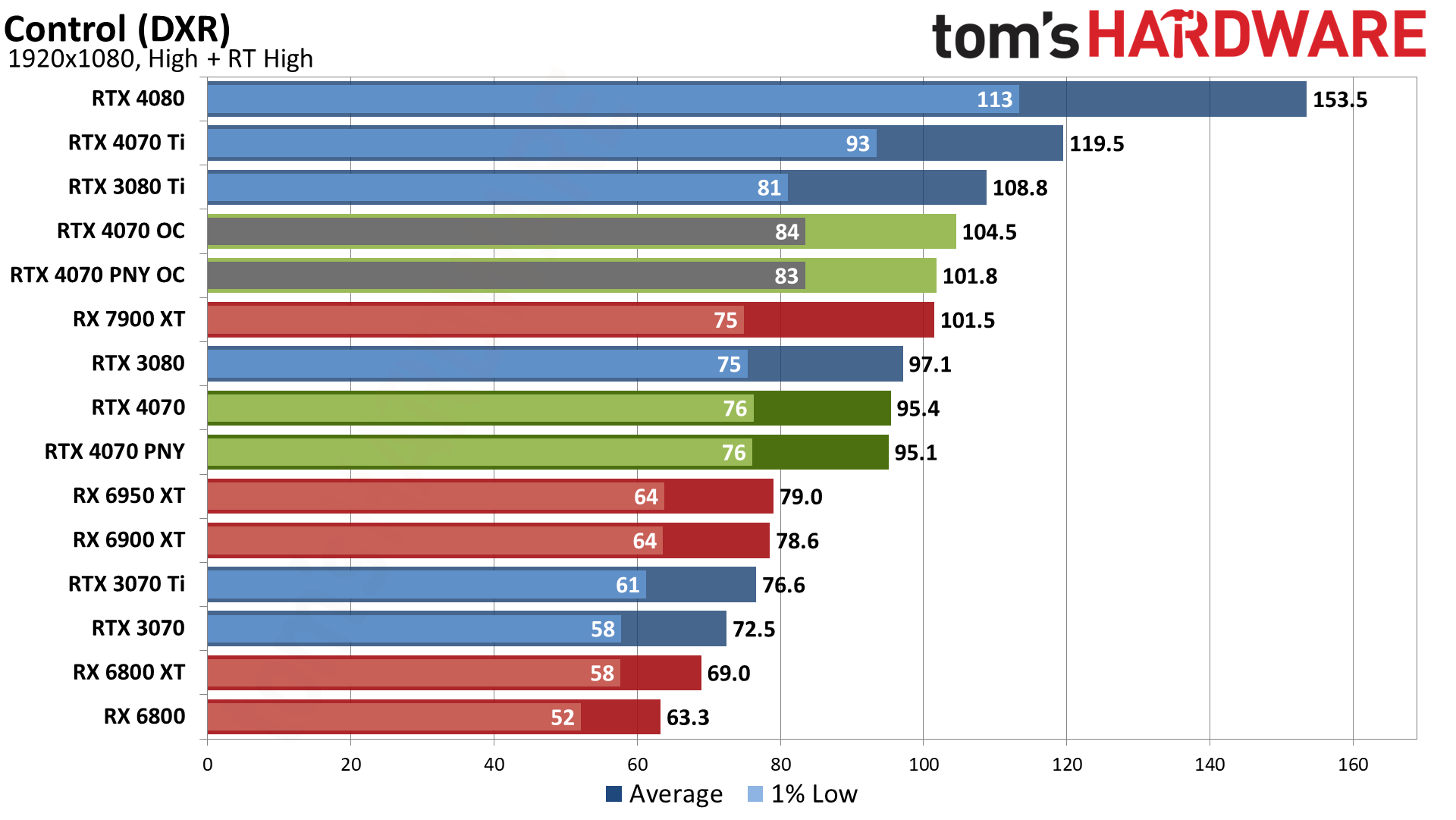

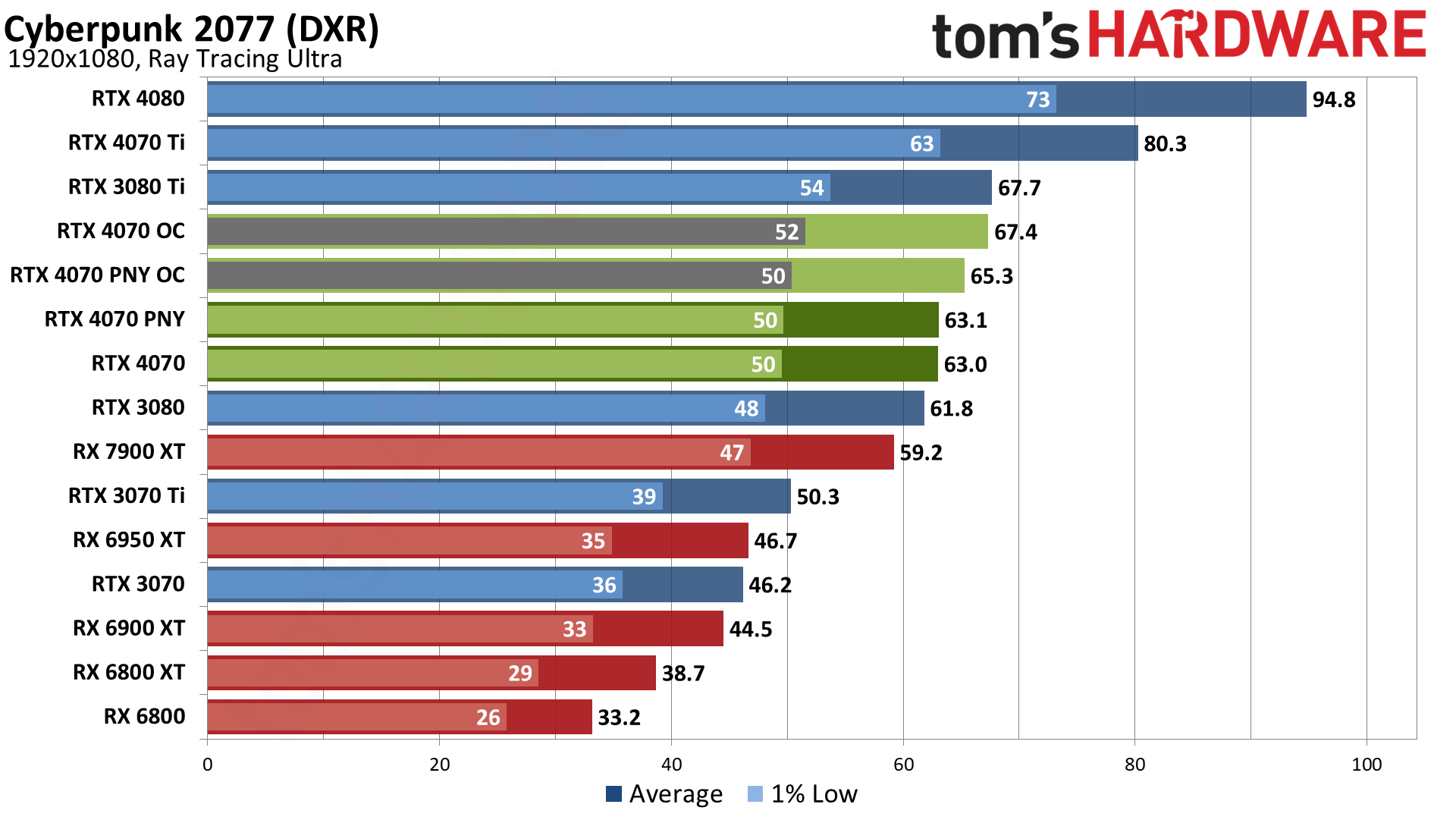

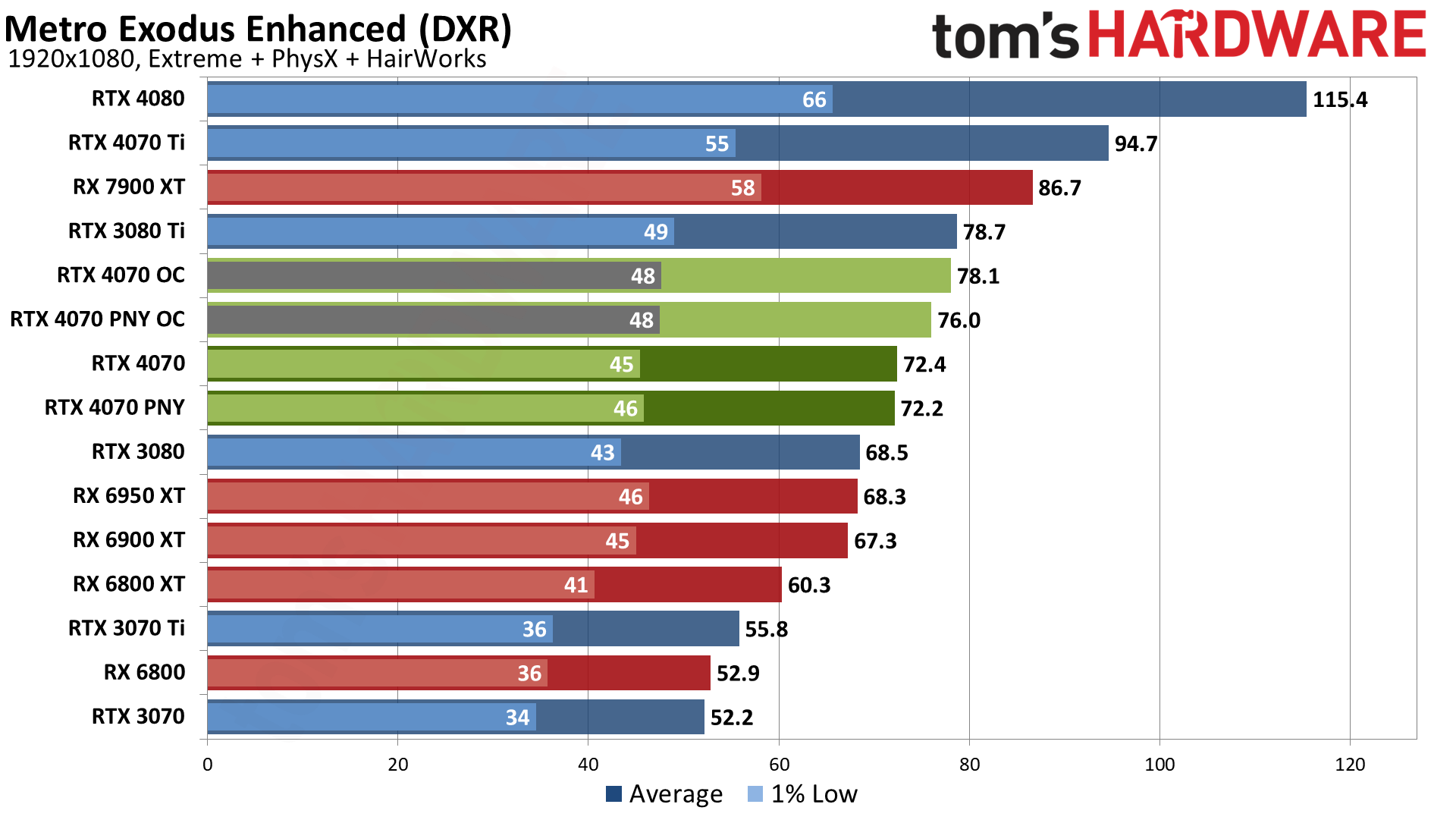

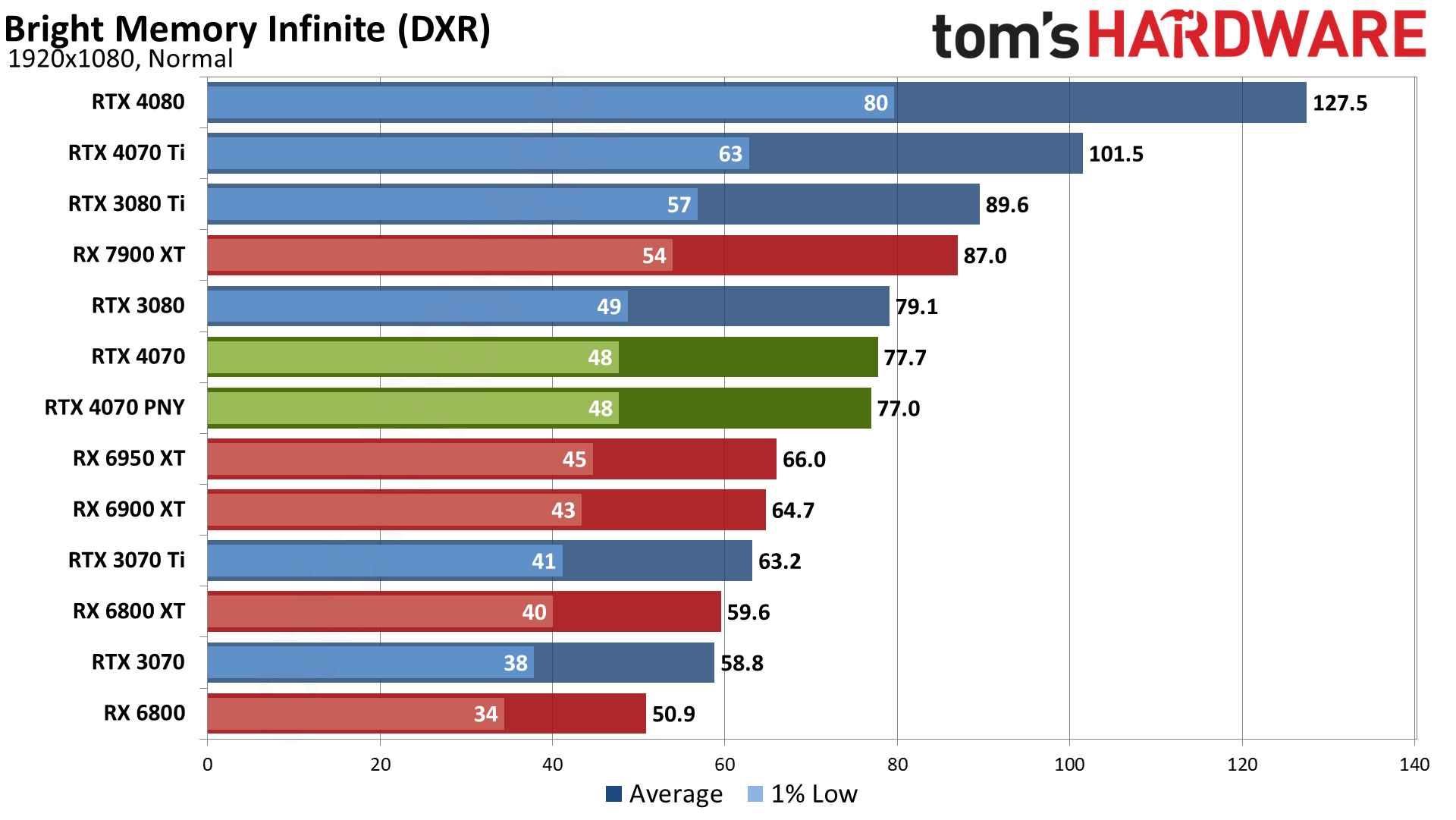

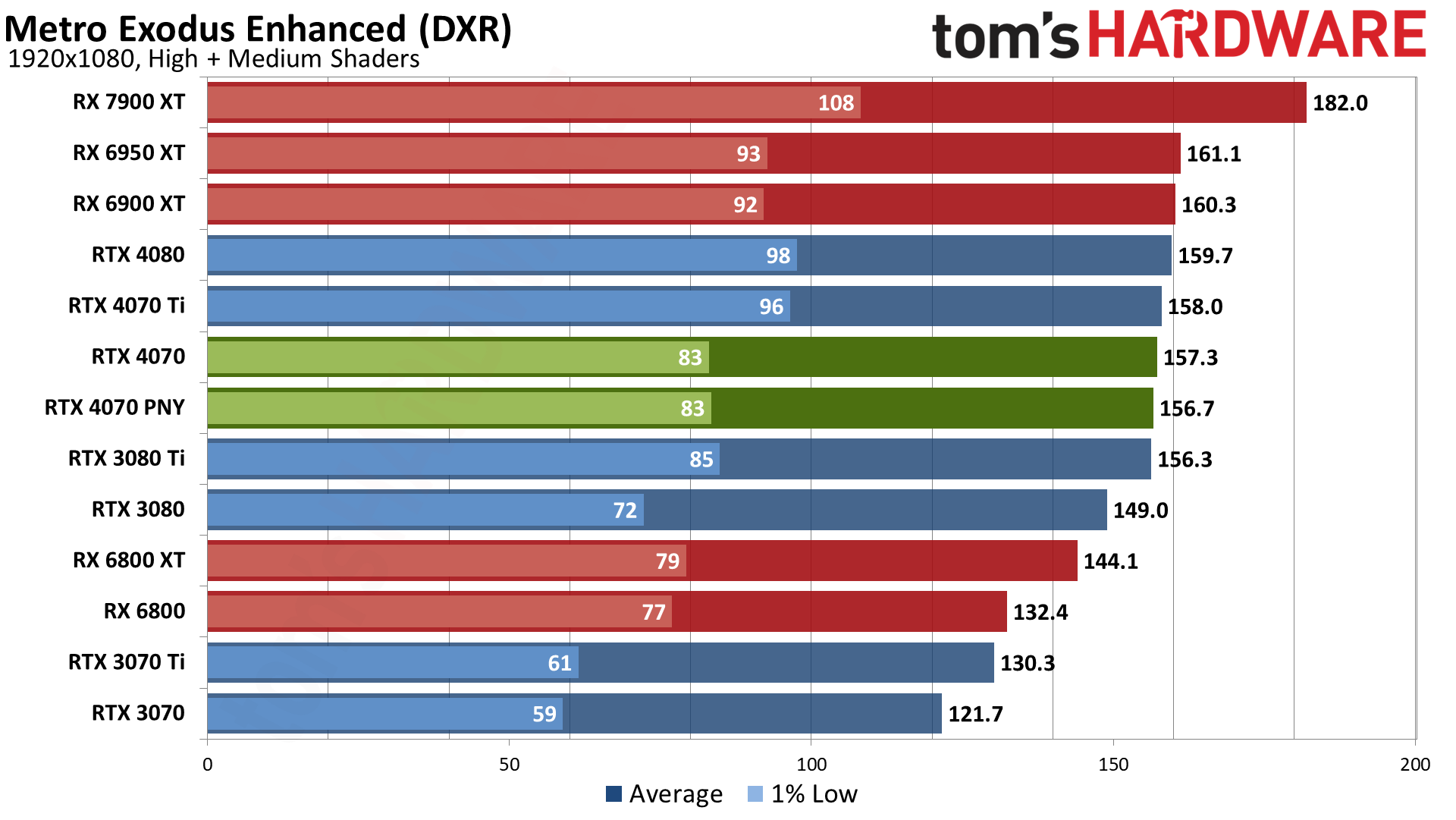

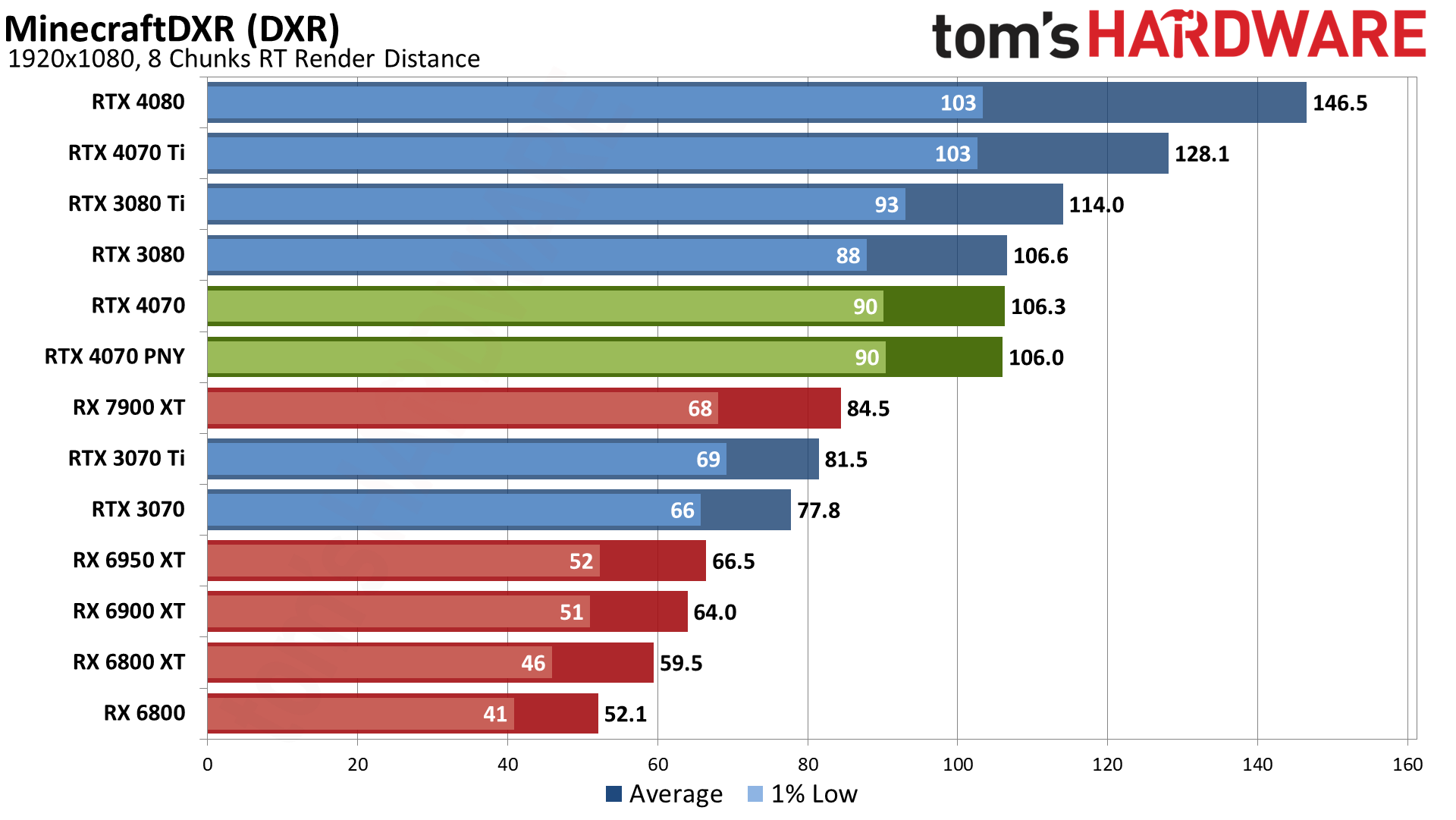

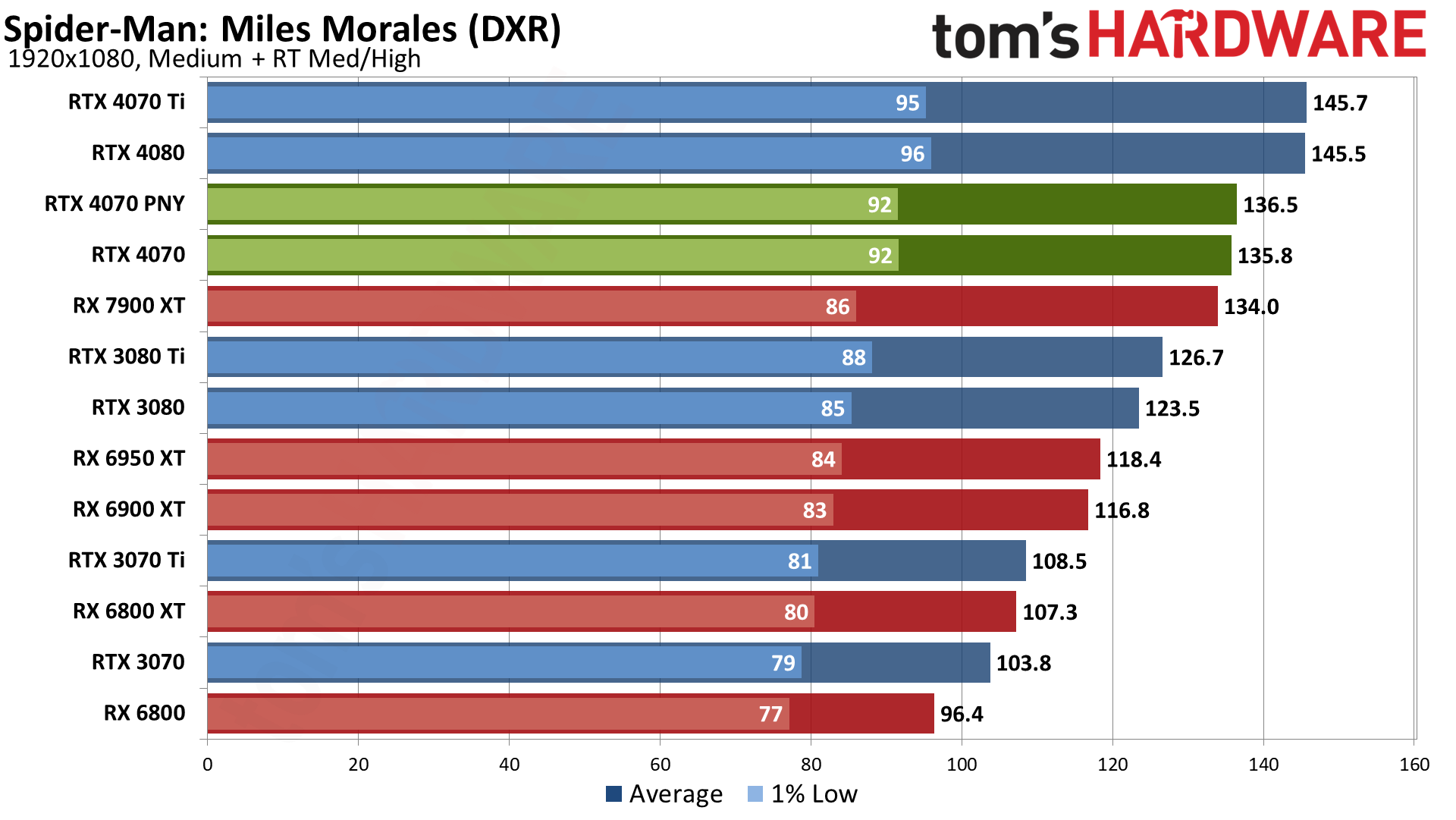

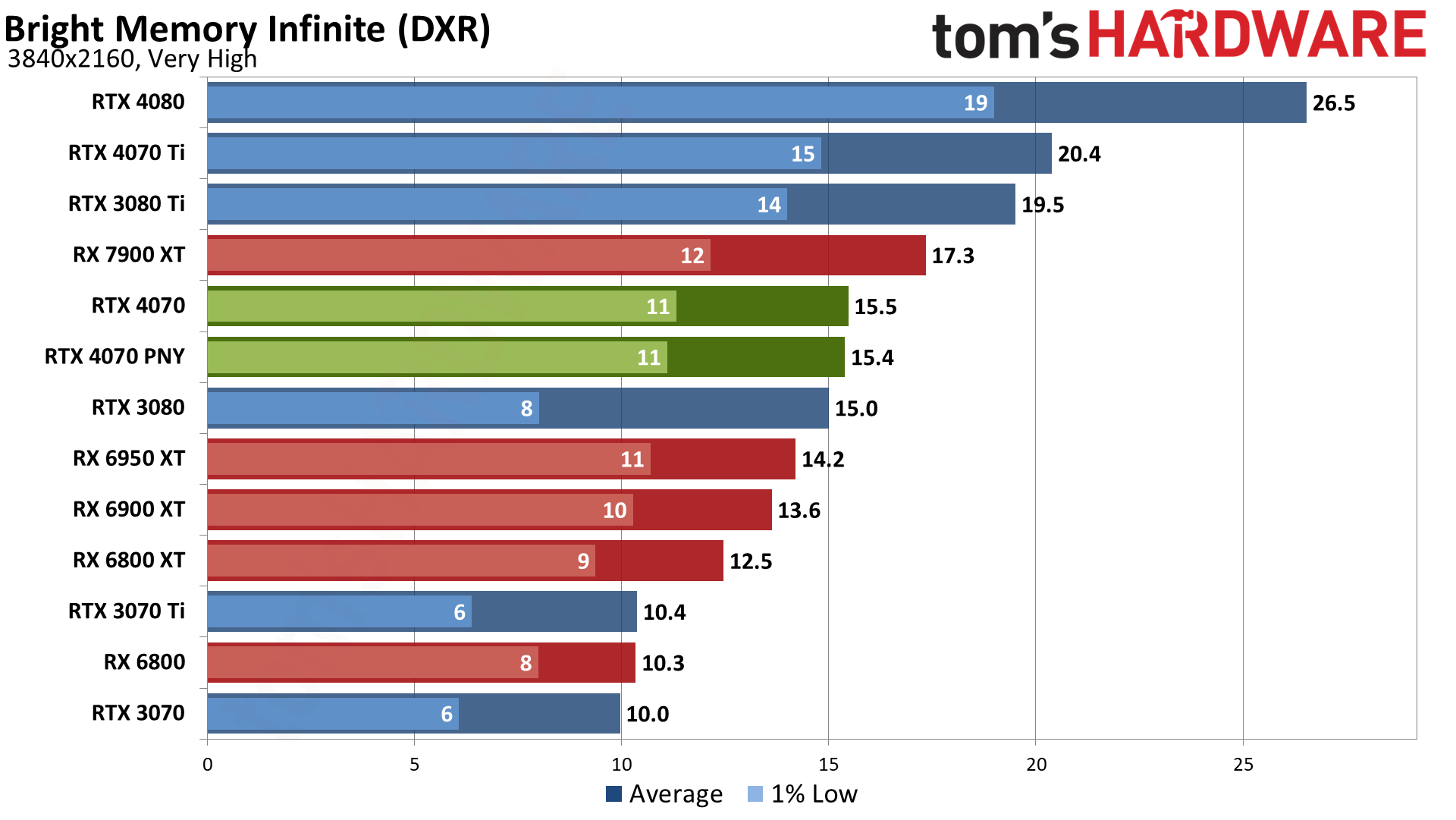

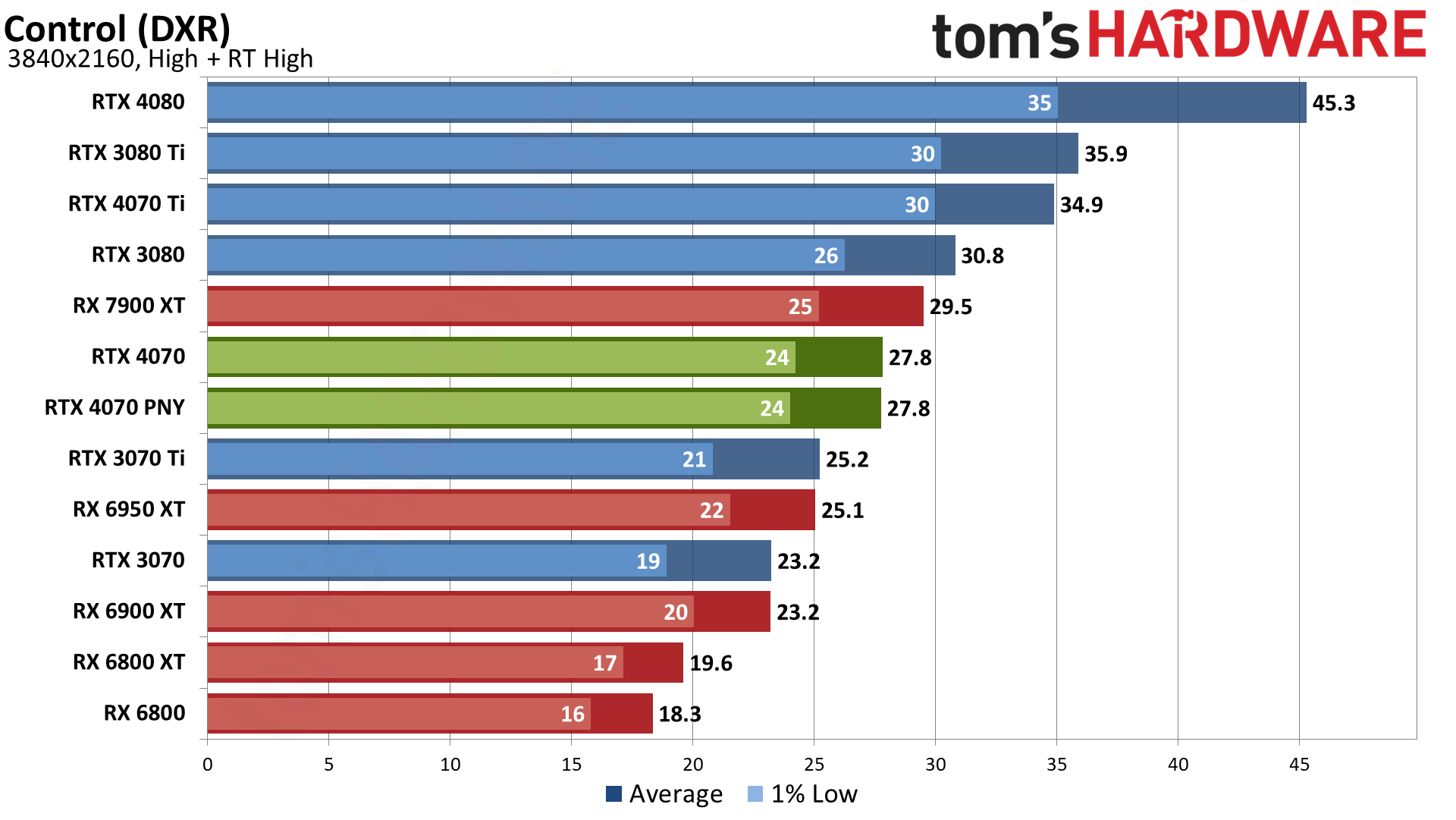

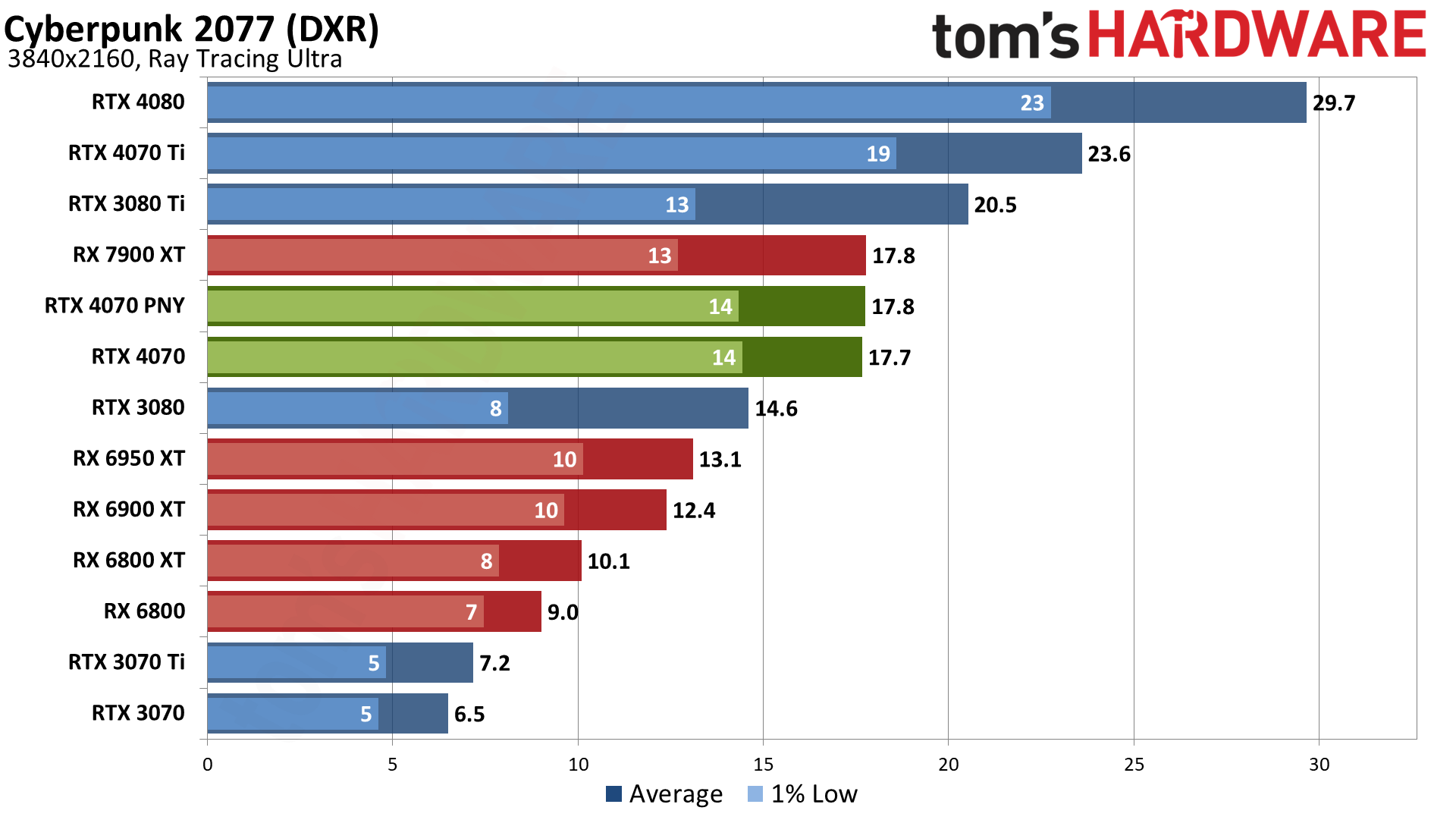

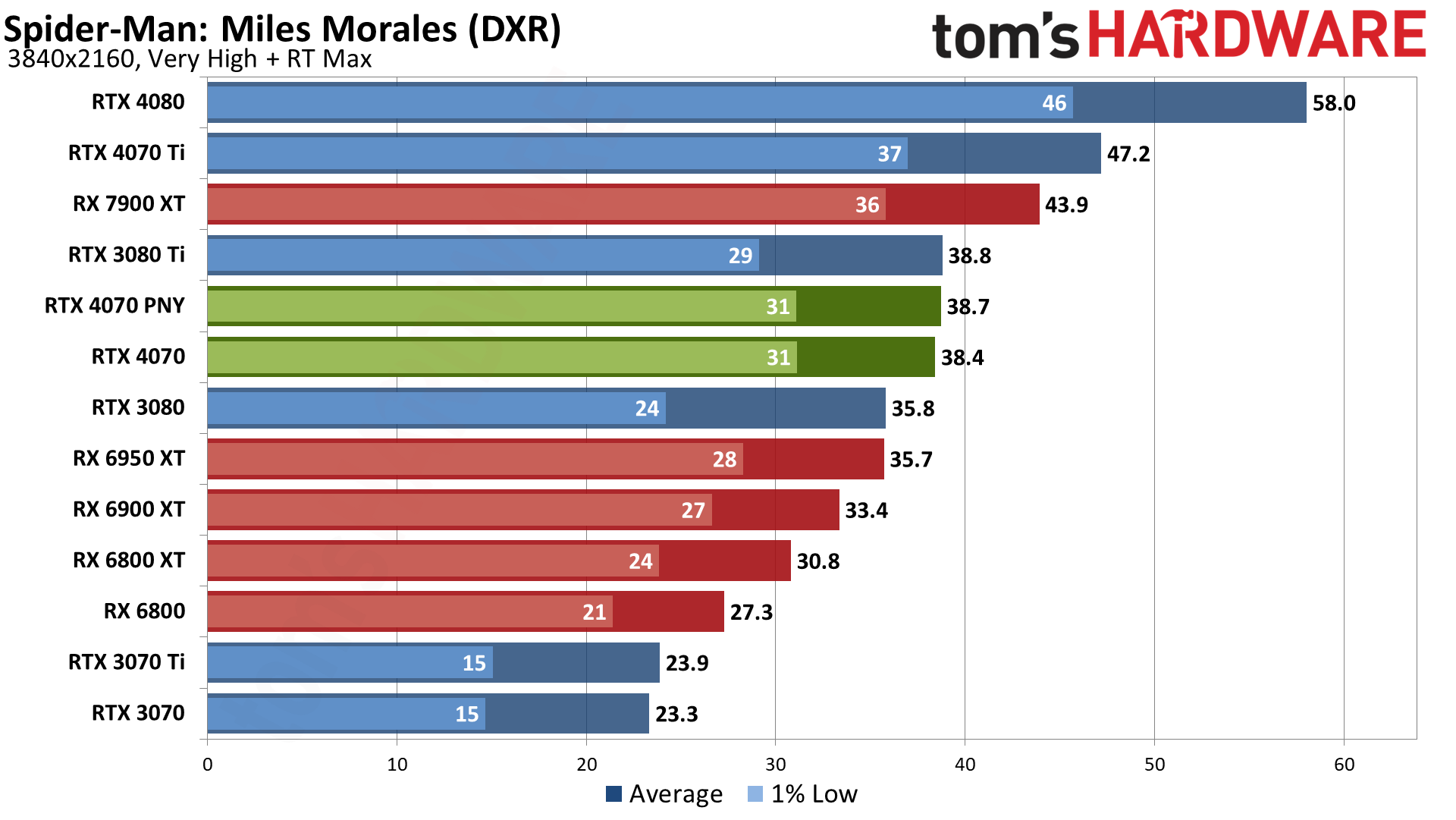

Nvidia's ray tracing hardware continues to outperform its competition, and that's particularly true for games that use even more ray tracing effects. The RTX 4070 is only slightly faster than the RX 6950 XT in Metro Exodus Enhanced Edition and around 10% faster in Bright Memory Infinite Benchmark, Control Ultimate Edition, and Spider-Man: Miles Morales, but then it's 34% faster in Cyberpunk 2077 and 65% faster in Minecraft.

How does the RTX 4070 fare against Nvidia's previous generation? Once again, it's basically tied with the RTX 3080 (2% slower), ranging from 9% slower (Bright Memory Infinite) to 3% faster (Spider-Man). You'd be hard pressed to notice the difference between the two cards if you were just sitting down and playing games... unless you factor in DLSS 3, but we'll get to that later.

Sticking to the $599 price point, the RTX 4070 delivers a solid 28% performance improvement over the RTX 3070 Ti — not that we were particularly impressed with the 3070 Ti, the 30-series mid-cycle refresh that still only included 8GB VRAM. Against the vanilla RTX 3070, that lead grows to 37%, perhaps not enough to entice someone to upgrade, but there's no good reason to spend more than about $350 on an RTX 3070 these days.

At the other end of the spectrum, the RTX 4070 Ti continues to hold onto a large 24% lead over its lesser variant. There's no extra VRAM, so all of that comes thanks to the extra GPU cores (and perhaps a bit from clock speeds). That's because the large L2 cache means memory bandwidth tends to be less of a factor than on previous generation architectures.

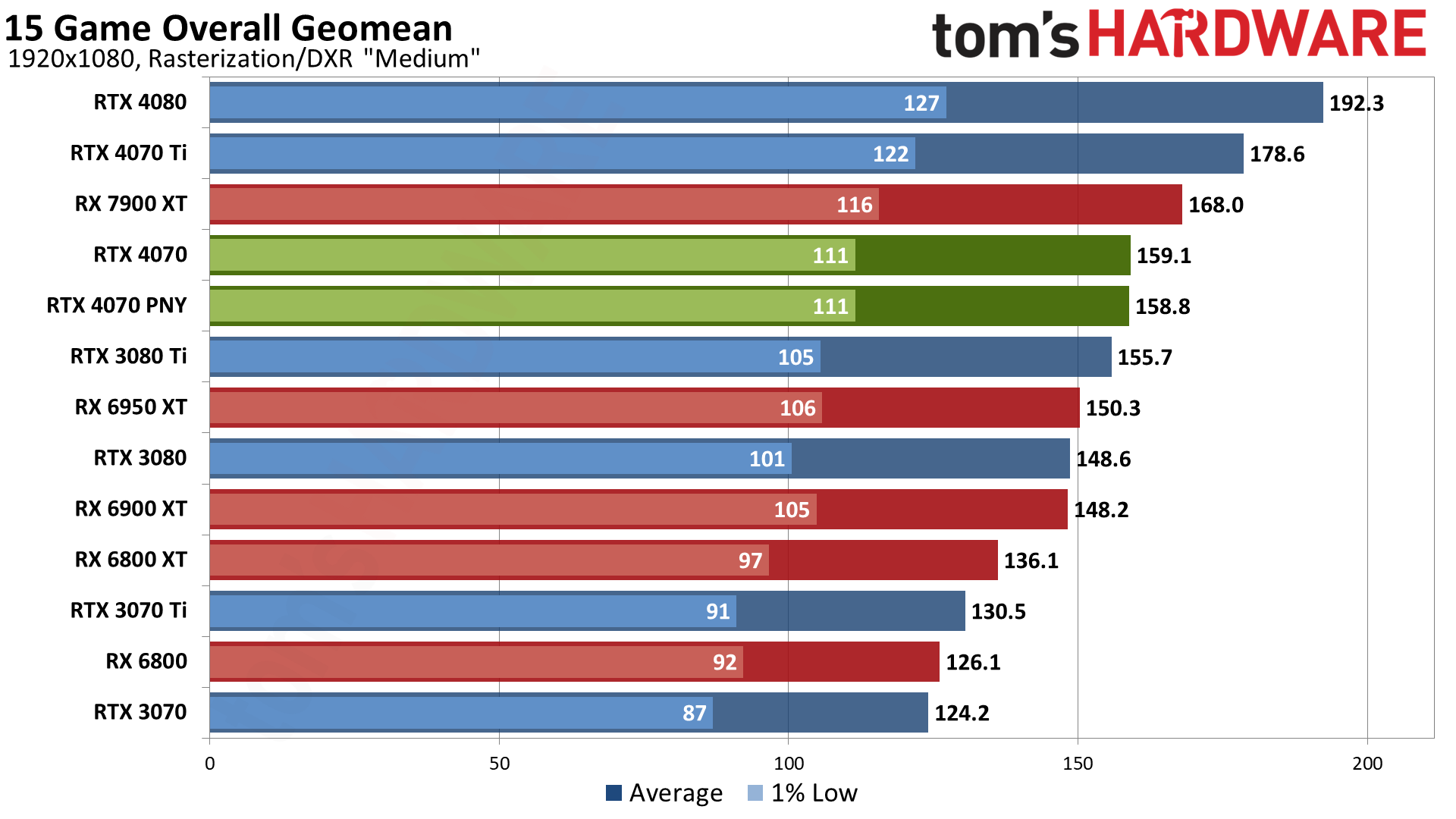

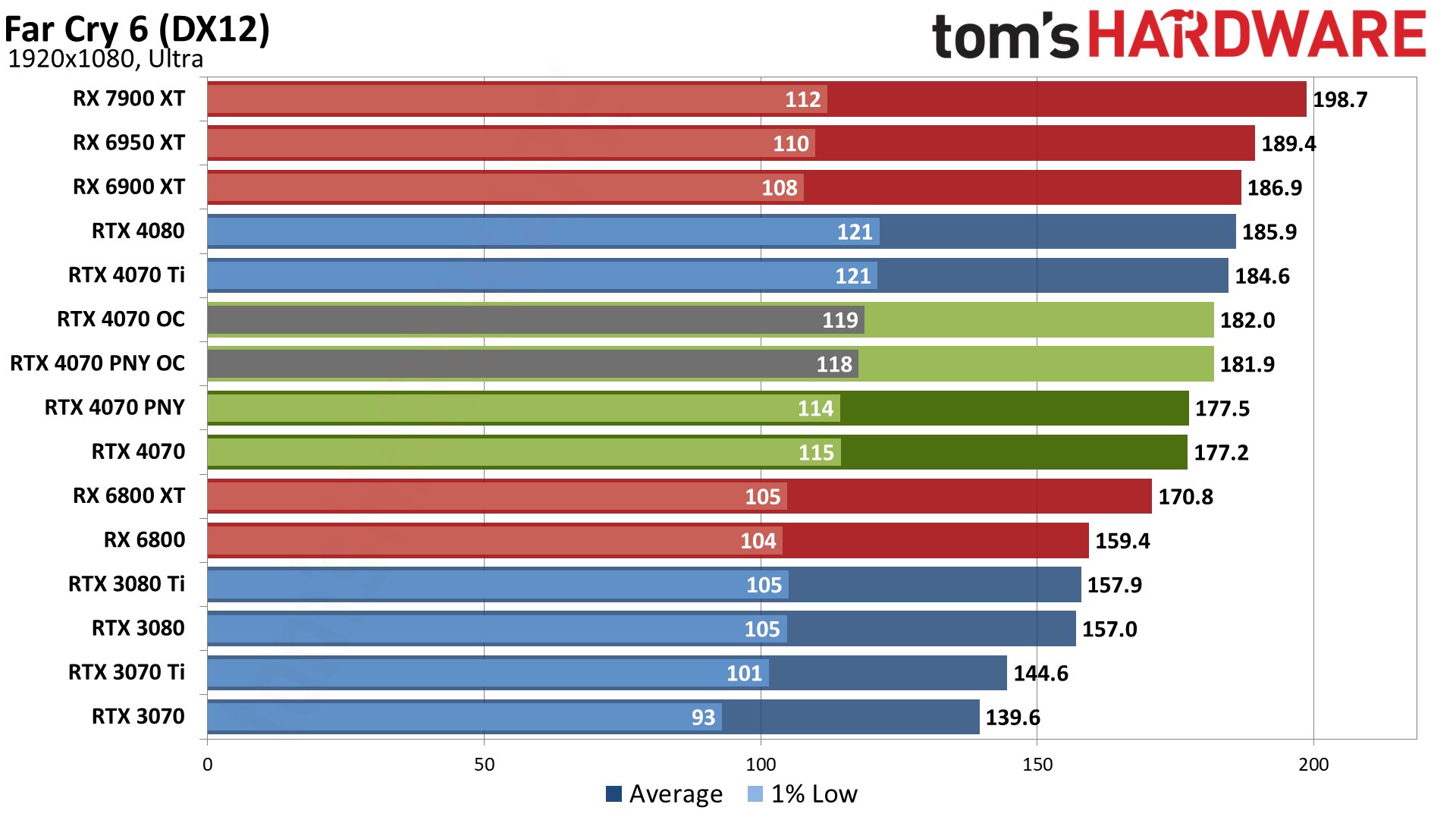

Given what we already saw at 1440p ultra, 1080p will deliver much higher performance and at times start to run into CPU bottlenecks. We're not going to go into as much detail on these charts, as we have both "medium" and "ultra" quality comparisons to cover. Most of what we said on the previous page still applies, only the margins tend to shrink with the lower resolution. Let's start with the overall view.

We have two images this time, one for 1080p ultra and one for 1080p medium. Nearly all of the cards maintain their 1440p rankings, with the only exception being the RTX 4070 gaining a couple of slots. The RTX 4070 now ends up a few percent faster than the 3080, and it also edges past the RX 6950 XT — which squeaks past the RTX 3080 as well. That's for the 1080p ultra chart.

Looking at the 1080p medium chart, you can see that the gap from the top to the bottom of the charts shrinks quite a bit at the lower settings. Where the top RTX 4080 was 77% faster than the bottom RTX 3070 at 1080p ultra, it's only 55% faster at 1080p medium. In a similar vein, the RTX 4070 also climbs one more spot and overtakes the RTX 3080 Ti at 1080p medium.

In our rasterization test suite, CPU bottlenecks become far more of a factor. At 1440p ultra, there was an 85% spread between the fastest (4080) and slowest (3070) GPU in our charts. 1080p ultra reduces that spread to 63%, and 1080p medium shows just a 42% gap. That makes sense, as without ray tracing taxing the GPUs, we're more likely to hit other performance limits.

The RTX 4070 gains a couple of slots in going from 1440p to 1080p, suggesting that perhaps the large L2 cache becomes more of a benefit at lower resolutions and settings — that's something we also saw with the previous generation on AMD's RX 6000-series cards. RTX 4070 comes out several percent ahead of the RTX 3080 and also moves ahead of the RX 6800 XT, and basically ties the RTX 3080 Ti. With overclocking, it can even close the gap with the RX 6900 XT.

At 1080p medium, the RTX 3080 Ti drops a few spots and lands behind the RX 6800 XT. Does that mean it's a slower card than AMD's 6800 XT? Well, sure, sometimes, like in low resolution testing with lower quality settings. If you play esports games, maybe that matters more. But really, these 1080p results aren't taxing and even the RTX 3070 averages over 160 fps at our medium settings.

In contrast to our rasterization testing, ray tracing pushes the GPU so hard that it's almost the only thing that matters. At 1440p, the spread from the 4080 to the RTX 3070 (we'll stick with that comparison, even though AMD now has cards at the bottom of the charts) was 113%. At 1080p ultra, the spread drops a bit to 100%, and at 1080p medium it's still 76%. So it's shrinking, but not nearly as fast as with our rasterization tests.

1080p ray tracing effectively puts the RTX 4070 on equal footing with AMD's RX 7900 XT — there's less than a 2% difference between the two GPUs. The same applies to the RTX 3080, where again there's less than a 2% gap (this time in favor of the 4070).

RTX 4070 also outpaces the RTX 3070 Ti by 26% and beats the RTX 3070 by 33%. So the margins are shrinking, as expected, but there are no major changes to discuss. If you only have a 1080p monitor, the RTX 4070 might be borderline overkill in rasterization games, while for complex ray tracing games it's basically the minimum you'd want for a consistent 60 fps without upscaling.

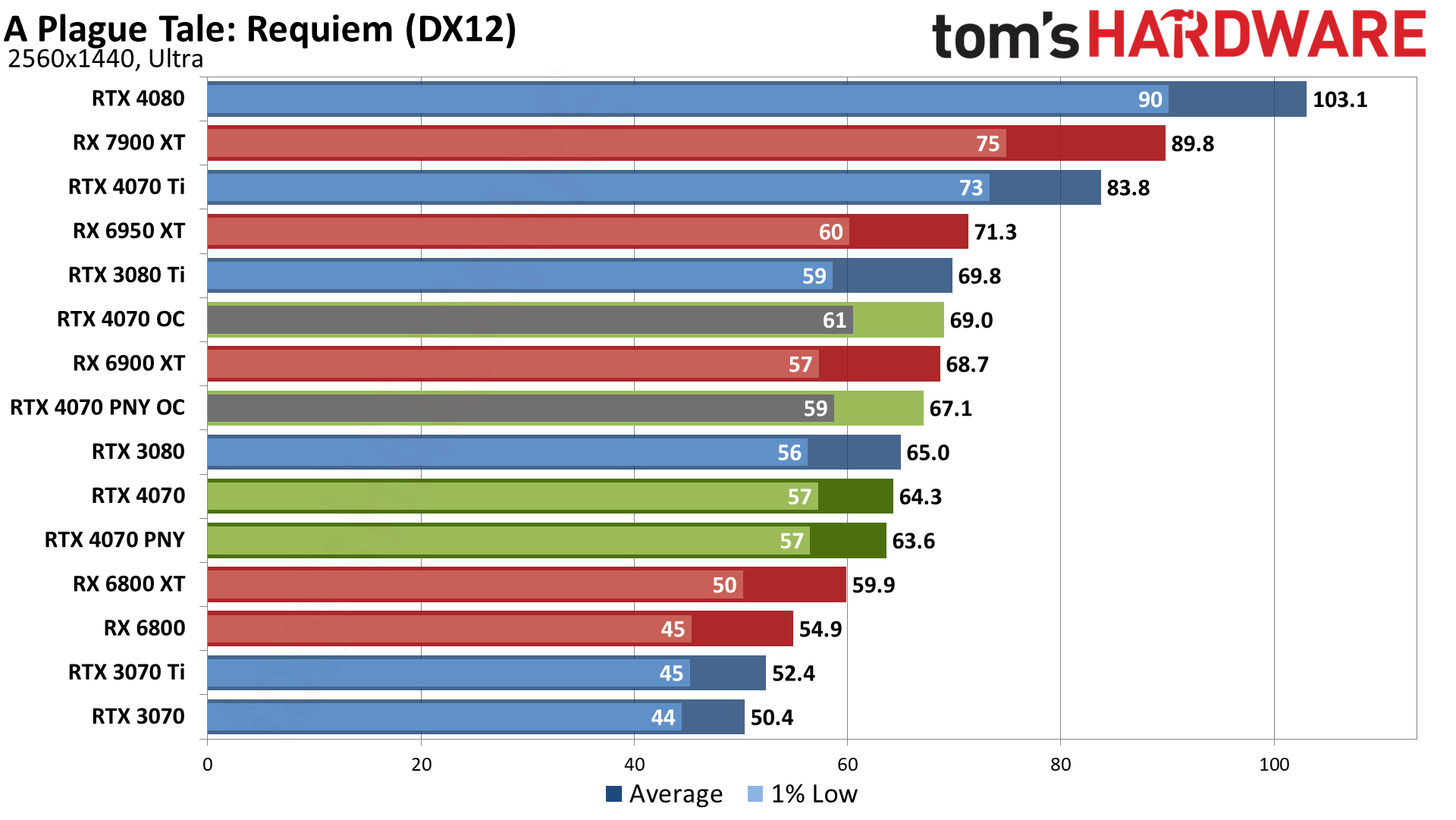

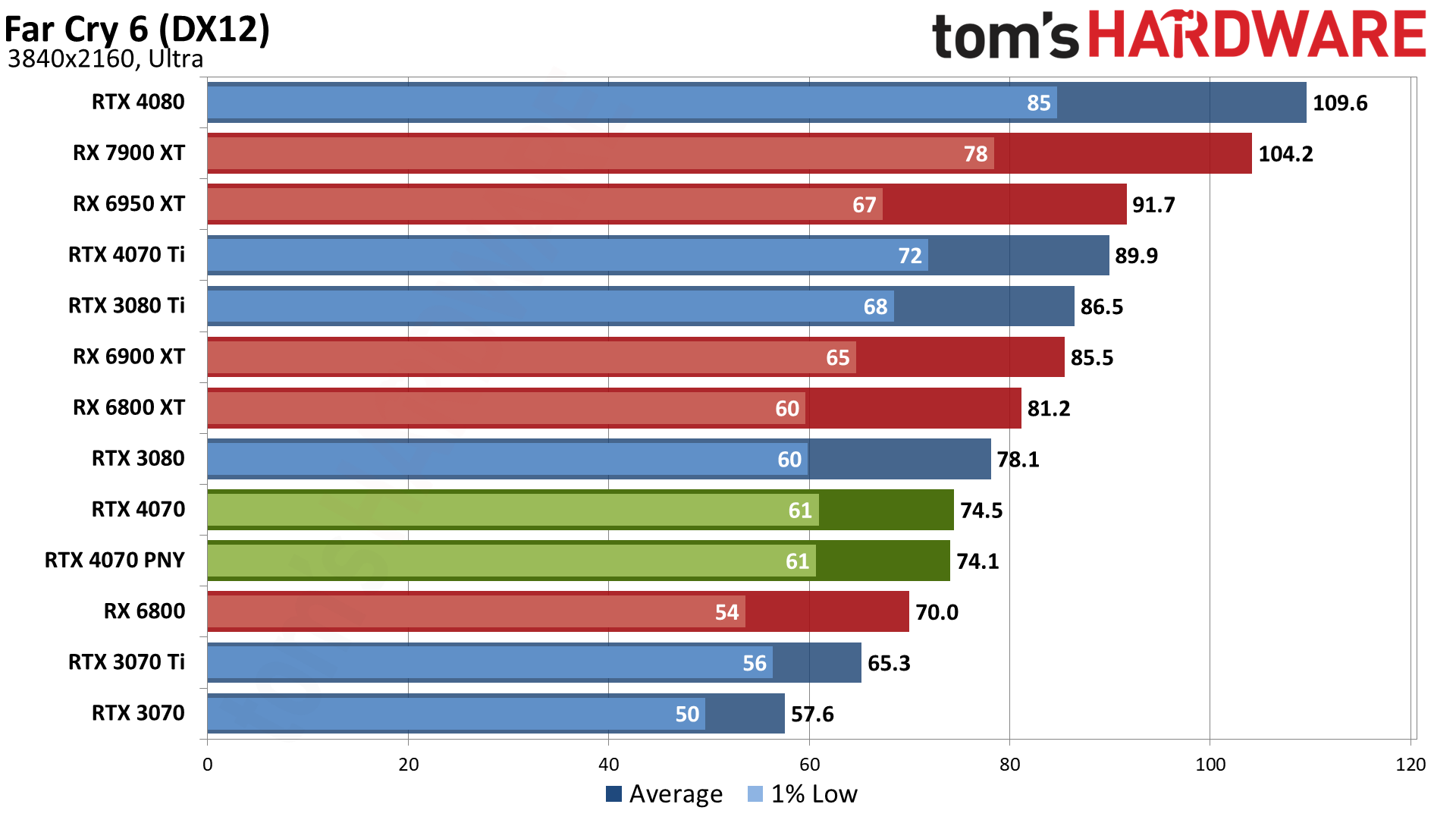

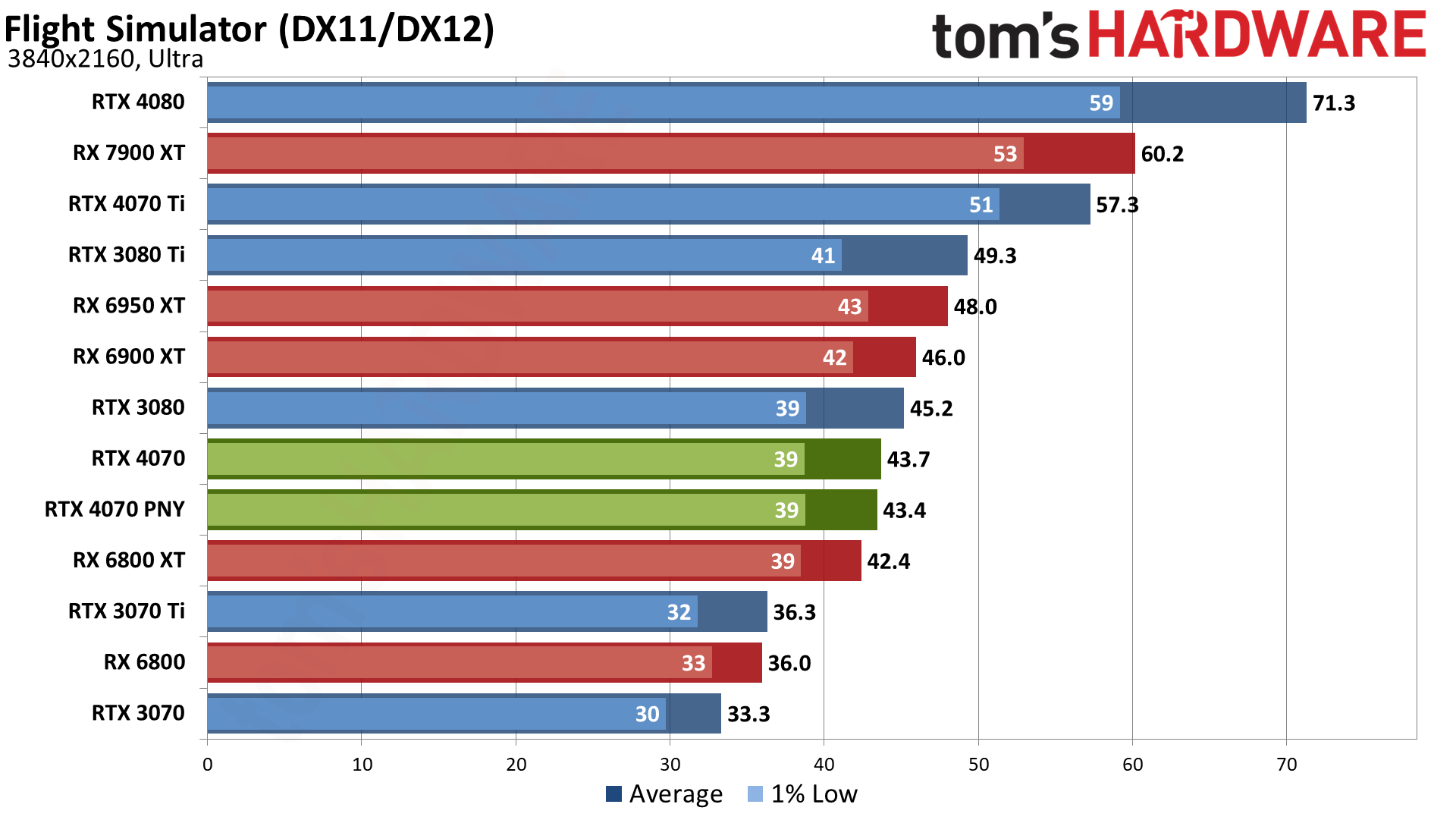

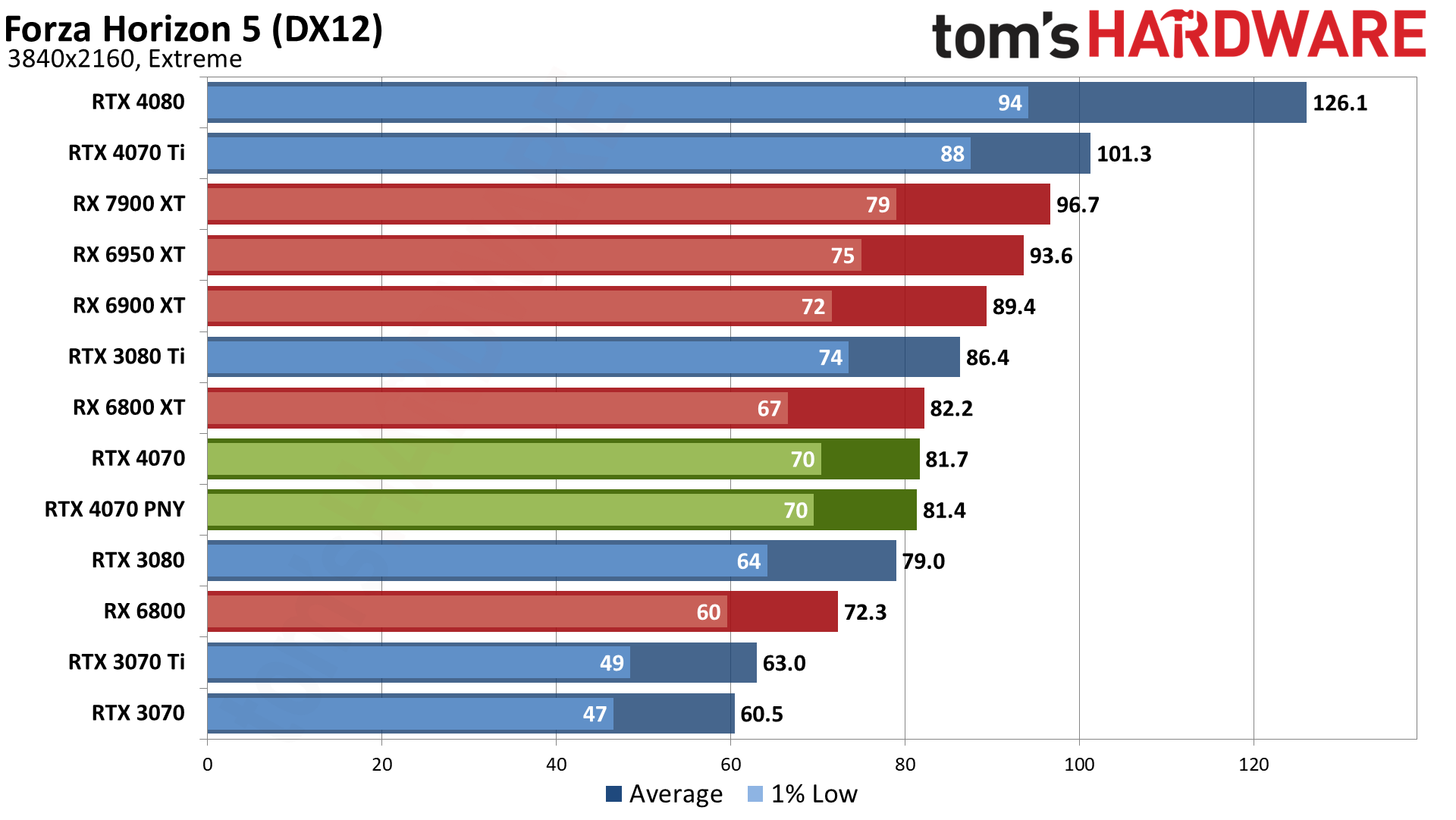

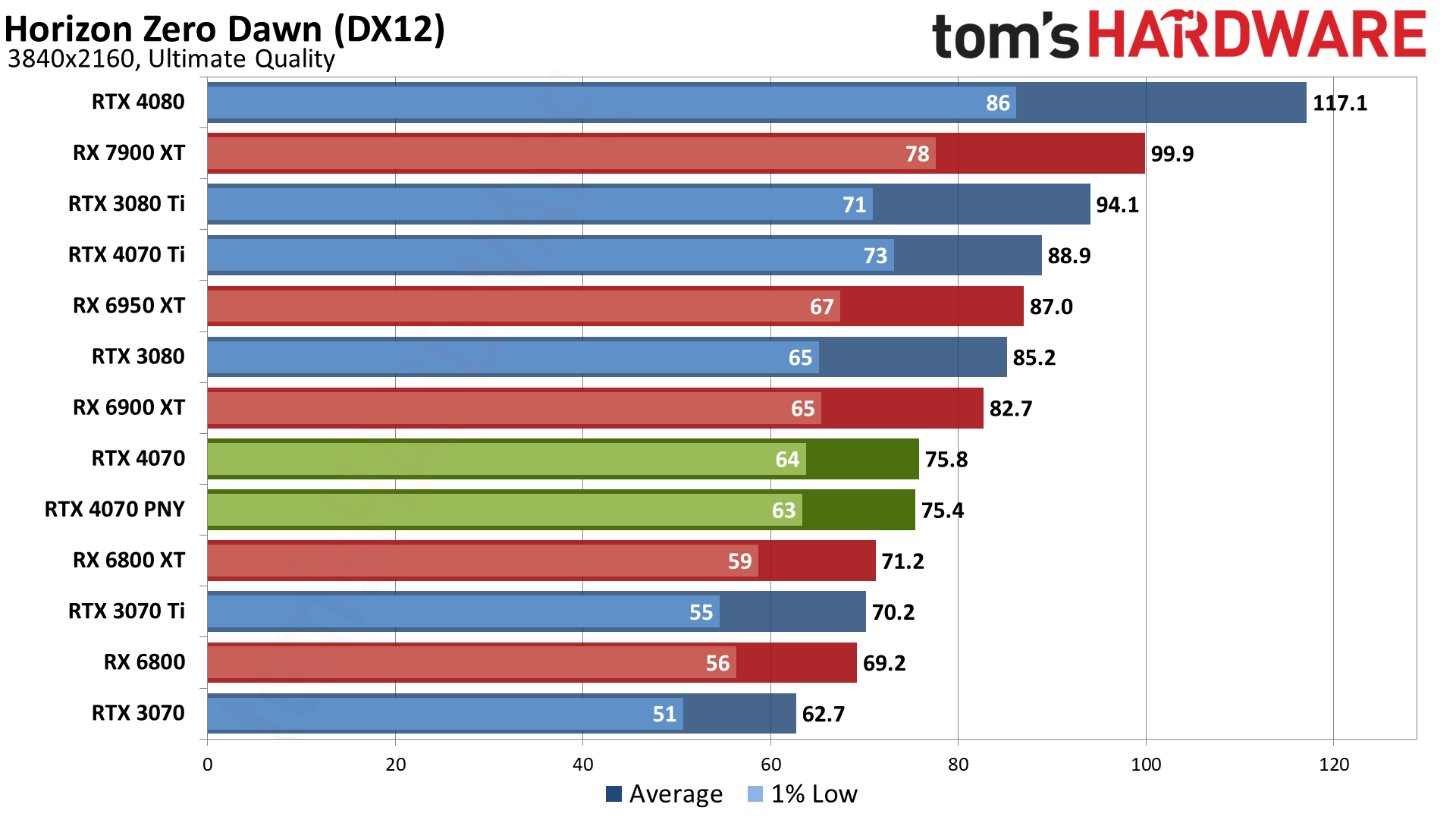

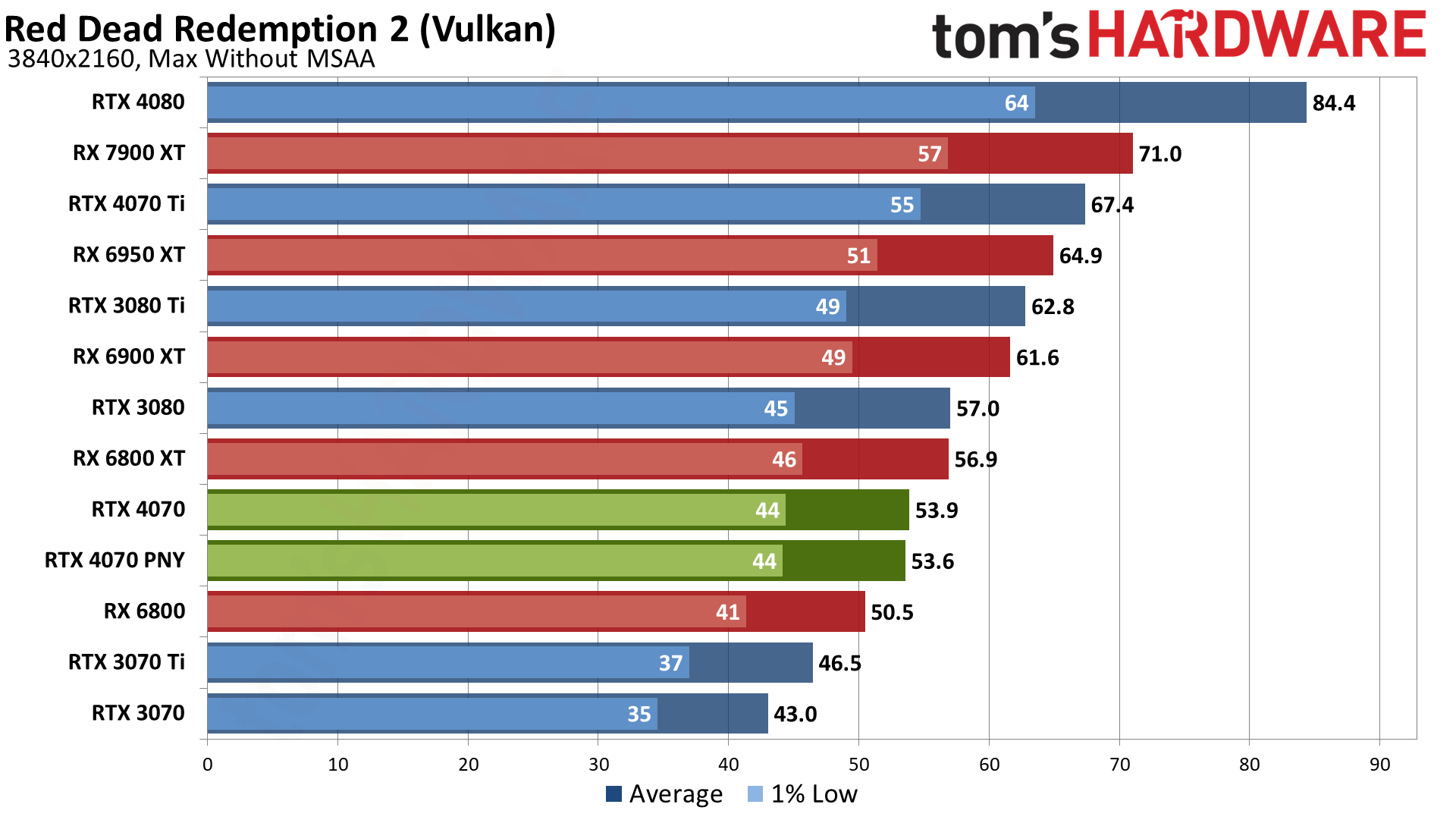

Much like the RTX 3080, the RTX 4070 can legitimately handle 4K gaming, as long as you're not hoping to max out all of the settings — especially in ray tracing games. Here's the big picture.

Perhaps because of the 192-bit interface, even with 12GB of VRAM, the RTX 4070 trails the RTX 3080 by a couple of percent. Averaging 40 fps overall, it's really a mix of games that remain very playable (most of the rasterization suite) and games that fall well short of being smooth (most of the ray tracing suite).

If you're hoping to game at 4K with the RTX 4070, you'll definitely want to consider using DLSS or some other upscaling algorithm, which we'll cover on the next page. It's the difference between sub-30 fps and potentially 60+ fps in the most demanding games.

In our rasterization suite, the RTX 4070 falls behind most of the other GPUs in our charts — it ties the RX 6800 XT and leads the RX 6800, RTX 3070 Ti, and RTX 3070, but nothing more than that.

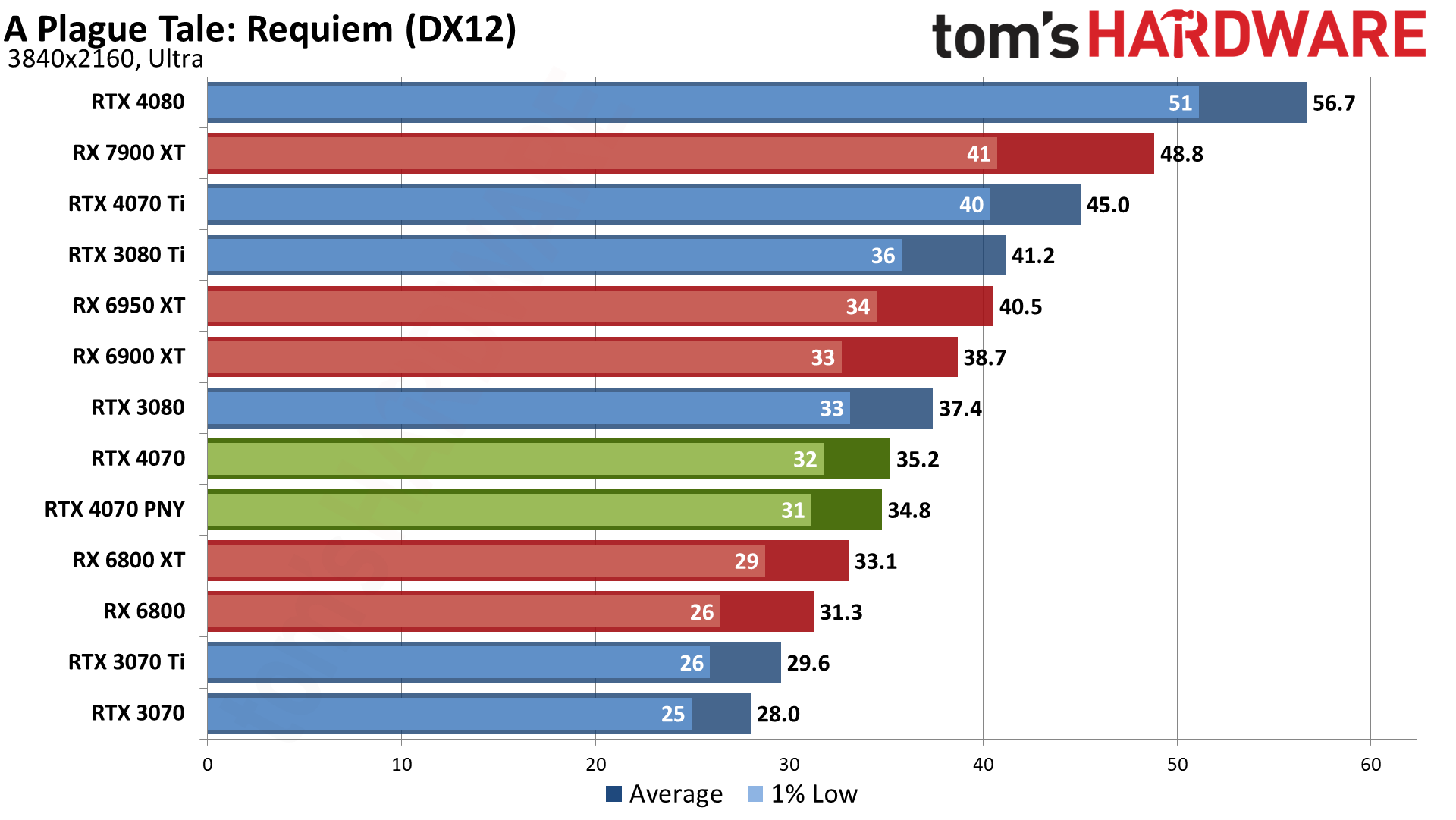

Average performance across the suite is 55 fps, with only three of the nine games clearing 60 fps. A Plague Tale: Requiem ranks as the most demanding of the rasterization games, and the 4070 only manages 35 fps.

You can check our full GPU benchmarks hierarchy for comparisons with other graphics cards, and if you do that you can also see that the RTX 4070 beats the first generation RTX 2080 Ti quite soundly. That's no surprise as the RTX 3070 basically ties the 2080 Ti at 4K. So, in a bit more than four years, Nvidia has improved performance over its best GPU by about 33% while cutting the price in half.

Maxed out settings, with ray tracing, at 4K, without upscaling? Yeah, good luck. Of the six DXR games in our suite, only Spider-Man can consistently break 30 fps. That's hardly shocking, considering even the RTX 4080, which costs twice as much, only manages 41 fps.

But then, these results are precisely why Nvidia started working on DLSS clear back before the RTX 20-series even saw the light of day! The first iteration wasn't all that great, but it paved the way for substantially better results over the past four years. So let's not belabor the poor native 4K results and instead move on to our upscaling tests.

GeForce RTX 4070: DLSS 2, DLSS 3, and FSR 2 Upscaling

We've elected to pull out all of the upscaling results and put them into separate charts, just to help with clarity of the results. We've got DLSS 2 in 12 games, DLSS 3 in five games, and FSR 2 in five games. None of the games are FSR 2 exclusive, meaning DLSS 2 is always an option and tends to be better on Nvidia's RTX cards. FSR 2 is more for AMD GPU owners, as well as Arc GPU users (since XeSS support is even less likely than FSR 2) and GTX owners.

DLSS 3 on the other hand isn't nearly as easy to quantify as DLSS 2 and FSR 2. Upscaling provides a boost to performance and in turn results in lower latencies. Image fidelity might drop a bit, depending on the setting, but from the performance side it's usually a clear win. Frame Generation on the other hand introduces latency, and the best thing about DLSS 3 support is that it requires games to also support Nvidia Reflex (which only helps if you own an Nvidia GPU, but whatever), and it's also "backward compatible" with DLSS 2 upscaling.

Don't get me wrong. If I'm playing a game for fun, like I was with Hogwarts Legacy, I'll turn on Frame Generation. It does make games look a bit smoother, and for non-twitch gamers like myself, that's good. But Nvidia's charts comparing DLSS 3 performance against DLSS 2 (without Reflex), or native resolution... they're at best overstating the performance gains. 40% higher performance thanks to Frame Generation never feels like 40% higher "real" framerates.

But let's get to the results. We've tested with "Quality" mode in all cases, at least where we're able to specify the upscaling mode. Minecraft is the sole exception, and it uses Balanced upscaling (3X) for 1440p and Performance mode (4X) for 4K, with no way to change that. But then Minecraft is also a bit of a best-case scenario for doing higher upscaling factors, since it's already blocky to begin with.
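
To put those upscaling factors in context, here's a small Python sketch that converts a pixel-count factor into an approximate internal render resolution. The 3X and 4X factors come from the Minecraft settings above. The 2.25X figure for Quality mode is our assumption, based on the commonly cited two-thirds scaling per axis, and the exact resolutions a given game uses may differ by a few pixels.

```python
import math


def render_resolution(out_w: int, out_h: int, pixel_factor: float) -> tuple[int, int]:
    """Internal resolution when the output has `pixel_factor` times as many pixels."""
    axis_scale = 1 / math.sqrt(pixel_factor)  # pixel count scales with the square
    return round(out_w * axis_scale), round(out_h * axis_scale)


# (label, output width, output height, pixel-count factor)
cases = [
    ("1440p Quality (2.25X, assumed)", 2560, 1440, 2.25),
    ("1440p Balanced (3X)", 2560, 1440, 3.0),
    ("4K Quality (2.25X, assumed)", 3840, 2160, 2.25),
    ("4K Performance (4X)", 3840, 2160, 4.0),
]

for label, width, height, factor in cases:
    w, h = render_resolution(width, height, factor)
    print(f"{label}: renders at about {w}x{h}")
```

So 4K with Performance mode upscaling means the GPU is really rendering at 1080p, which goes a long way toward explaining the performance gains.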

Here are the results, one game at a time (with 1440p and 4K results in each game). The RTX 4070 Ti is included as a point of reference for how upscaling can often more than make up for the difference between a GPU and the next higher tier of GPU (plus my charts need at least three results or they get screwy).

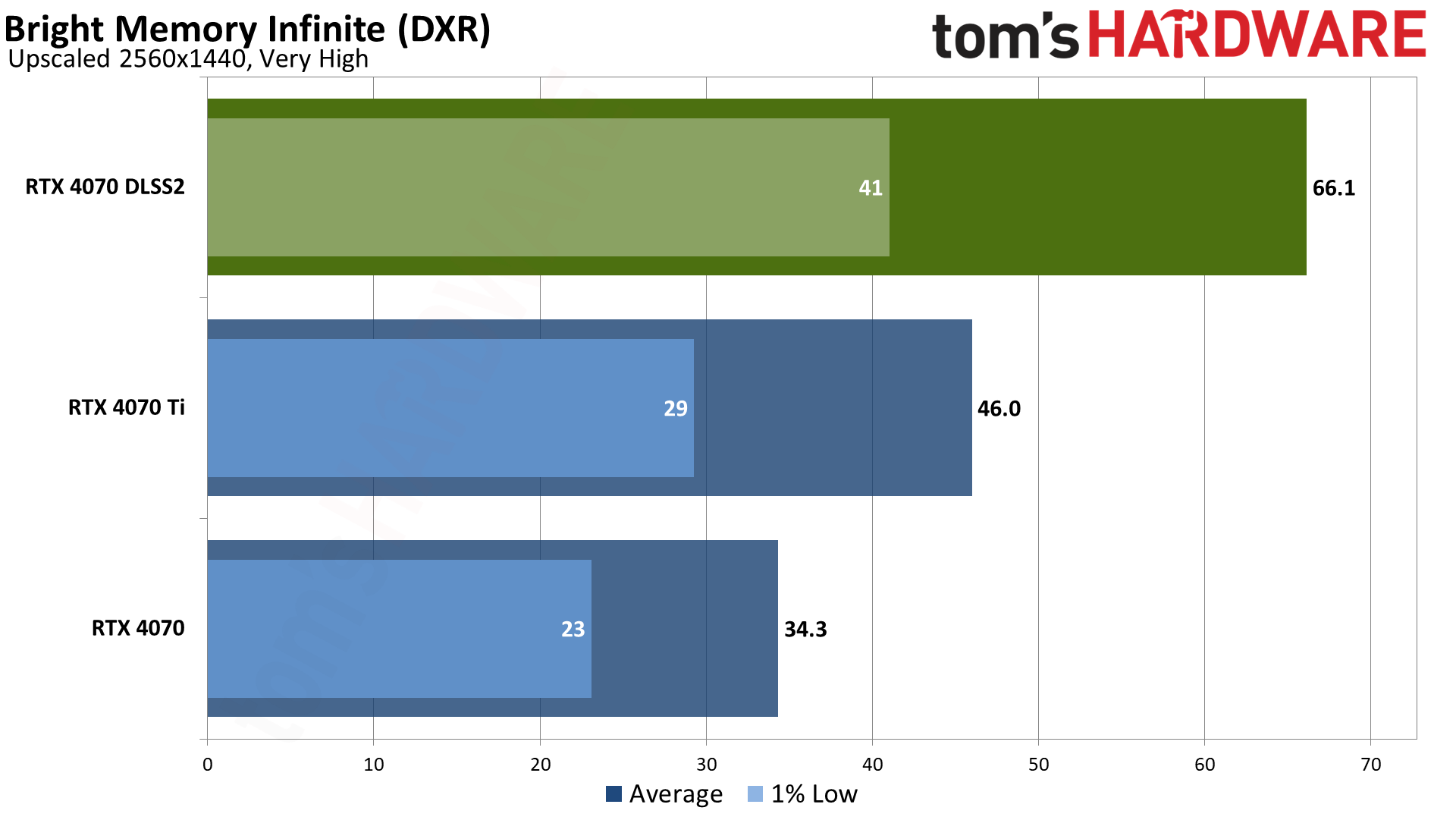

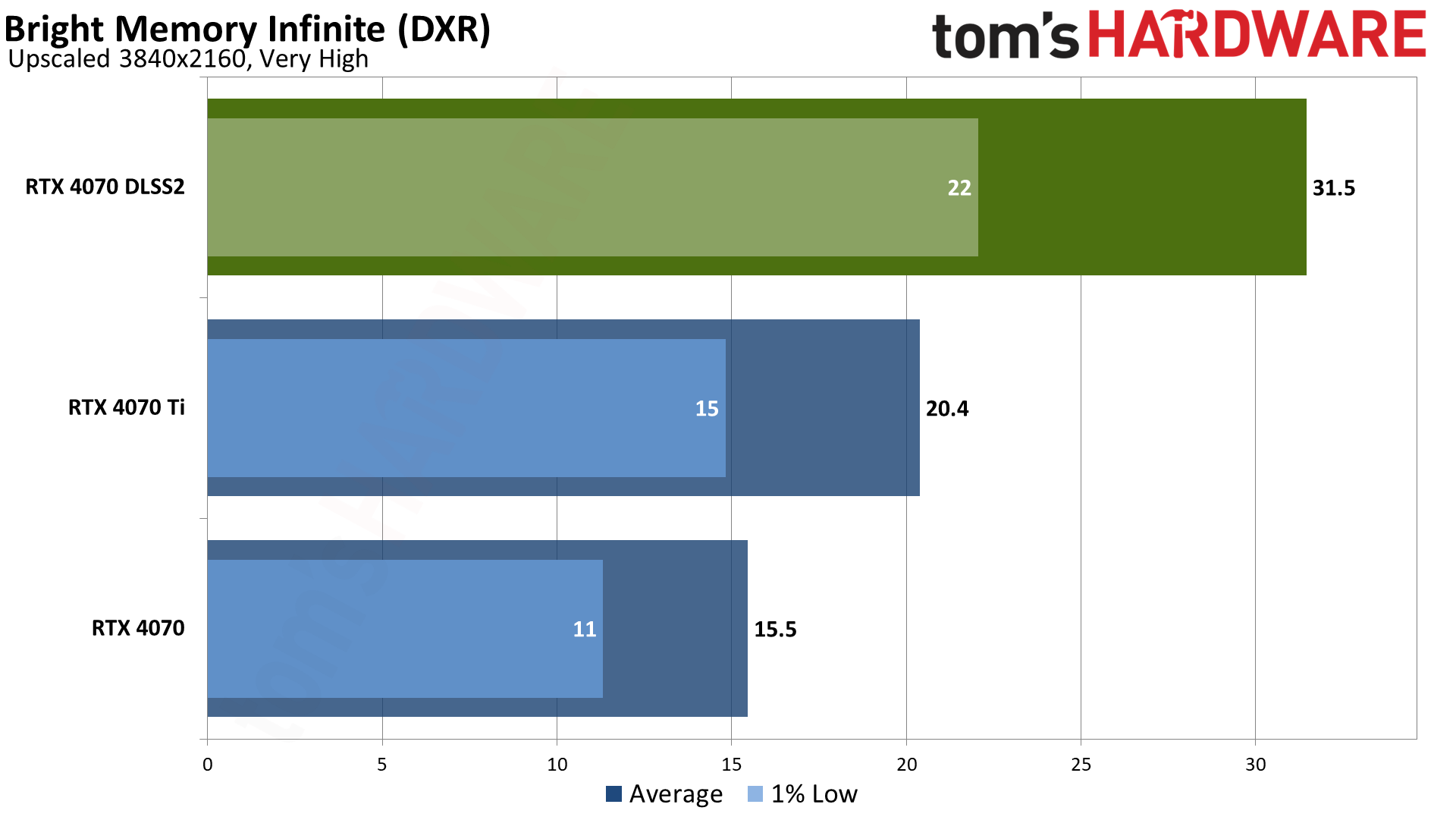

We're taking these games in alphabetical order, and the Bright Memory Infinite Benchmark comes first. This is sort of a synthetic benchmark, in that the actual game is far less graphically demanding than the standalone benchmark. However, the benchmark also looks way better than the game and represents a better look at future games that use lots of ray tracing effects.

Only DLSS 2 is supported, but the gains are quite large. Again, that goes with the territory of being a very demanding ray tracing game: Rendering even half as many pixels can result in double the performance after upscaling. And that's basically what we get, with the RTX 4070 running 93% faster at 1440p and 103% faster at 4K.

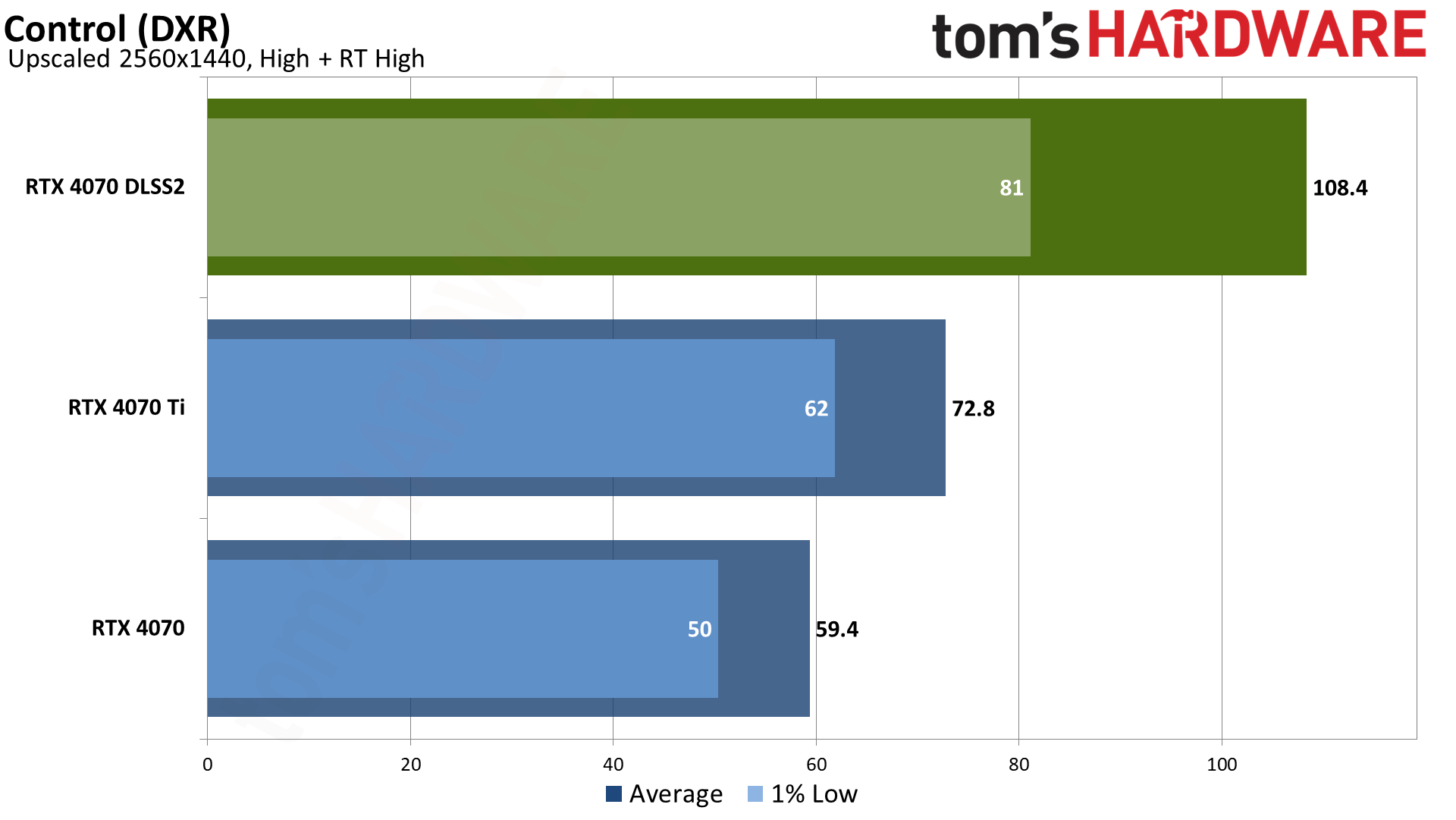

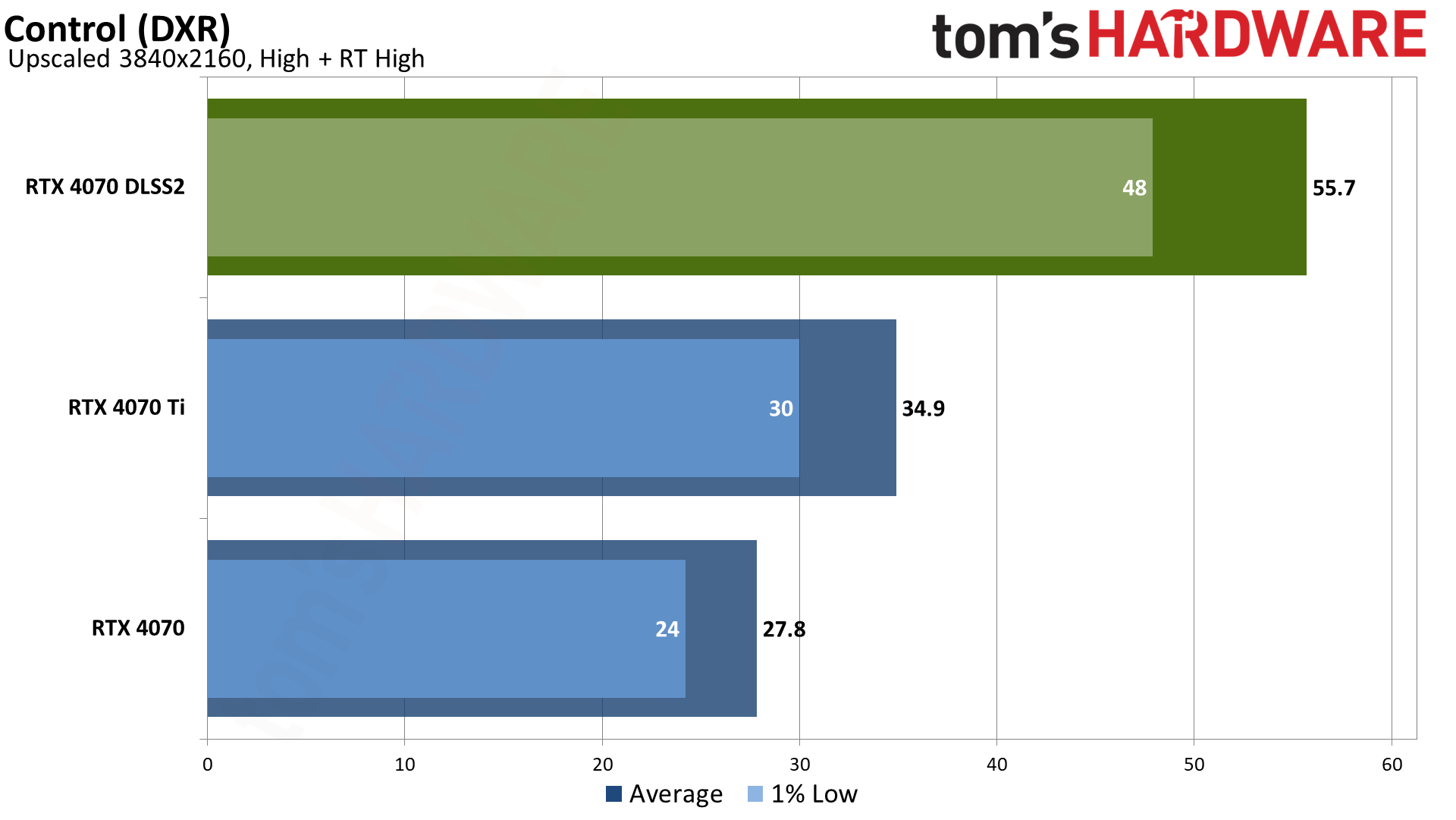

Control Ultimate Edition is perhaps the oldest game in our test suite. It's also the first game (or one of the first couple) to get DLSS 2 support, though here the game lists exact resolutions for upscaling rather than the typical Quality/Balanced/Performance presets we're now used to seeing. The game also supports ray traced reflections, transparent reflections, and diffuse lighting, and it was one of the first DXR games to use multiple RT effects.

Even now, over three and a half years after its initial release, Control remains fairly demanding. The RTX 4070 gets just under 60 fps at 1440p, and that improves by 82% to 108 FPS with DLSS Quality upscaling. At 4K, the game goes from a borderline unplayable 28 fps to 56 fps, a 100% improvement.

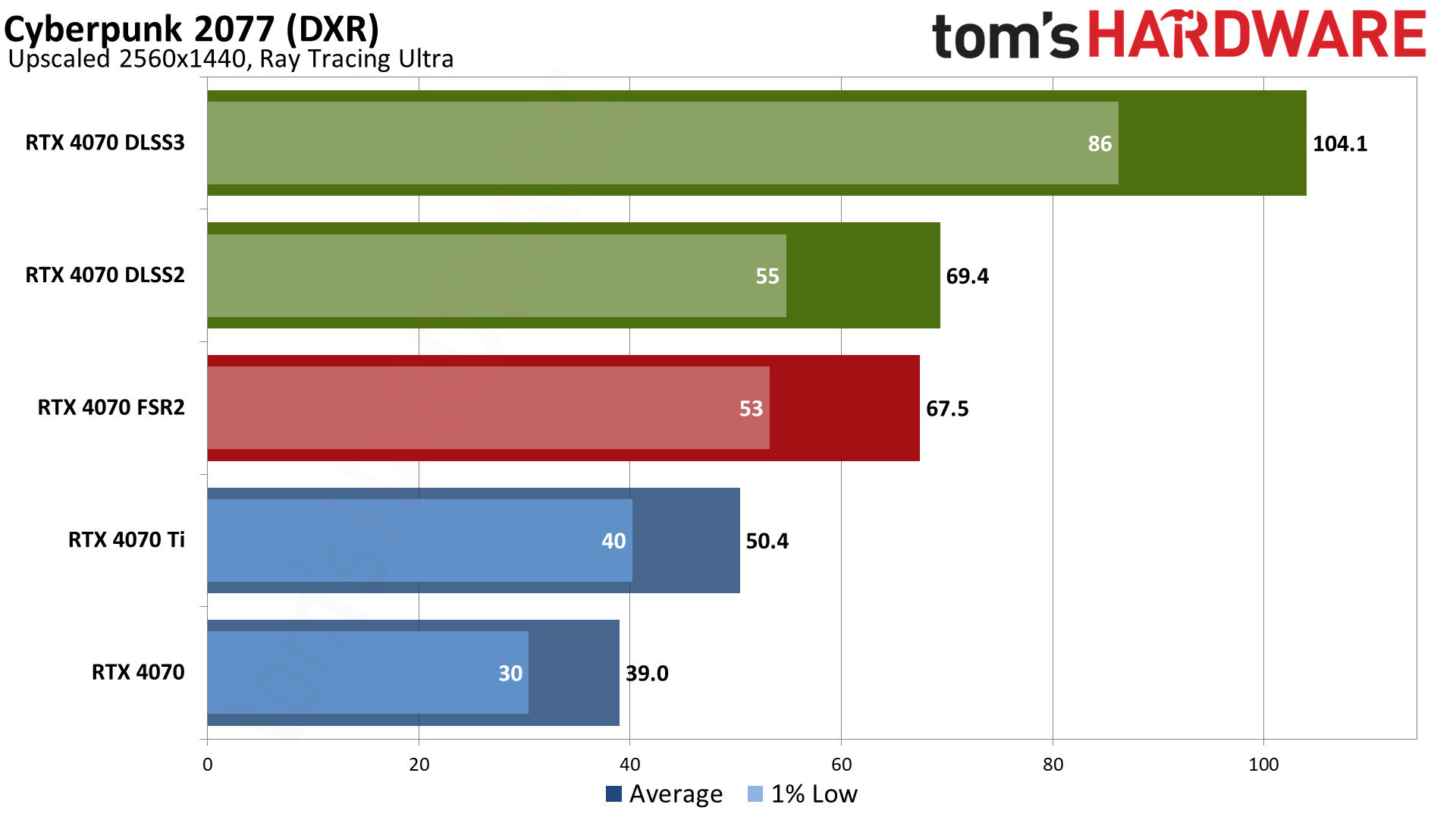

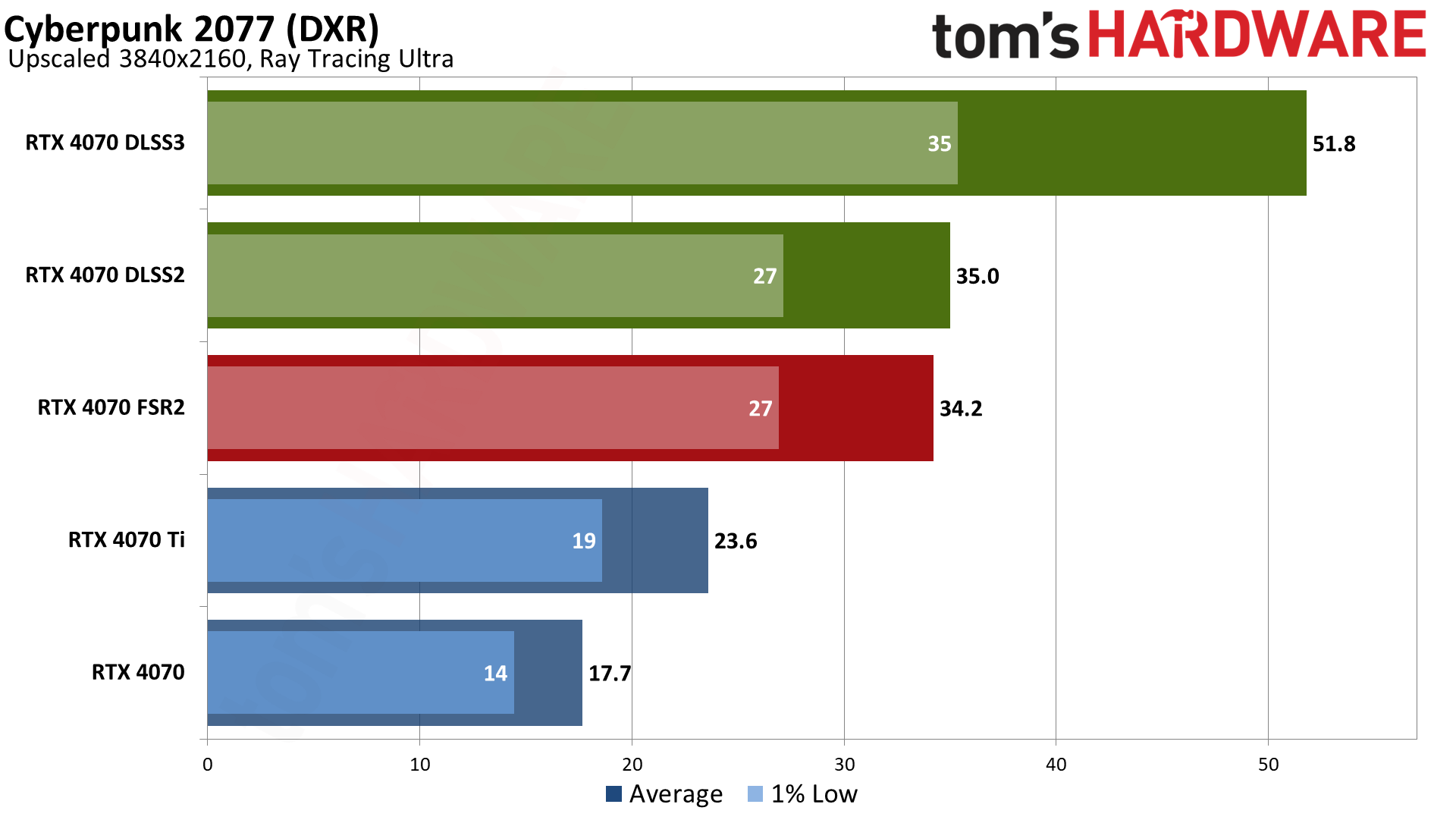

Cyberpunk 2077 doesn’t need an introduction, but we do need to mention that testing was conducted before the RT Overdrive mode came out. Which is probably for the best, as we suspect performance on most of these GPUs would be horribly slow in that mode, even with upscaling. Anyway, the game now supports DLSS 2/3 as well as FSR 2.1 and XeSS. We’re skipping XeSS testing, since FSR 2 generally looks superior on non-Intel Arc cards.

Performance at 1440p with the RT Ultra preset is at least playable at 39 fps, but it’s certainly not a great experience. Both DLSS 2 and FSR 2.1 provide a similar uplift of around 75–80 percent, with DLSS providing slightly better performance. That gets the game into the smooth zone, and then DLSS 3 Frame Generation boosts performance an additional 50%, giving a net 167% increase in framerate.
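The net figure comes from multiplying the gains rather than adding them. Here's a minimal sketch of that arithmetic, assuming a 78% upscaling uplift (a value we picked from inside the 75–80 percent range quoted above); `compound_gain` is just an illustrative helper.

```python
def compound_gain(*gains_pct):
    """Combine successive percentage gains multiplicatively."""
    total = 1.0
    for gain in gains_pct:
        total *= 1 + gain / 100
    return (total - 1) * 100


native_fps = 39  # native 1440p RT Ultra result quoted above

# Assumed ~78% from upscaling, then ~50% more from Frame Generation.
net = compound_gain(78, 50)
print(f"net gain over native: {net:.0f}%")
print(f"estimated framerate: {native_fps * (1 + net / 100):.0f} fps")
```

Simply adding 78% and 50% would give 128%, which understates the combined result because the Frame Generation gain applies to the already upscaled framerate.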

At 4K, the game definitely isn’t playable on the 4070, averaging just 18 fps. DLSS Quality upscaling improves that to a borderline experience with 35 fps, nearly double the performance but still not really smooth. Frame Generation meanwhile yields an additional 48% increase in FPS… except the game still feels like it’s running at 30–40 FPS, even if more frames are coming to the monitor. That’s basically the issue with Frame Generation, and we feel like you generally need a game that already gets over 40 fps if you don’t want it to feel a bit sluggish.

Conveniently (for us), Cyberpunk 2077 also reports latency to FrameView, which gives us one more data point to mention. We tested with Reflex enabled, because that’s generally the best experience you’ll get with an Nvidia GPU. At native 1440p, Cyberpunk had 50ms latency. Turning on DLSS 2 upscaling dropped the latency to 32ms, while DLSS 3 bumped it back up to 46ms. That’s a net gain with Frame Generation compared to native rendering, but DLSS without Frame Generation can feel a bit more responsive. 4K is a different story, as native latency was 114ms, but DLSS upscaling dropped that to 56ms. Frame Generation pushed the latency back up to 81ms, which wasn’t terrible but was still noticeable if you’re sensitive to such things.
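Laying the Cyberpunk 2077 latency numbers side by side makes the trade-off easier to see. This sketch just tabulates the figures quoted above; the `latency_deltas` helper is our own naming, not anything from FrameView.

```python
# Latency figures (ms) quoted above for Cyberpunk 2077 with Reflex enabled.
latency_ms = {
    "1440p": {"native": 50, "DLSS 2": 32, "DLSS 3": 46},
    "4K": {"native": 114, "DLSS 2": 56, "DLSS 3": 81},
}


def latency_deltas(modes):
    """Return each mode's change versus native and versus DLSS 2, in ms."""
    return {
        mode: (ms - modes["native"], ms - modes["DLSS 2"])
        for mode, ms in modes.items()
        if mode != "native"
    }


for res, modes in latency_ms.items():
    for mode, (vs_native, vs_upscaled) in latency_deltas(modes).items():
        print(f"{res} {mode}: {vs_native:+d} ms vs native, "
              f"{vs_upscaled:+d} ms vs DLSS 2")
```

In both cases DLSS 3 lands below native latency but above upscaling alone, which matches how the game feels in practice.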

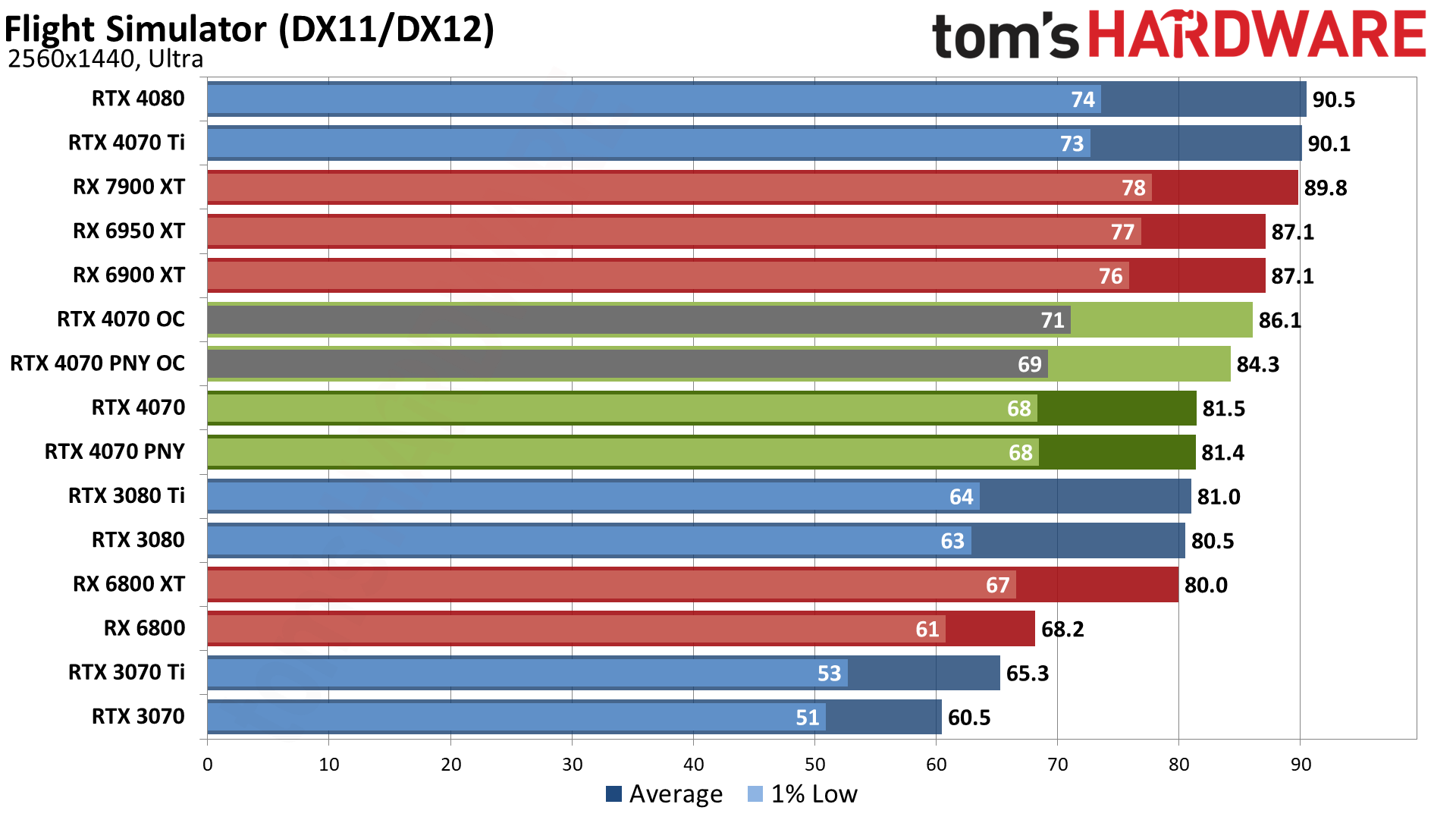

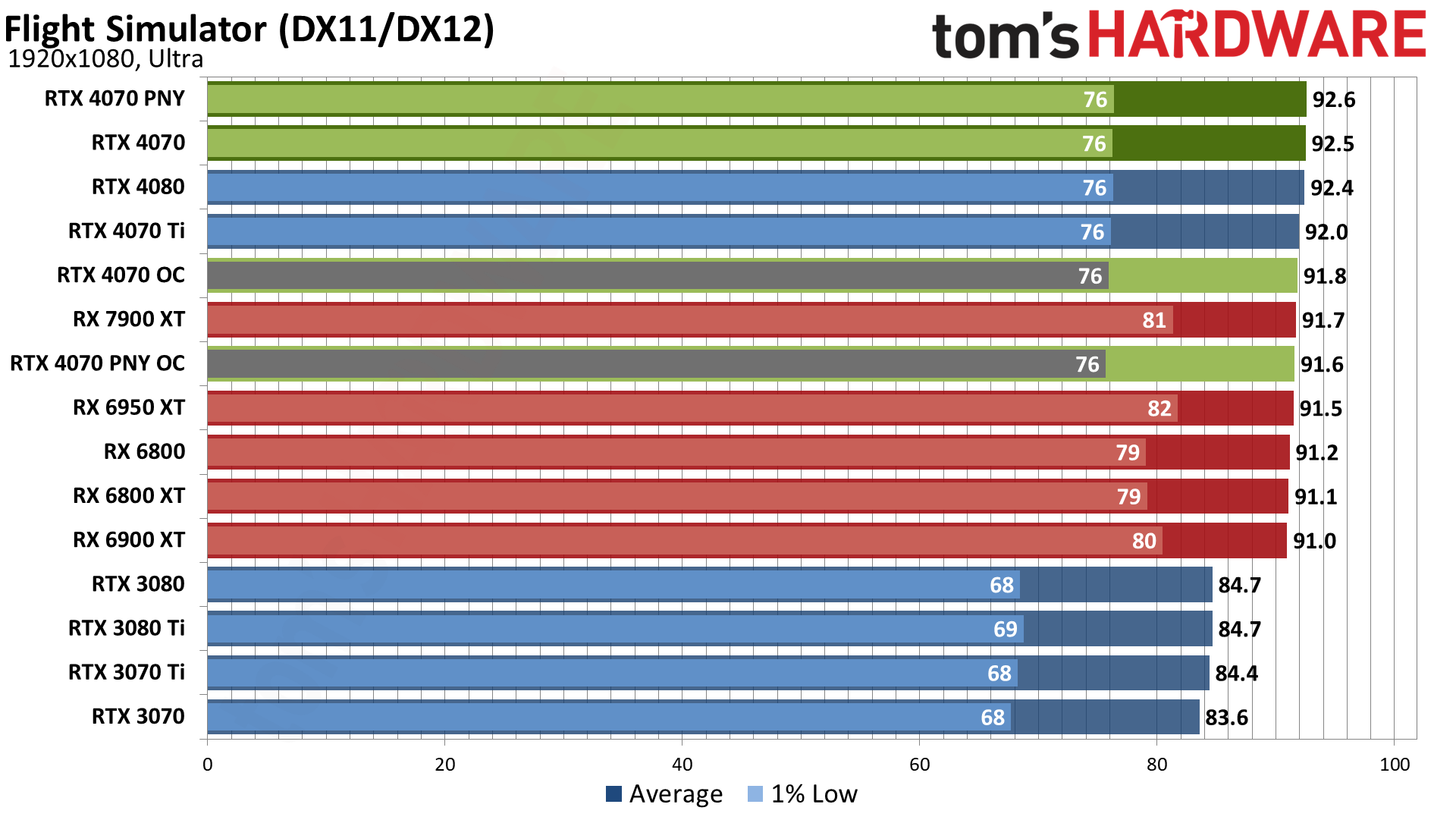

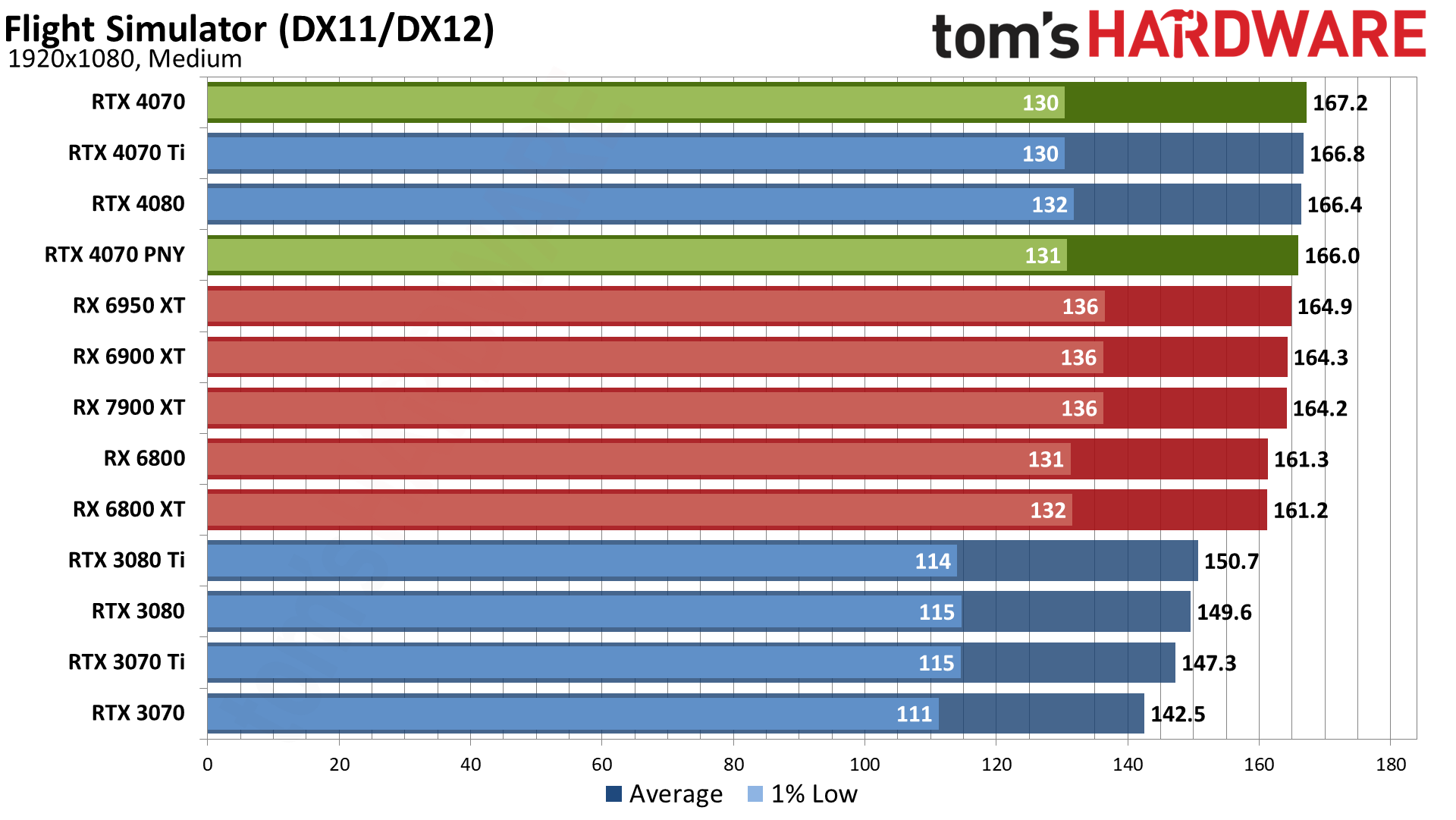

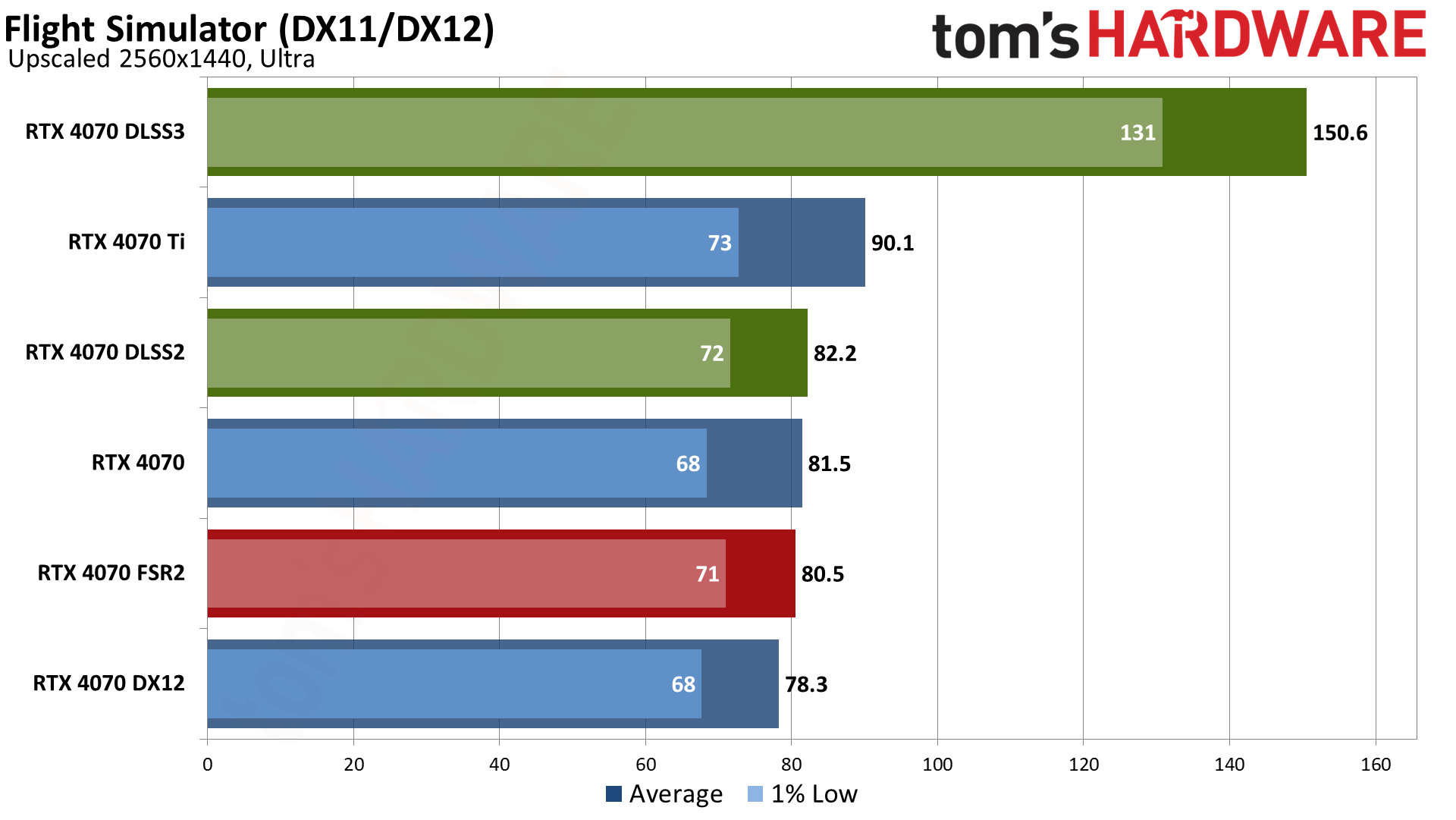

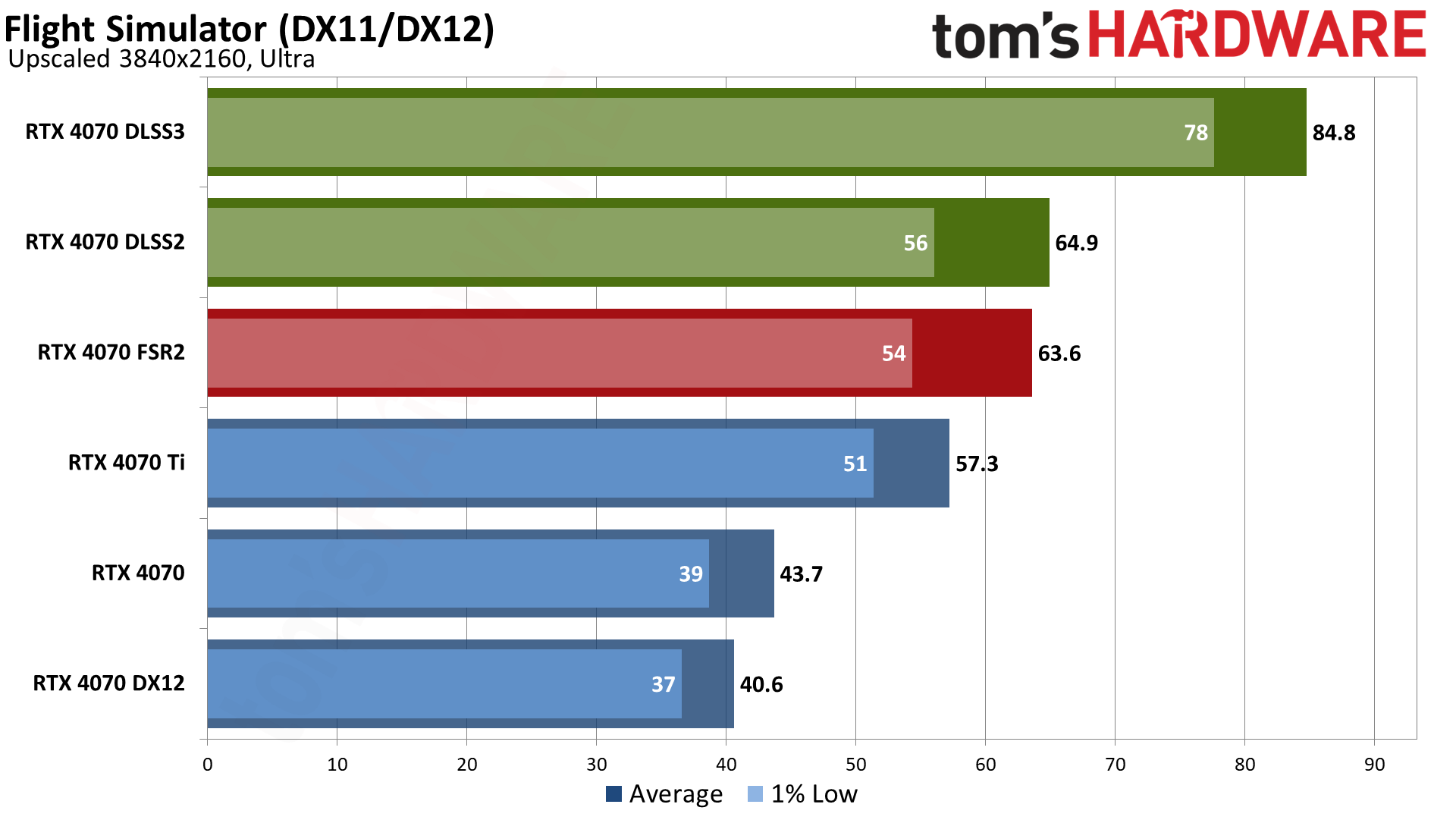

Flight Simulator is another game with DLSS 3 and FSR 2 support (this time only FSR 2.0, if you’re wondering). The problem is that the game is also almost completely CPU limited on a fast GPU like the RTX 4070, at least until you’re running at 4K. Also, you can’t use Frame Generation unless the game is running in DX12 mode, which ends up being a bit slower than running in DX11.

At 1440p, neither DLSS nor FSR upscaling does much for performance — FSR actually dropped the framerate slightly, but we’re basically looking at margin of error differences. This is where Nvidia likes to tout the advantages of Frame Generation, and it does indeed provide a major increase in measured framerate: 151 fps compared to 82 fps, an 83% improvement. But despite the large gains, I have to point out that Flight Simulator doesn’t really need over 100 fps. It’s nice to see such a result, but the game feels just as good (to me) at 80 fps.

The gains from Frame Generation at 4K were far less pronounced. DLSS 2 upscaling increased performance by 49%, and DLSS 3 gave an additional 31% improvement. The total gains with DLSS 3 are still impressive, nearly double the native performance, but the feel of the game wasn’t really different. Someone looking over your shoulder while you play with DLSS 3 enabled would probably say the game looks smoother thanks to the extra frames, but the player experience feels about the same.

As for latency, in DX12 mode at 1440p, we measured 39ms. DLSS 2 and FSR 2 dropped that to 31ms, while DLSS 3 (Frame Generation) increased latency to 47ms. Moving up to 4K, native rendering had 58ms latency, and upscaling (DLSS and FSR) reduced that to 43–44ms. DLSS 3 meanwhile still came in at 70ms, an extra few frames delay compared to just upscaling.

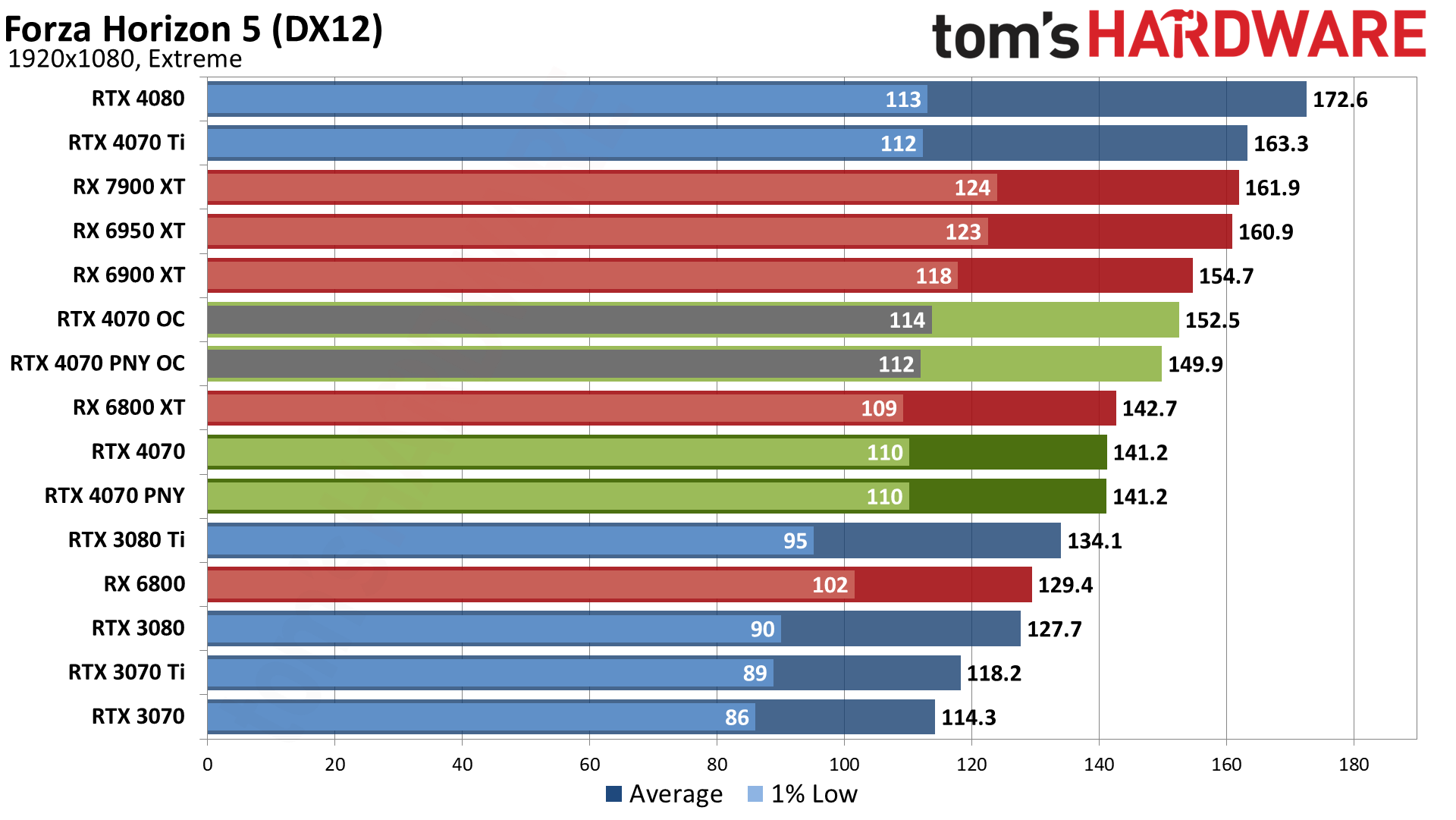

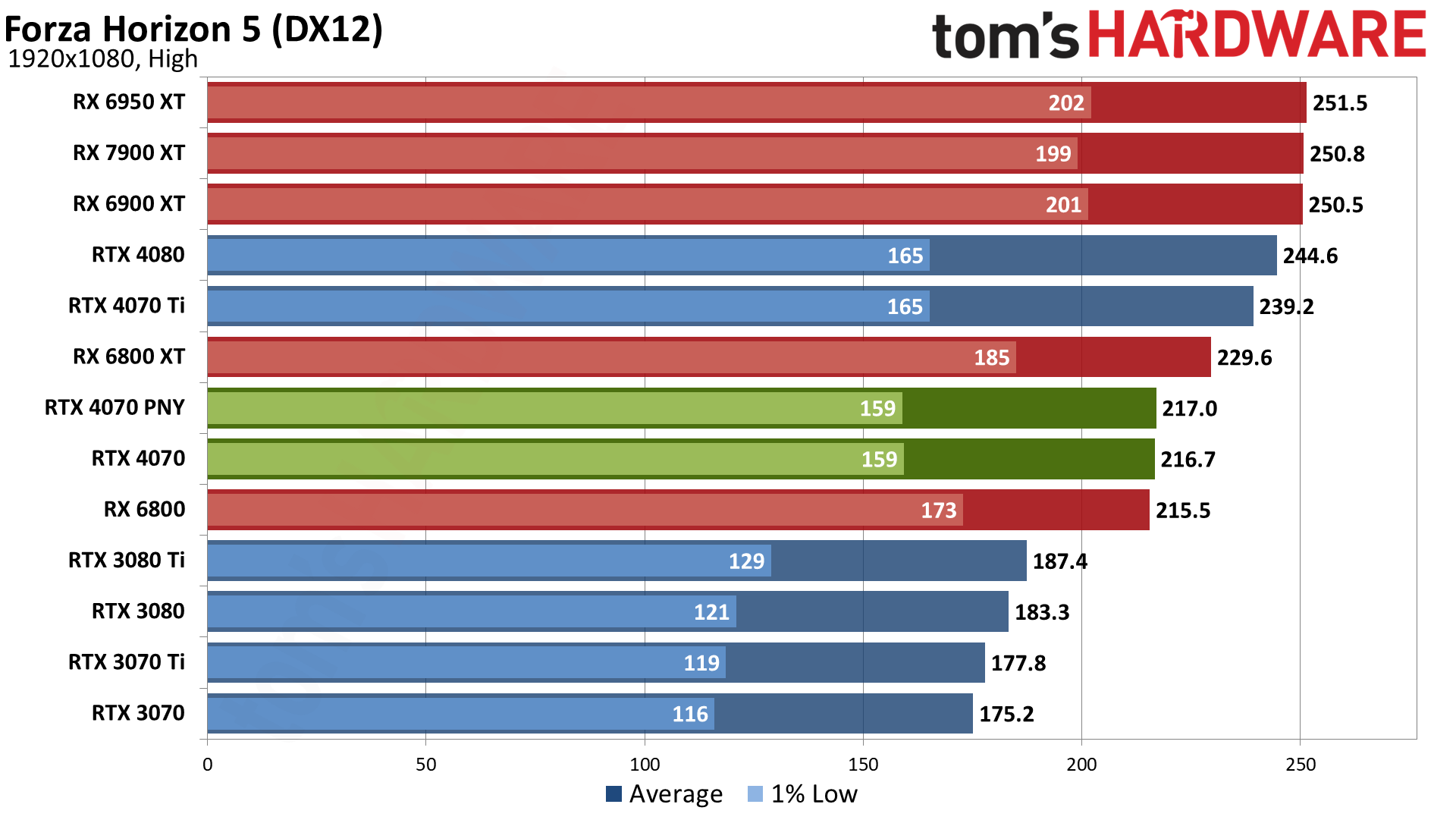

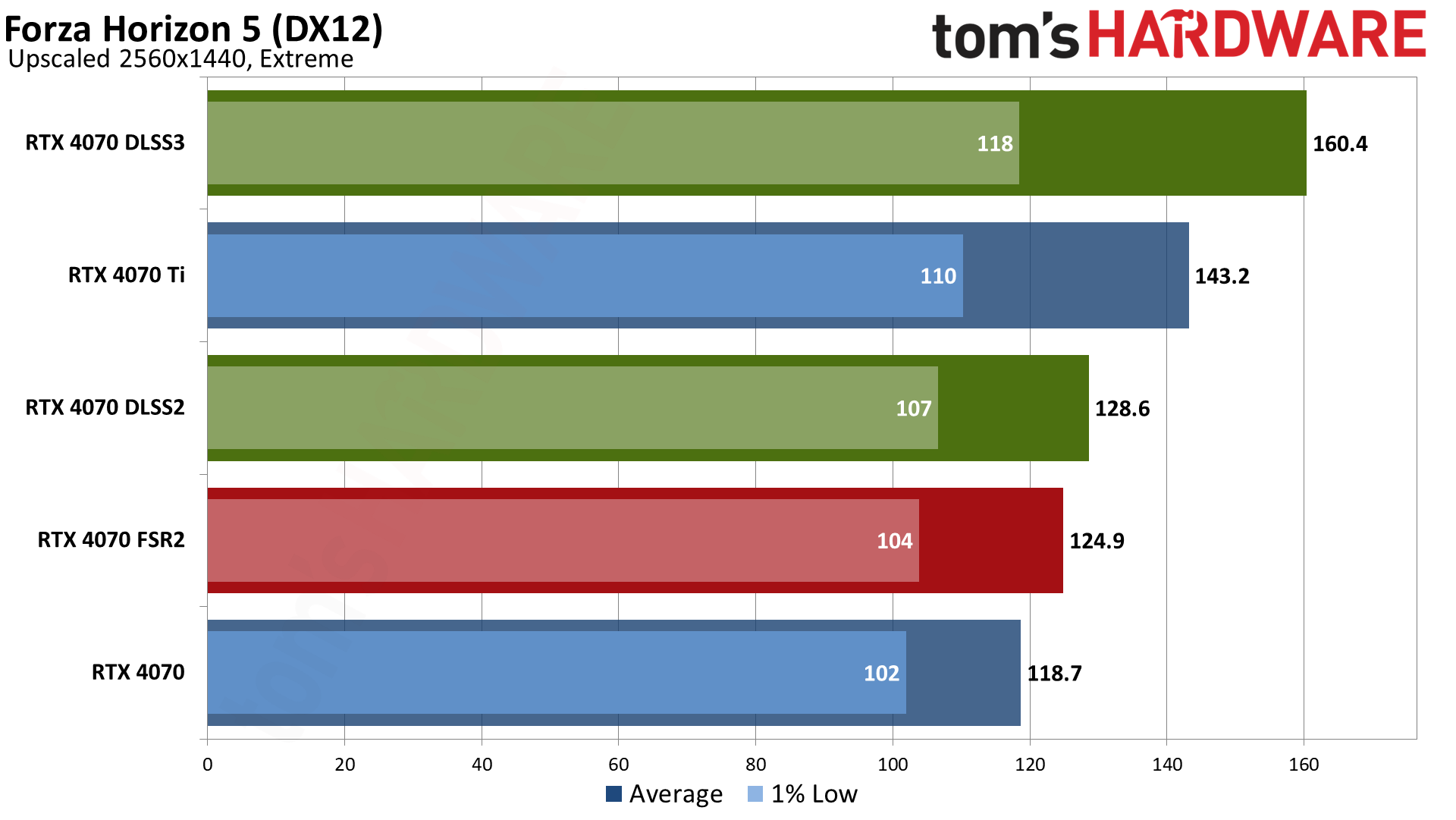

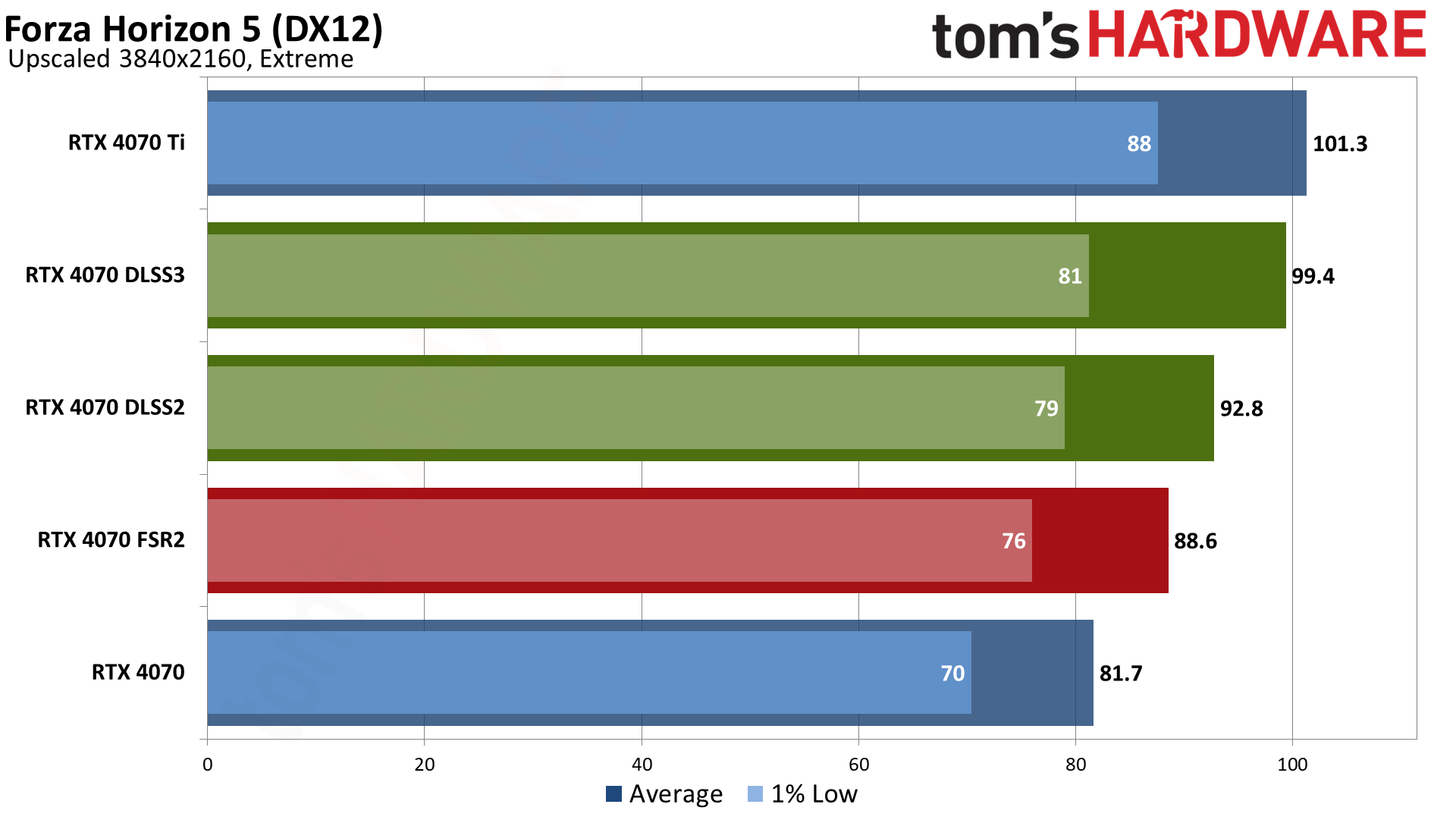

Forza Horizon 5 got a patch in late 2022 that added DLSS 2 and FSR 2.2 support, and another patch last month added DLSS 3 Frame Generation. The game can definitely run into CPU limits, but the gains with DLSS 3 were a bit muted compared to some of the other results.

At 1440p, native performance was 119 fps with 30ms latency. DLSS upscaling only increased performance by 8%, and FSR was even less noticeable with a mere 5% improvement, while latency dropped to 28–29ms. DLSS 3 gave a 25% boost compared to just upscaling, with a slight increase in latency to 34ms. (Nvidia reported a slightly higher 176 fps with DLSS 3 in their testing, but we weren’t able to replicate that figure.)

The results at 4K were possibly even more perplexing. We expected a more sizeable boost to performance, but it was still only 14% from DLSS upscaling and 8% from FSR 2.2. Latency went from 44ms native to 39ms with DLSS and 41ms with FSR. DLSS 3 this time only provided 7% higher performance than DLSS 2, with latency increasing to 47ms. It’s not clear if this is something with the game engine, but the gains are quite muted compared to other games.

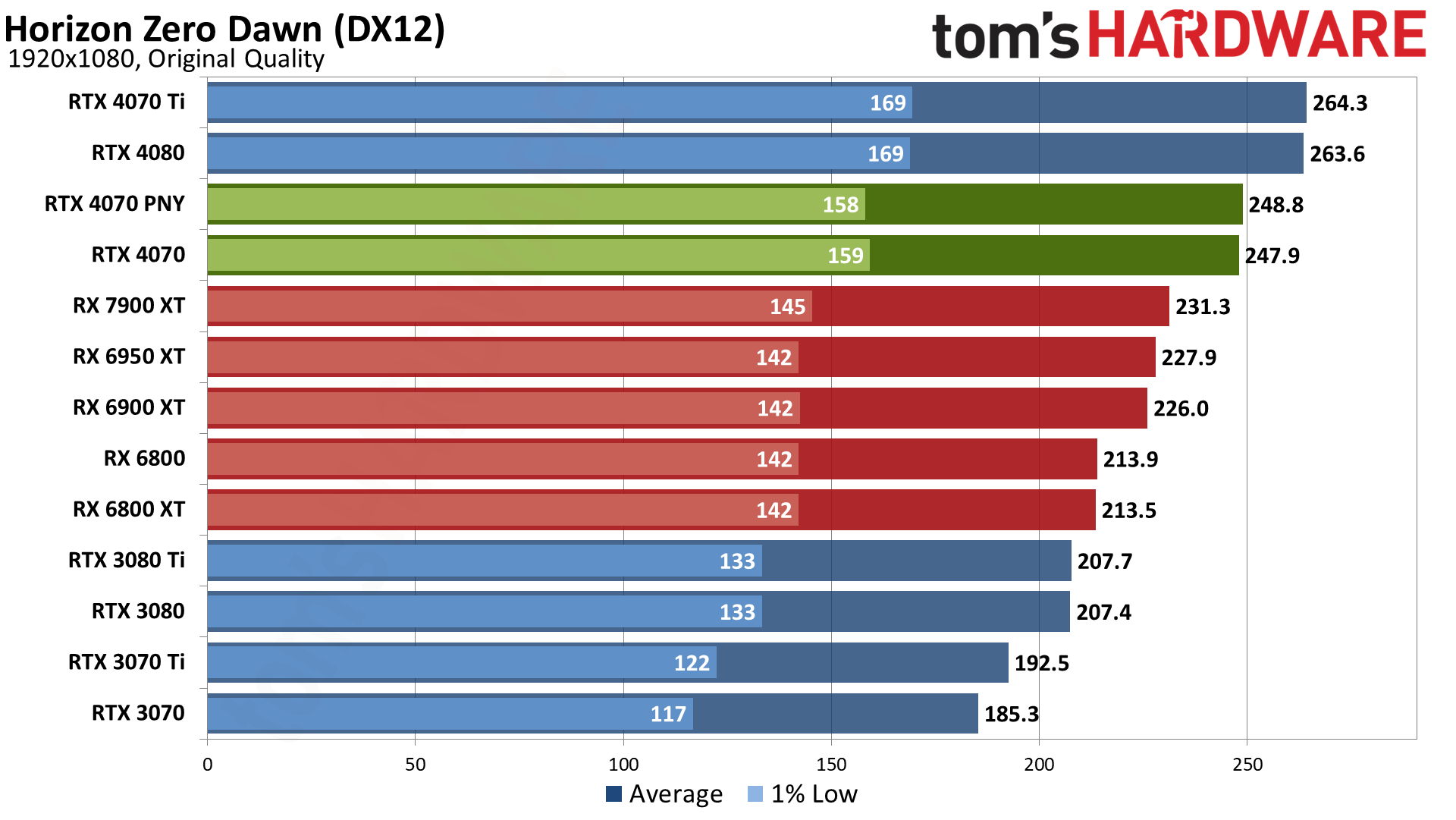

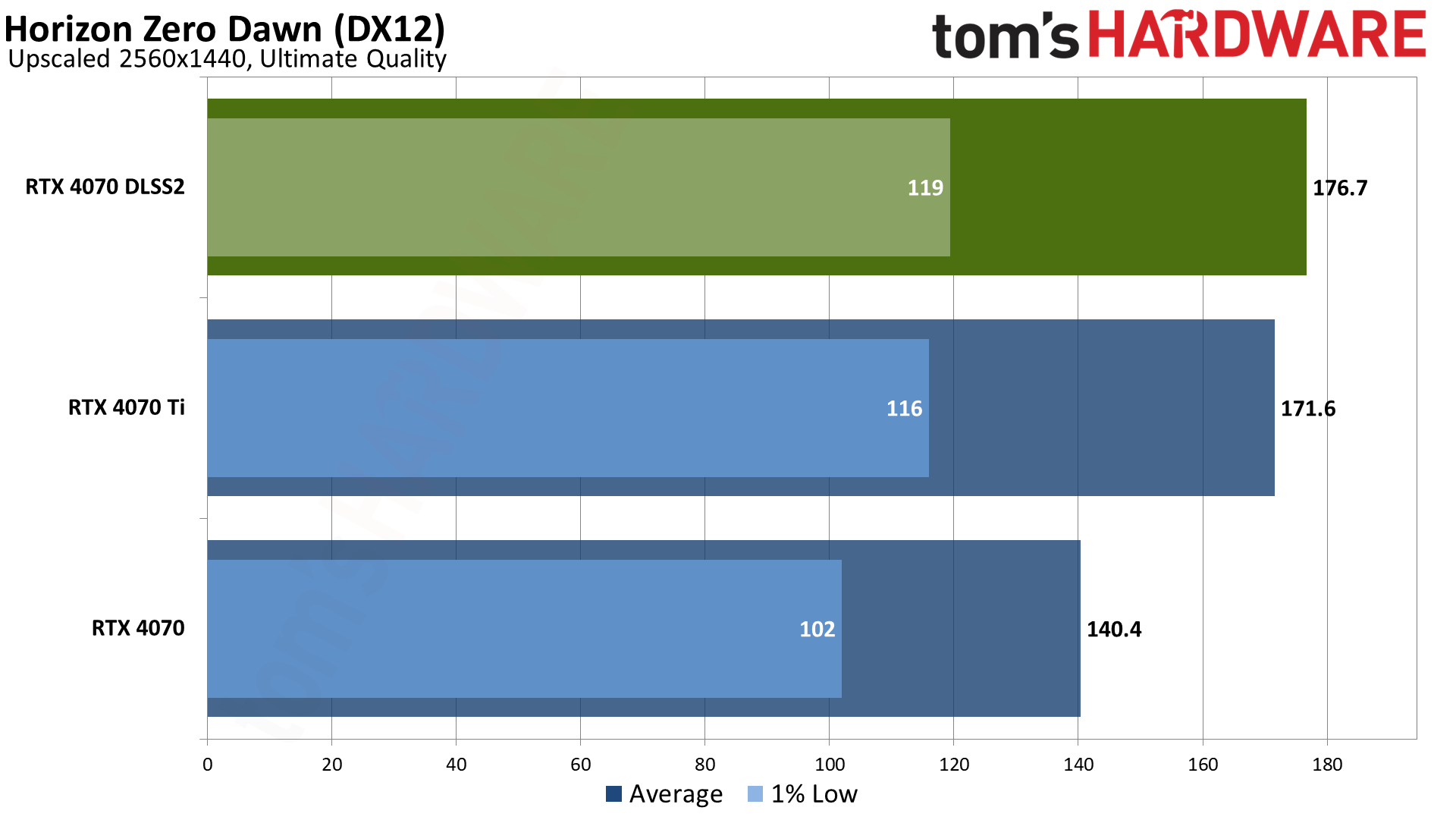

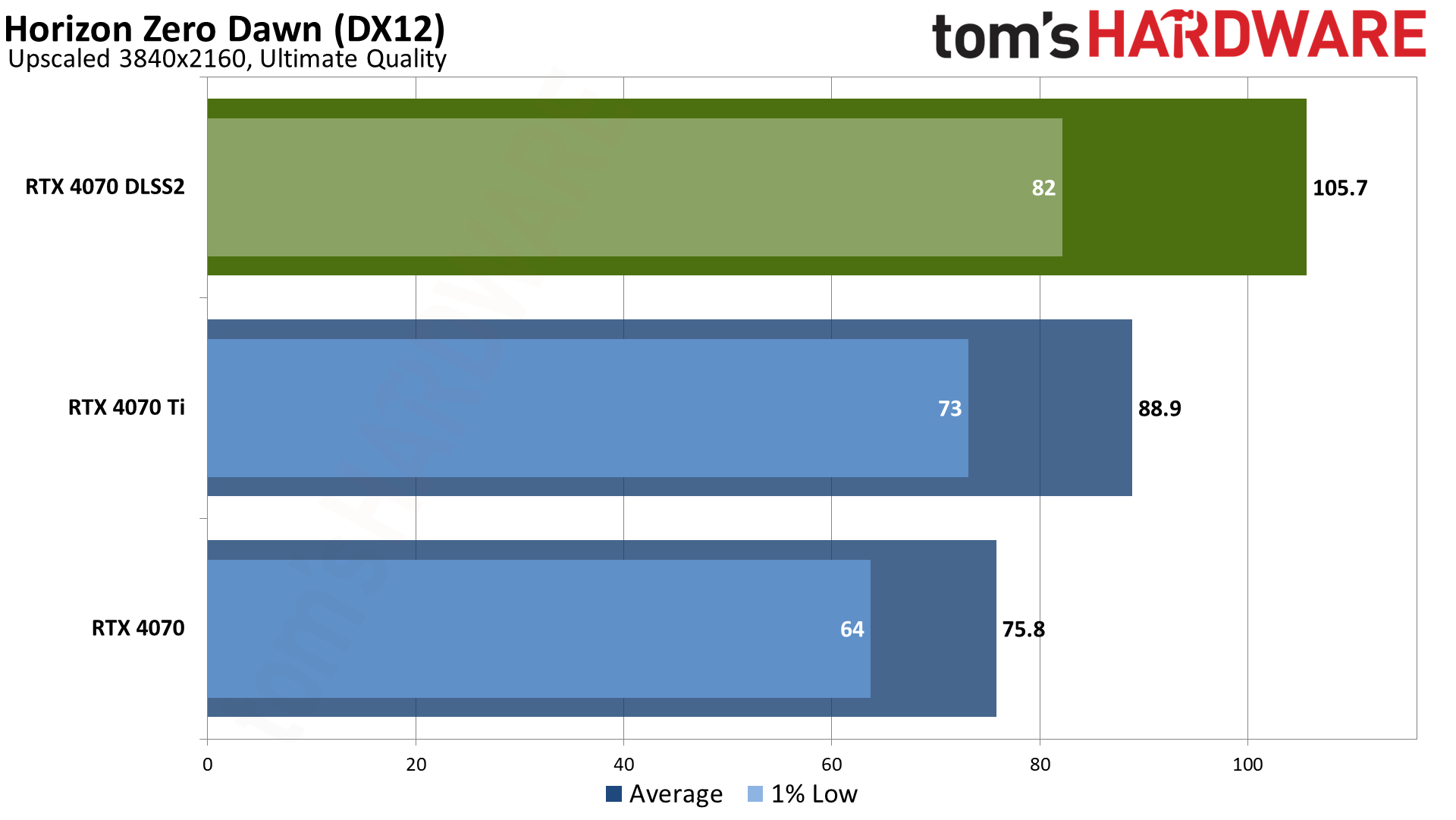

Horizon Zero Dawn is pretty old compared to some of the other games, and it only supports DLSS 2 upscaling. At 1440p, we may be hitting a bit of a CPU bottleneck, but DLSS still provided a 25% improvement to performance. Things were better at 4K, where DLSS delivered a more substantial 40% increase, which is a typical result for 4K quality upscaling in rasterization games.

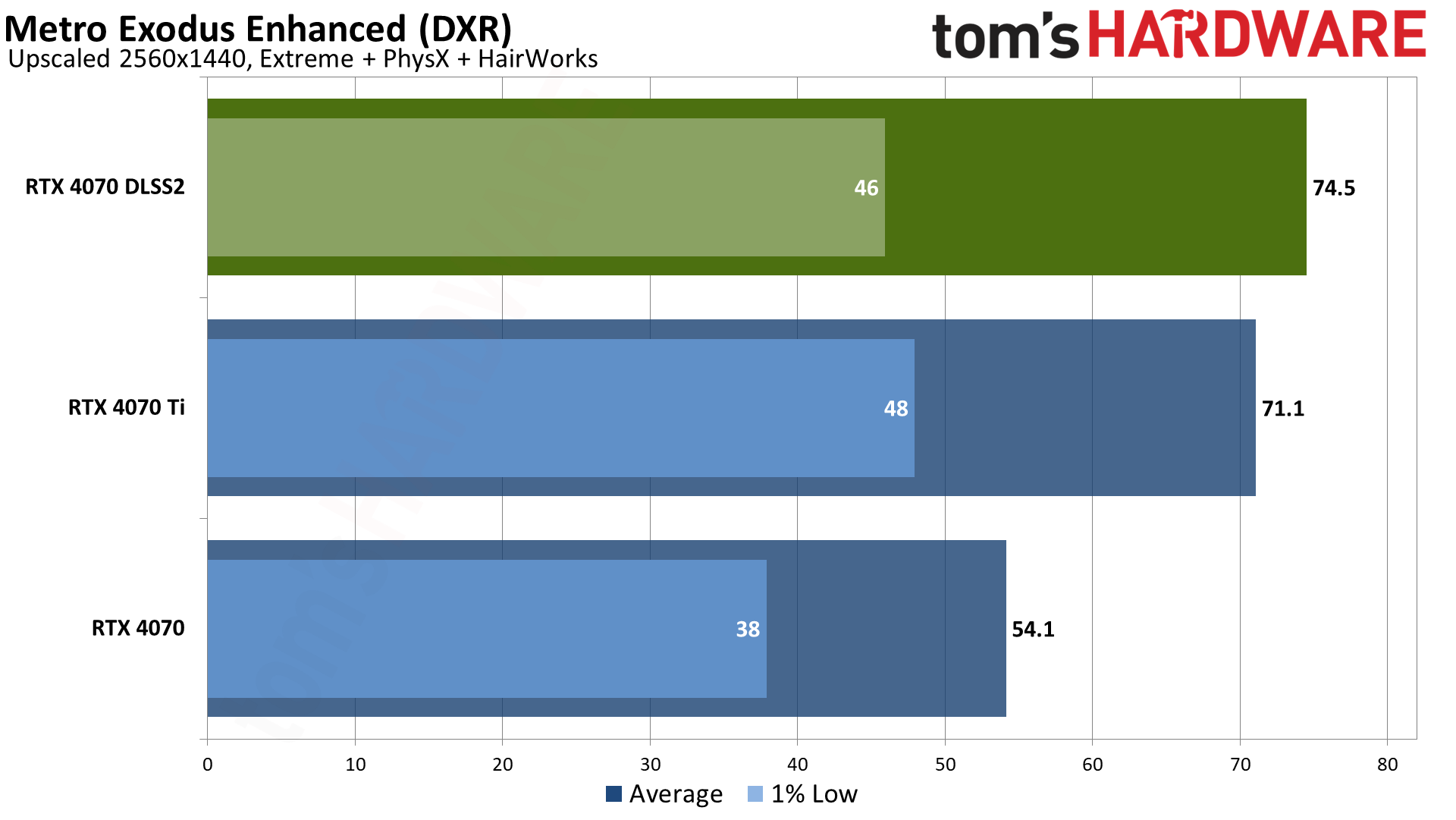

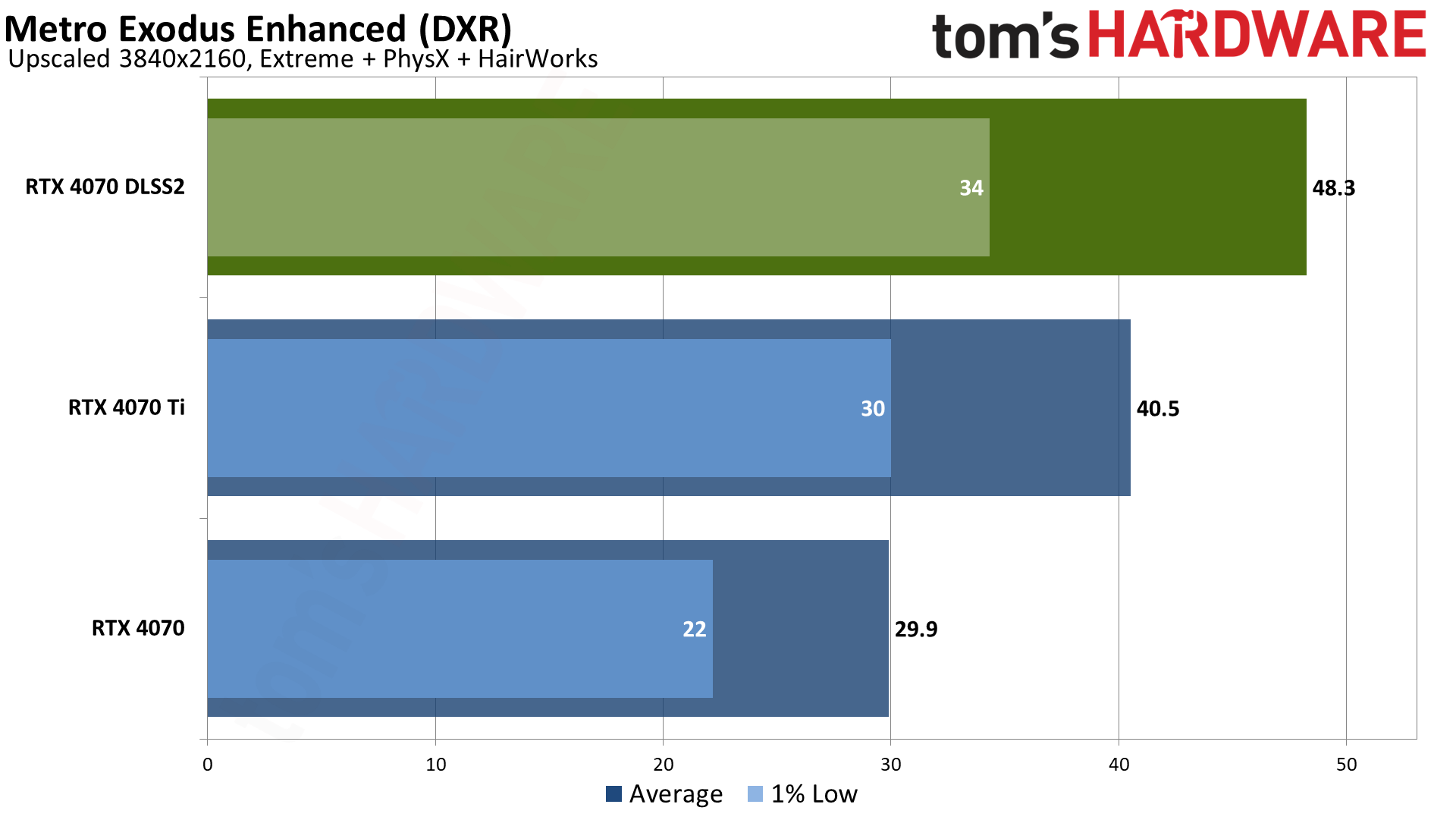

Metro Exodus was one of the first ray tracing games, and it also had DLSS 1.0 support way back in the day. It was later updated with DLSS 2 support, and we’re using the Enhanced version of the game that has increased ray tracing features. Still, it’s one of the less demanding DXR games in our test suite.

At 1440p, performance improved by 38% thanks to DLSS Quality mode upscaling. That’s enough to slightly exceed the native performance of an RTX 4070 Ti, though of course that card would also improve if you enable DLSS. As usual, the gains at 4K are higher, with a 62% improvement. That’s not enough to get the game to 60 fps, but if you’re willing to switch to Balanced or Performance upscaling, you can get there on the RTX 4070.

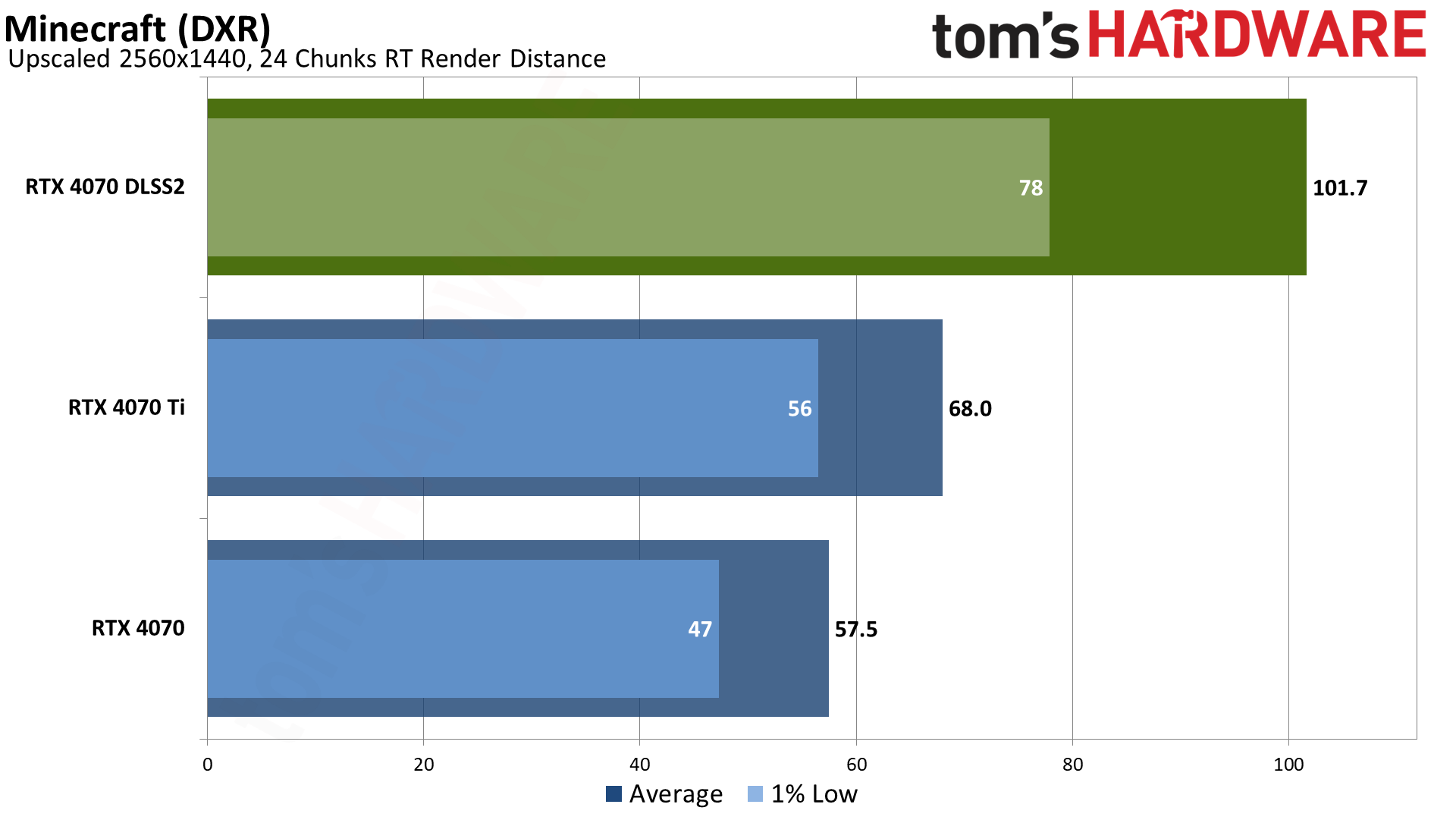

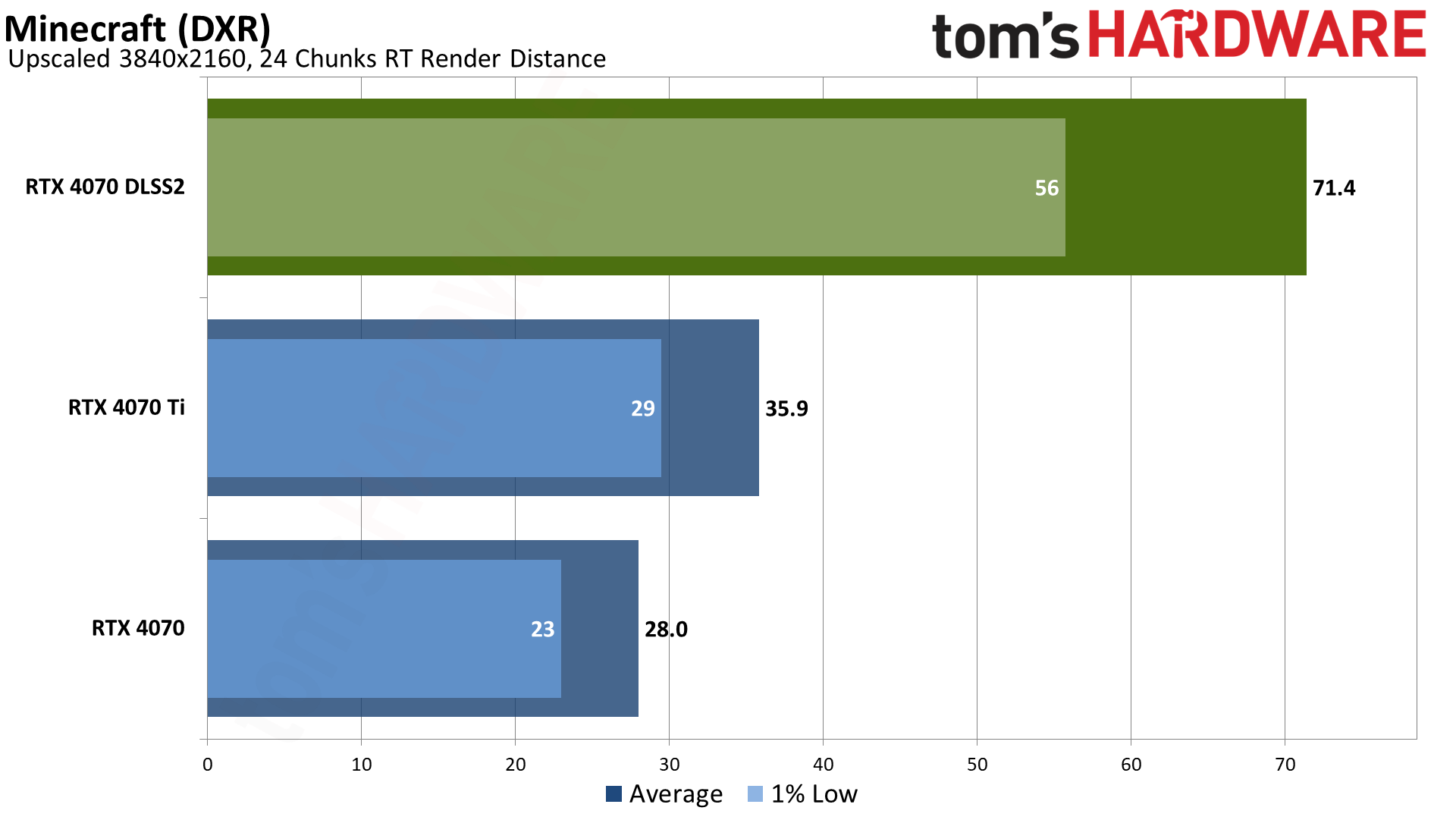

We need to note once again that there’s no way to specify the DLSS upscaling mode with Minecraft, so the game defaults to Quality upscaling at 1080p and below, Balanced upscaling for 1440p, and Performance upscaling for 4K and above. Combined with the full path traced rendering, that means we’ll get much larger improvements in performance from DLSS.

At 1440p, the RTX 4070 came up just short of 60 fps, but DLSS boosts performance by 77% to just over 100 fps. With 4X upscaling, Performance mode at 4K more than doubles the native framerate — it’s 155% faster. We’d love to have the option to choose the upscaling factor in Minecraft, just to keep things consistent with the way we’re testing other games, but there’s definitely a big performance benefit from the DLSS support for RTX owners.

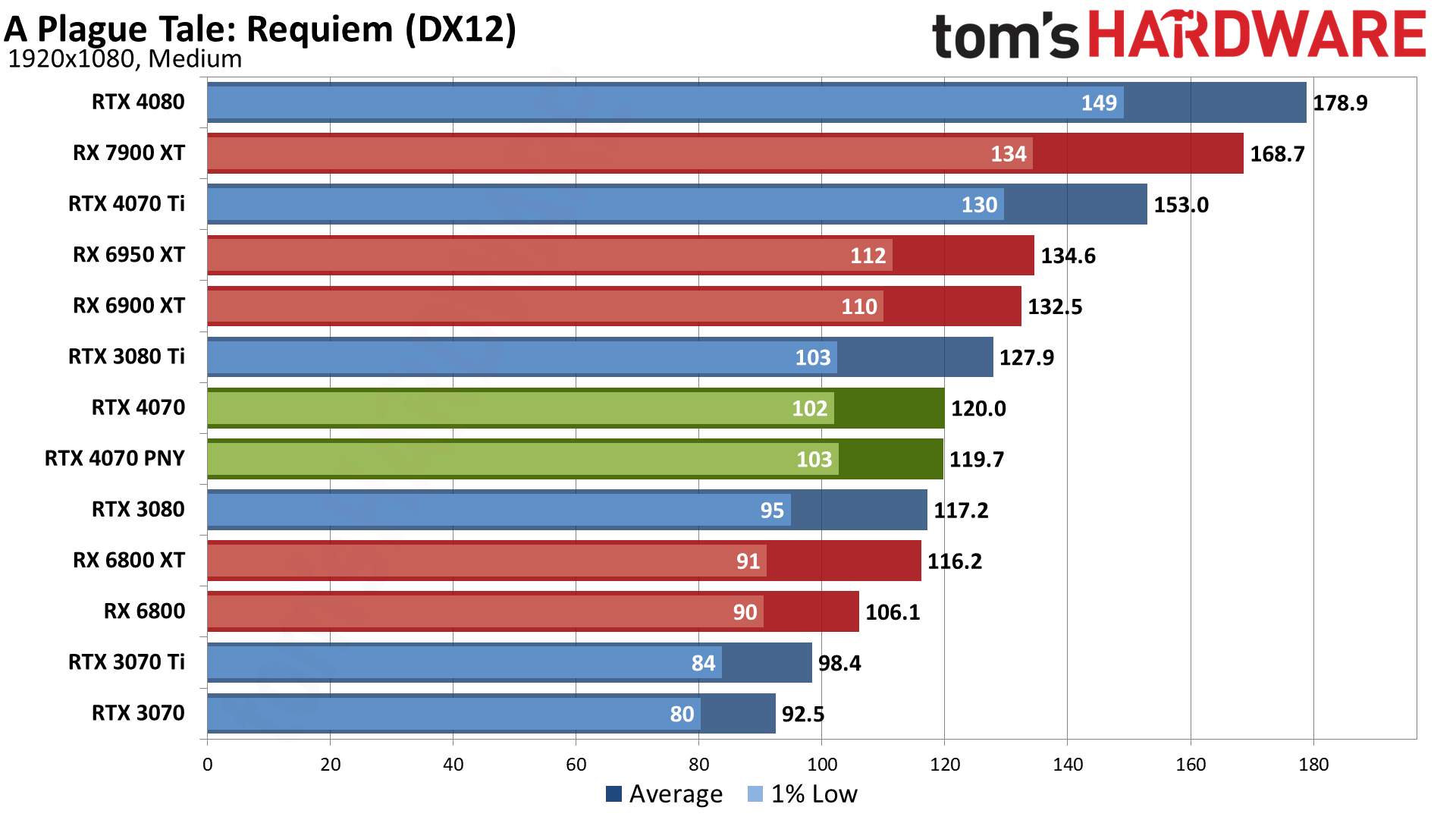

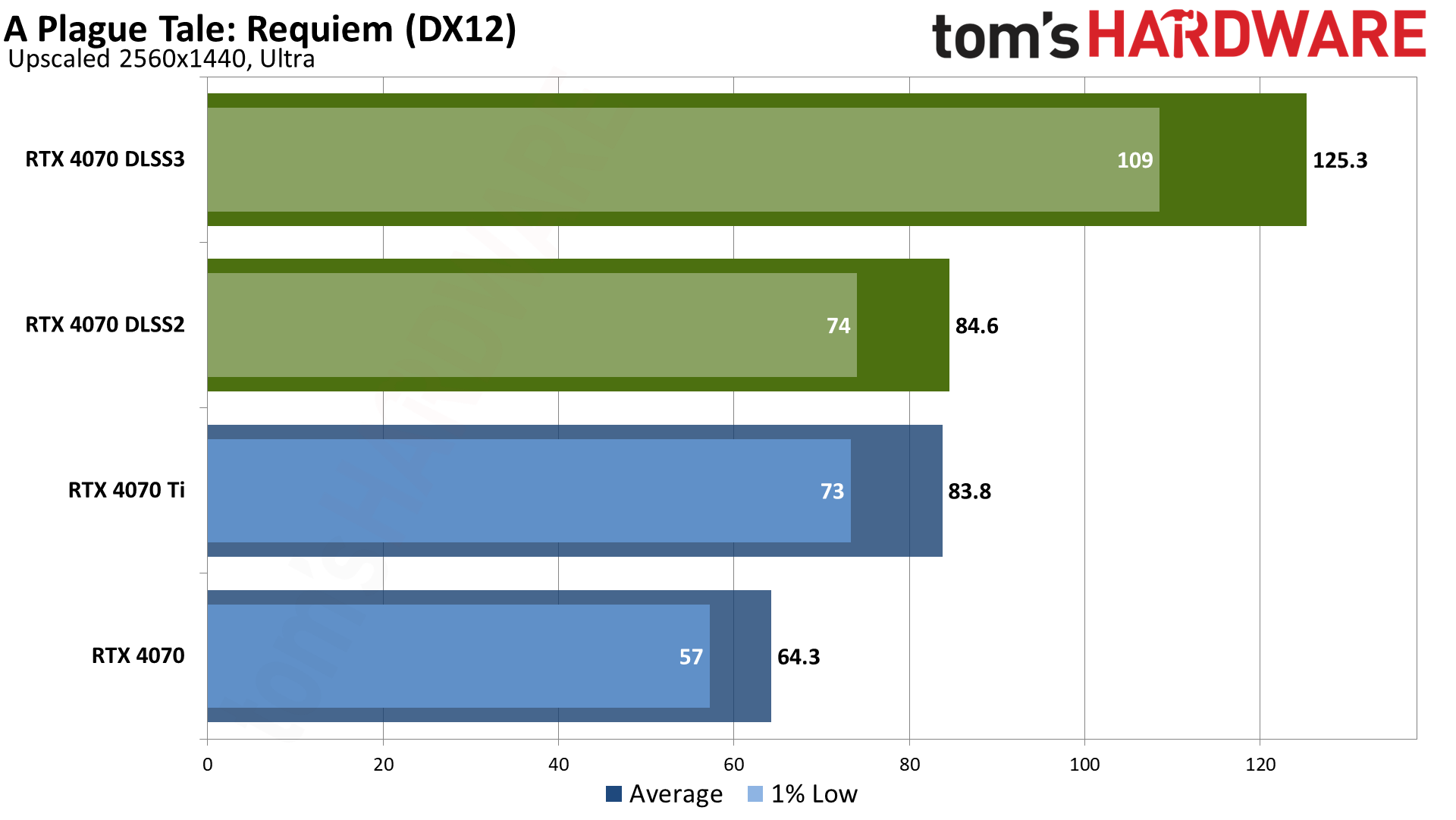

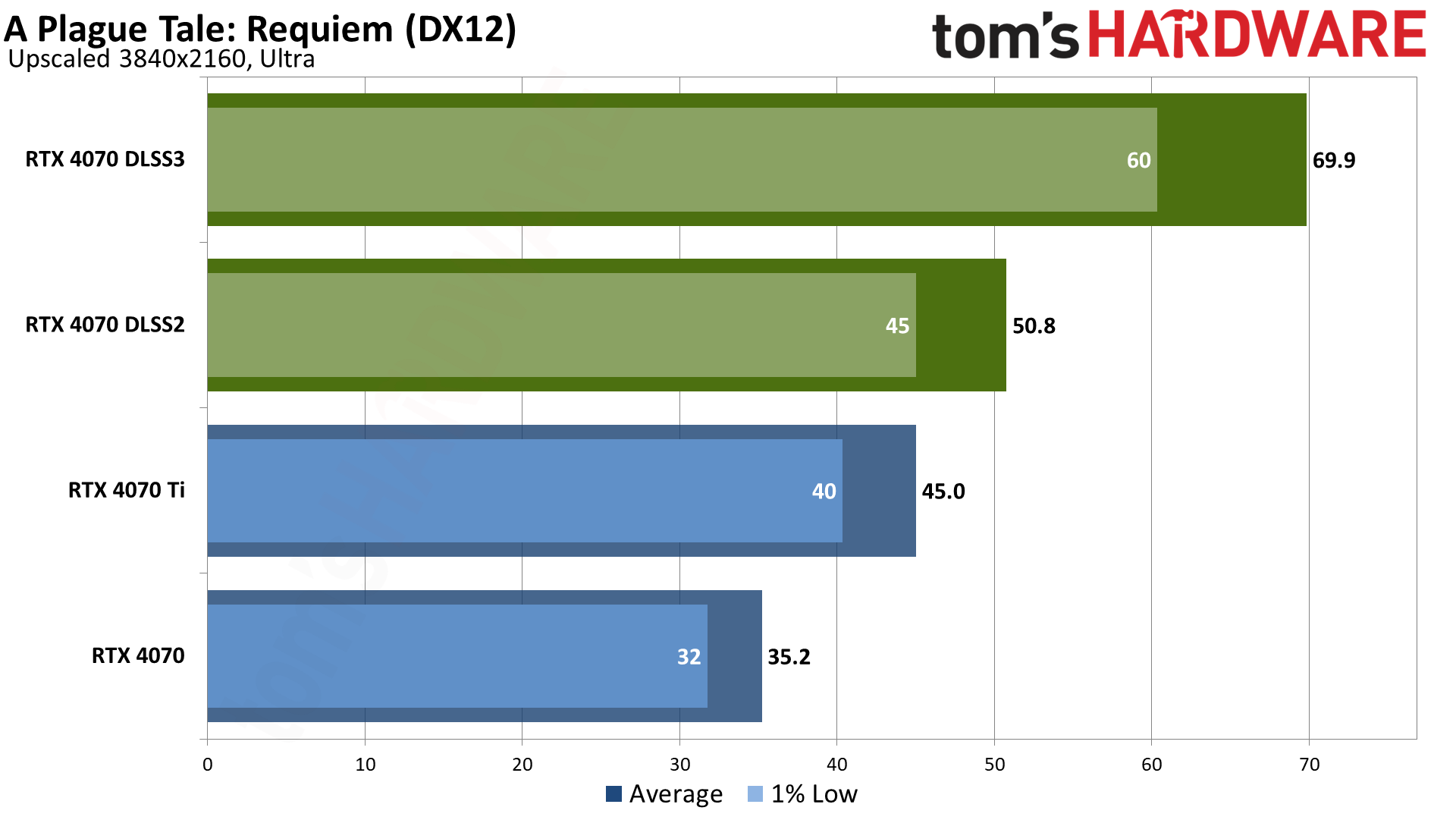

A Plague Tale: Requiem has DXR support for shadows, but that’s frankly the least interesting use of ray tracing in our opinion so we left it off. It does have DLSS 3 Frame Generation, however, and it provides some pretty sizeable gains here.

Performance at 1440p went from 64 fps to 85 fps with upscaling, a 32% improvement. Frame Generation provided an additional 48% increase, for a 95% net improvement. Latency meanwhile was 33ms native, 27ms with upscaling, and 42ms with Frame Generation.

Stepping up to 4K, the base level of performance was only 35 fps. Upscaling with DLSS 2 provided a 44% boost, and DLSS 3 gave another 38% — it seems like 4K Frame Generation tends to require quite a bit of computational power, so we don’t get anywhere near the theoretical doubling of performance. On the latency side, native ran at 53ms, DLSS 2 dropped that to 40ms, and DLSS 3 pushed it back up to 69ms.

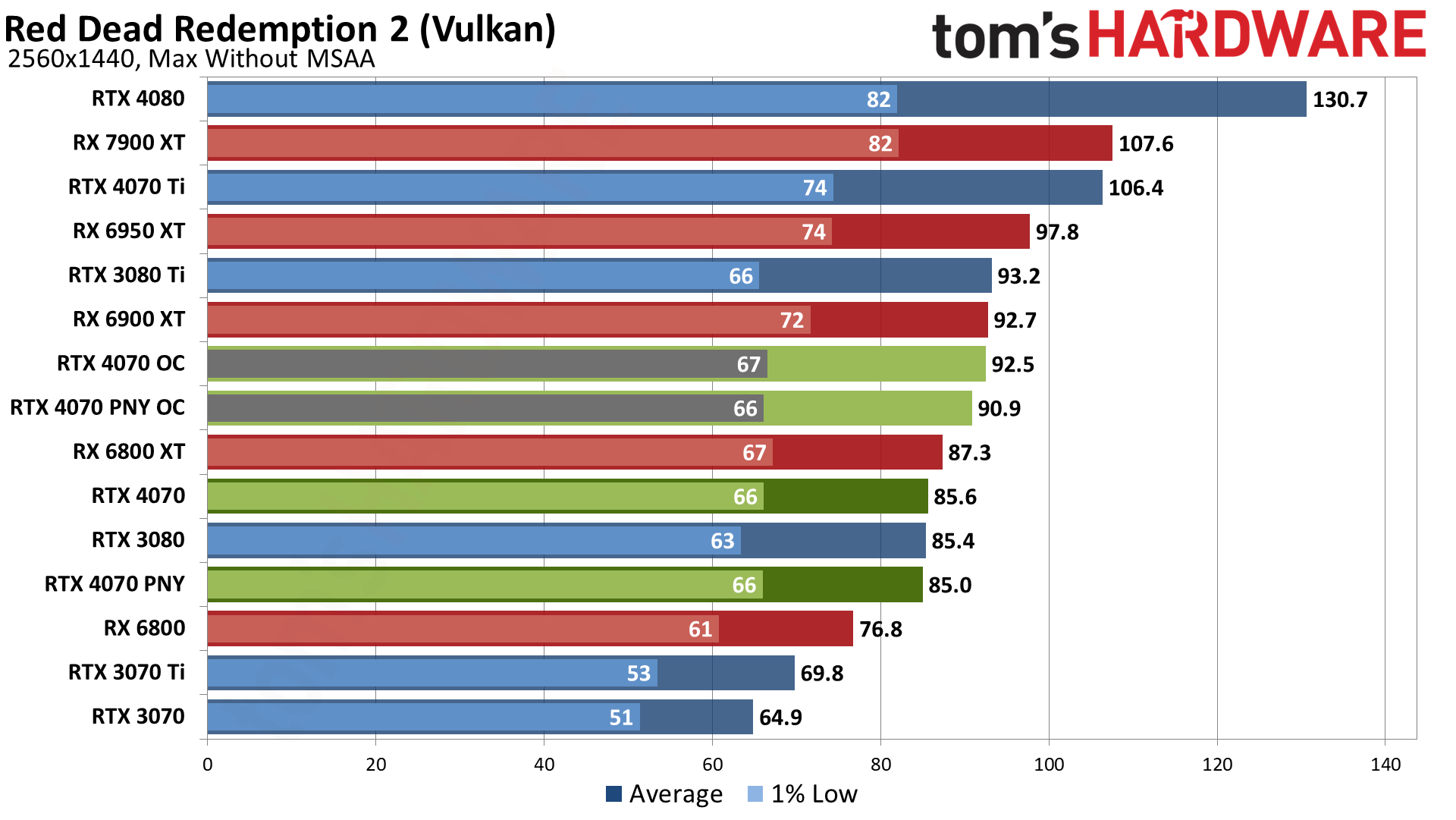

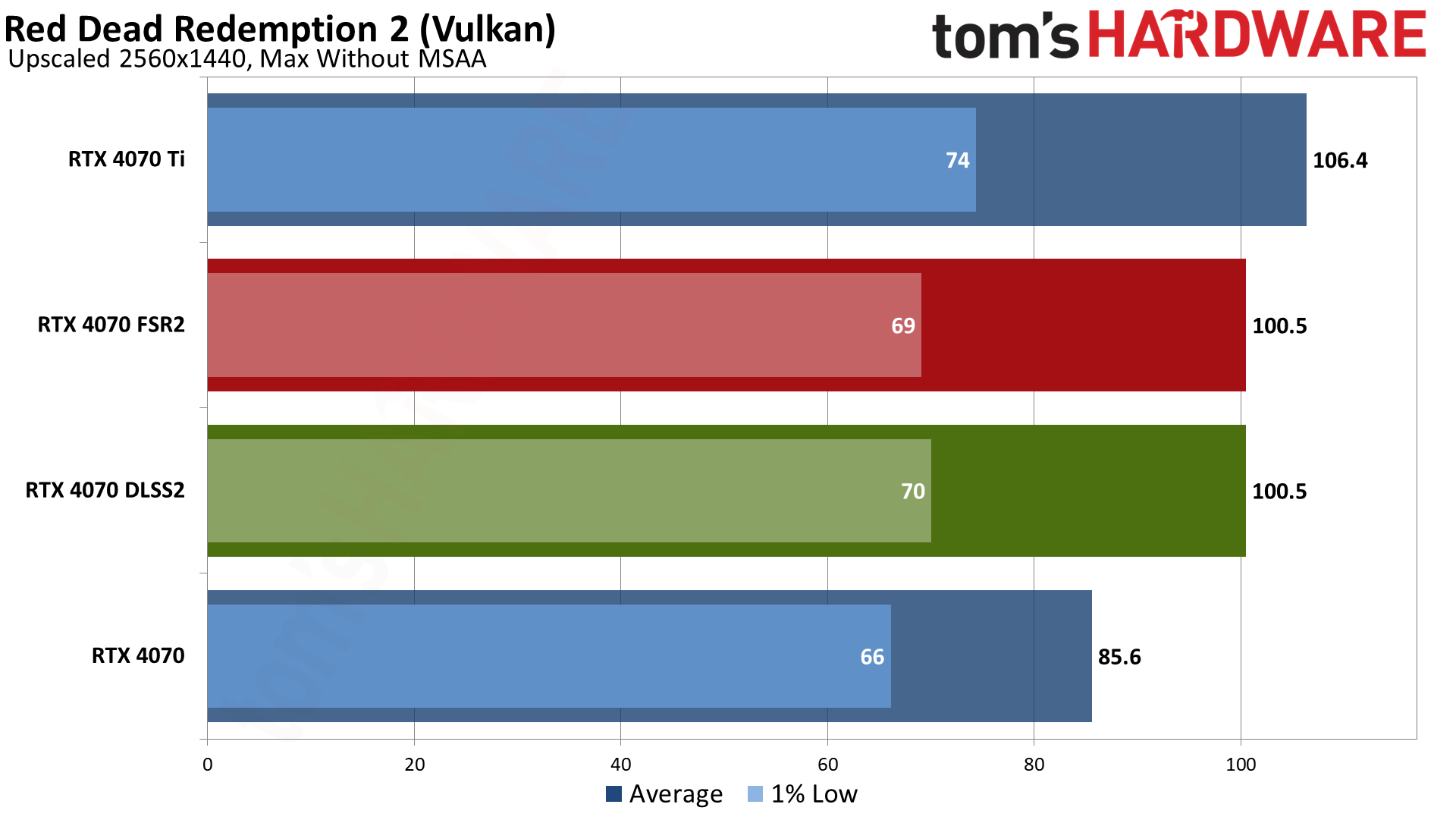

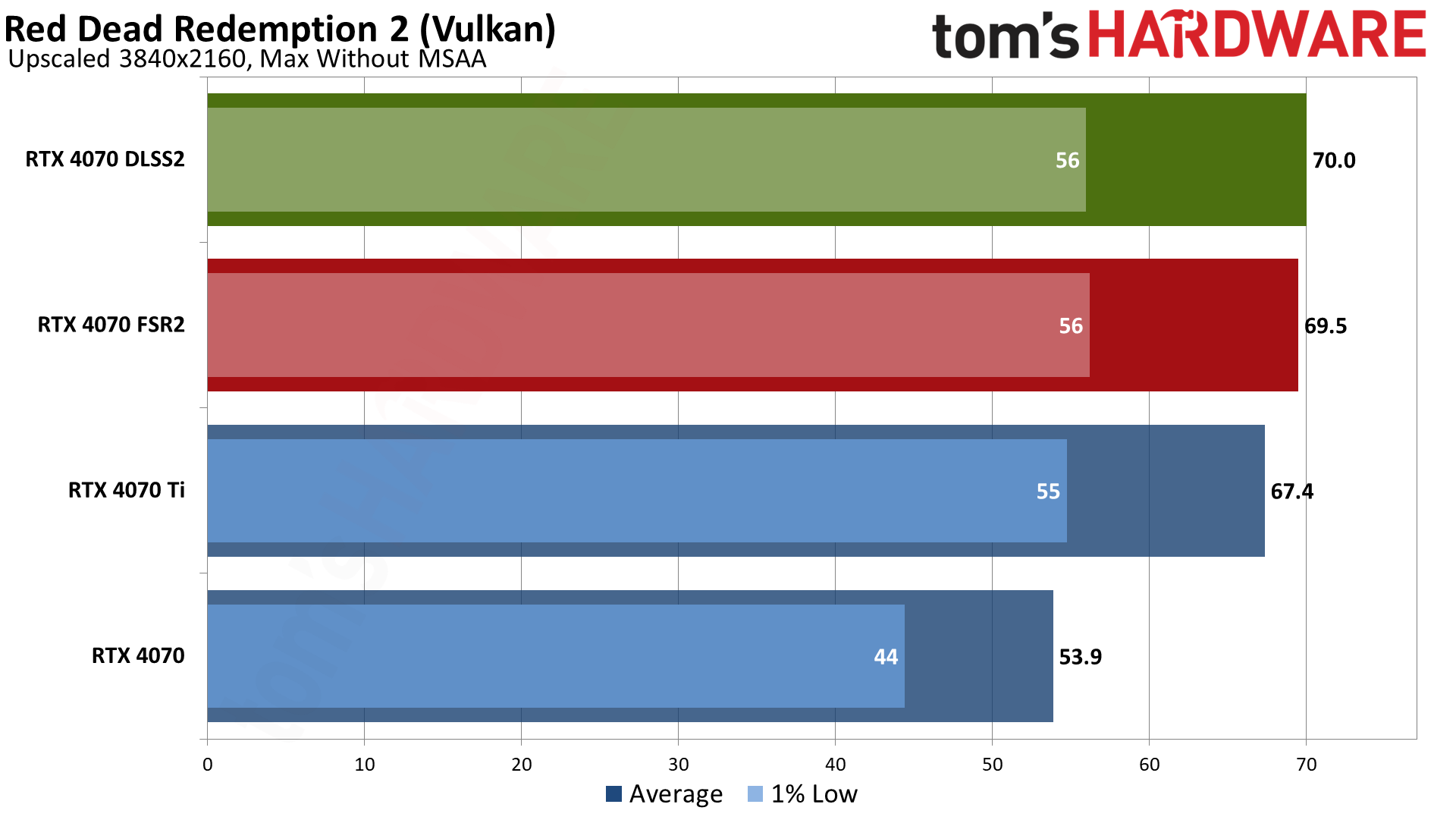

Red Dead Redemption 2 is another game where the gains from upscaling are relatively muted. At 1440p, DLSS 2 and FSR 2 gave just 17% more performance, though latency improved slightly from 41ms to 35ms. We did get larger gains at 4K, to the tune of 30% higher performance, but that’s still on the lower end of the spectrum. Latency at 4K was 65ms at native, and 50–51ms with DLSS and FSR upscaling.

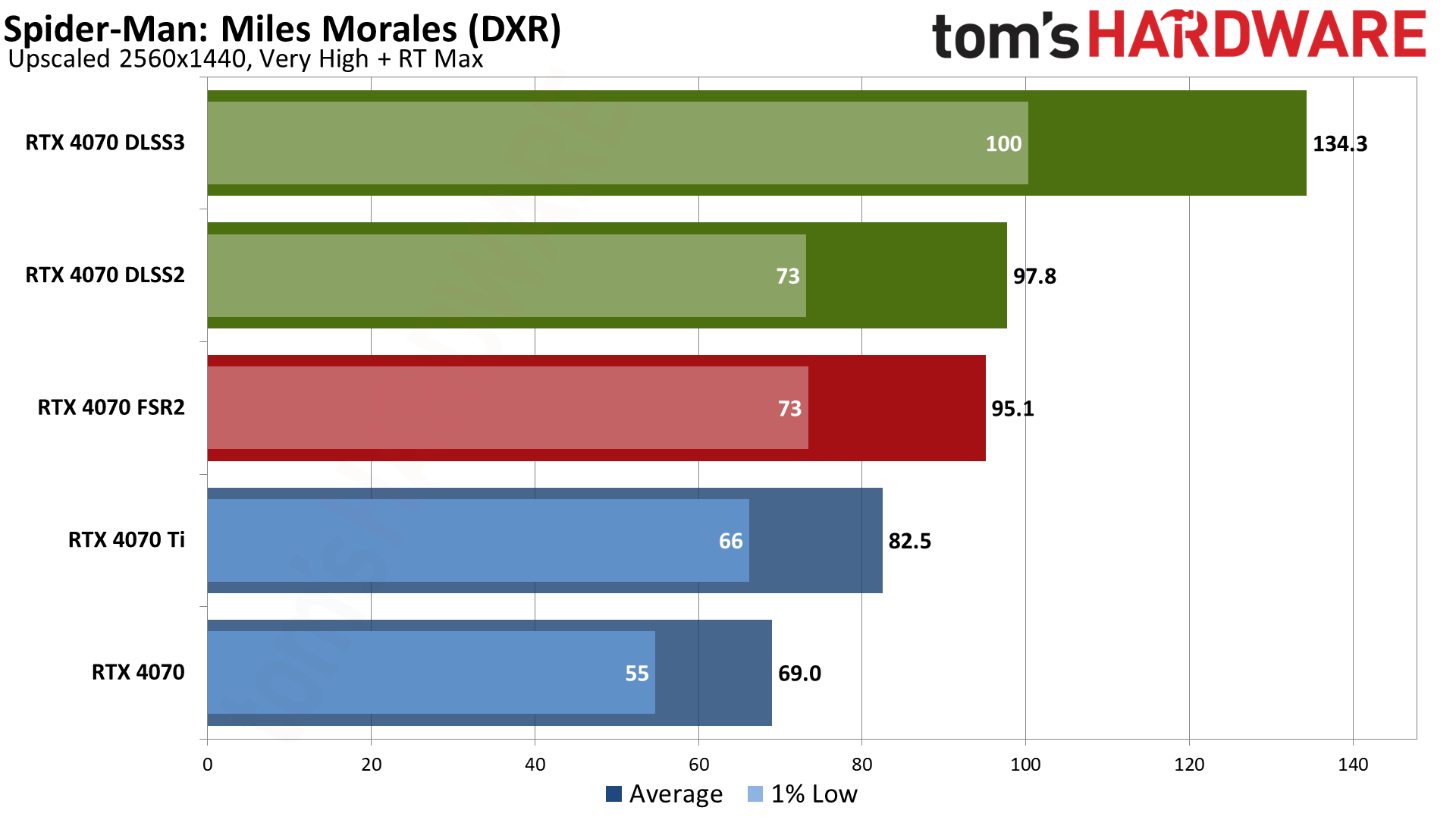

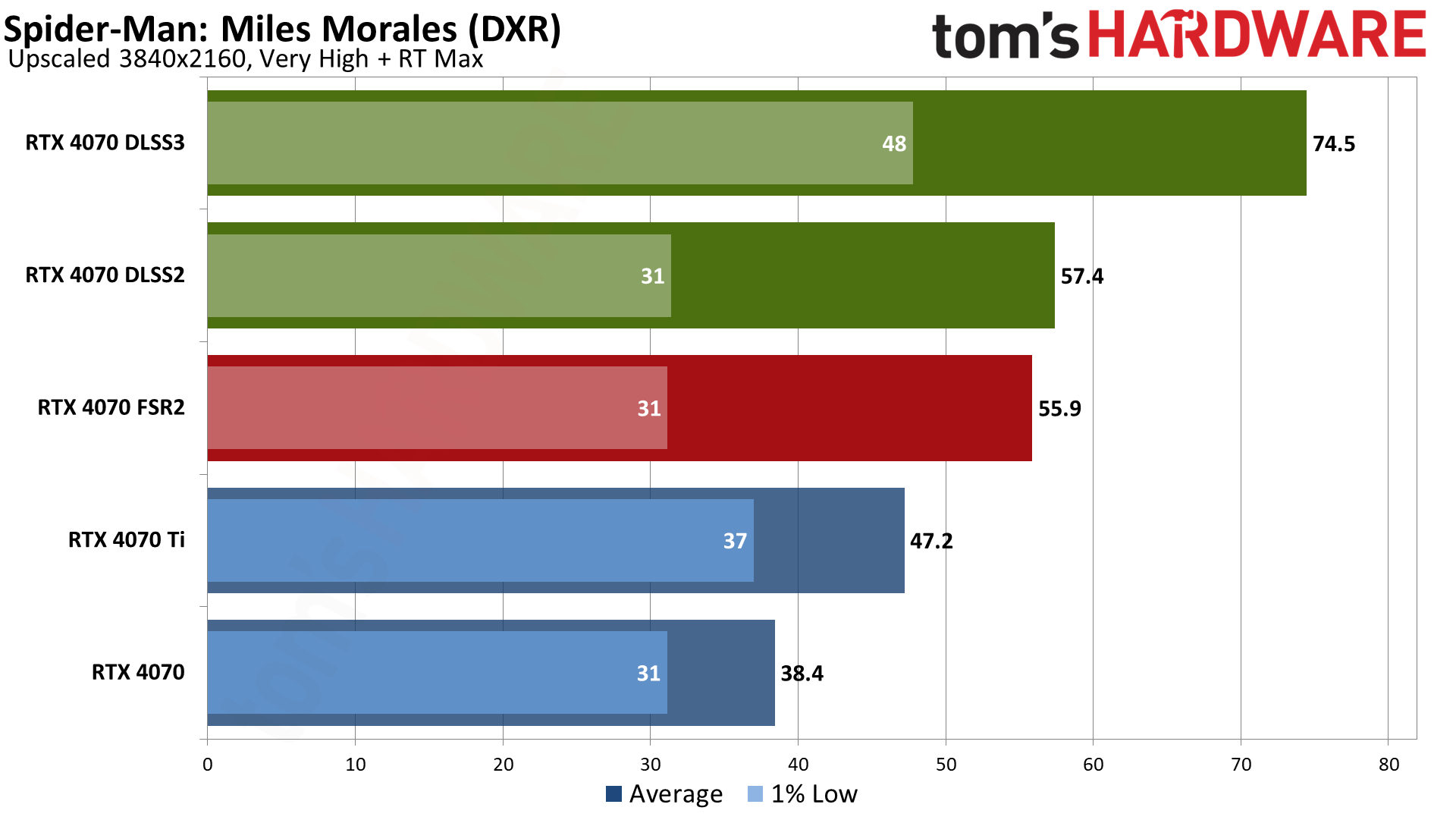

Spider-Man: Miles Morales is one of the currently rare games where you can choose between DLSS 2, DLSS 3, FSR 2, XeSS, and even IGTI (Insomniac’s own upscaling algorithm). Again, we’re only testing FSR and DLSS, though we’ve previously tested the other modes.

1440p with maxed out DXR settings was already very playable on the RTX 4070 at 59 fps, and DLSS and FSR upscaling improve performance by around 40%. Frame Generation yields an additional 37% boost, or 95% faster than native. Latency was 46ms at native compared to 30ms with DLSS or FSR, and Frame Generation increased that to 47ms — so basically the same latency as native but with roughly double the framerate.

The 4K results tell a similar story, just with lower numbers. DLSS improved performance by 50% while FSR gave a slightly smaller 45% increase. Frame Generation tacked on another 30%, or 94% higher than native. Latency again wasn’t improved by DLSS 3, but neither was it substantially worse. Native 4K had 73ms, DLSS 2 and FSR 2 got 49ms, and DLSS 3 latency was 81ms.

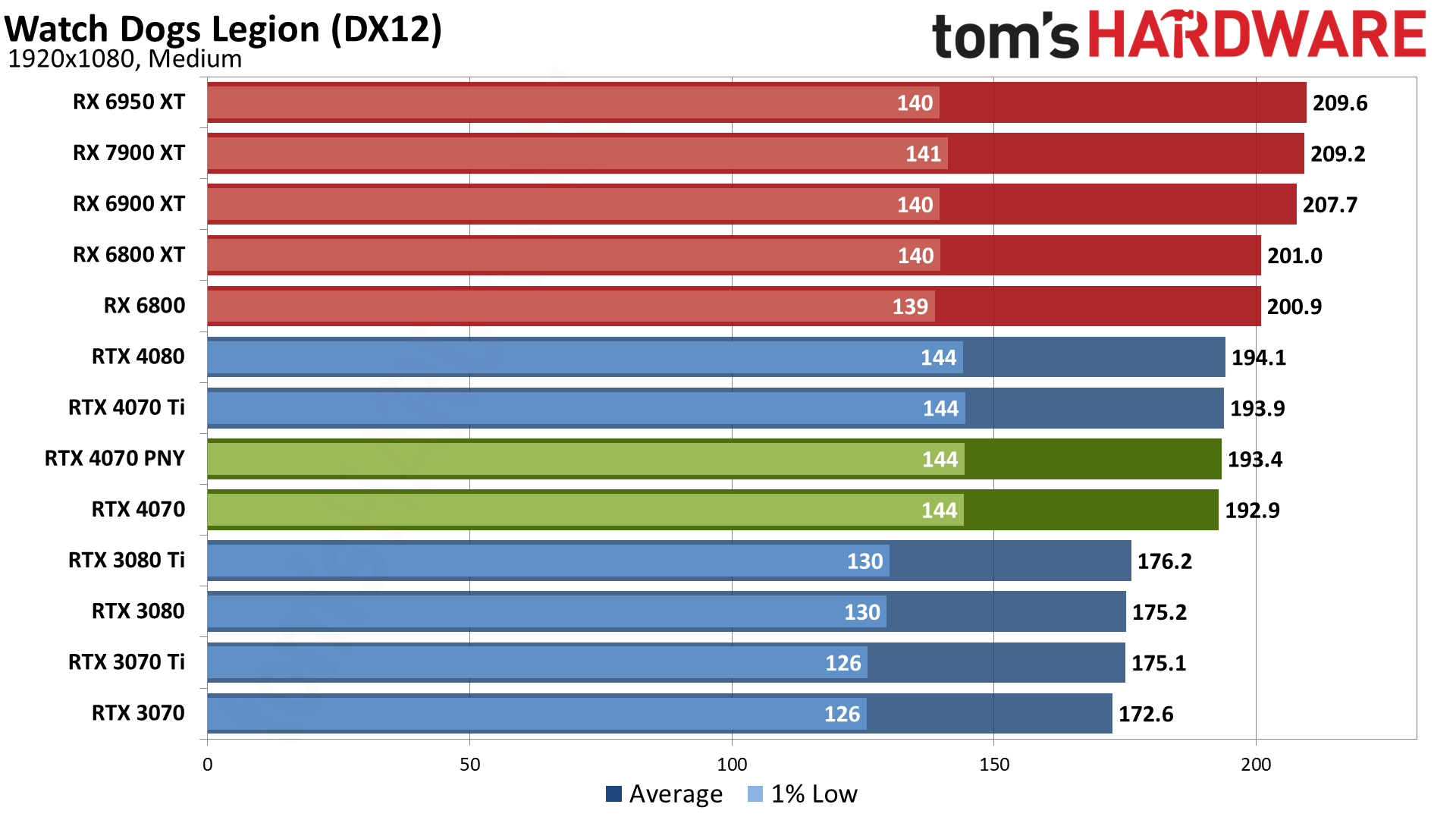

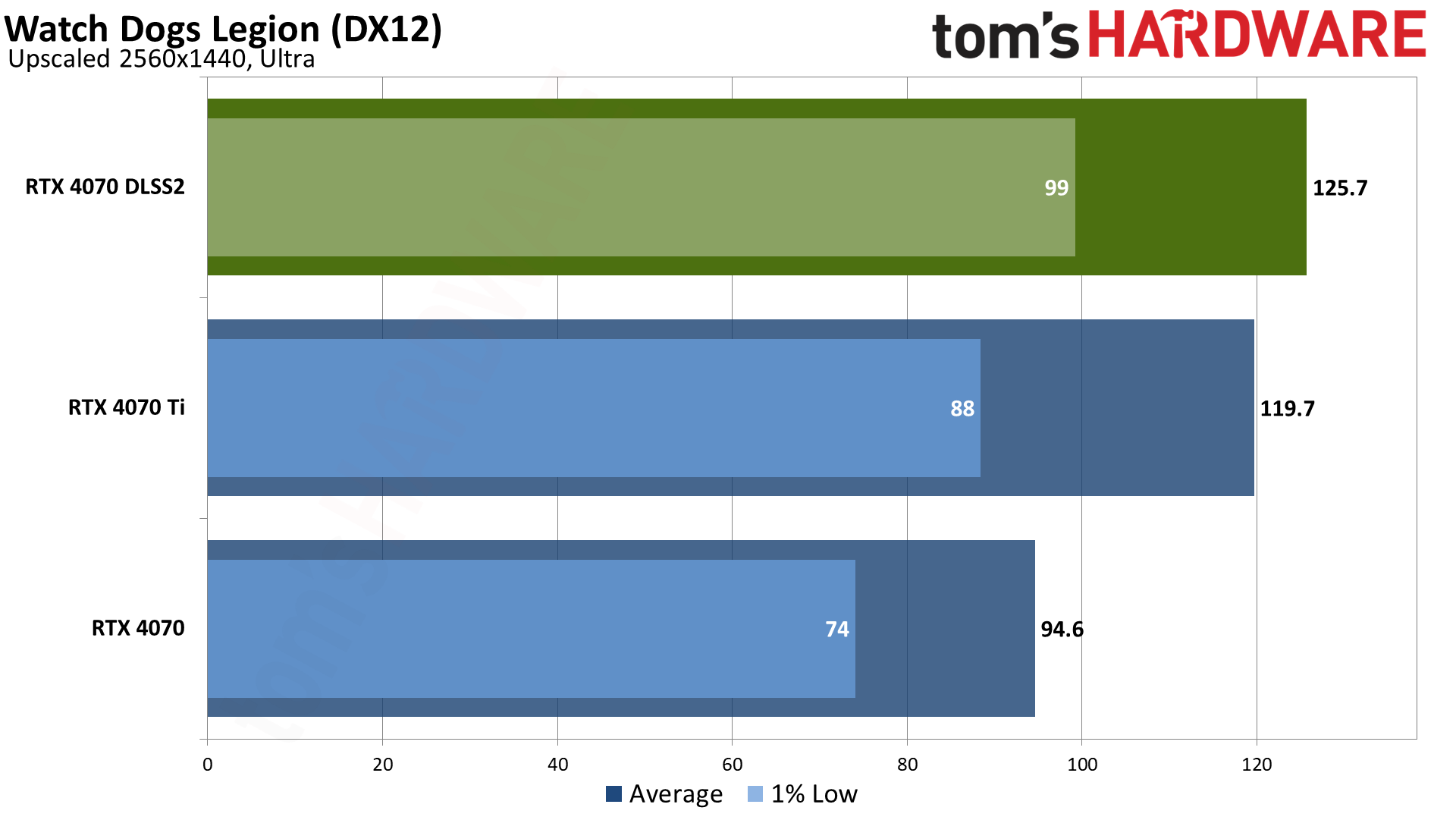

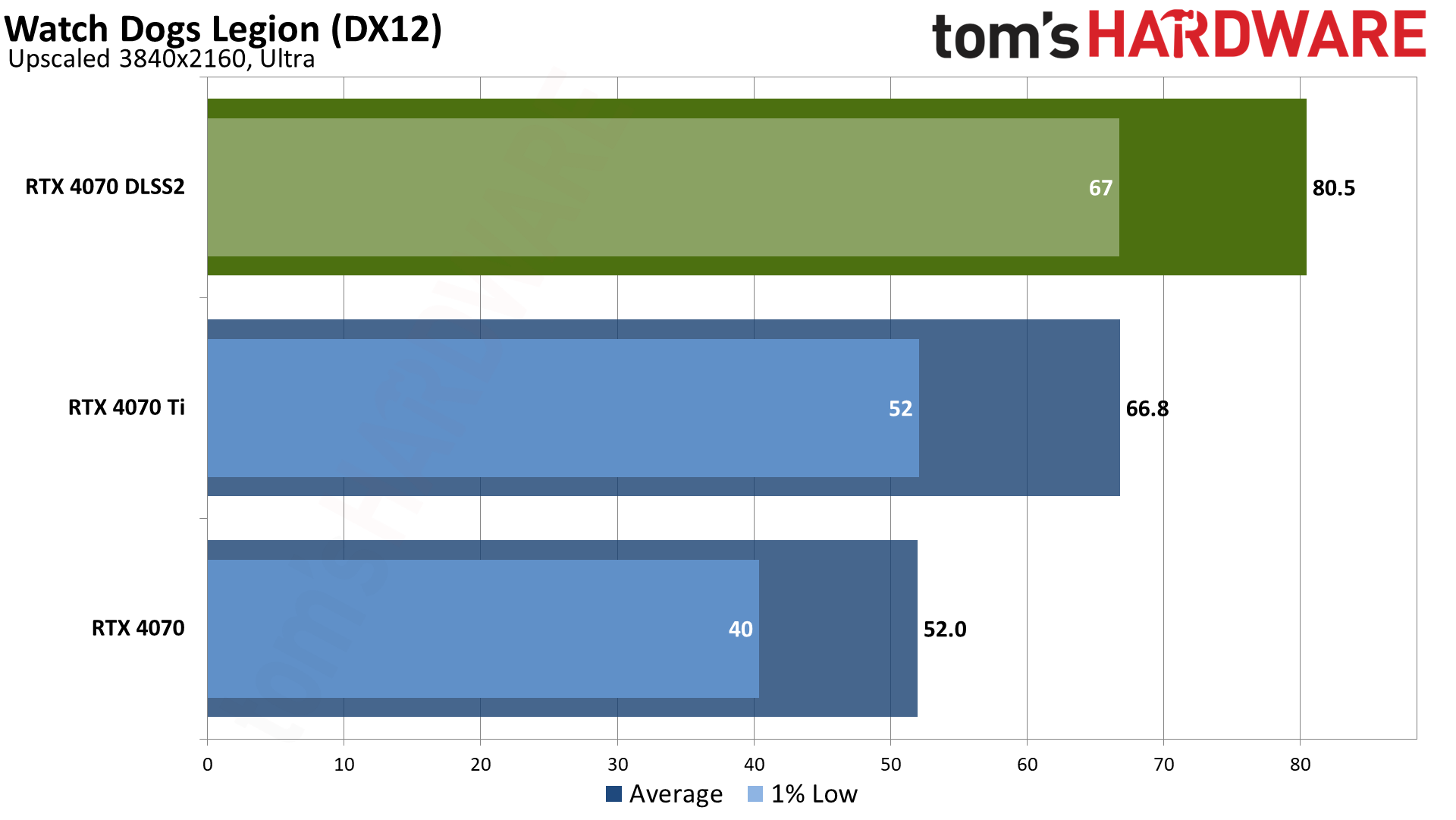

The last of our upscaling tests comes from Watch Dogs Legion, which has been around for a few years and has DXR reflections support, which we didn’t use. At 1440p, performance increased 33% thanks to DLSS Quality upscaling. As usual, 4K showed even larger gains of 55%, going from just shy of 60 fps to a very smooth 81 fps.

RTX 4070 Upscaling, the Bottom Line

In summary, upscaling technologies continue to improve, and they’re becoming even more critical for mainstream GPUs if you want to hit 60 fps or more, particularly in games that use multiple ray tracing effects. If you’re still thinking every game should be played at native resolution without any form of upscaling, you’ll either want to stick with 1440p or lower resolution displays, or you’ll need to shell out for the top-shelf GPUs like the RTX 4090.

We haven’t really changed our opinion on the ranking of the various upscaling modes since our last deep dive: DLSS 2 provides slightly higher quality upscaling, while FSR 2 works on basically everything. Intel’s XeSS meanwhile has two modes, one for Arc GPUs with XMX cores, and a separate mode for non-Arc GPUs that uses DP4a (basically 8-bit integer dot product) calculations. DP4a mode ends up delivering a lower quality result in our experience, and AMD’s FSR 2 generally delivers better performance and quality.

DLSS 3 Frame Generation continues to be a bit controversial, especially in terms of the marketing. If you just look at the raw performance and think it’s the same as what you get from upscaling solutions, it’s not. The number of frames delivered to your display increases, but there’s no additional user input for the generated frames, and the algorithm adds latency, especially when compared with DLSS 2 and Reflex. We do appreciate having the choice, however, and we also like the fact that DLSS 3 requires games to support Reflex. Of course that’s only a benefit if you’re using an Nvidia GPU, but with Nvidia holding roughly 80% of the dedicated GPU market, that covers the majority of buyers.

It's good that AMD has an alternative to DLSS, but unfortunately FSR 2 mostly ends up being used in games that also support DLSS, and for games that only support FSR 2, it generally provides equal benefit to all GPUs. AMD wins some good will for creating solutions that aren’t tied to proprietary hardware, but Nvidia still ends up as the clear GPU market leader.

- MORE: Best Graphics Cards

- MORE: GPU Benchmarks and Hierarchy

- MORE: All Graphics Content

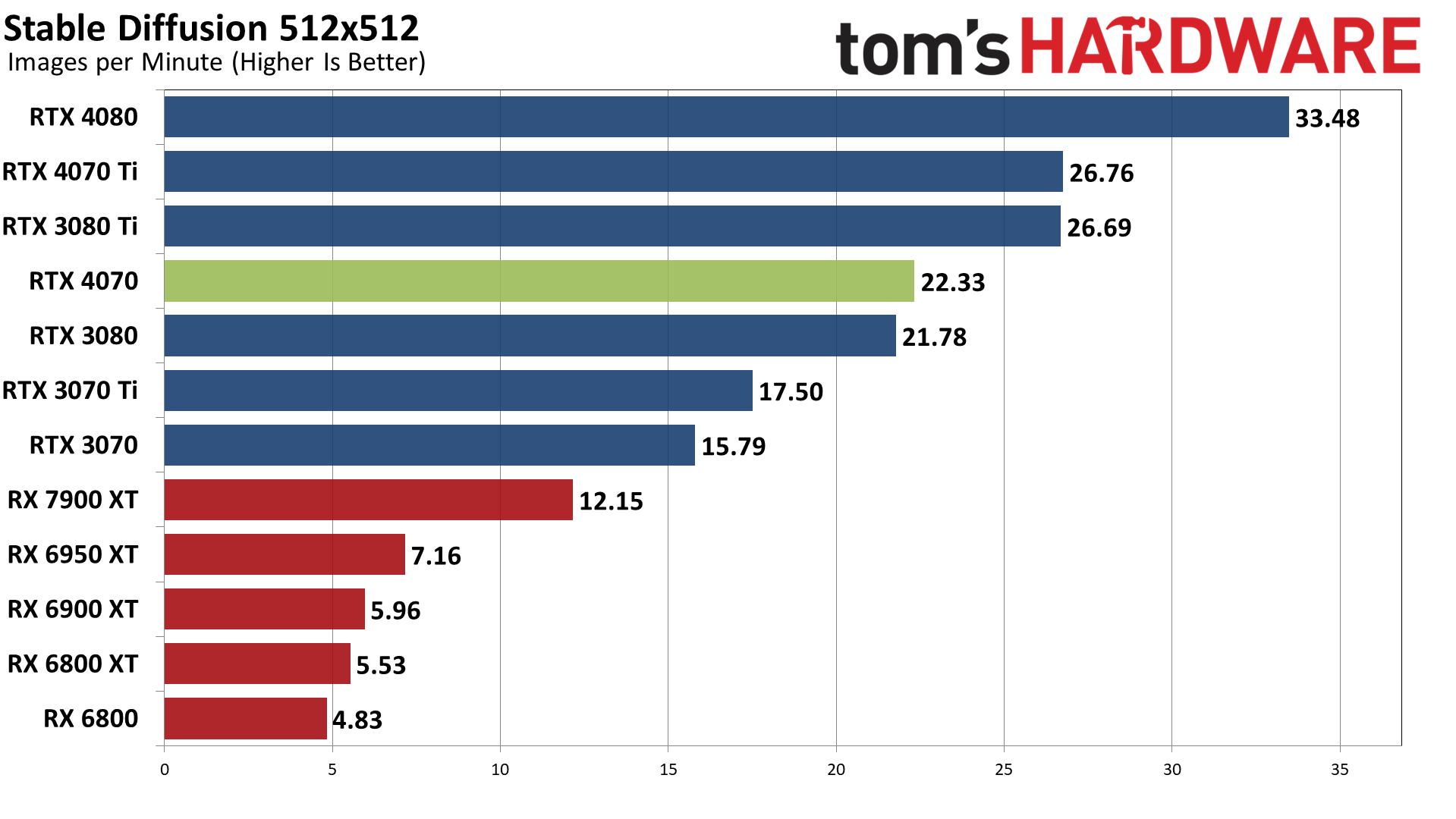

GPUs are also used with professional applications, AI training and inferencing, and more. Along with our usual proviz tests, we've added Stable Diffusion benchmarks on the various GPUs. AI is a fast-moving sector, and it seems like 95% or more of the publicly available projects are designed for Nvidia GPUs. Those Tensor cores aren't just for DLSS, in other words. Let's start with our AI testing and then hit the professional apps.

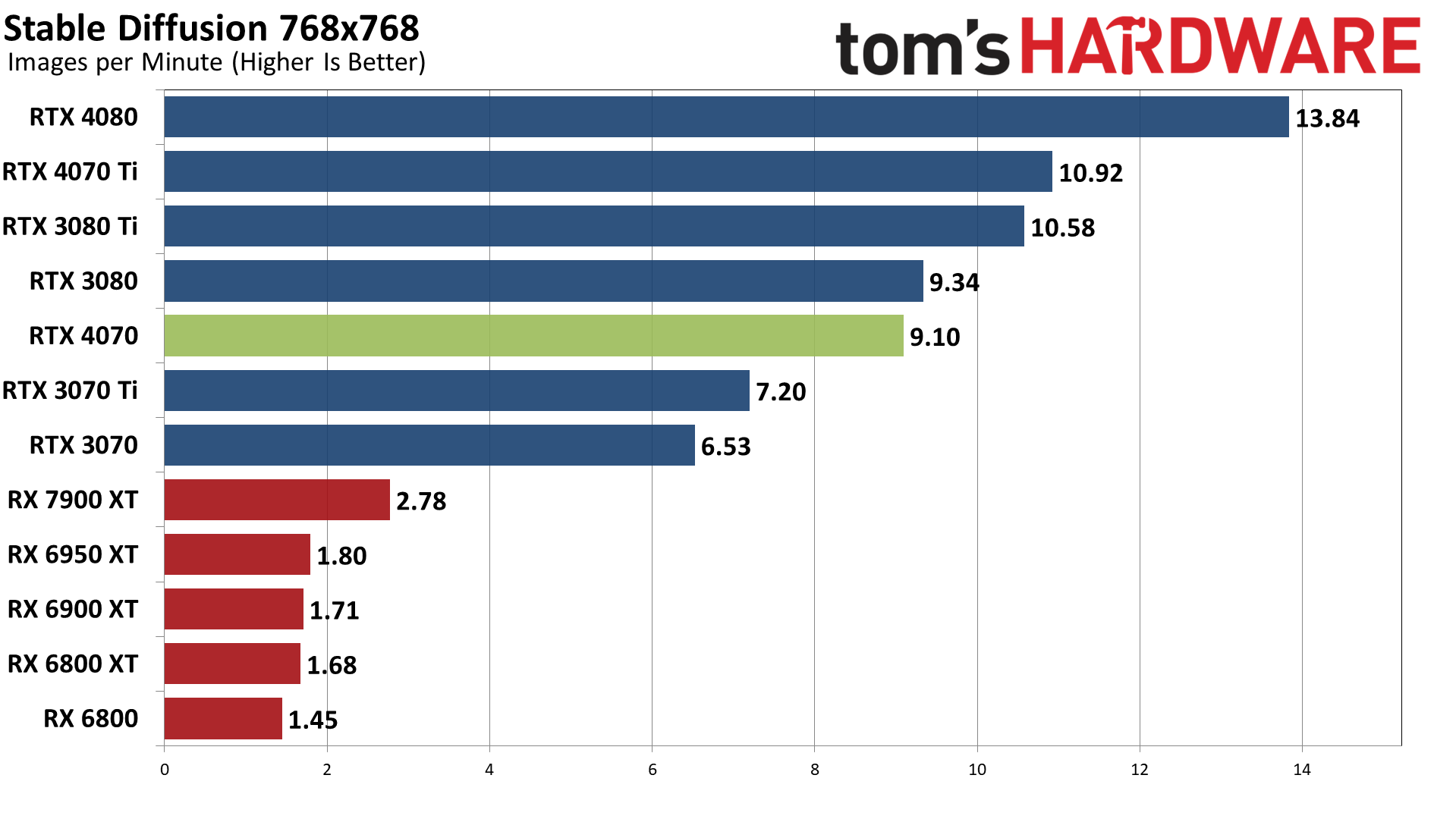

We've tested Stable Diffusion using two different targets: 512x512 images (the original default) and 768x768 images (HuggingFace's 2.x model). For Nvidia GPUs, mostly we find that the higher resolution target scales the time to render proportionally with the number of pixels: a 768x768 image has 2.25X as many pixels as a 512x512 image and so it should take about 2.25X longer to generate.

For AMD GPUs, Nod.ai's Shark repository offers the best performance we've found so far, but only if you're doing 512x512 images; 768x768 output cuts performance to about one-fourth the speed. And Intel? Yeah, I've got 512x512 working at a pretty decent rate (9.2 images per minute on the Arc A770 16GB), but I haven't managed to get 768x768 working yet.

If you're okay with 512x512 images, the RTX 4070 can crank out around 22 images per minute. That's 84% faster than AMD's RX 7900 XT, and that's not even trying to use the newer FP8 functionality (though Stable Diffusion might not benefit as much from that as other workloads, as it might need the additional precision of FP16). Moving up to 768x768 Stable Diffusion 2.1 images, the RTX 4070 still plugs along at over nine images per minute (59% slower than 512x512), but for now AMD's fastest GPUs drop to around a third of that speed.
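As a sanity check on that scaling, here's a small sketch that predicts the 768x768 rate from the 512x512 rate, assuming generation time is strictly proportional to pixel count. The `expected_rate` helper is our own illustration and not part of any Stable Diffusion tooling.

```python
def expected_rate(base_rate, base_side, target_side):
    """Scale images/minute assuming time per image is proportional to pixels."""
    pixel_factor = (target_side / base_side) ** 2
    return base_rate / pixel_factor


rate_512 = 22  # images per minute on the RTX 4070 at 512x512, from above

predicted_768 = expected_rate(rate_512, 512, 768)
print(f"pixel factor: {(768 / 512) ** 2:.2f}X")
print(f"predicted 768x768 rate: {predicted_768:.1f} images per minute")
```

The prediction lands a little under ten images per minute, in the same ballpark as the "over nine" we measured, so the proportional-to-pixels rule of thumb holds up reasonably well here.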

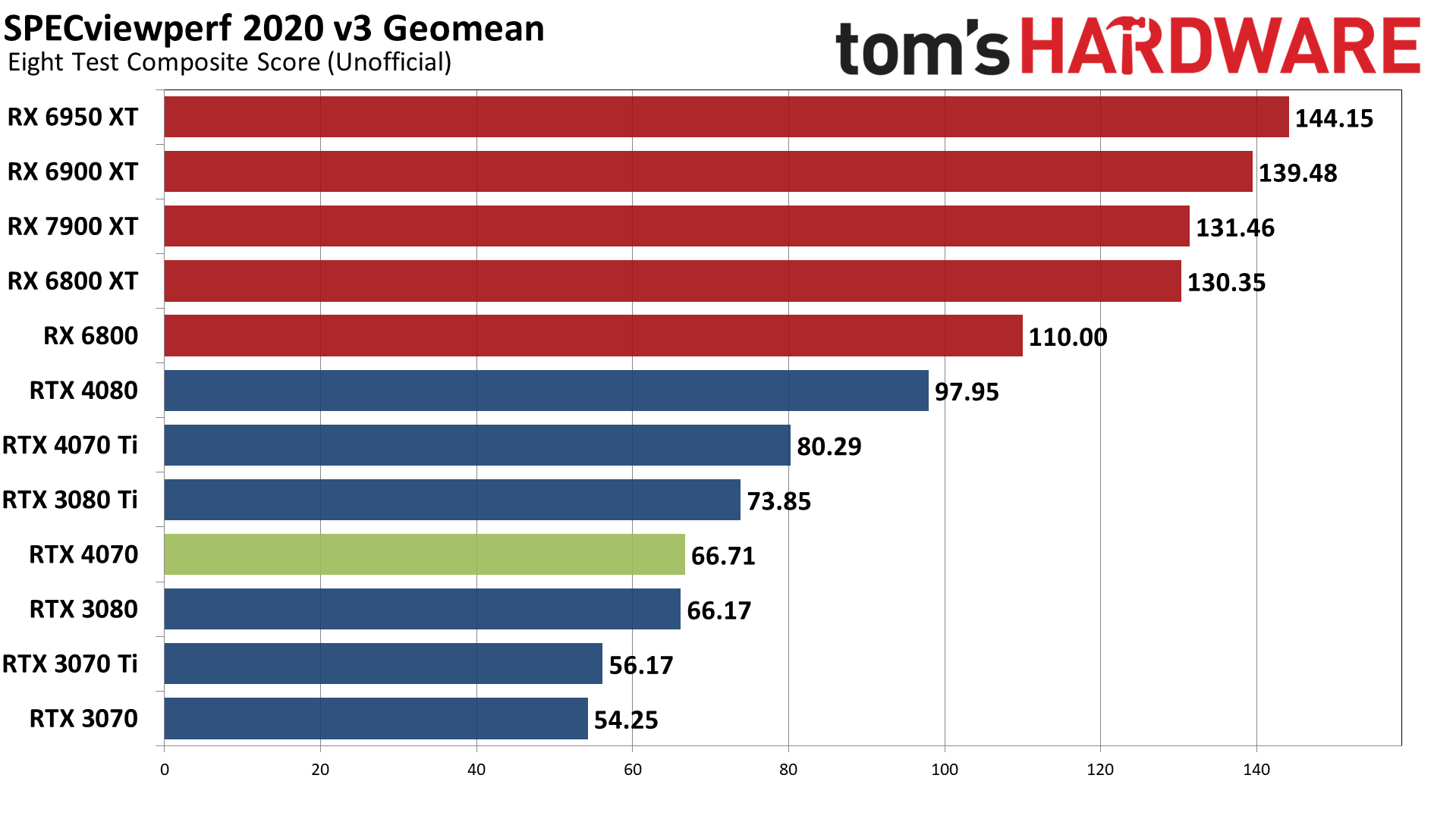

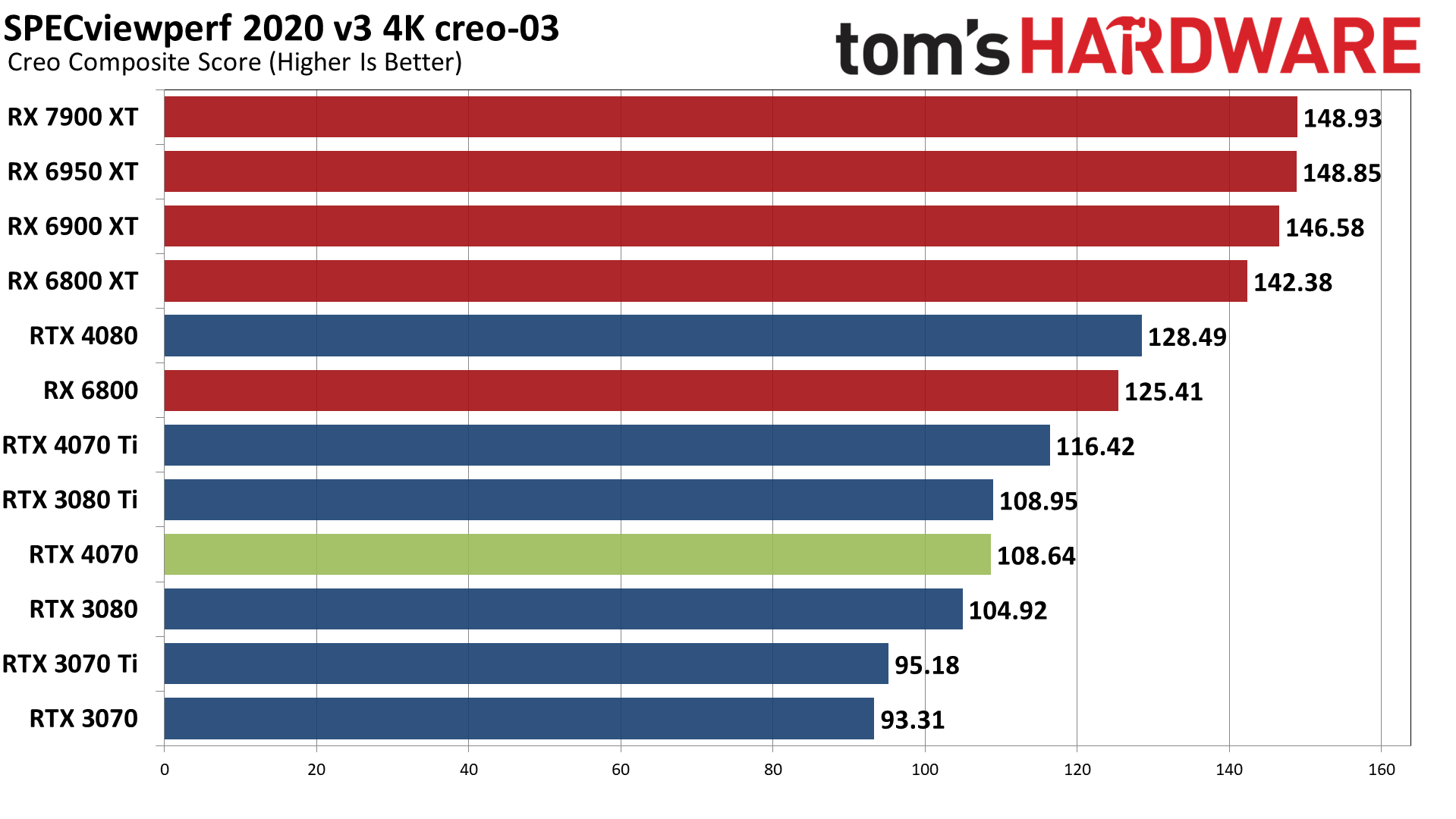

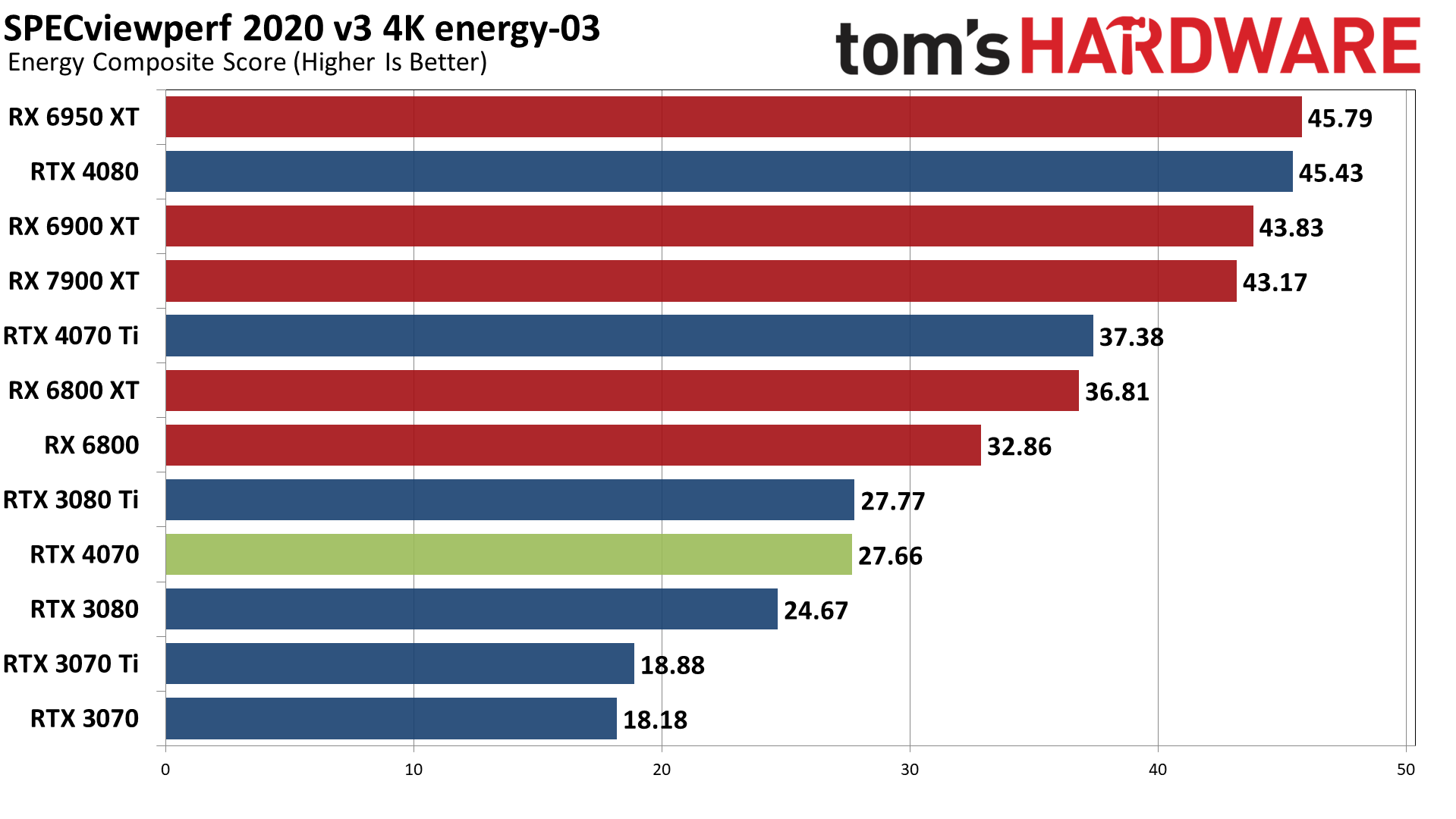

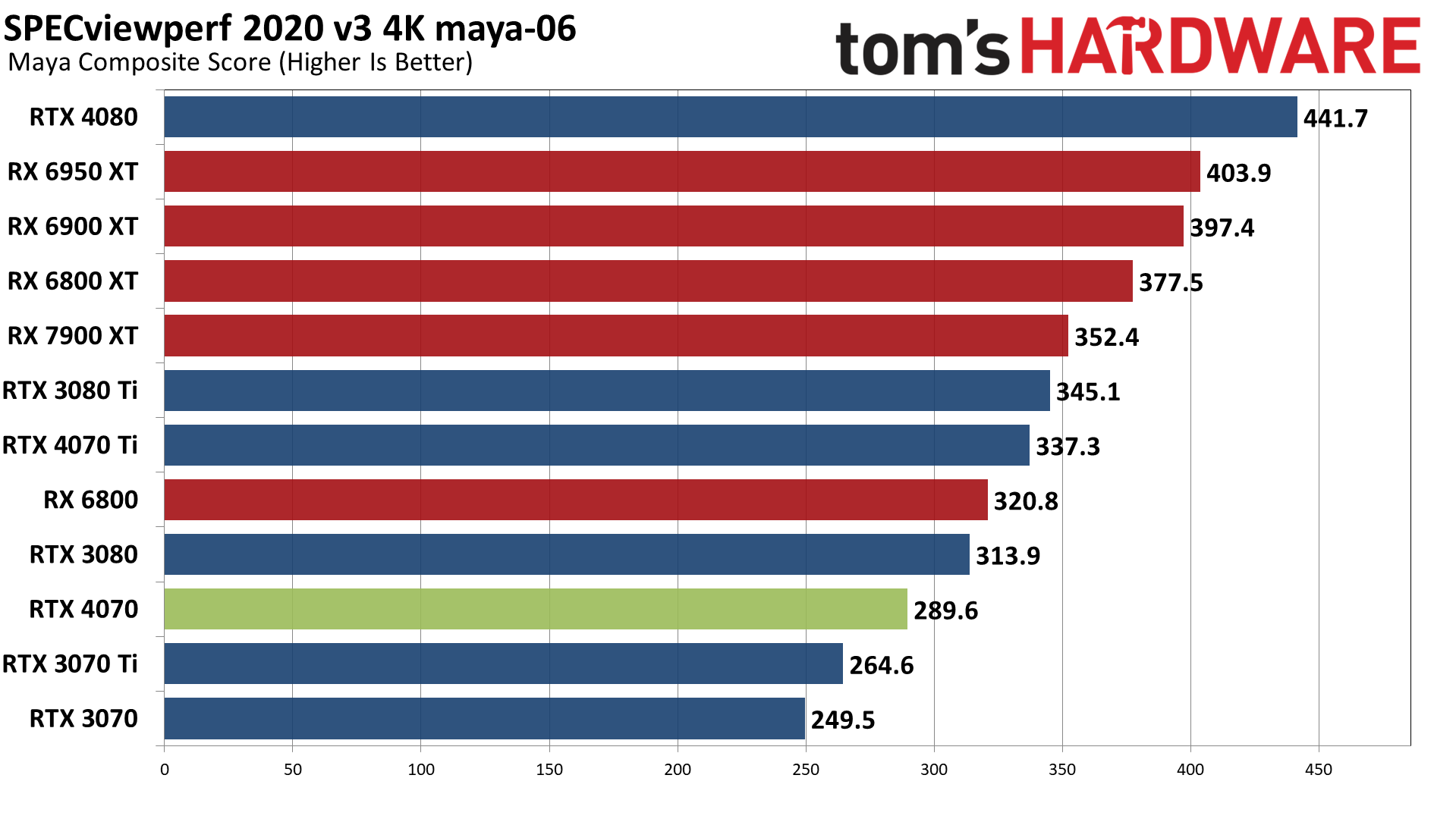

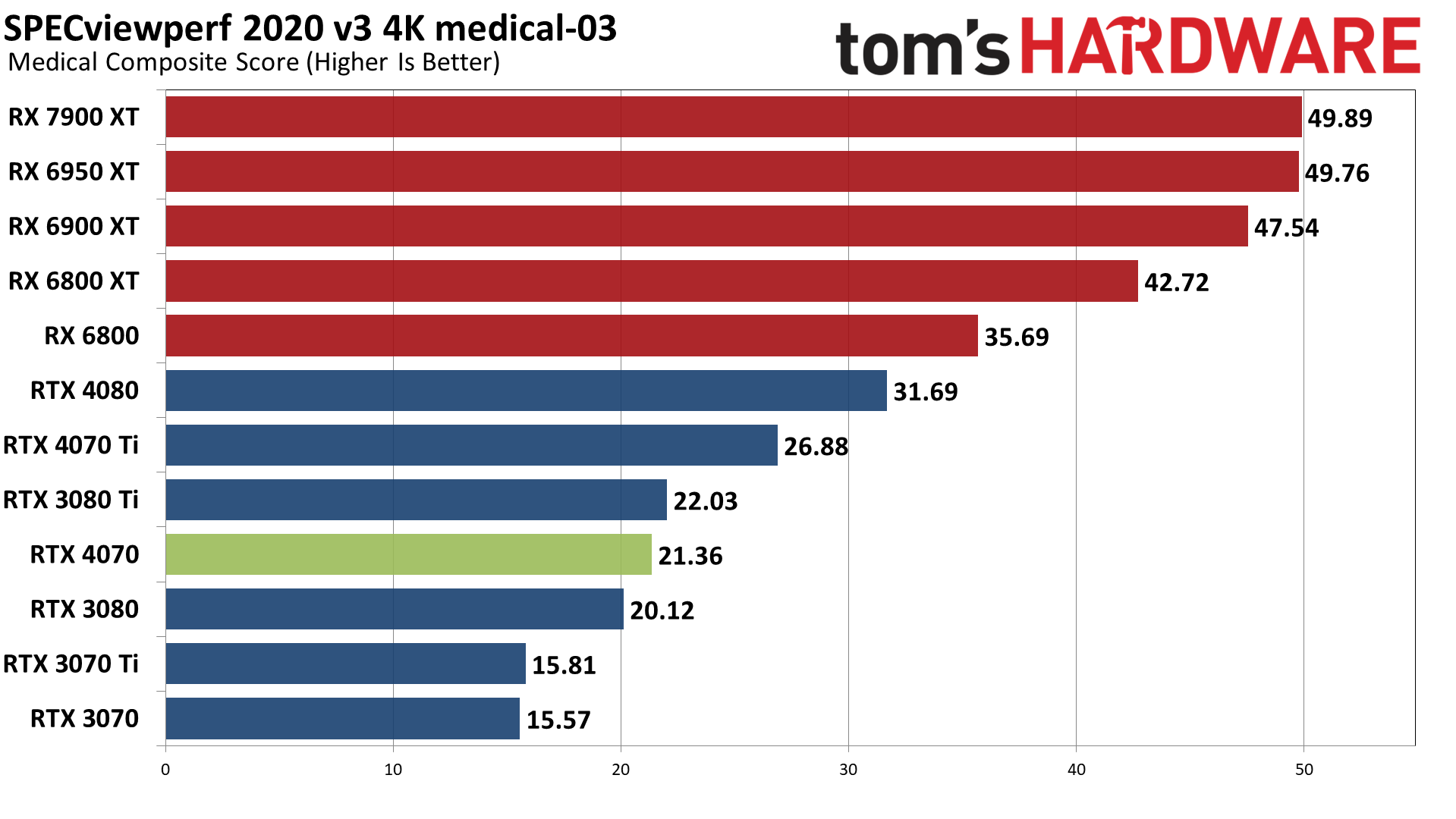

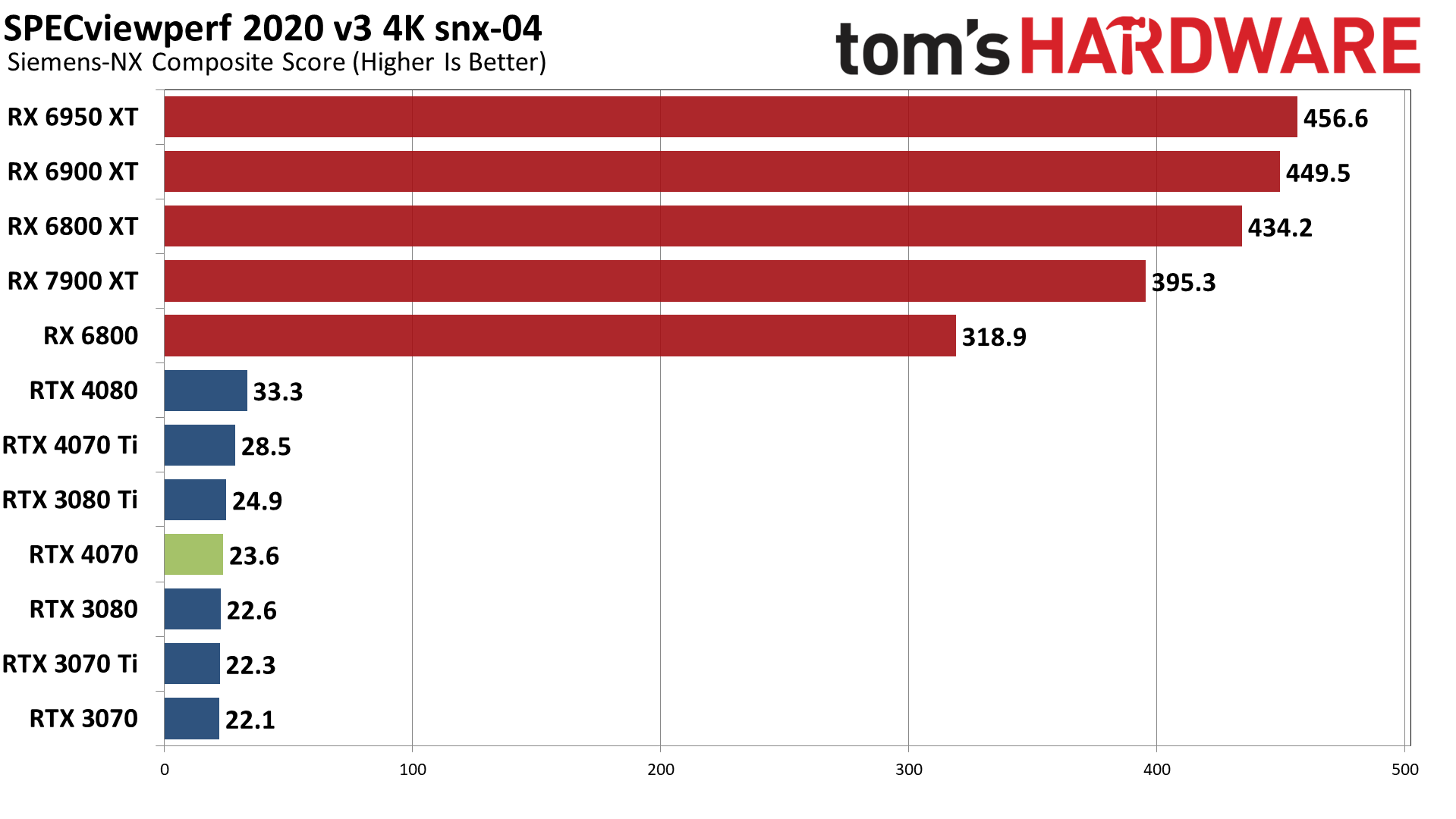

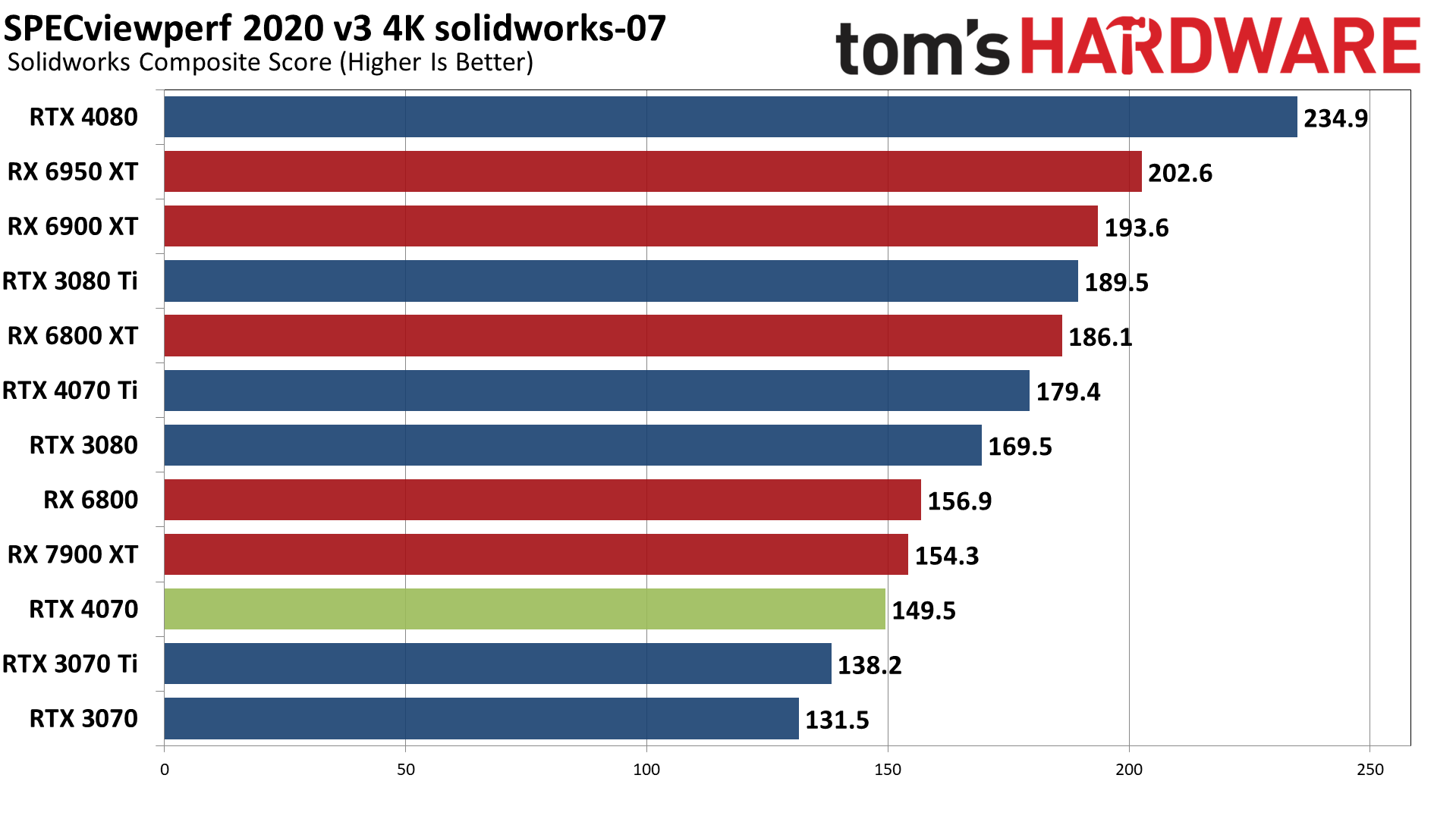

SPECviewperf 2020 consists of eight different benchmarks, and we use the geometric mean from those to generate an aggregate "overall" score. Note that this is not an official score, but it gives equal weight to the individual tests and provides a nice high-level overview of performance. Few professionals use all of these programs, however, so it's typically more important to look at the results for the application(s) you plan to use.
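For anyone curious how the aggregate works, here's a minimal sketch of a geometric mean across the eight viewsets. The scores in `example_scores` are placeholders we made up for illustration, not measured results.

```python
import math


def geometric_mean(scores):
    """Geometric mean computed via logs; requires all scores to be positive."""
    scores = list(scores)
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# Placeholder scores for the eight viewsets. NOT measured results.
example_scores = {
    "3dsmax-07": 100.0, "catia-06": 80.0, "creo-03": 120.0, "energy-03": 60.0,
    "maya-06": 300.0, "medical-03": 40.0, "snx-04": 20.0, "solidworks-07": 150.0,
}

overall = geometric_mean(example_scores.values())
print(f"overall (geometric mean): {overall:.1f}")
```

Unlike an arithmetic average, a geometric mean isn't dominated by whichever test happens to produce the biggest raw numbers: doubling any single score raises the aggregate by the same factor.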

Across the eight tests, Nvidia's RTX 4070 basically ties the RTX 3080, just as we saw with our gaming performance results. AMD's more recent drivers provided a substantial boost to performance, something that you can really only match with Nvidia's professional series cards.

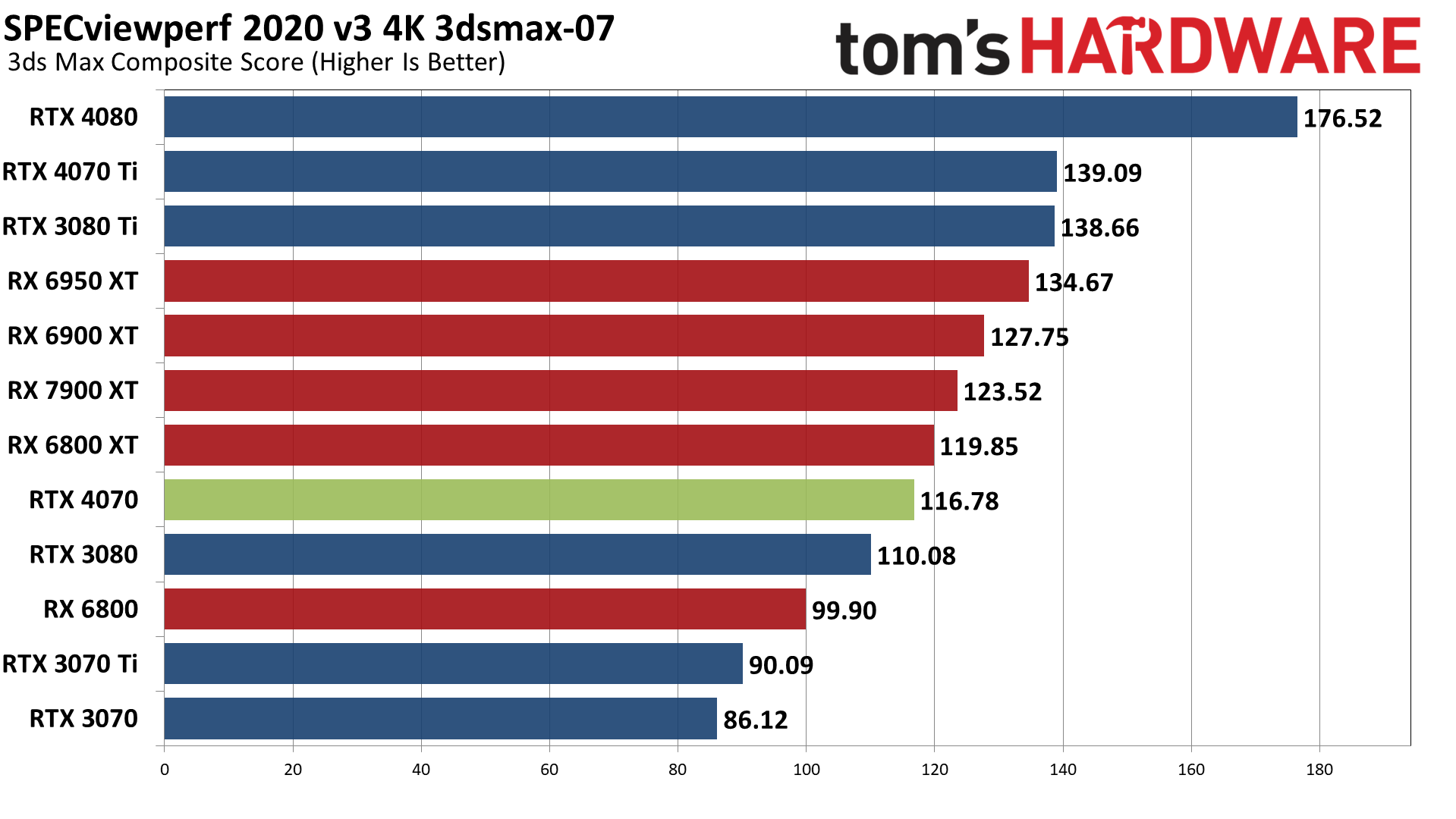

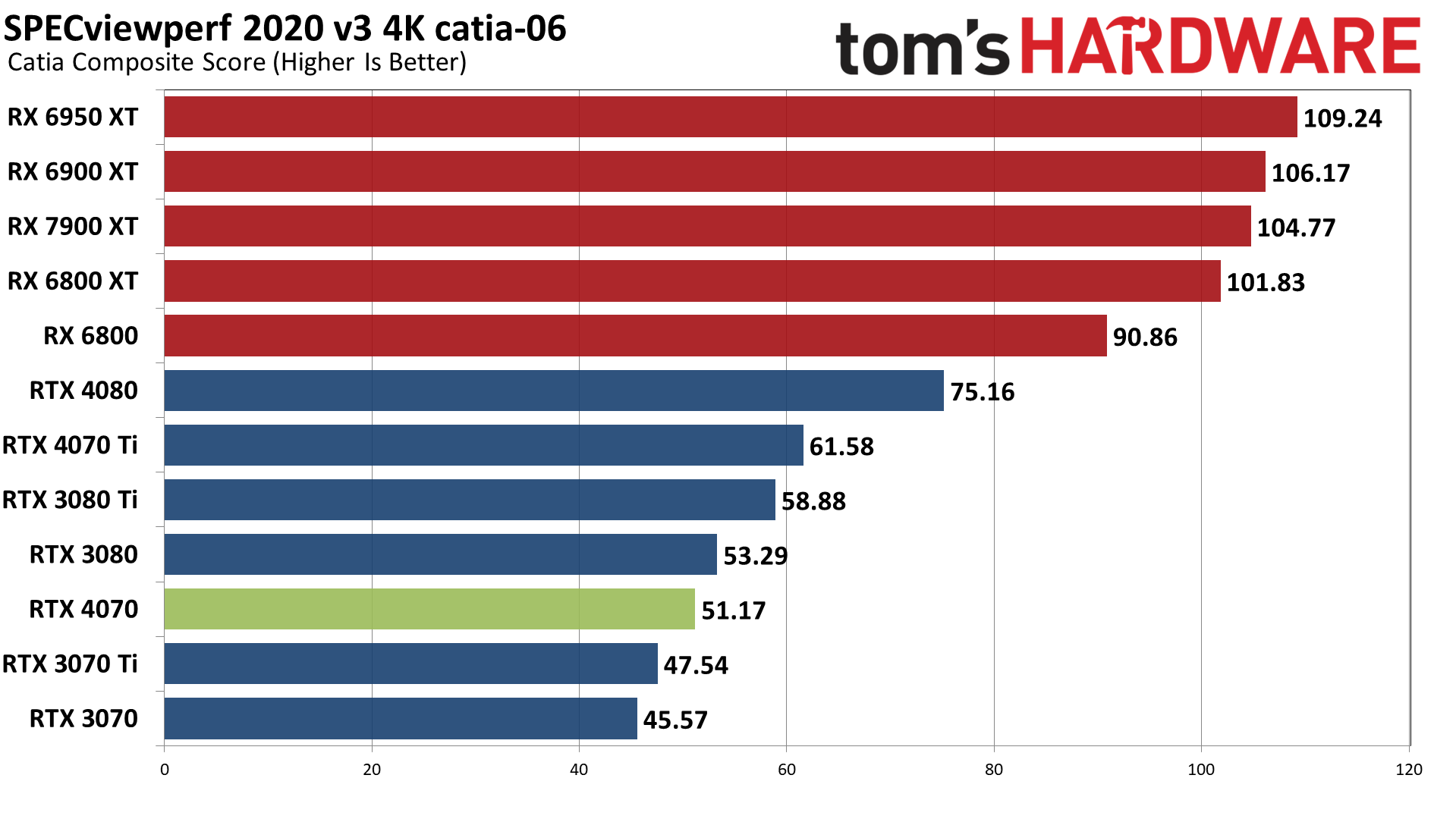

AMD scores particularly well in snx-04 (or if you prefer, Nvidia's consumer RTX cards do very poorly). AMD also tends to score higher in catia-06, creo-03, energy-03, and medical-03, while Nvidia GPUs do better in 3dsmax-07, with maya-06 and solidworks-07 being more neutral. If you use any of these applications on a regular basis, that could be enough to sway your GPU purchasing decision.

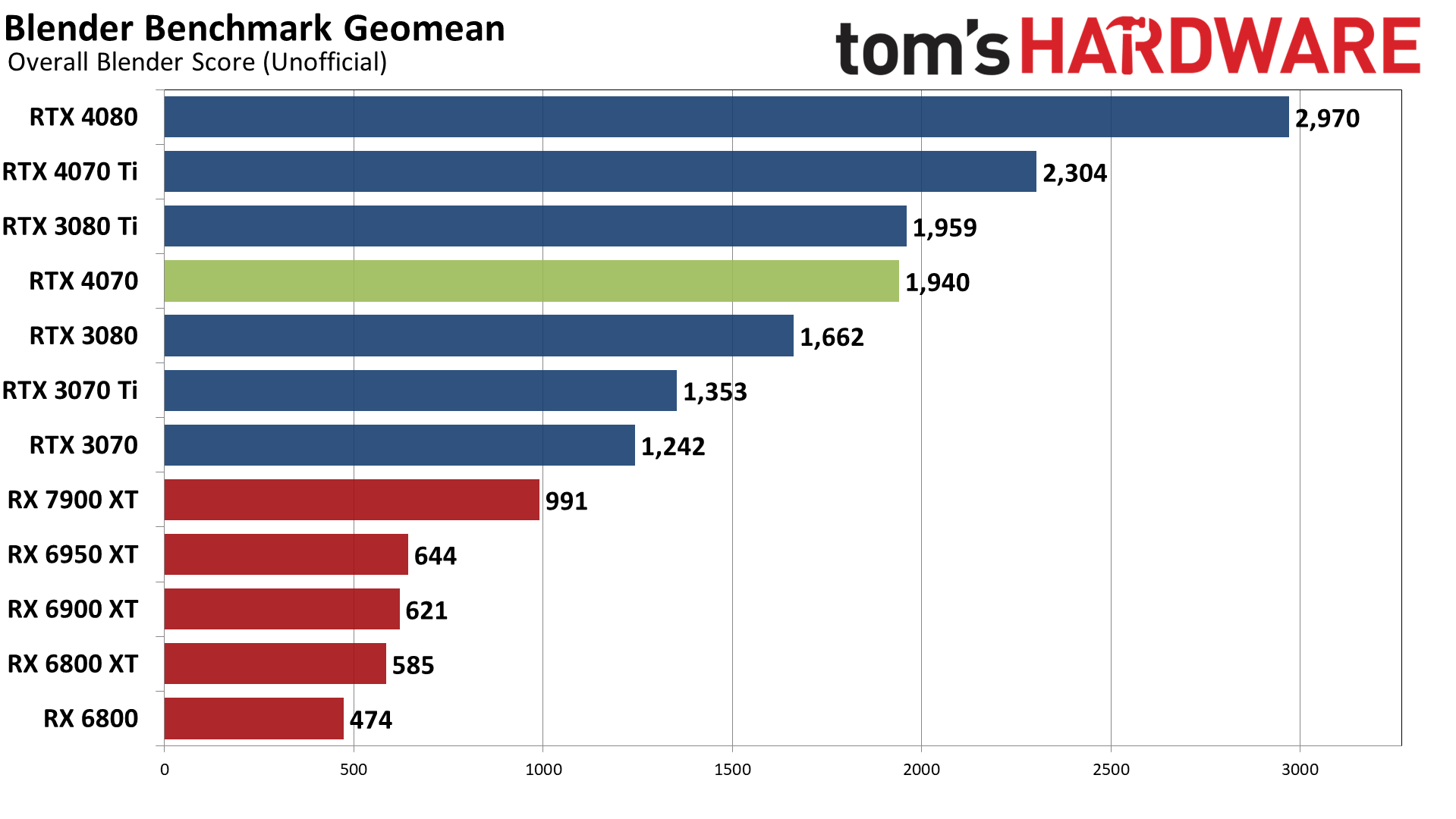

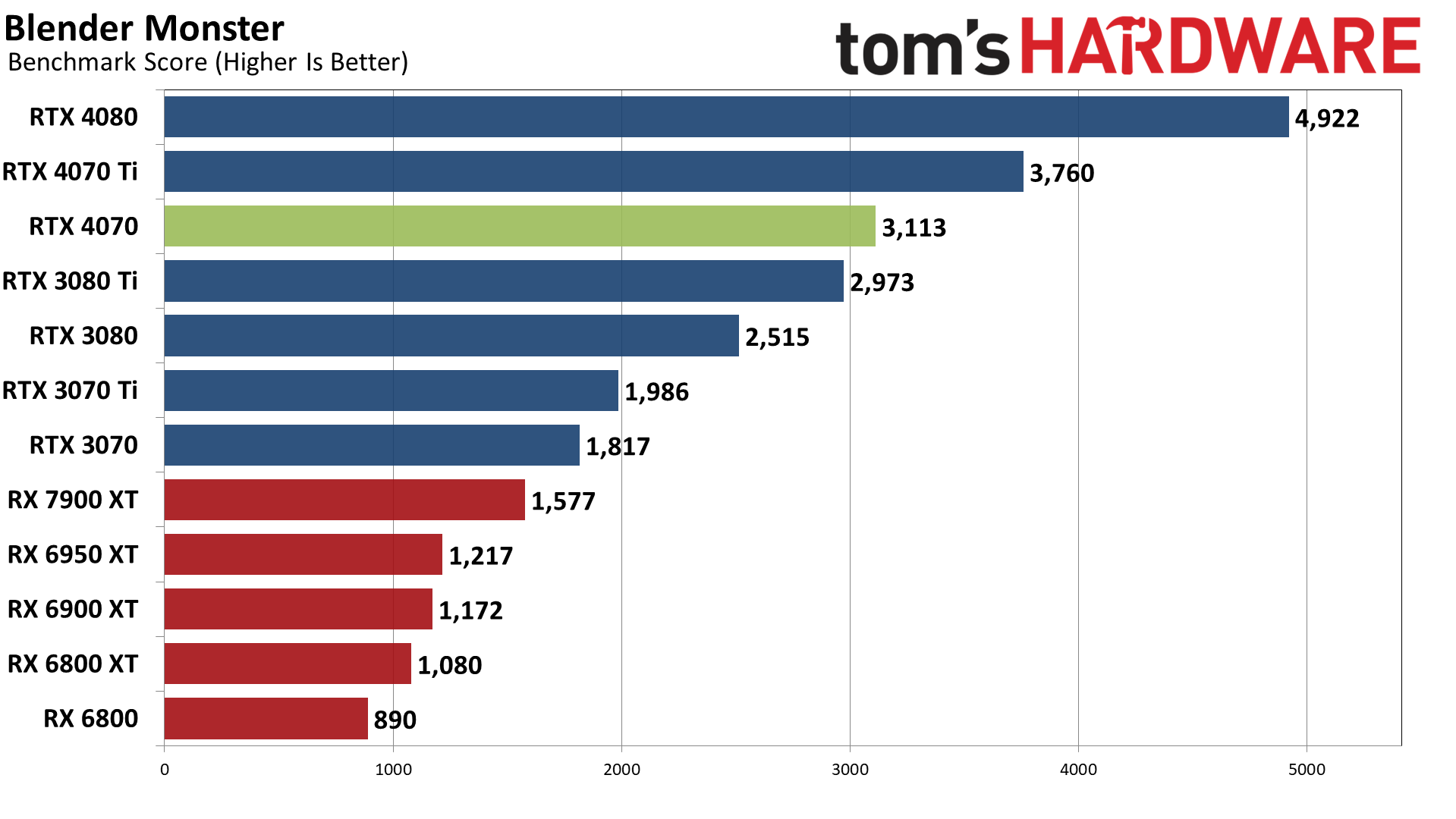

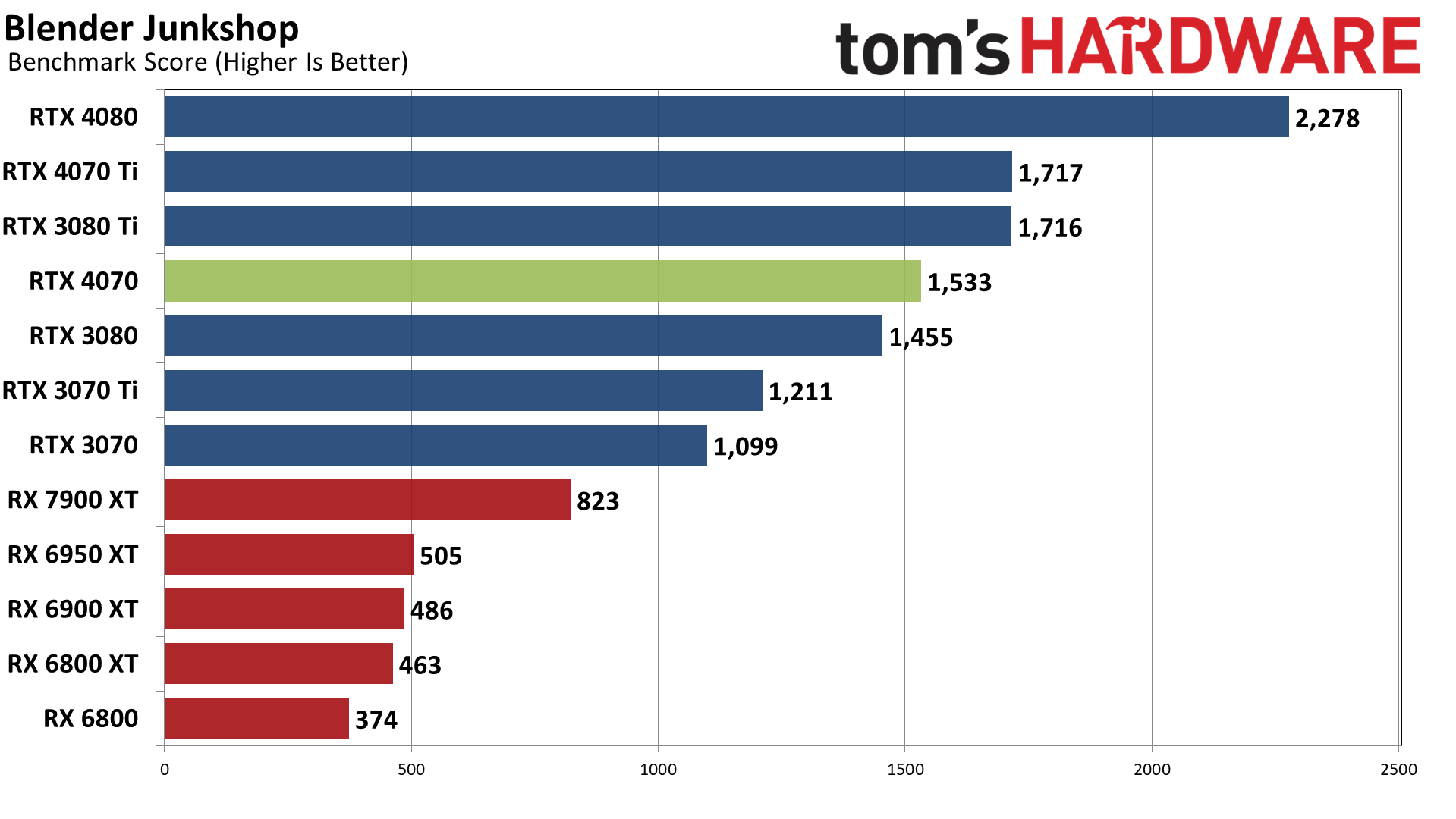

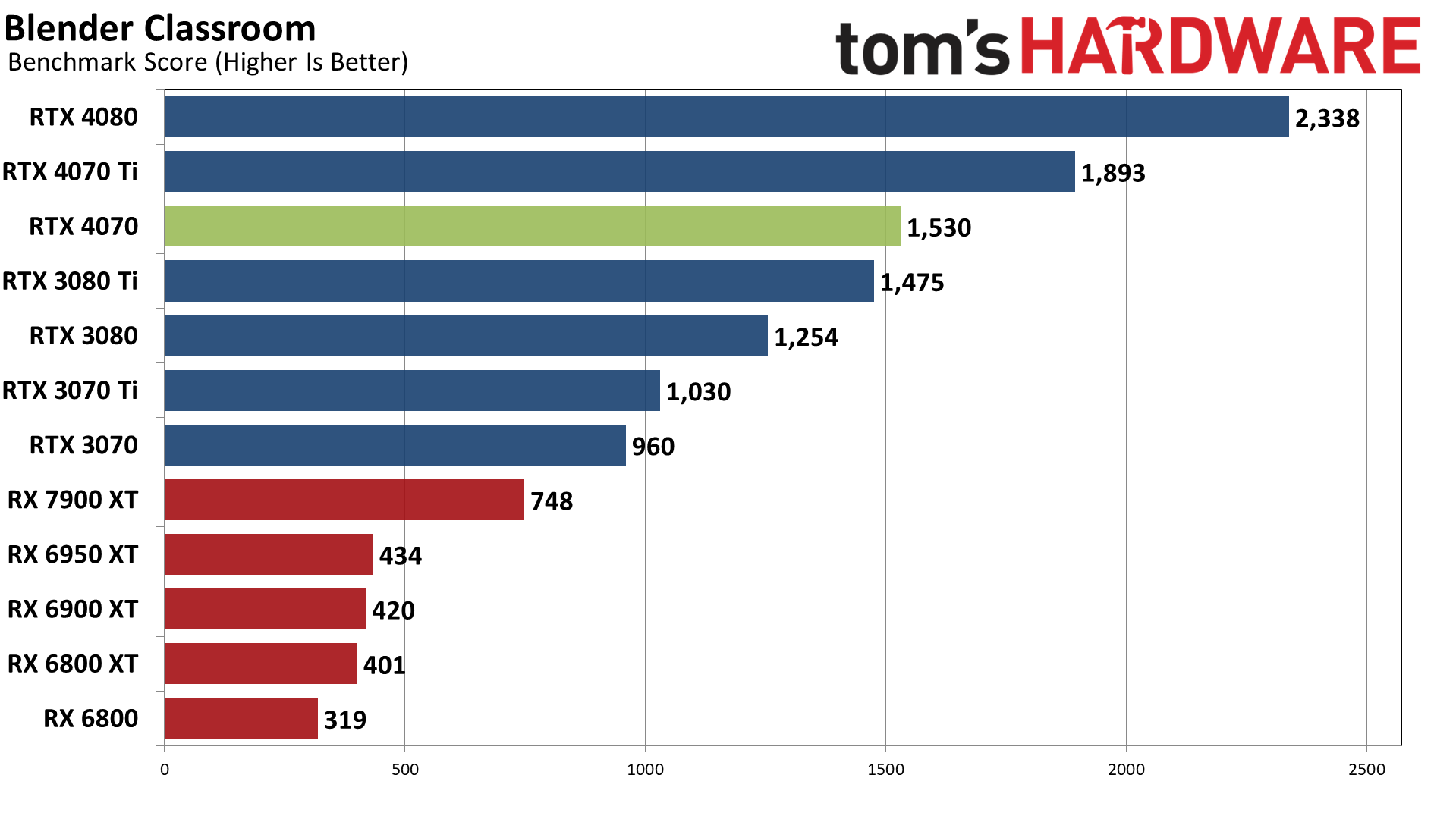

Moving on to 3D rendering, Blender is a popular open-source rendering application, and we're using the latest Blender Benchmark, which uses Blender 3.50 and three tests. Blender 3.50 includes the Cycles X engine that leverages ray tracing hardware on AMD, Nvidia, and even Intel Arc GPUs. It does so via AMD's HIP interface (Heterogeneous-computing Interface for Portability), Nvidia's CUDA or OptiX APIs, and Intel's OneAPI — which means Nvidia GPUs have some performance advantages due to the OptiX API.

The RTX 4070 ends up basically tied with the 3080 Ti this time, winning in two of three individual scenes as well as in the aggregate score. It's interesting that Junkshop seems to favor the 3080 Ti, but we don't have any clear indication why that might be so. AMD's GPUs meanwhile fall far behind, and even the RTX 3070 delivers better performance in our testing.

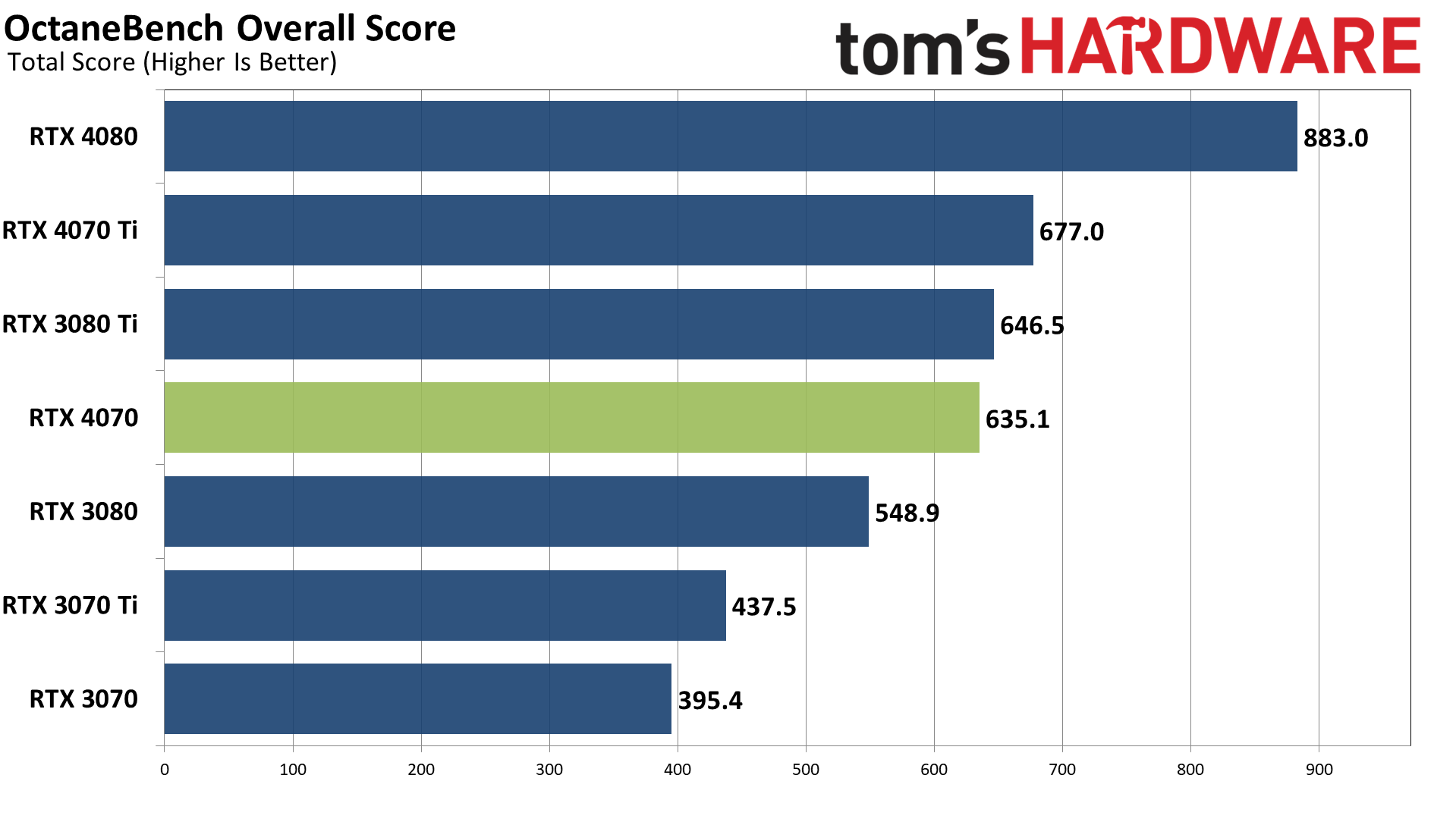

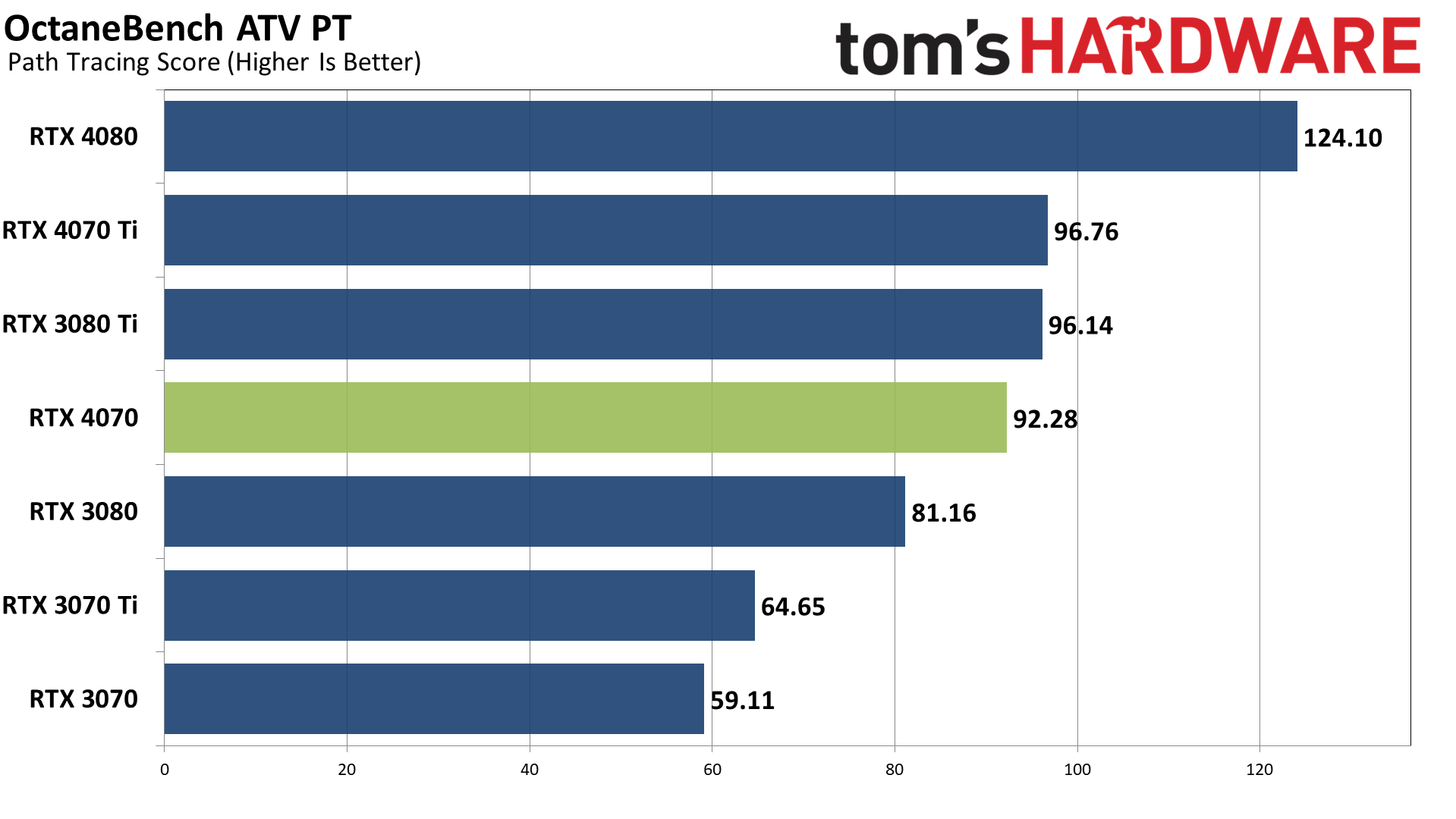

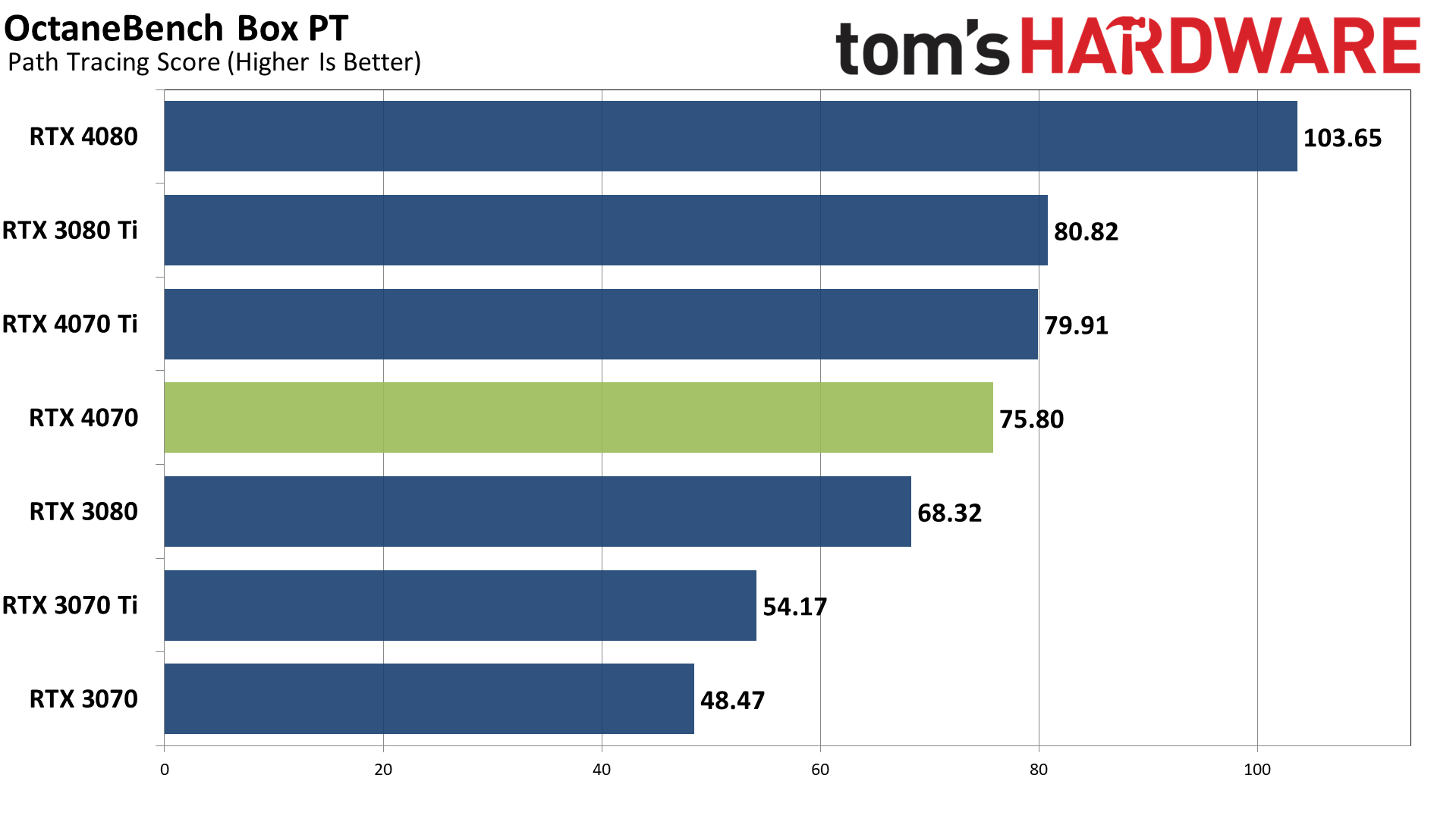

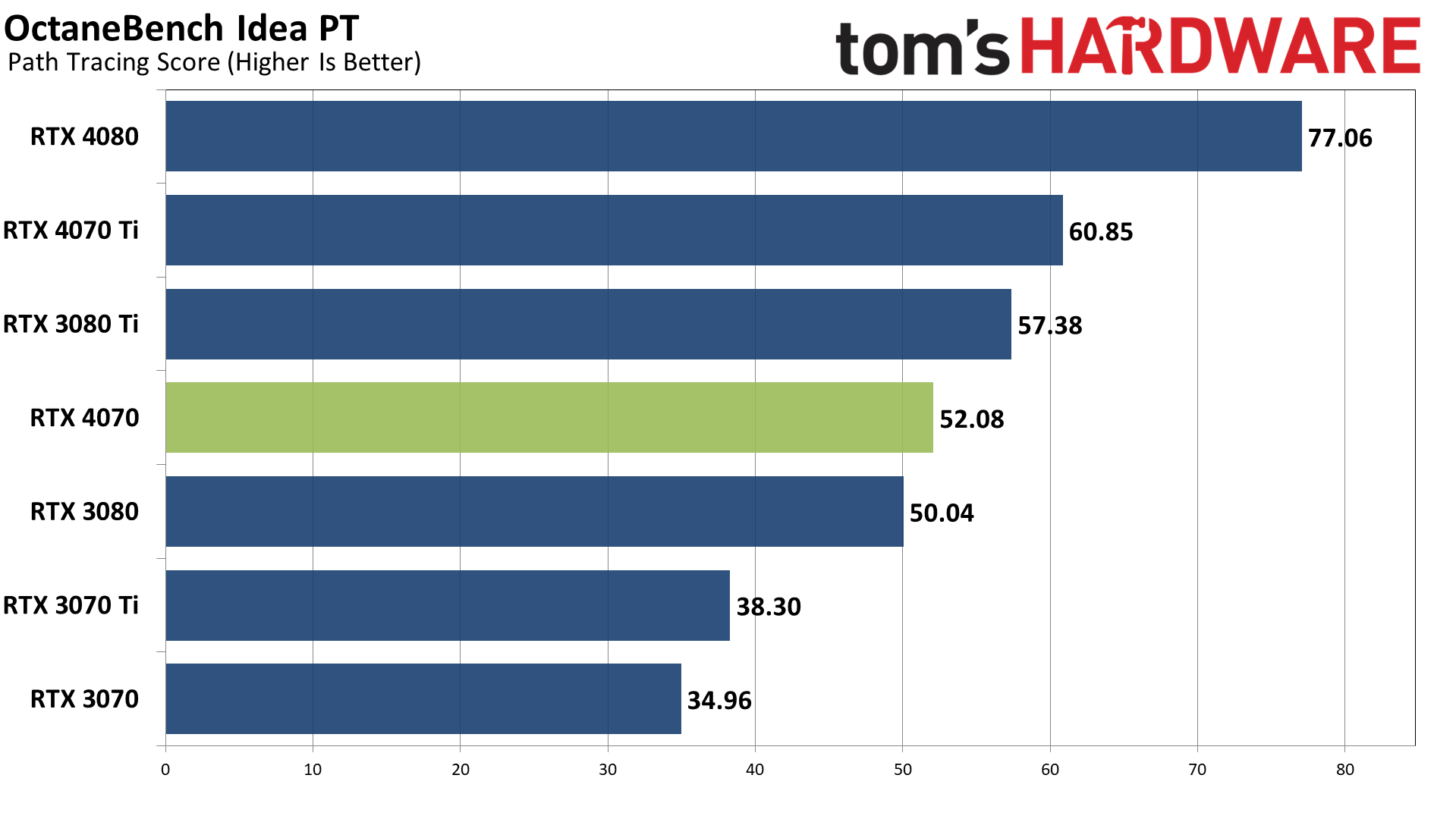

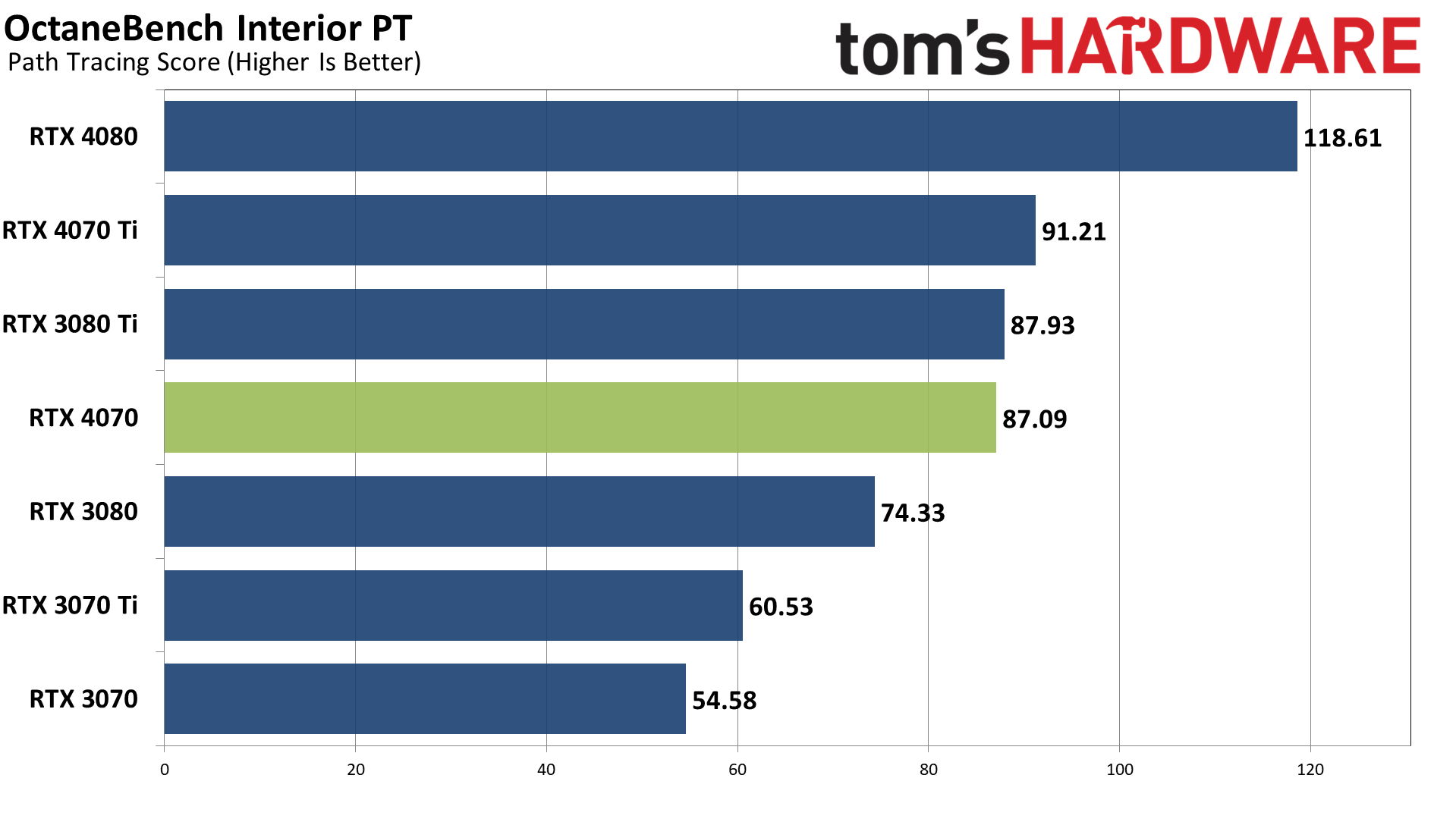

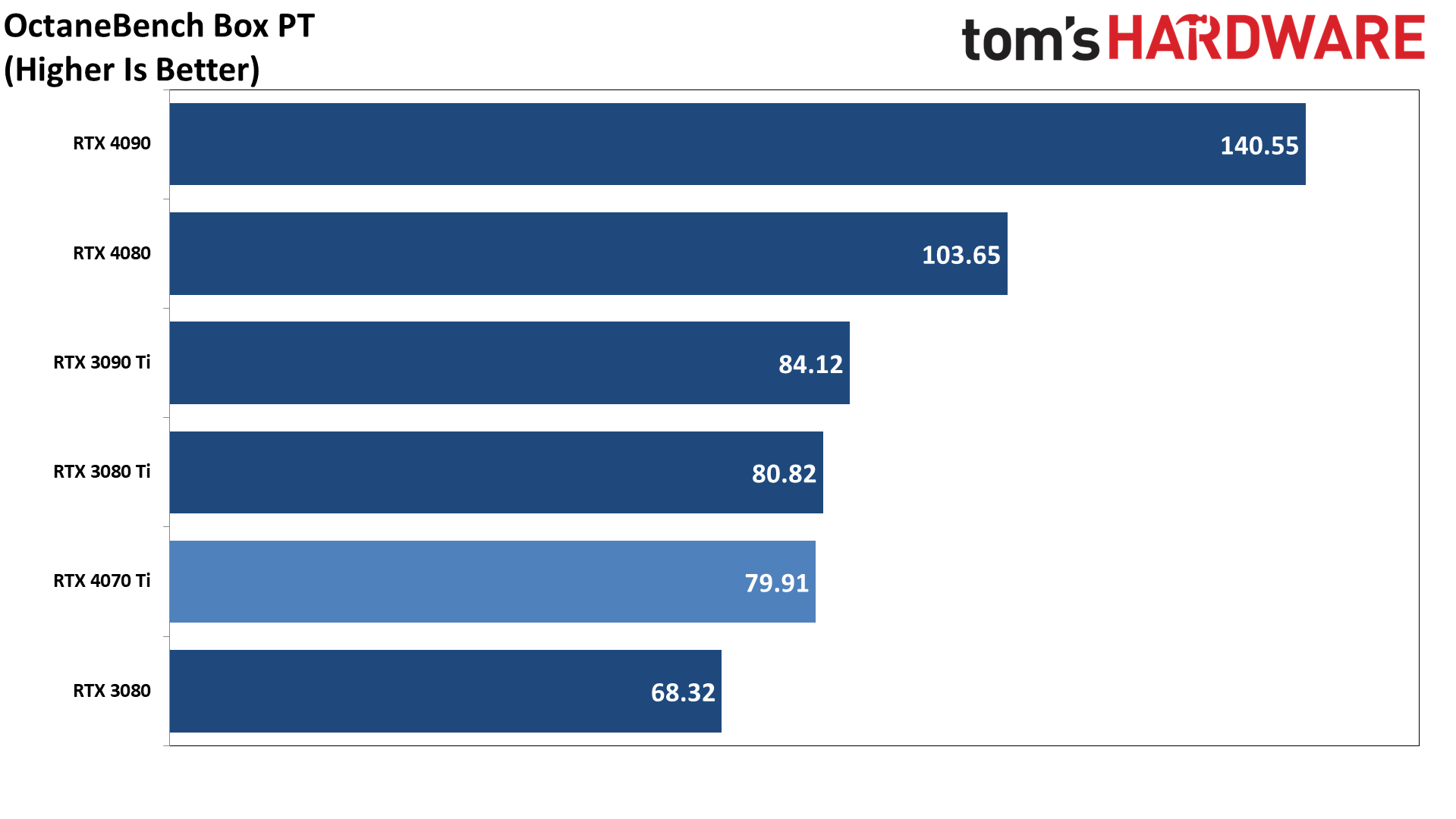

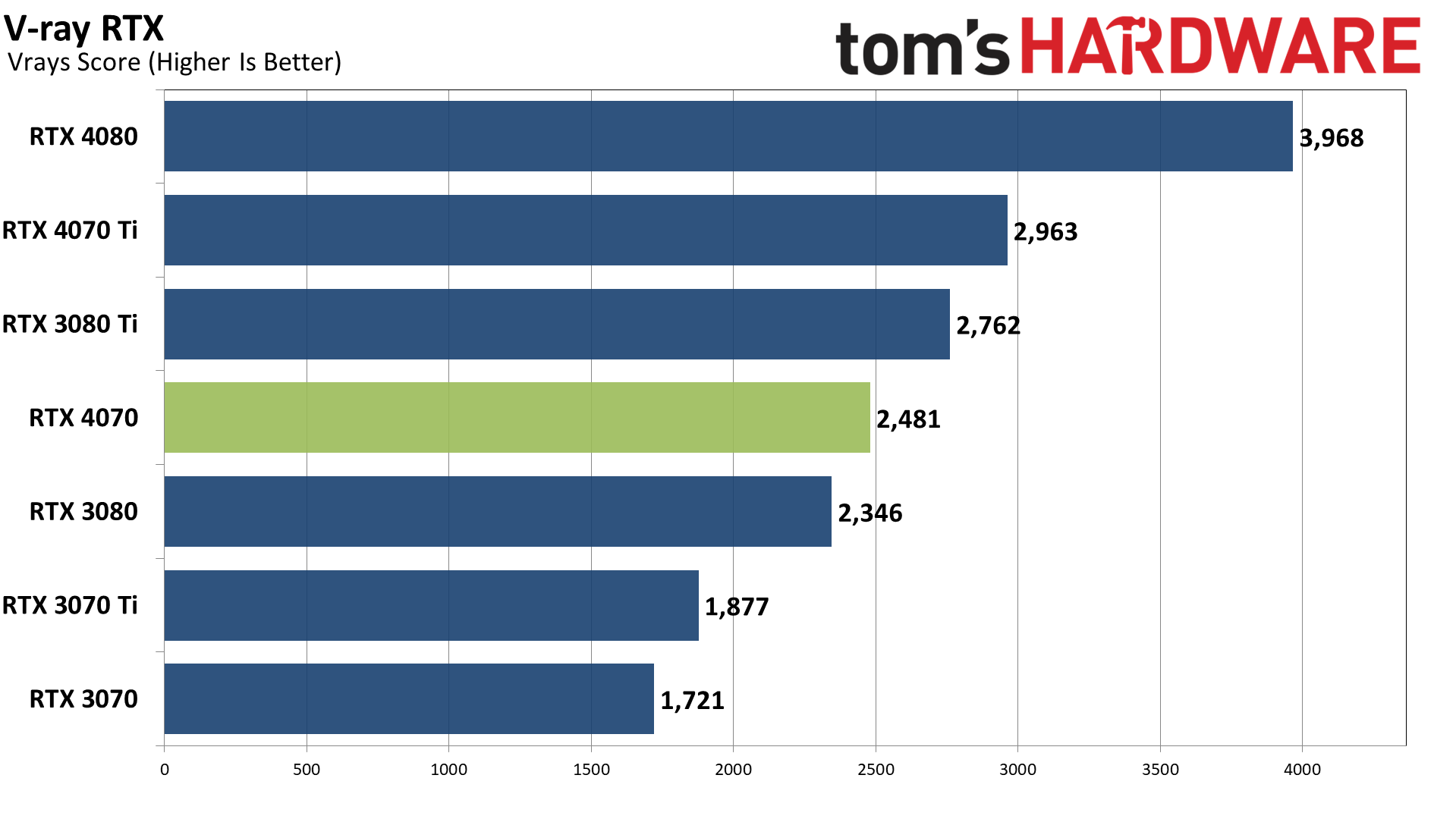

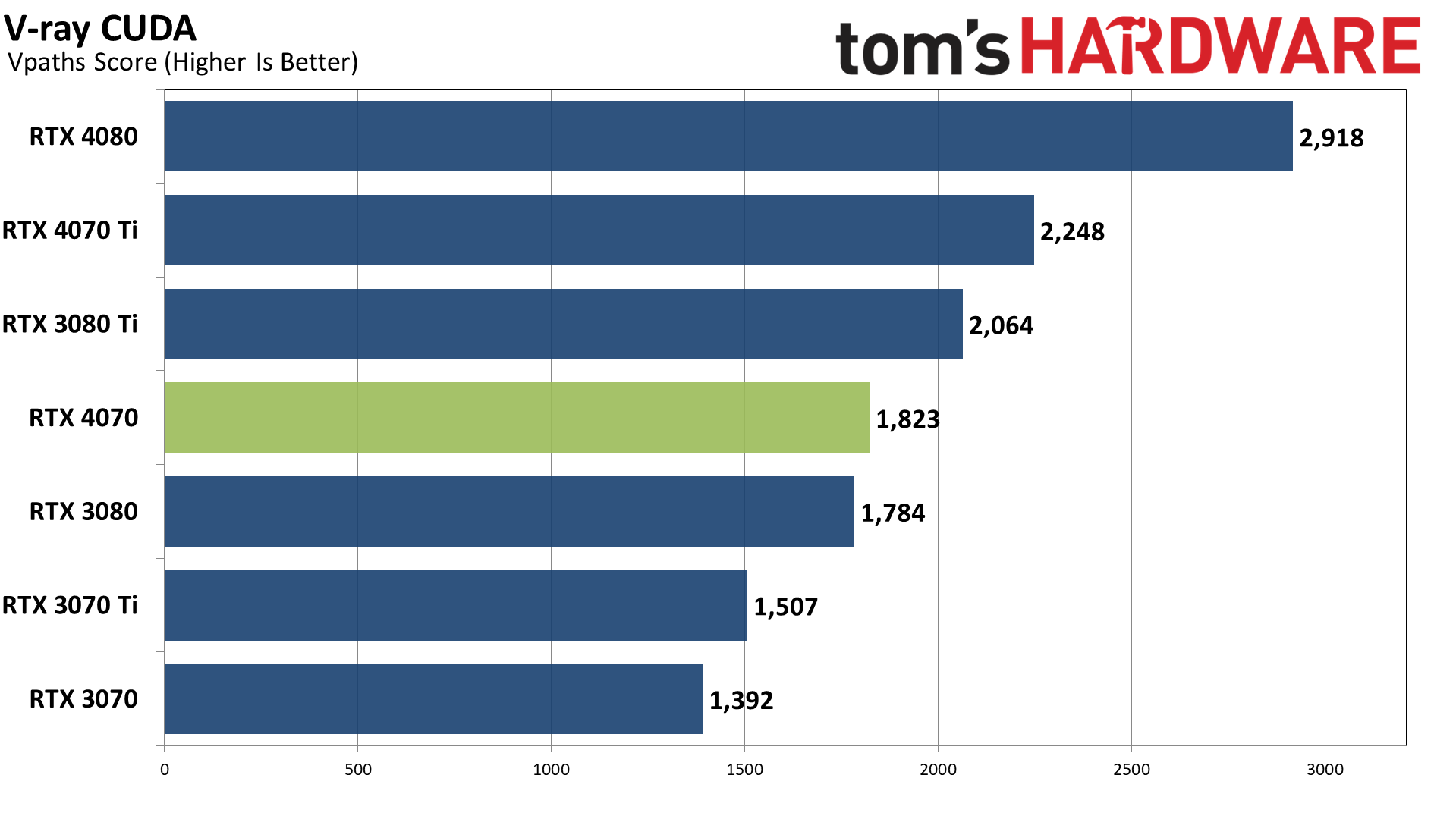

Our final two professional applications only have ray tracing hardware support for Nvidia's GPUs. OctaneBench pegs the RTX 4070 as being just a touch behind the RTX 3080 Ti but decently ahead of the vanilla RTX 3080. V-Ray goes the other route and puts performance just barely ahead of the 3080 but a decent amount behind the 3080 Ti.


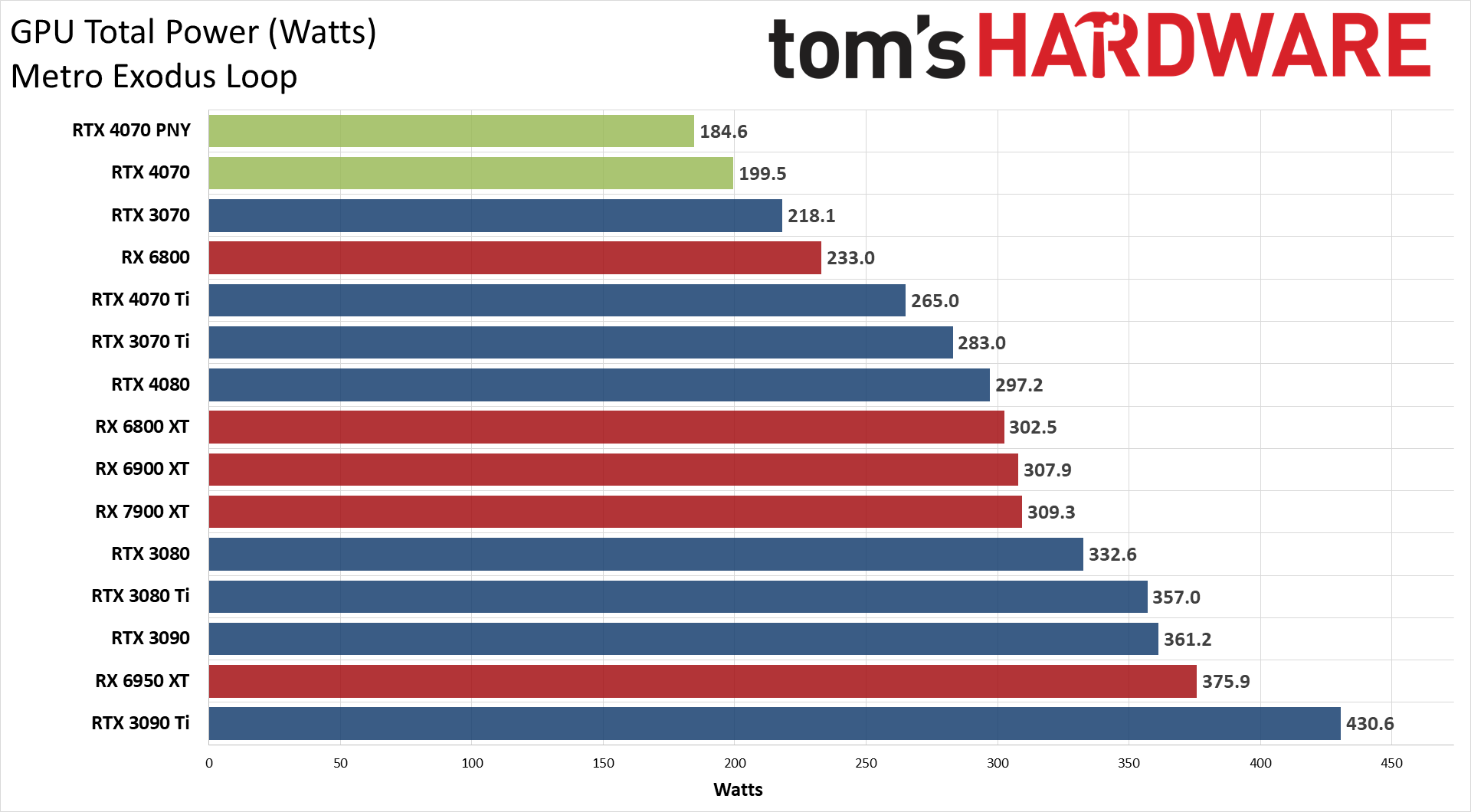

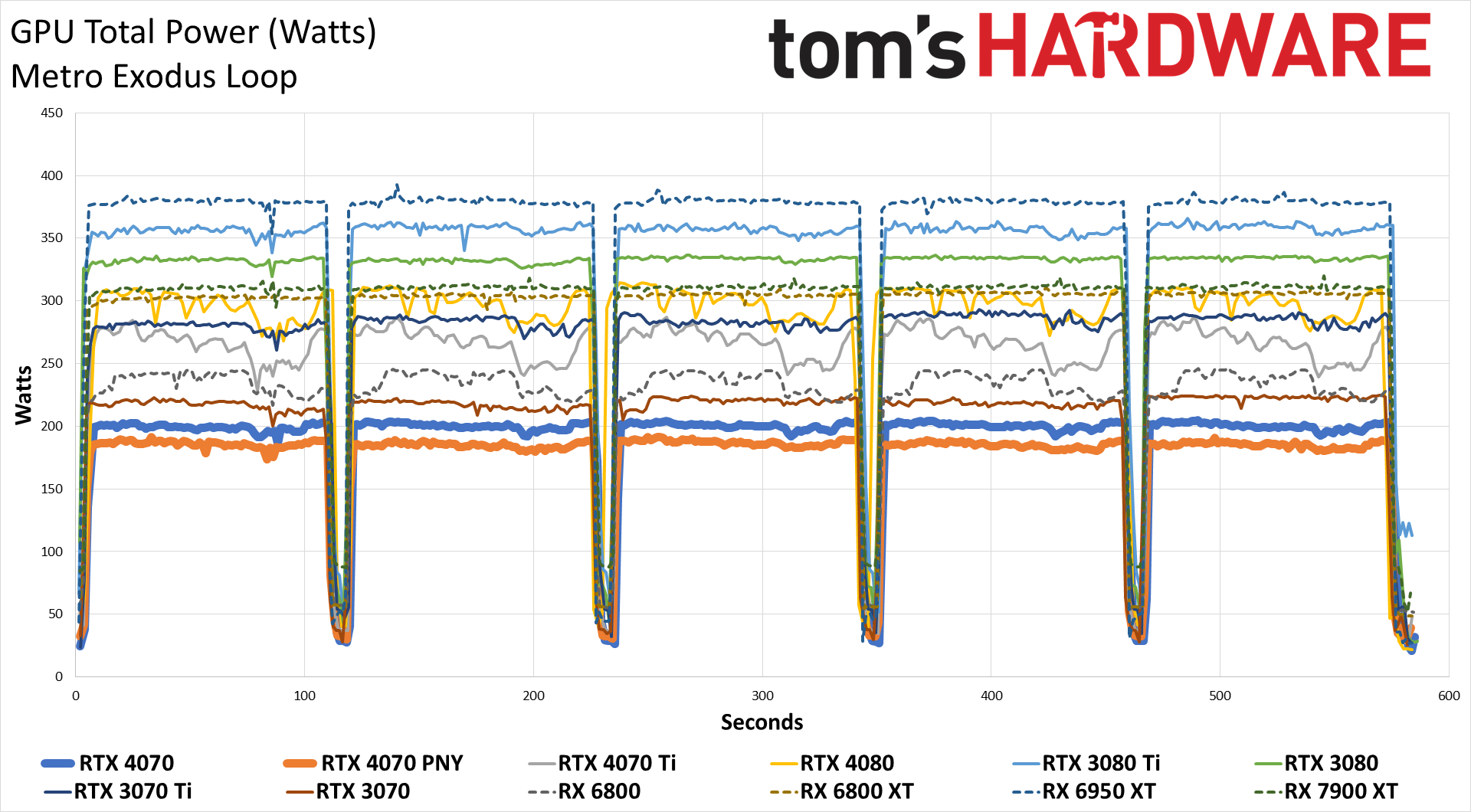

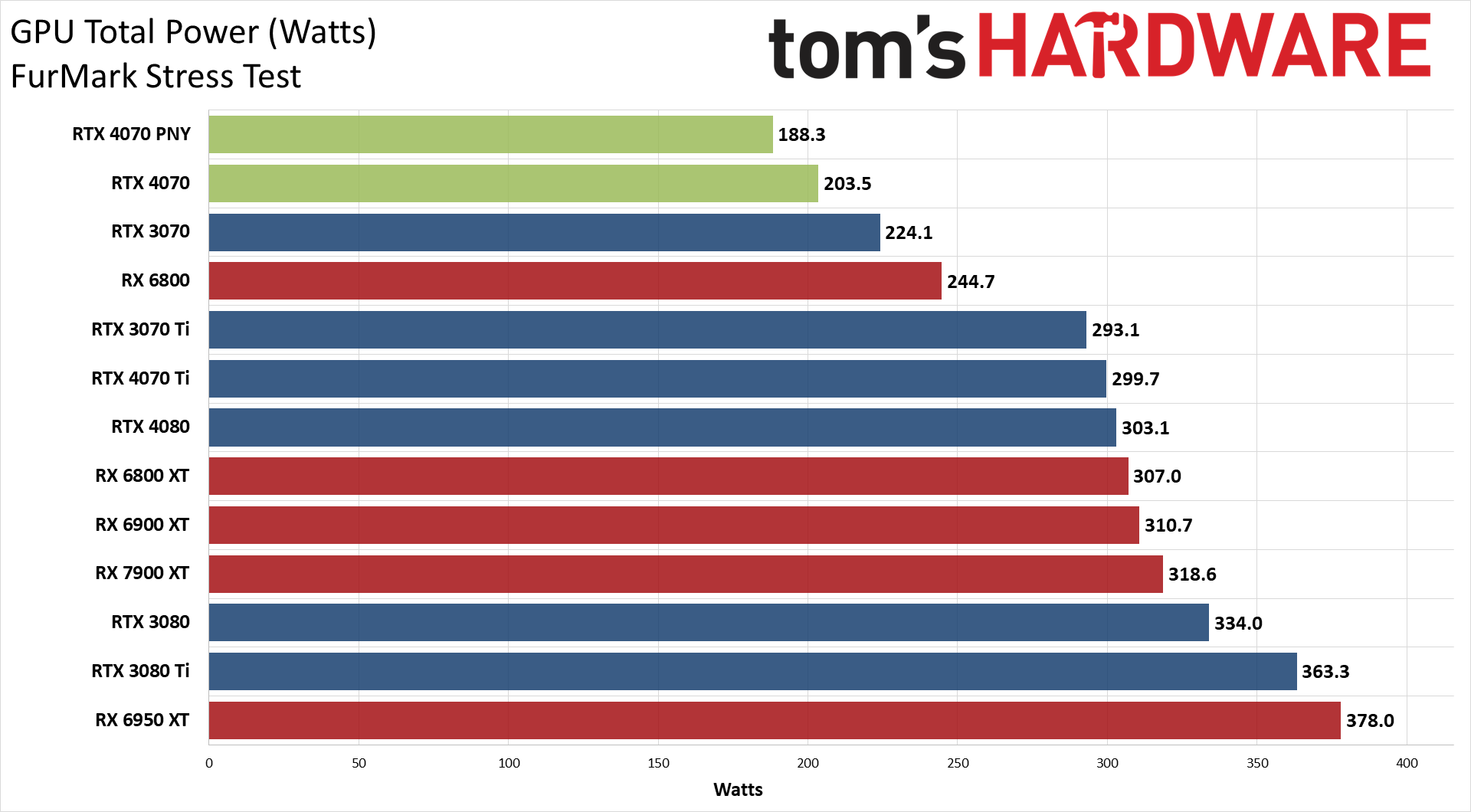

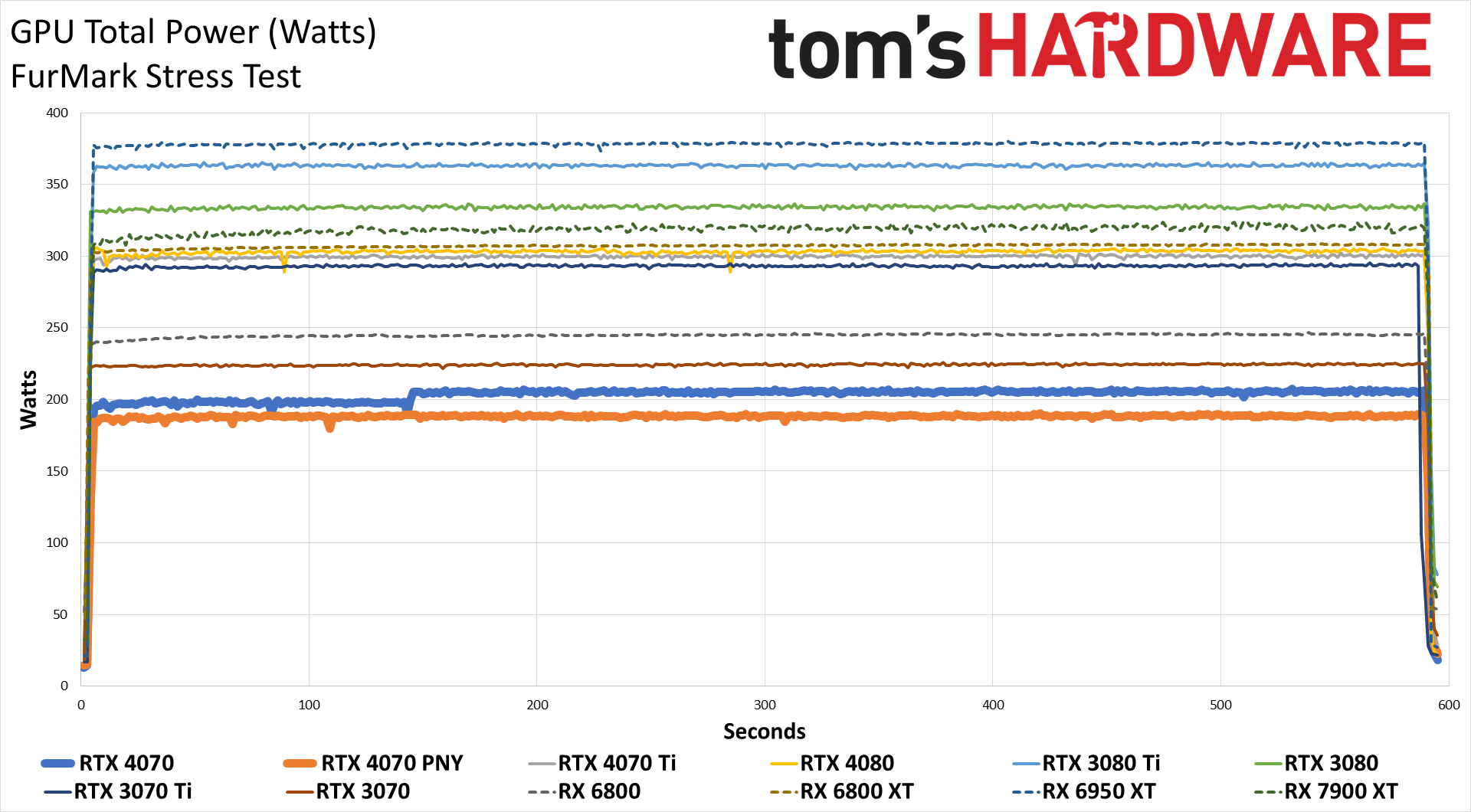

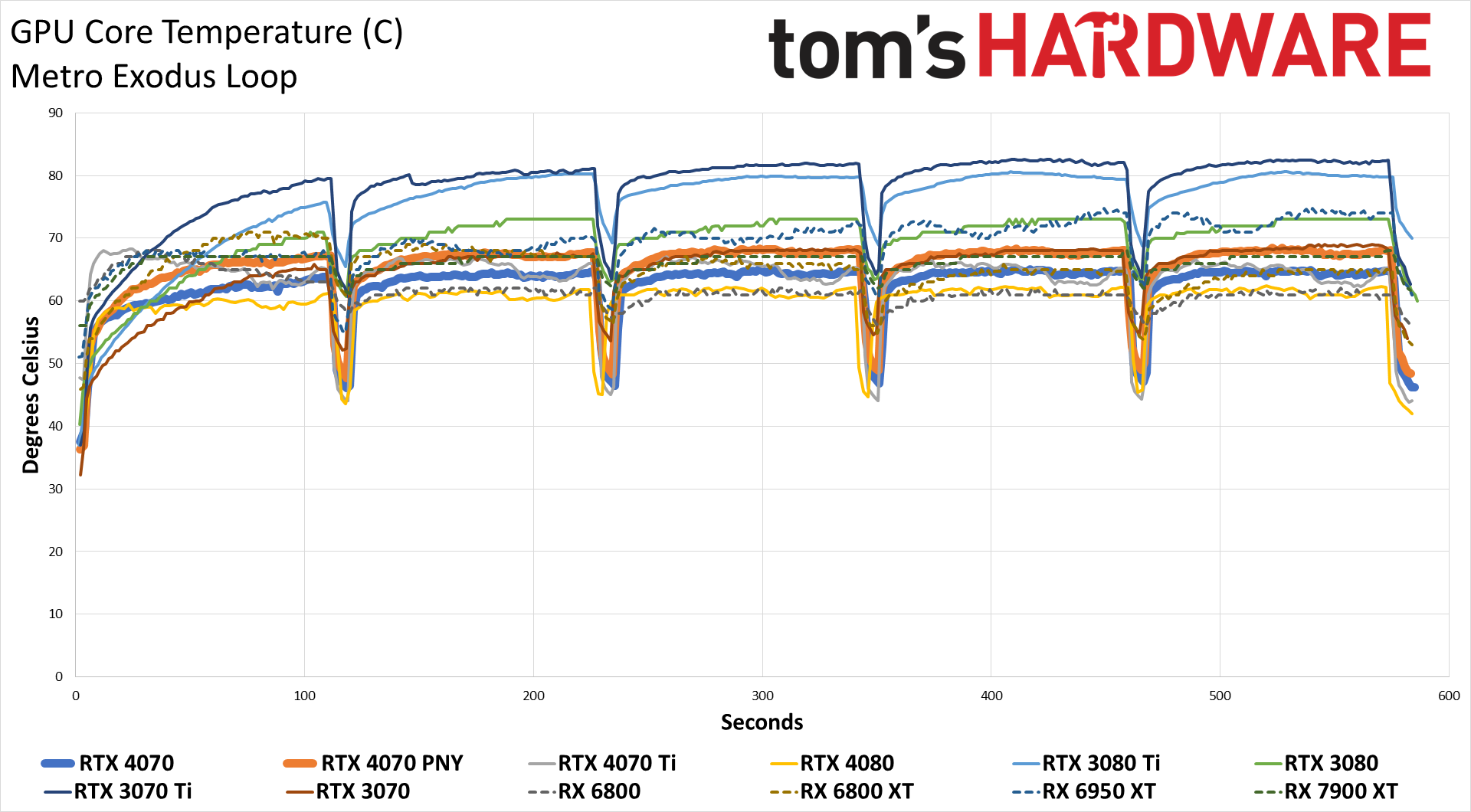

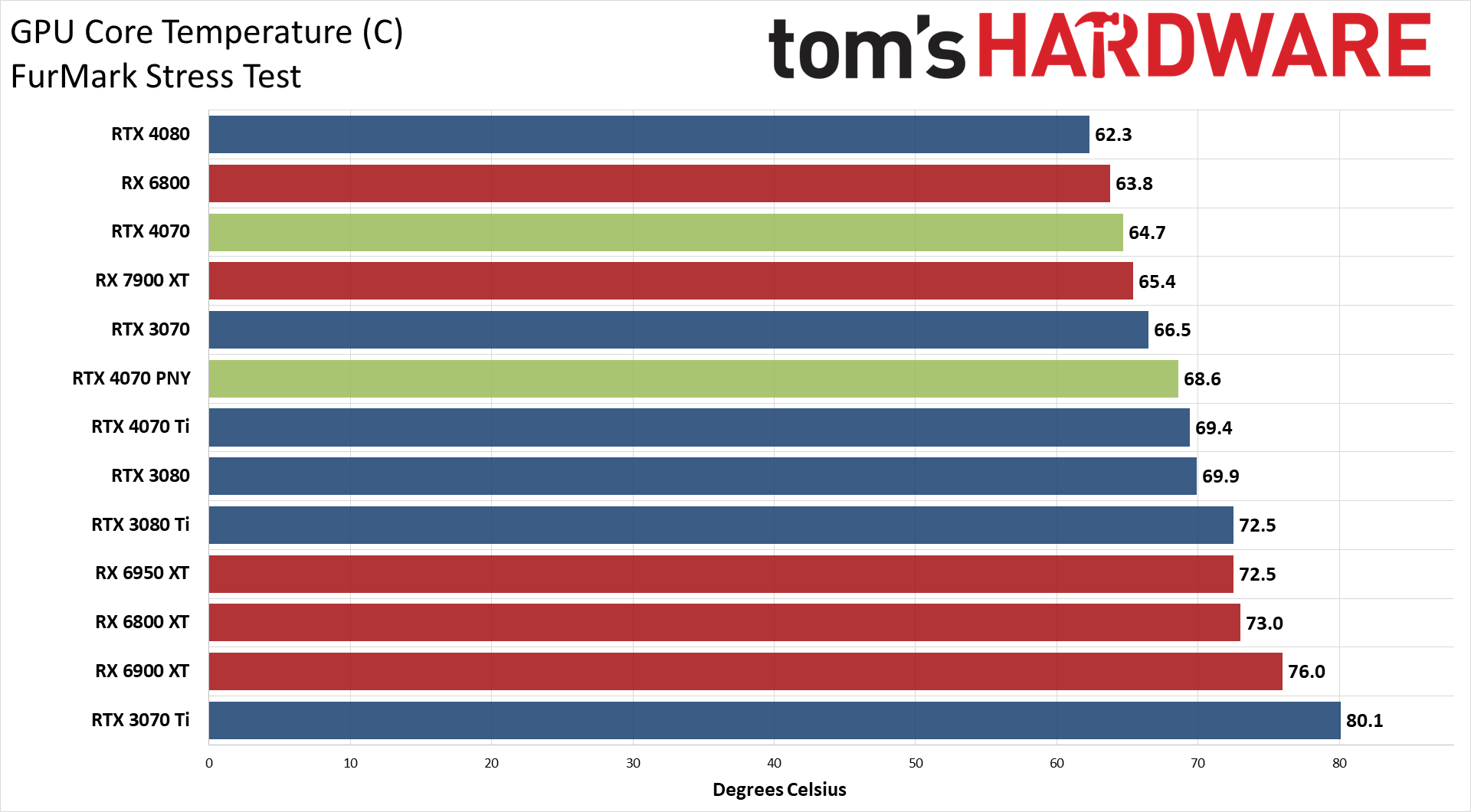

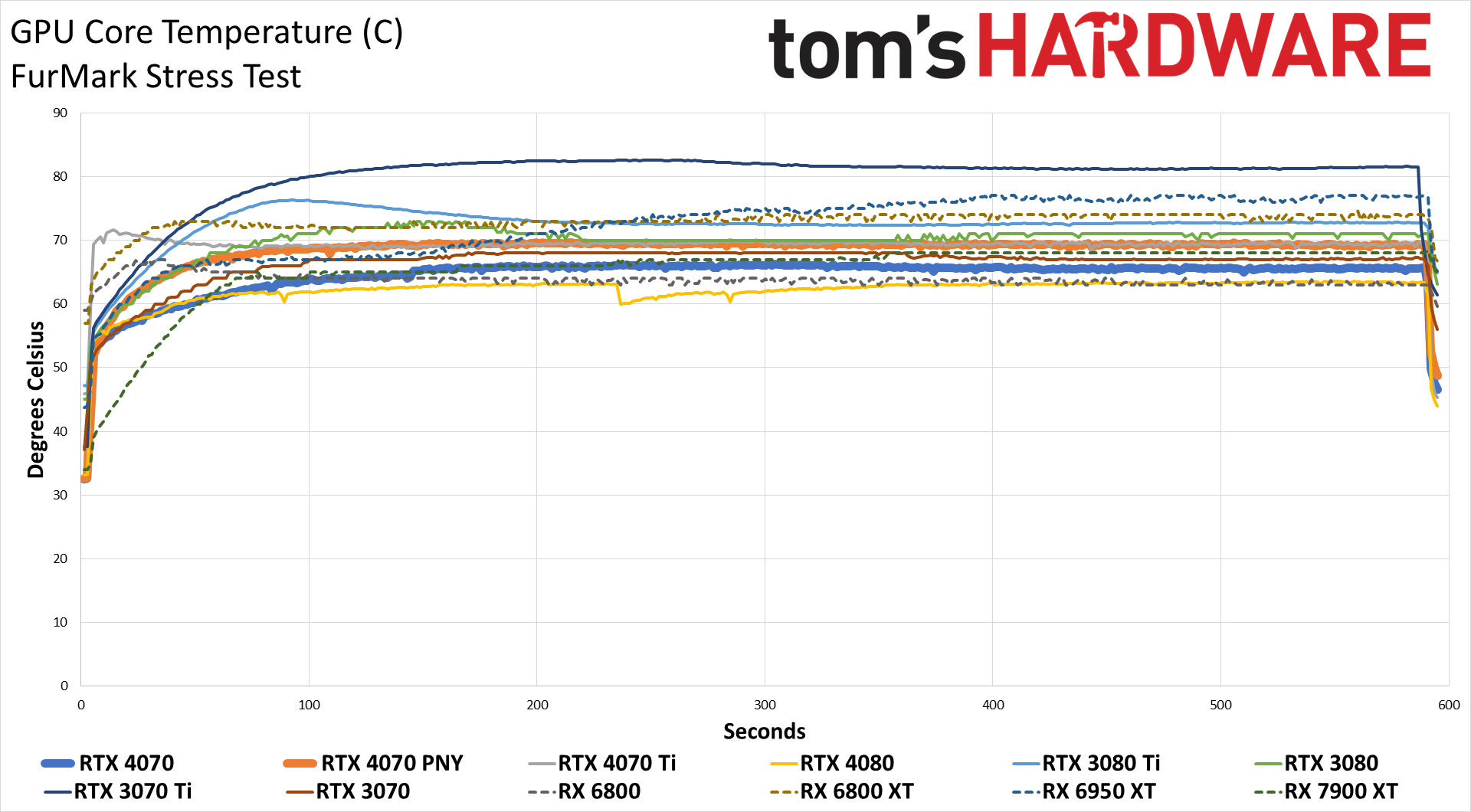

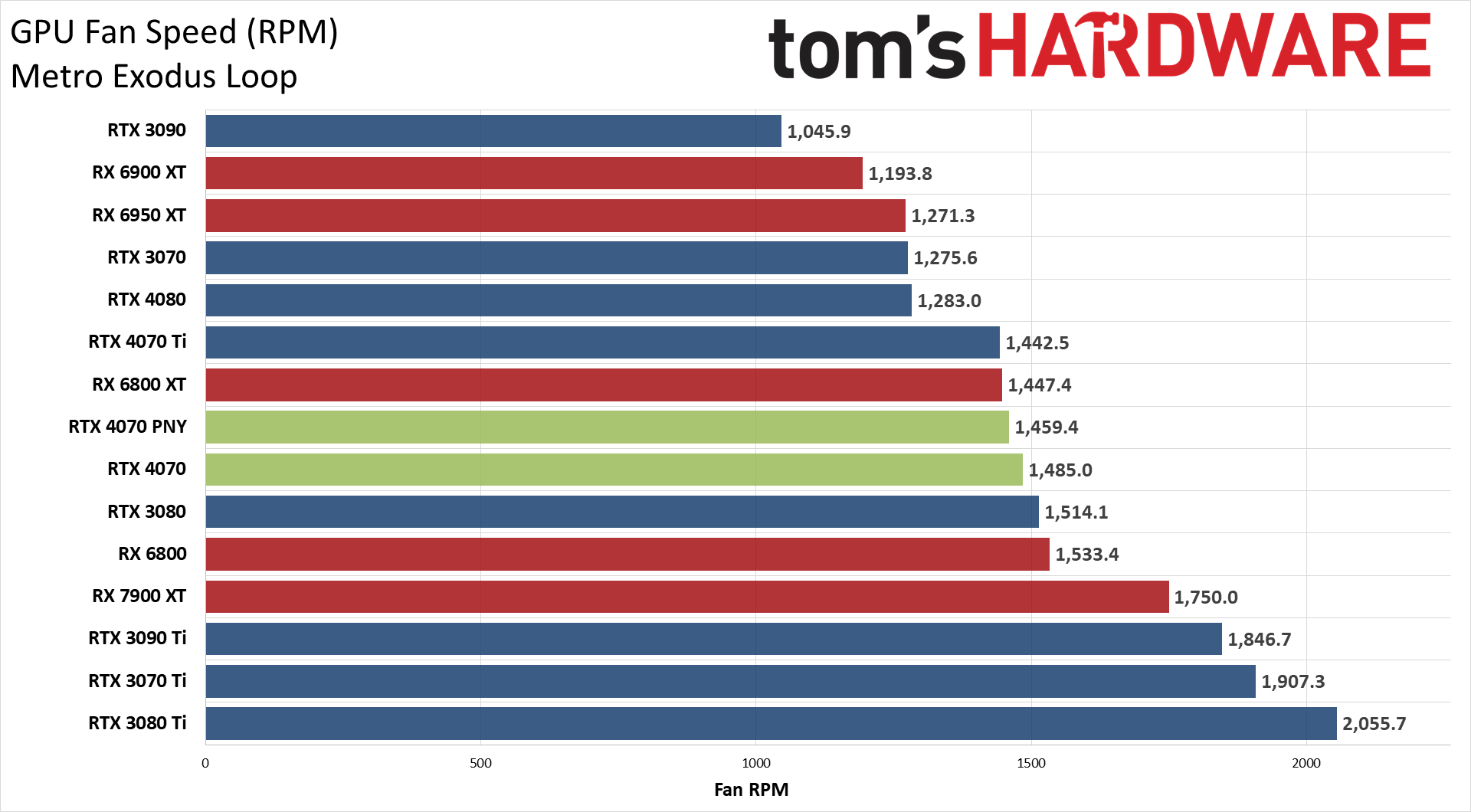

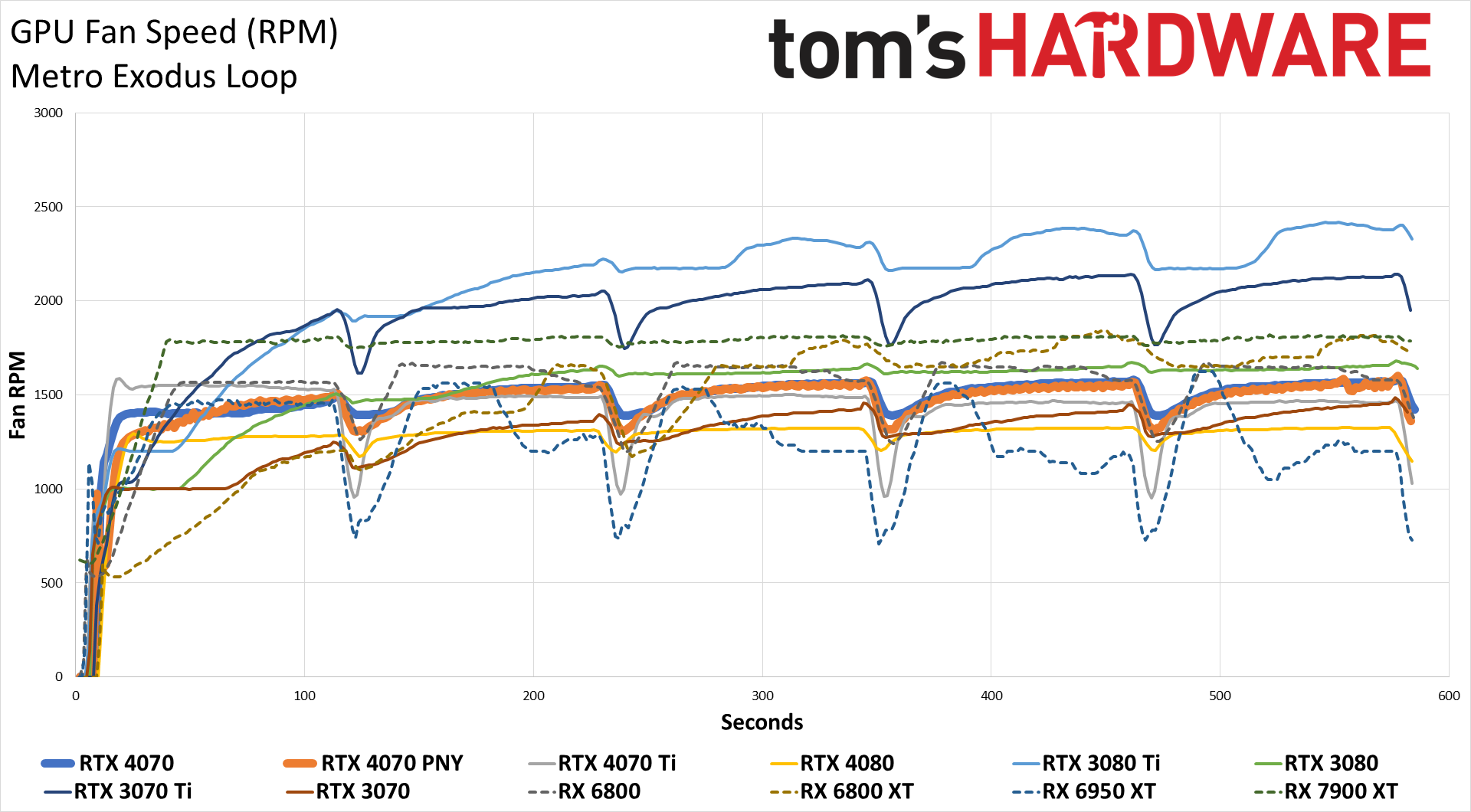

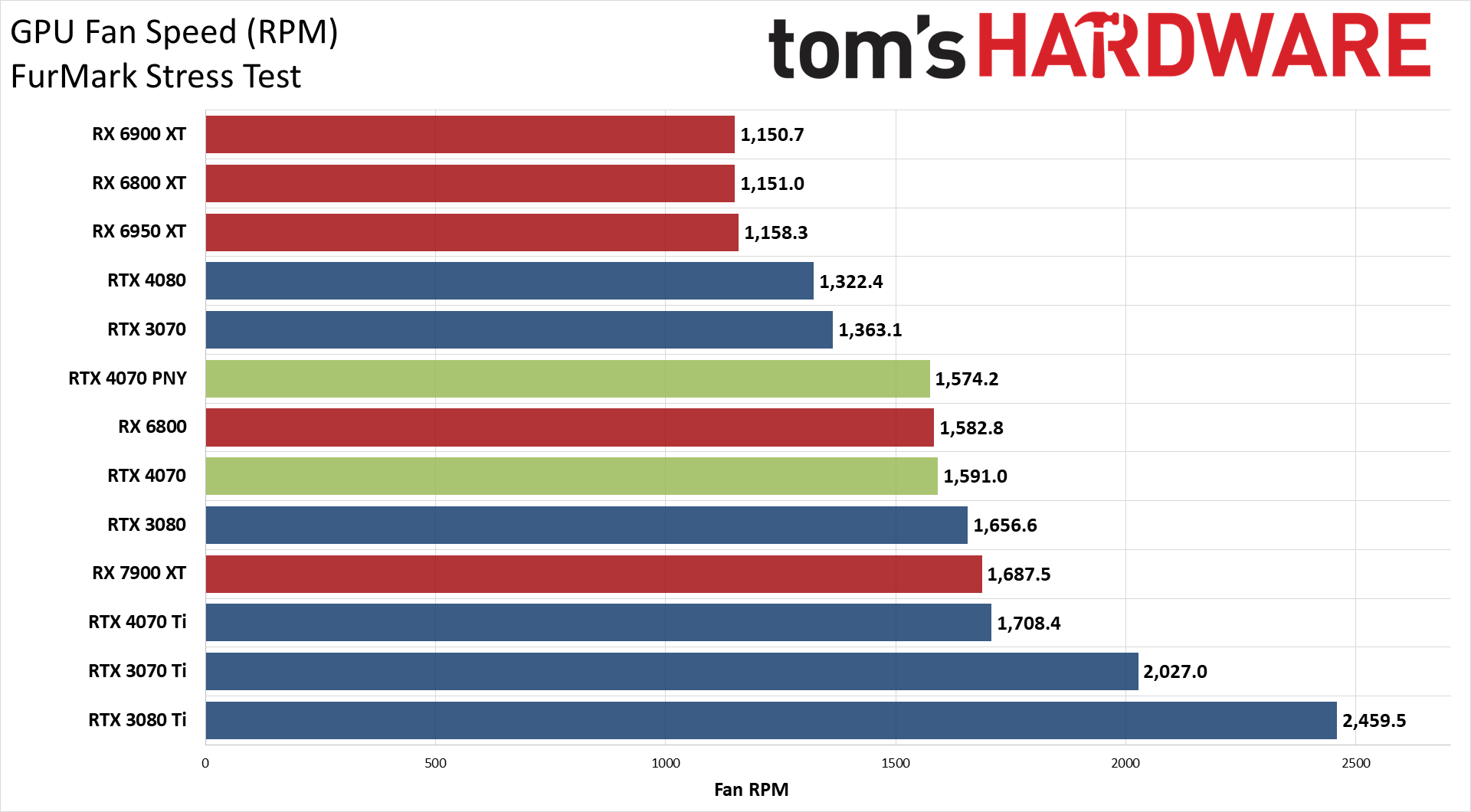

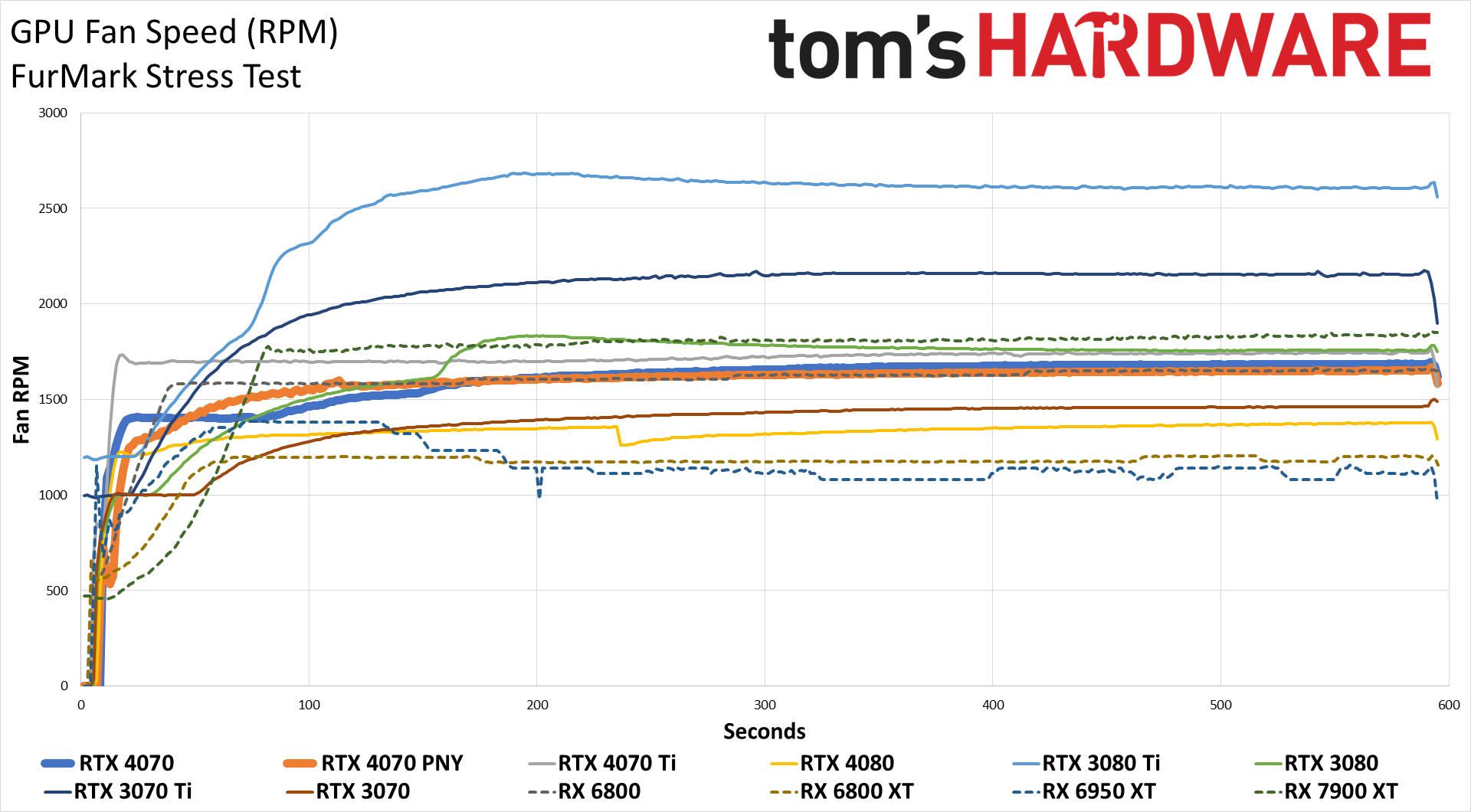

We measure real-world power consumption using Powenetics testing hardware and software. We capture in-line GPU power consumption by collecting data while looping Metro Exodus (the original, not the enhanced version) and while running the FurMark stress test. We also check noise levels using an SPL meter. Our test PC for Powenetics remains the same old Core i9-9900K as we've used previously, to keep results consistent.

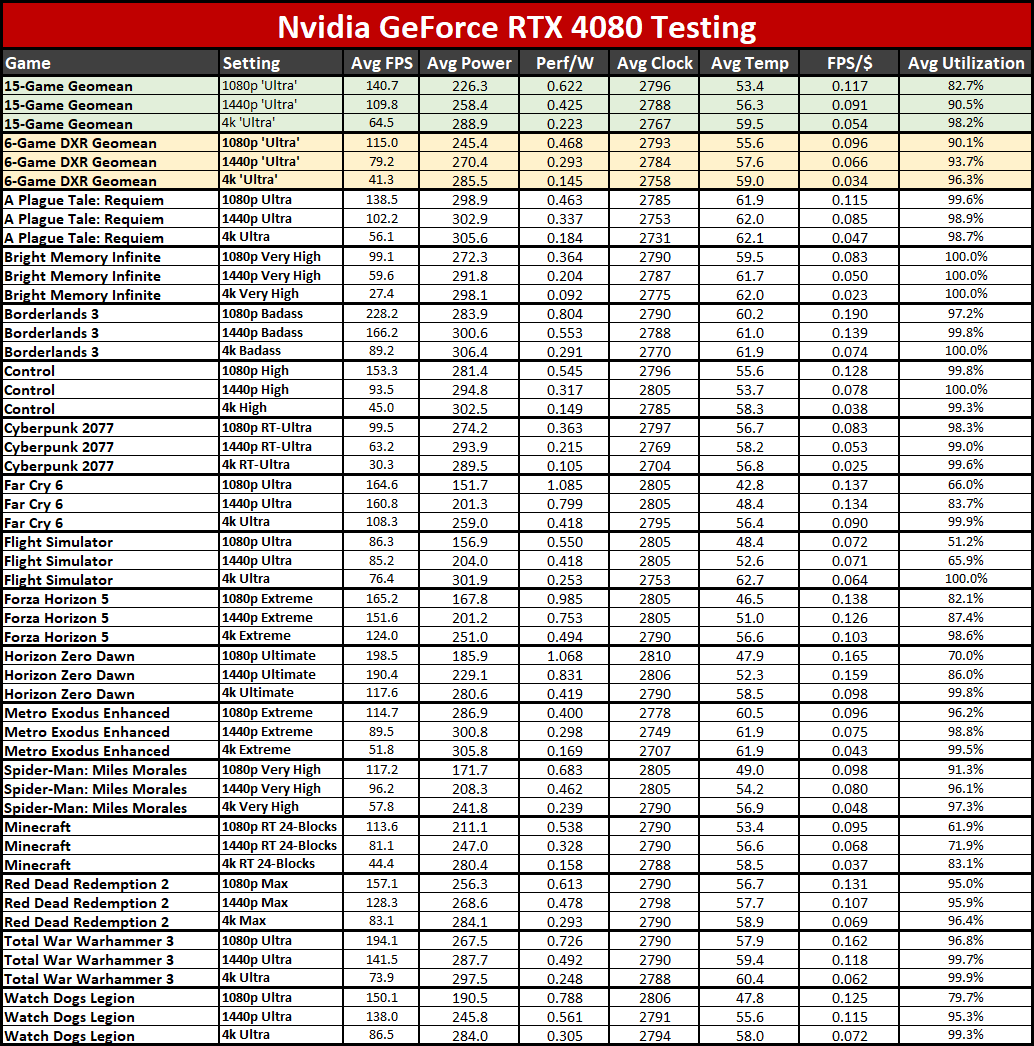

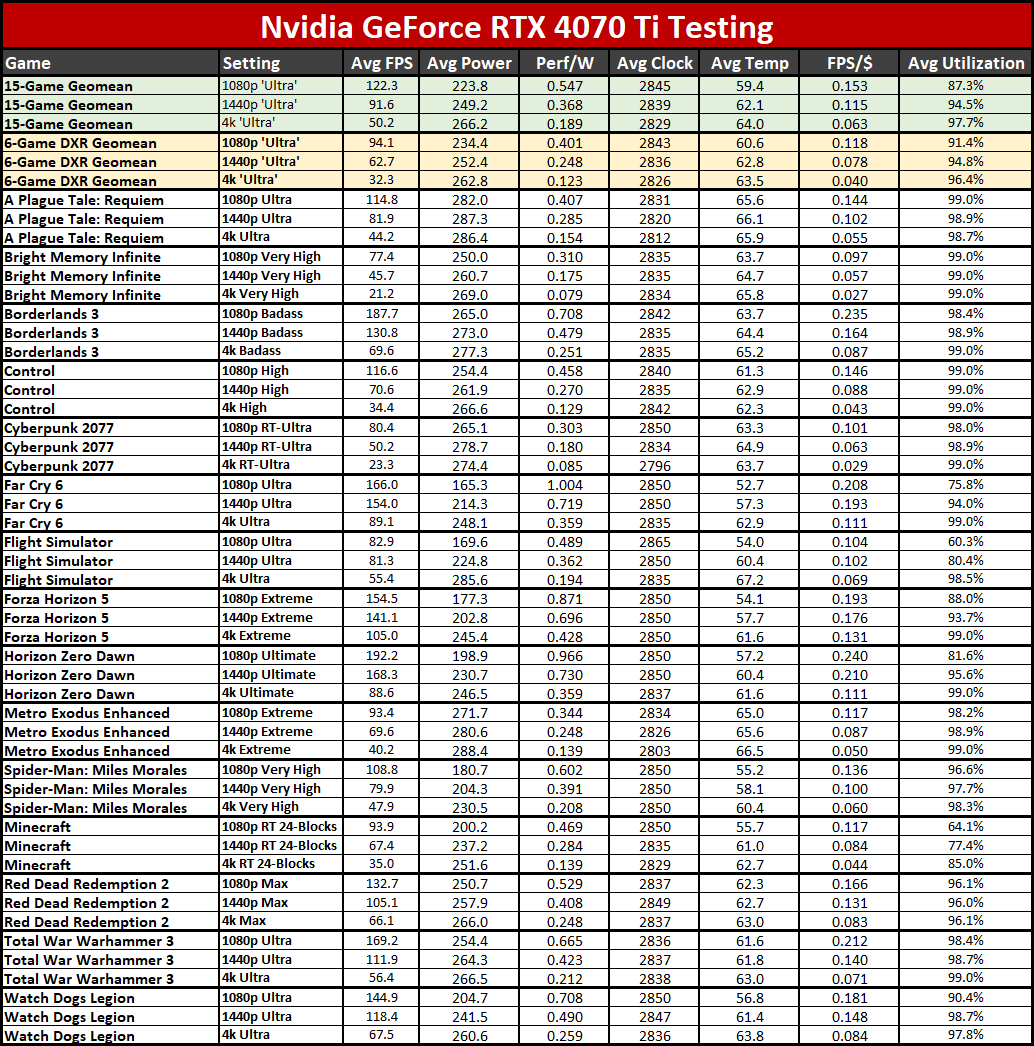

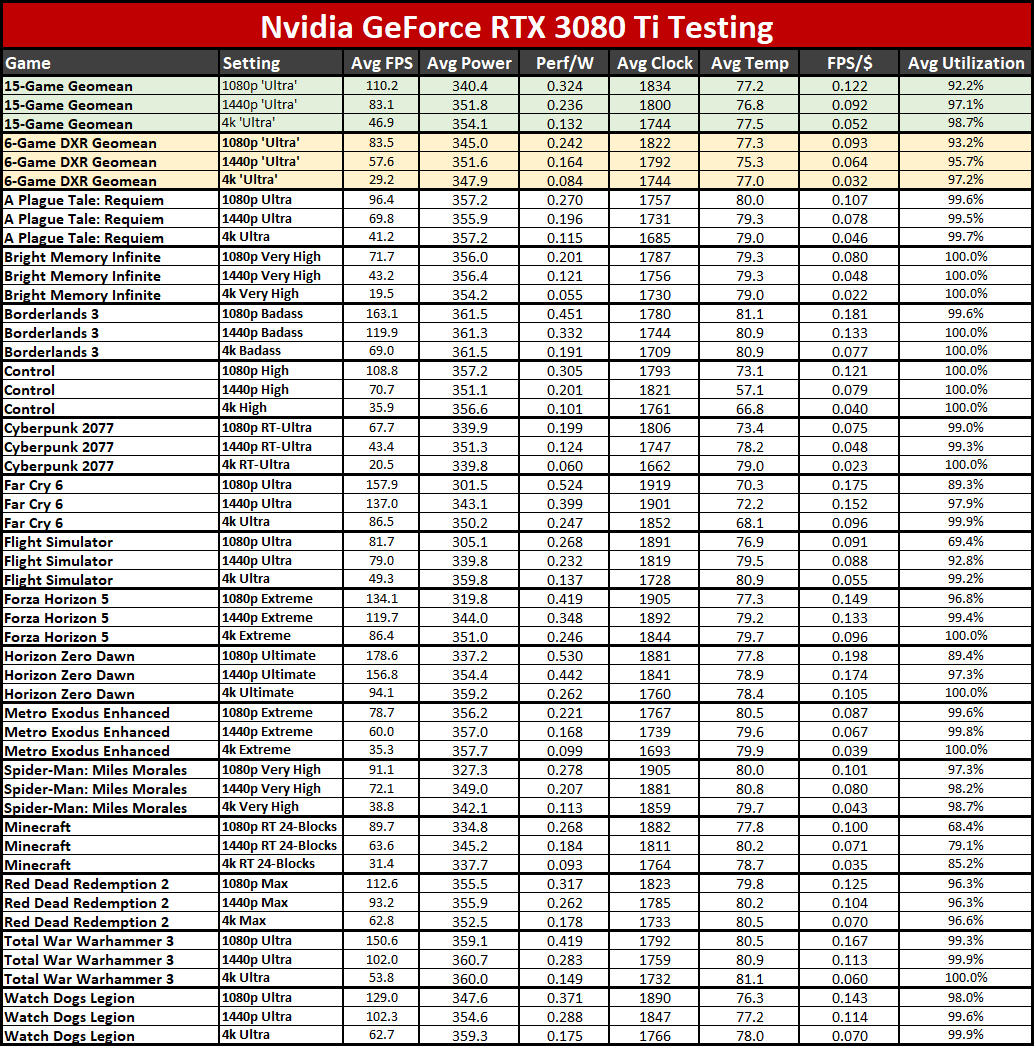

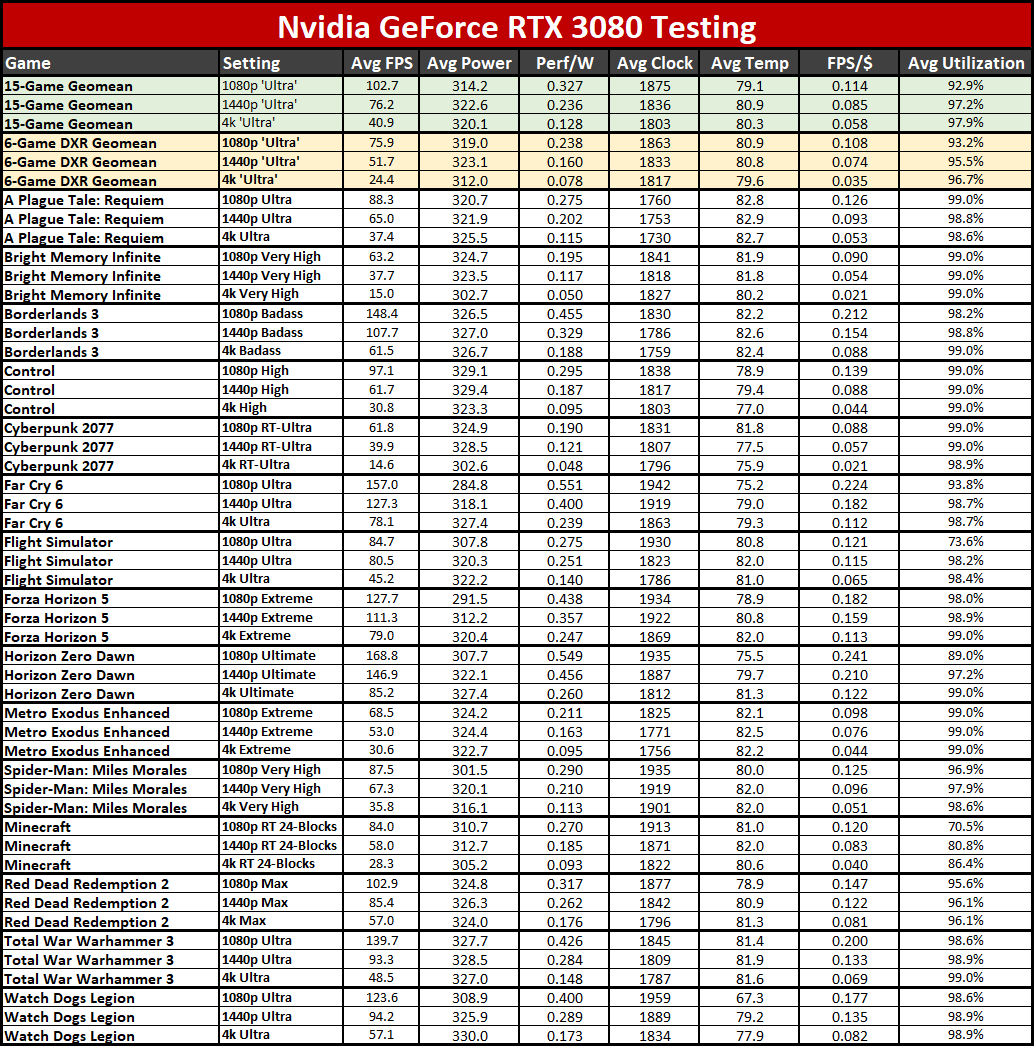

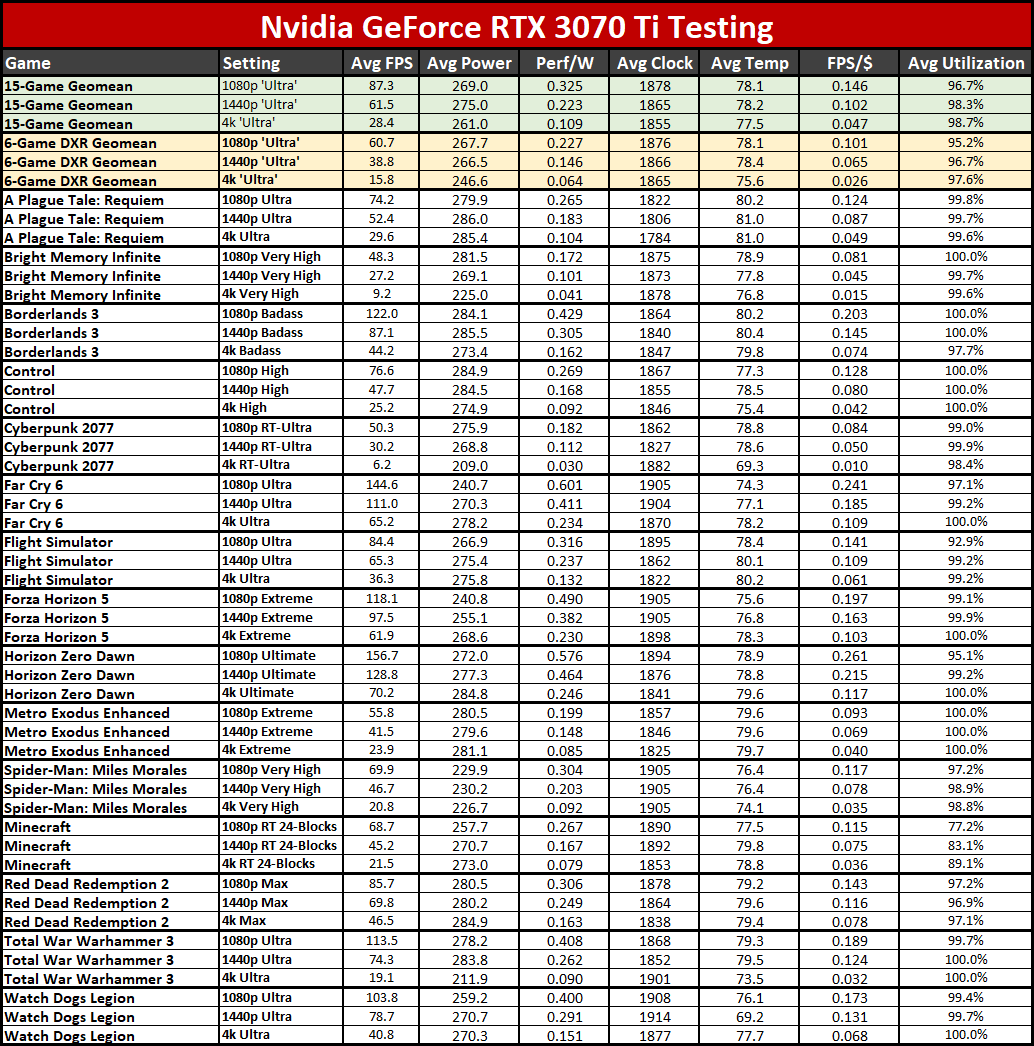

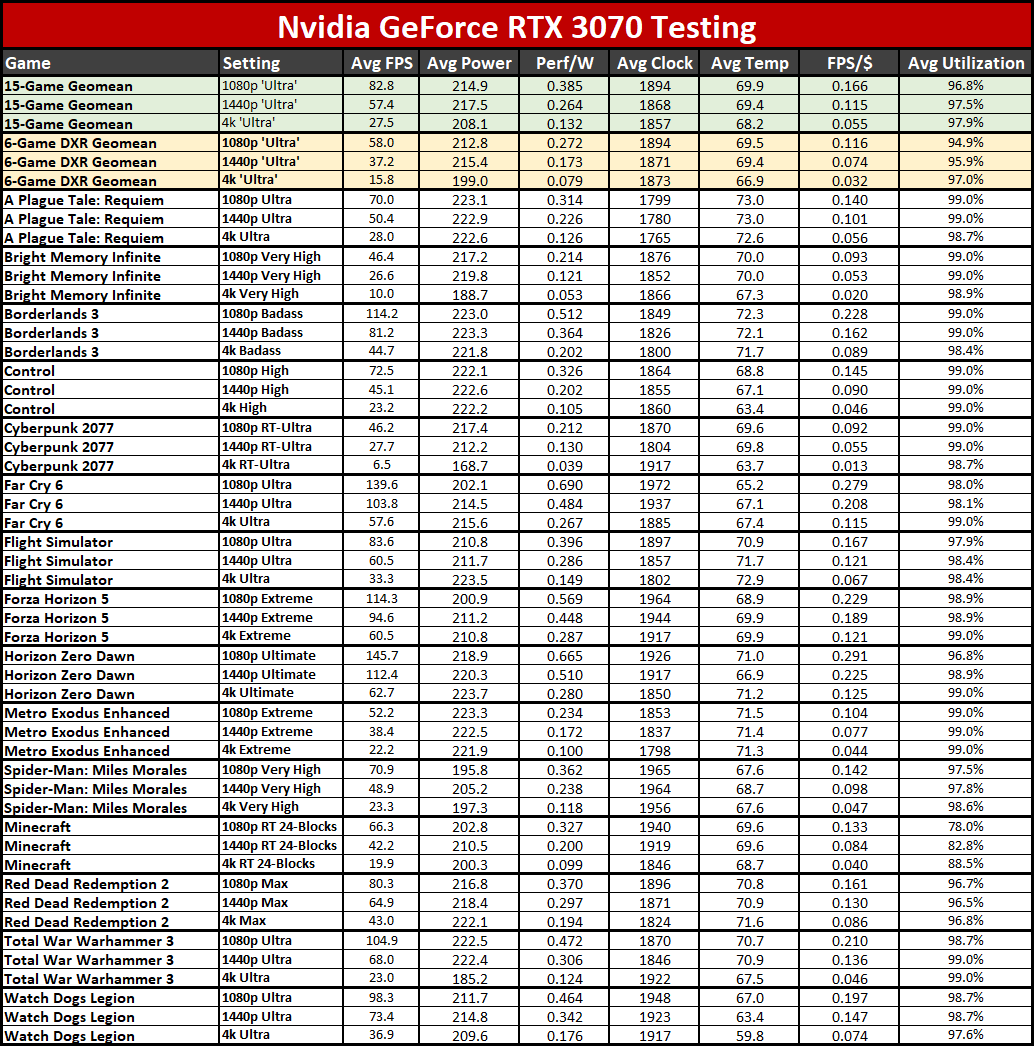

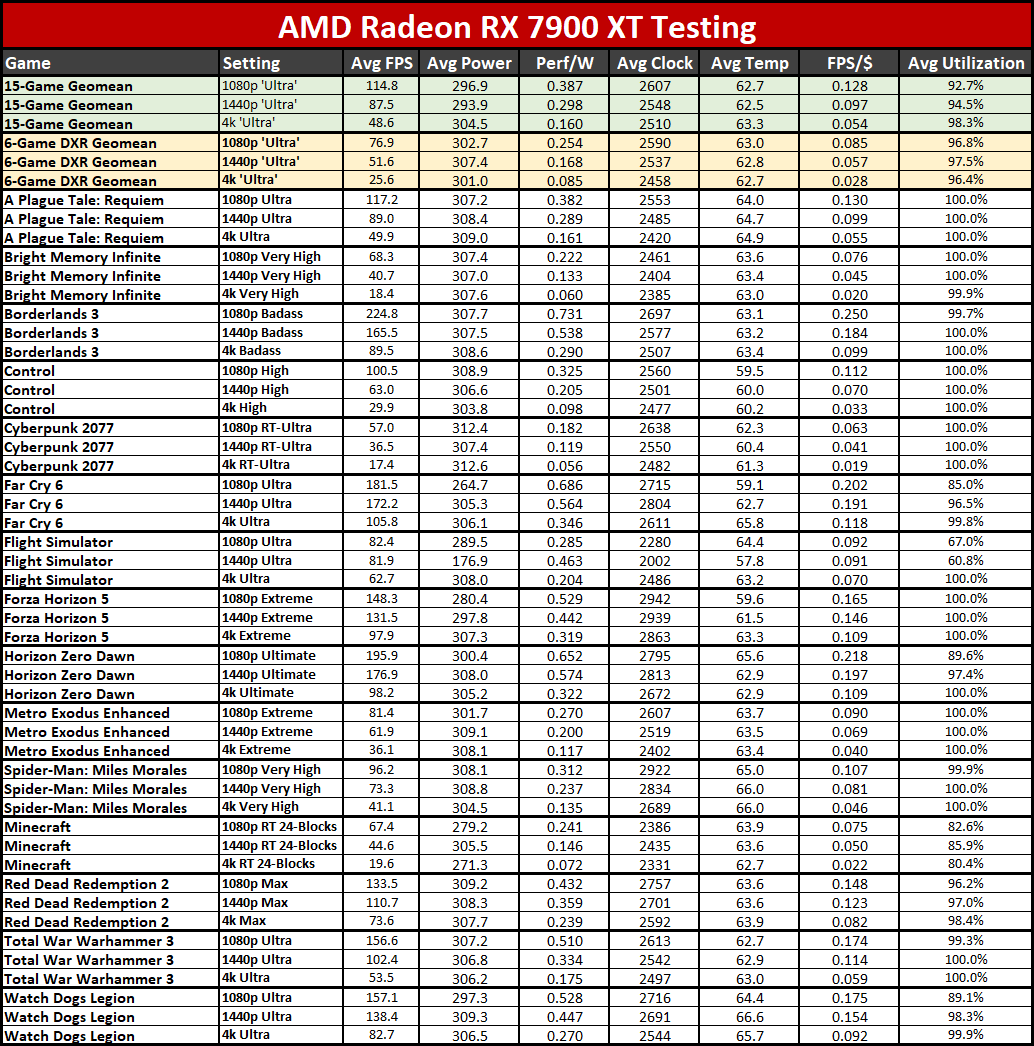

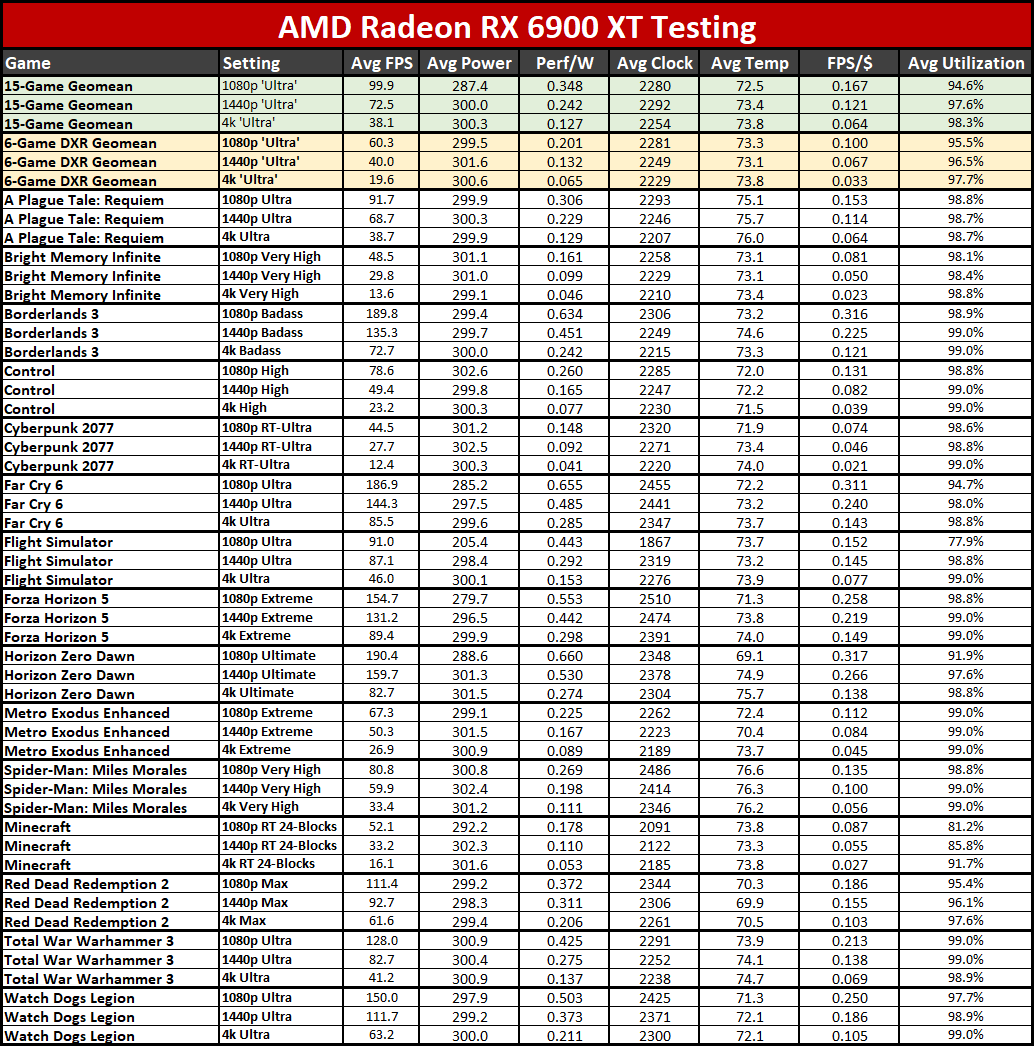

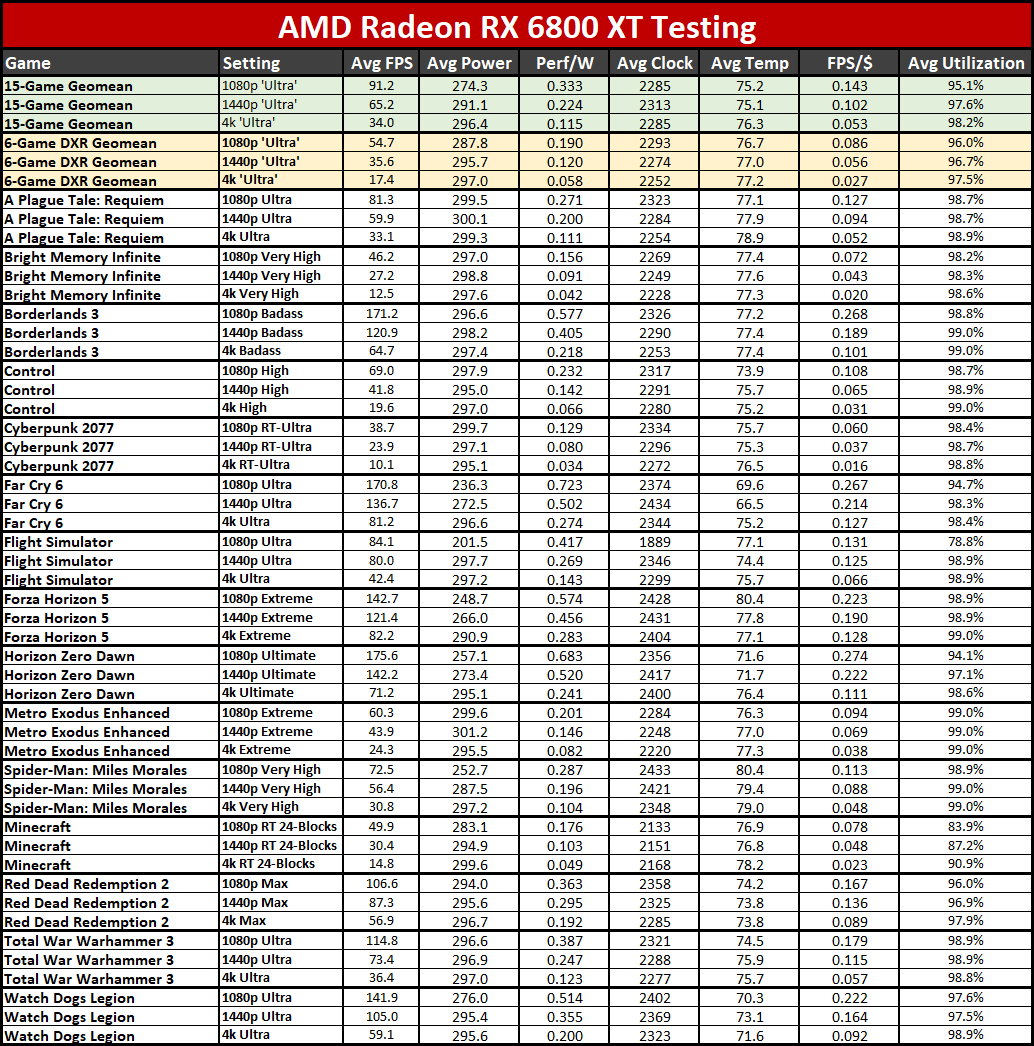

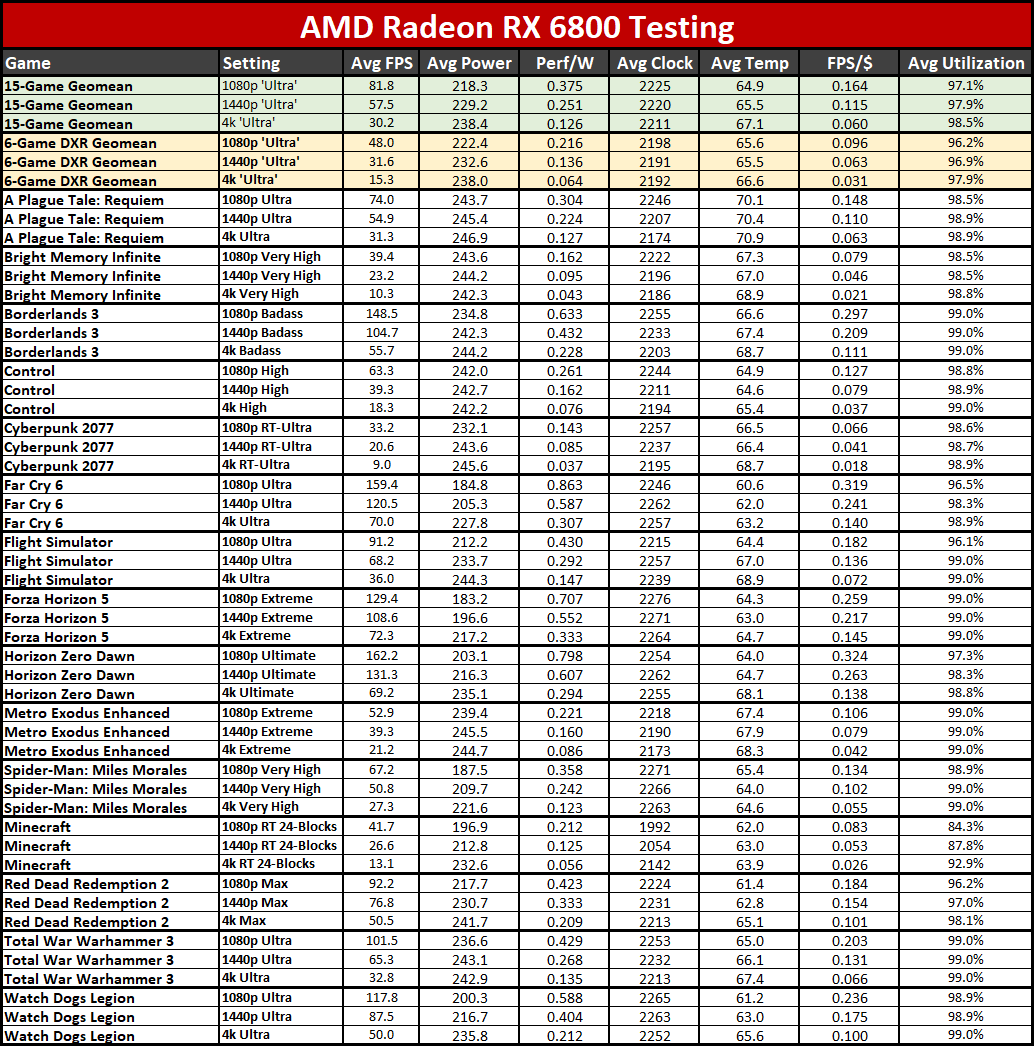

We also collect power data on our newer 13900K platform using PCAT v2 hardware, which gives a wider view of power use and efficiency. We'll start with the gallery of our PCAT results.

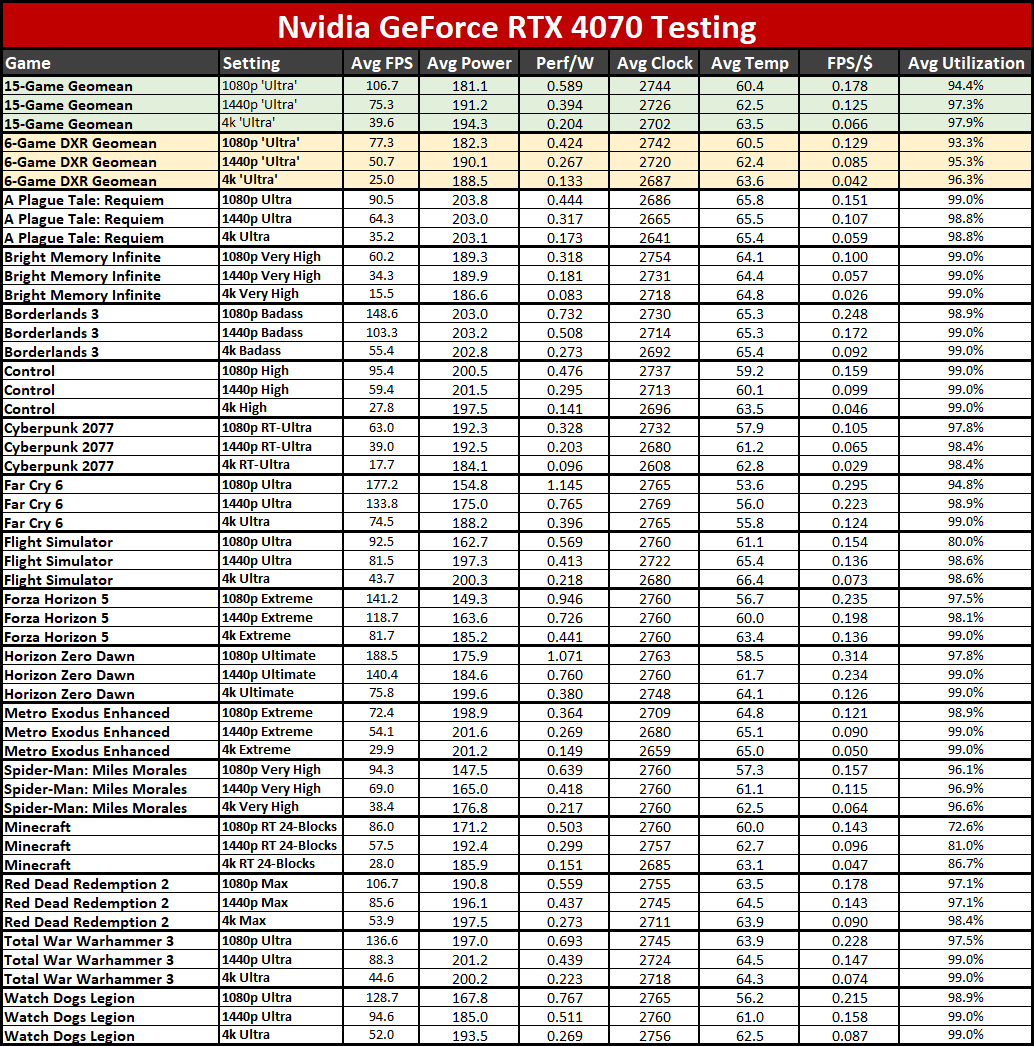

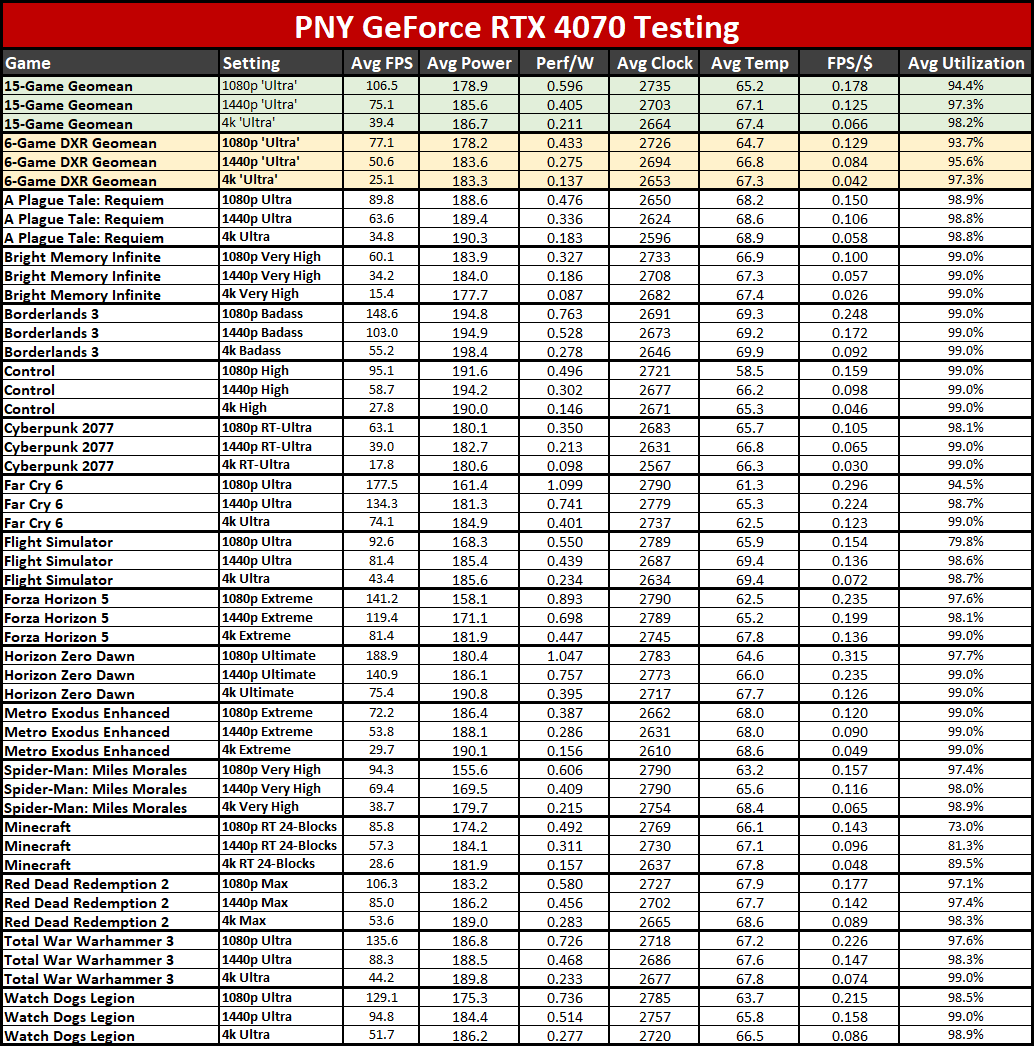

There's a lot of data in the above tables, but we'll mostly focus on the 4070 results. Maximum power use is officially rated for 200W on the reference RTX 4070, and both the Nvidia and PNY models generally stay at or below that limit. Actually, the PNY model tends to use 5–10W less power than the Founders Edition across our test suite.

The most demanding games, in terms of GPU power use, were Borderlands 3, Control, Metro Exodus Enhanced, A Plague Tale: Requiem, and Total War: Warhammer 3. At the other end of the spectrum, Far Cry 6, Forza Horizon 5, Minecraft, Spider-Man: Miles Morales, and Watch Dogs Legion all tend to stay well below the 200W mark.

Efficiency on the RTX 4070 cards is near the top of the list, with only the RTX 4080 sometimes surpassing it. It's no surprise that the previous generation RTX 30-series and RX 6000-series cards can't keep up with the latest GPUs in the efficiency department — it would be pretty terrible if efficiency decreased gen over gen.

The value proposition is another strong point for the RTX 4070. It's generally the best bang for the buck, at least if you're only focusing on the cost of the graphics card and the performance it offers. AMD's RX 6950 XT does come close, however — depending on the current street price.

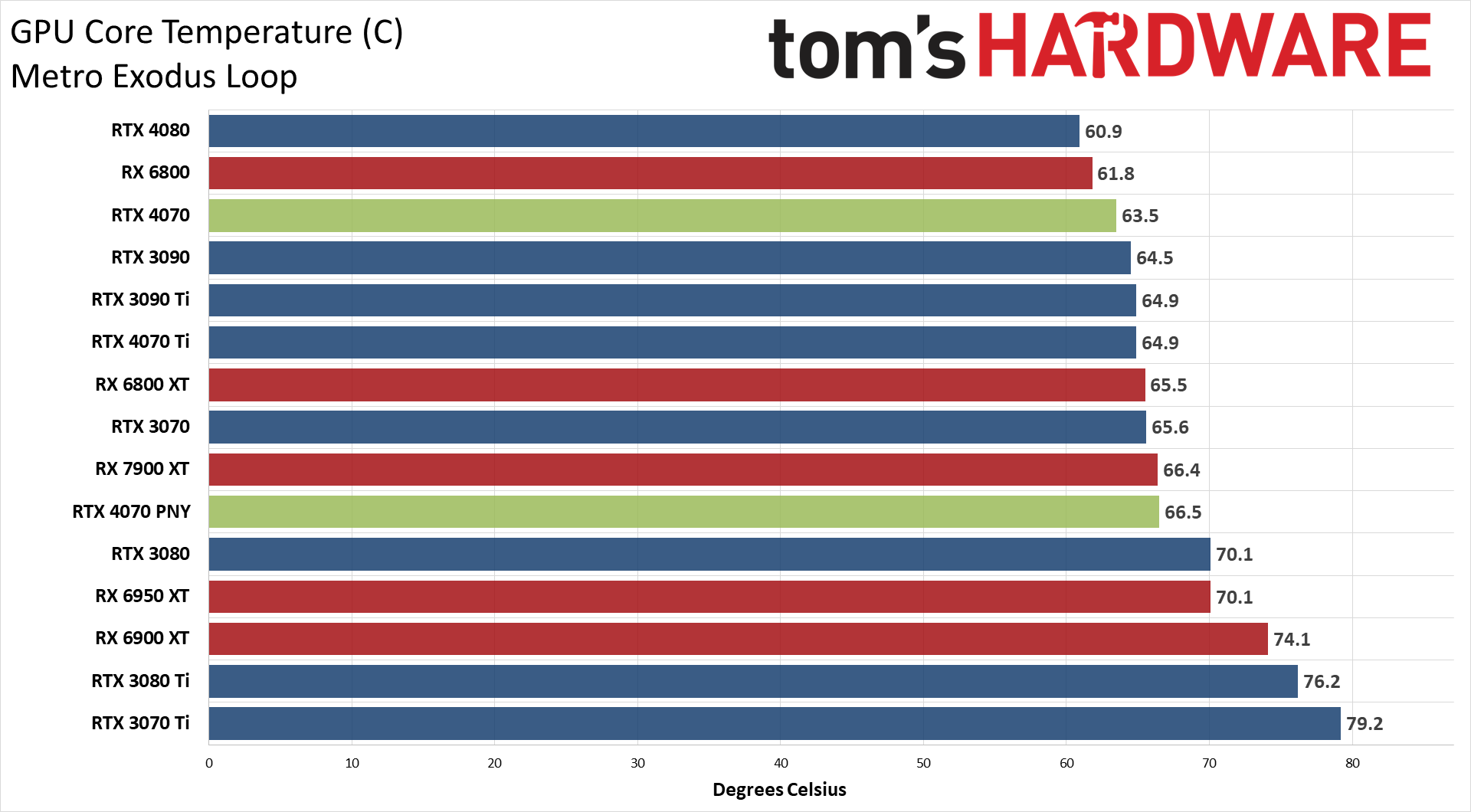

Average clocks while gaming were consistently in the 2.7 GHz range, with the Founders Edition clocking a bit higher than the PNY model overall. As usual, Nvidia's rated boost clock is quite conservative and most games will exceed it by about 150–200 MHz. Temperatures stayed below 70C for all of our tests, with the Founders Edition beating the PNY by about 5C on average.

Powenetics Testing

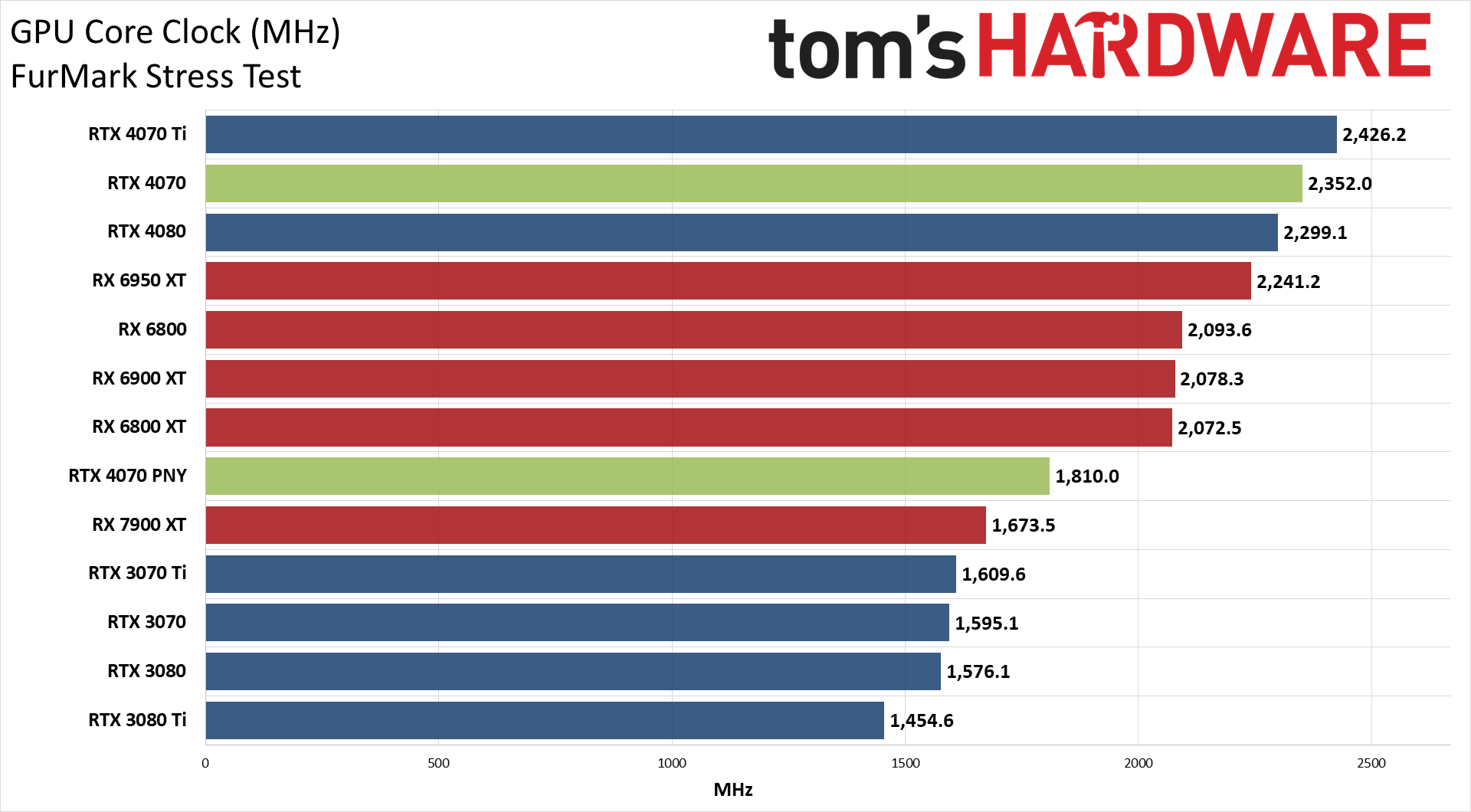

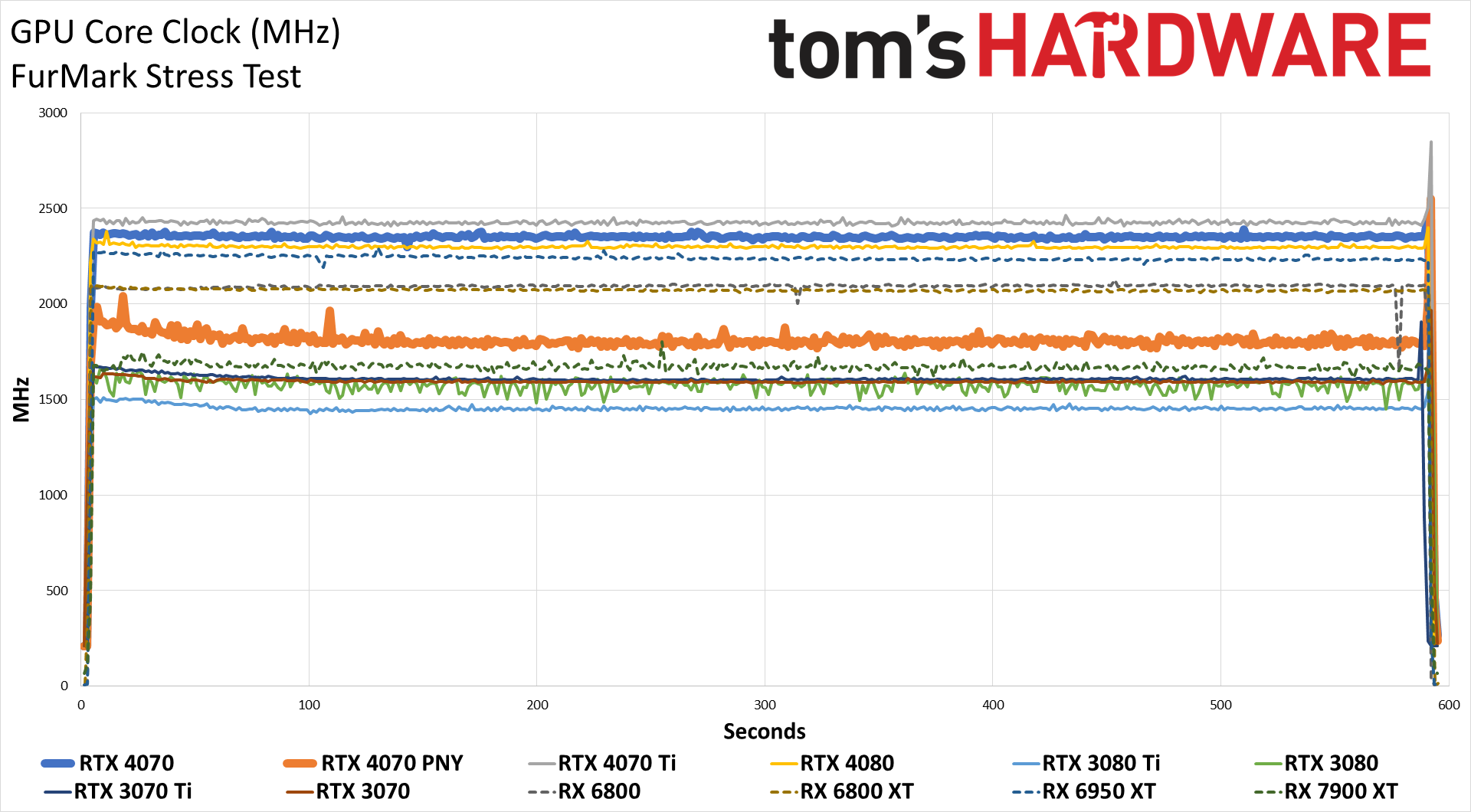

Our previous power testing focused more on pushing GPUs to their limit, and while that's a valid approach, we do feel the above power, clocks, and temperature data taken while running our normal gaming benchmarks presents a better view of what to expect. Still, we're keeping the Powenetics testing around as it's also interesting. We run Metro Exodus at settings that will basically max out power draw where possible, and the same goes for FurMark. Here's the data.

There's little question of which cooler and card are the superior design. PNY takes a conservative approach that drops the GPU clocks more in order to stay within the desired power envelope, and it even tends to run about 10–15W below the rated 200W TGP. Nvidia's Founders Edition meanwhile clocks a bit higher, runs a bit cooler, and uses a bit more power.

There's also a curious bump in power use on the Founders Edition that occurred about two minutes into the test, where it goes from just under 200W to suddenly using 205W. There was no corresponding change in clocks, fan speeds, or temperature, so we don't have a good explanation other than noting that it happened in our testing.

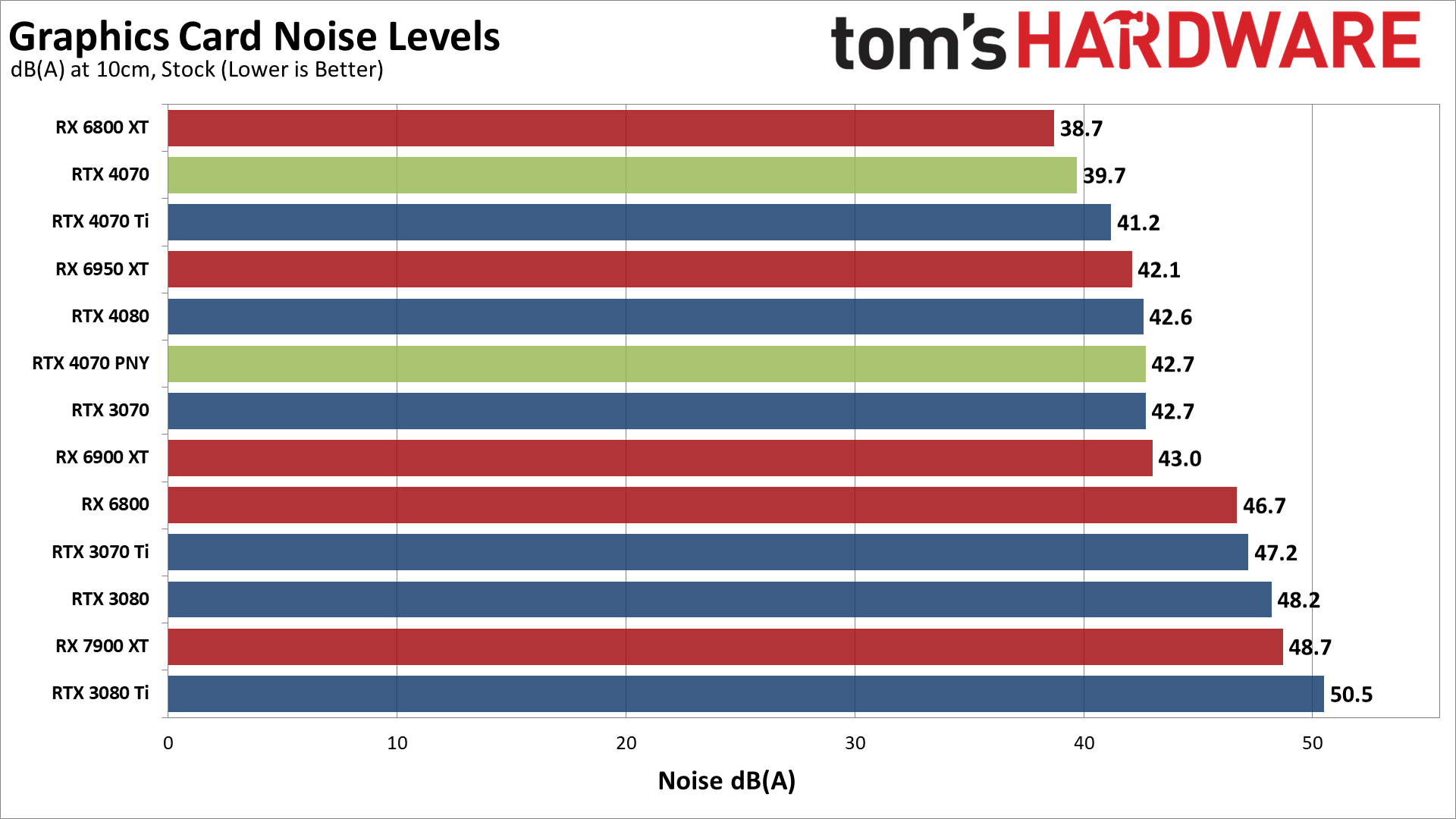

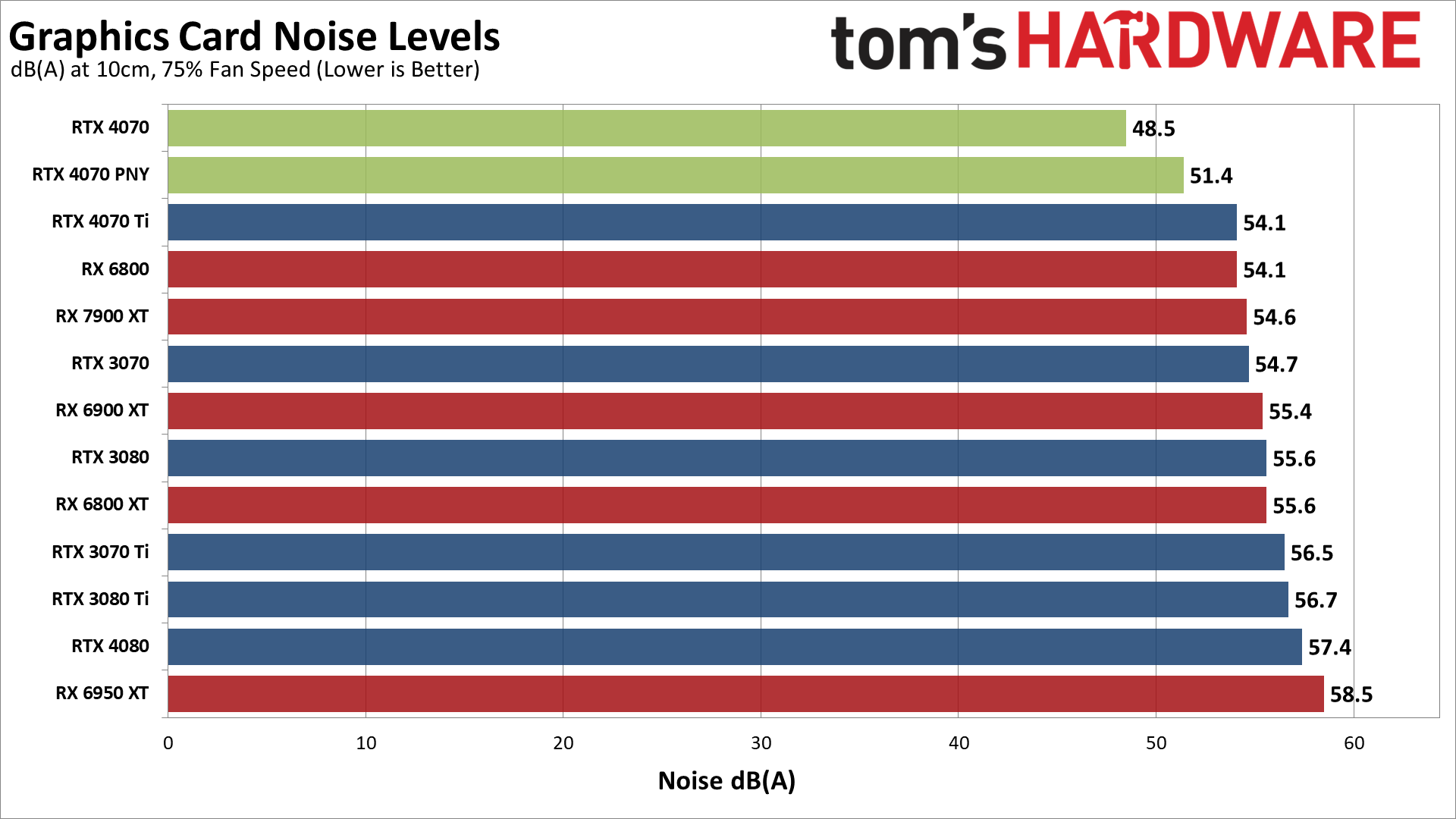

While the power and other data can be interesting, the noise produced by the cards' fans arguably rates as being the more important aspect. We check noise levels using an SPL (sound pressure level) meter placed 10cm from the card, with the mic aimed right at the center of one fan: the center fan if there are three fans, the right fan if there are two, or the only fan facing to the left of the card in the case of the RTX 4070 Founders Edition. This helps minimize the impact of other noise sources like the fans on the CPU cooler. The noise floor of our test environment and equipment is around 31–32 dB(A).

After running Metro for over 15 minutes, the RTX 4070 Founders Edition settled in at a fan speed of 56% and a noise level of 39.7 dB(A). That's about as quiet as almost any high-end GPU we've tested during the past couple of years, though not particularly surprising considering the card only has to dissipate 200W. PNY's RTX 4070 ended up being slightly louder at 42.7 dB(A) with 57% fan speed, likely because the two fans aren't of the same level as the Nvidia fans (plus one Nvidia fan is on the opposite side of the card).

We also tested with a static fan speed of 75%, which caused the RTX 4070 Founders Edition to generate 48.5 dB(A) of noise. The PNY card had a noise level of 51.4 dB(A) with the fans at 75%. We don't expect the cards would normally ever hit that level without overclocking, but it does give you some idea of what you can expect in warmer environments.
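Decibels are logarithmic, so a gap of about 3 dB(A) is bigger than it looks on paper. As a rough sketch (the function names are ours, and this ignores the noise floor and how loudness is actually perceived), here's how the readings above translate into sound power and sound pressure ratios.

```python
def power_ratio(db_a, db_b):
    """Sound power ratio implied by two decibel readings."""
    return 10 ** ((db_a - db_b) / 10)


def pressure_ratio(db_a, db_b):
    """Sound pressure ratio implied by two decibel readings."""
    return 10 ** ((db_a - db_b) / 20)


# dB(A) readings quoted above: (Founders Edition, PNY).
readings = {
    "Metro Exodus": (39.7, 42.7),
    "75% fan speed": (48.5, 51.4),
}

for test, (fe, pny) in readings.items():
    print(f"{test}: PNY is {pny - fe:.1f} dB(A) louder, "
          f"{power_ratio(pny, fe):.2f}X the sound power, "
          f"{pressure_ratio(pny, fe):.2f}X the sound pressure")
```

In both tests the PNY card works out to roughly double the sound power of the Founders Edition, even though the dB(A) numbers look close.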


To say that the RTX 4070 Ti was poorly received at launch would be an understatement. It wasn't a terrible card, but people hoped for a lot lower pricing on the 4070 Ti. Unlike some of the other GPUs, it has basically been in stock since launch day at MSRP. With the RTX 4070, Nvidia seems to be getting the message from gamers that $800 or more is too much. With inflation issues and a downward spiraling economy, a lot of people are looking to save money rather than blowing it on a graphics card upgrade.

Dropping the entry price of the RTX 40-series down to $599 with the RTX 4070 helps, no doubt, though it's still a $100 increase over the previous-gen RTX 3070. Then again, inflation isn't just hitting individuals, so increasing the generational price by 20% after two and a half years might be justifiable. Maybe. If not, kick back and wait for the other 40-series GPUs to arrive, or AMD's lower-tier RX 7000-series parts.

Compared to the previous-gen parts, the RTX 4070 ends up trading blows with the RTX 3080 across our test suite. There are times when it's 5–10 percent faster, and other instances where it's 5–10 percent slower. Of course, that's not counting DLSS 3, which can boost performance by another 30% or more. But as we've said many times, DLSS 3's Frame Generation doesn't directly translate into universally better performance. It can smooth out visuals, but it adds latency and still needs a decent base level of performance before it's acceptable.

The most impressive aspect of the RTX 4070 isn't the performance or the price, but rather the efficiency. Even though it's not clearly superior to an RTX 3080, it's close enough that we'd give it the edge. The fact that it needs 120W less power to reach the same level of performance is just the cherry on top.
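To put a number on that efficiency claim, here's a back-of-the-envelope sketch. It assumes performance is exactly equal between the two cards and uses rated power (200W for the RTX 4070, plus the 120W gap for the RTX 3080) rather than measured draw, so treat the result as an approximation. The `perf_per_watt_gain` helper is our own.

```python
def perf_per_watt_gain(perf_ratio, new_watts, old_watts):
    """Fractional improvement in performance per watt of new vs old."""
    return perf_ratio * old_watts / new_watts - 1


rtx_4070_watts = 200                   # rated power for the reference card
rtx_3080_watts = rtx_4070_watts + 120  # implied by the 120W gap noted above

# Assumption: equal performance (ratio of 1.0) between the two cards.
gain = perf_per_watt_gain(1.0, rtx_4070_watts, rtx_3080_watts)
print(f"performance per watt improvement: {gain:.0%}")
```

With those assumptions the RTX 4070 delivers about 60% more performance per watt, though real-world results will shift with the specific game and actual power use.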

Just don't go into the RTX 4070 expecting twice the performance for half the price. As Jensen put it last year, those types of gains aren't happening anymore, especially as we reach the scaling limits of Moore's Law (RIP). Instead, Nvidia is offering more of an alternative to the last generation's top GPU, the RTX 3080... now at a lower price, with significantly lower power requirements, and with some extra features like DLSS 3 and AV1 encoding support.

If you were previously eyeing an upgrade to an RTX 3070 for $500, the RTX 4070 is a far better deal. It's 33% faster across our entire test suite, not counting DLSS 3, for a 20% increase in price. And you get to choose whether or not you want to turn on Frame Generation, plus the option to stream in AV1 if that's your thing.
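The same sort of quick math applies to value. This sketch uses the figures quoted above (33% faster, $500 versus $599) and a hypothetical `perf_per_dollar_gain` helper; it only considers launch pricing, not current street prices.

```python
def perf_per_dollar_gain(perf_gain_pct, old_price, new_price):
    """Percentage change in performance per dollar, new card vs old."""
    perf_ratio = 1 + perf_gain_pct / 100
    price_ratio = new_price / old_price
    return (perf_ratio / price_ratio - 1) * 100


# RTX 4070 ($599) versus RTX 3070 ($500), 33% faster across our test suite.
gain = perf_per_dollar_gain(33, 500, 599)
print(f"performance per dollar improvement: {gain:.0f}%")
```

That works out to roughly 11% more performance per dollar, which is a real improvement but hardly the generational leap of years past.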

AMD's only answer, for now, is to point at less efficient, previous-gen offerings. "Look, the RX 6950 XT is on sale for the same price!" If you didn't pick up an RX 6000-series sometime in the past couple of years, or even some time since the end of Ethereum mining last summer, what has changed that it would suddenly make sense?

Speaking of cryptocurrency mining, while we didn't test mining performance on the RTX 4070, rumblings are coming from that sector once more. Hopefully we don't repeat the cycle of sold-out GPUs; for now, the best-case coins are only netting a bit more than a dollar a day on a 4090. But Gaben save us if another Bitcoin price spike comes along. And if not cryptocoins, it might be ChatGPT-powered AIs buying all our gaming GPUs.

If you've been holding out for an affordable RTX 40-series GPU, the RTX 4070 is probably about as good as it will get in the near term. Sure, we'll see an RTX 4060, maybe even a 4060 Ti, and RTX 4050 as well. But we really don't like the thought of buying a GPU with less than 12GB VRAM these days, and it's tough to see Nvidia slapping more than 8GB on some of the upcoming models. Yes, they might cost less, but they'll require a lot more in the way of compromise.

If you're a mainstream gamer still hanging on to an RTX 2070, or an even older GTX 1070, and you're finally ready to upgrade, hopefully, you can scrape together the $600 needed for an RTX 4070. Of course, we can always wish for lower prices and higher performance, but with the current market conditions, the 4070 is about as good as we're likely to get.

Speaking of which, both retail availability and reviews of the custom RTX 4070 cards will arrive tomorrow.
