The Nvidia GeForce RTX 4060 drops the entry price for the Ada Lovelace architecture and RTX 40-series GPUs to $299. It lands between the former RTX 3060 and RTX 3050 in pricing, and while there are always compromises as you move down the price and performance ladder, it represents a potentially great value proposition. For those on a budget, it could be one of the best graphics cards, assuming performance is up to snuff.

There are deserved complaints about limiting the AD107 GPU at the heart of this card to a 128-bit memory interface, though the lower price compared to the RTX 4060 Ti takes out some of the sting. Still, the previous generation RTX 3060 came with a 192-bit interface and 12GB of memory, so this represents a clear step back in that area. We'll discuss this more on the next page, as it's an important topic.

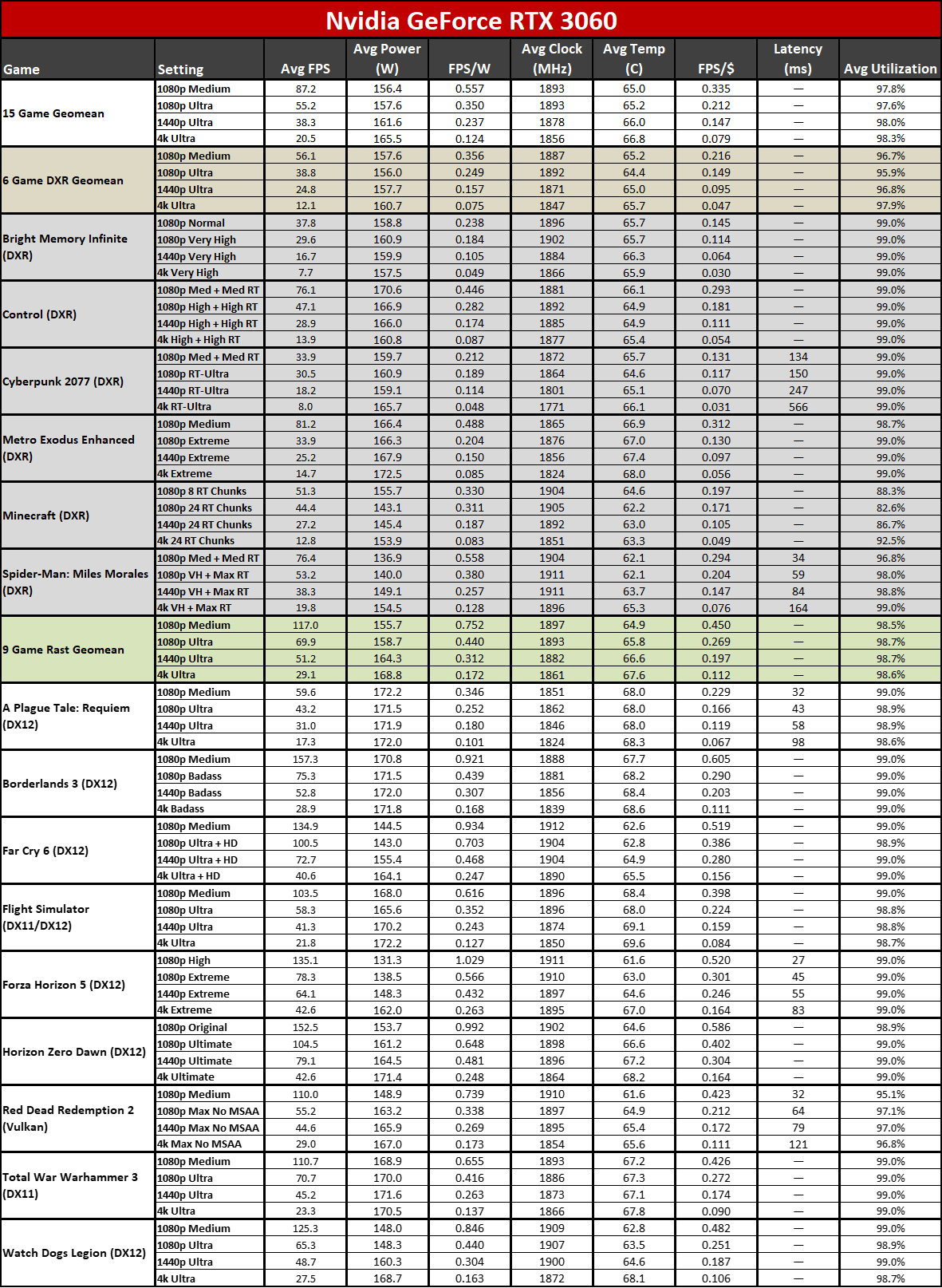

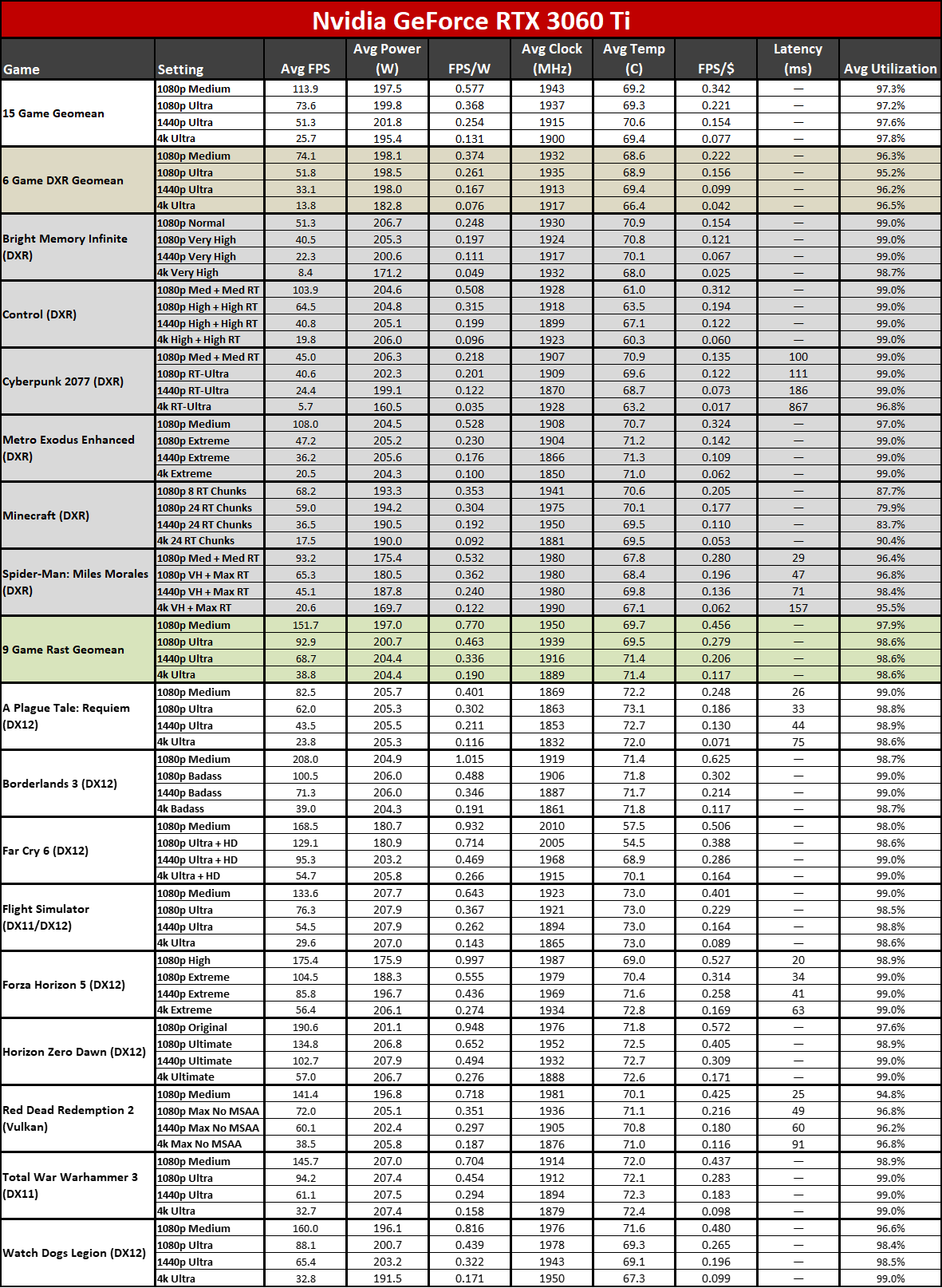

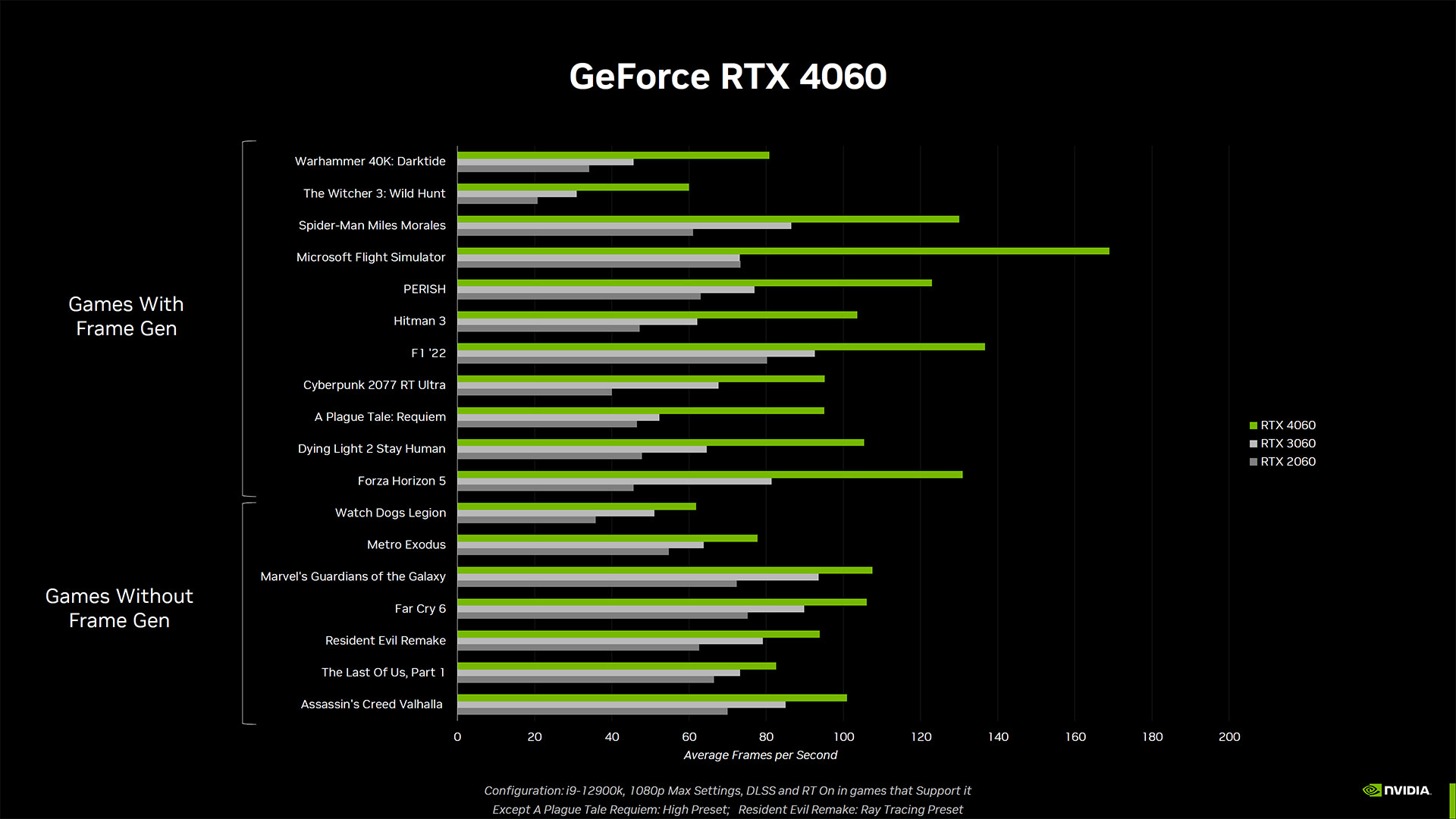

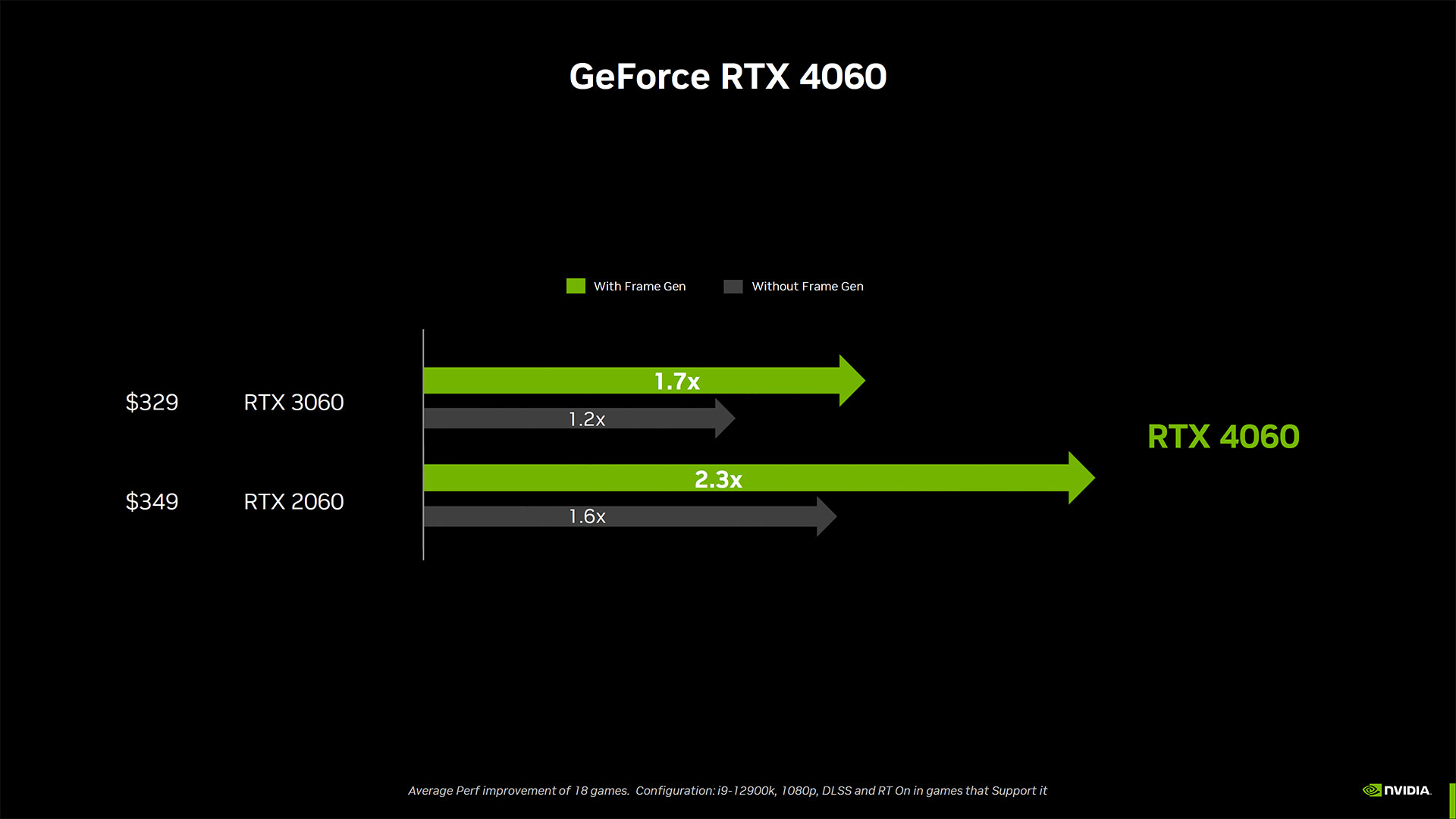

We'll update the GPU benchmarks hierarchy later today, now that the embargo is over. The bottom line shouldn't be too surprising: In the vast majority of games, the new RTX 4060 easily outperforms the previous generation RTX 3060, and it almost catches the RTX 3060 Ti. Toss in DLSS 3's Frame Generation and the dramatically improved efficiency and you can make a reasonable case for buying an RTX 4060 over a previous gen card. You won't tap into new levels of performance, but you'll get all the latest Nvidia features and upgrades.

The chief competition from AMD comes from two places. The latest generation Radeon RX 7600 undercuts Nvidia's price by up to $50 now, while the previous generation RX 6700 XT starts at $309, basically matching the RTX 4060 on price but providing 50% more memory and potentially better overall performance. Depending on pricing and availability, the RTX 3060 Ti (and other 30-series GPUs) may also be an interesting option, but we don't expect such cards to stick around for long, unless you're willing to purchase a used card.

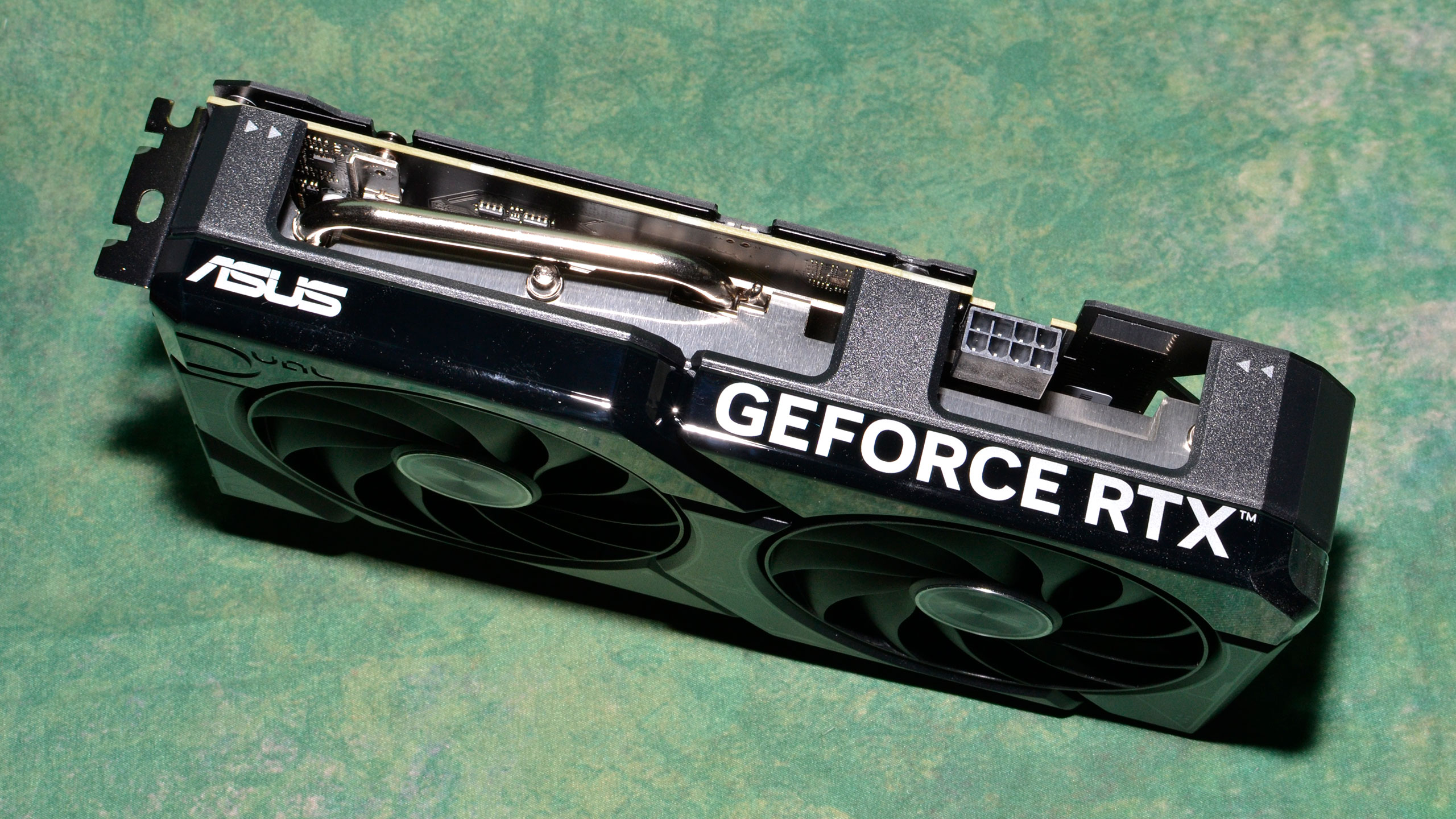

Let's check the specifications, which were revealed over a month ago with the RTX 4060 Ti announcement. Nvidia allows reviews of the $299 MSRP cards today, while more expensive models are under embargo until tomorrow. We received an Asus RTX 4060 Dual OC model from Nvidia, which comes with a modest factory overclock, but it's still priced at $299.

There are twelve GPUs listed in the above table if you scroll to the right, representing the most useful comparisons for the RTX 4060, but the first column is the most pertinent. The new GeForce RTX 4060 uses Nvidia's AD107 GPU, the same chip found in the RTX 4060 and 4050 Laptop GPUs.

The RTX 4060 uses the full AD107 chip, with 24 streaming multiprocessors (SMs), each with 128 CUDA cores. That gives the total shader count of 3,072. Astute mathematicians will note that this is less than the previous generation RTX 3060's 3,584 shaders. However, as with the rest of the RTX 40-series lineup, clock speeds are significantly higher at 2460 MHz, compared to 1777 MHz on the 3060. The result is that peak compute performance ends up being 19% higher.
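
The 19% figure can be checked with a quick back-of-the-envelope calculation. The sketch below assumes the usual convention of two FP32 operations (one fused multiply-add) per shader per clock and uses the boost clocks quoted above. The function name is ours, purely for illustration.

```python
def peak_fp32_tflops(shaders: int, boost_mhz: float) -> float:
    """Peak FP32 throughput in TFLOPS, assuming 2 FLOPs (one FMA) per shader per clock."""
    return shaders * 2 * boost_mhz * 1e6 / 1e12


rtx_4060 = peak_fp32_tflops(24 * 128, 2460)  # 24 SMs x 128 CUDA cores = 3,072 shaders
rtx_3060 = peak_fp32_tflops(3584, 1777)

uplift_percent = (rtx_4060 / rtx_3060 - 1) * 100

print(f"RTX 4060: {rtx_4060:.1f} TFLOPS")
print(f"RTX 3060: {rtx_3060:.1f} TFLOPS")
print(f"Uplift:   {uplift_percent:.0f}%")
```

These are theoretical peaks only. Real games rarely sustain them, which is why the benchmark results matter more than the spec sheet.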

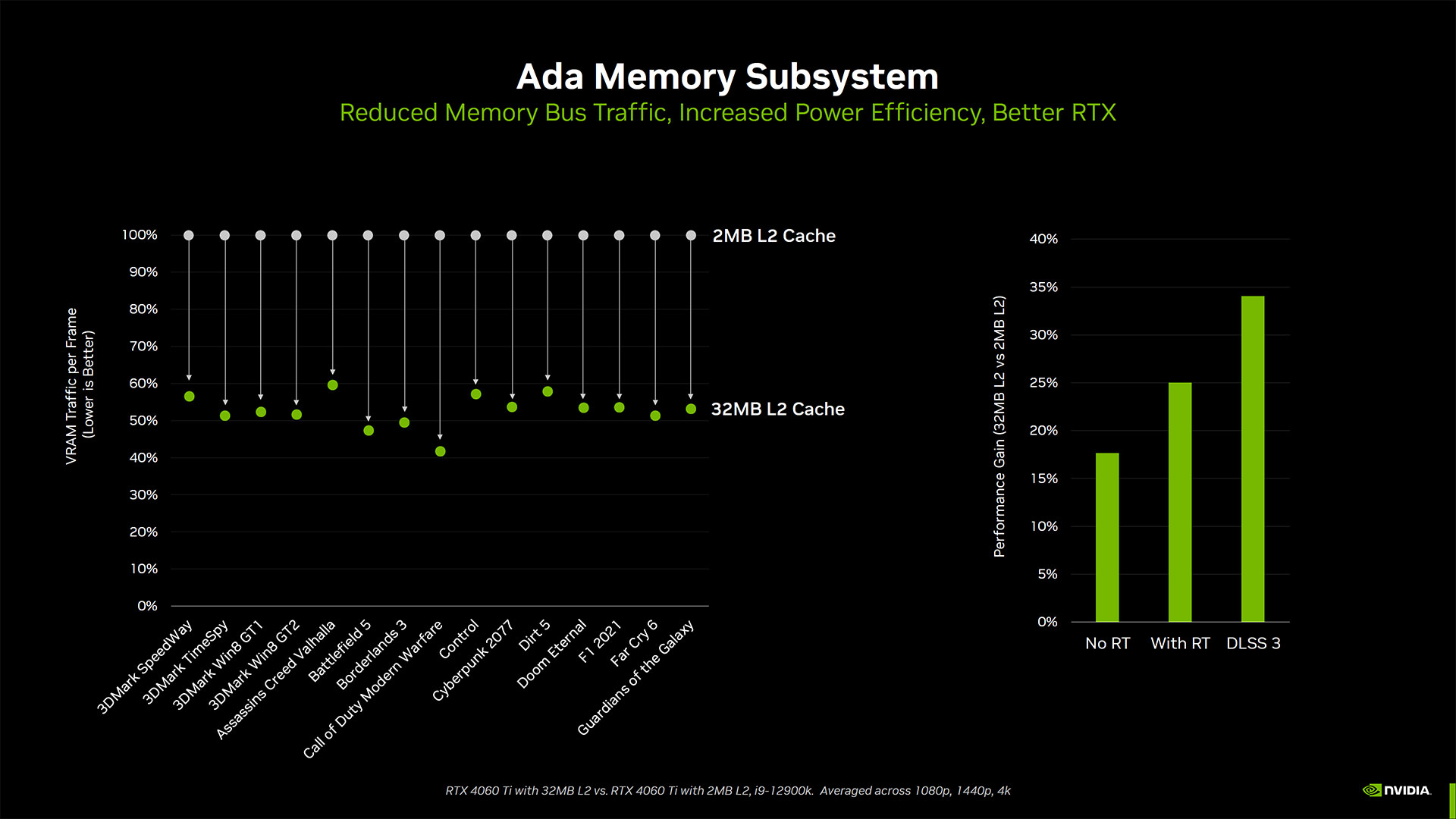

Memory bandwidth is lower for raw throughput, at 272 GB/s compared to the RTX 3060's 360 GB/s. But the L2 cache has ballooned from 3MB on the 3060 to 24MB on the 4060, and Nvidia says that improves the effective bandwidth by 67% — to 453 GB/s. We'll discuss the memory subsystem and its ramifications more on the next page.
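
The raw bandwidth numbers fall straight out of bus width and memory speed. The sketch below uses the 17 Gbps GDDR6 speed mentioned in the overclocking section for the RTX 4060. The 15 Gbps figure for the RTX 3060 is our assumption, chosen because it reproduces the 360 GB/s quoted above. The 1.67 multiplier is simply Nvidia's claimed uplift applied to the raw number.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Raw memory bandwidth in GB/s: bits per transfer times transfers per second, over 8."""
    return bus_width_bits * data_rate_gbps / 8


raw_4060 = bandwidth_gbs(128, 17)  # 128-bit bus, 17 Gbps GDDR6
raw_3060 = bandwidth_gbs(192, 15)  # 192-bit bus, 15 Gbps GDDR6 (assumed)

# Nvidia's claimed 67% uplift from the larger L2 cache, applied to the raw figure.
effective_4060 = raw_4060 * 1.67

print(f"RTX 4060 raw:       {raw_4060:.0f} GB/s")
print(f"RTX 3060 raw:       {raw_3060:.0f} GB/s")
print(f"RTX 4060 effective: {effective_4060:.0f} GB/s")
```

The result lands within about 1 GB/s of Nvidia's quoted 453 GB/s, with the small gap presumably down to rounding of the 67% figure.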

One thing to note is that the RTX 4060 comes with an x8 PCIe interface, just like the RTX 4060 Ti, where the RTX 4070 and above use an x16 link width. This is similar to the RX 7600 and previous generation RTX 3050, where cutting the additional PCIe lanes helps to keep the die size smaller. This shouldn't matter much with most modern PCs, but if you're planning to upgrade an older PC that only supports PCIe 3.0 with an RTX 4060, you may lose a bit of performance compared to what we'll show in our benchmarks.
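
To put rough numbers on that, here's a sketch of theoretical one-way link bandwidth. It assumes the card runs at PCIe 4.0 speeds in a modern system, and it uses the published per-lane signaling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) with 128b/130b line coding. Protocol overhead is ignored, so real throughput will be lower.

```python
# Per-lane signaling rate in GT/s for each PCIe generation covered here.
GT_PER_LANE = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding used by PCIe 3.0 and 4.0


def pcie_gbs(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return GT_PER_LANE[gen] * ENCODING_EFFICIENCY / 8 * lanes


gen4_x8 = pcie_gbs(4, 8)    # RTX 4060 in a PCIe 4.0 slot
gen3_x8 = pcie_gbs(3, 8)    # same card in an older PCIe 3.0 slot
gen4_x16 = pcie_gbs(4, 16)  # what an x16 card like the RTX 4070 gets

print(f"PCIe 4.0 x8:  {gen4_x8:.2f} GB/s")
print(f"PCIe 3.0 x8:  {gen3_x8:.2f} GB/s")
print(f"PCIe 4.0 x16: {gen4_x16:.2f} GB/s")
```

Dropping to a PCIe 3.0 slot halves the link bandwidth, which mostly matters when a game spills out of VRAM and has to pull data over the bus.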

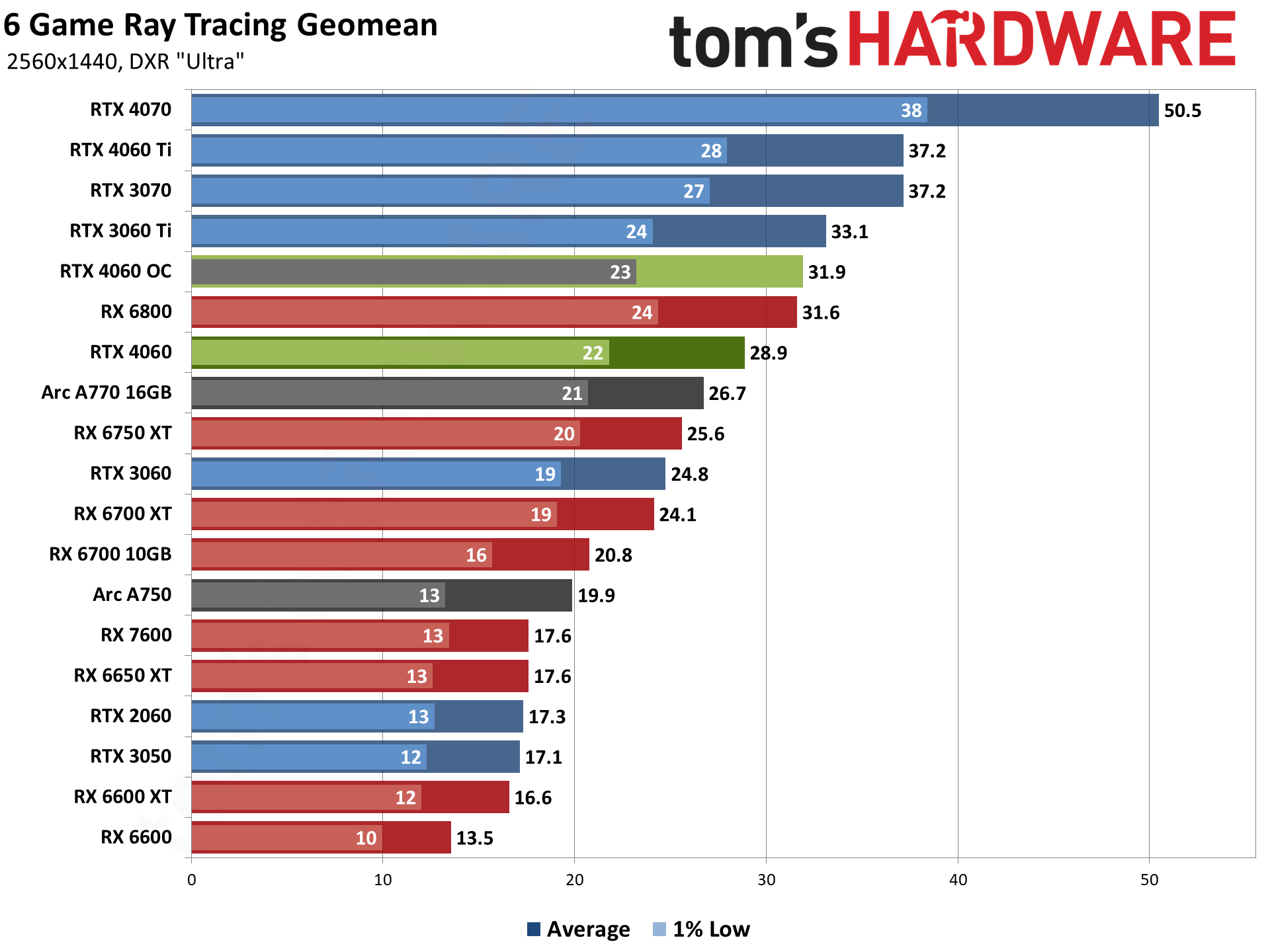

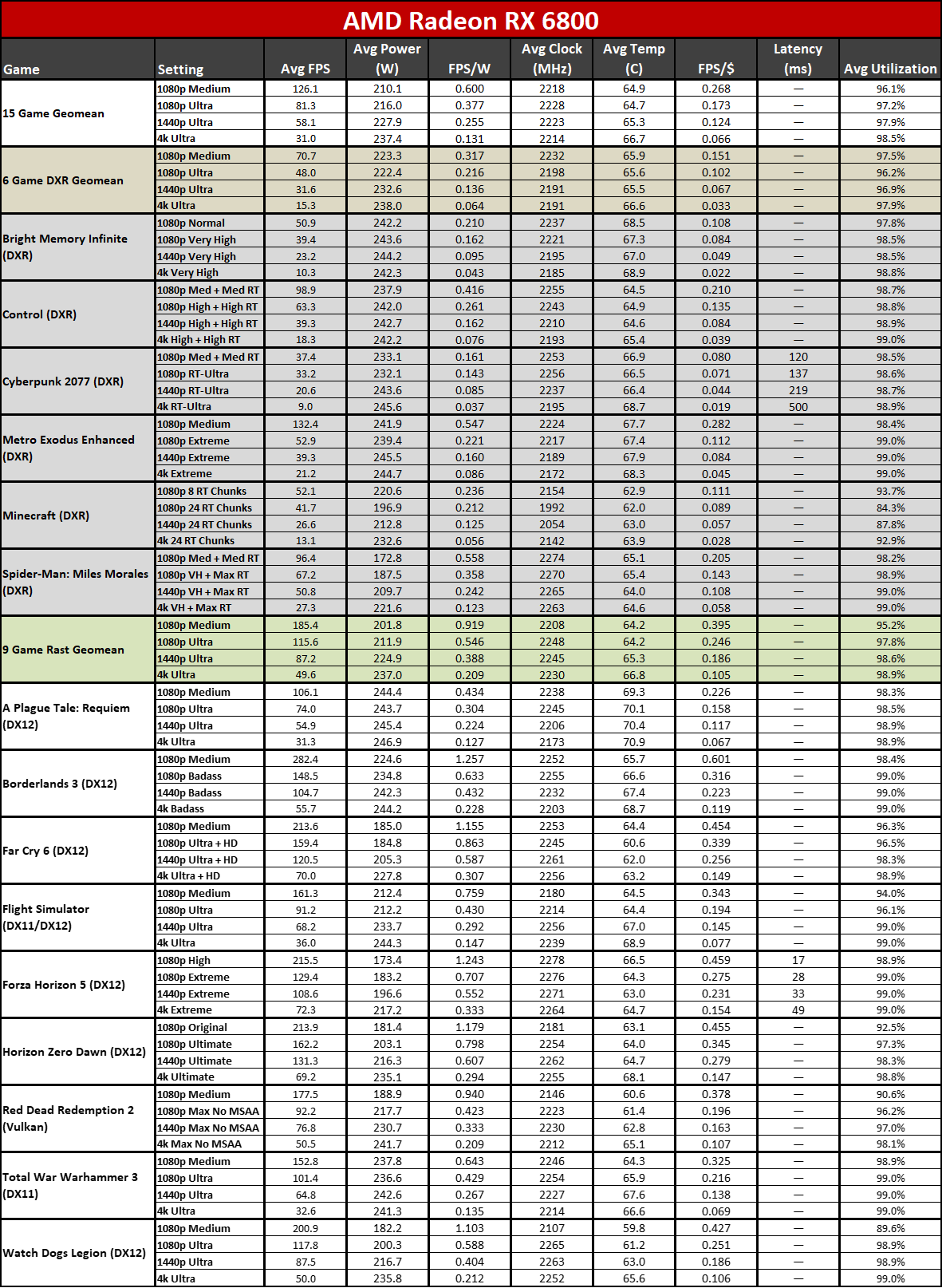

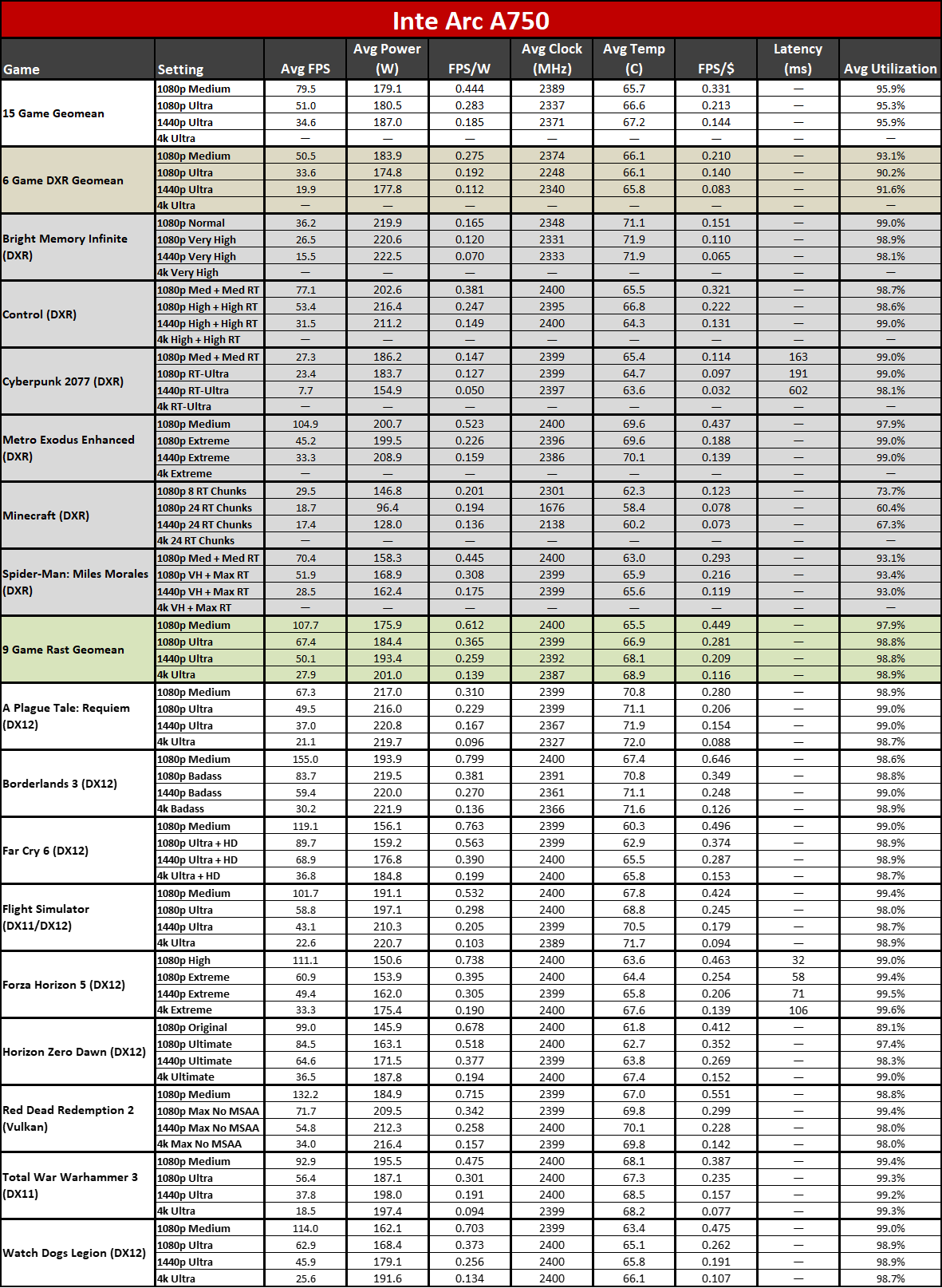

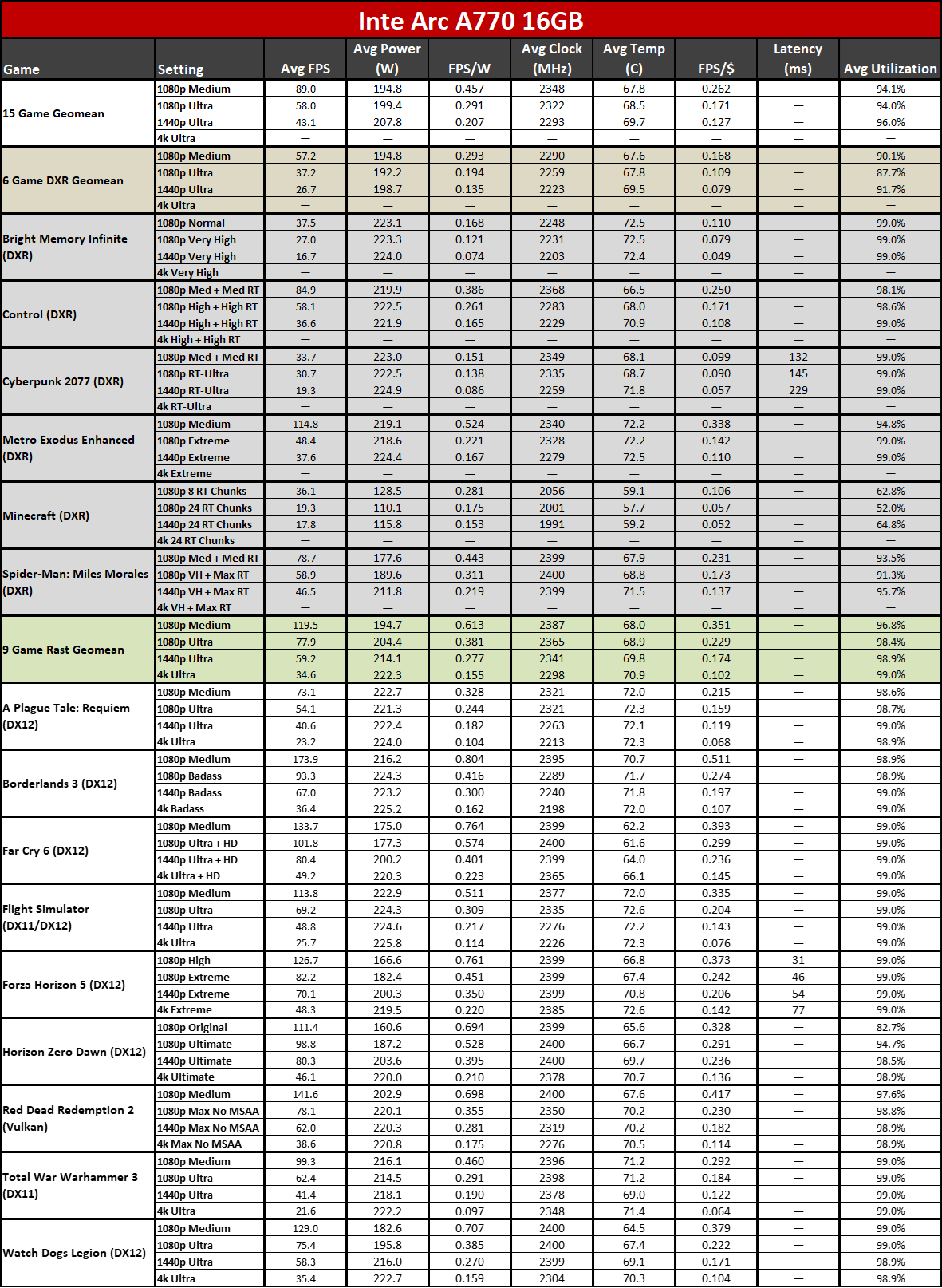

Looking at the competition based on relatively similar pricing, we have a lot of options. AMD has the new RX 7600 8GB card, along with the previous generation RX 6700 XT 12GB and RX 6700 10GB. From Intel, there's the Arc A770 8GB and Arc A750. Then Nvidia also has to contend with existing cards like the RTX 3060, RTX 3060 Ti, and RTX 3070. It's a safe bet that Nvidia can match or exceed cards from AMD and Intel when it comes to ray tracing performance and AI workloads, but it will likely lose out to the 3060 Ti and above in those same tasks. Rasterization performance should prove to be a tougher battle for the newcomer.

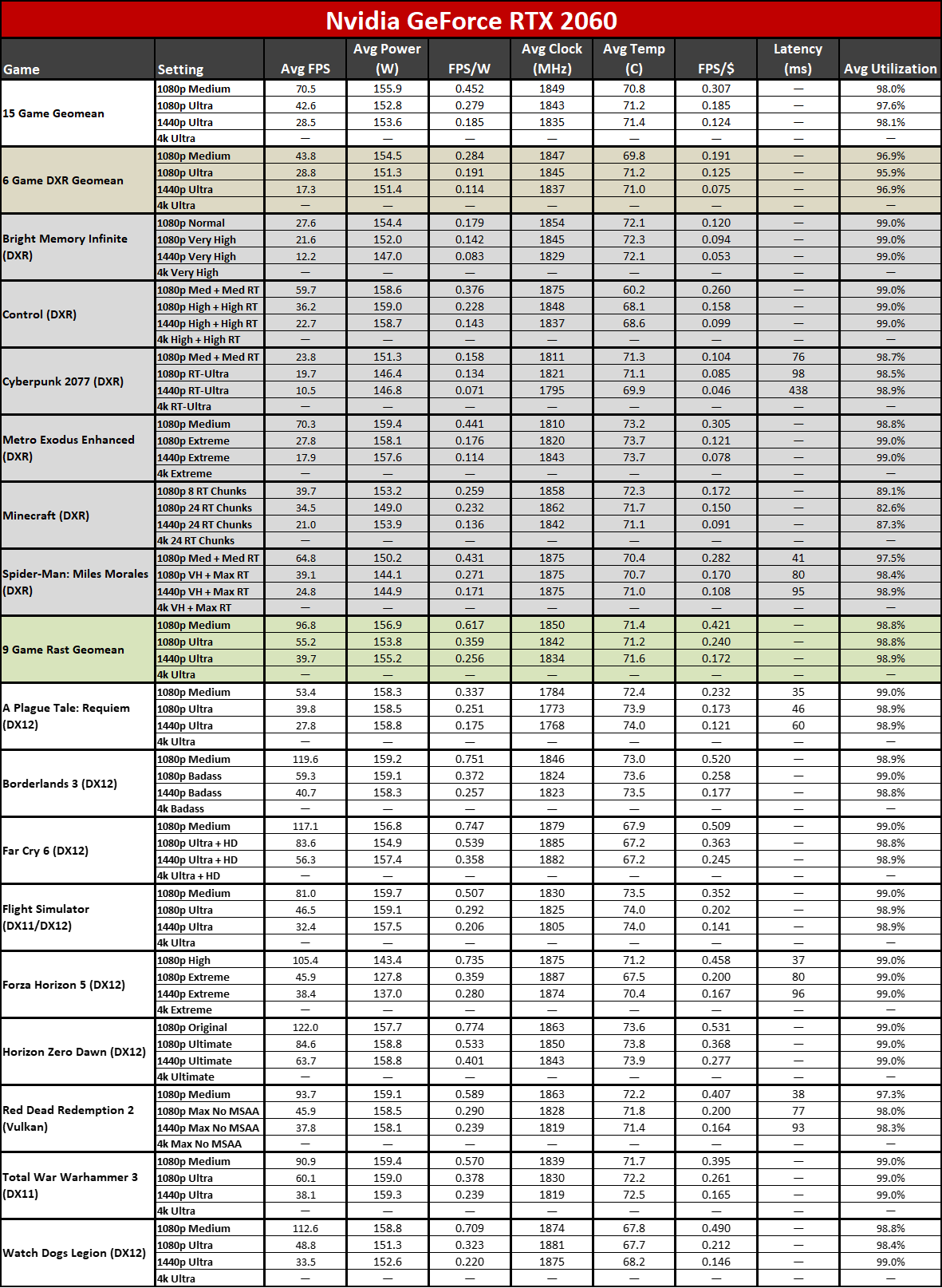

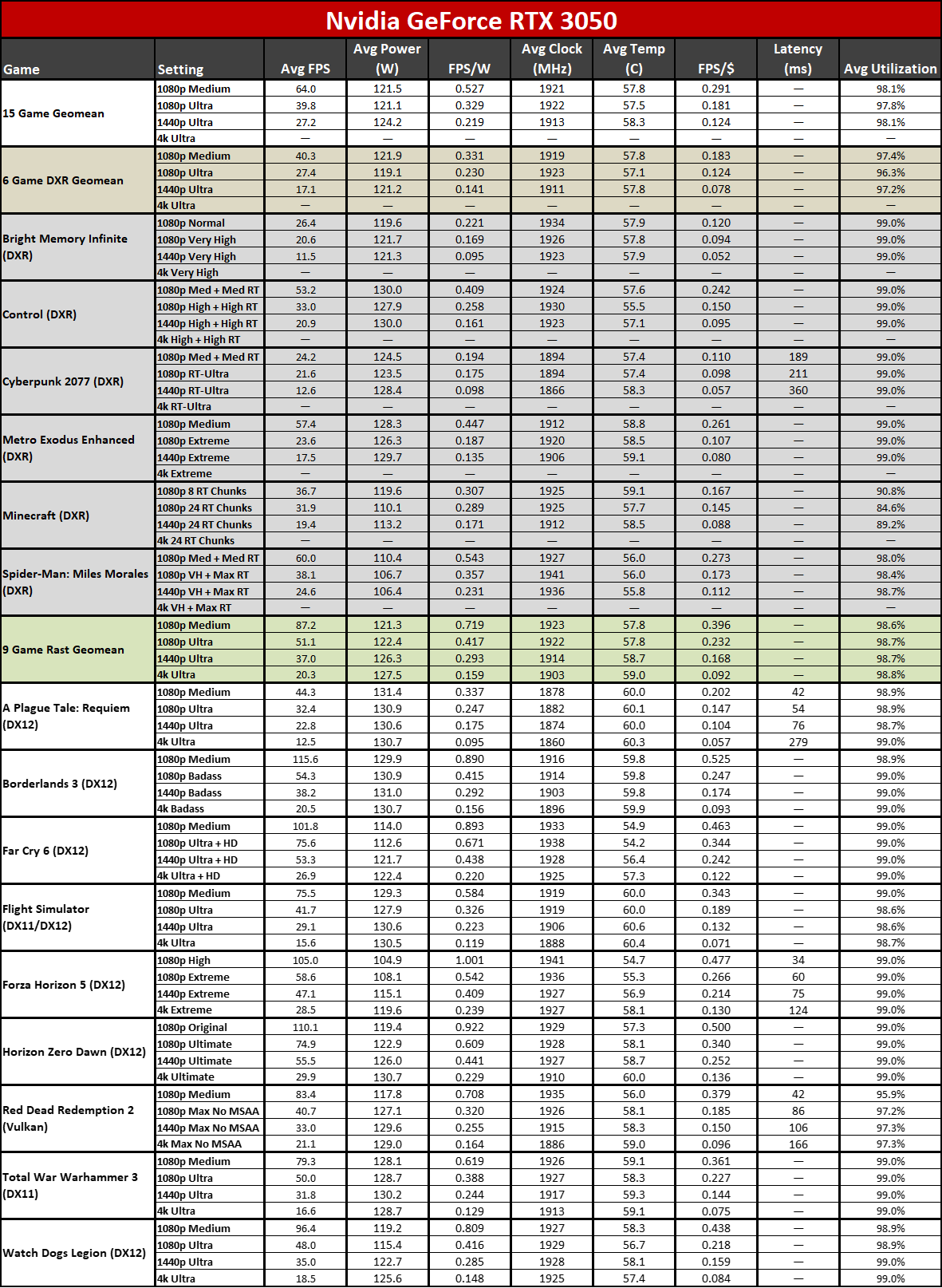

We'll also include results from the RTX 2060, which launched in early 2019. Many gamers skip a generation or two on hardware, and Nvidia (like AMD and the RX 7600) is pitching the RTX 4060 as a great upgrade path for people still using cards like the GTX 1060, RTX 2060, or RX 570/580/590. For all the complaining about the RTX 40-series and its higher generational pricing, it's also nice to see Nvidia match or beat the pricing on the two previous generation GPUs. The RTX 2060 launched at $349 and later dropped to $299, while the RTX 3060 launched at $329 but rarely saw that price until the last few months.

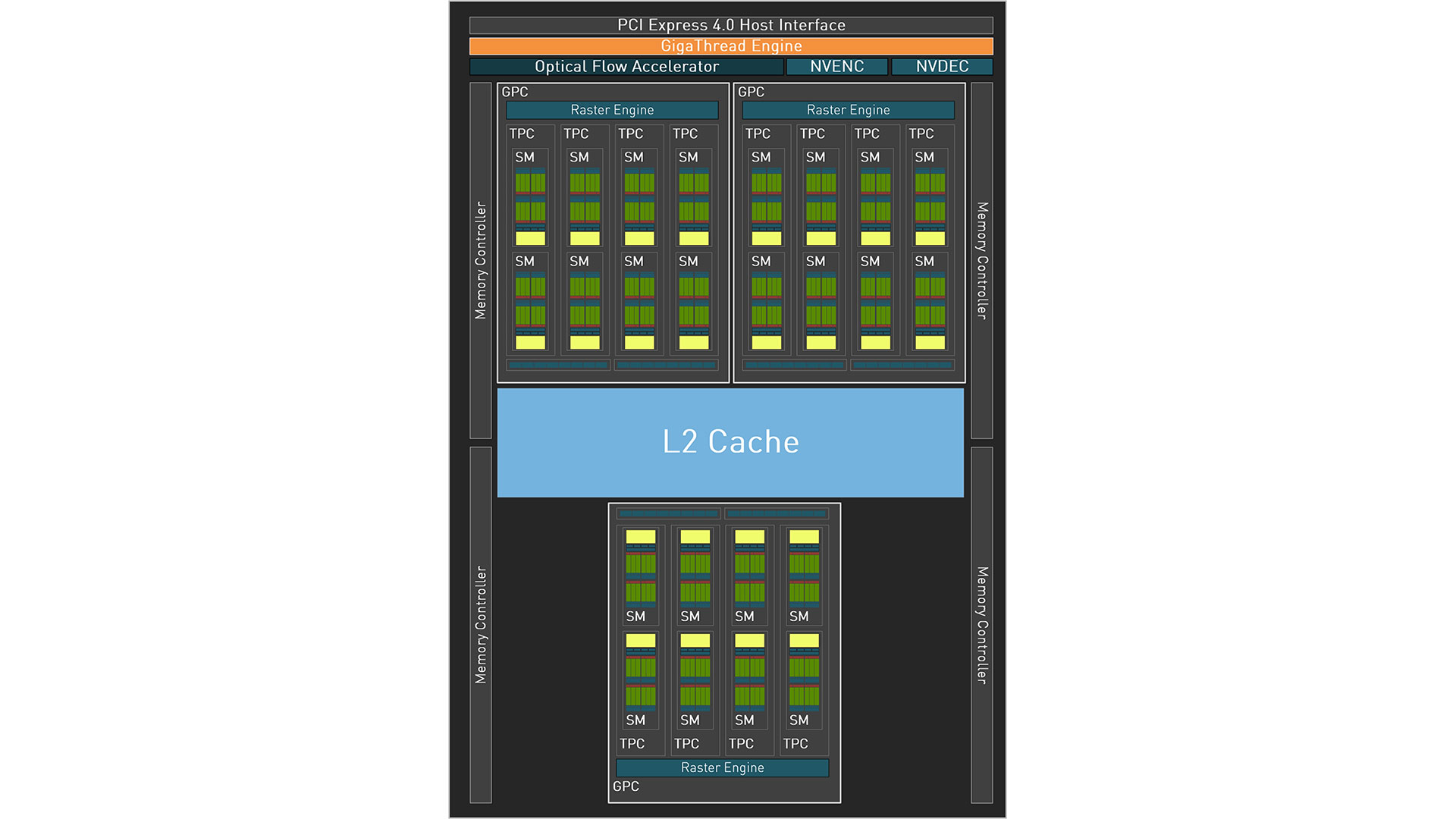

The block diagram for the RTX 4060 / AD107 shows just how much Nvidia has trimmed things down in order to hit mainstream pricing. Most of the other Ada chips have multiple NVDEC / NVENC blocks, but AD107 has just one of each. As noted above, there are also just 24 total SMs, spread among three GPCs (Graphics Processing Clusters). Finally, Nvidia provides up to 8MB of L2 cache per 32-bit memory channel, but the RTX 4060 only has 6MB enabled for a total of 24MB. (The mobile RTX 4060 gets the full 32MB, if you're wondering.)

As with other Ada Lovelace chips, the RTX 4060 includes Nvidia's 4th-gen Tensor cores, 3rd-gen RT cores, new and improved NVENC/NVDEC units for video encoding and decoding with AV1 support, and a significantly more powerful Optical Flow Accelerator (OFA). The latter is used for DLSS 3, and all indications are that Nvidia has no intention of trying to enable Frame Generation on Ampere and earlier RTX GPUs.

The tensor cores now support FP8 with sparsity. It's not clear how useful that is for various workloads, but AI and deep learning have certainly leveraged lower precision number formats to boost performance without significantly altering the quality of the results — at least in some cases. It will ultimately depend on the work being done, and figuring out just what uses FP8 versus FP16, plus sparsity, can be tricky.

Of course, running AI models on a budget mainstream card like the RTX 4060 isn't really the primary goal. Yes, Stable Diffusion will work, and we'll show test results later. Other AI models that can fit in the 8GB VRAM will run as well. However, anyone that's serious about AI and machine learning will almost certainly want a GPU with more processing muscle and more VRAM.

- MORE: Best Graphics Cards

- MORE: GPU Benchmarks and Hierarchy

- MORE: All Graphics Content

Is 8GB of VRAM Insufficient?

One of the biggest complaints you're sure to hear about the RTX 4060 is that it only has 8GB of VRAM. You may also hear complaints about the 128-bit memory interface, which is related but also a separate consideration. Dropping down to a 128-bit bus on the 4060 definitely represents a compromise, but there are many factors in play.

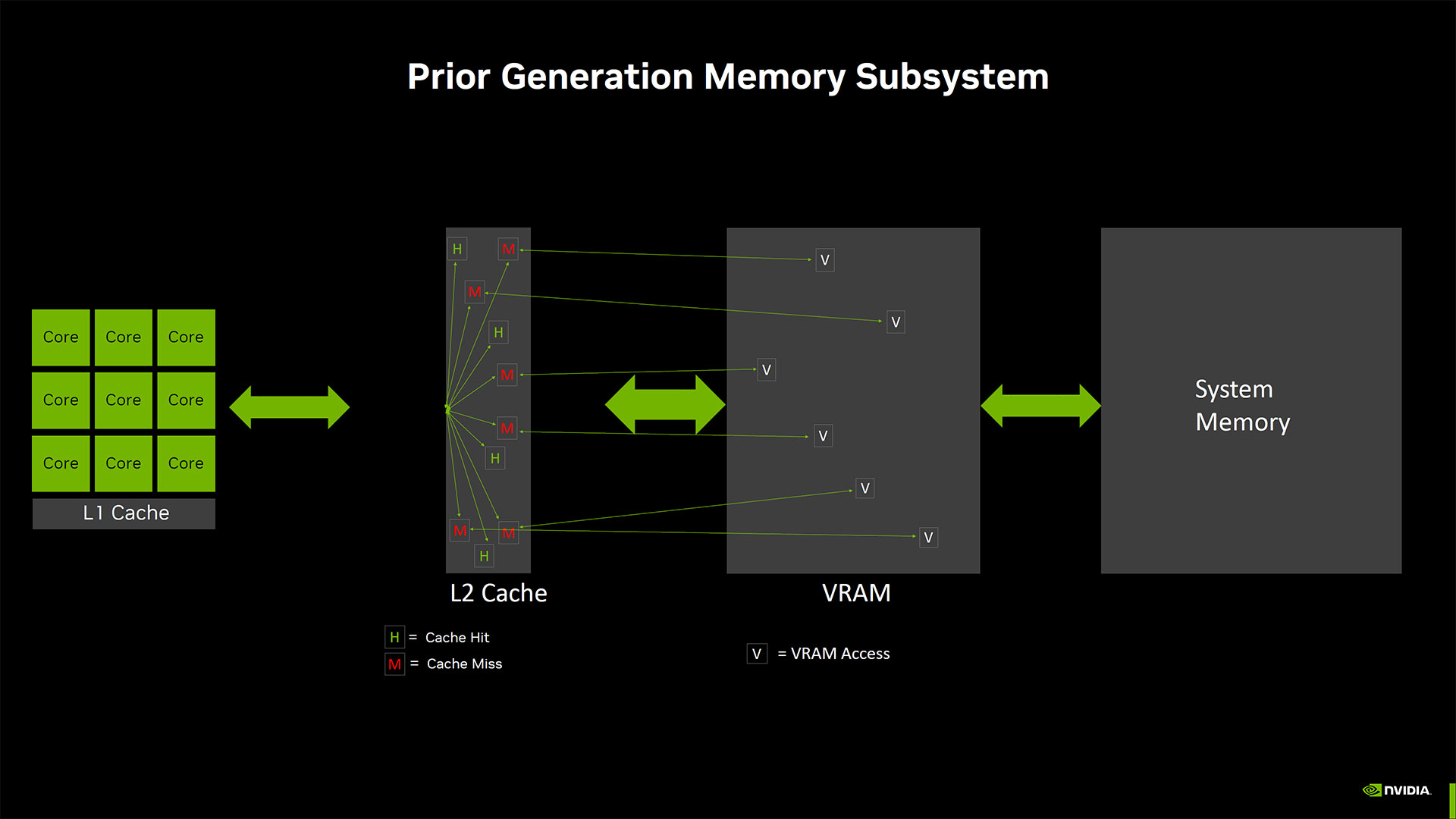

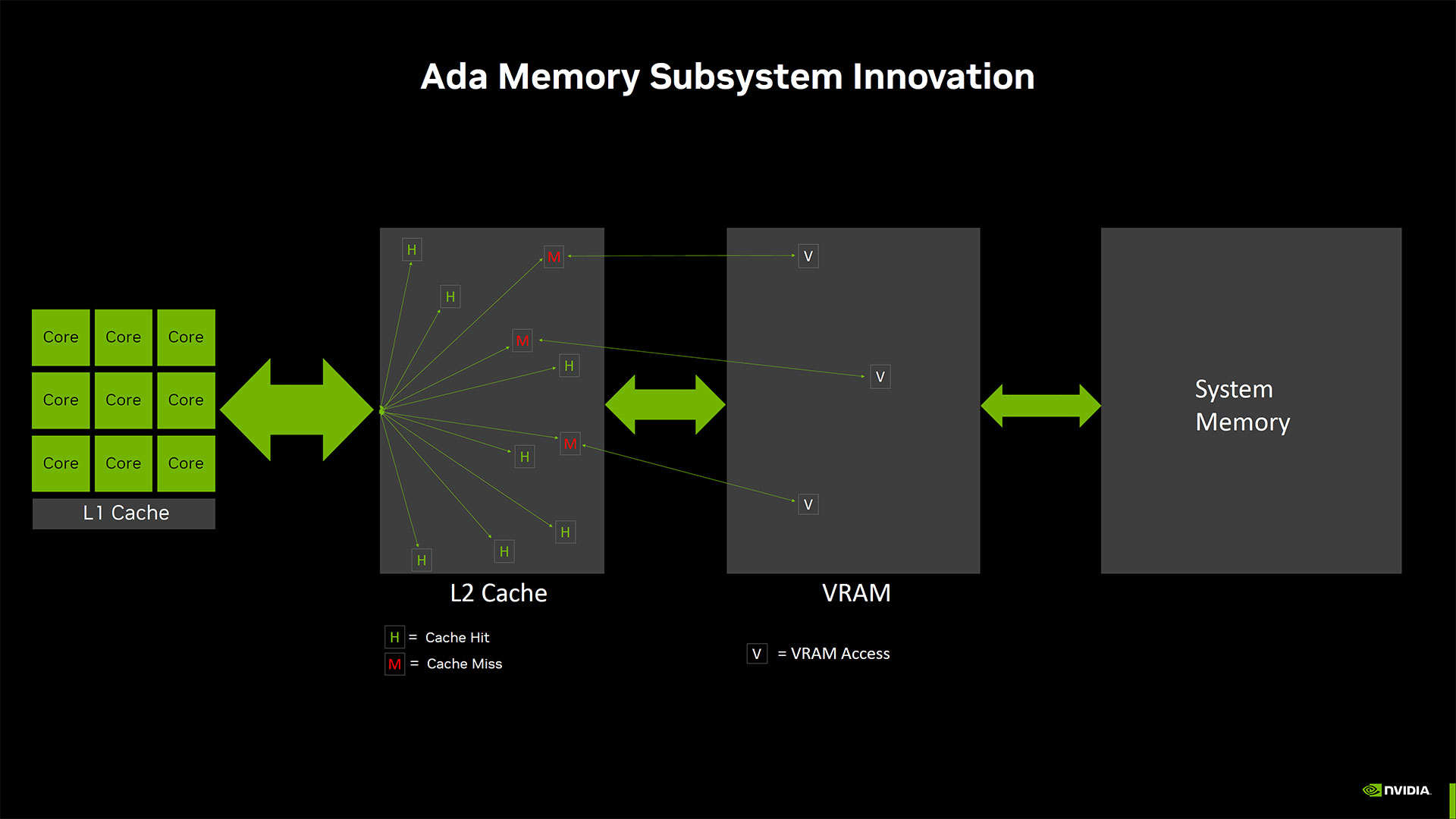

First, we need to remember that the significantly larger L2 cache really does help a lot in terms of effective memory bandwidth. Every memory access fulfilled by the L2 cache means that the 128-bit interface didn't even come into play. Nvidia says that the hit rate of the larger 24MB L2 cache on the RTX 4060, compared to what the hit rate would have been with an Ampere-sized 2MB L2, is 67% higher. Nvidia isn't lying, and real-world performance metrics will prove this point — AMD also lists effective bandwidth on many of its RDNA 2 and RDNA 3 GPUs, for the same reason.
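
One simplified way to think about "effective bandwidth" is in terms of how much traffic the cache keeps away from the memory bus. The toy model below is our own illustration, not Nvidia's methodology, and the two hit rates are made-up values picked only because they happen to produce an uplift of about 67%.

```python
def equivalent_bandwidth_gbs(raw_gbs: float, small_cache_hit: float, large_cache_hit: float) -> float:
    """Bandwidth a small-cache design would need to match the large-cache design.

    Toy model: only cache misses reach GDDR6, so memory traffic scales with (1 - hit rate).
    """
    return raw_gbs * (1 - small_cache_hit) / (1 - large_cache_hit)


RAW_GBS = 272.0          # RTX 4060 raw bandwidth from the spec table
SMALL_CACHE_HIT = 0.30   # hypothetical hit rate with an Ampere-sized L2
LARGE_CACHE_HIT = 0.58   # hypothetical hit rate with the 24MB L2

effective = equivalent_bandwidth_gbs(RAW_GBS, SMALL_CACHE_HIT, LARGE_CACHE_HIT)
print(f"Equivalent bandwidth: {effective:.0f} GB/s")
```

The model also shows why higher resolutions hurt: if the large-cache hit rate falls, the denominator grows and the effective figure slides back toward the raw 272 GB/s.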

At the same time, there are edge cases where the larger L2 cache doesn't help as much. Running at 4K or even 1440p will reduce the cache hit rates, but certain applications simply hit memory in a different way that can also reduce hit rates. We've heard that emulation is one such case, and hashing algorithms (i.e. cryptocurrency mining, may it rest in peace) are another. But for typical PC gaming, the 8X larger L2 cache (relative to the 3060) will help a lot in reducing GDDR6 memory accesses.

The other side of the coin is that a 128-bit interface inherently limits how much VRAM a GPU can have. If you use 1GB chips, you max out at 4GB (e.g. the GTX 1650). The RTX 4060 uses 2GB chips, which gets it up to 8GB. Nvidia could have also put chips on both sides of the PCB (which used to be quite common), but that increases complexity and it would bump the total VRAM all the way up to 16GB. That's arguably overkill for a $300 graphics card, even in 2023.
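
The capacity math is simple enough to sketch. It assumes each GDDR6 chip occupies a 32-bit slice of the memory bus, and that mounting chips on both sides of the PCB (often called "clamshell" mode) doubles the chip count without widening the bus.

```python
def vram_options_gb(bus_width_bits: int, chip_capacity_gb: int, chip_interface_bits: int = 32):
    """Return (single-sided, double-sided) VRAM capacity in GB for a given bus width."""
    chips = bus_width_bits // chip_interface_bits
    single_sided = chips * chip_capacity_gb
    double_sided = single_sided * 2  # chips on both sides of the PCB
    return single_sided, double_sided


print(vram_options_gb(128, 1))  # 1GB chips on a 128-bit bus, as on the GTX 1650
print(vram_options_gb(128, 2))  # 2GB chips on a 128-bit bus, as on the RTX 4060
print(vram_options_gb(192, 2))  # 2GB chips on a 192-bit bus, as on the RTX 3060
```

With 2GB chips, a 128-bit bus gives only 8GB or 16GB, so a 12GB card would need a wider interface.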

Here's the thing: You don't actually need more than 8GB of VRAM for most games, when running at appropriate settings. We've explained why 4K requires so much VRAM previously, and that's part of the story. The other part is that many of the changes between "high" and "ultra" settings — whatever they might be called — are often of the placebo variety. Maxed out settings will often drop performance 20% or more, but image quality will look nearly the same as high settings.

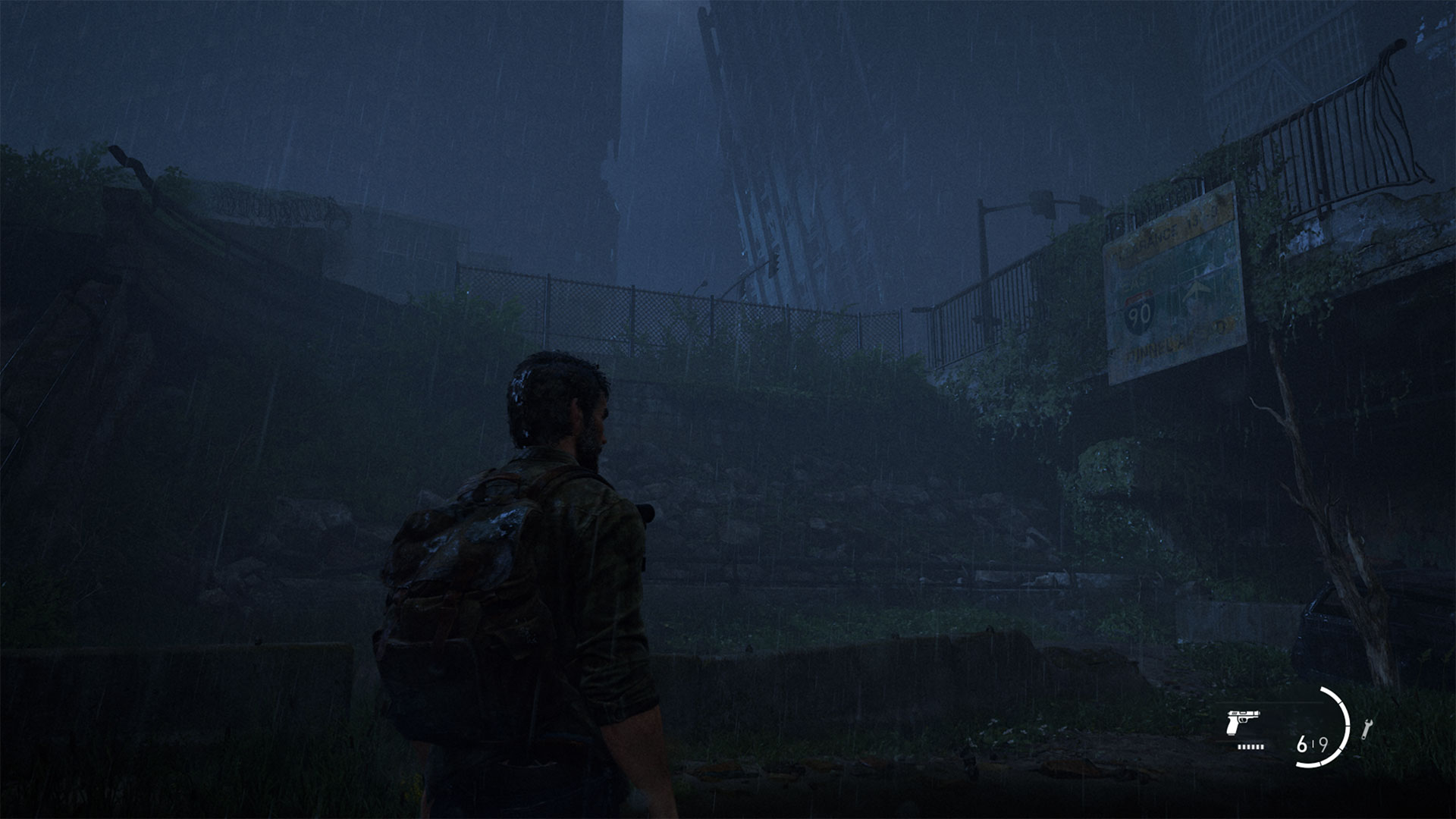

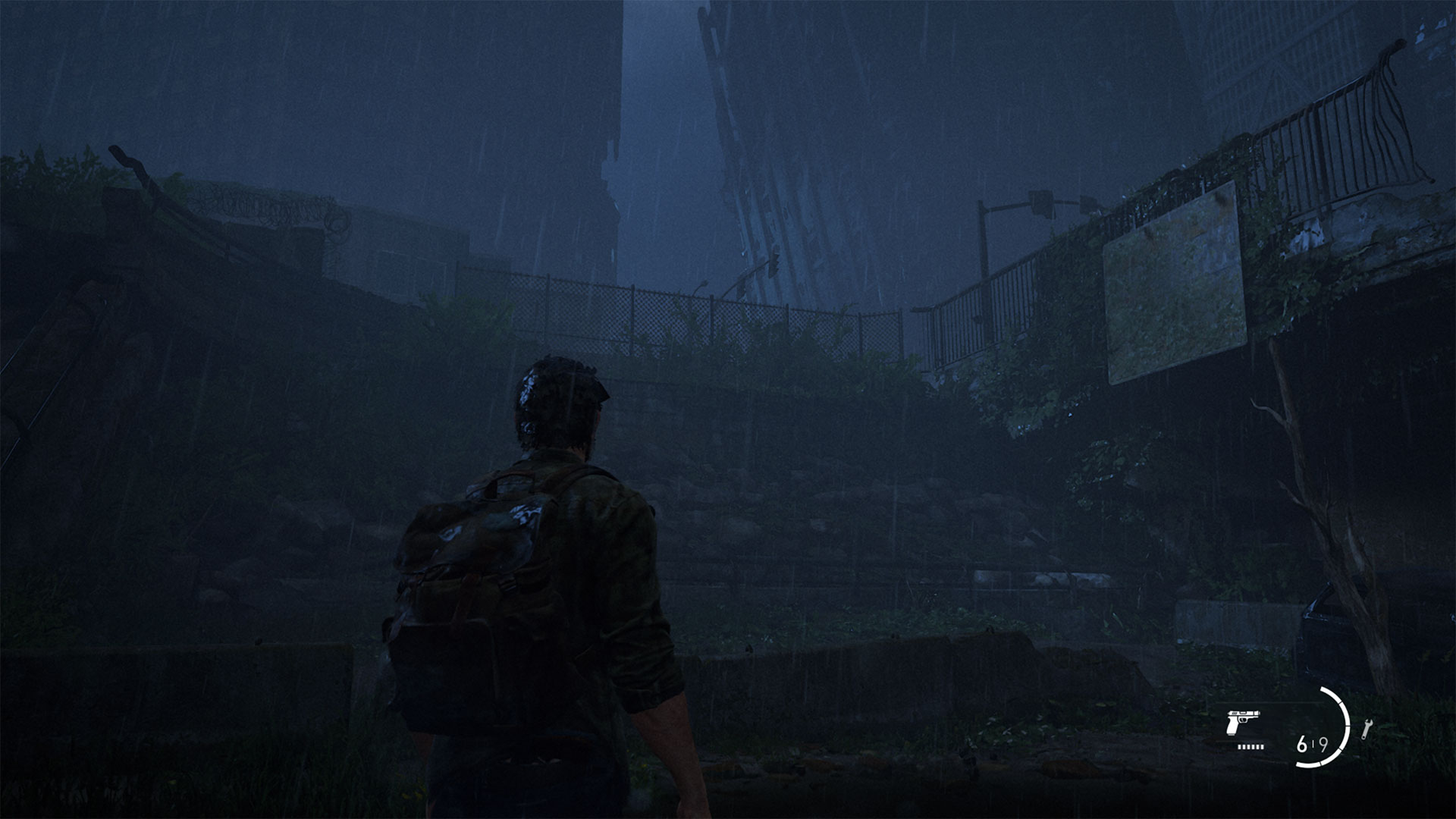

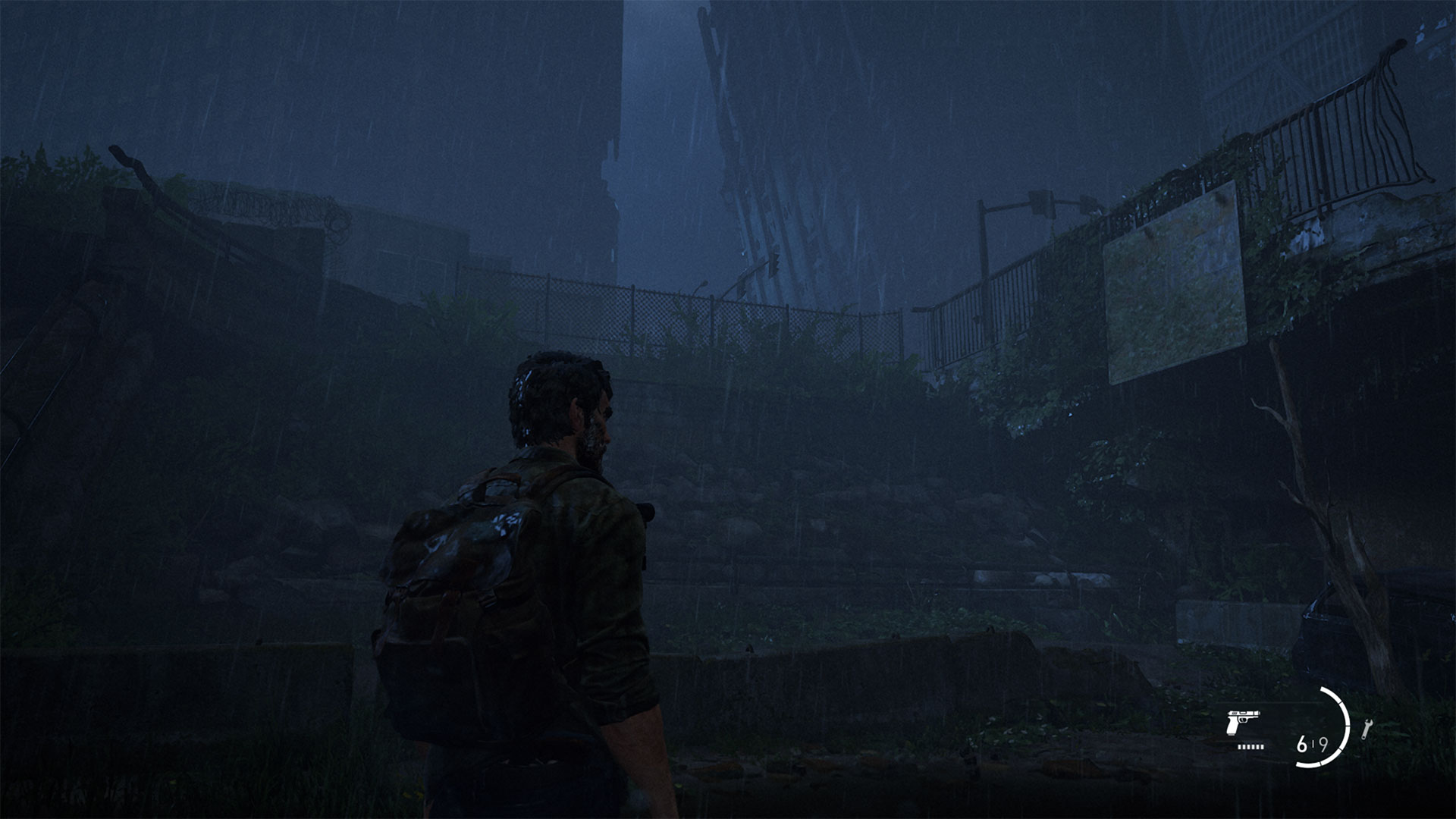

Look at the above gallery from The Last of Us, Part 1 as an example. This is a game that can easily exceed 8GB of VRAM with maxed out settings. The most recent patch improved performance, however, and 8GB cards run much better now — almost like the initial port was poorly done and unoptimized! Anyway, we've captured the five presets, and if you look, there aren't really any significant differences between Ultra and High, and you could further reduce the differences by only turning down texture quality from ultra to high.

The placebo effect is especially true of HD texture packs, which can weigh in at 40GB or more but rarely change the visuals much. If you're running at 1080p or 1440p, most surfaces in a game will use 1024x1024 texture resolutions or lower, because the surface doesn't cover more than a thousand pixels. In fact, most surfaces will likely opt for 256x256 or 128x128 textures, and only those that are closest to the viewport will need 512x512 or 1024x1024 textures.

What about the 2048x2048 textures in the HD texture pack? Depending on the game, they may still get loaded into VRAM, even if the mipmapping algorithm never references them. So you can hurt performance and cause texture popping for literally zero improvement in visuals.
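
To see why that matters for VRAM, consider how texture size scales with resolution. This sketch assumes a block-compressed format that averages one byte per texel (BC7 is a common example) and a full mipmap chain, and it ignores block padding on the tiniest mip levels. Actual formats and sizes vary by game.

```python
def mip_chain_bytes(size: int, bytes_per_texel: int = 1) -> int:
    """Total bytes for a square texture plus every mip level down to 1x1."""
    total = 0
    while size >= 1:
        total += size * size * bytes_per_texel
        size //= 2
    return total


MIB = 2 ** 20
hd_texture = mip_chain_bytes(2048)
standard_texture = mip_chain_bytes(1024)

print(f"2048x2048 with mips: {hd_texture / MIB:.2f} MiB")
print(f"1024x1024 with mips: {standard_texture / MIB:.2f} MiB")
print(f"Ratio: {hd_texture / standard_texture:.1f}x")
```

Each doubling of texture resolution roughly quadruples the memory footprint, so an HD texture pack can eat a lot of VRAM even if the top mip level is rarely sampled.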

Related to this is texture streaming, where a game engine will load in low resolution textures for everything, and then pull higher resolution textures into VRAM as needed. You can see this happening in some games right as a level loads: Everything looks a bit blurry and then higher quality textures "pop" in.

If the texture streaming algorithm still tries to load higher resolution textures when they're not needed, it may evict other textures from VRAM. That can lead to a situation where the engine and GPU will have to shuttle data over the PCIe interface repeatedly, as one texture gets loaded and kicks a different texture out of VRAM, but then that other texture is needed and has to get pulled back into VRAM.
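
That thrashing behavior is easy to reproduce in a toy simulation. The sketch below assumes a least-recently-used (LRU) eviction policy and treats every texture as the same size. Real engines use their own, more sophisticated heuristics, so take this as an illustration of the failure mode rather than a model of any particular game.

```python
from collections import OrderedDict


def count_uploads(capacity: int, accesses: list) -> int:
    """Count PCIe uploads (misses) for an LRU-managed pool holding `capacity` textures."""
    resident = OrderedDict()
    uploads = 0
    for texture in accesses:
        if texture in resident:
            resident.move_to_end(texture)  # mark as most recently used
        else:
            uploads += 1                   # texture must come over PCIe
            resident[texture] = True
            if len(resident) > capacity:
                resident.popitem(last=False)  # evict least recently used
    return uploads


frame = ["A", "B", "C", "D"]  # hypothetical textures touched every frame
accesses = frame * 10         # ten frames of rendering

fits = count_uploads(4, accesses)
thrashes = count_uploads(3, accesses)
print(f"Pool holds 4 textures: {fits} uploads")
print(f"Pool holds 3 textures: {thrashes} uploads")
```

Being just one texture short turns four one-time uploads into an upload on every single access, which is why performance can fall off a cliff rather than degrade gracefully once VRAM is exceeded.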

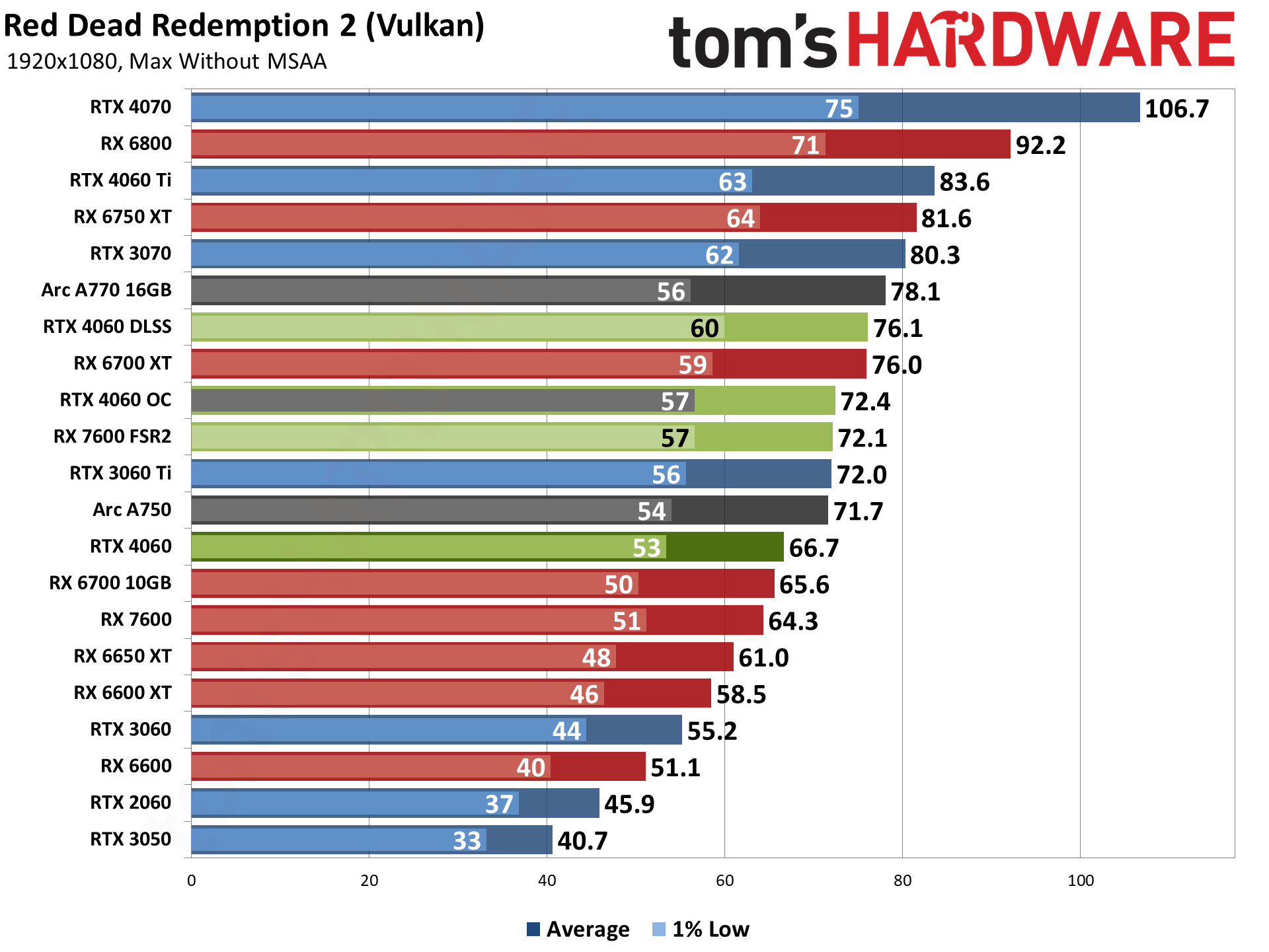

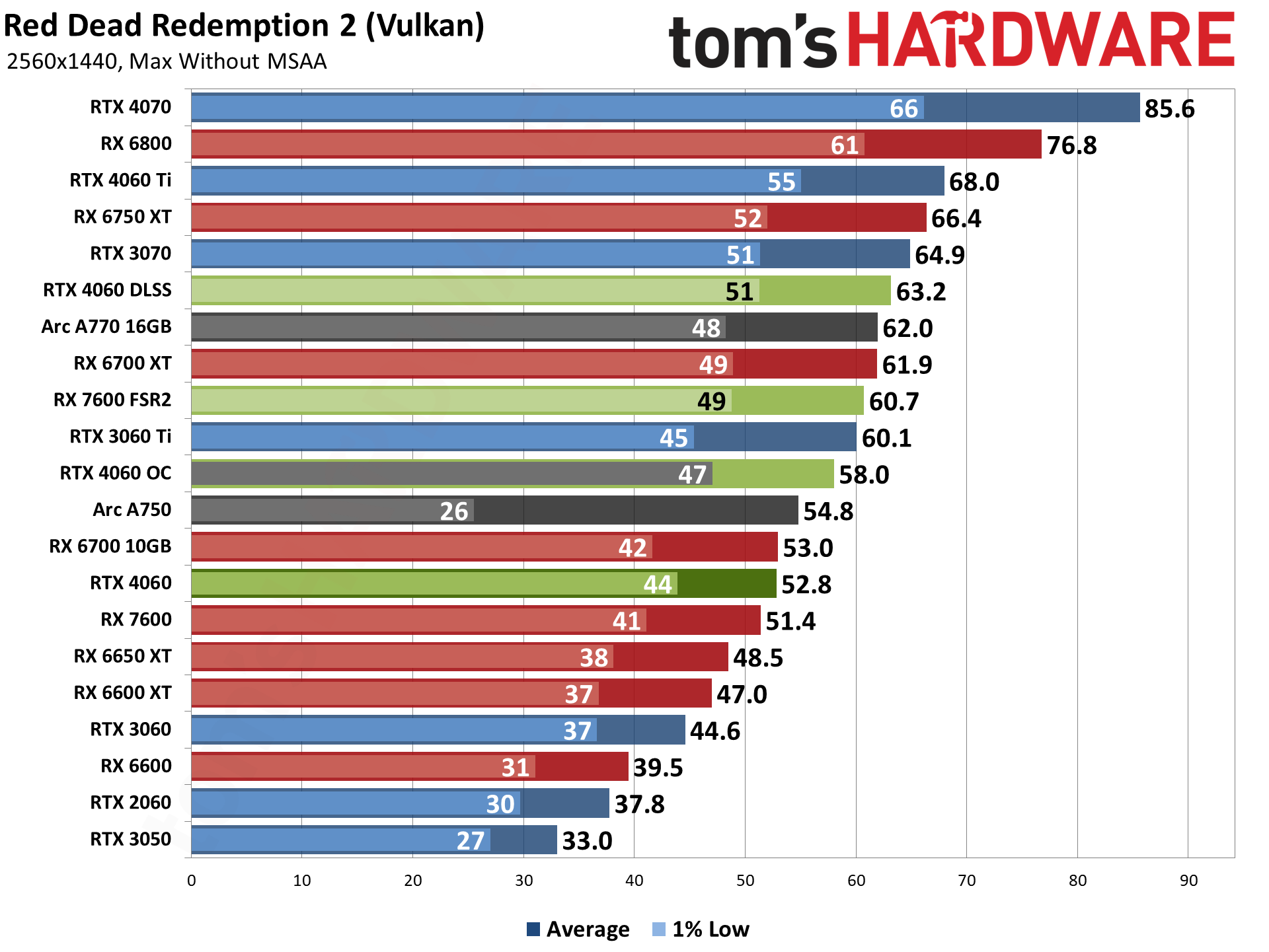

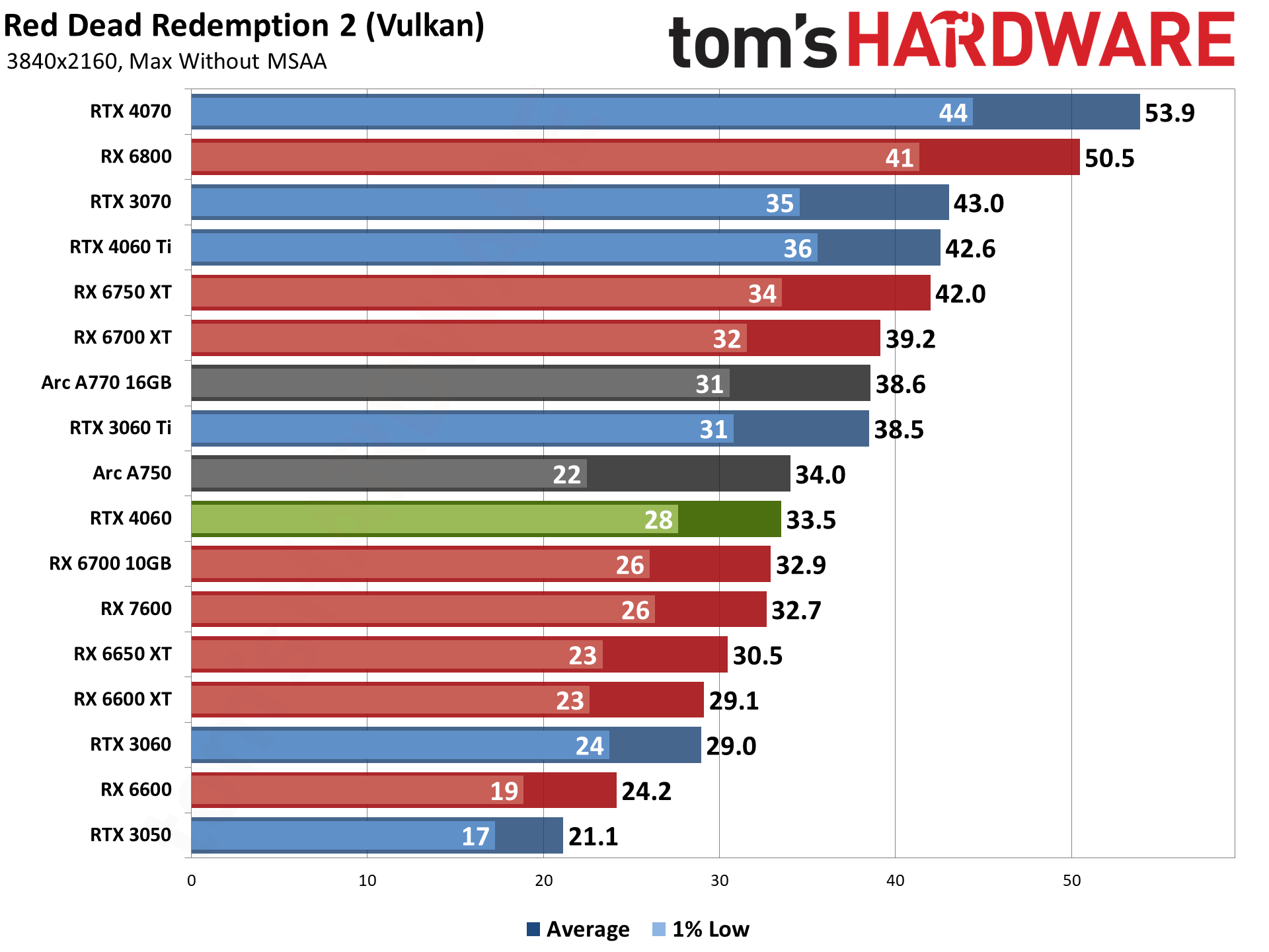

Fundamentally, how all of this plays out is up to the game developers. Some games (Red Dead Redemption 2, as an example) will strictly enforce VRAM requirements and won't let you choose settings that exceed your GPU's VRAM capacity — you can try to work around this, but the results are potentially problematic. Others, particularly Unreal Engine games, will use texture streaming, sometimes with the option to disable it (e.g. Lord of the Rings: Gollum). This can lead to the aforementioned texture popping.

If you're seeing a lot of texture popping, or getting a lot of PCI Express traffic, our recommendation is to turn the texture quality setting down one notch. We've done numerous tests to look at image quality, and particularly at 1080p and 1440p, it can be very difficult to see any difference between the maximum and high texture settings in modern games.

For better or worse, the RTX 4060 is the GPU that Nvidia has created for this generation. Many of the design decisions were probably made two years ago. Walking back from 12GB on the 3060 to 8GB on the 4060 is still a head scratcher, unfortunately.

The bottom line is this. If you're the type of gamer that needs to have every graphics option turned on, we're now at the point where a lot of games will exceed 8GB of VRAM use, even at 1080p. Most games won't have issues if you drop texture quality (and possibly shadow quality) down one notch, but it still varies by game. So if the thought of turning down any settings triggers your FOMO reflex, the RTX 4060 isn't the GPU for you.

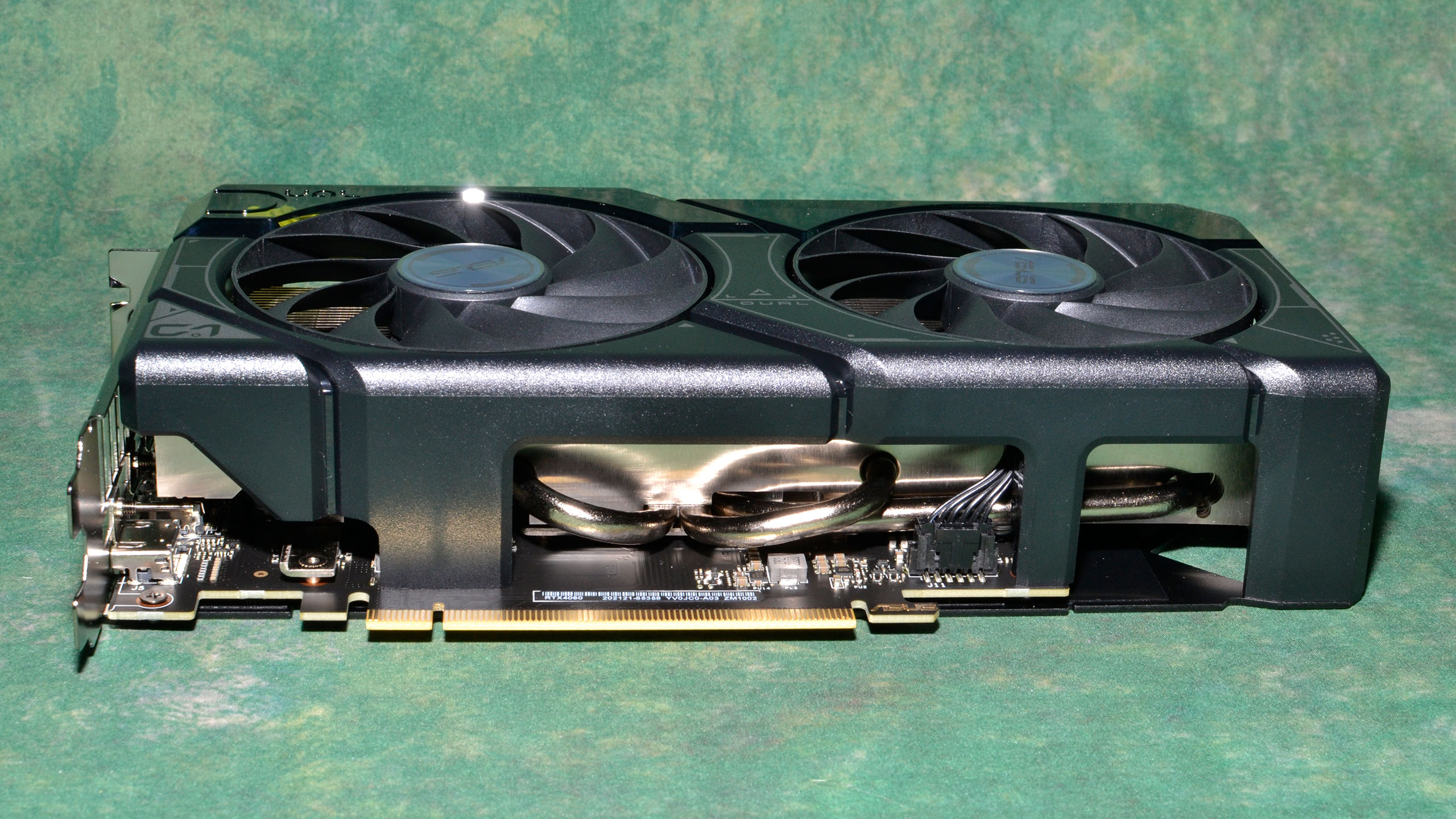

Asus GeForce RTX 4060 Dual OC

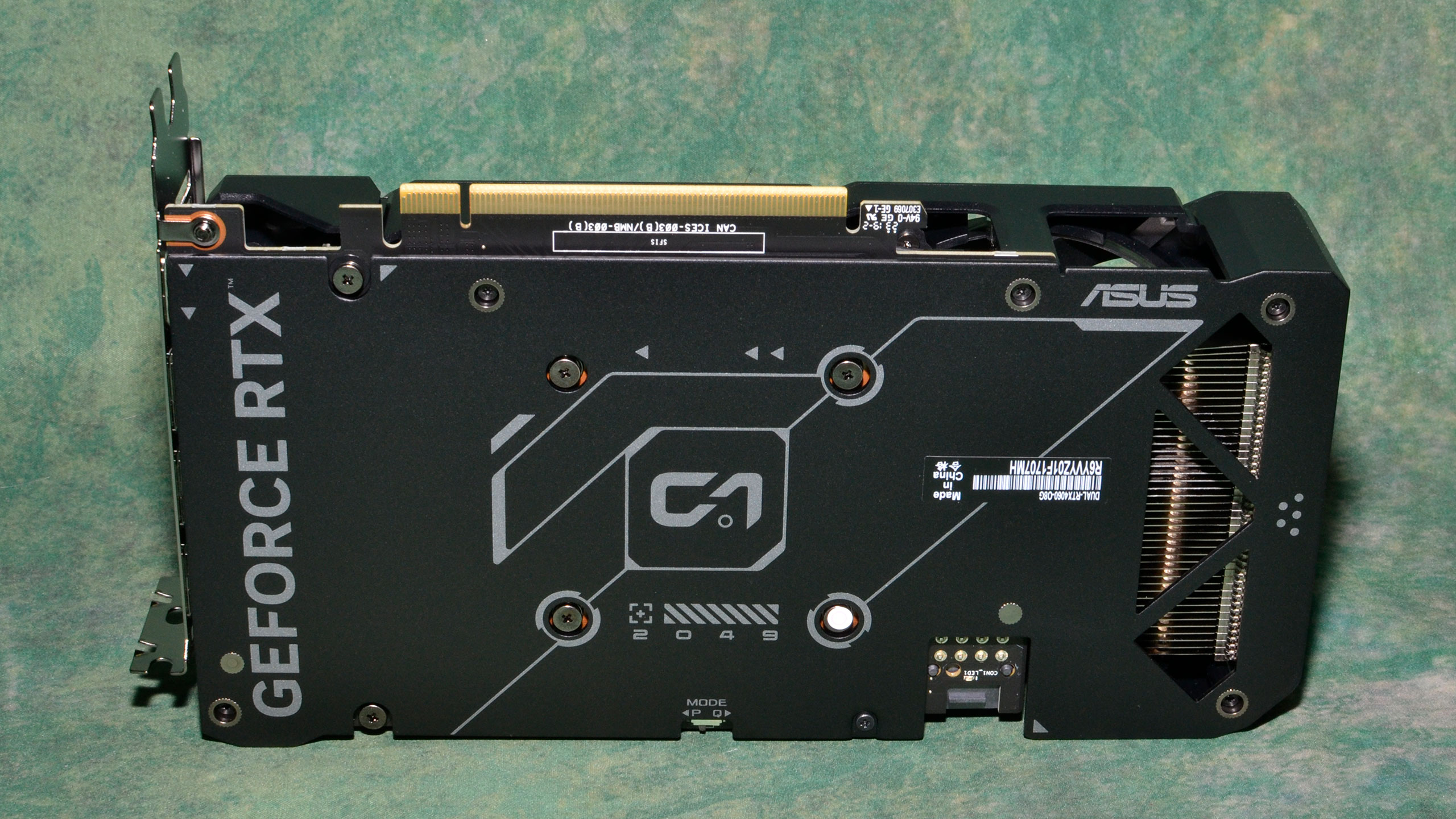

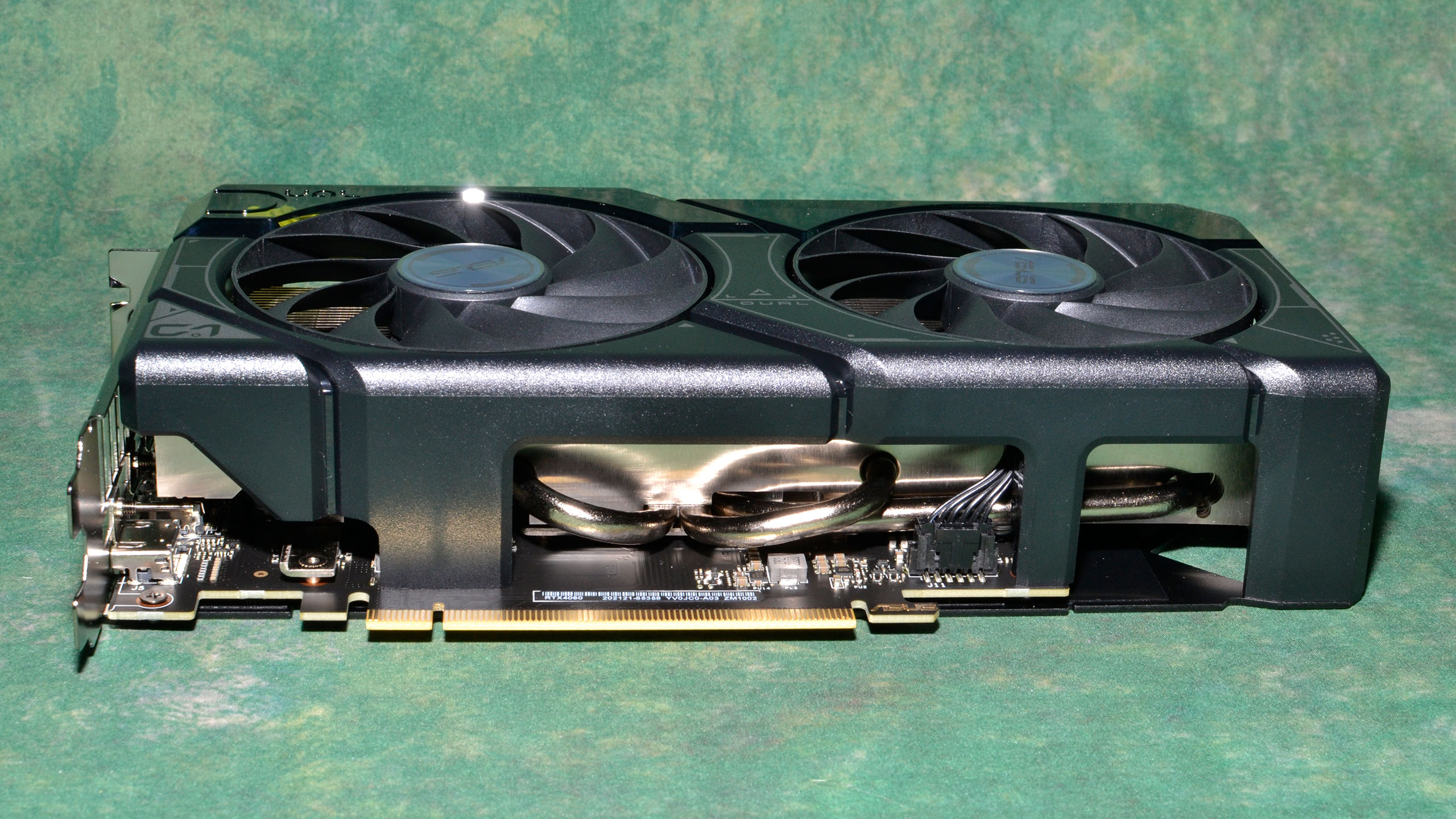

Nvidia won't be making any RTX 4060 Founders Edition cards, leaving it up to the AIC (Add-In Card) partners to come up with appropriate designs. Obviously, with a base 115W TGP (Total Graphics Power), we shouldn't need any extreme designs to deal with the heat. Except, we'll probably still get them.

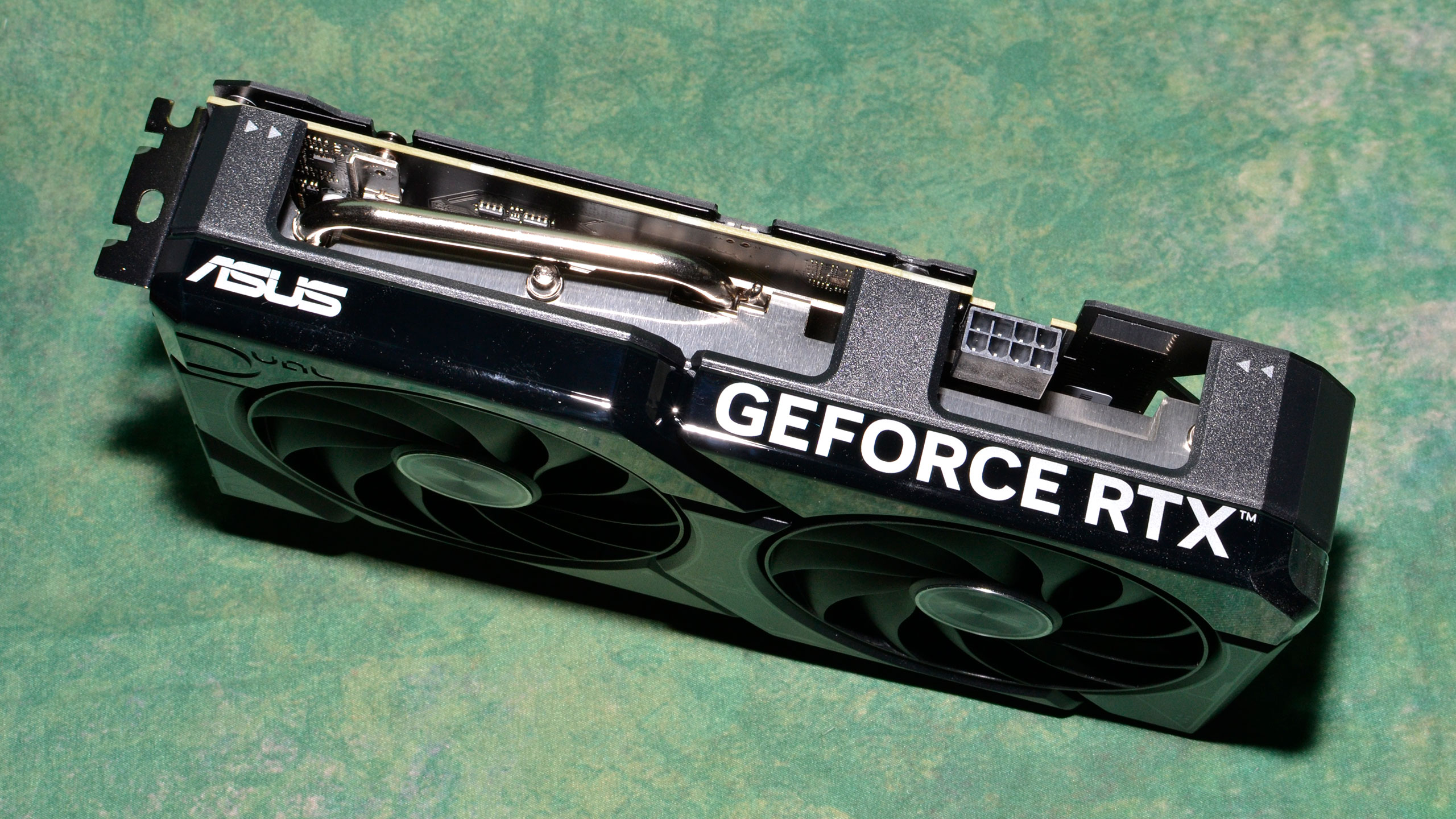

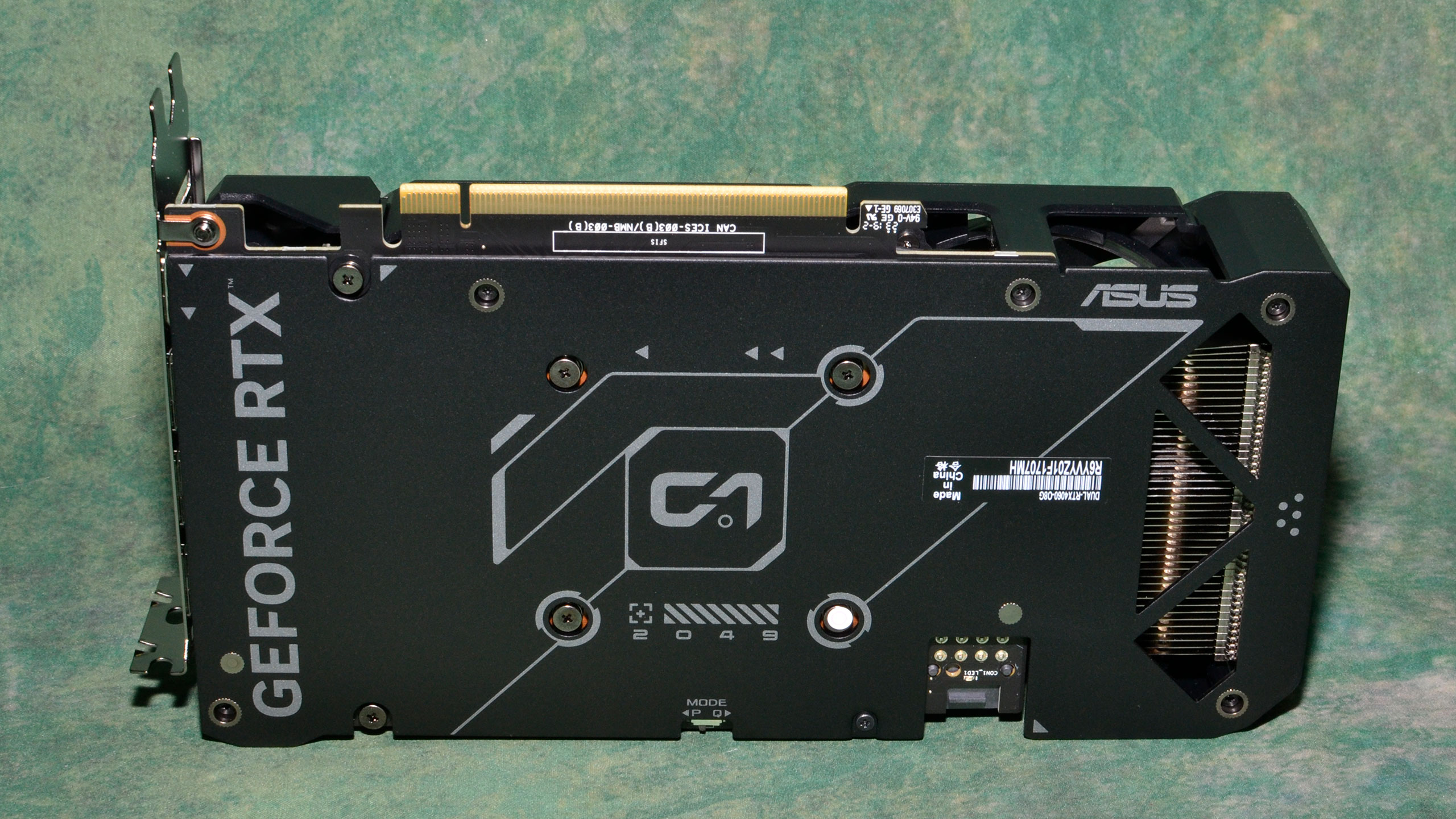

Case in point: The Asus RTX 4060 Dual OC that Nvidia sent us for the launch review. It's not a massive card, but it does occupy 2.5-slots of width, which effectively means you can't use the two adjacent slots. Not that it matters to most people, as these days graphics cards are one of the few expansion cards that are still in regular use. Additional M.2 slot adapters and USB 3.2 adapters are another possible use, along with video capture cards, but even those aren't particularly common.

The lack of an RTX 4060 Founders Edition means we're probably not going to see any cards using 16-pin power connectors, which is just fine by us. The Asus card has a single 8-pin connector, which on its own can provide 150W of power, never mind the 75W from the PCIe x16 slot. Even with a modest factory overclock, the Asus 4060 won't come anywhere near hitting those potential power limits.

There's not much to say about the packaging for the Asus card, other than that there's a lot of empty space. It's the same box dimensions as we've seen with substantially larger triple-fan Asus cards, but there's probably a benefit to doing a "one size fits all" box.

Besides the card, you get a little cardboard punchout card holder and a small manual... and that's it. Not that we expected anything extra here.

The Asus RTX 4060 Dual OC measures 229x124x48 mm (our measurements), which is moderately compact even if it's a 2.5-slot width. It's also a rather light card, weighing in at 639g, so you shouldn't have issues with the card sagging. You can likely fit the card into many mini-ITX cases, provided they can accommodate the wider girth.

Asus uses two custom 89mm fans with an integrated rim, which should easily handle any cooling requirements when combined with the heatsink. The heatsink features four heatpipes, and in a nice change of pace, the fins are horizontally oriented so that air will flow out the IO bracket as well as the rear of the card, rather than out of the top and bottom of the card. That means it should dump less heat into the interior of your PC case.

For a base model RTX 4060, there's a lot to like with the Asus design. The aesthetics are subdued, with no RGB lighting, so if you're trying to put together a "stealth-build" PC, it would be a good fit. You do get a few extras as well, which aren't normally seen with base model GPUs.

On the top, there's a switch to toggle between "quiet" and "performance" modes. It's in performance mode by default, which is where we left it. The quiet mode likely just alters the fan speed curve slightly to favor lower RPMs over lower temperatures.

Video outputs consist of the typical triple DisplayPort 1.4a and single HDMI 2.1 outputs — nothing new there. All four ports are capable of driving up to a 4K 240Hz display, using Display Stream Compression, which is what our Samsung Odyssey Neo G8 supports.
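
A rough calculation shows why Display Stream Compression is required at 4K and 240Hz. The sketch counts only active pixels (no blanking intervals) and compares against the usable payload of each link after line coding. The link rates are the published DisplayPort 1.4 HBR3 and HDMI 2.1 FRL figures.

```python
def uncompressed_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Active-pixel data rate in Gbps, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Usable payload after line coding.
DP_1_4_HBR3_GBPS = 4 * 8.1 * 8 / 10  # 4 lanes at 8.1 Gbps, 8b/10b coding
HDMI_2_1_FRL_GBPS = 48 * 16 / 18     # 48 Gbps raw, 16b/18b coding

needed_8bit = uncompressed_gbps(3840, 2160, 240, 24)   # 8 bits per color channel
needed_10bit = uncompressed_gbps(3840, 2160, 240, 30)  # 10 bits per color channel

print(f"4K 240Hz, 8-bit:  {needed_8bit:.1f} Gbps")
print(f"4K 240Hz, 10-bit: {needed_10bit:.1f} Gbps")
print(f"DisplayPort 1.4a payload: {DP_1_4_HBR3_GBPS:.1f} Gbps")
print(f"HDMI 2.1 payload:         {HDMI_2_1_FRL_GBPS:.1f} Gbps")
```

Even at 8-bit color, the uncompressed signal exceeds what either port can carry, so compression has to make up the difference.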

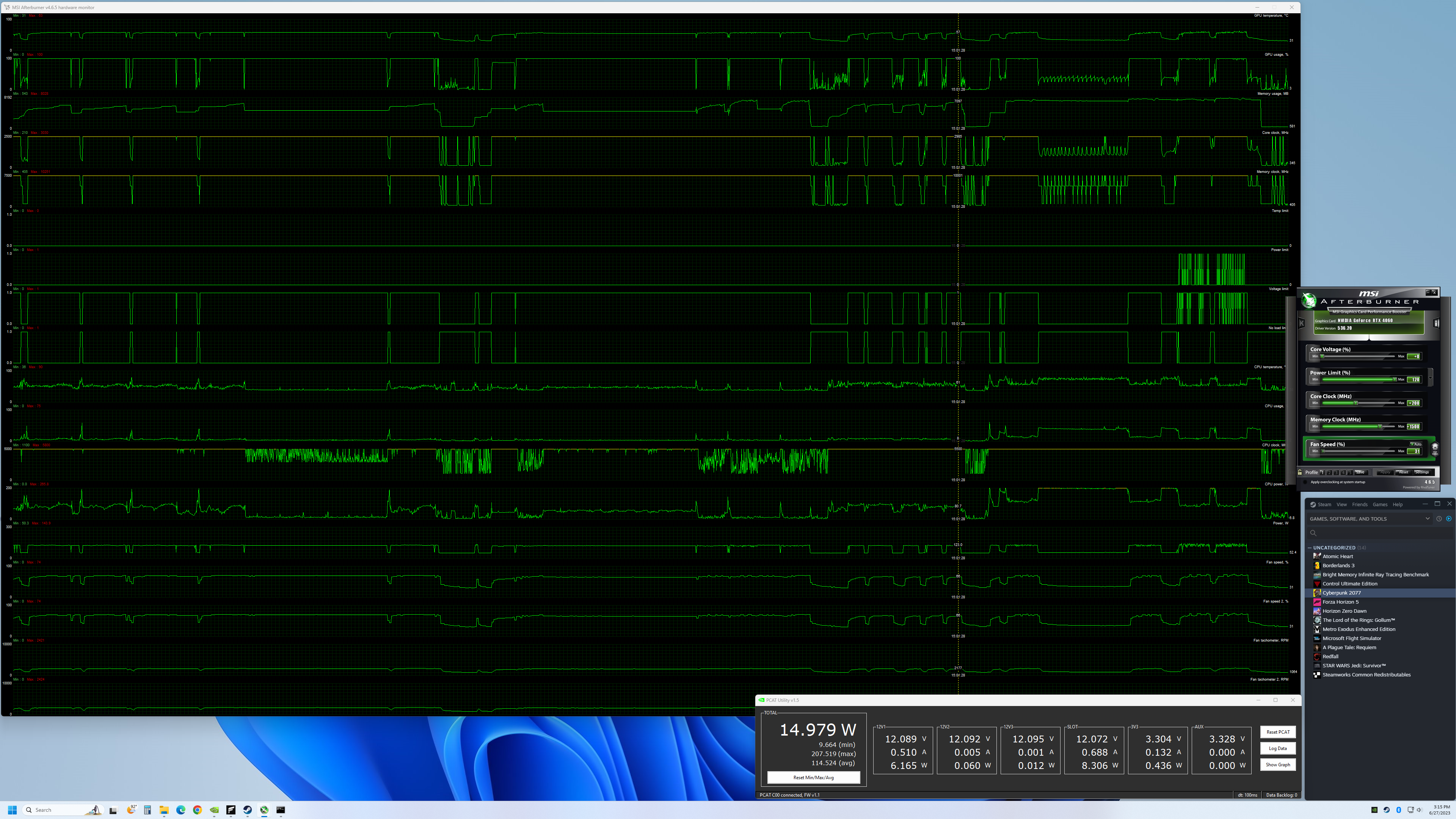

Asus RTX 4060 Overclocking

Our overclocking process doesn't aim to fully redline the hardware, but instead looks to find "reasonably stable and safe" overclocks. We start by maxing out the power limit (using MSI Afterburner), which is 120% for the Asus RTX 4060 Dual OC — that will vary by card manufacturer and model. We also set a fan speed curve that starts at 30% at 30C and ramps up to 100% at 80C. This is probably more than the GPU needs, but for overclocking it's usually a good idea to keep temperatures lower if possible.
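
For reference, the fan curve described above can be expressed as a simple function. We're assuming a straight-line ramp between the two endpoints here, which is how a two-point curve behaves in most tuning utilities, but the exact shape is up to whatever you configure.

```python
def fan_speed_percent(temp_c: float) -> float:
    """Fan speed for a two-point curve: 30% at 30C, rising linearly to 100% at 80C."""
    low_temp, low_speed = 30.0, 30.0
    high_temp, high_speed = 80.0, 100.0
    if temp_c <= low_temp:
        return low_speed
    if temp_c >= high_temp:
        return high_speed
    slope = (high_speed - low_speed) / (high_temp - low_temp)
    return low_speed + (temp_c - low_temp) * slope


for temp in (25, 40, 55, 70, 85):
    print(f"{temp}C -> {fan_speed_percent(temp):.0f}% fan speed")
```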

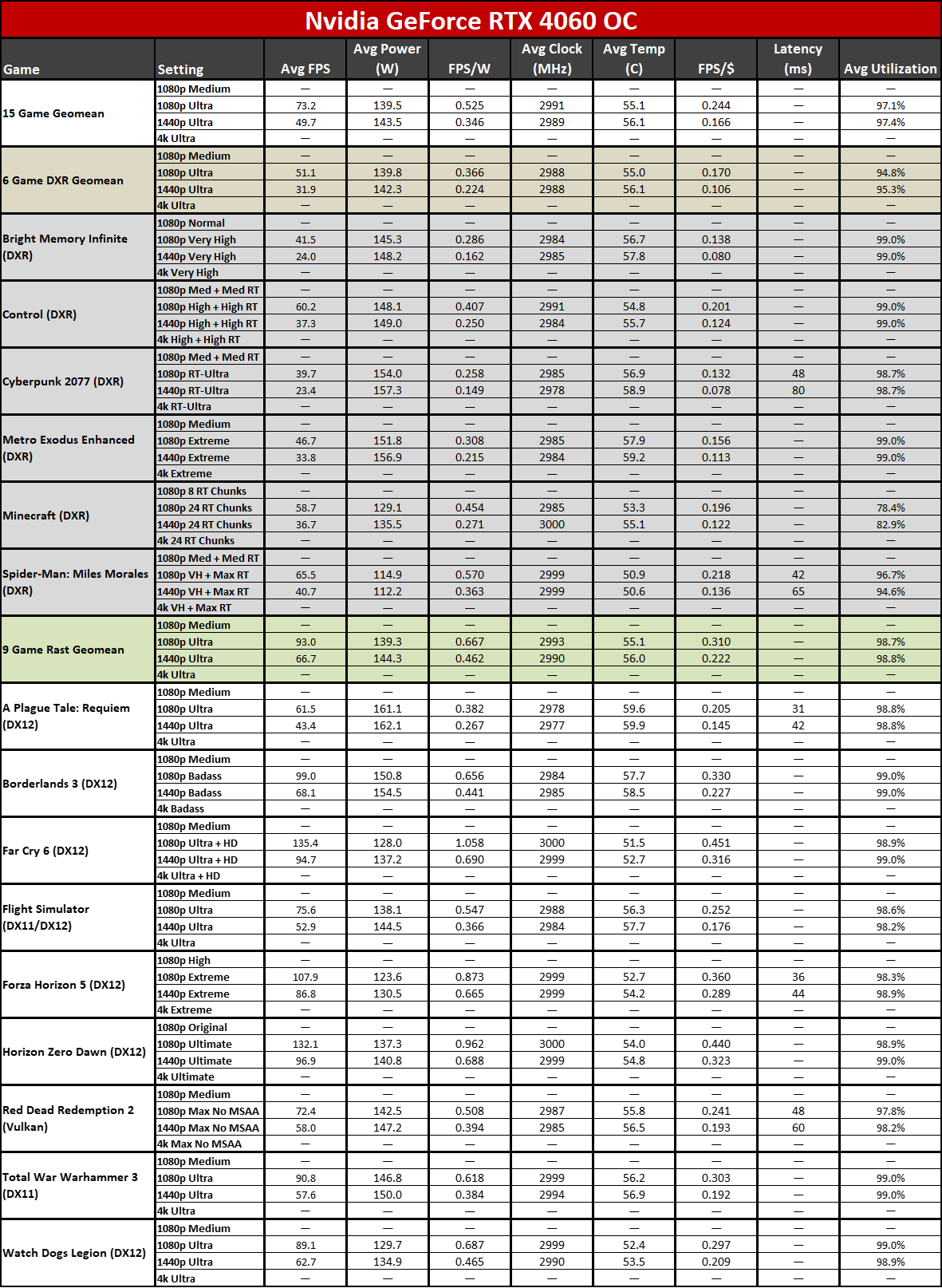

Next, we look for the maximum stable GPU core overclock. We were able to hit up to +225 MHz on the RTX 4060 with our initial testing, and backed off slightly to a +200 MHz overclock after additional tuning. Note that as usual, there's no way to increase the GPU voltage short of doing a voltage mod, which is often a limiting factor for RTX 40-series cards. We saw boost clocks very close to 3GHz with our overclocked testing.

The RTX 4060 comes with GDDR6 clocked at 17 Gbps, which isn't a "normal" speed — most chips are rated for 14, 16, 18, or 20 Gbps. That should give us a decent amount of headroom, and sure enough, we were able to hit a stable +1750 MHz (20.5 Gbps effective). Nvidia has error detect and retry for its memory, so even if a card appears stable, you may not get better performance with a maximum VRAM overclock. We backed off to +1500 MHz (20 Gbps) for our testing.

Our overclocks represent a theoretical 8.1% boost to GPU performance and a 17.6% increase to memory bandwidth. That means the best we should see from the OC is about 18% higher performance in fully bandwidth-bound cases, and many games will end up limited by GPU compute, yielding gains closer to 8–10 percent.
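
Here's how those theoretical percentages work out. The sketch uses the reference 2460 MHz boost clock as the baseline (the factory overclocked Asus card will boost a bit higher in practice) and assumes each 1 MHz of memory offset adds 2 Mbps to the effective data rate, which matches the offsets and speeds quoted above.

```python
BASE_BOOST_MHZ = 2460   # reference boost clock
BASE_MEM_GBPS = 17.0    # stock GDDR6 data rate
BUS_WIDTH_BITS = 128

core_offset_mhz = 200
mem_offset_mhz = 1500

core_gain = core_offset_mhz / BASE_BOOST_MHZ
oc_mem_gbps = BASE_MEM_GBPS + 2 * mem_offset_mhz / 1000  # offset counts double
mem_gain = oc_mem_gbps / BASE_MEM_GBPS - 1
oc_bandwidth_gbs = BUS_WIDTH_BITS * oc_mem_gbps / 8

print(f"Core clock gain:   {core_gain * 100:.1f}%")
print(f"Memory speed:      {oc_mem_gbps:.1f} Gbps")
print(f"Bandwidth gain:    {mem_gain * 100:.1f}%")
print(f"Memory bandwidth:  {oc_bandwidth_gbs:.0f} GB/s")
```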

The above image shows the results of our overclocked settings, as well as a limited view of power, clocks, and temperatures (via Afterburner's line charts). The AD107 GPU ran quite cool, as did the GDDR6 memory. You can also see that GPU clocks were hitting around 3000 MHz while running games.

As is always the case with overclocking, there's trial and error. Our results aren't super aggressive, but they still may exceed what you can achieve with a different card. Our focus is first on stability (any crash means we drop clocks and try again until we don't get any problems), with maximum performance being a secondary concern.

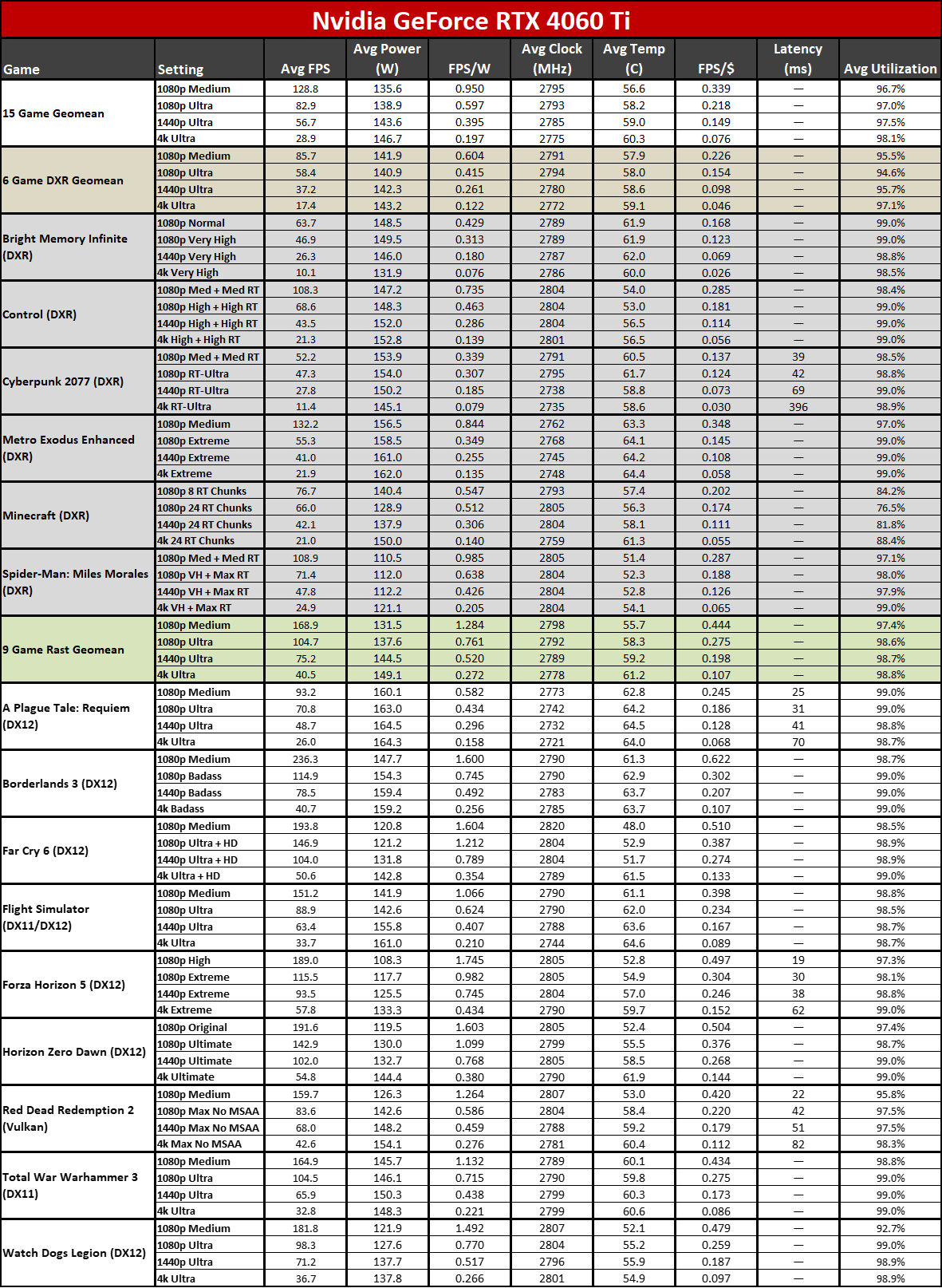

We'll include the manually overclocked results at 1080p ultra and 1440p ultra in the charts. Overall, performance improved by around 10% compared to factory stock settings. Needless to say, no amount of overclocking is likely to close the gap between the RTX 4060 and the 4060 Ti, as the latter has more L2 cache and about 42% more GPU cores (4,352 versus 3,072).

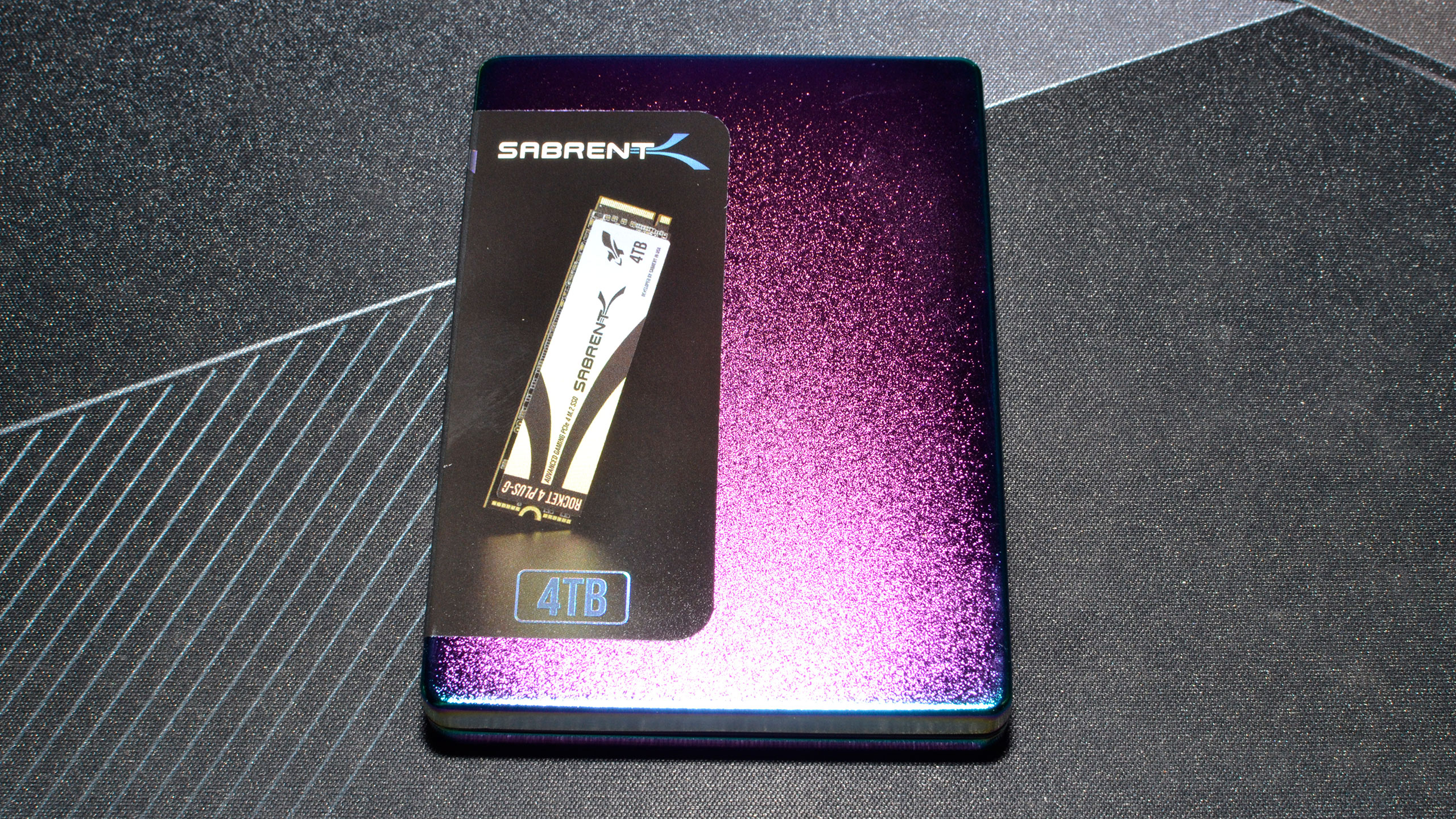

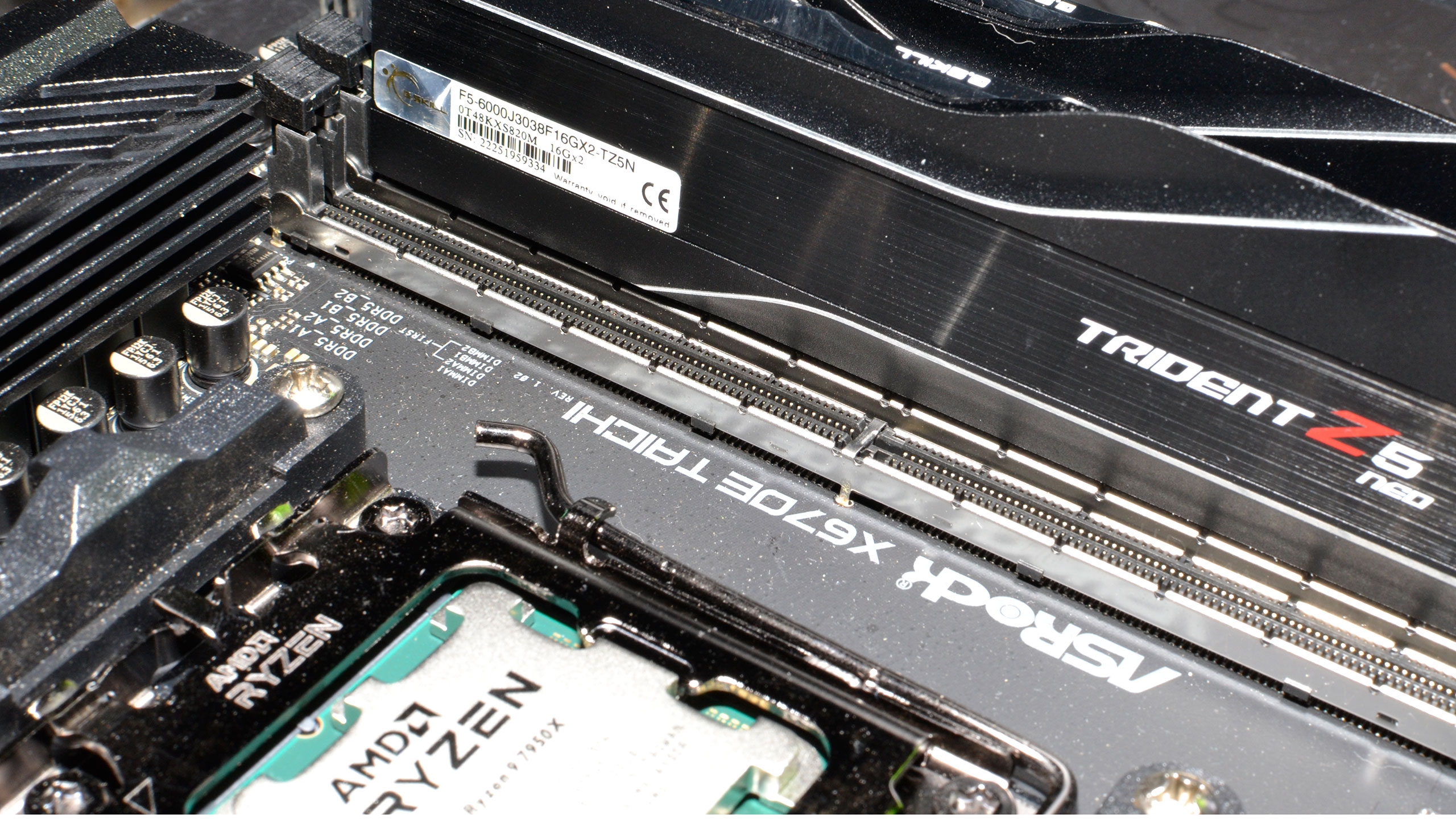

Nvidia RTX 4060 Test Setup

We updated our GPU test PC at the end of last year with a Core i9-13900K, though we continue to also test reference GPUs on our 2022 system that includes a Core i9-12900K for our GPU benchmarks hierarchy. (We'll be updating that later today, once the embargo has passed.) Our RTX 4060 review will use the 13900K results for gaming tests, which ensures, as much as possible, that we're not CPU limited. We also use the 2022 PC for AI tests and the professional workloads.

TOM'S HARDWARE INTEL 13TH GEN PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

TOM'S HARDWARE 2022 PC

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Corsair H150i Elite Capellix

Cooler Master HAF500

Windows 11 Pro 64-bit

OTHER GRAPHICS CARDS

AMD RX 7600

AMD RX 6800

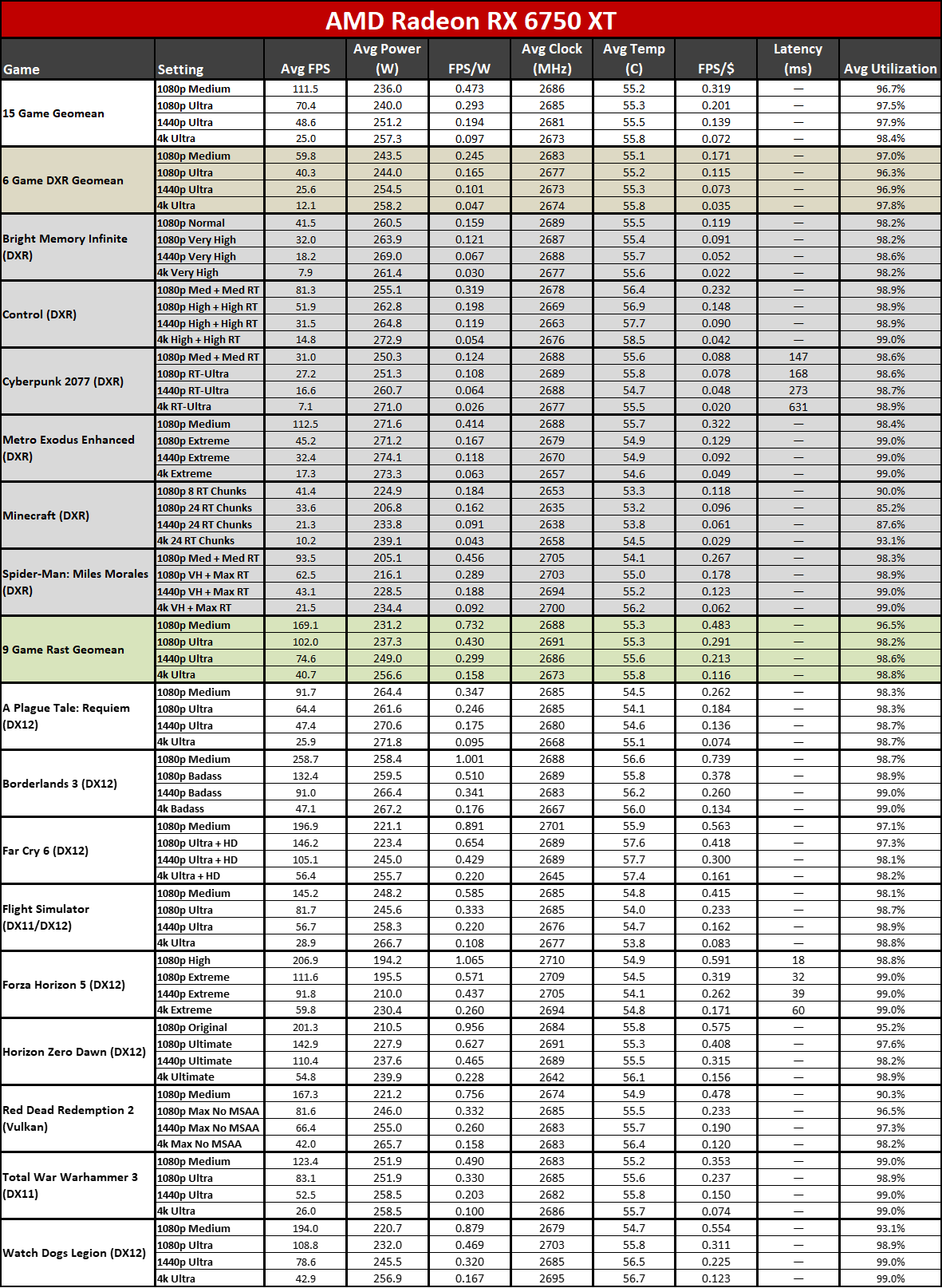

AMD RX 6750 XT

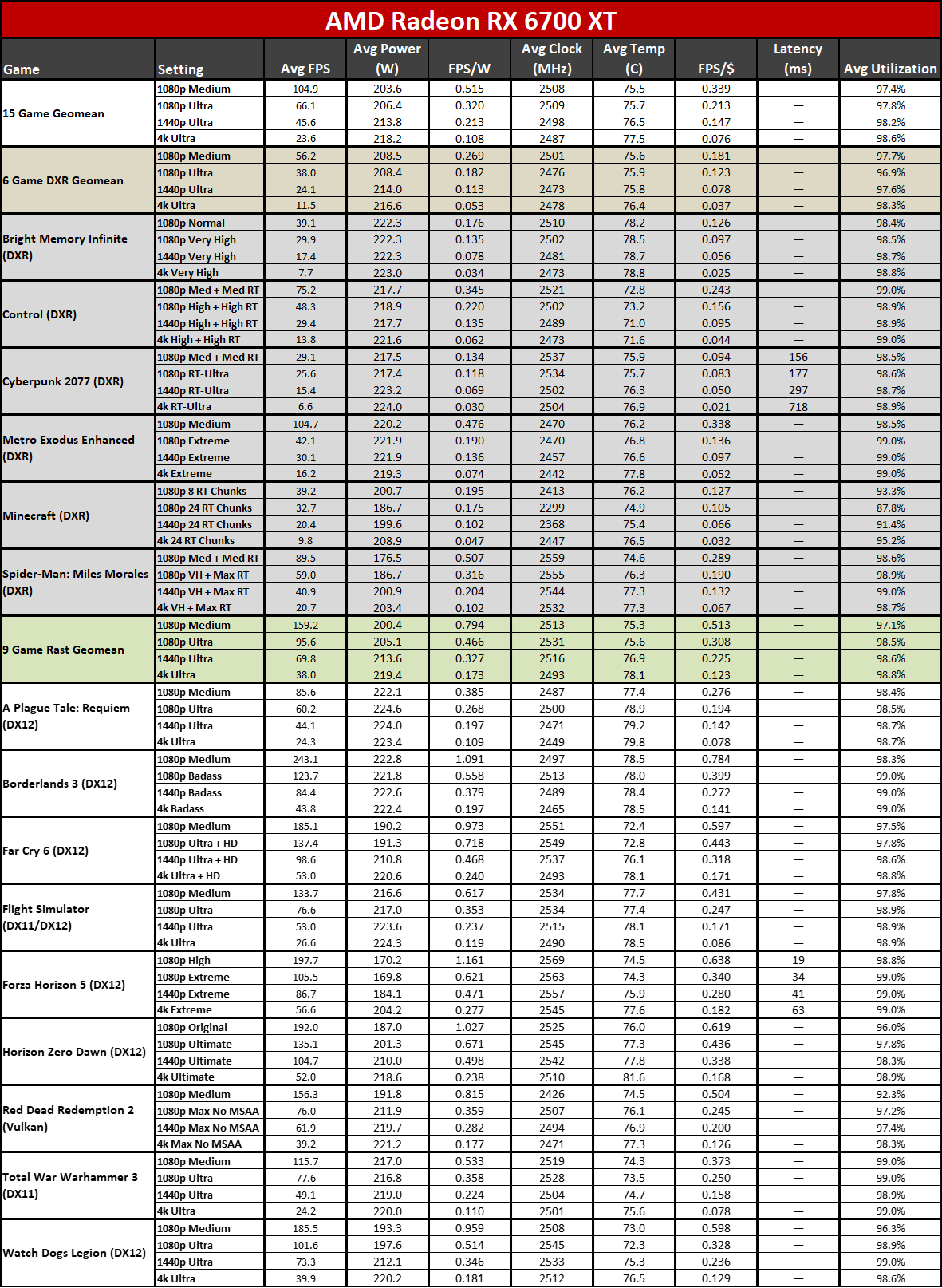

AMD RX 6700 XT

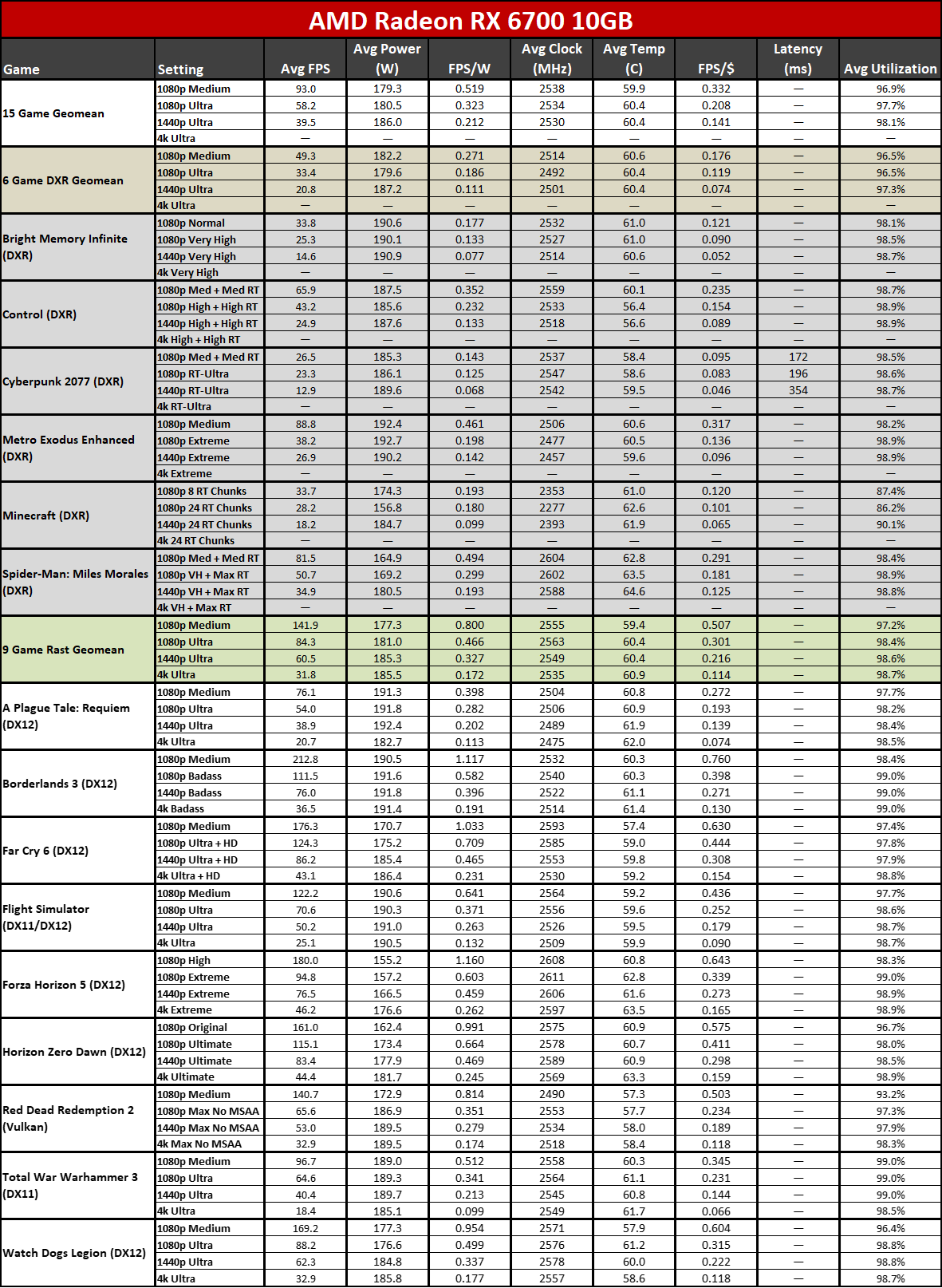

AMD RX 6700 10GB

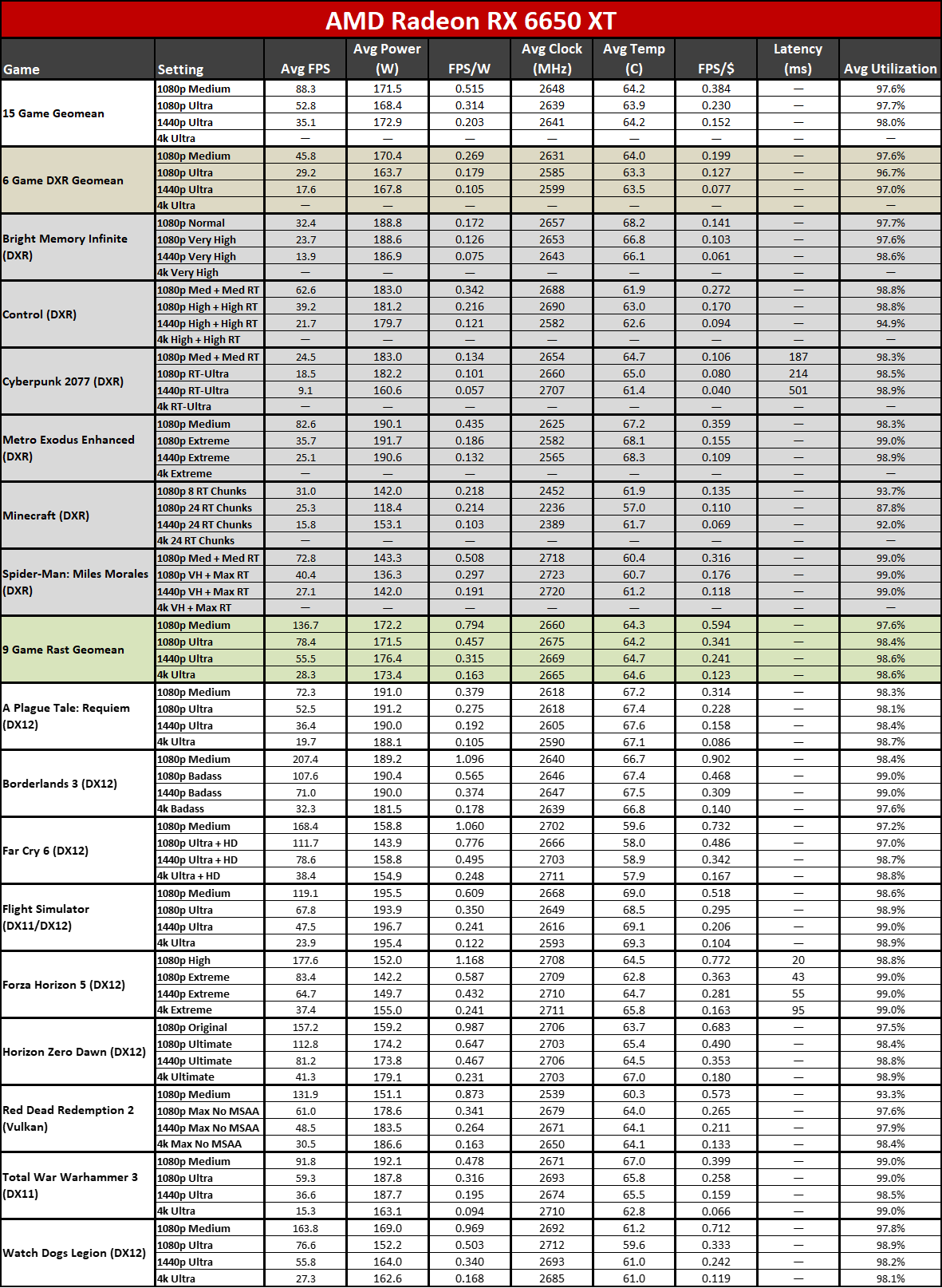

AMD RX 6650 XT

AMD RX 6600 XT

AMD RX 6600

Intel Arc A770 16GB

Intel Arc A750

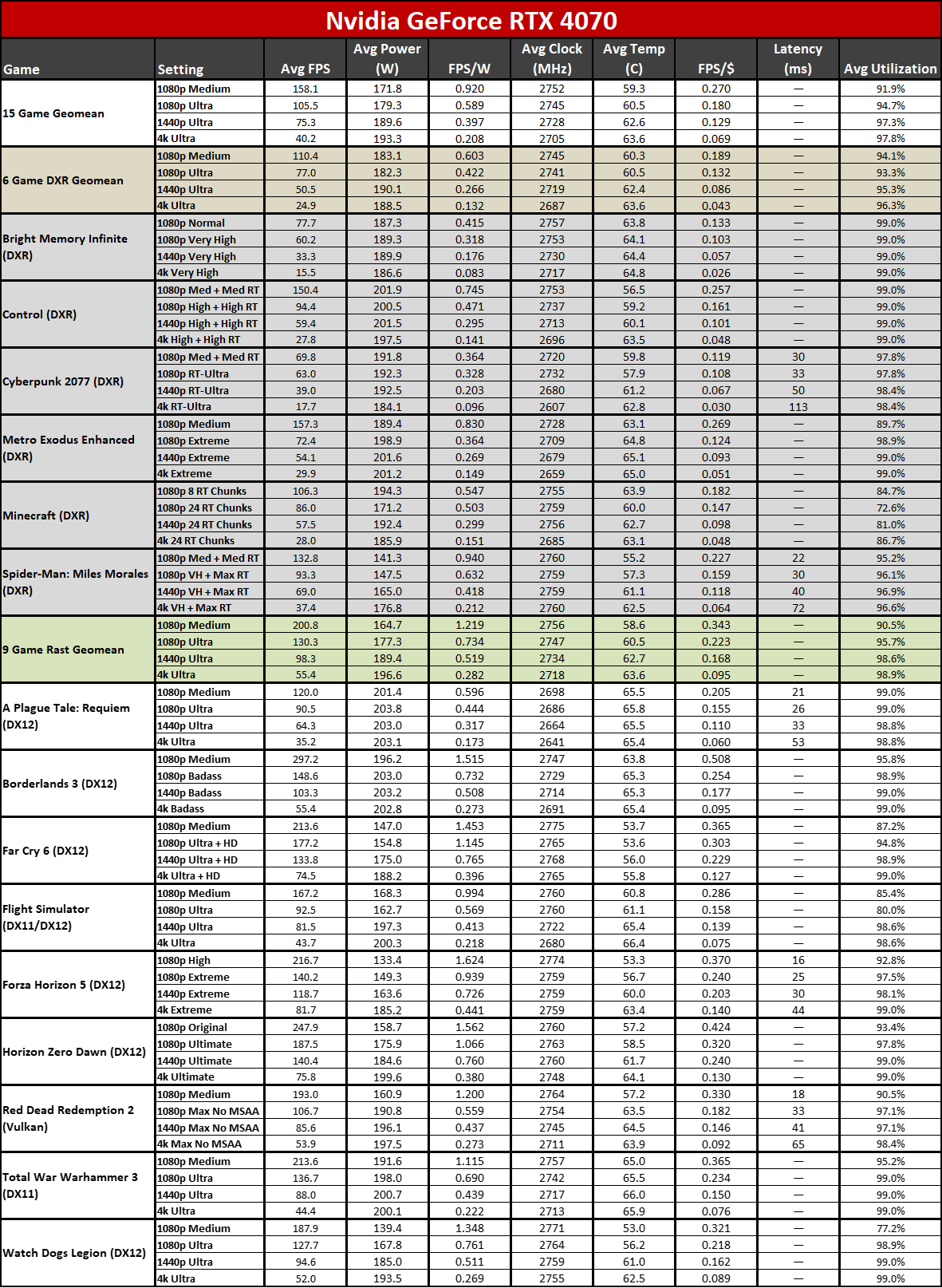

Nvidia RTX 4070

Nvidia RTX 4060 Ti

Nvidia RTX 3070

Nvidia RTX 3060 Ti

Nvidia RTX 3060

Nvidia RTX 3050

Nvidia RTX 2060

Multiple games have been updated over the past few months. We retested most of the cards for the RTX 4070, 4060 Ti, and RX 7600 reviews, and we've rechecked some cards to verify nothing significant has changed in the intervening month.

For this review, we're running Nvidia preview 536.20 drivers for the RTX 4060, while other Nvidia cards were tested with 531.41–531.93 drivers. For AMD, the GPUs used 23.2.1–23.5.2 drivers. Intel's Arc A770 cards were (re)tested with 4369–4514 drivers (the A770 16GB used the latest 4514 drivers and was just retested, if you're wondering).

Our current test suite consists of 15 games. Of these, nine support DirectX Raytracing (DXR), but we only enable the DXR features in six of the games. At the time of testing, 12 of the games support DLSS 2, five support DLSS 3, and five support FSR 2. We'll cover DLSS performance in a separate section on upscaling.

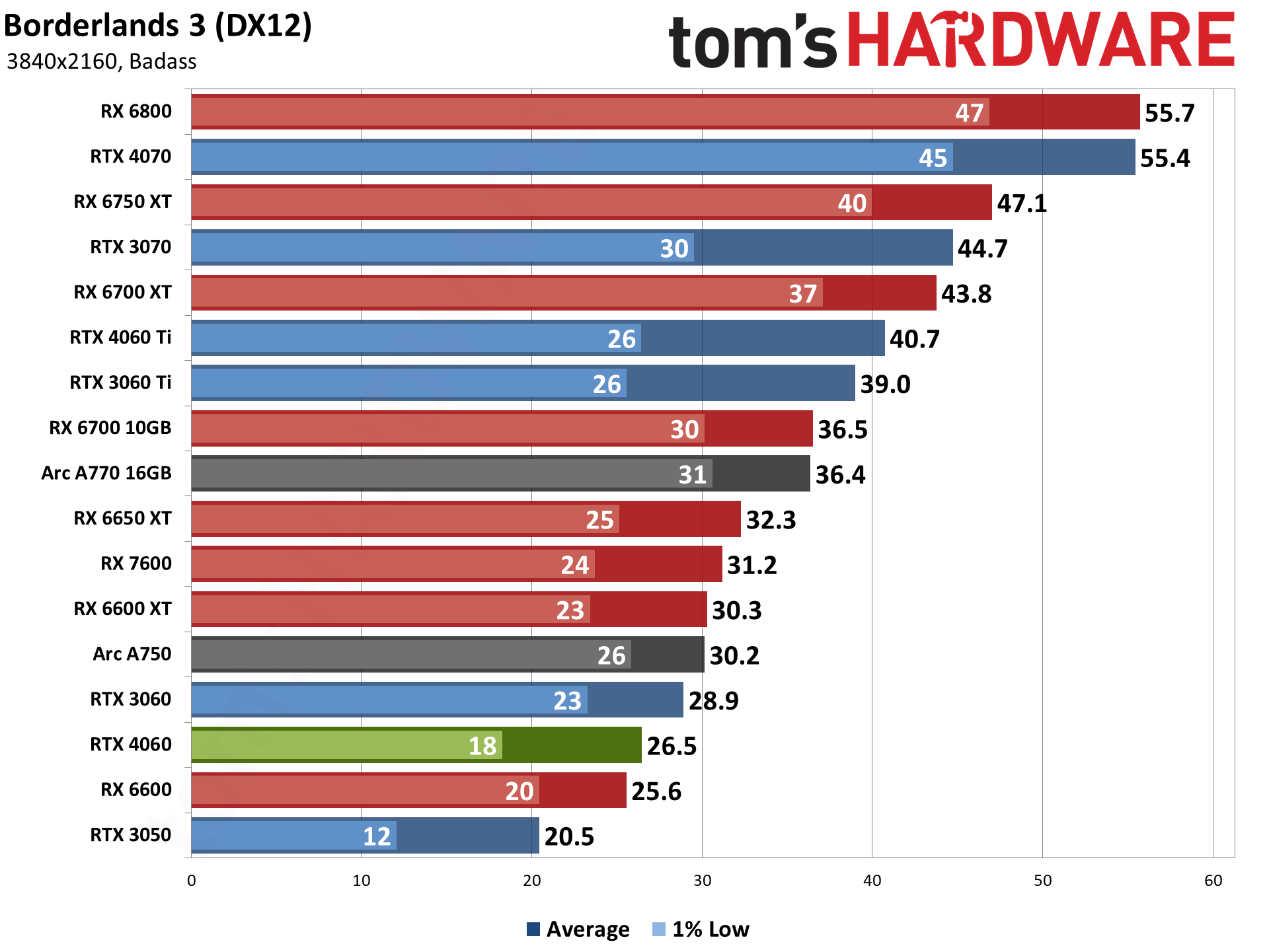

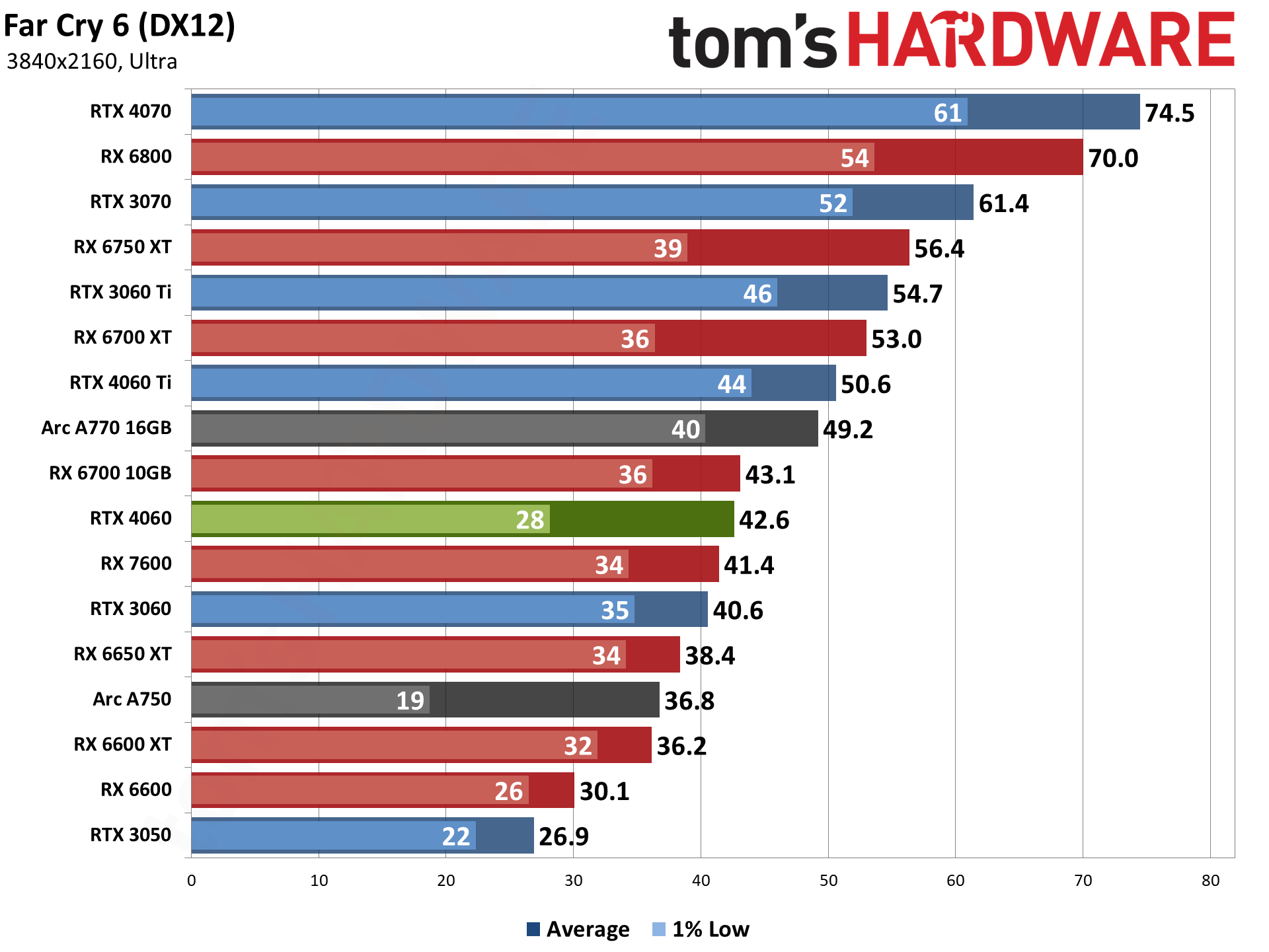

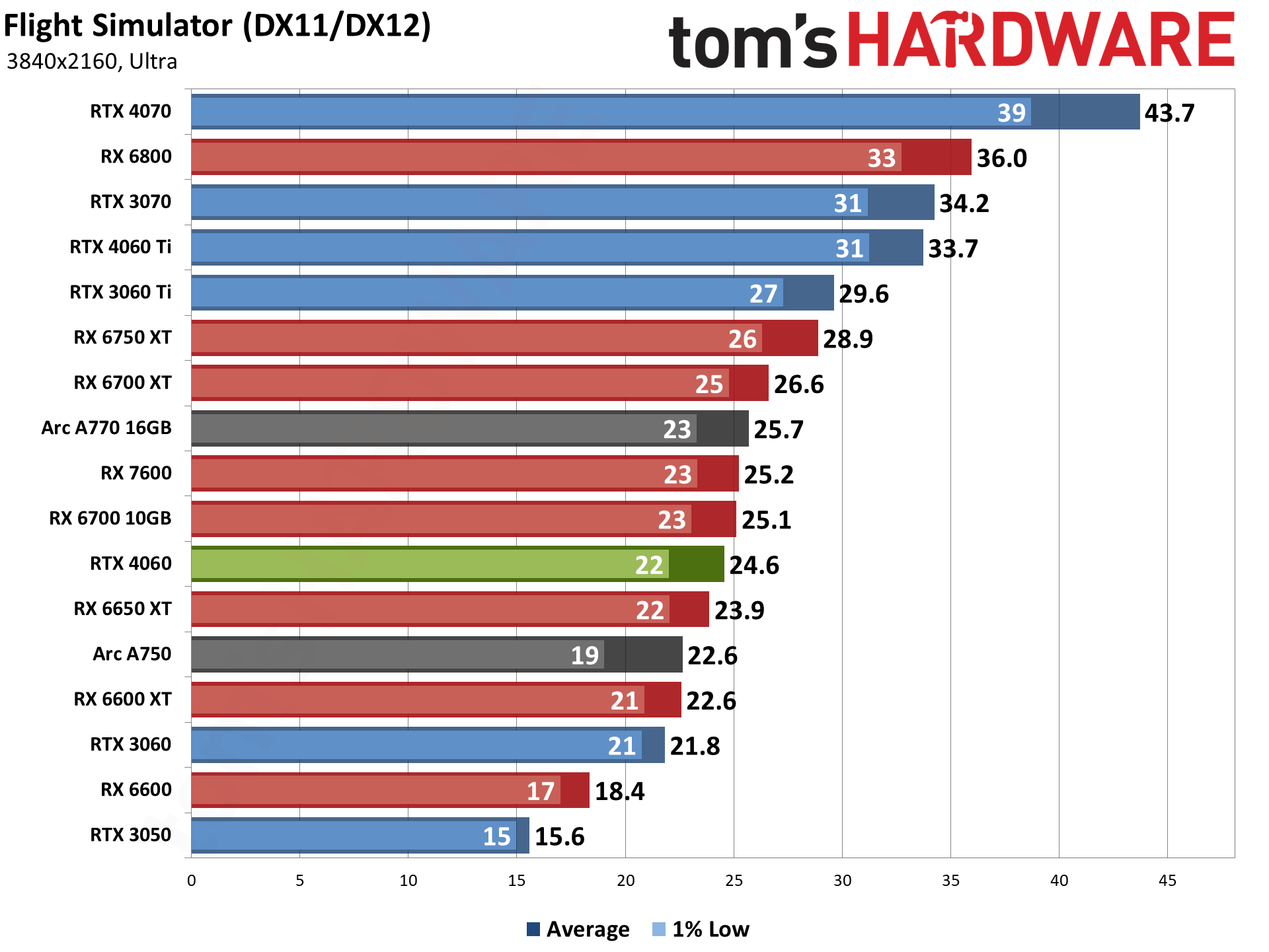

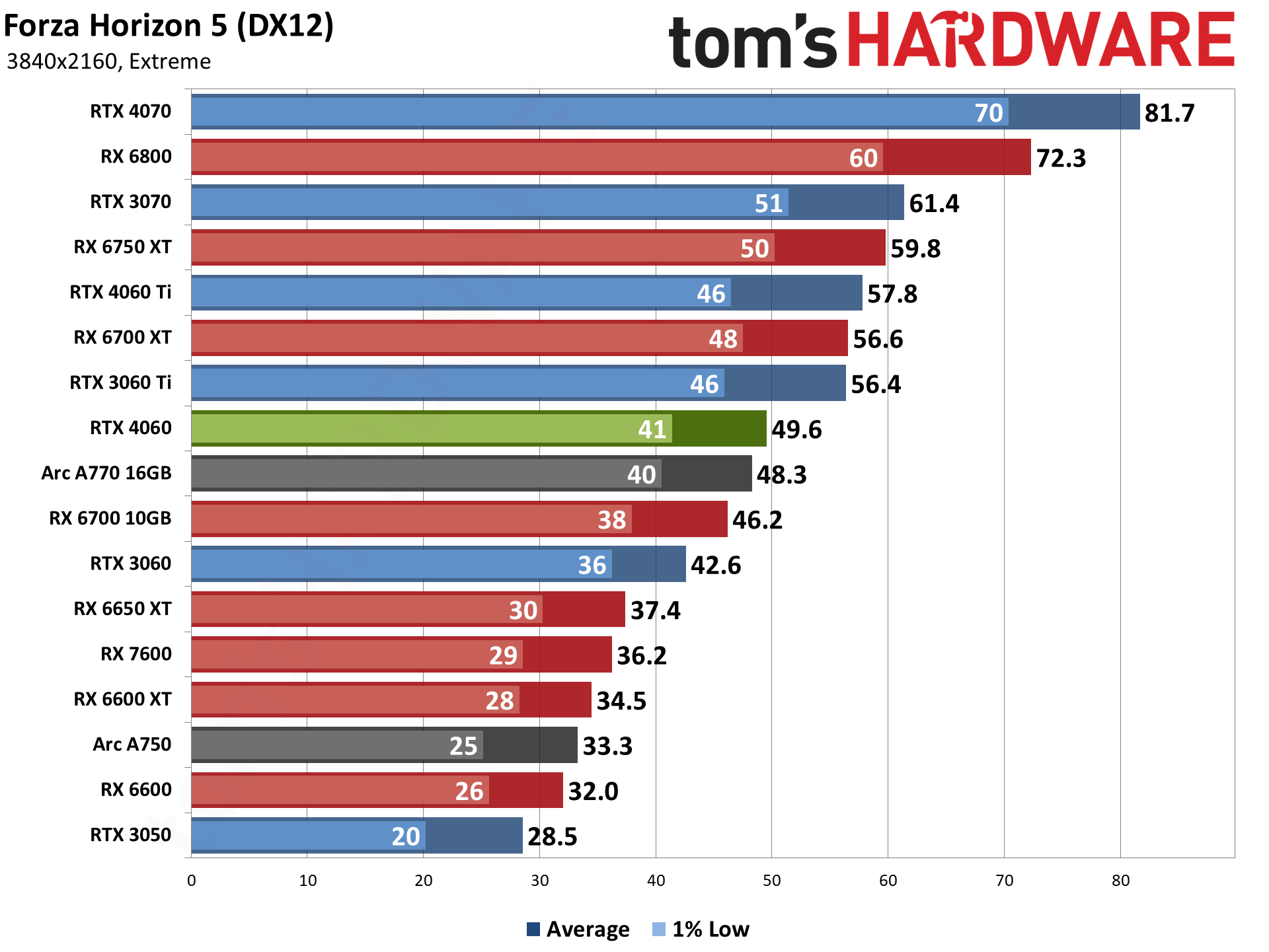

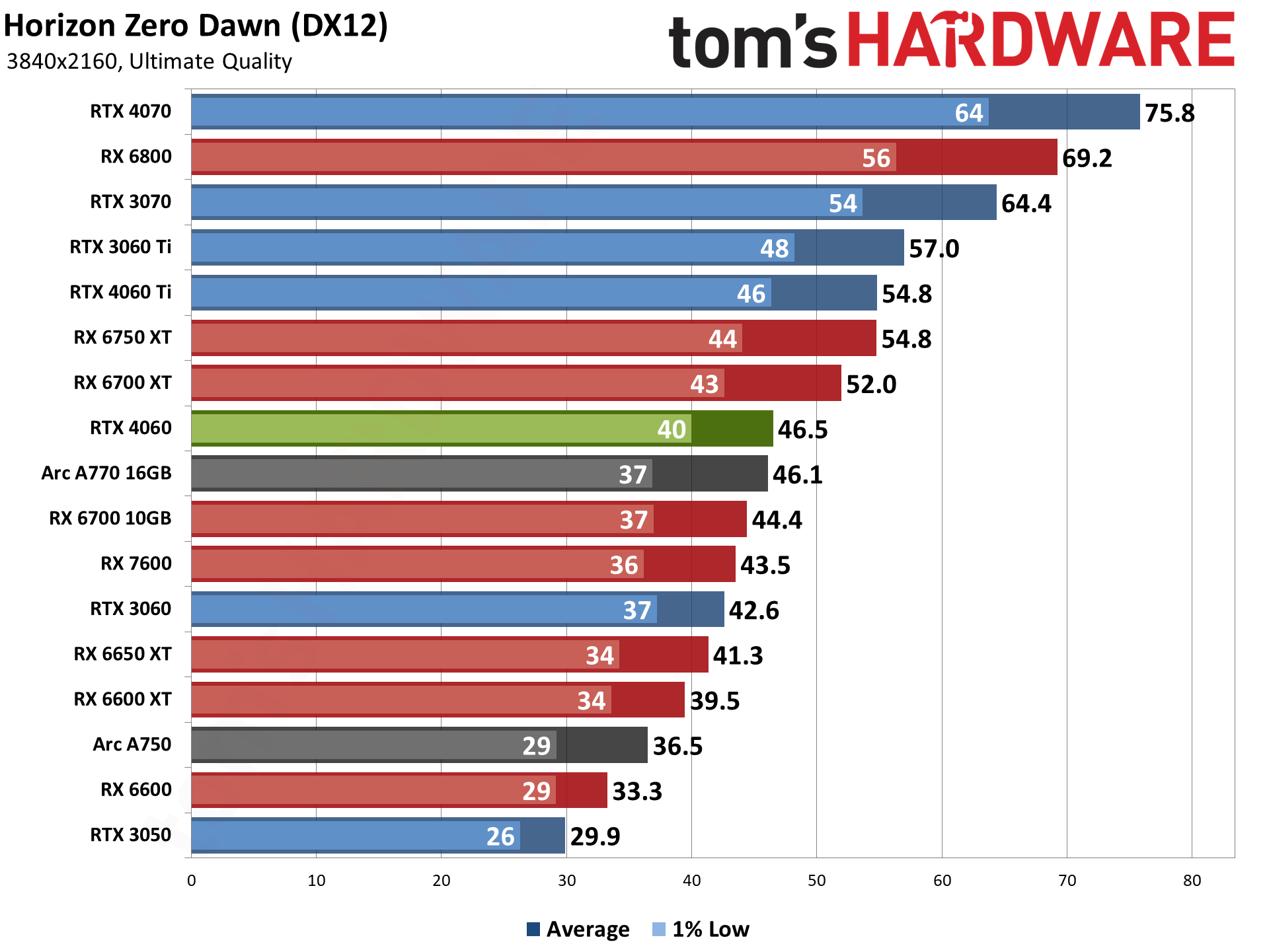

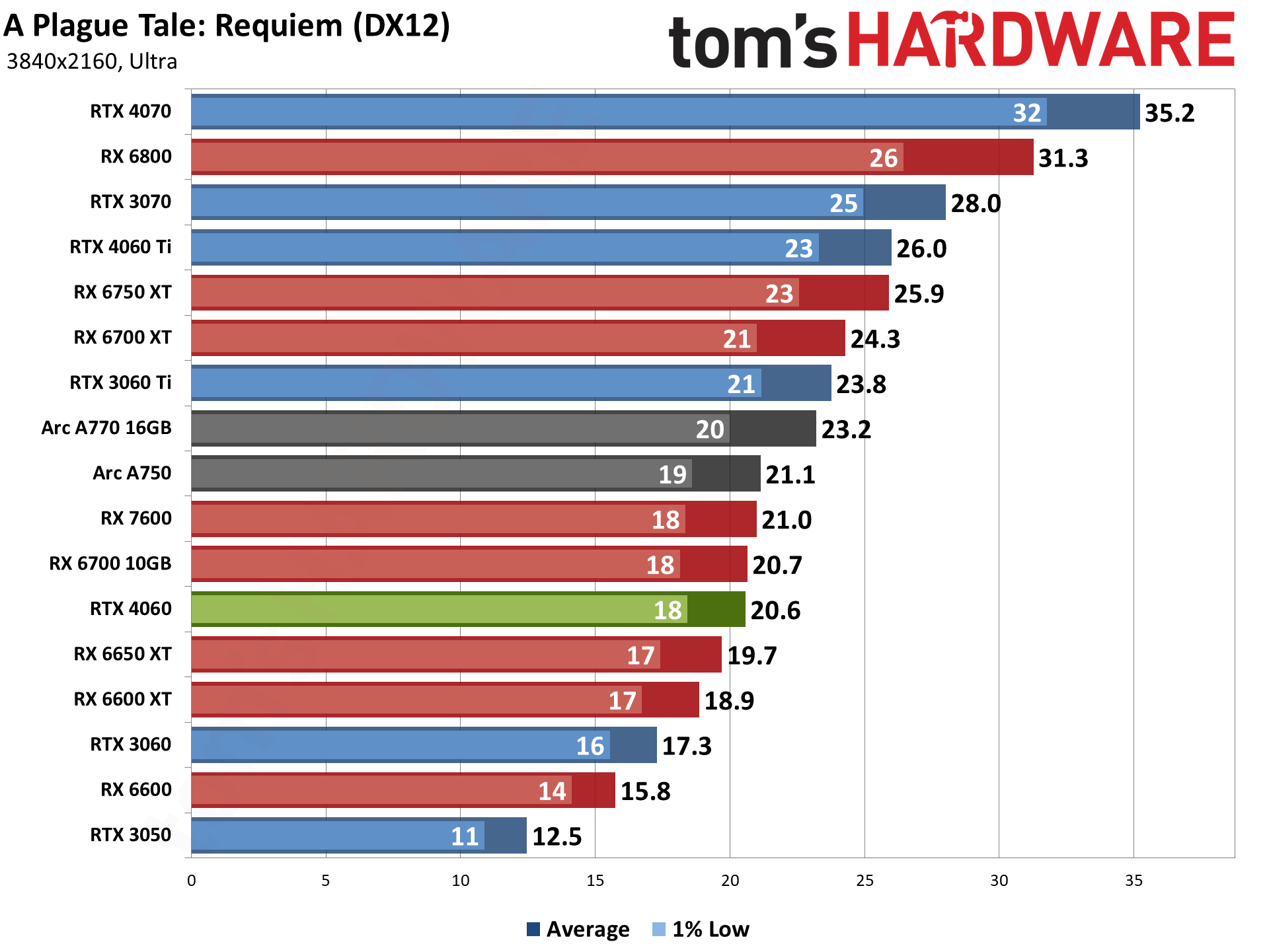

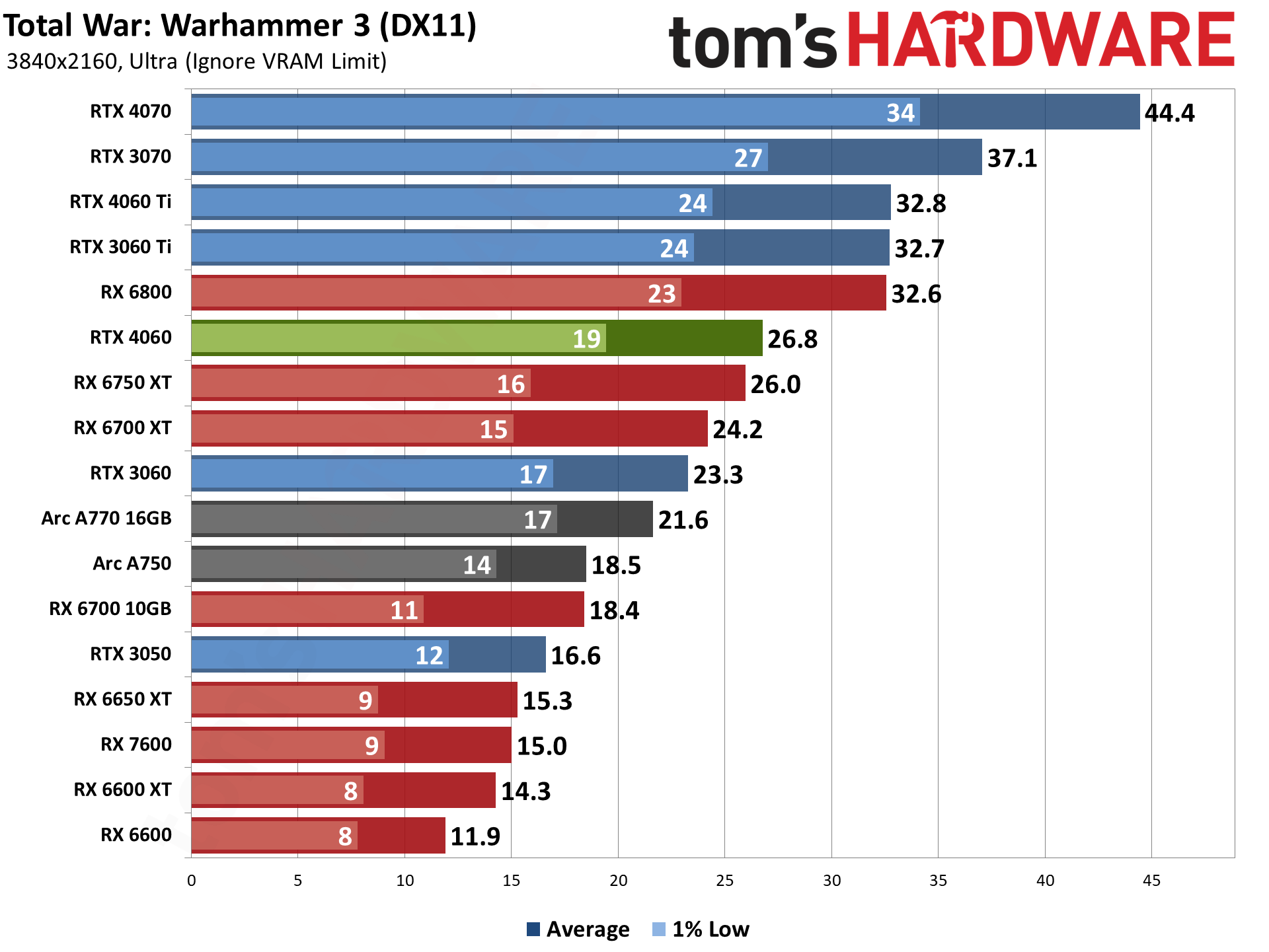

We tested the RTX 4060 at 1080p (medium and ultra), 1440p ultra, and 4K ultra — ultra being the highest supported preset if there is one, and in some cases maxing out all the other settings for good measure (except for MSAA or super sampling). The 4K results are mostly just for those who are interested in seeing how performance can collapse at that resolution with maxed out settings, because the 4060 definitely doesn't target 4K gamers.

Our PC is hooked up to a Samsung Odyssey Neo G8 32, one of the best gaming monitors around, allowing us to fully experience some of the higher frame rates that might be available. G-Sync and FreeSync were enabled, as appropriate. As you can imagine, getting anywhere close to the 240 Hz limit of the monitor proved difficult, as we don't have any esports games in our test suite.

When we assembled the new test PC, we installed all of the then-latest Windows 11 updates. We're running Windows 11 22H2, but we've used InControl to lock our test PC to that major release for the foreseeable future (critical security updates still get installed each month).

Our new test PC includes Nvidia's PCAT v2 (Power Capture and Analysis Tool) hardware, which means we can grab real power use, GPU clocks, and more during all of our gaming benchmarks. We'll cover those results in our page on power use.

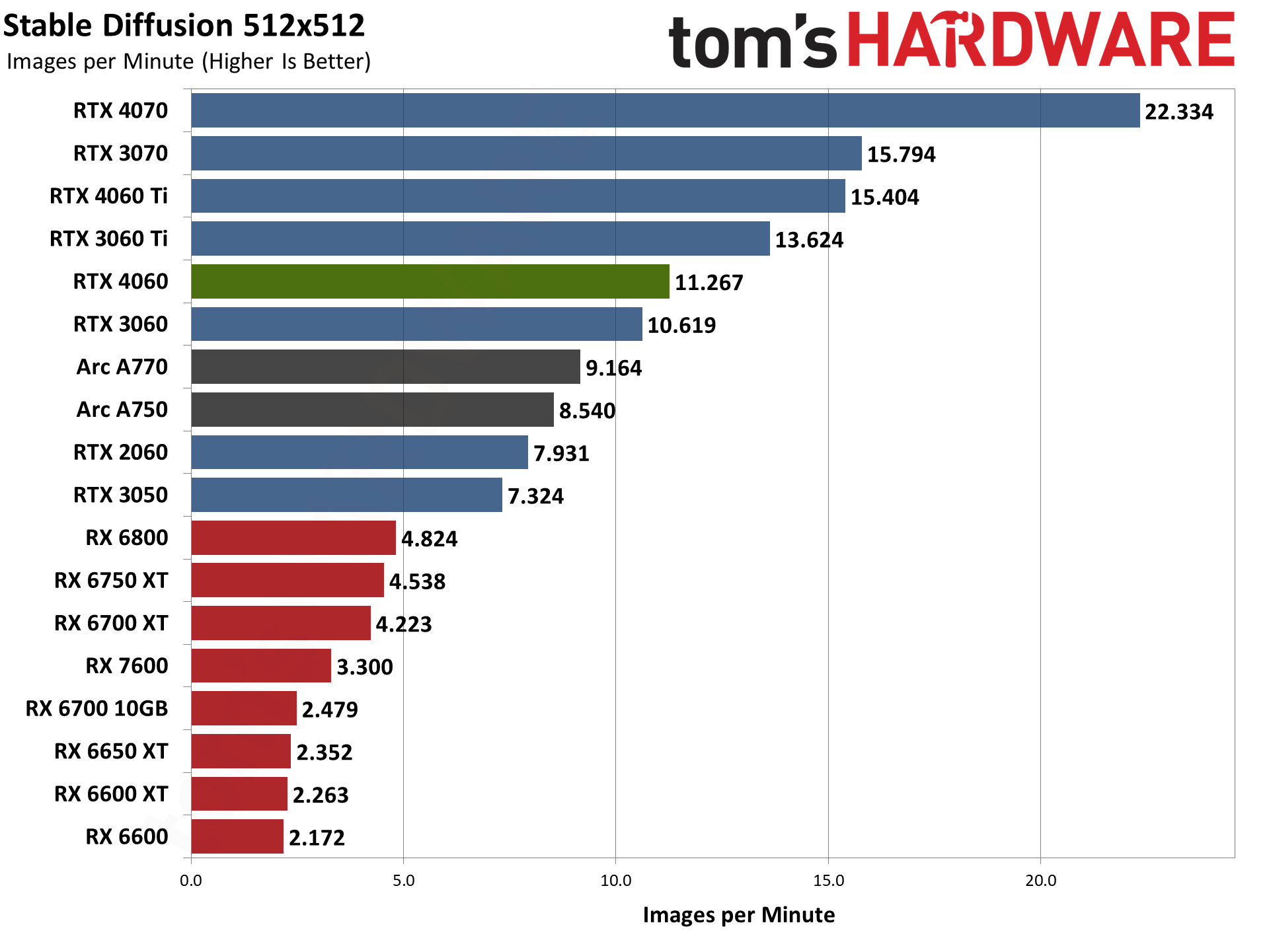

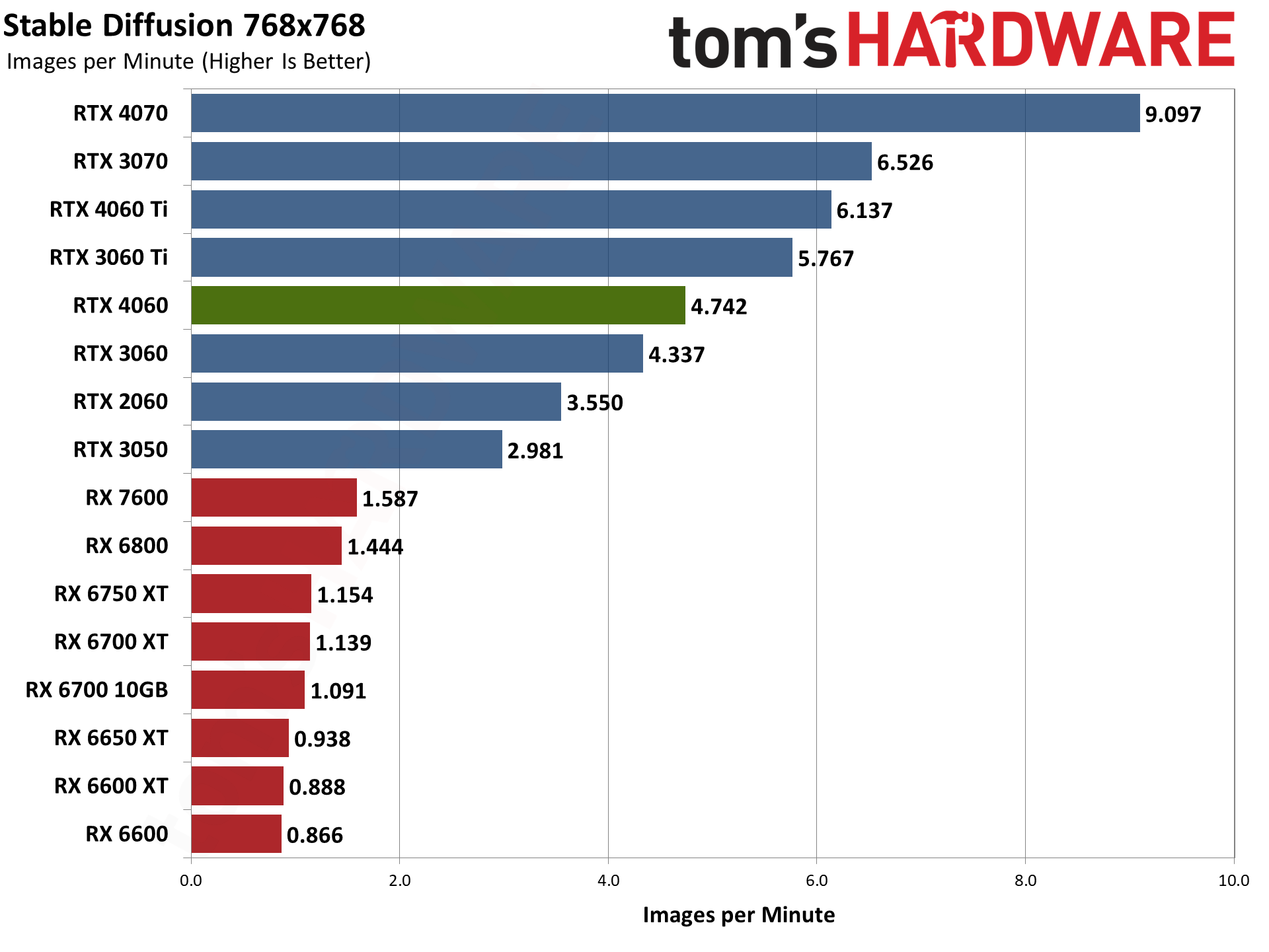

Finally, because GPUs aren't purely for gaming these days, we've run some professional application tests, and we also ran some Stable Diffusion benchmarks to see how AI workloads scale on the various GPUs.

The Nvidia RTX 4060 starts at $299, targeting "mainstream gamers" who don't tend to upgrade every generation of GPUs and may not have the latest and greatest hardware. Nvidia and AMD have both talked about this market, highlighting how many people are still using cards like the GTX 1060 and RTX 2060, and noting that the Steam Hardware Survey indicates 64% of surveyed PCs still run a 1080p resolution (though a lot of those are probably laptops as well, which tend to top out at 1080p outside of the more expensive offerings).

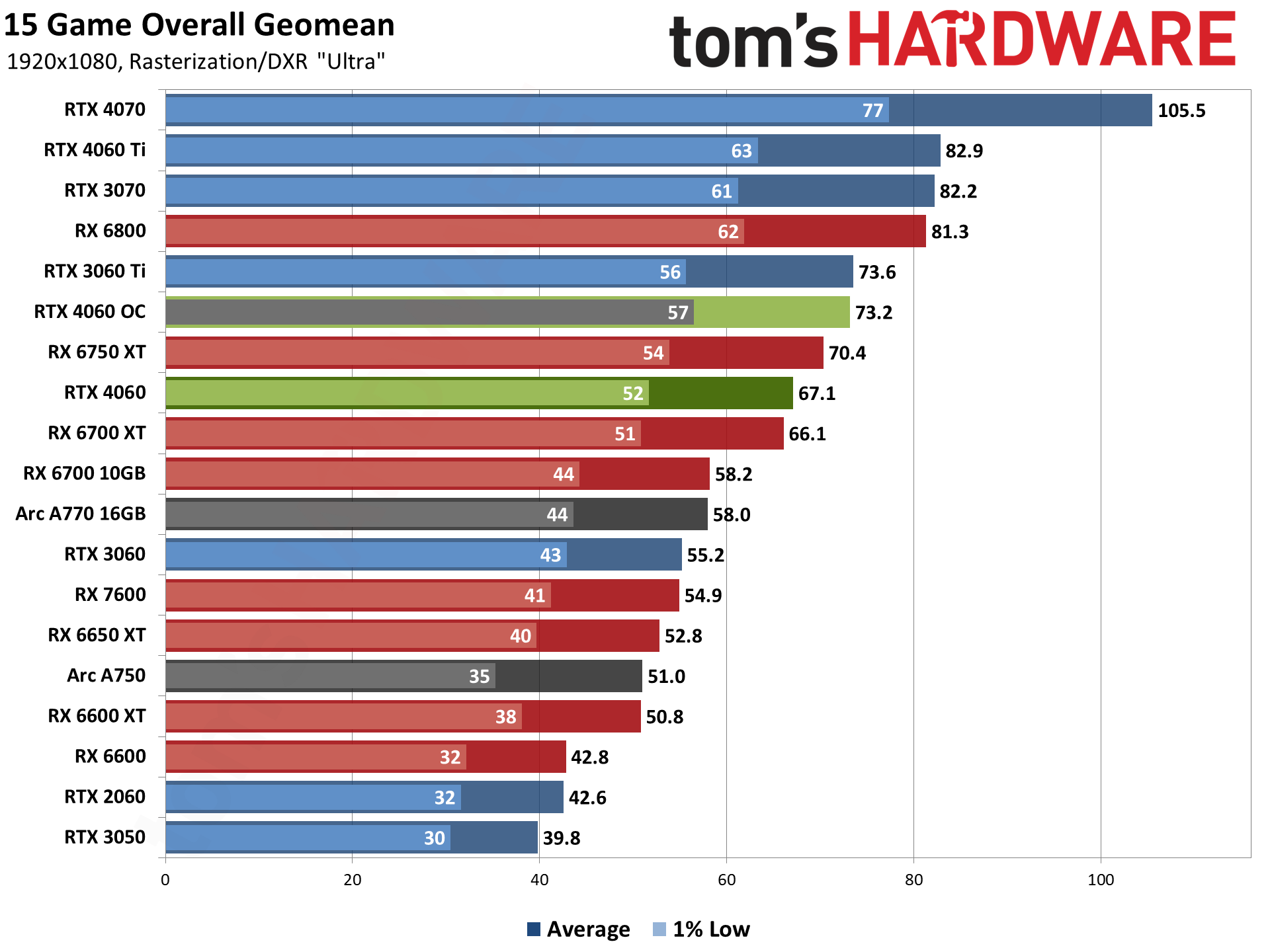

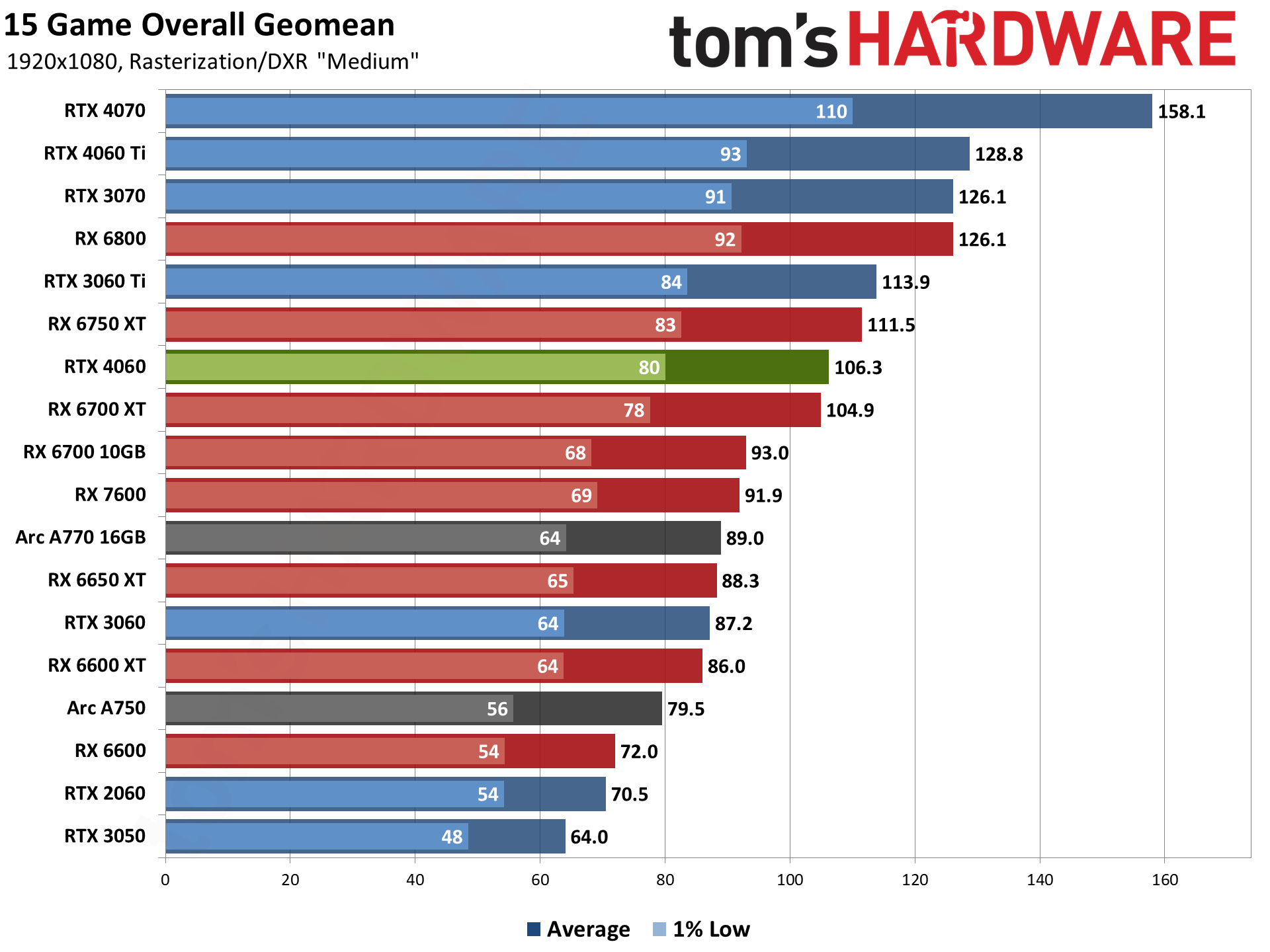

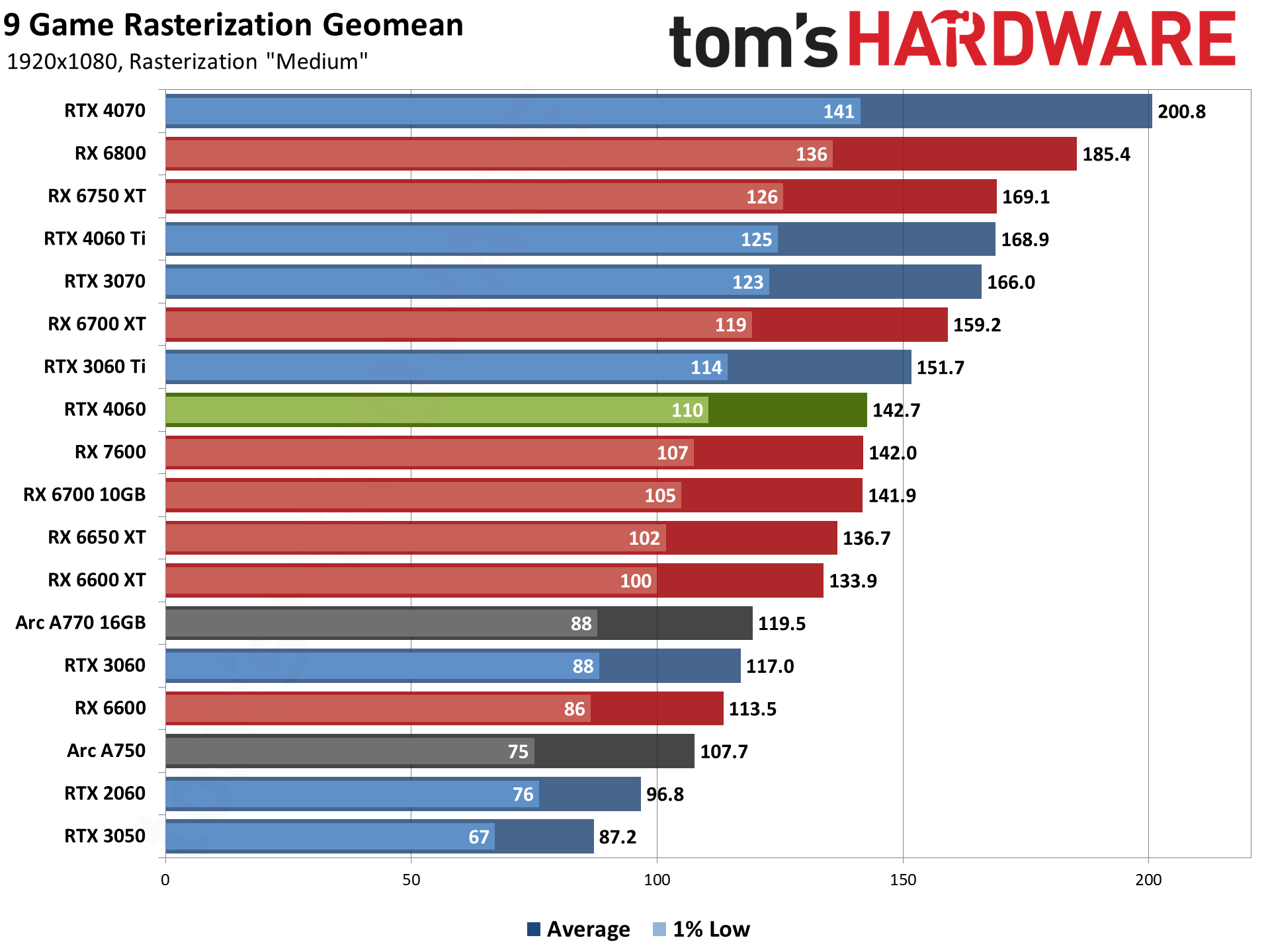

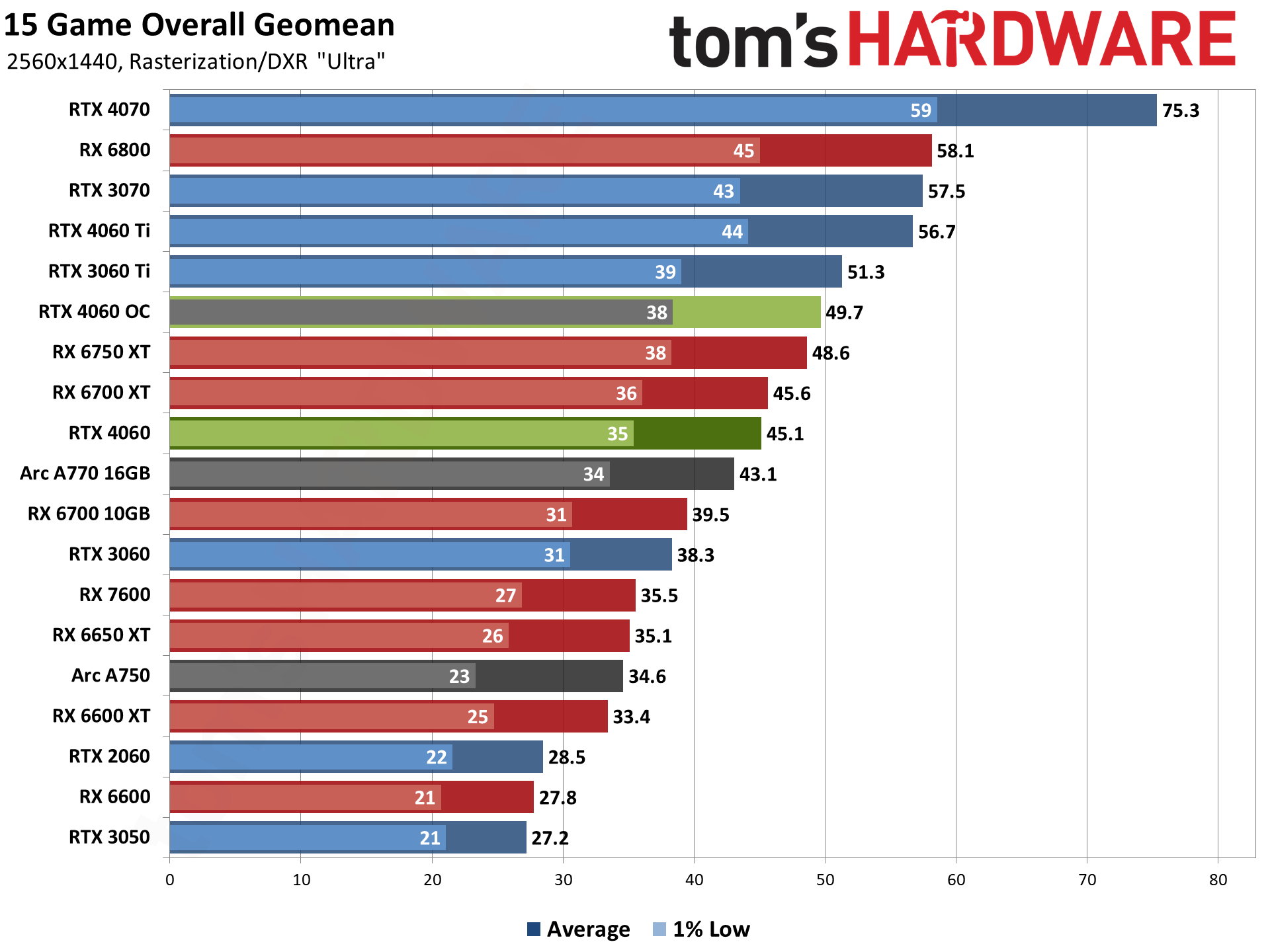

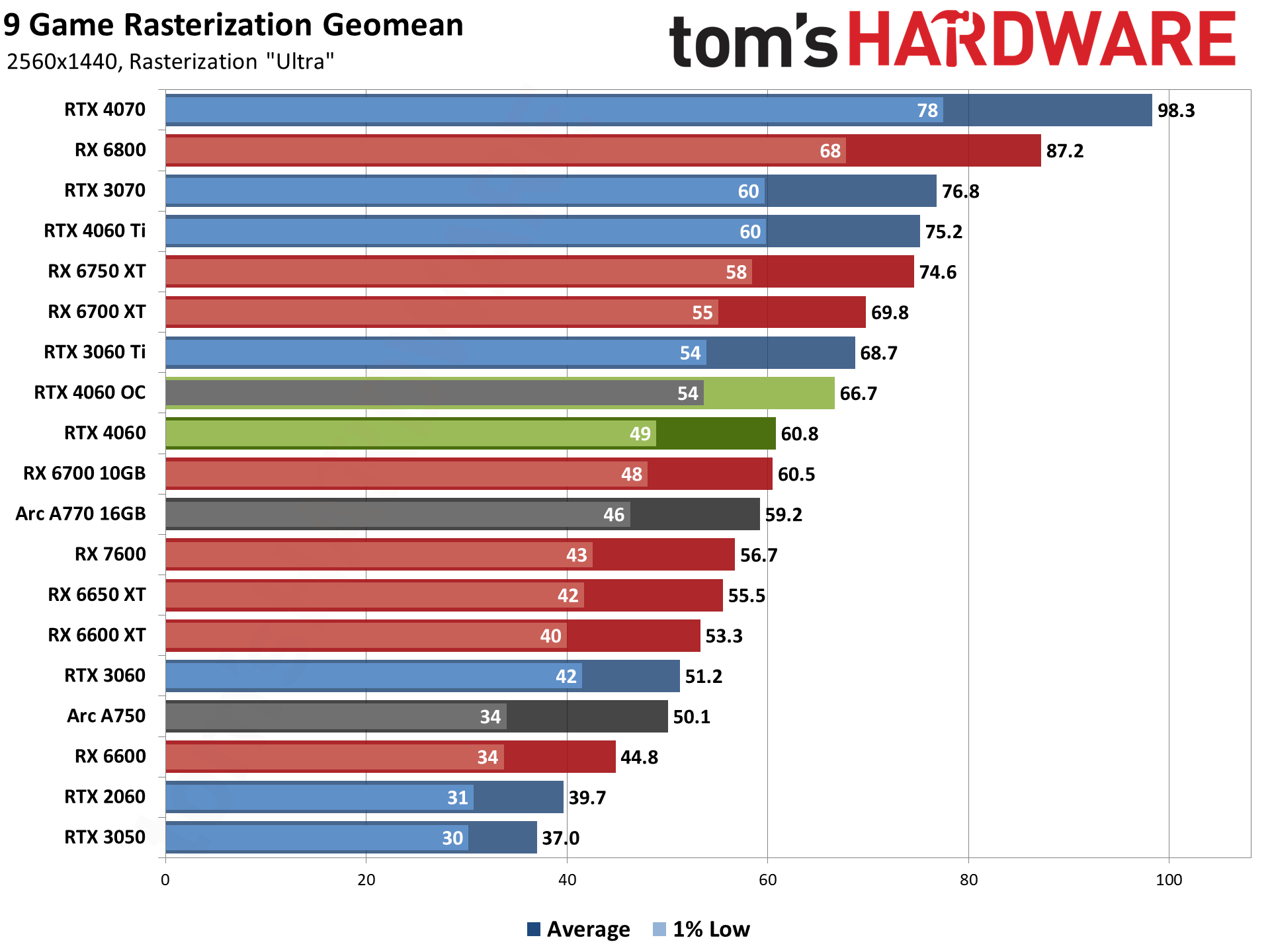

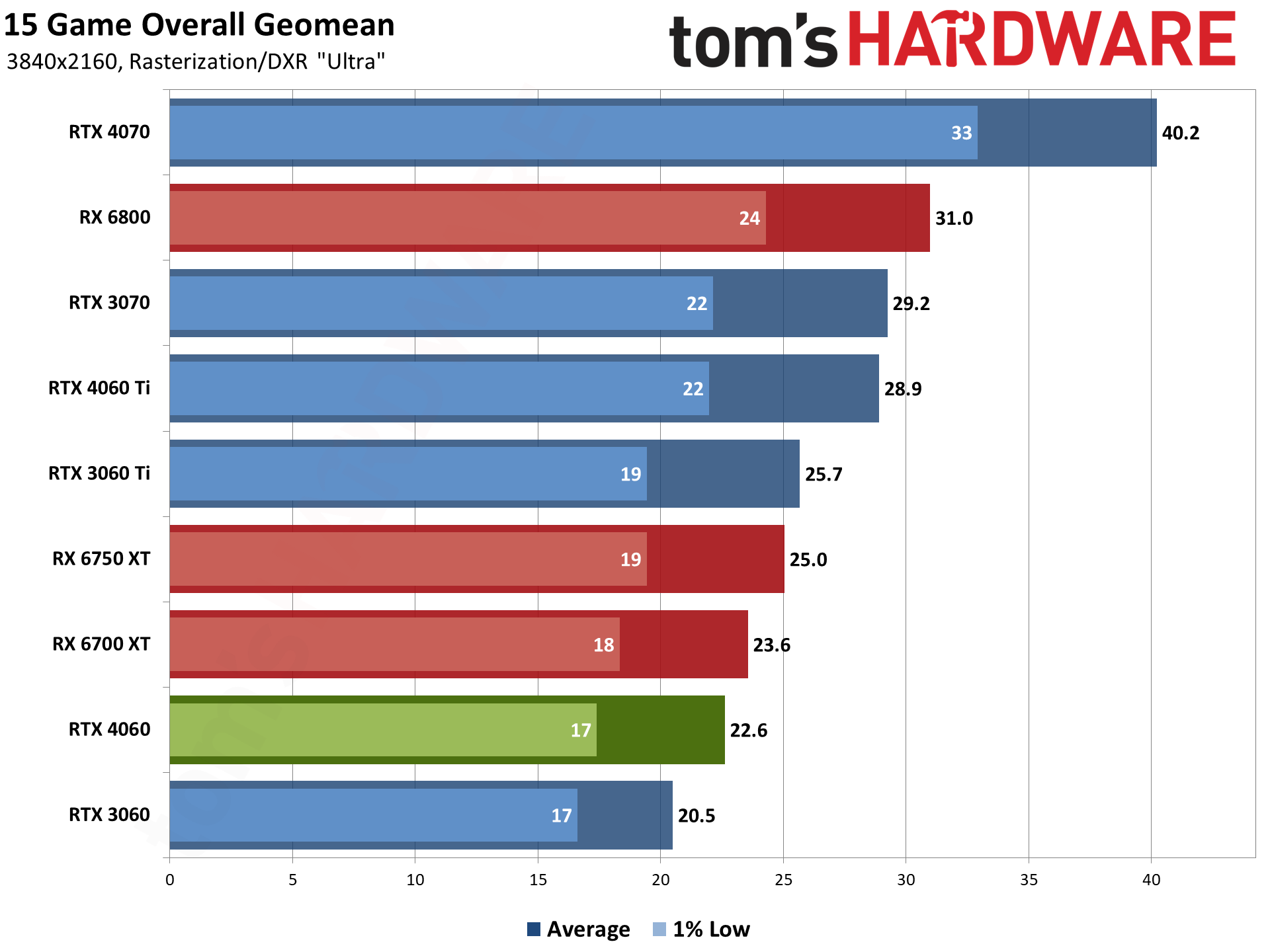

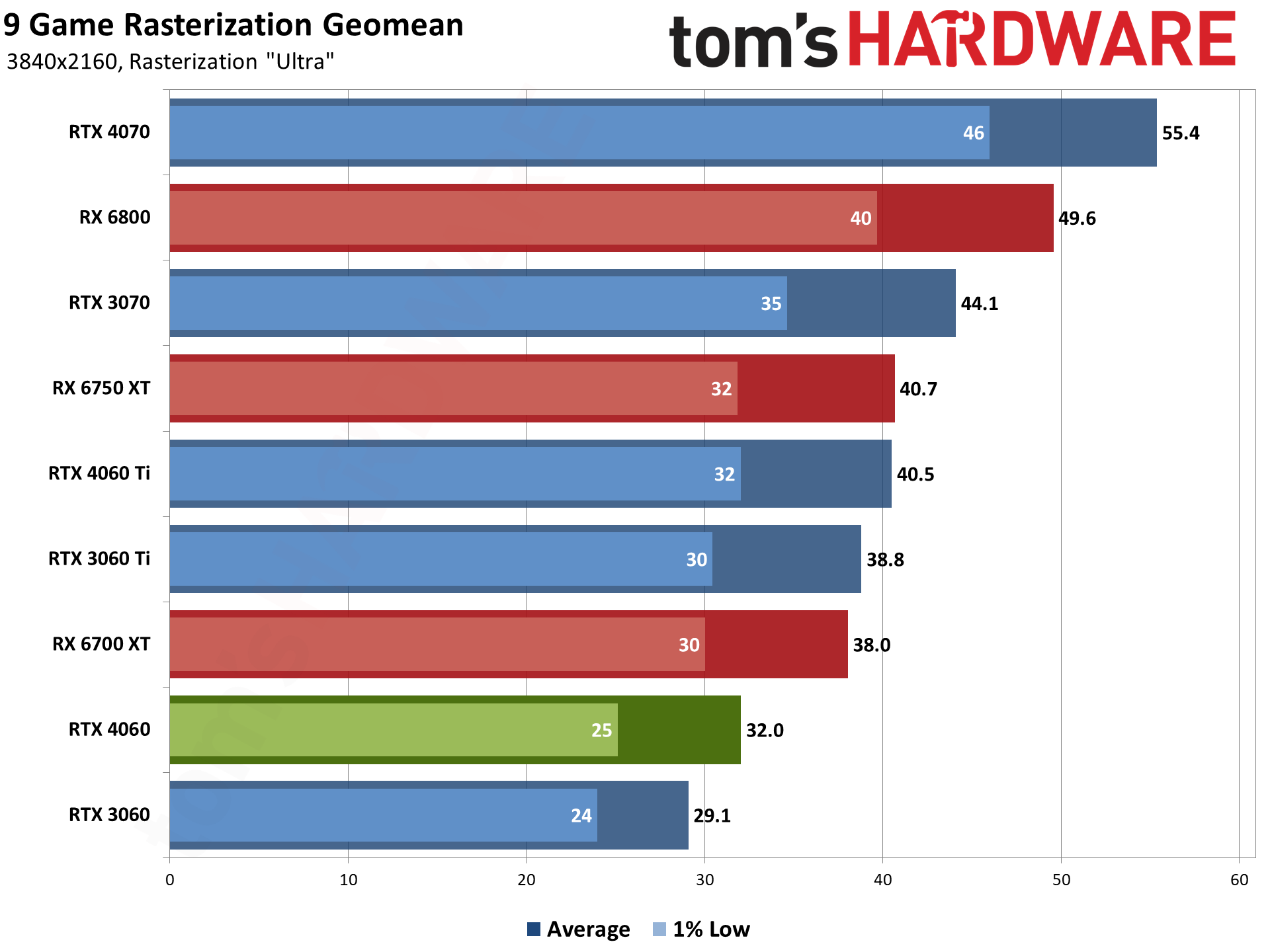

Our new test regimen gives us a global view of performance using the geometric mean of all 15 games, including both the ray tracing and rasterization test suites. Then we've got separate charts for only the rasterization and ray tracing suites, plus charts for the individual games. If you don't like the "overall performance" chart, the other two are the same view that we've previously presented.
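
If you're wondering why we use a geometric mean rather than a simple average, the short version is that it keeps one very high frame rate from dominating the overall score. Here's a minimal sketch using Python's standard library. The game names and frame rates are made up for illustration and aren't taken from our charts.

```python
from statistics import geometric_mean, mean

# Hypothetical average fps for one card in two games.
fps = {"Demanding DXR game": 40.0, "Lightweight game": 160.0}

values = list(fps.values())
overall_geometric = geometric_mean(values)
overall_arithmetic = mean(values)

print(f"Geometric mean:  {overall_geometric:.1f} fps")
print(f"Arithmetic mean: {overall_arithmetic:.1f} fps")
```

With a geometric mean, doubling performance in any one game moves the overall score by the same factor, regardless of whether that game runs at 40 fps or 160 fps.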

Our test suite is intentionally heavier on ray tracing games than what you might normally encounter. That's largely because ray tracing games tend to be the most demanding options, so if a new card can handle ray tracing reasonably well, it should do just fine with less demanding games. Ray tracing also feels increasingly like something we can expect to run well, when optimized properly, and Nvidia's hardware proves what's possible. We're now two generations on from Nvidia's RTX 20-series, and as we'll see shortly, the new $300 RTX 4060 basically matches the $700–$800 RTX 2080 that launched in mid-2018.

We have two charts, 1080p ultra and 1080p medium. Ultra settings may in some cases push the RTX 4060 a bit too hard, particularly in demanding DXR games, while the medium settings might not push hard enough. You can interpolate between the two to get a reasonable estimate of where 1080p high settings should land, which is arguably the sweet spot for cards like the RTX 4060.

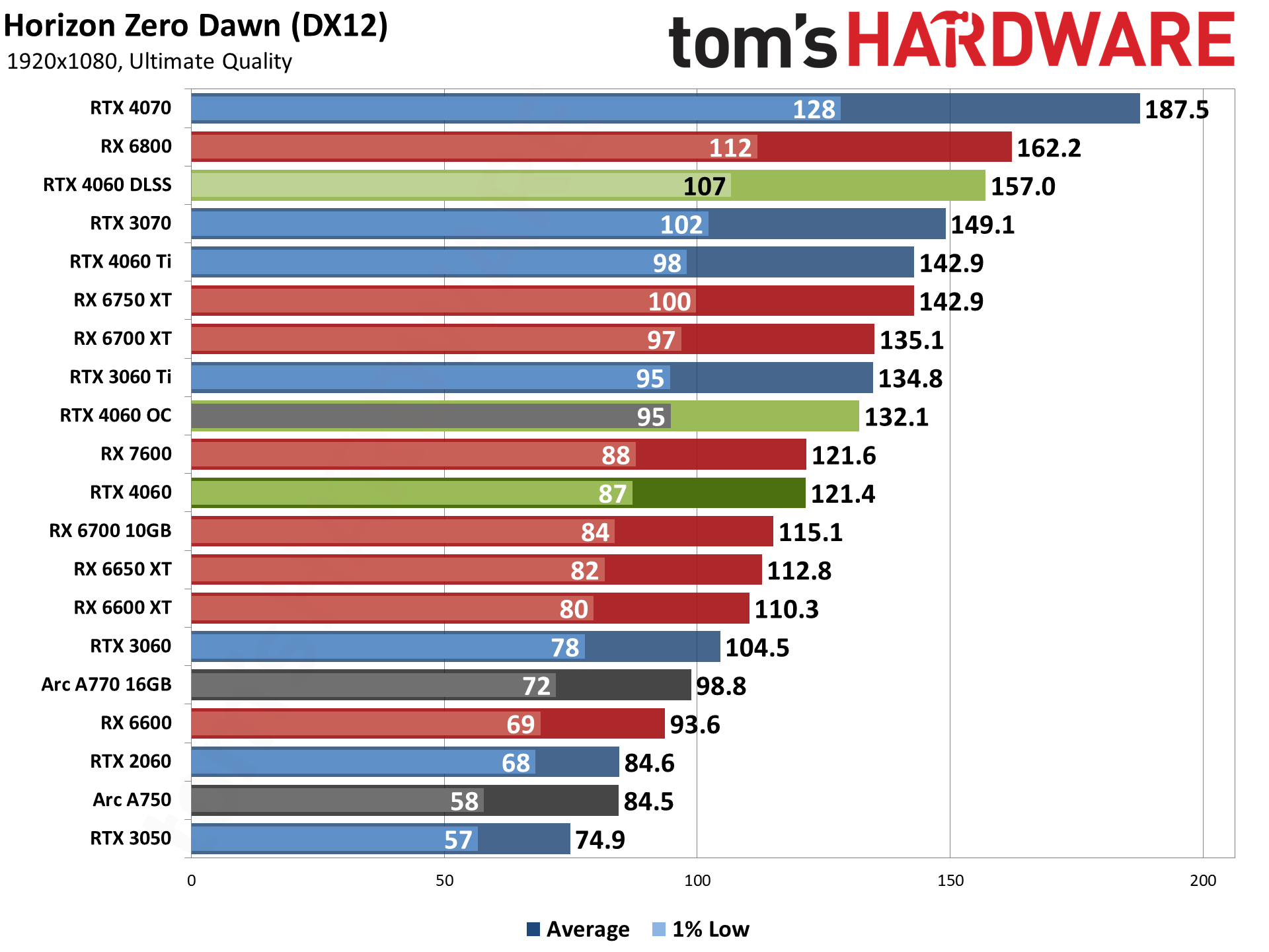

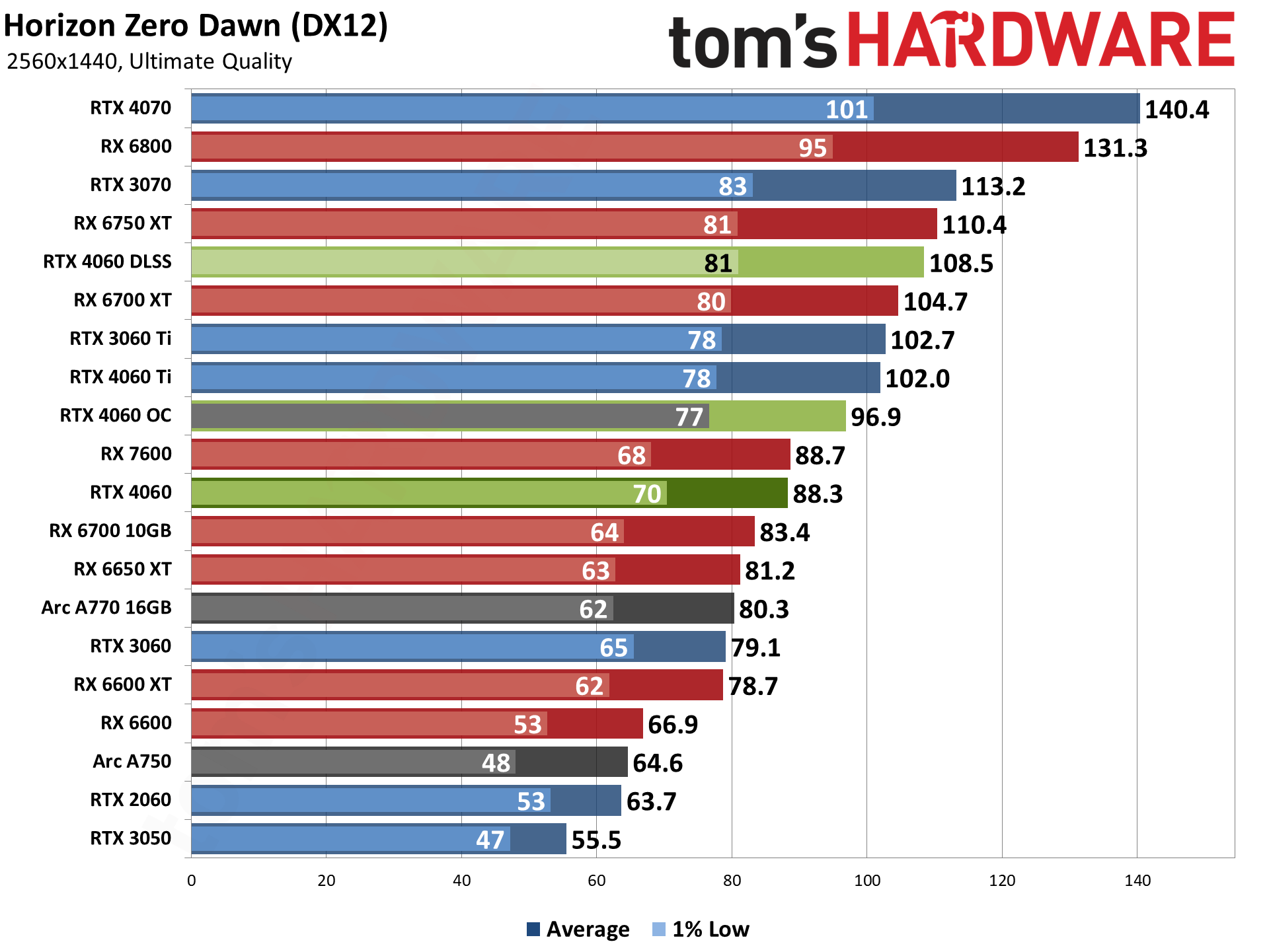

In terms of overall performance, at 1080p medium the RTX 4060 lands right between the RX 6700 XT and RX 6750 XT — and that was already a pretty narrow gap. It's also 16% faster than the newer RX 7600, 22% faster than the RTX 3060, and 51% faster than the two generations old RTX 2060. For 1080p ultra, the RTX 4060 still sits between the 6750 XT and 6700 XT, and is 22% faster than the RTX 3060 and RX 7600, and beats the RTX 2060 by 58%.

Most people aren't going to be thinking about upgrading from the RTX 3060 to the 4060, and that's for the best. Even though performance is higher, you have less VRAM and it's not that big of a performance jump. But if you're still holding on to an RTX 2060 or older GPU, you can get a pretty sizeable upgrade.

While we don't have the GTX 1060 6GB and GTX 1660 Super in these charts (because we're only including GPUs that support the DirectX 12 Ultimate API), we still use our older test suite for the GPU benchmarks hierarchy. Looking just at rasterization performance, the RTX 4060 delivers about 150% higher framerates than the GTX 1060 6GB, and nearly double (95% higher) the performance of the GTX 1660 Super.

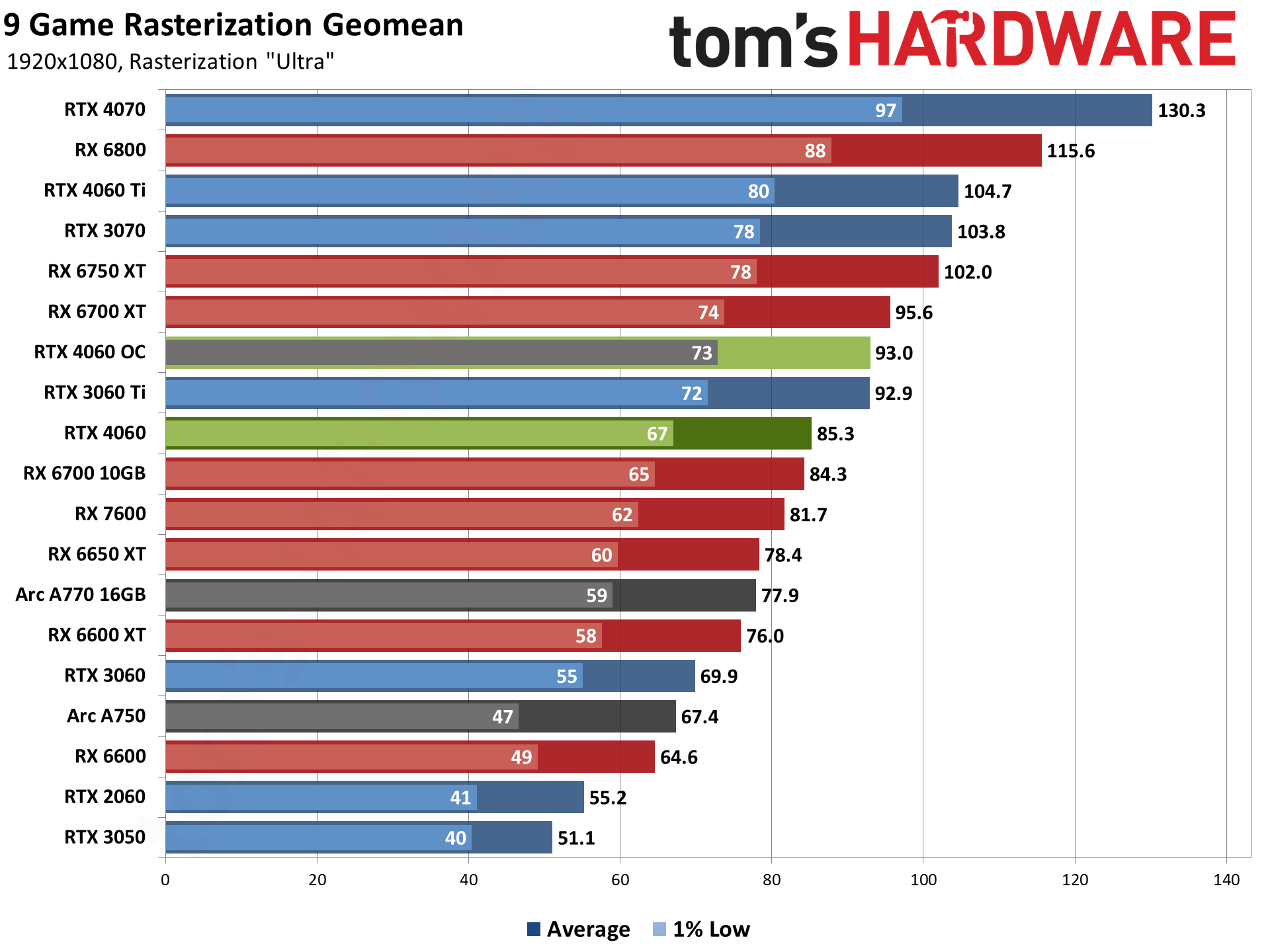

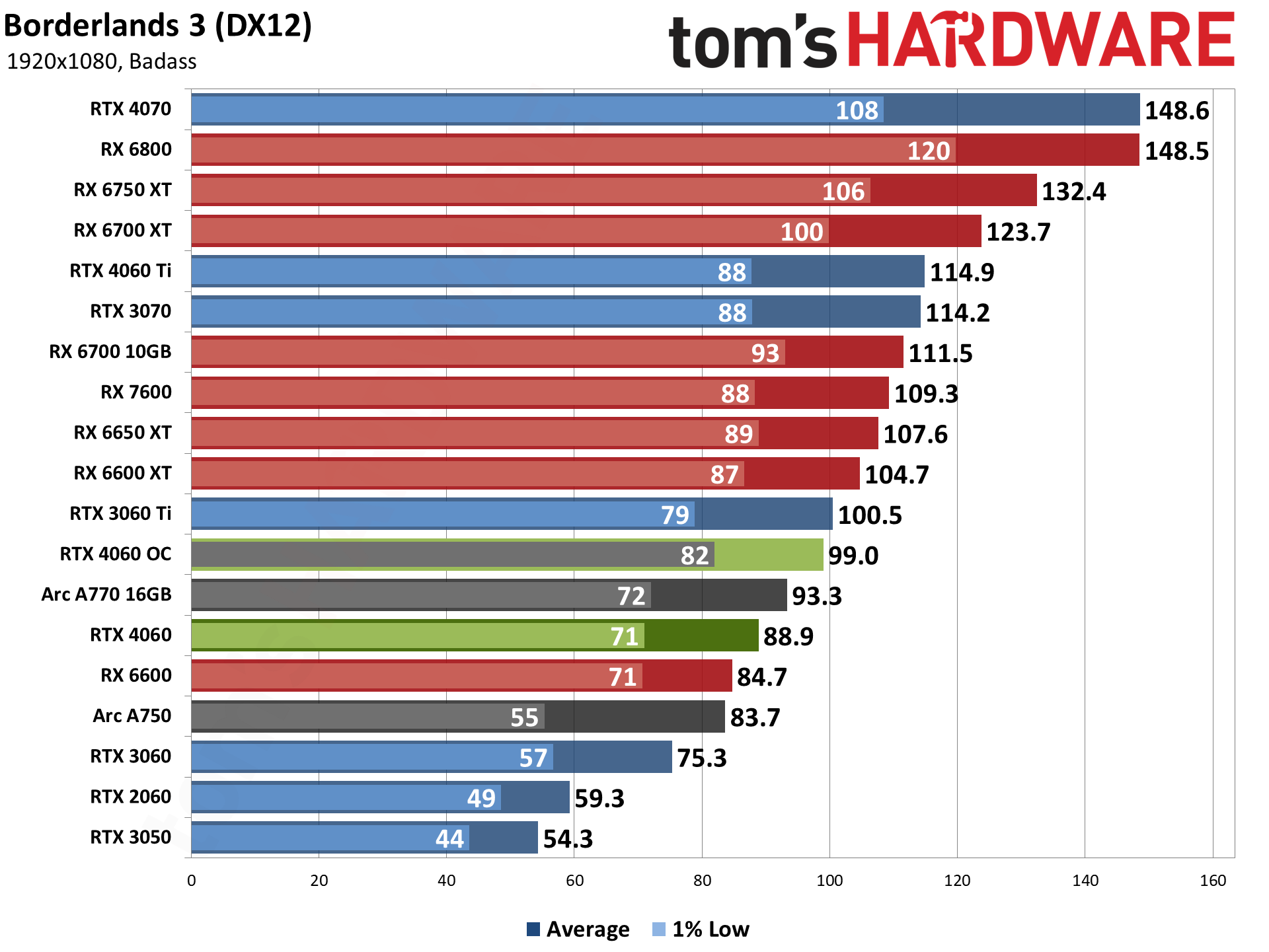

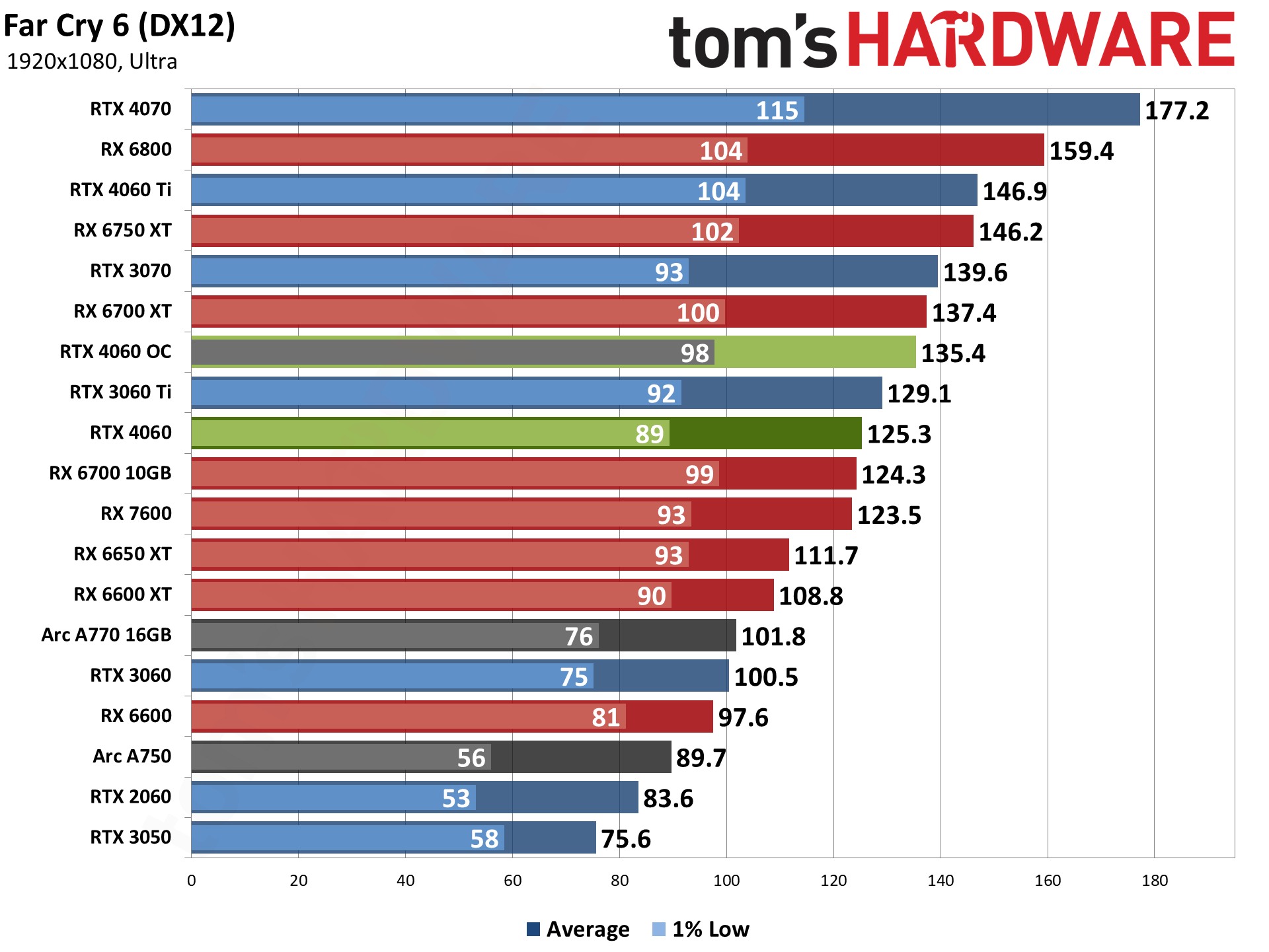

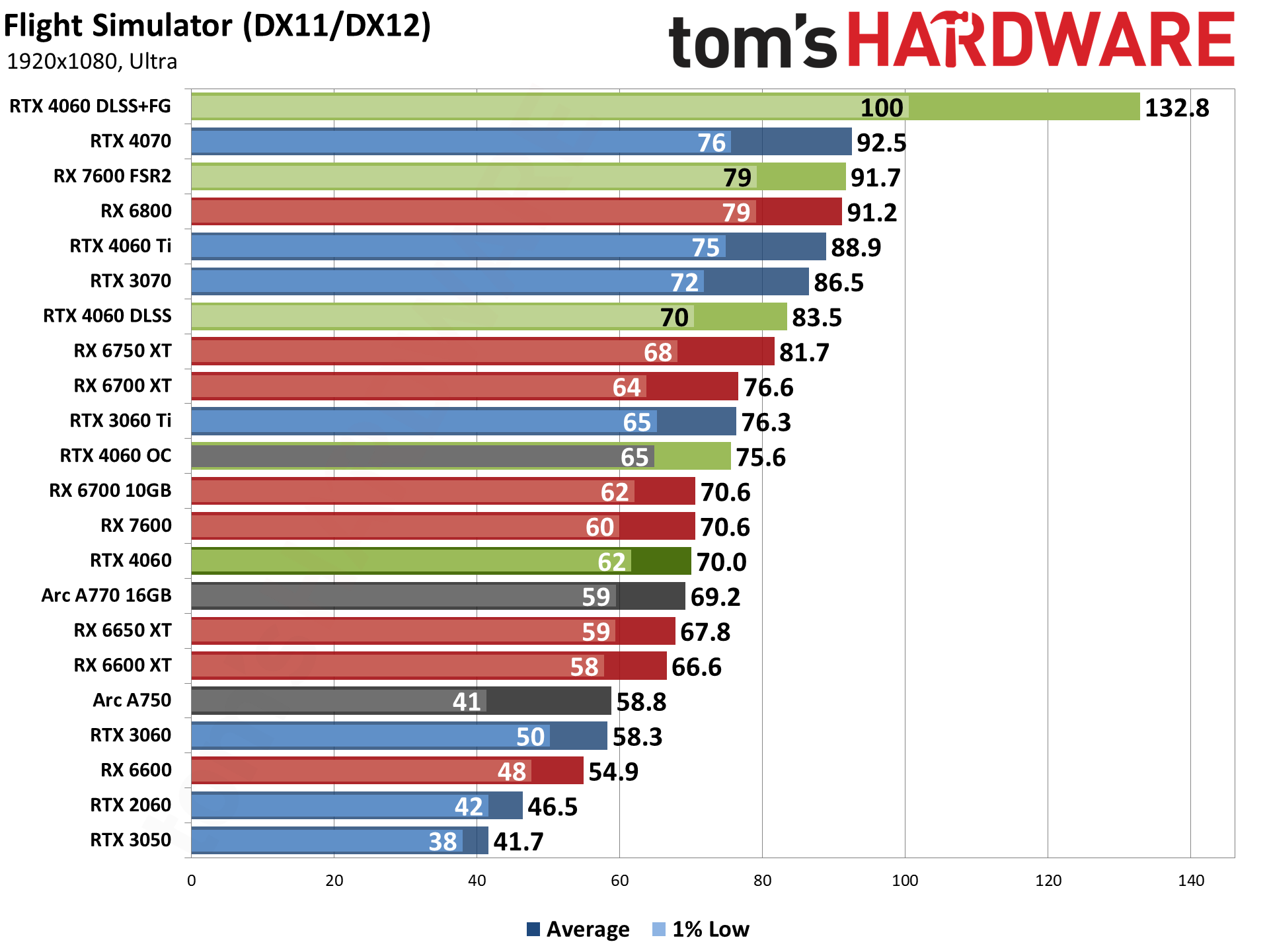

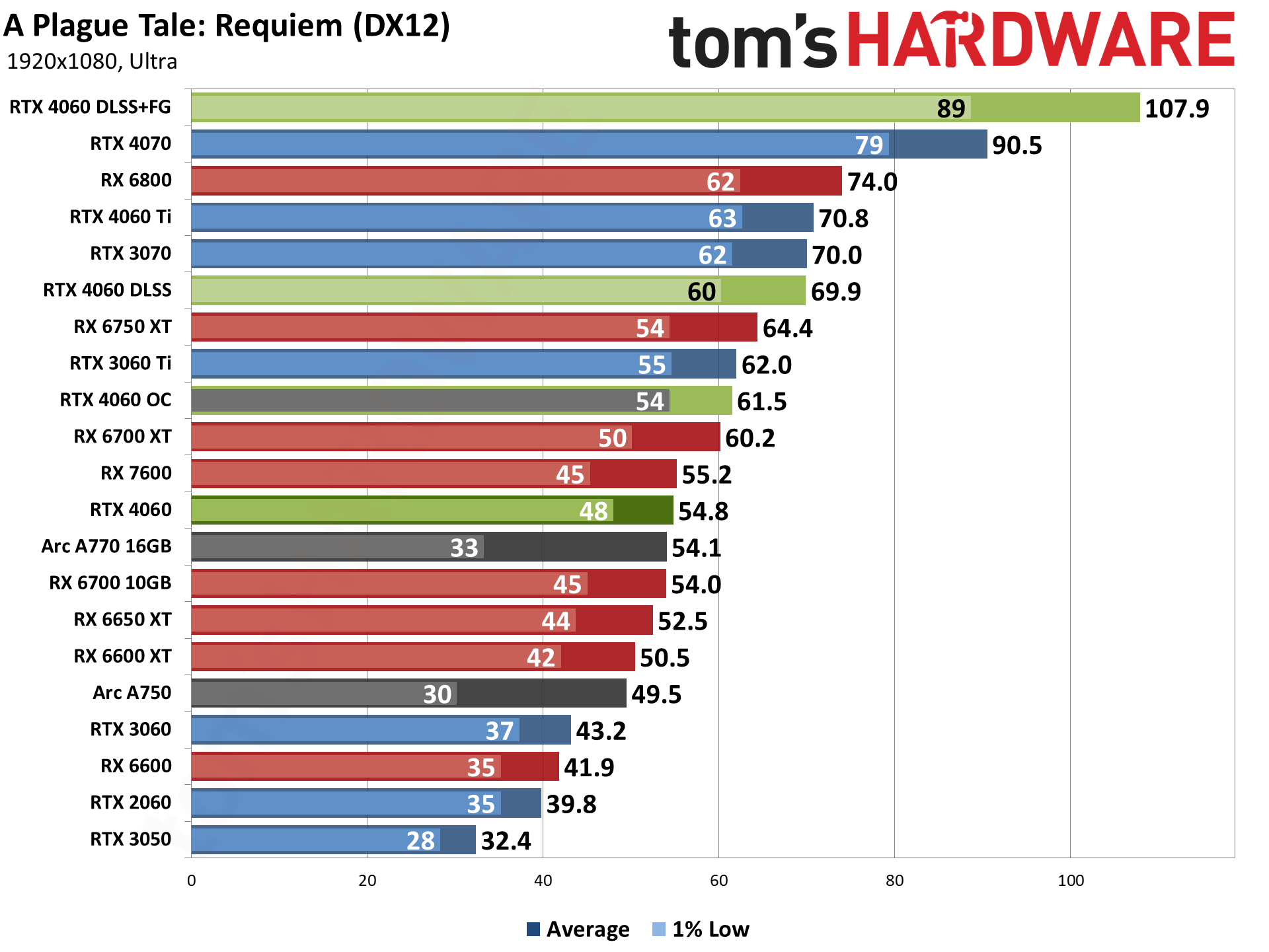

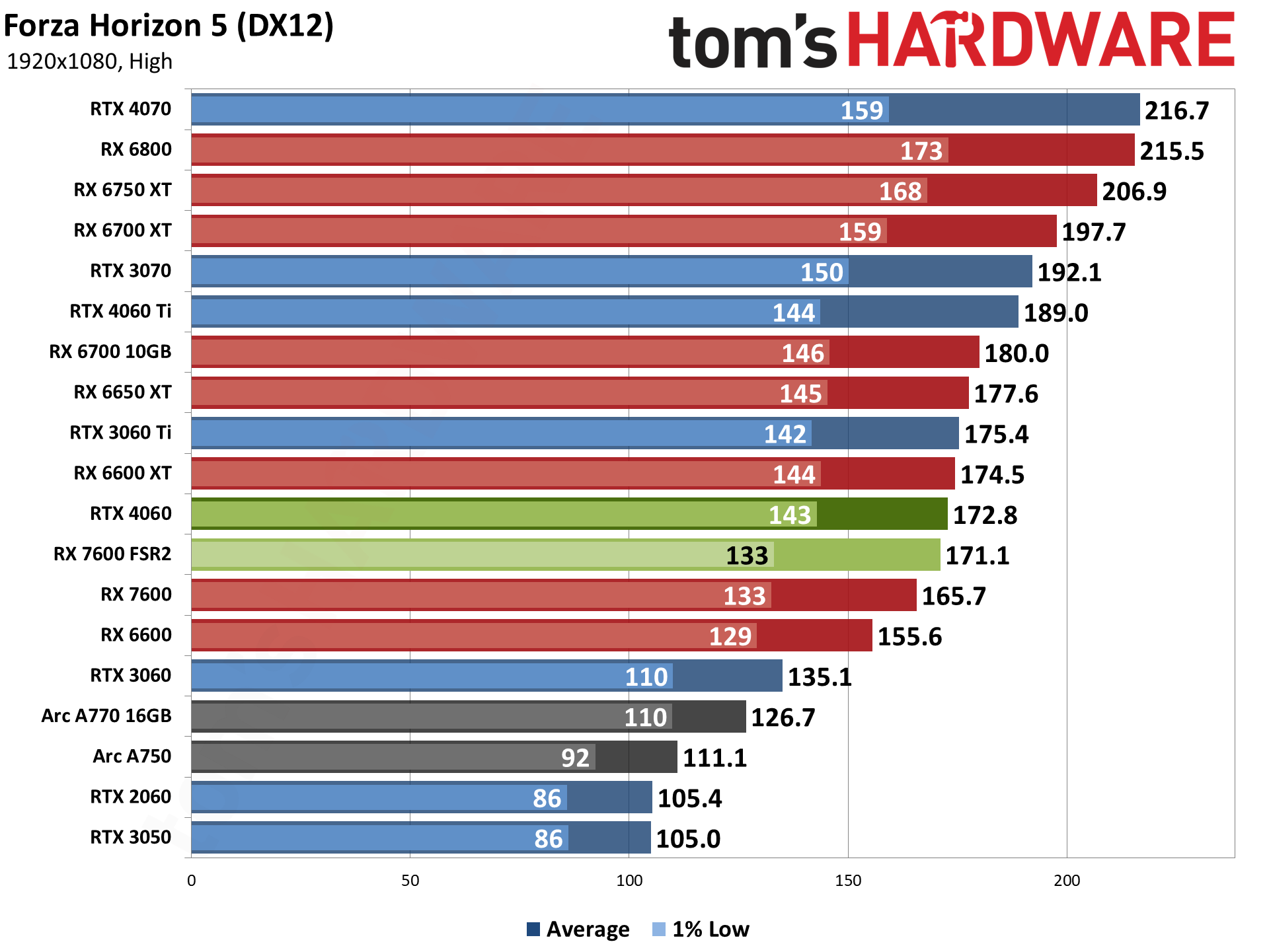

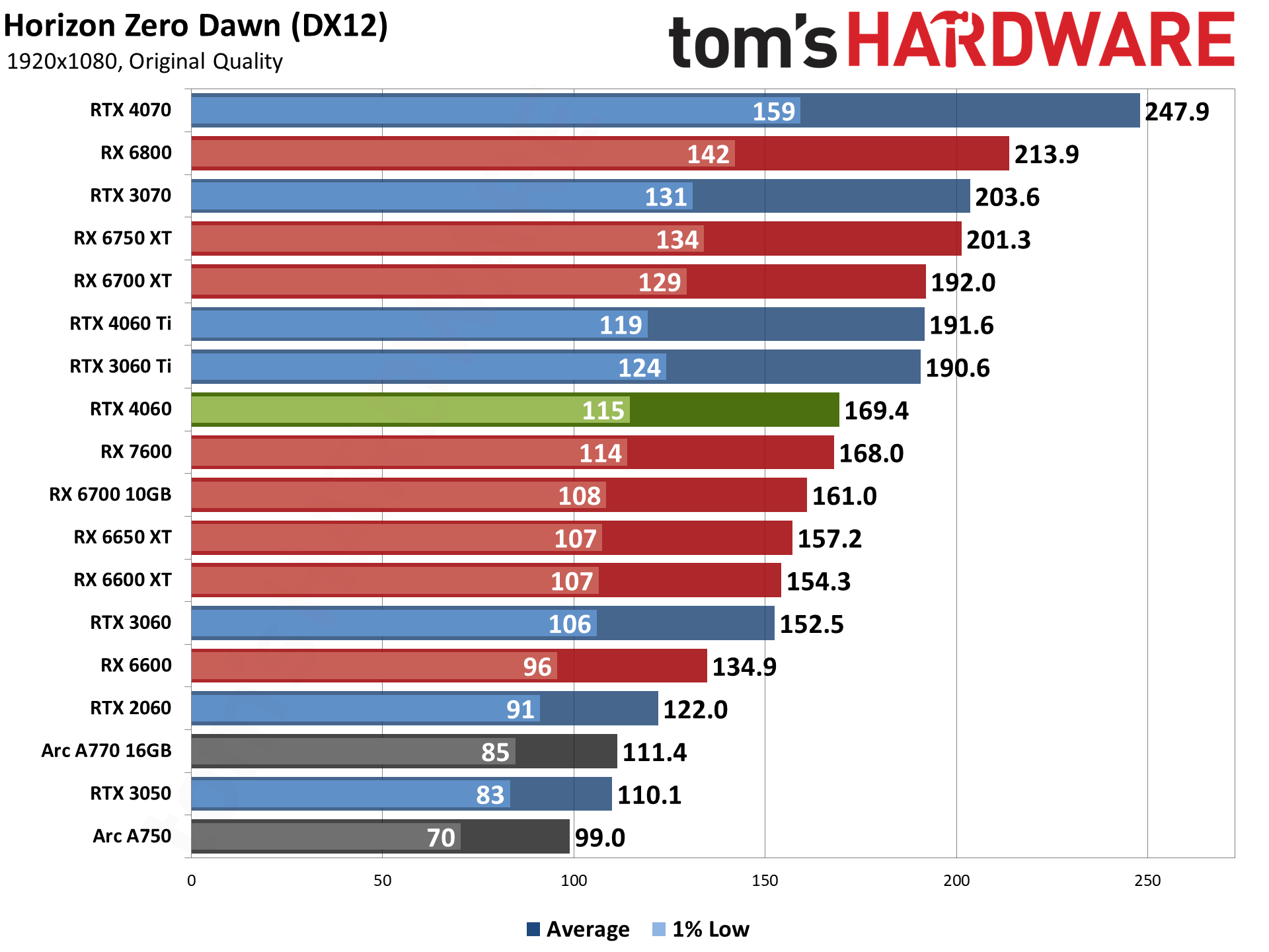

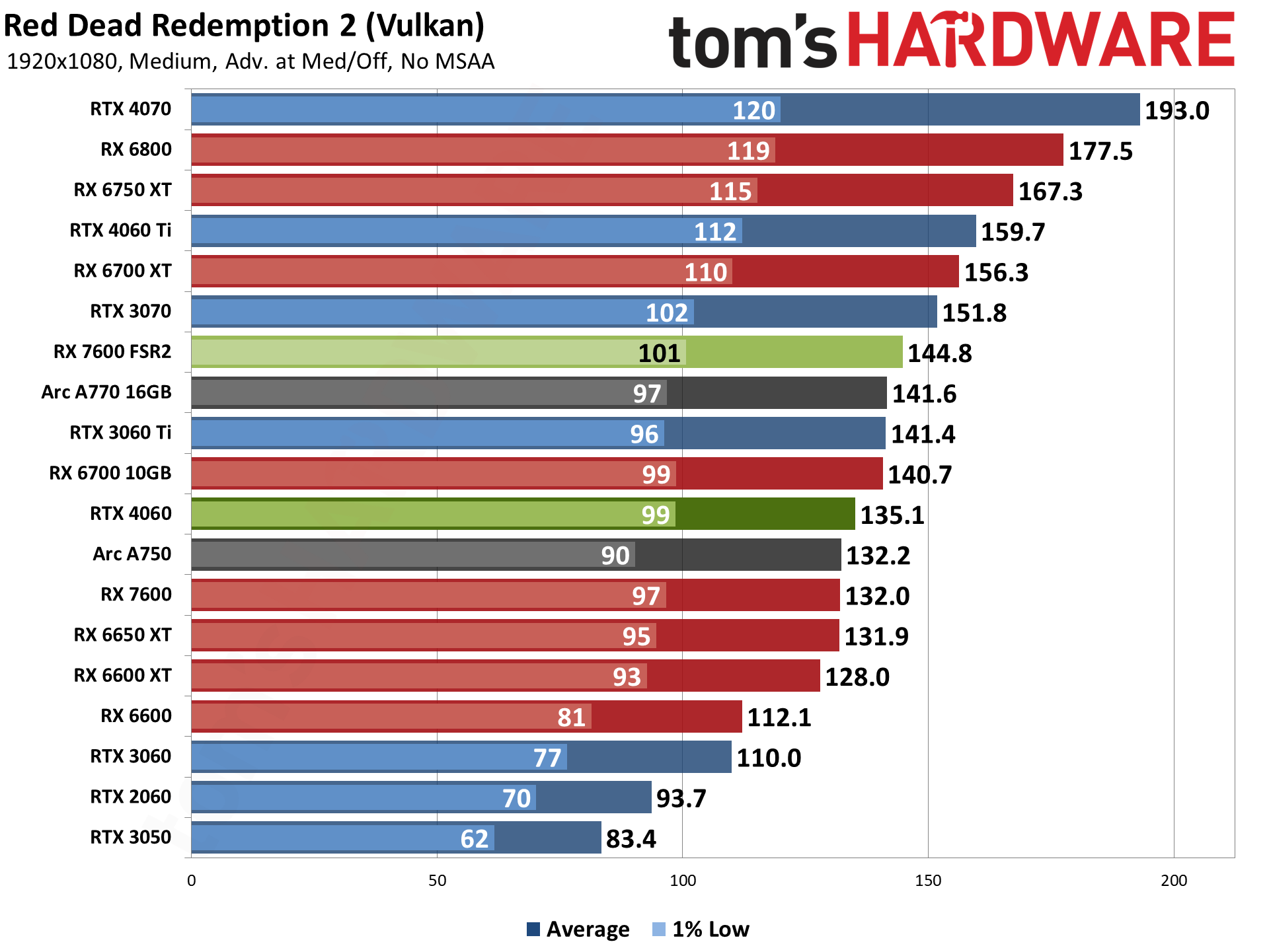

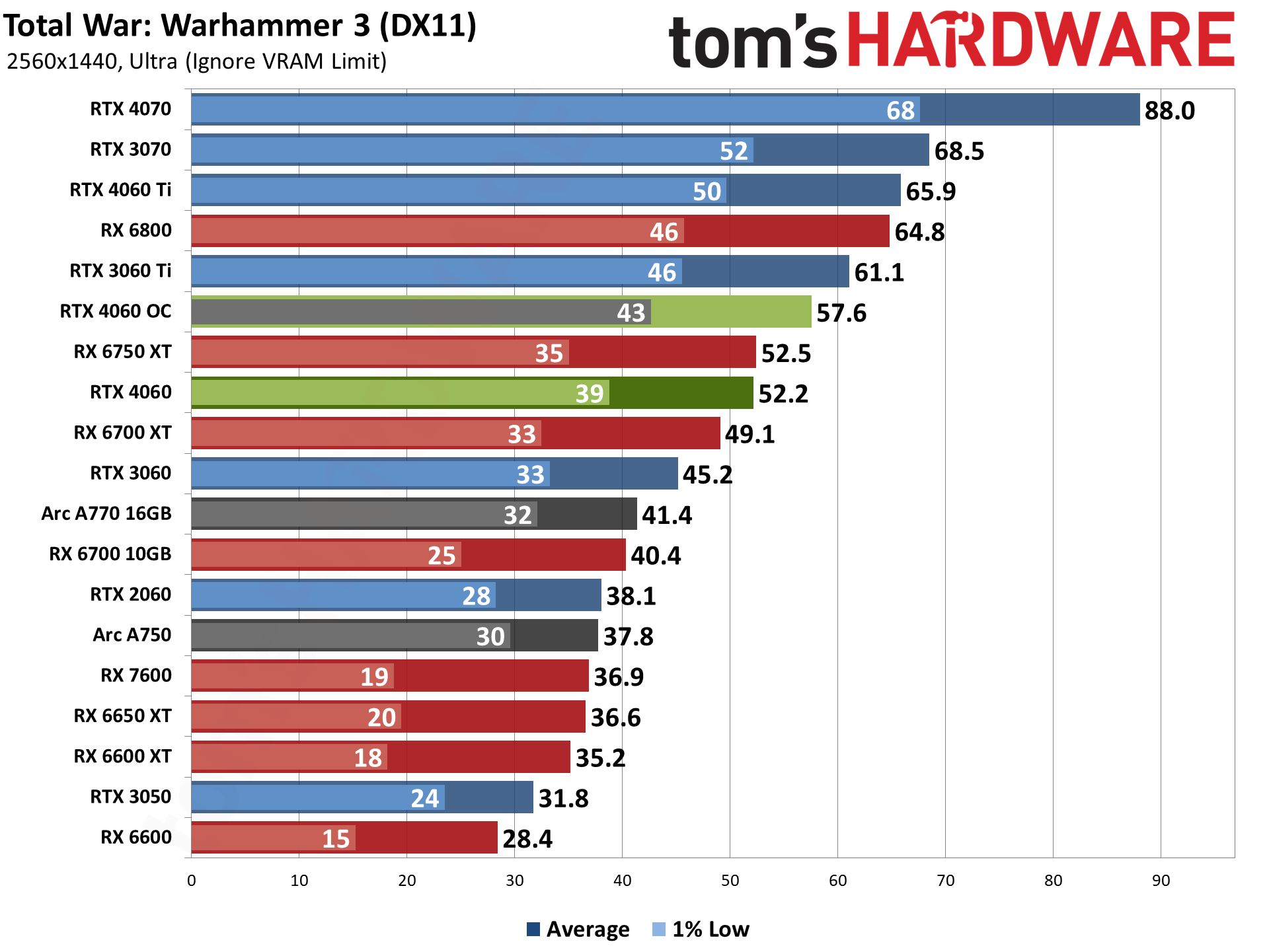

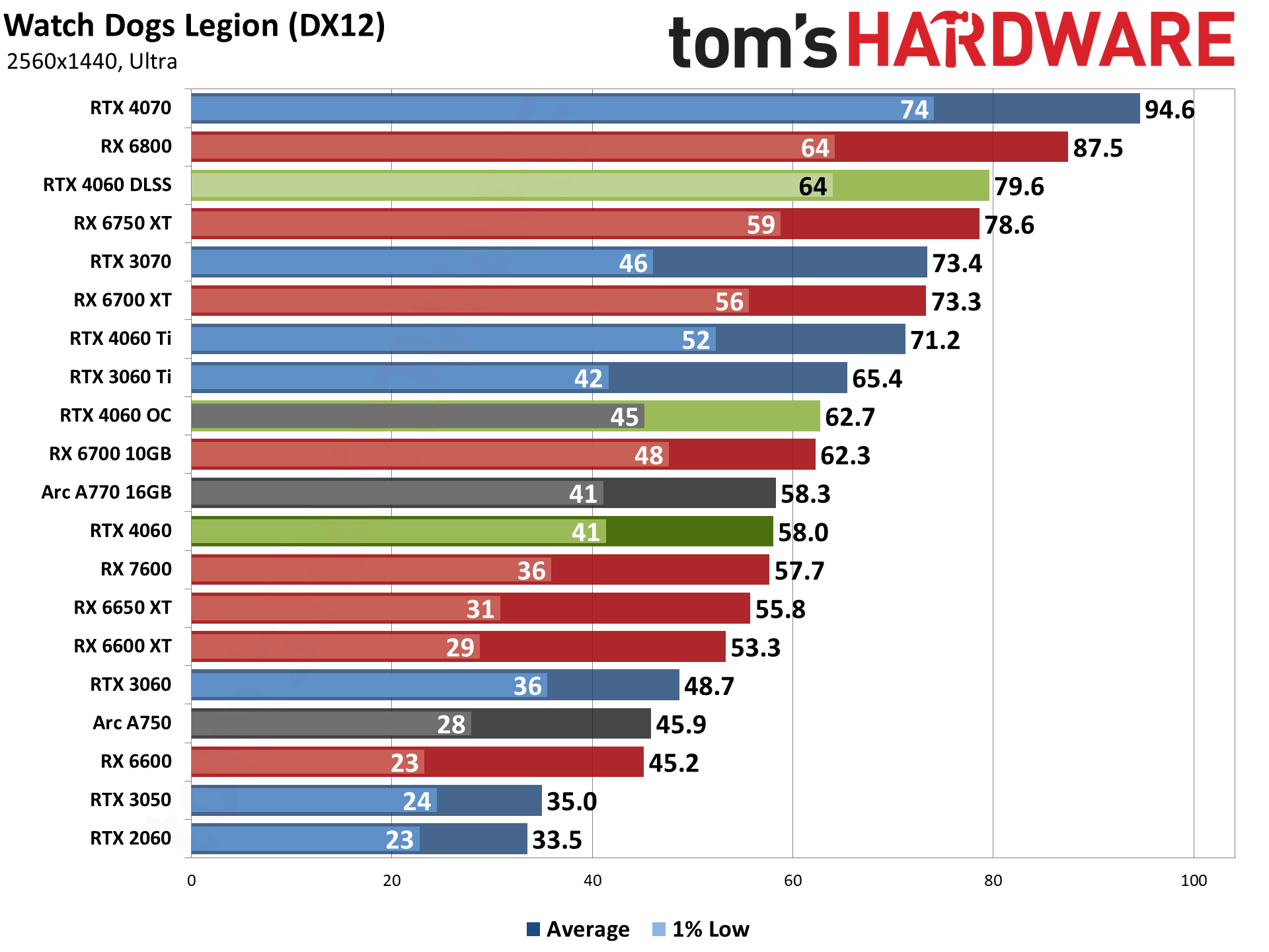

You can certainly make the argument that a $300 GPU isn't really intended for native DXR gaming, so here's our look at just the nine rasterization games in our test suite. Overall, the RTX 4060 ends up basically tied with the AMD RX 7600 at 1080p medium, with a 4.4% lead at 1080p ultra. It's not a clear win for either GPU, however.

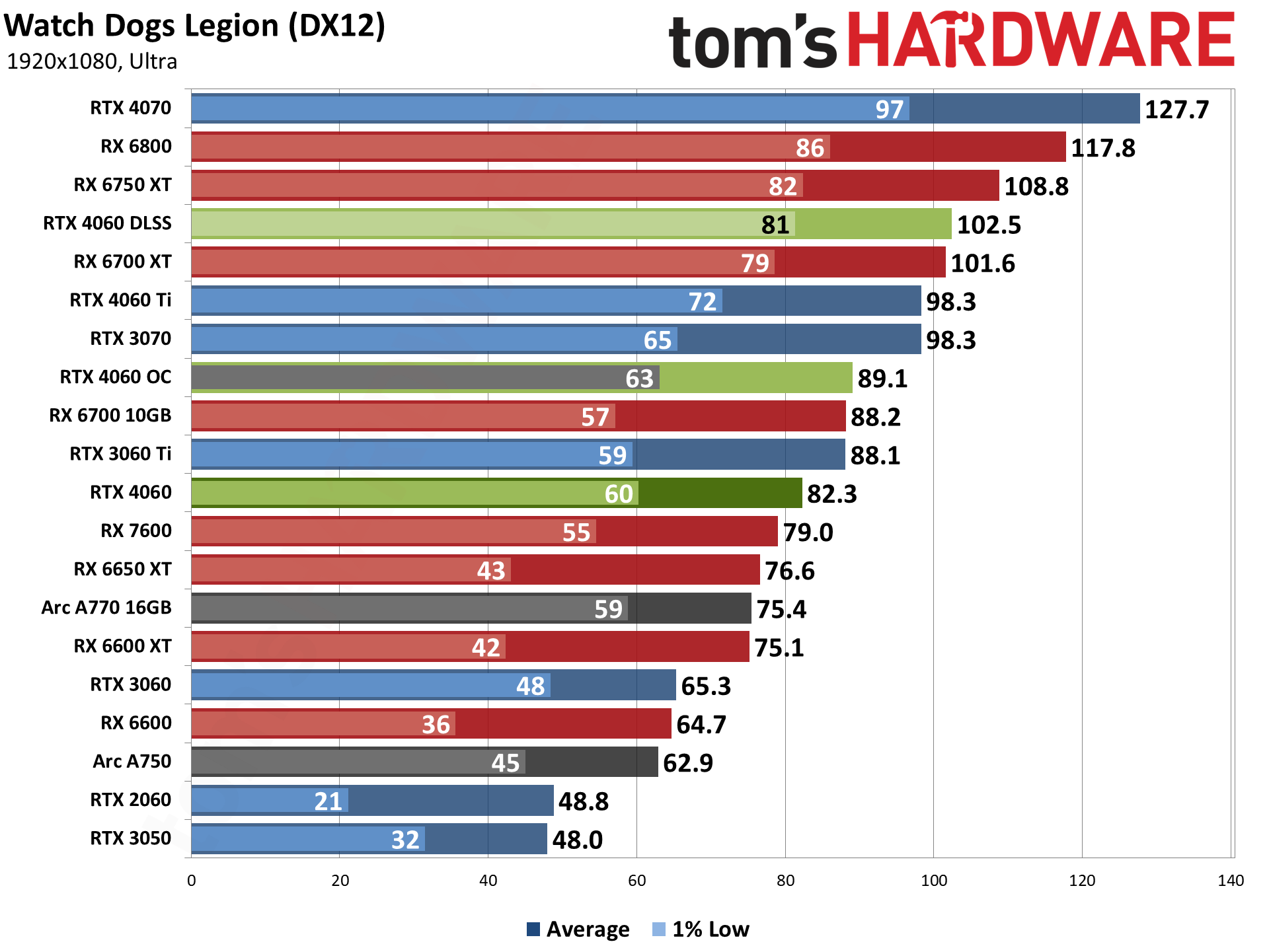

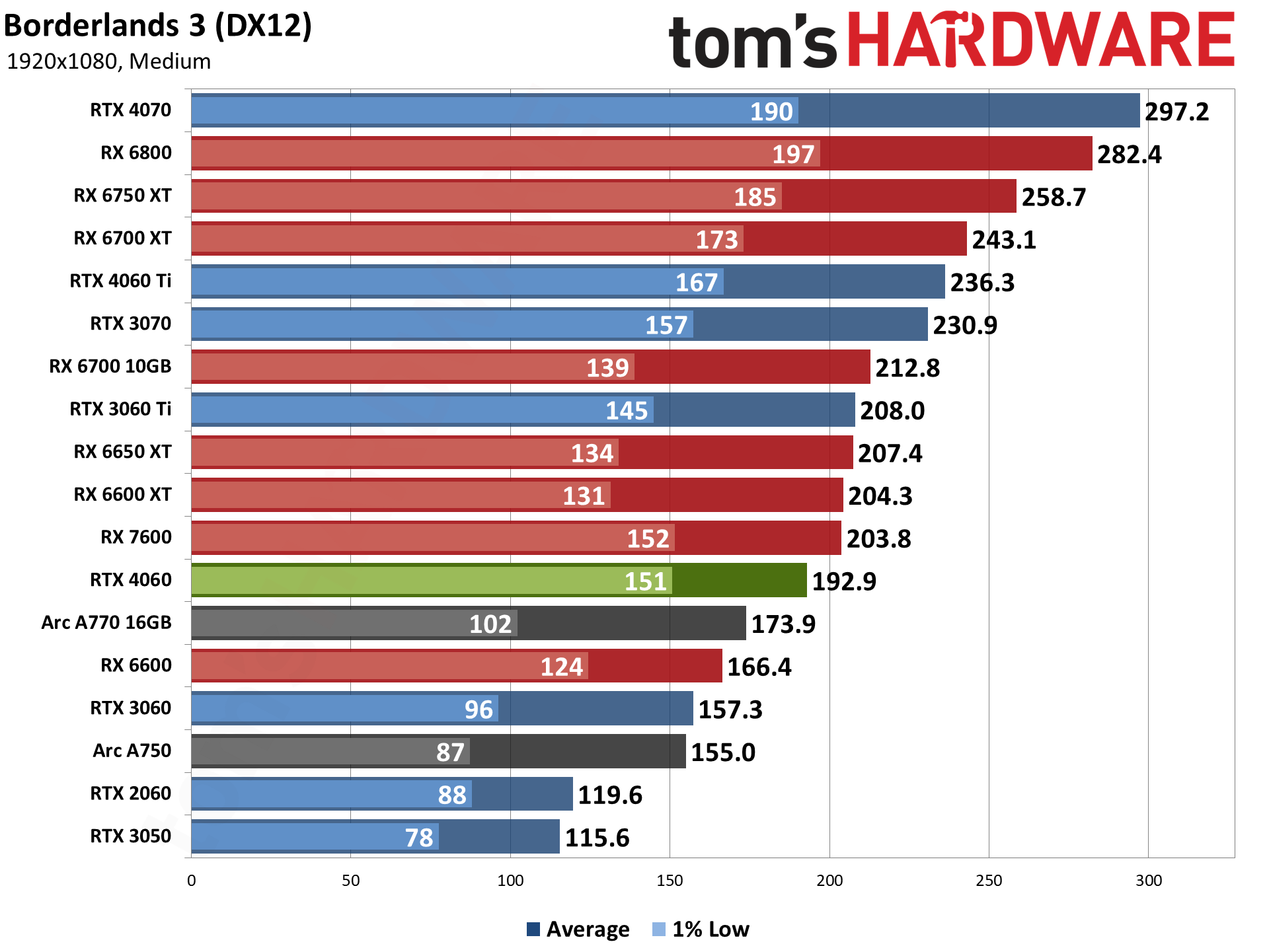

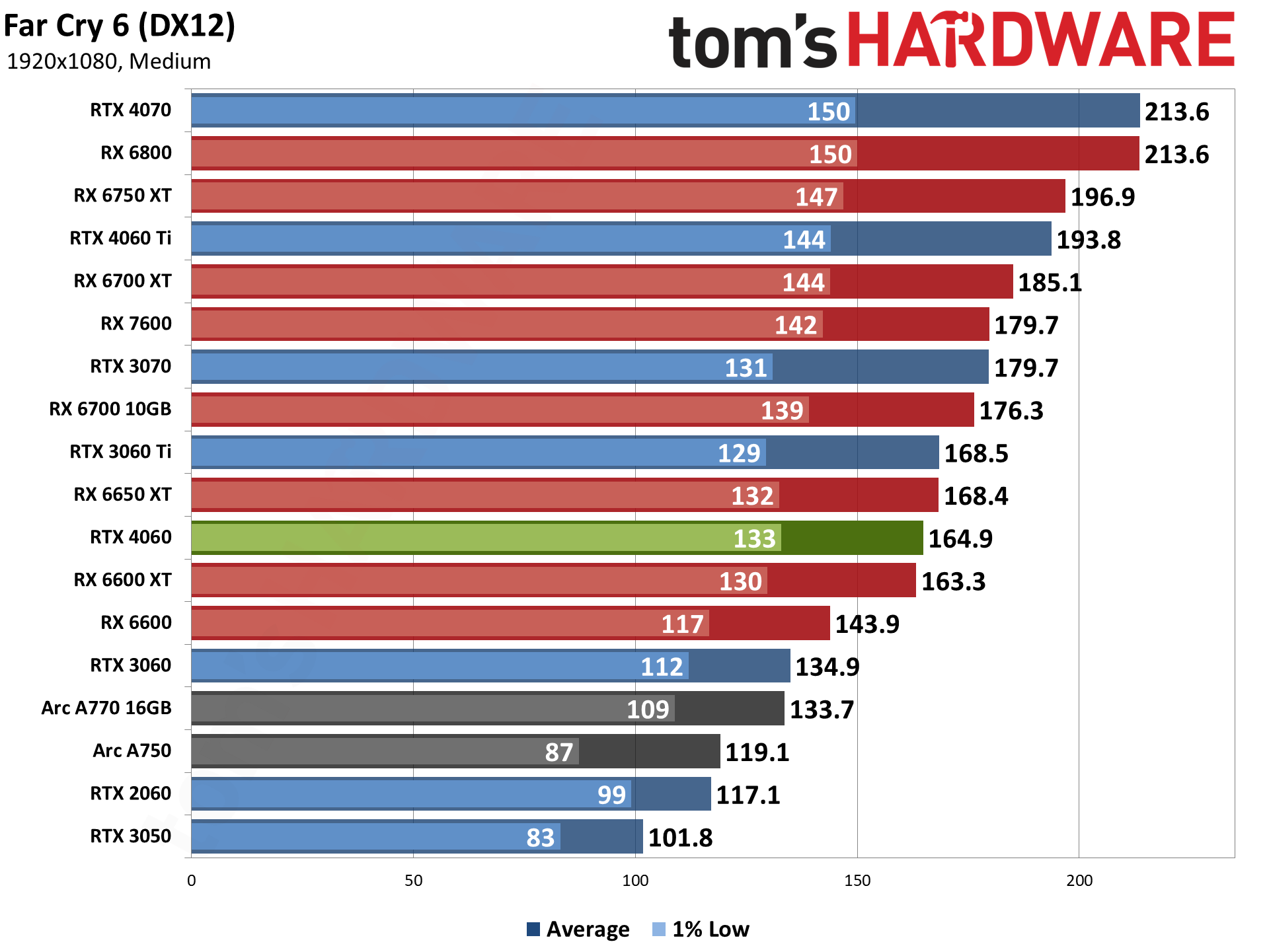

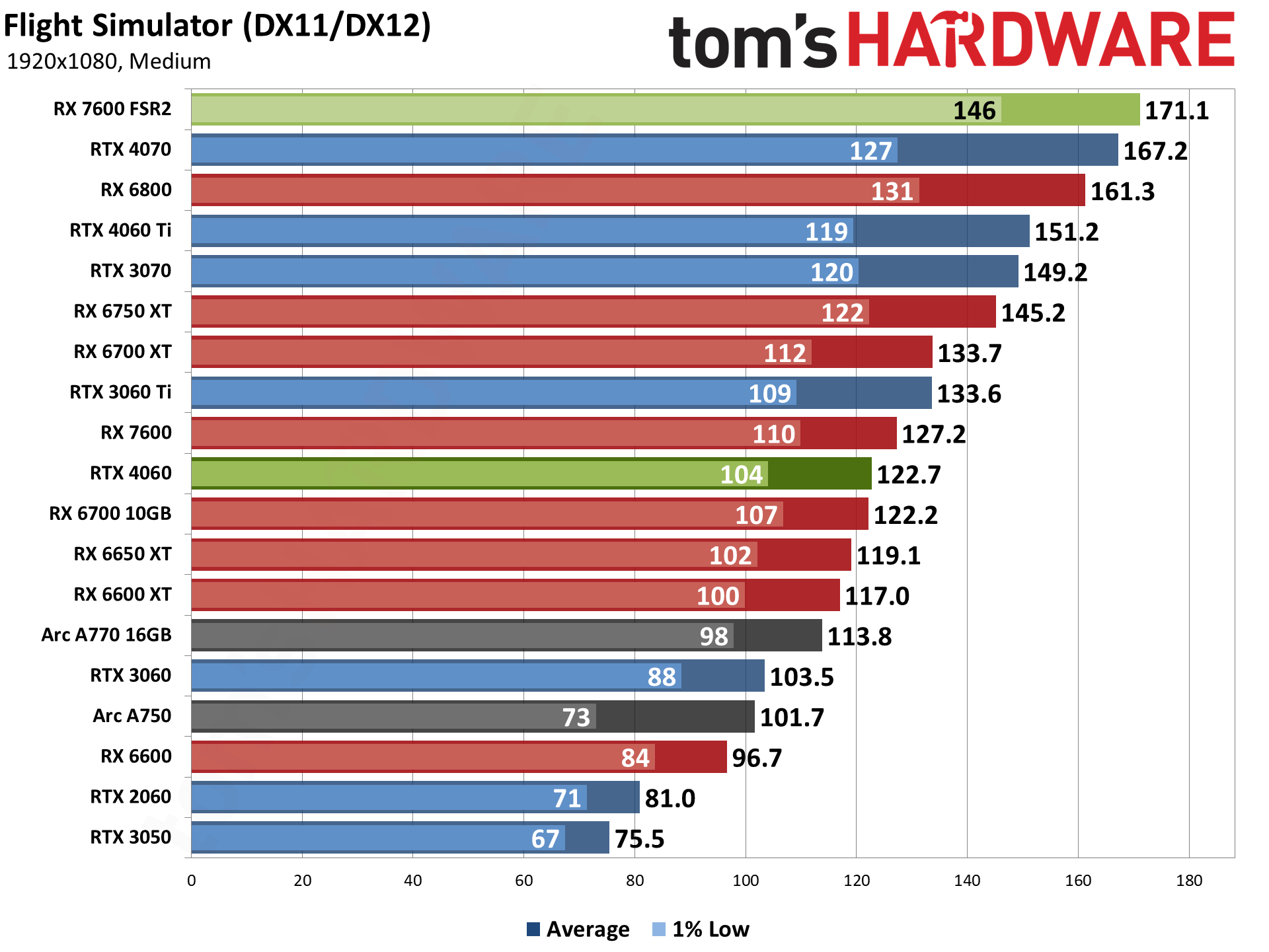

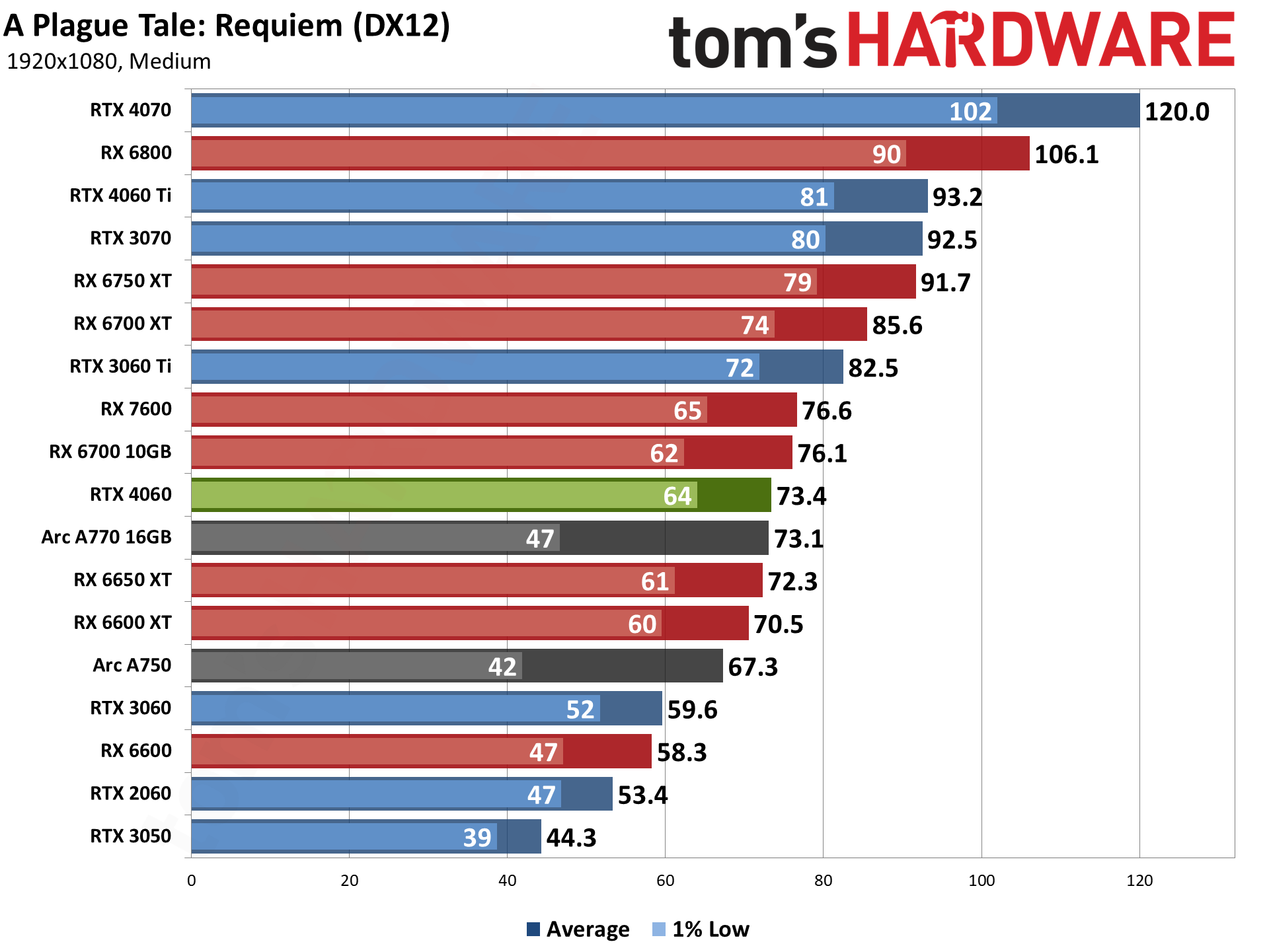

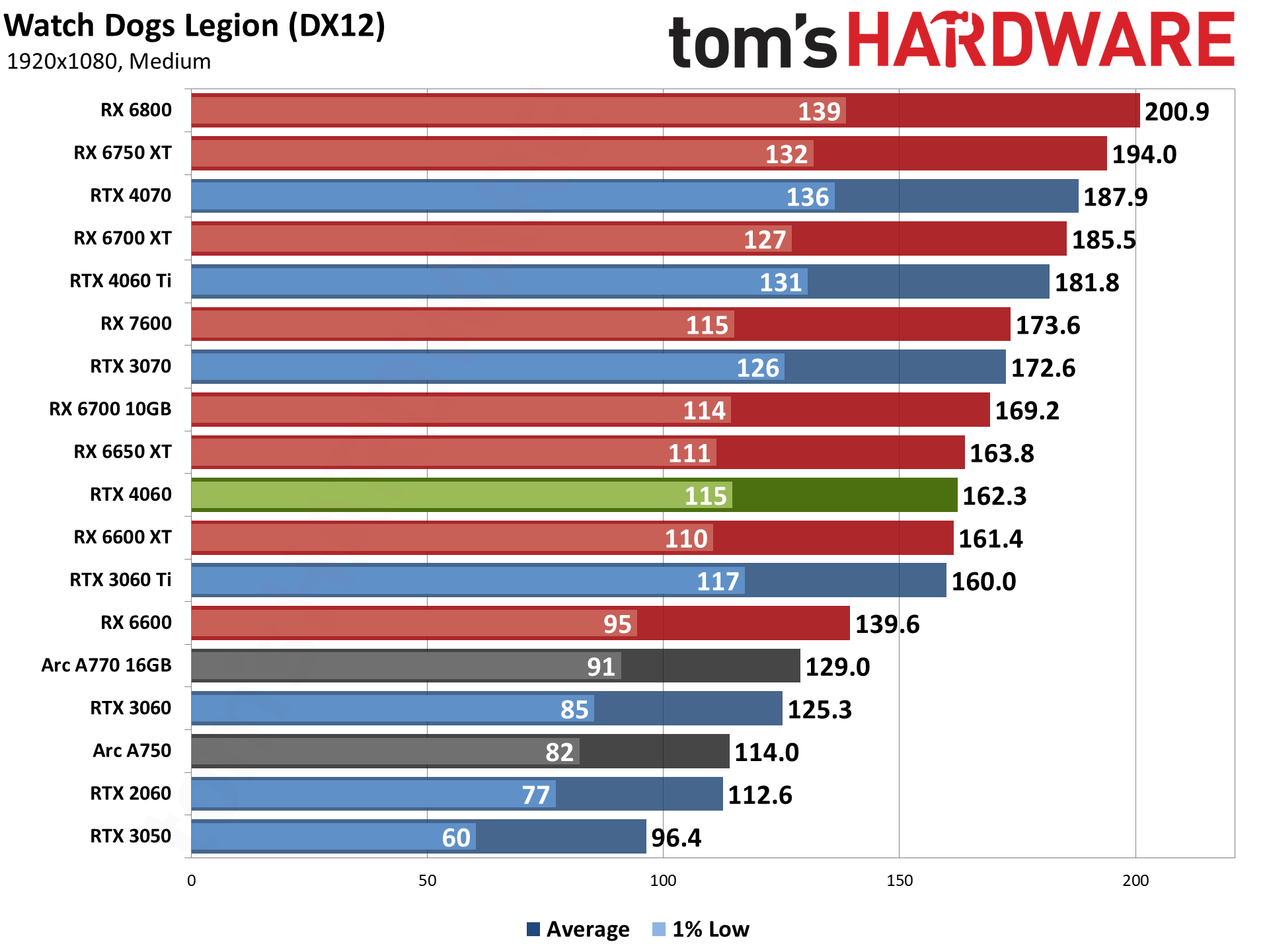

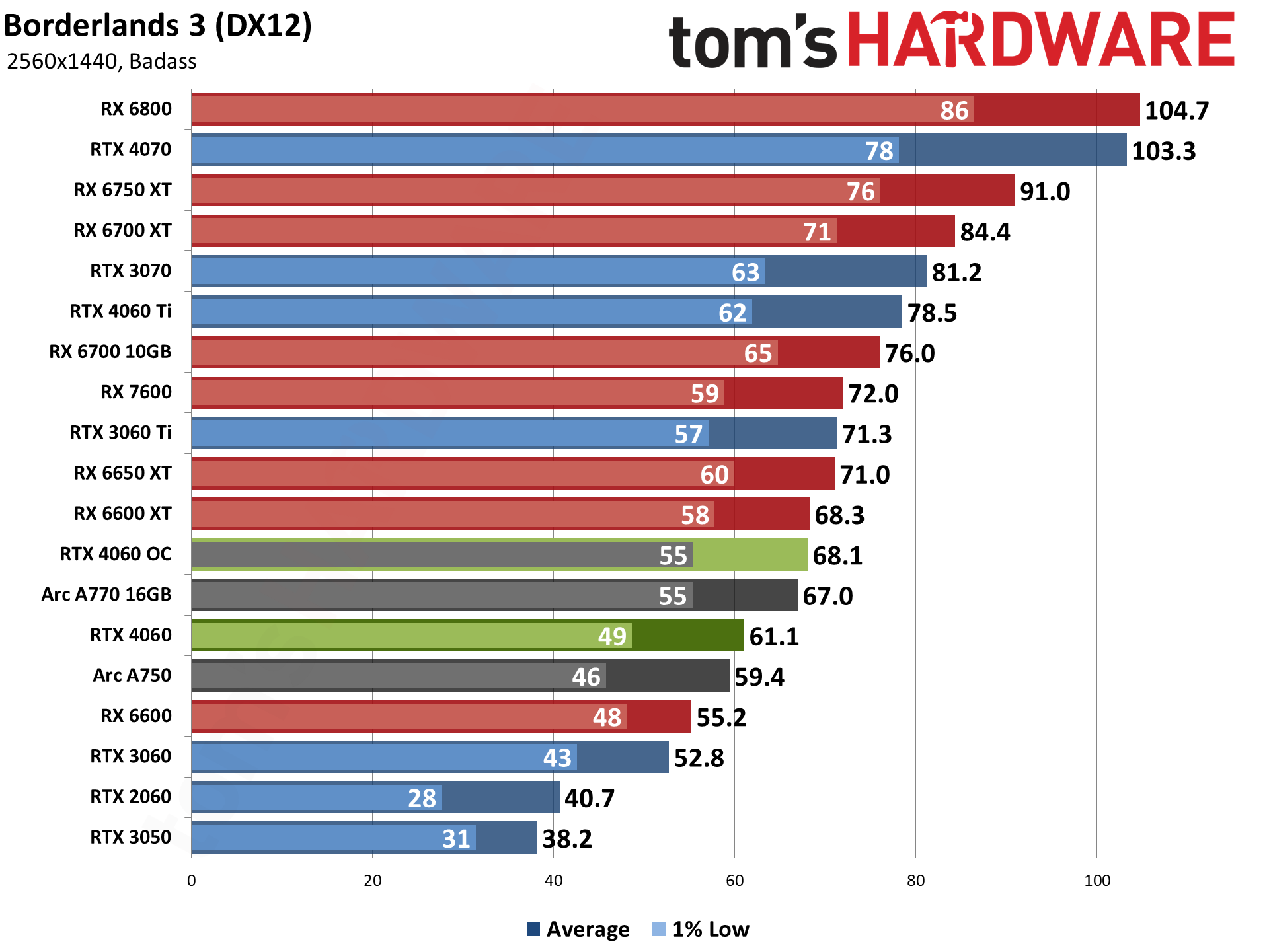

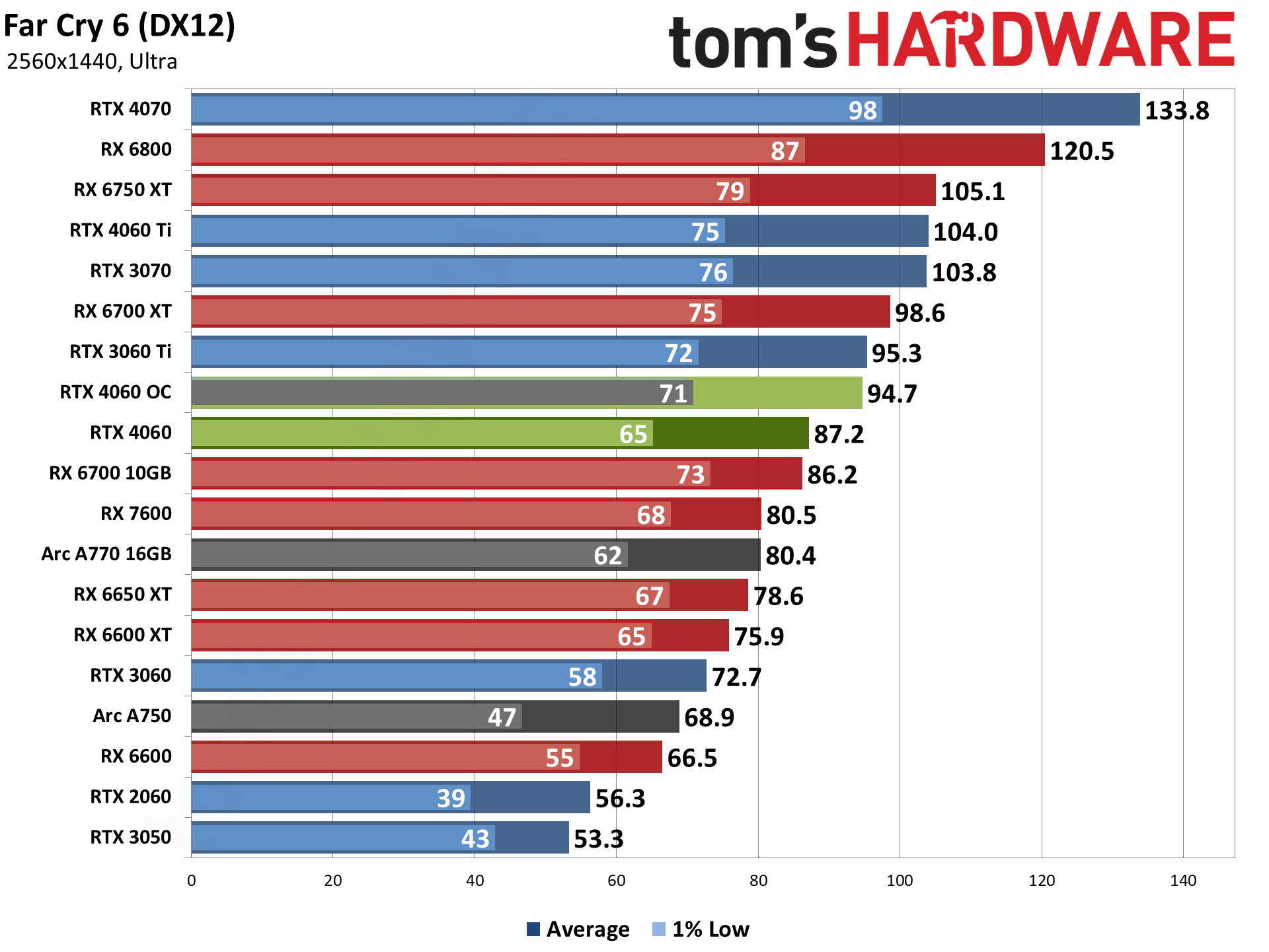

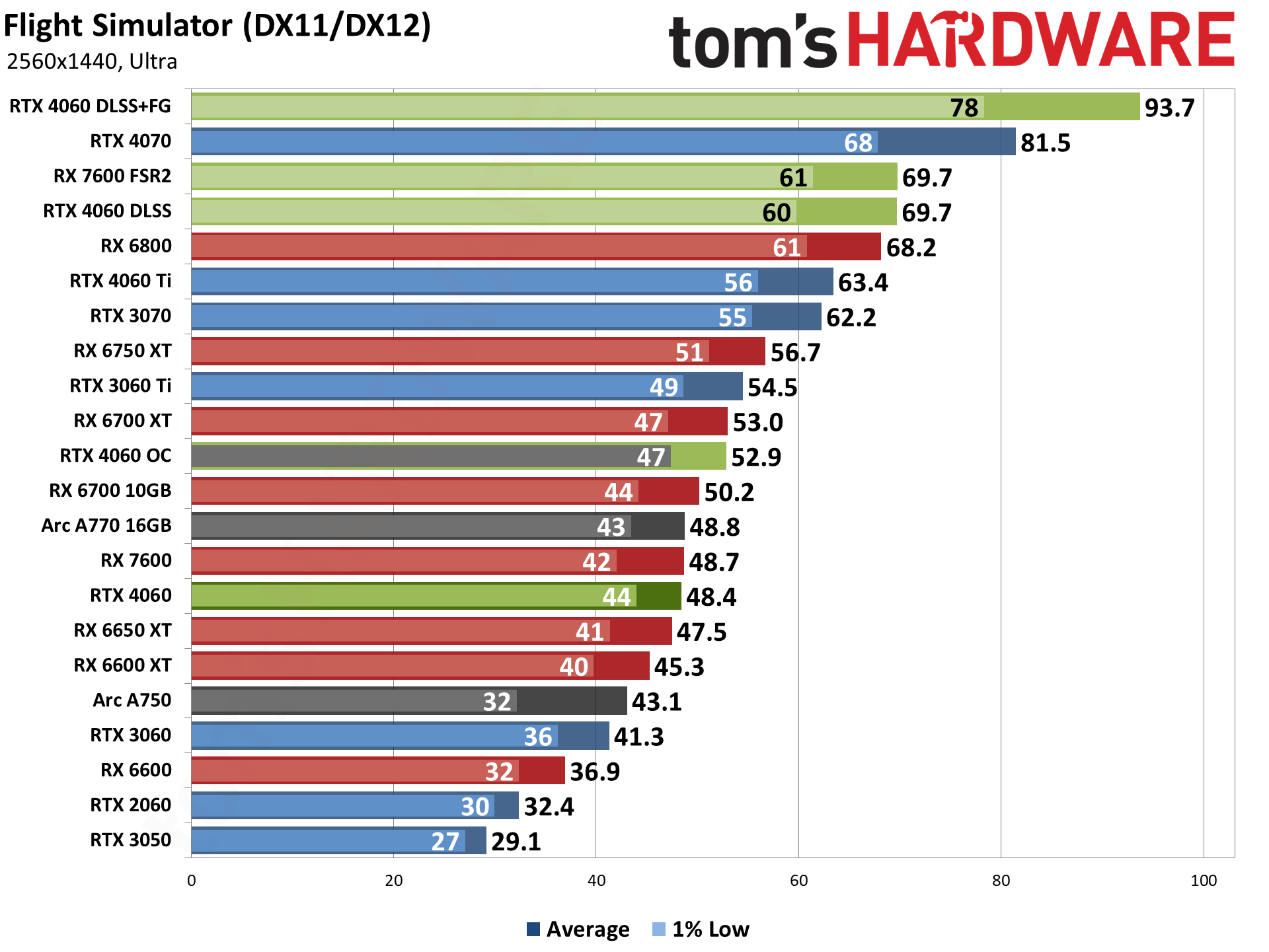

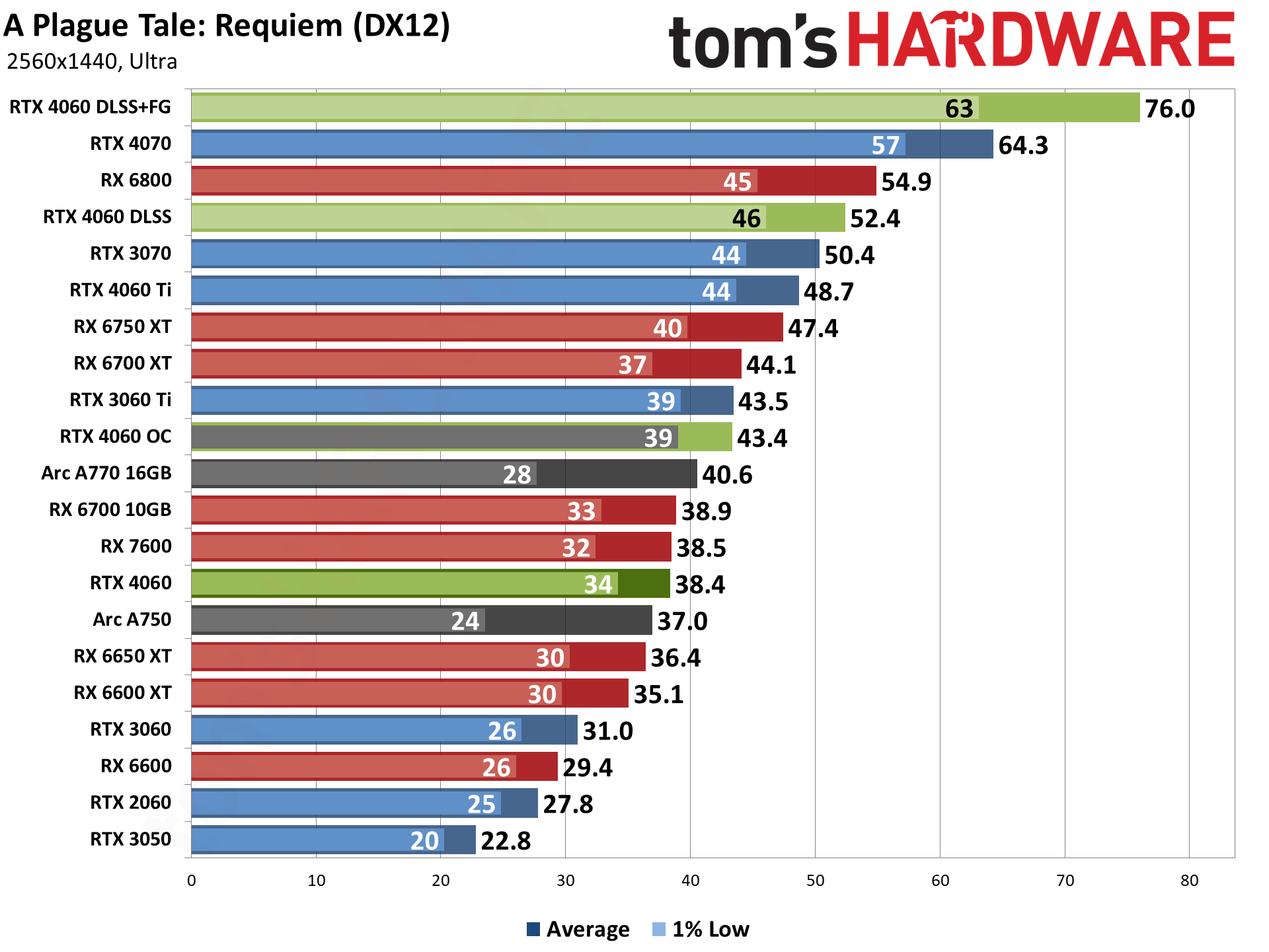

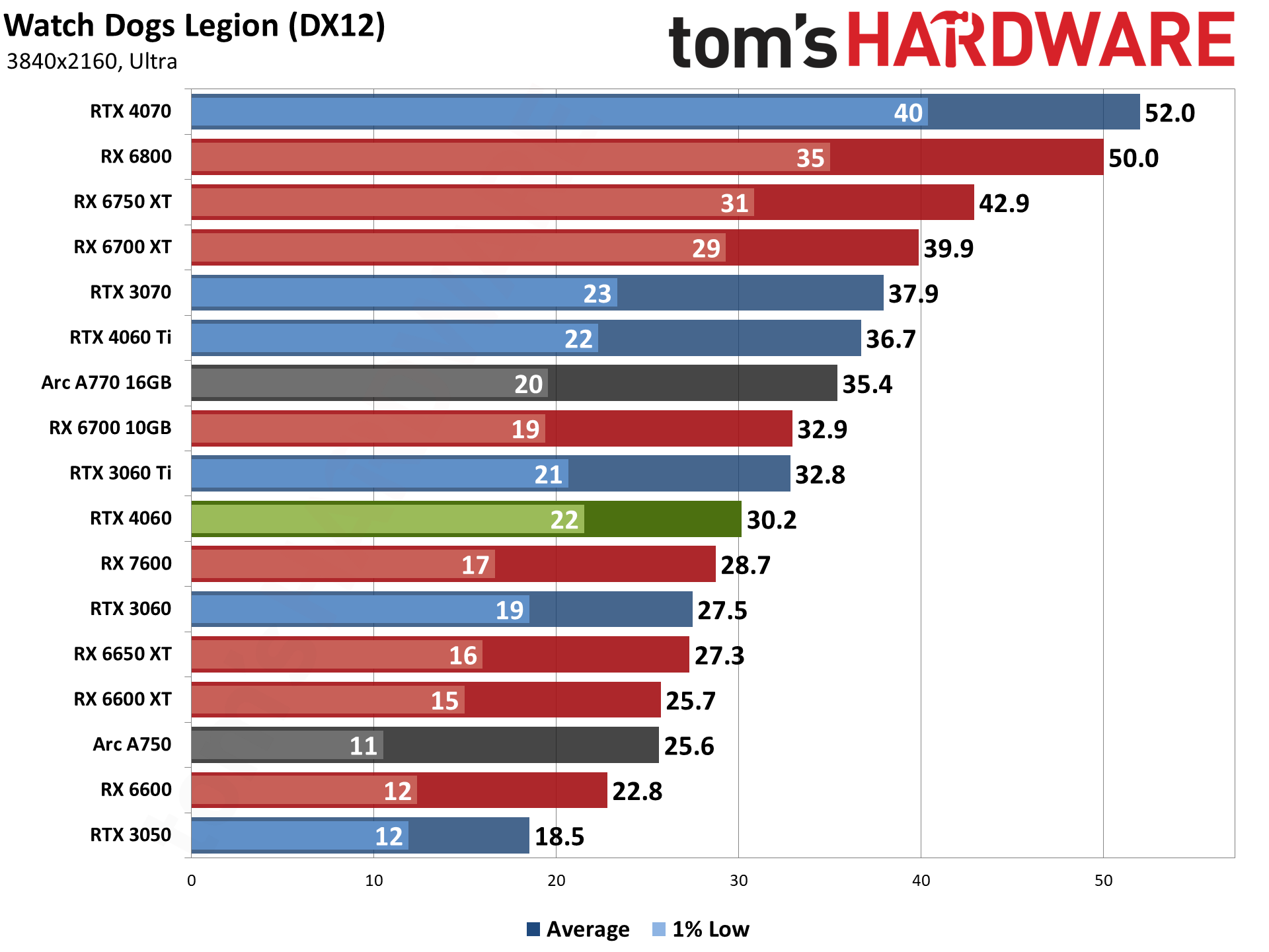

The RX 7600 takes a clear lead in Borderlands 3 for example, delivering 6% more performance at 1080p medium and 23% higher performance at 1080p ultra. AMD also has a 4% lead in A Plague Tale: Requiem and Flight Simulator, a 7% lead in Watch Dogs Legion, and a 9% lead in Far Cry 6 — but all of those are only at 1080p medium. Performance is basically tied or slightly favors the RTX 4060 at 1080p ultra in those same games.

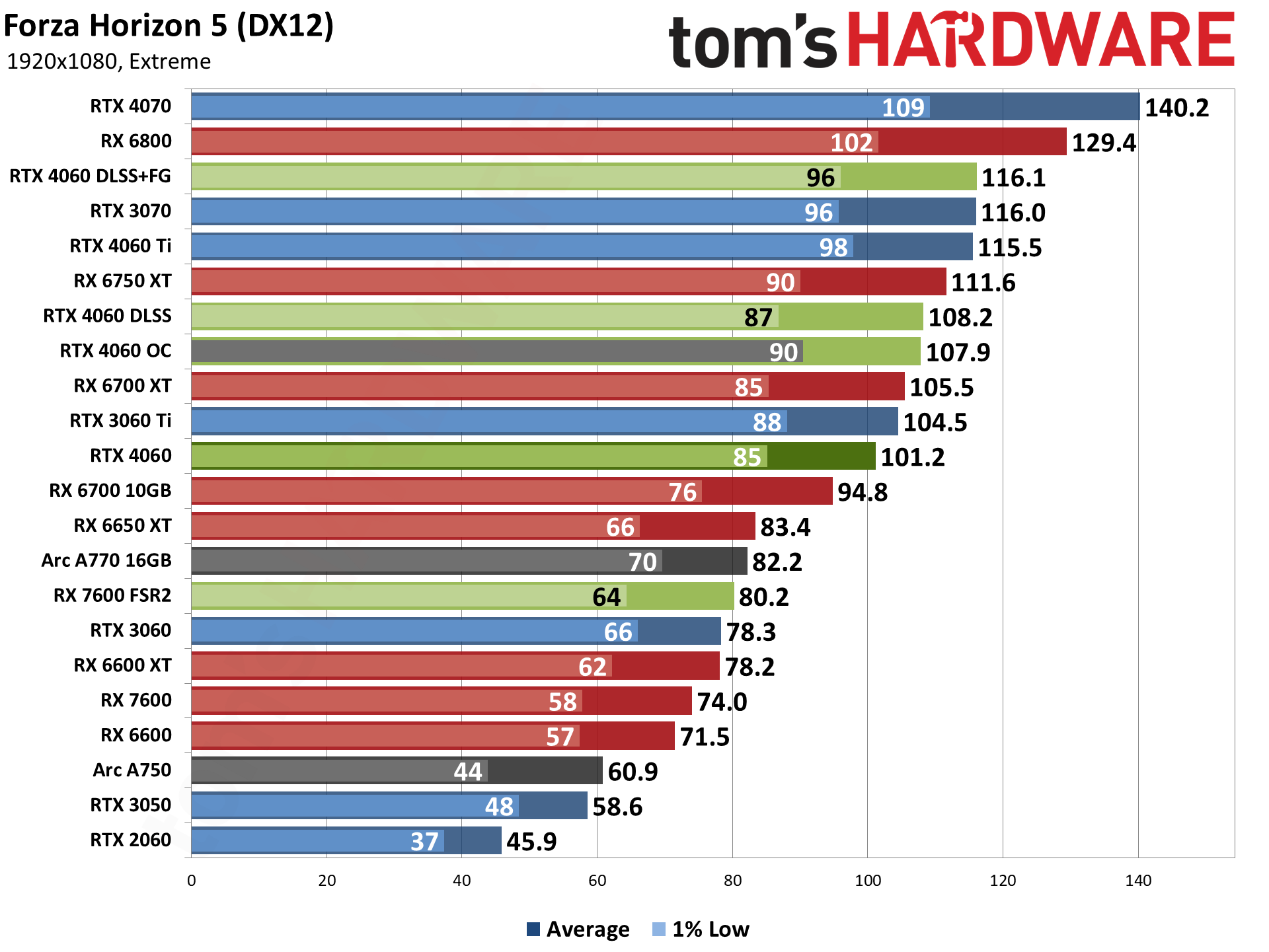

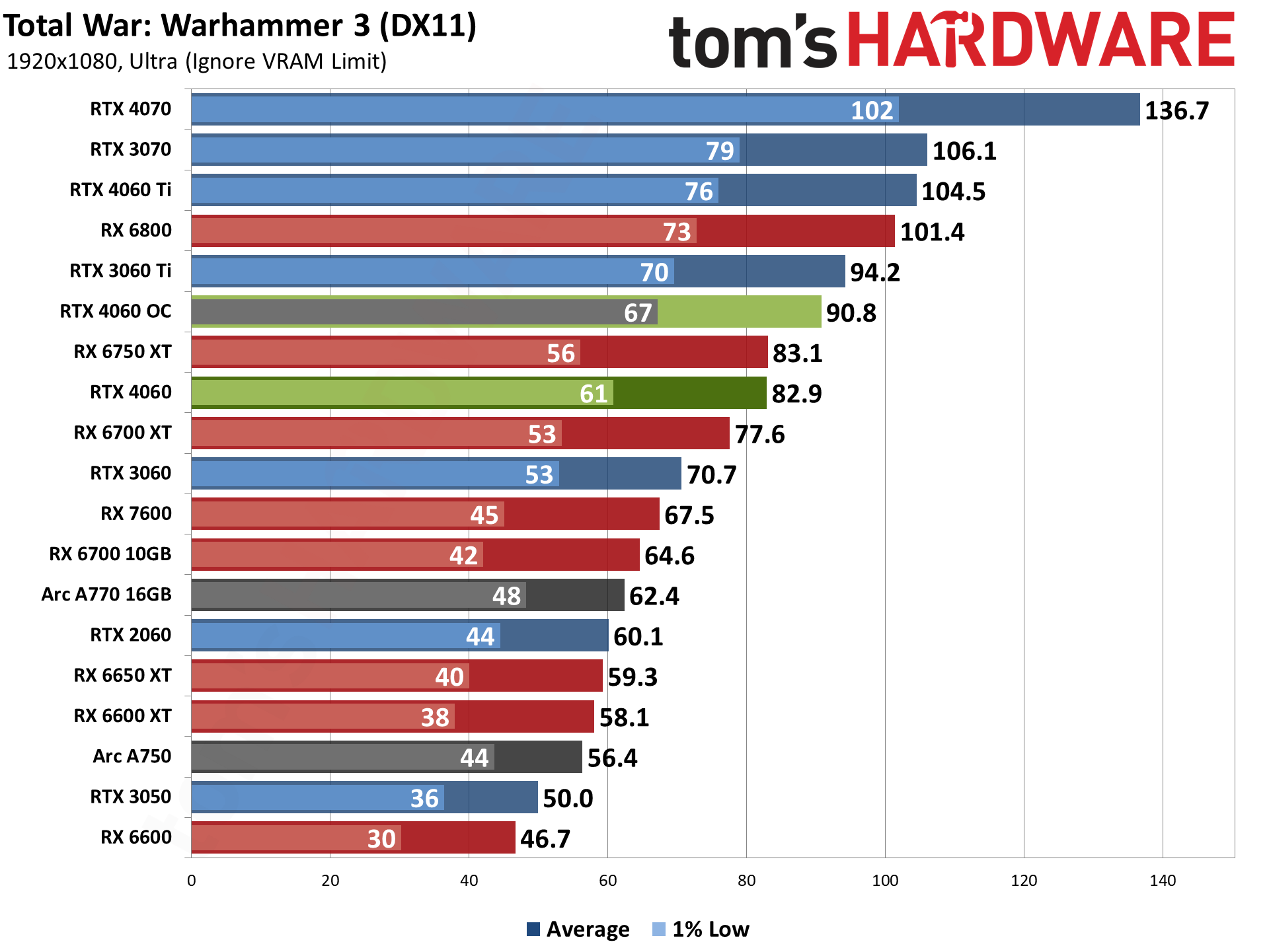

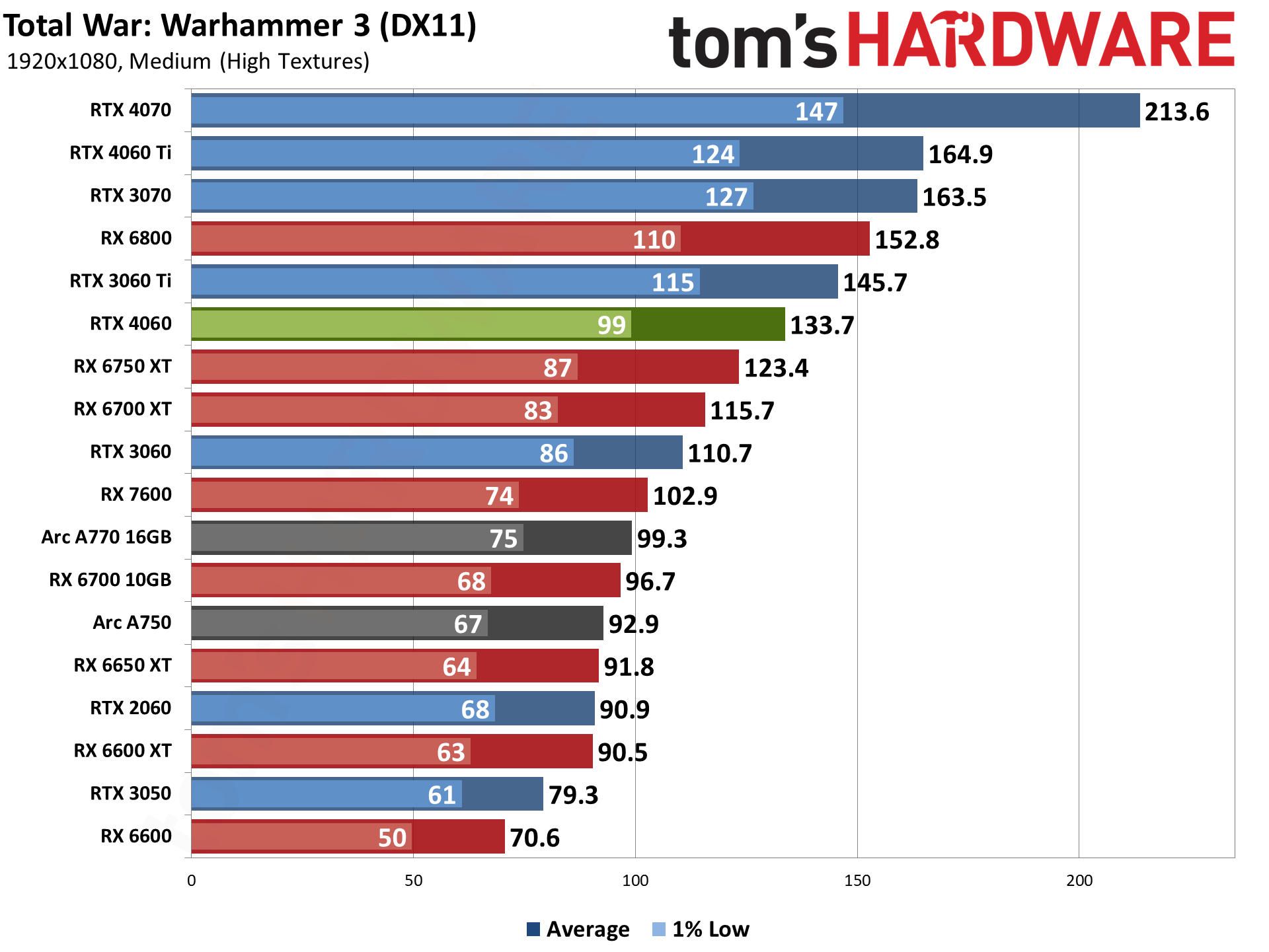

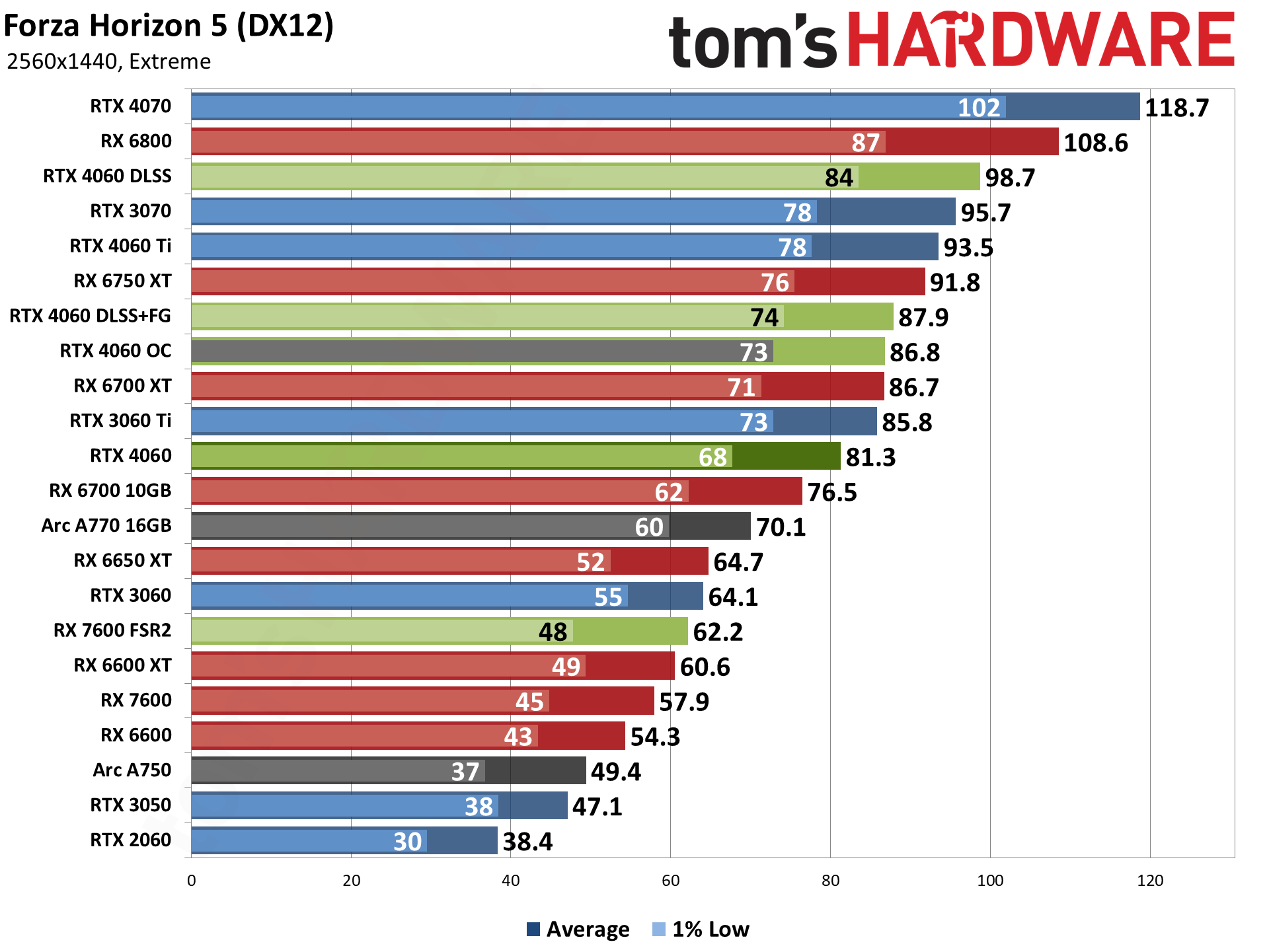

As for Nvidia, in our rasterization suite, Forza Horizon 5 and Total War: Warhammer 3 both give the RTX 4060 commanding leads. The 4060 is only 4% faster at 1080p high in Forza, but then it's 37% faster at 1080p extreme. Warhammer meanwhile gives the 4060 a 30% lead at 1080p medium and a 23% lead at 1080p ultra.

Looking at other GPUs, the RTX 4060 outperforms the RTX 3060 by 22%, which would be a noticeable upgrade but probably not worth doing on its own. It's around 50% faster than the RTX 2060, a much more worthwhile upgrade. It's also tied with the RX 6700 10GB, but 10–11 percent slower than the RX 6700 XT and RTX 3060 Ti.

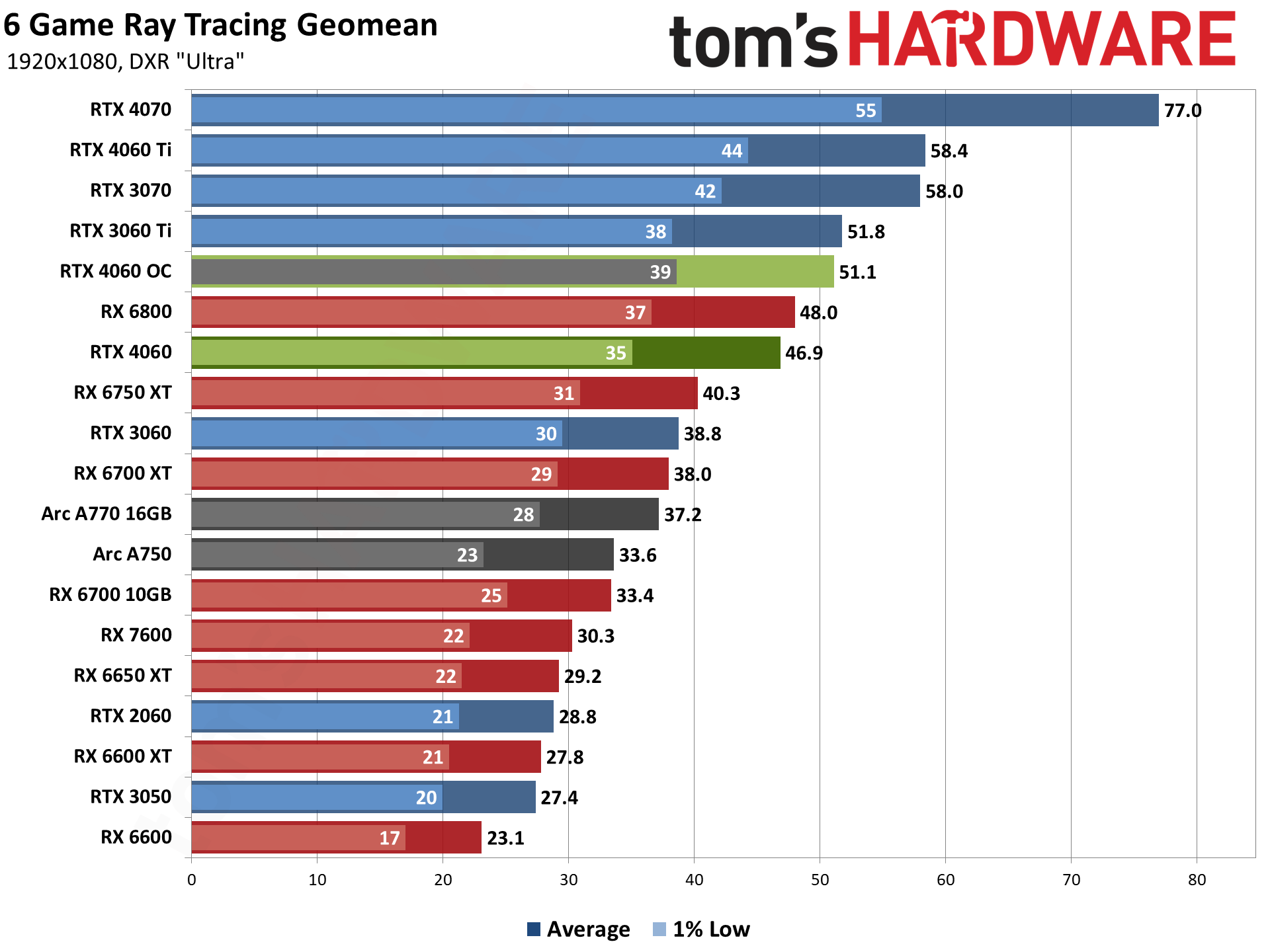

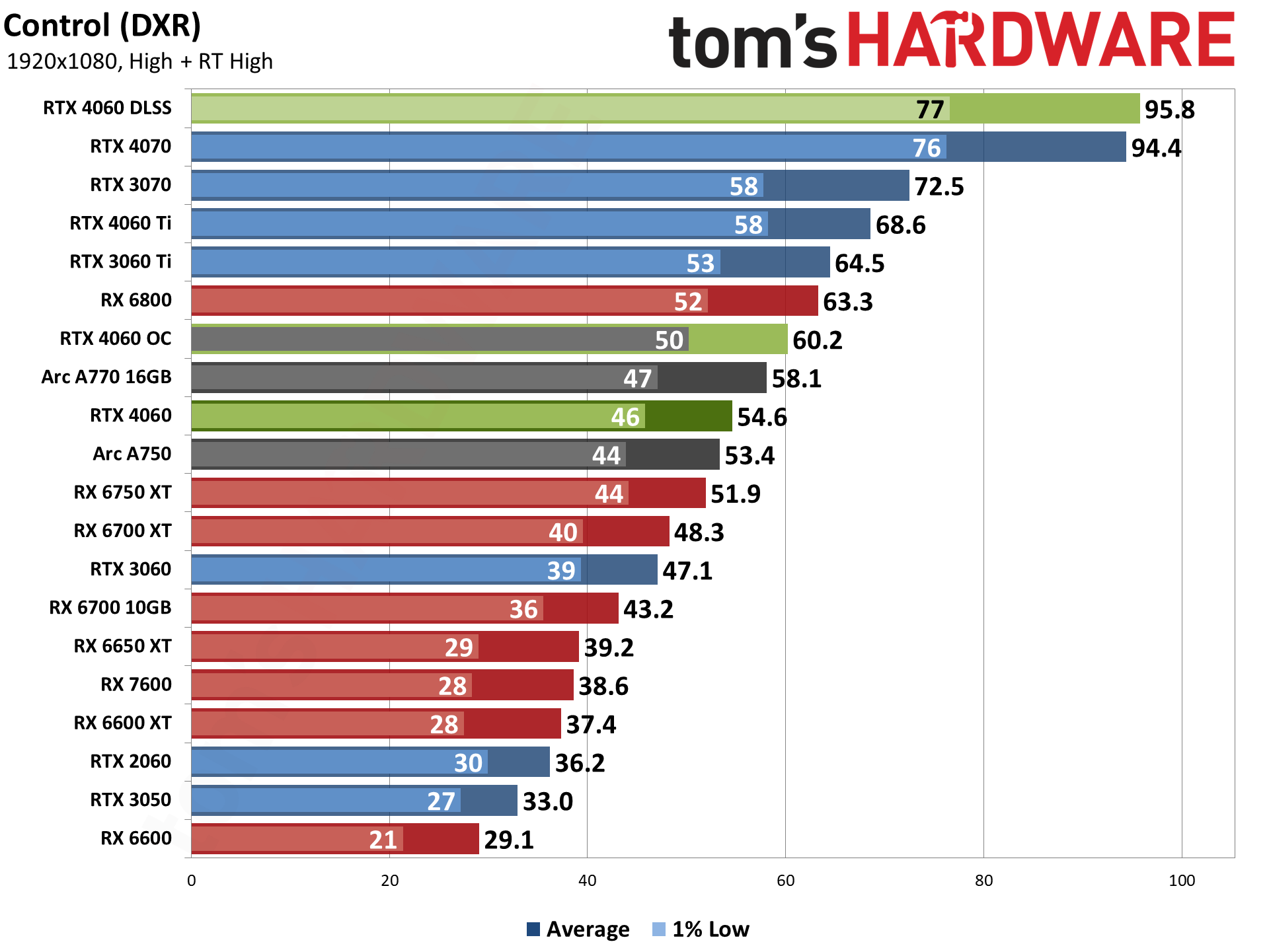

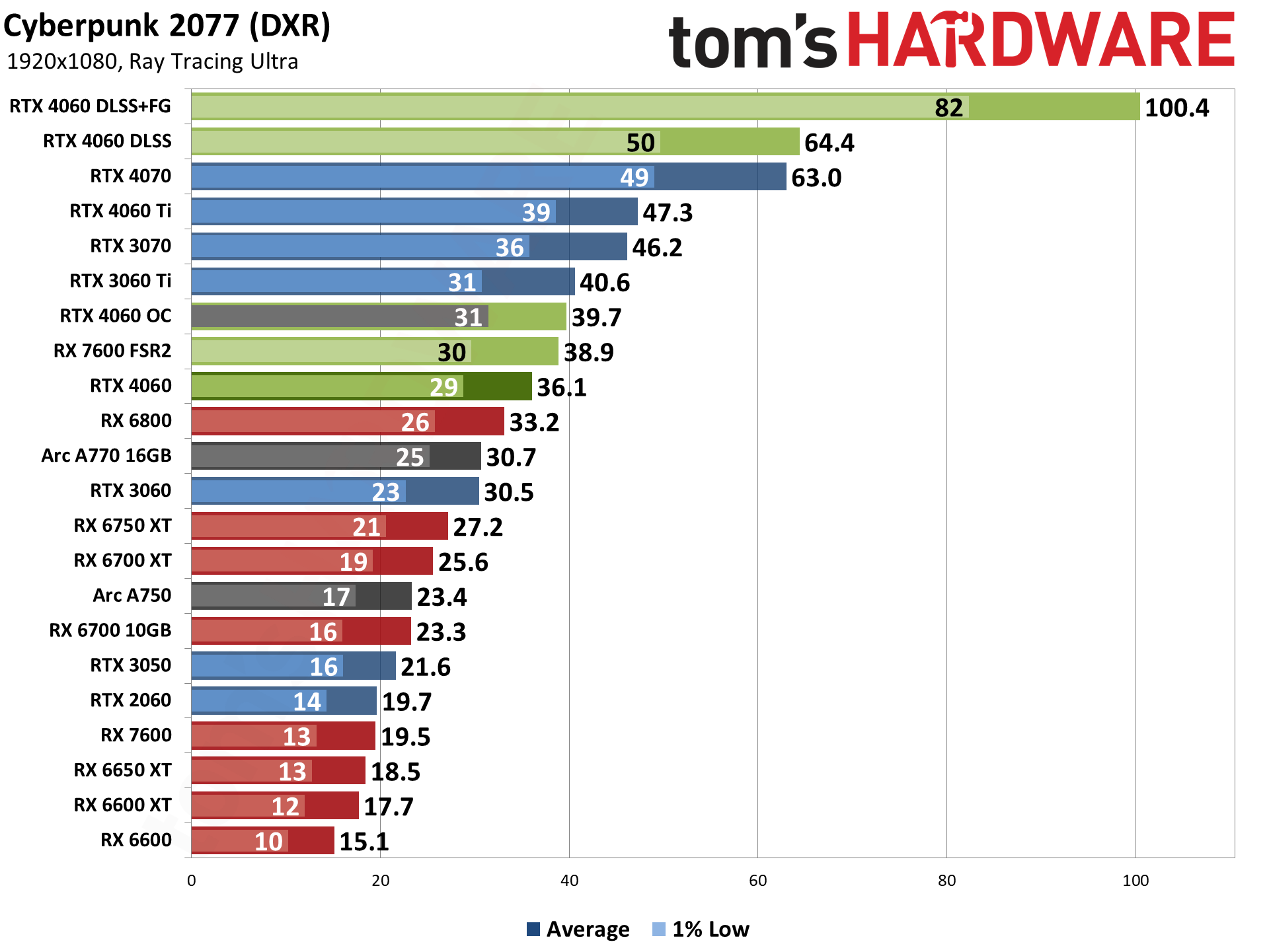

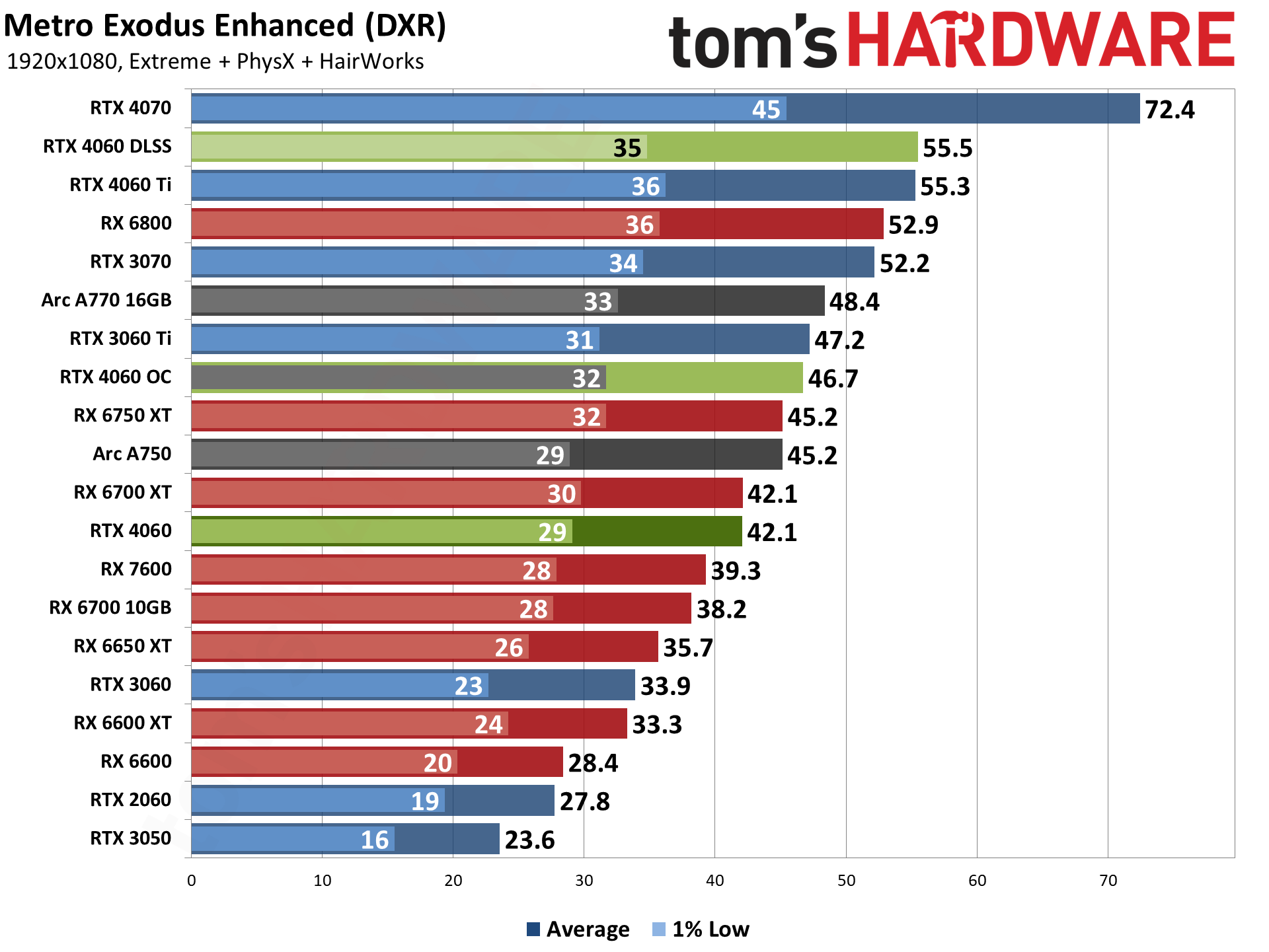

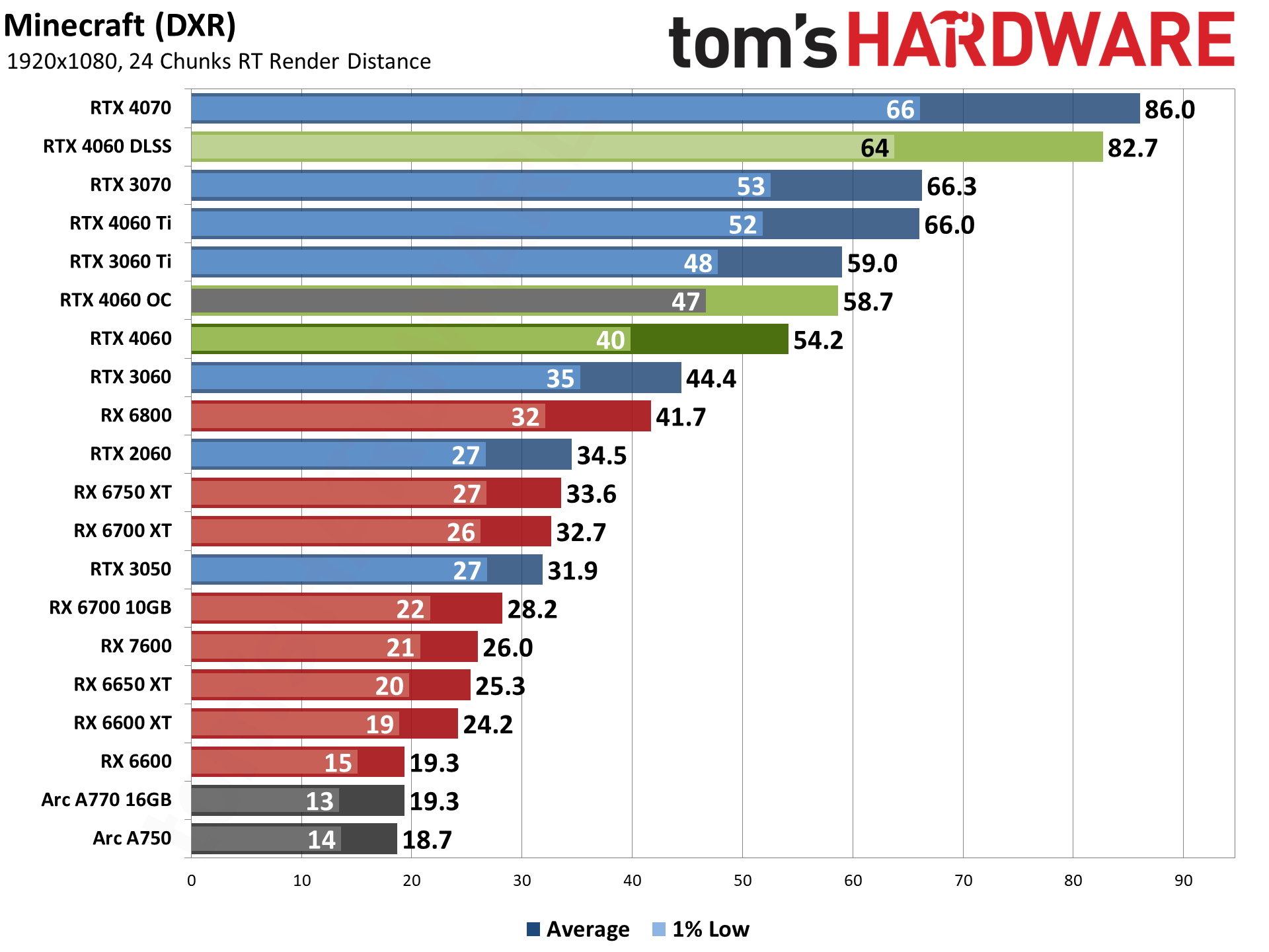

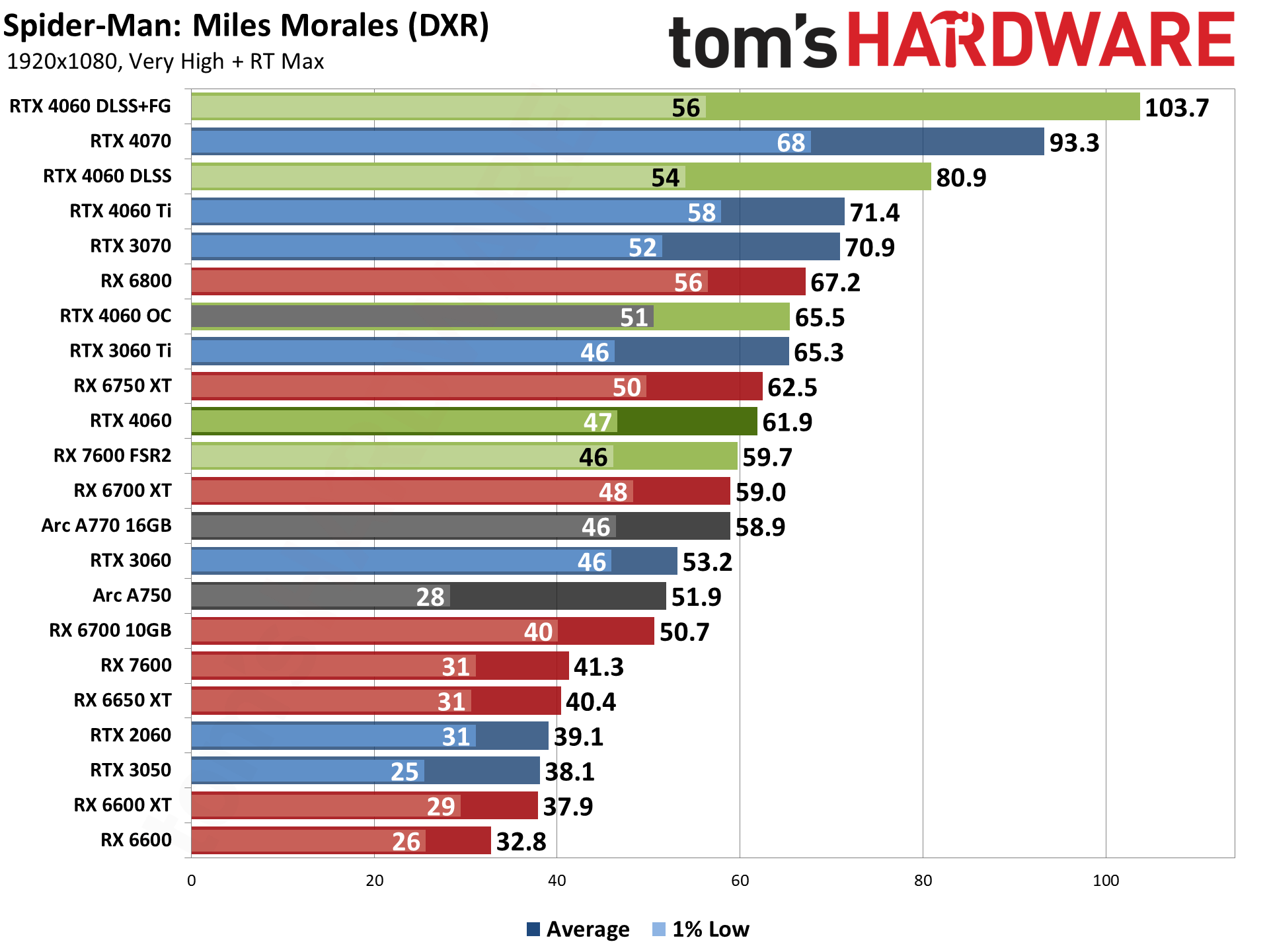

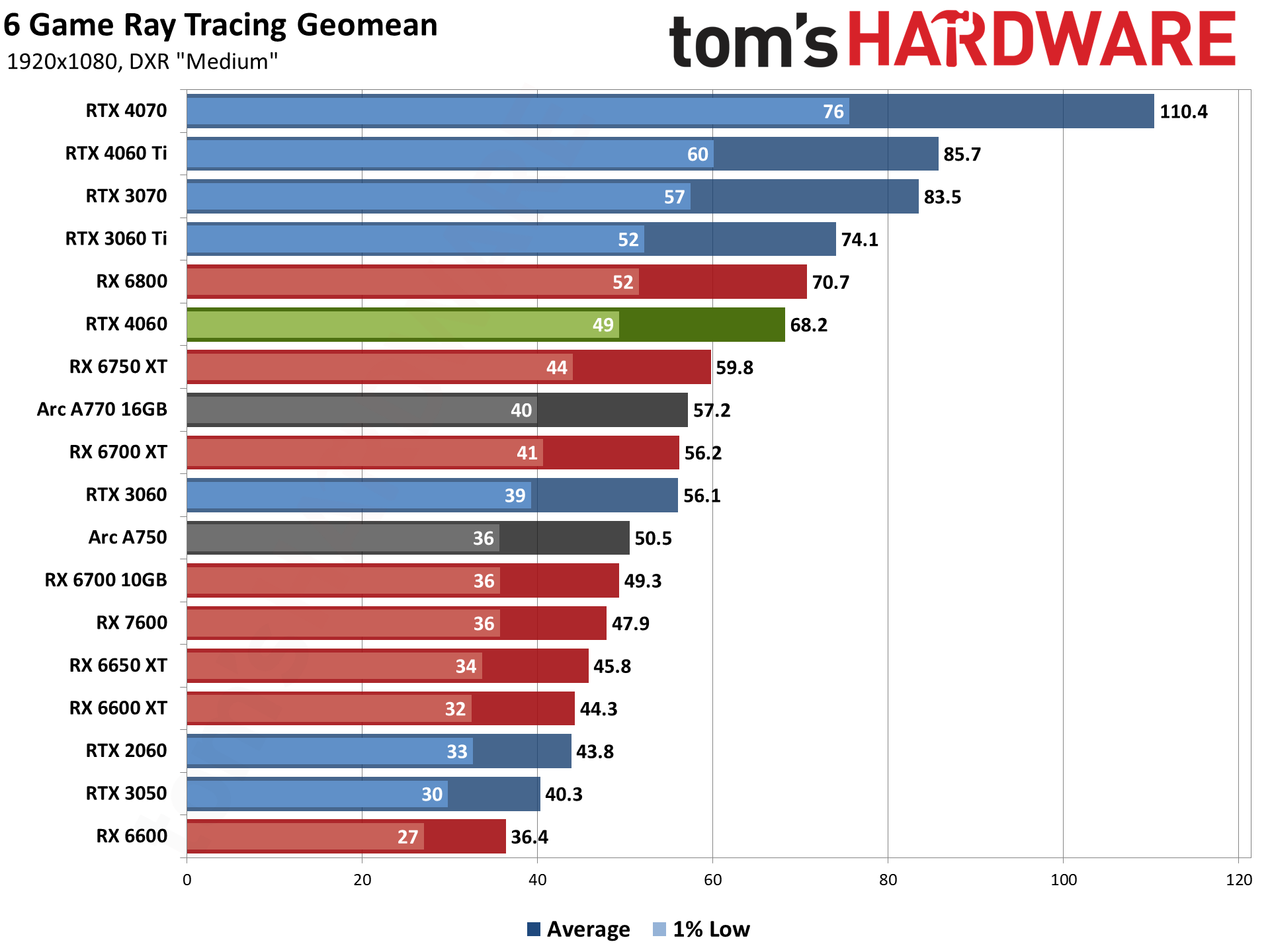

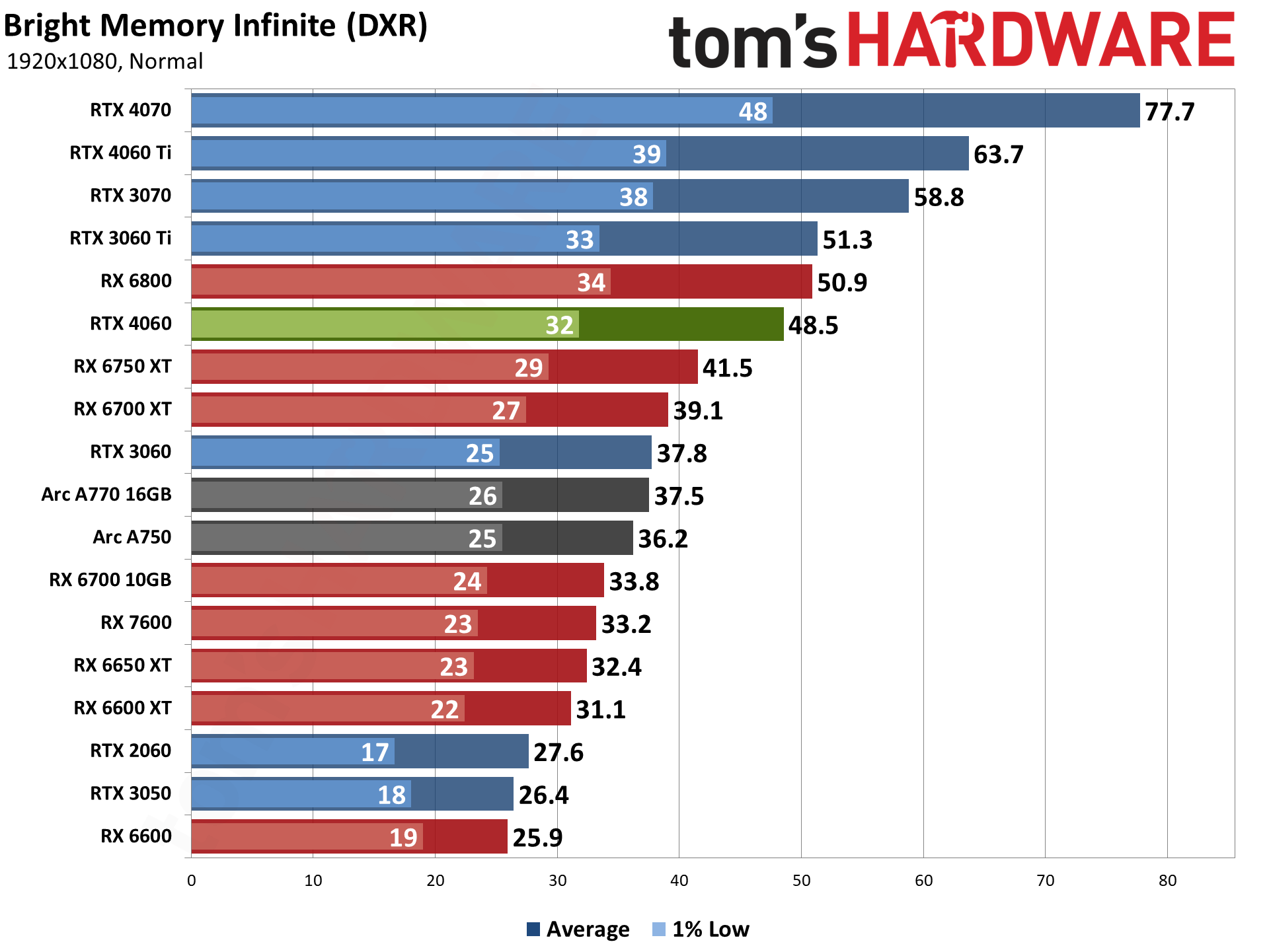

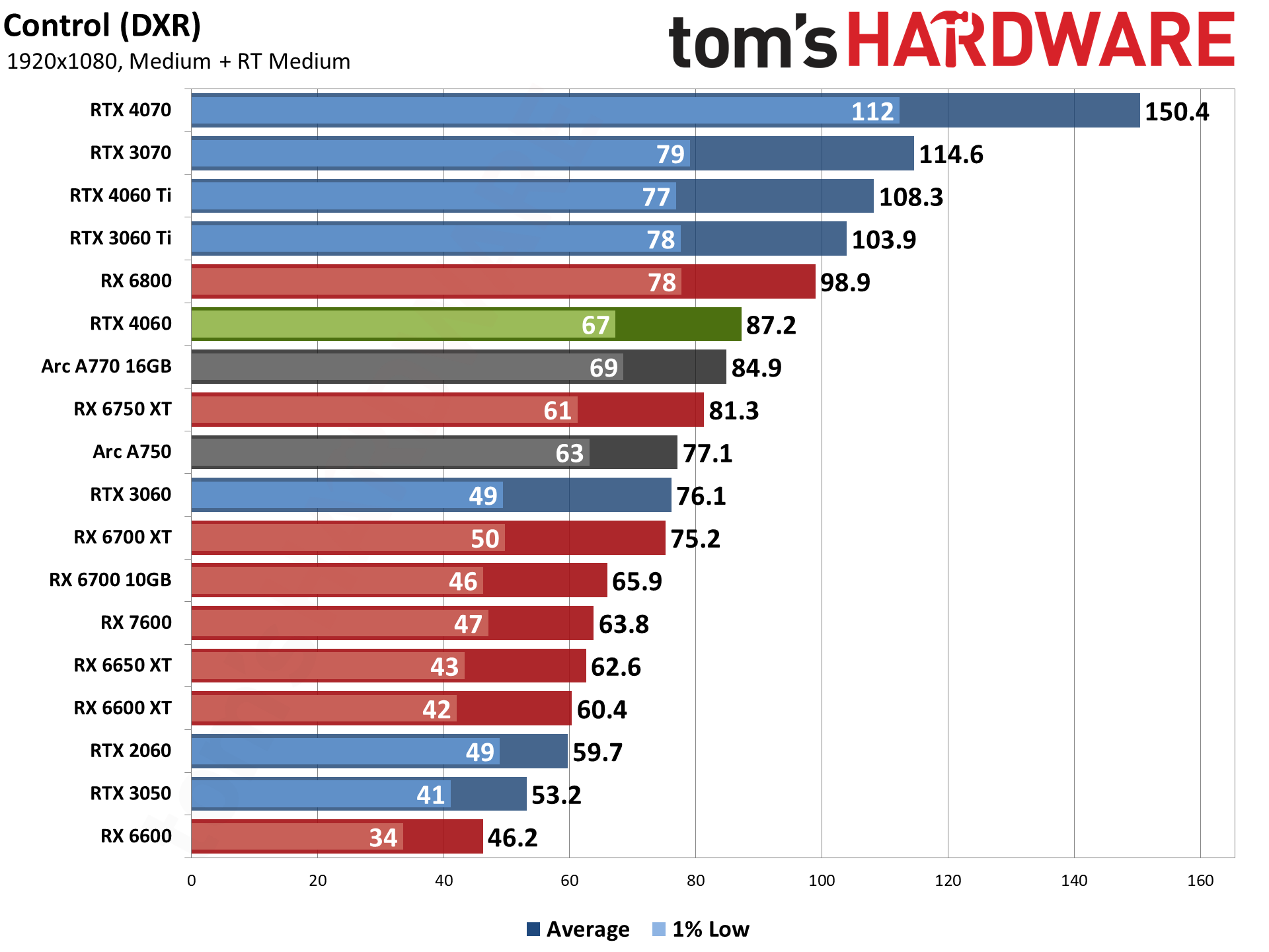

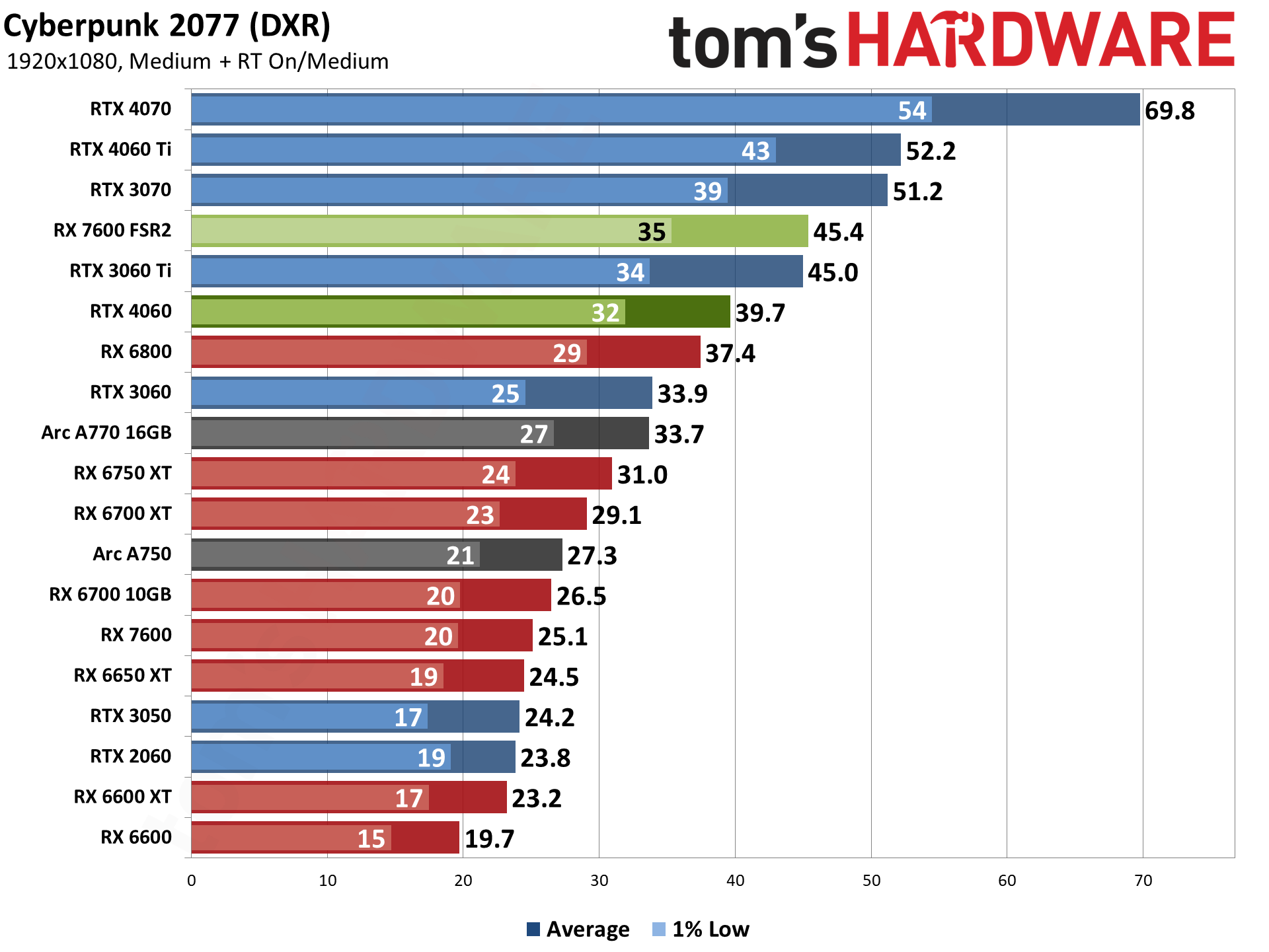

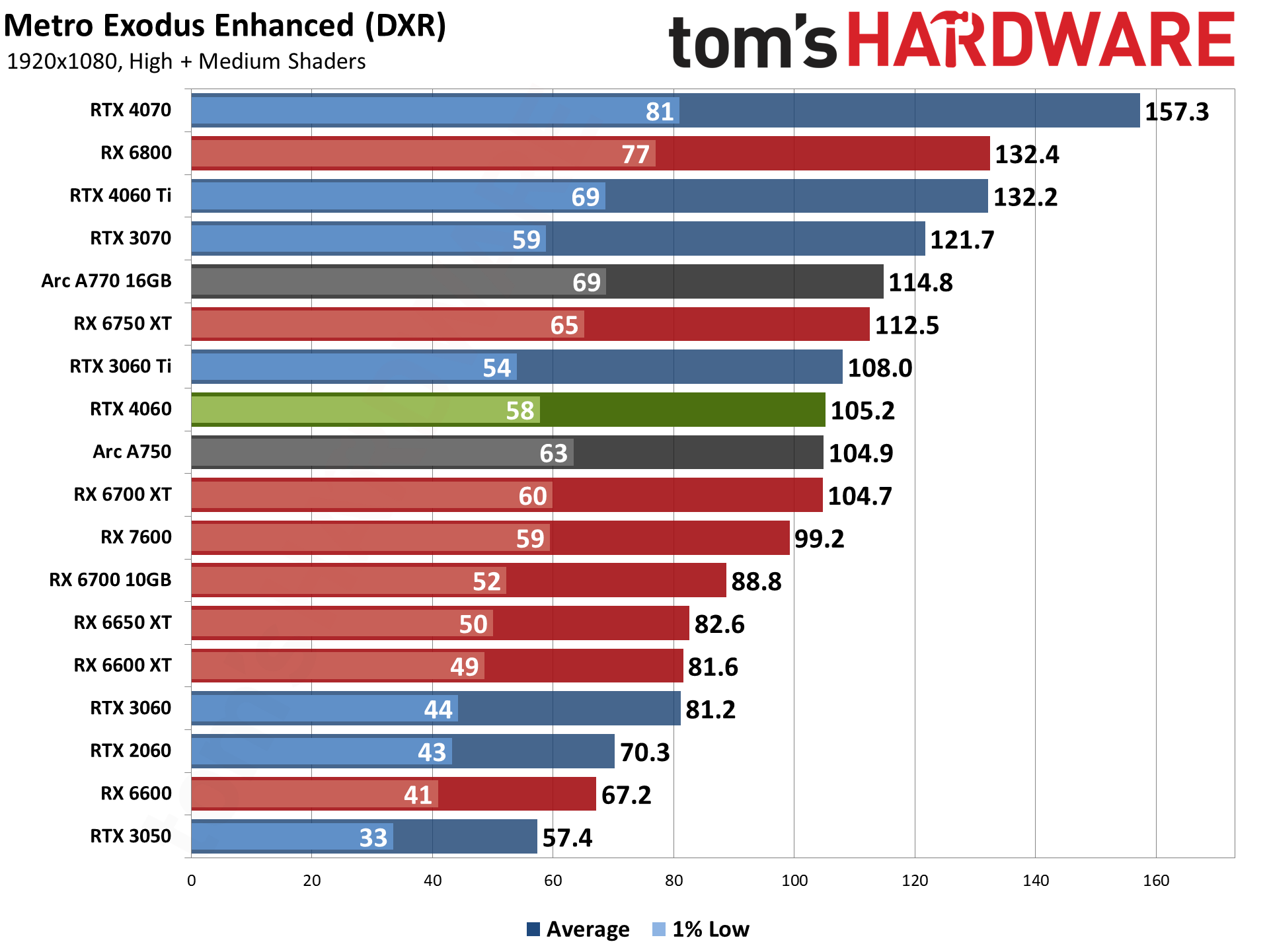

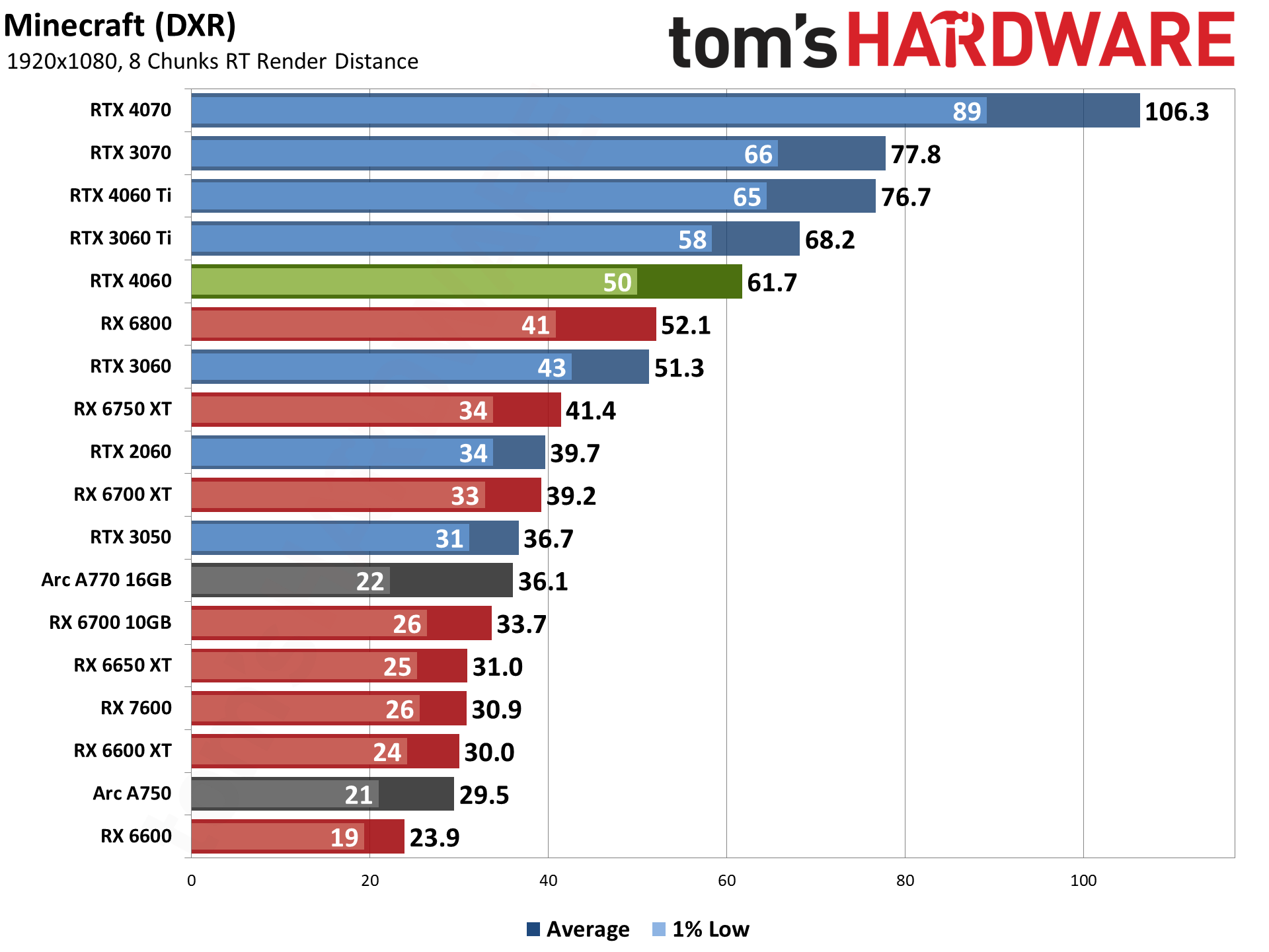

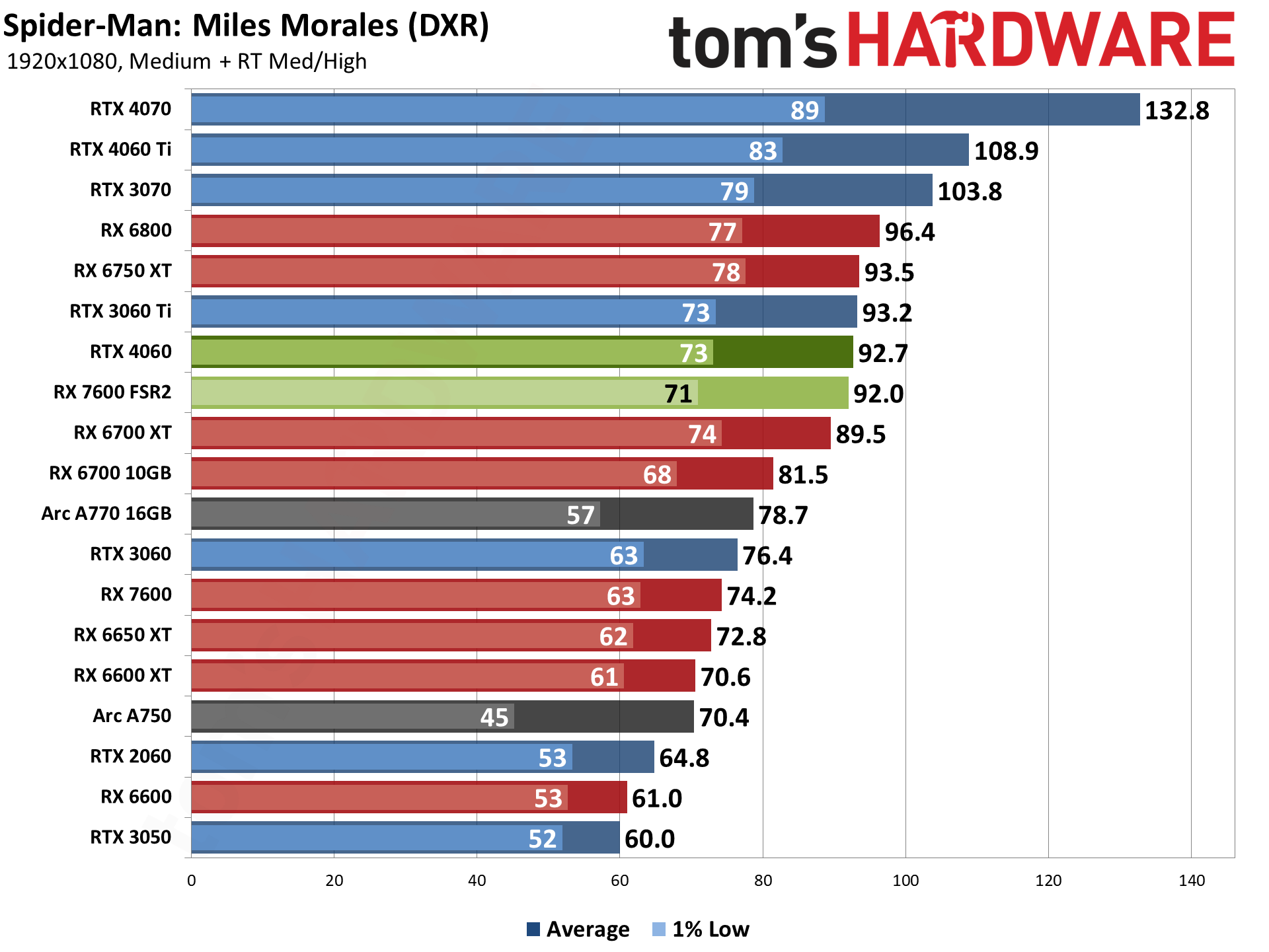

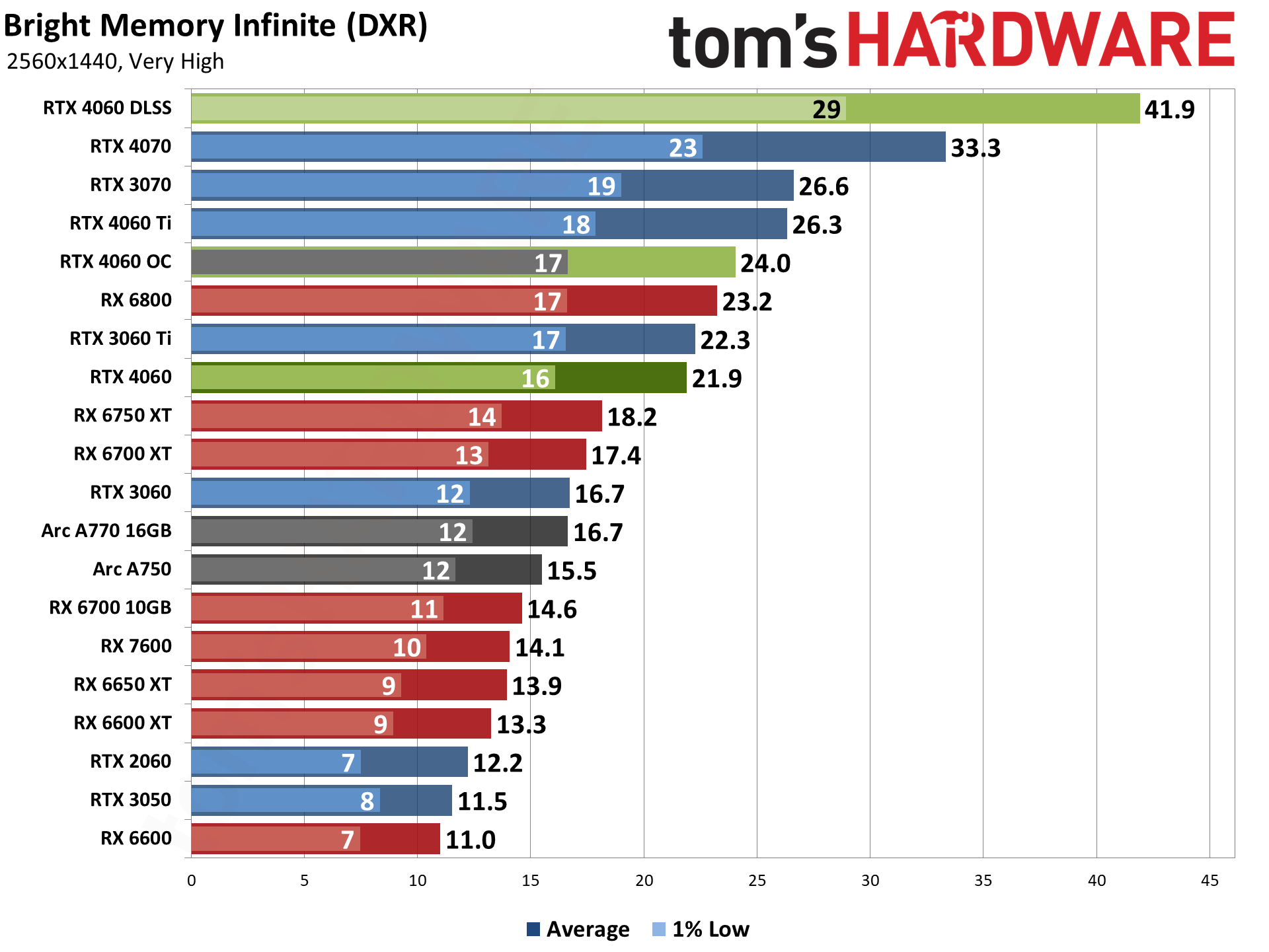

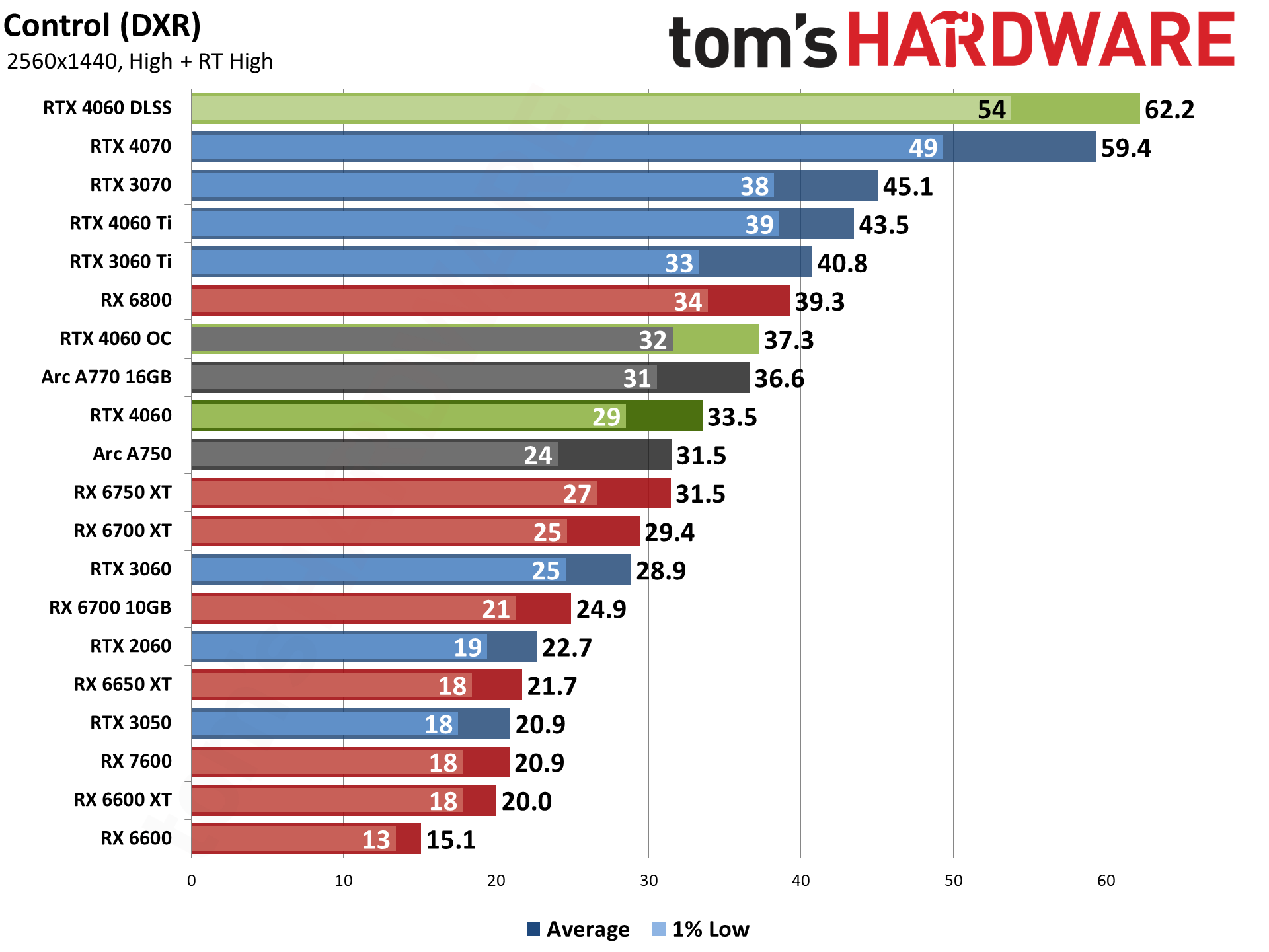

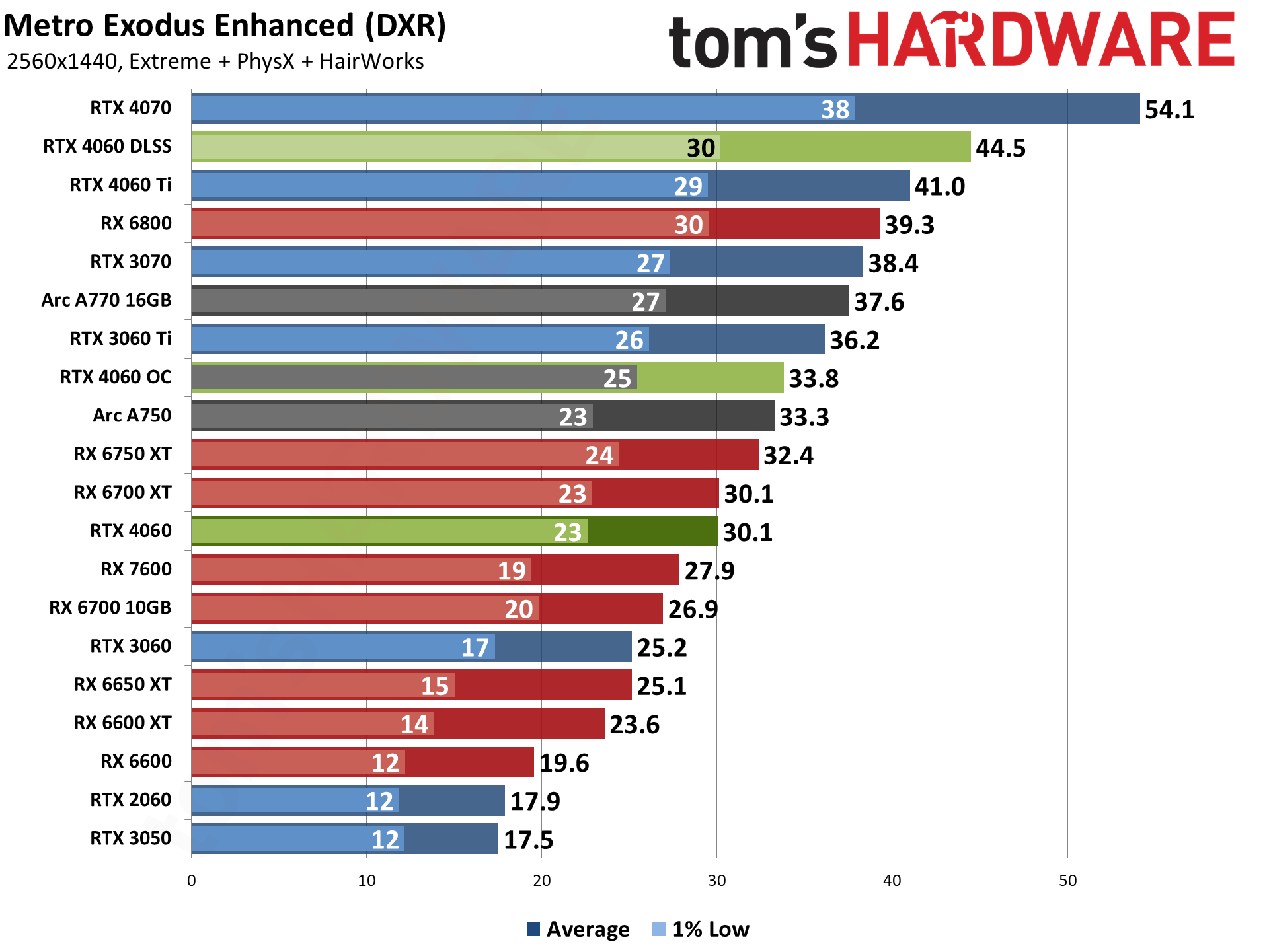

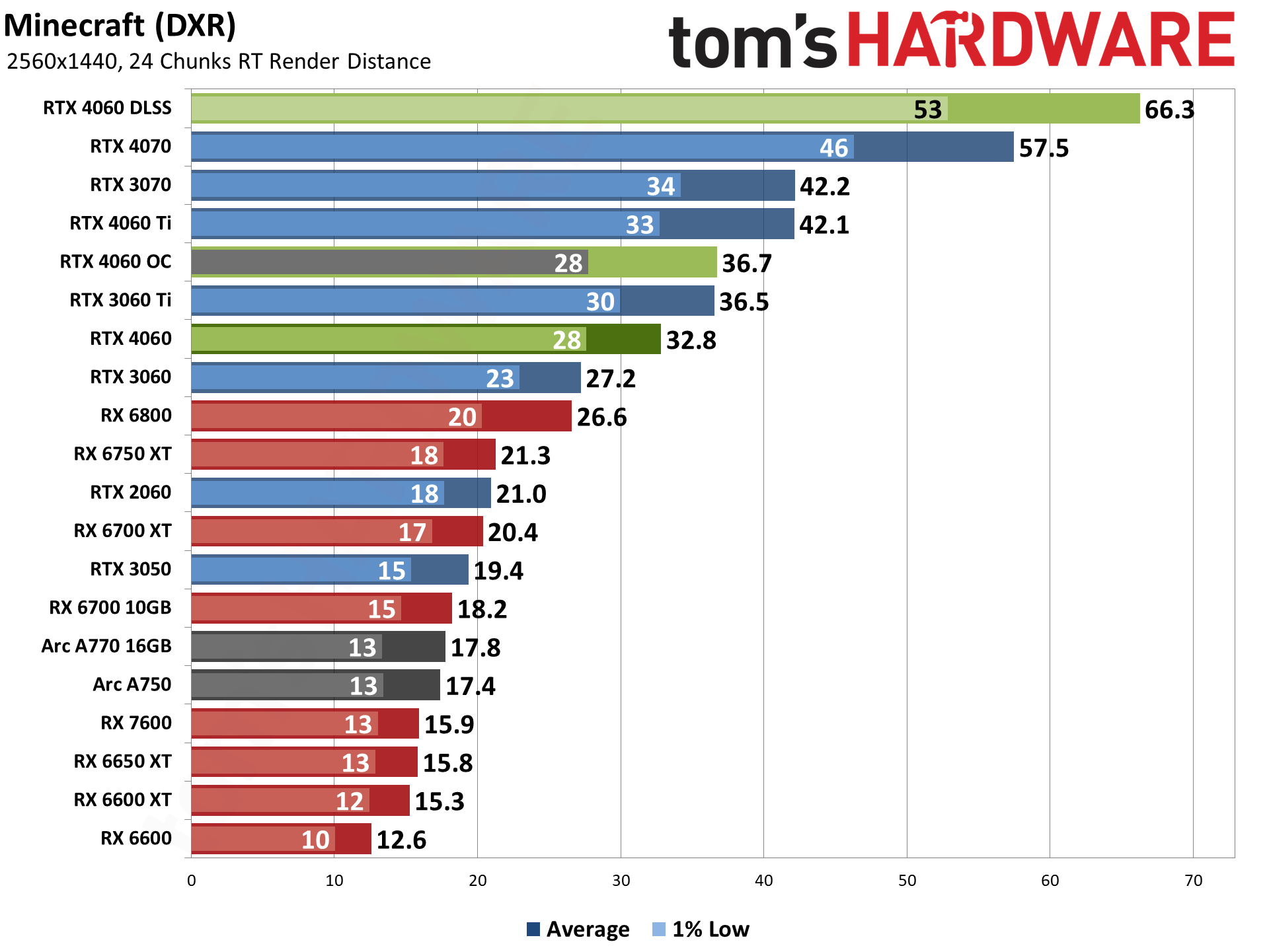

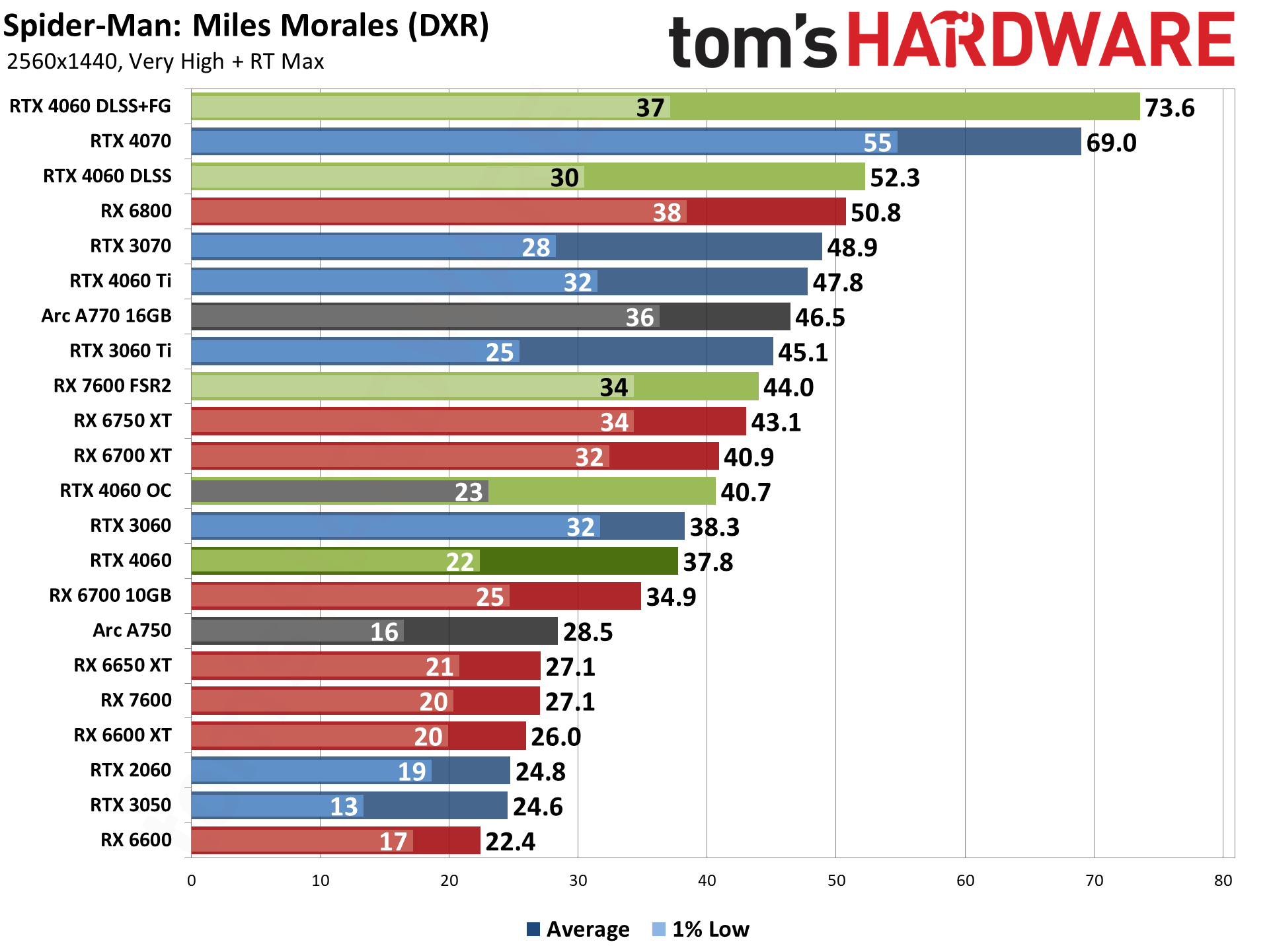

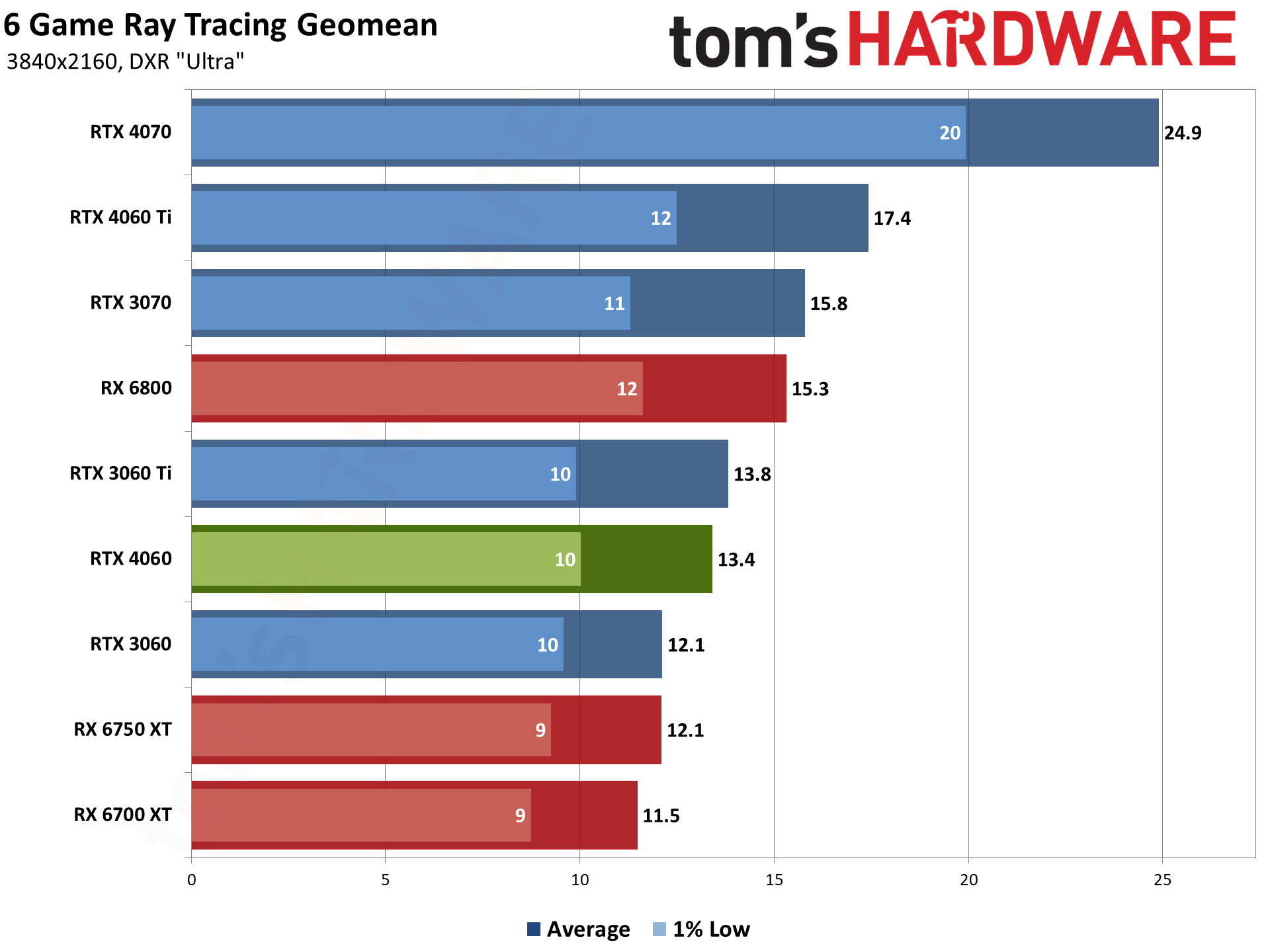

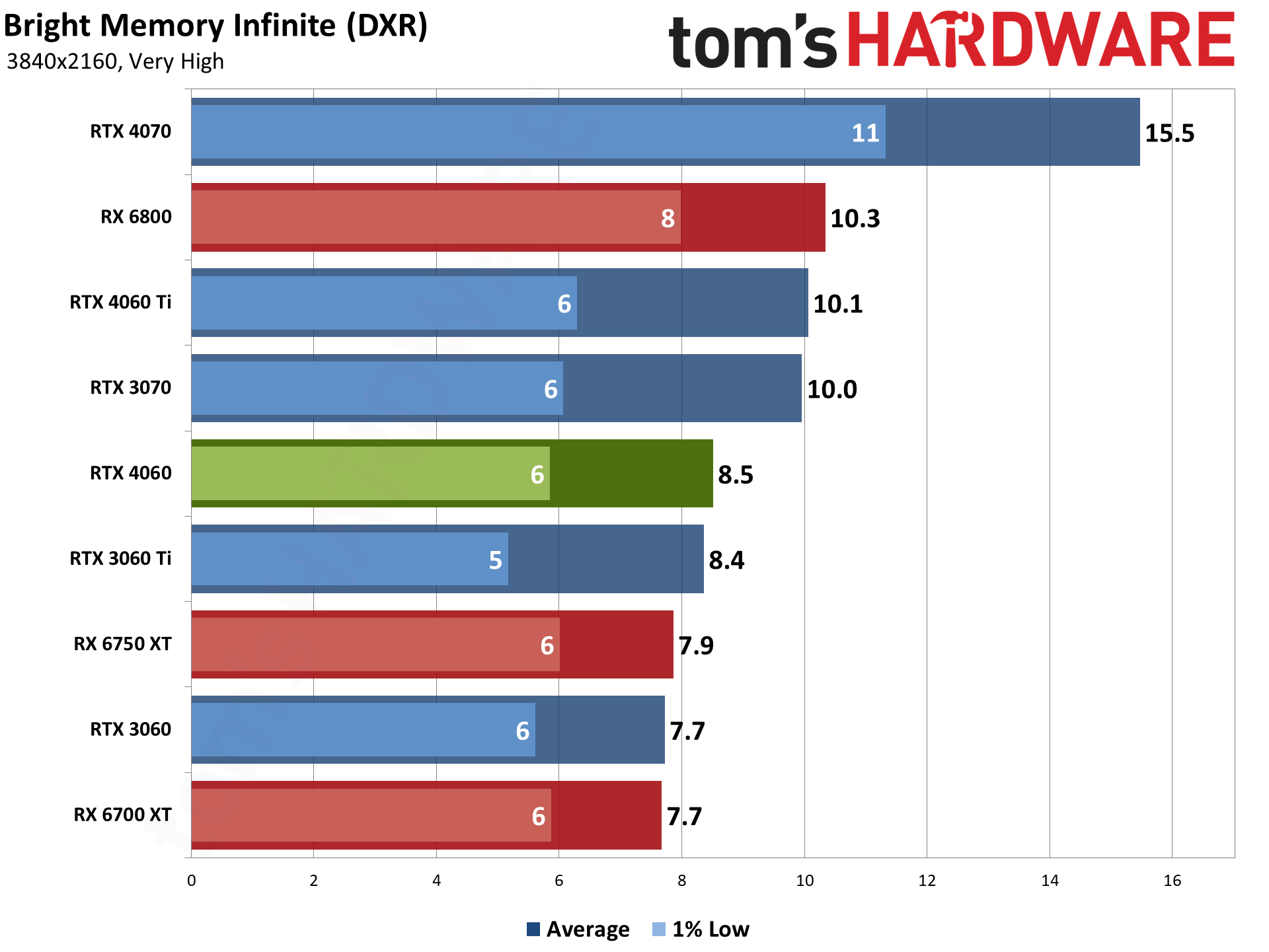

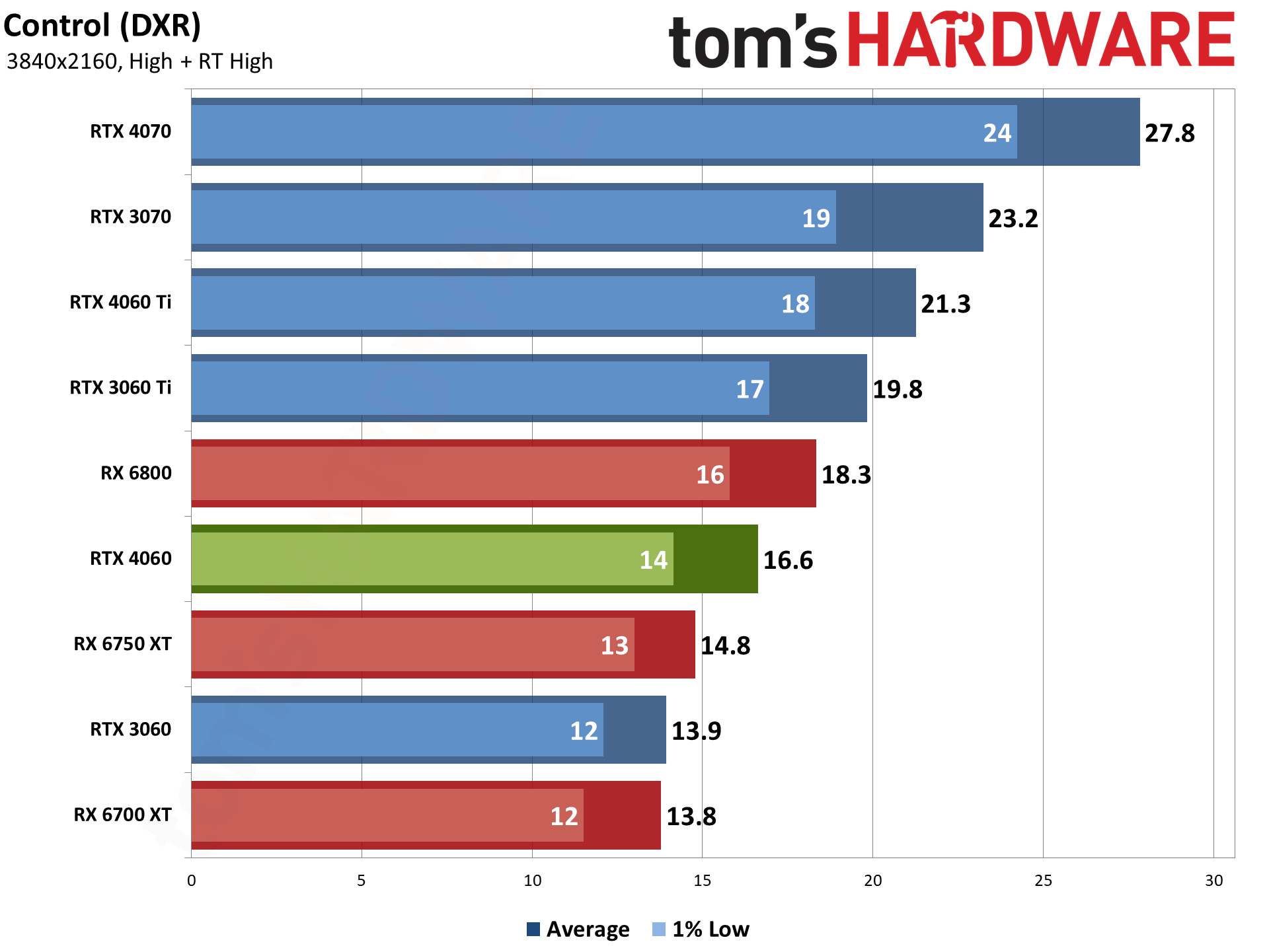

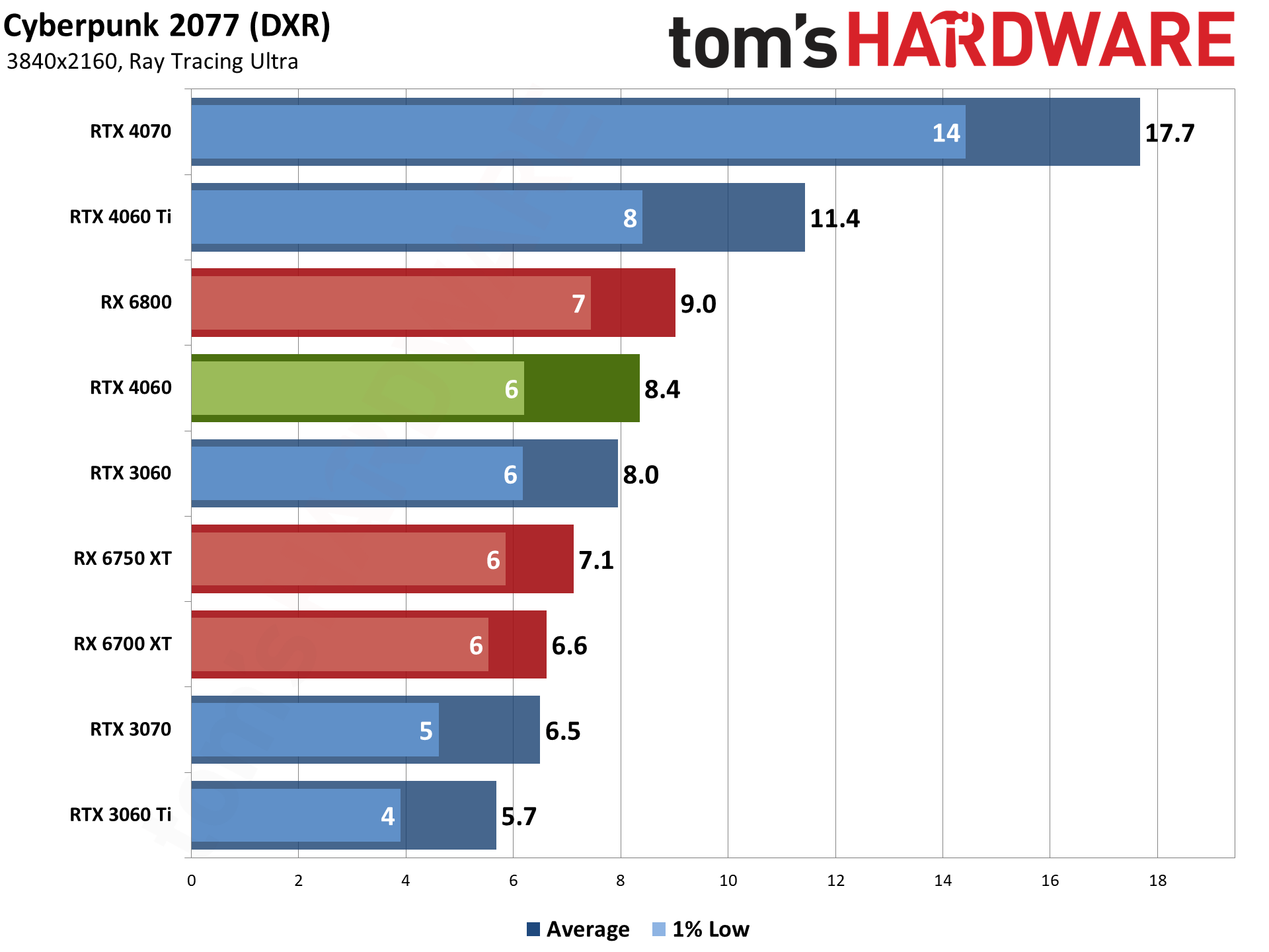

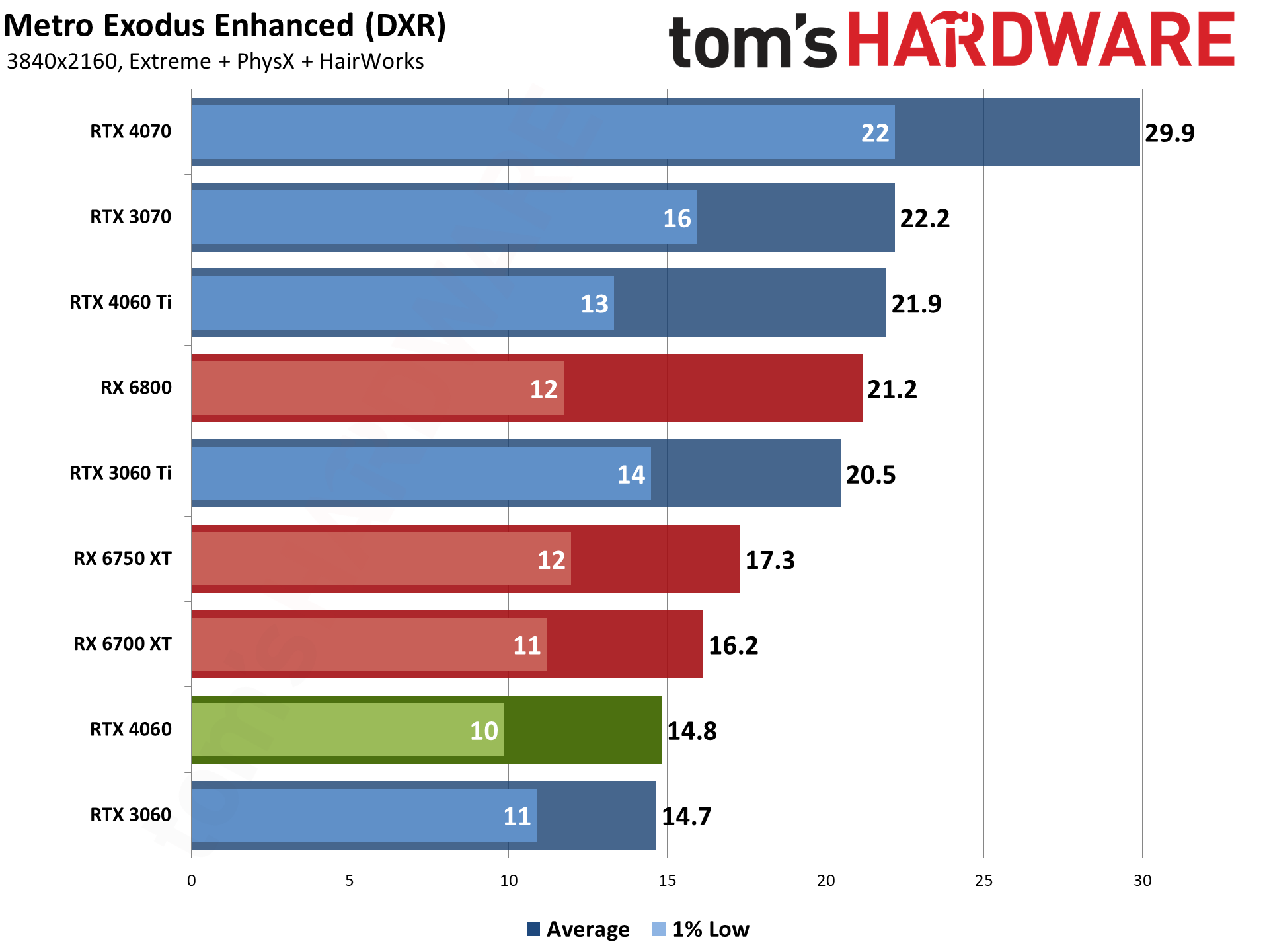

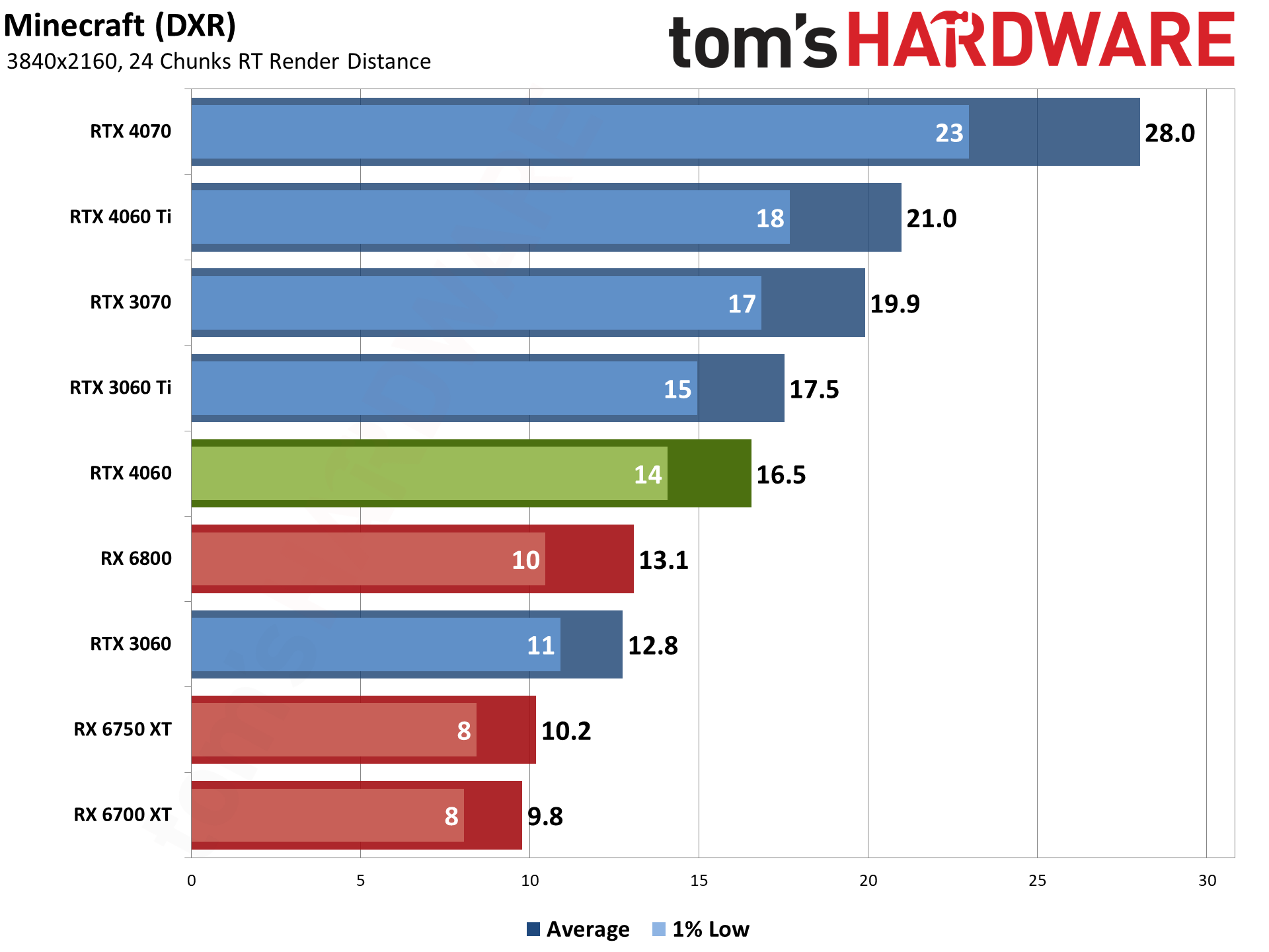

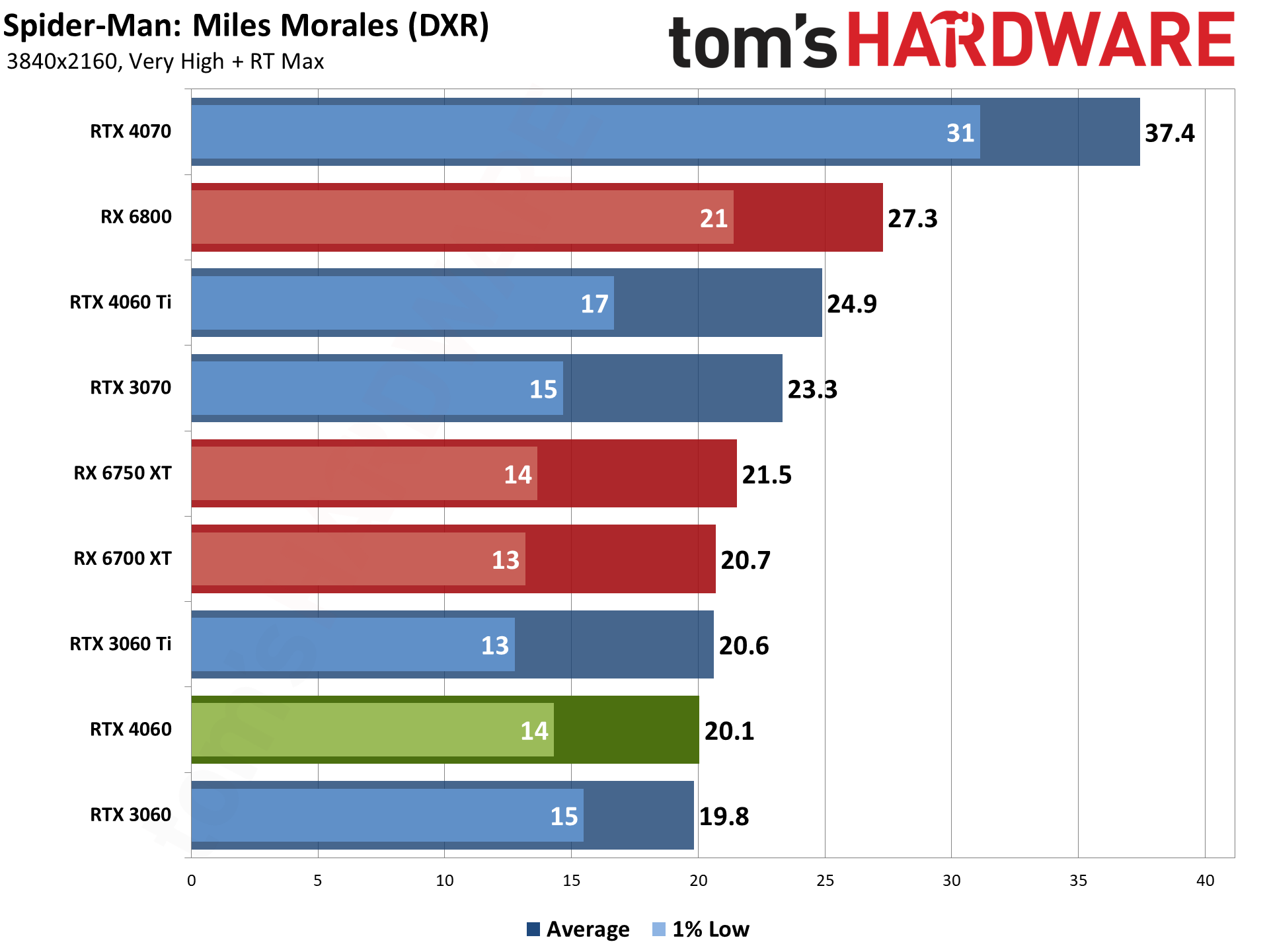

In our ray tracing (DXR) test suite, the RTX 4060 does much better against AMD. That's not too surprising, since Nvidia is now on its third generation RT hardware and has been pushing the API far more than AMD. Keep in mind that none of the games we're currently testing utilize the new Ada Lovelace architectural features like SER (Shader Execution Reordering), OMM (Opacity Micro-Maps), or DMM (Displacement Micro-Meshes).

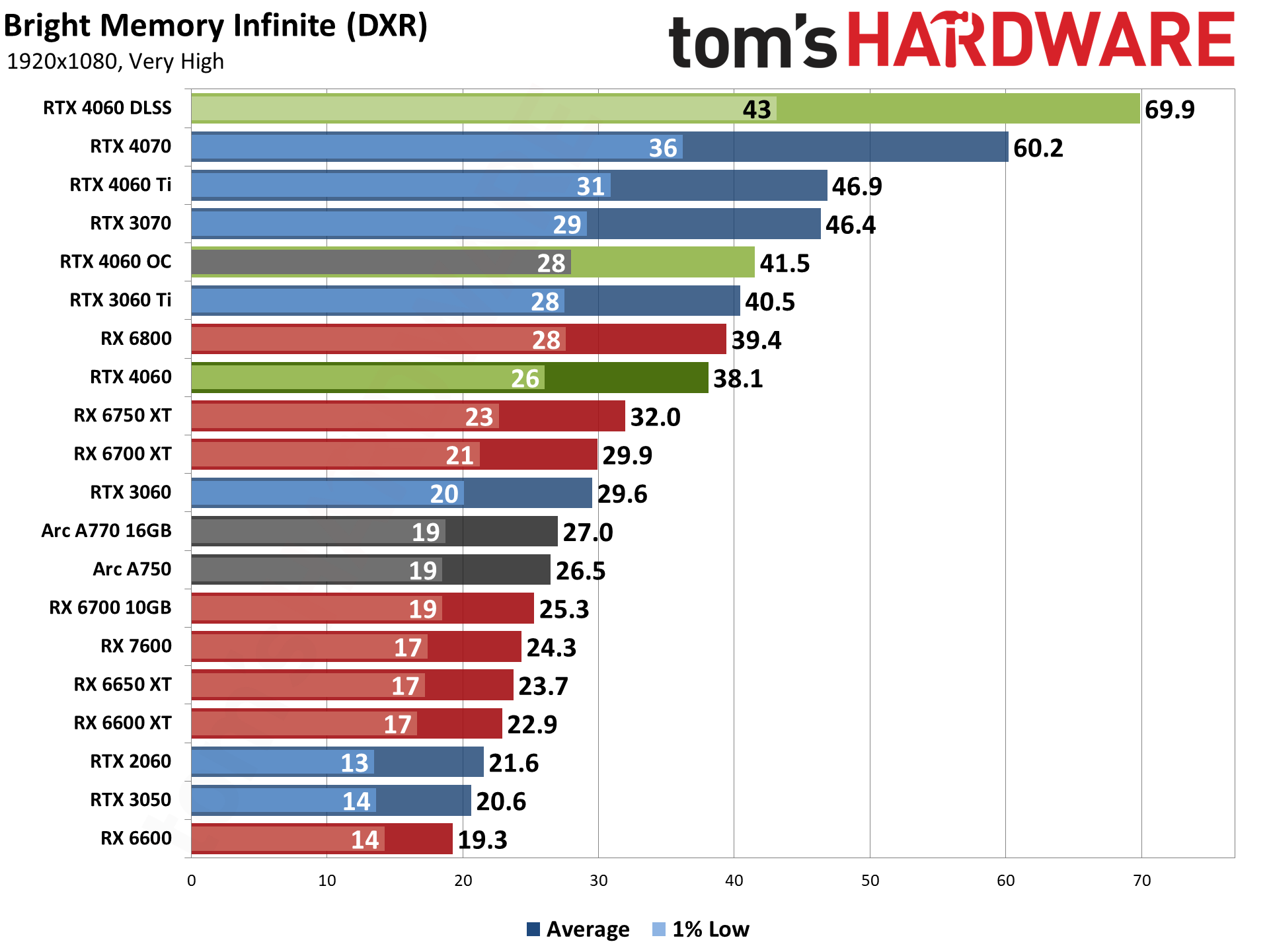

Compared with the RTX 3060, the 4060 improves overall DXR performance by 21–22 percent in our DXR test suite at 1080p medium and ultra settings. The range of improvement varies a bit, from 15% faster in Control to 30% faster in Metro Exodus Enhanced and Bright Memory Infinite Benchmark, but it's a pretty consistent gain. Similarly, it's 56% faster than the RTX 2060 at 1080p medium and 63% faster at 1080p ultra.

It's not all good news, though, as the RTX 3060 Ti still wins by about 10% overall. And let's be clear that a generational 20% improvement from Nvidia really isn't that big. It's a relatively minor step up compared to the previous generation, rather than the 50–75 percent improvement we saw in the prior generations (e.g. GTX 1060 versus GTX 960, or RTX 2060 versus GTX 1060).

Stepping up to higher resolutions can be a bit of an issue with the RTX 4060. The 8GB VRAM is certainly part of the equation, but a big part is simply the lack of raw horsepower. If you're playing games from several years back, stuff that came out around the time of the RTX 20-series launch, it does okay, but more demanding titles are another matter. The 24MB L2 cache also isn't large enough to effectively handle the various buffers and texture accesses at higher resolutions — it still helps, but hit rates aren't as high. Let's start with the 1440p results.

The RTX 4060 still beats the RTX 3060, but the margin has dropped to 18%, down from 22%. It's not a huge difference, but overall performance is also hit and miss at 1440p, as we'll see in the individual game results. Most of the games are playable, meaning they run at 30 fps or more, but the overall 45 fps average means there are games in our test suite that will drop well below that mark.

For 1440p, you'd be better off with high settings, and enabling DLSS Quality upscaling where available. DLSS incidentally got every game in our test suite over the 30 fps mark, even at ultra settings. Frame Generation (DLSS 3) isn't as widely supported, but it typically improved frames to screen by another 40–50 percent. Except in Forza Horizon 5 where the latest patch apparently broke Frame Generation. Oops.

The RTX 4060 also leads the RX 7600, this time by 27% overall. The margins in DXR games are much bigger than at 1080p, but Borderlands 3 is the only game where AMD's latest mainstream card can clearly beat the RTX 4060. And if you have an RTX 2060, the gains are also quite good with nearly 60% better 1440p performance overall.

Our rasterization suite shows that most of these games are still very playable, with many breaking 60 fps. A Plague Tale: Requiem is far and away the worst performing of the group, averaging 38 fps, but DLSS and Frame Generation can push that up to 76 fps.

The lead over AMD's RX 7600 is only 8%, while the previous generation RX 6700 XT leads the 4060 by 15%; only Total War: Warhammer 3 gives the 4060 a slight lead over AMD's similarly priced previous generation GPU. If you're primarily interested in rasterization performance, the RX 6700 XT is still an easy pick over the newcomer, at least if you're only looking at pure performance and not accounting for power use.

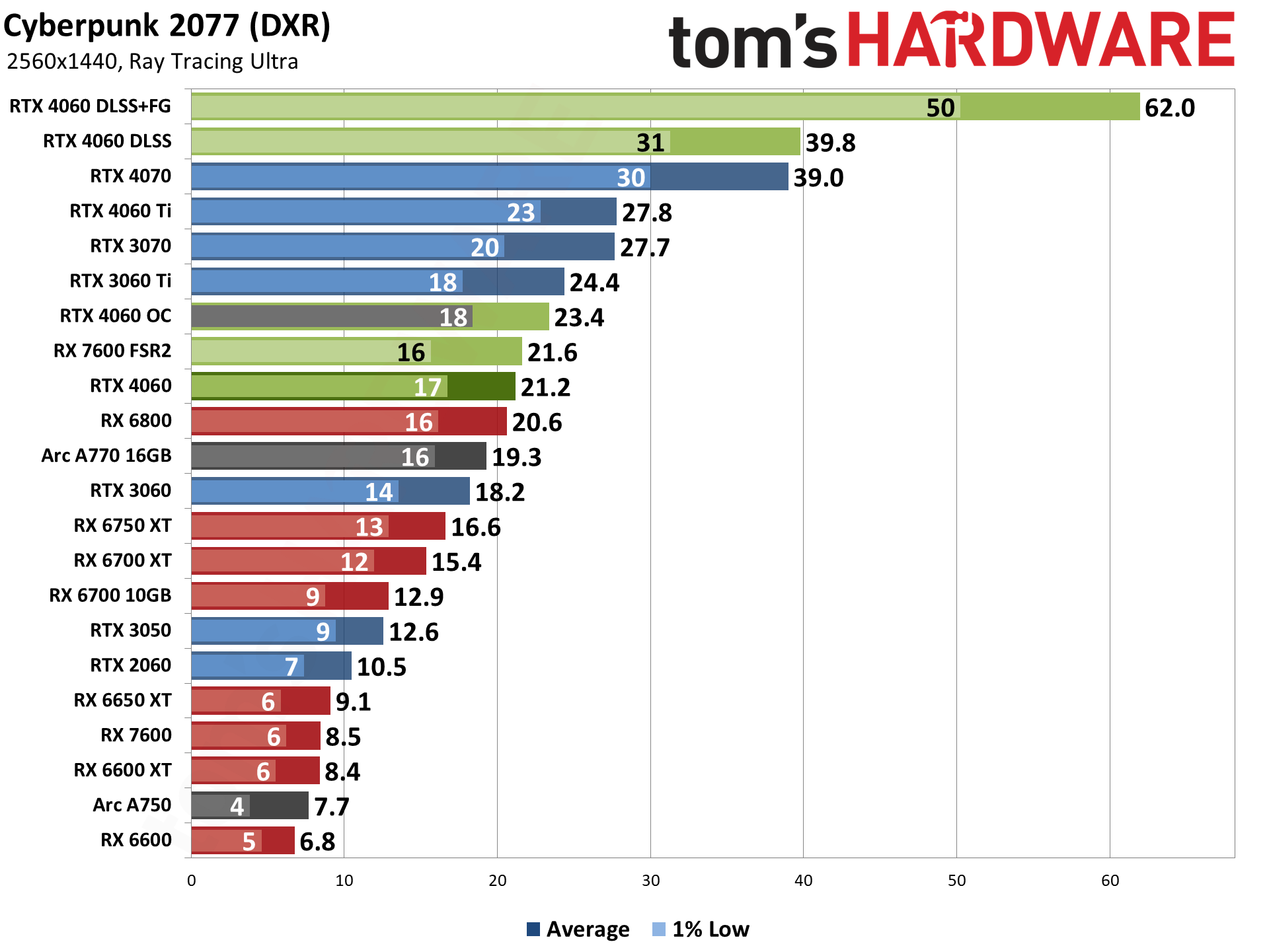

Ray tracing at 1440p without upscaling pushes the RTX 4060 hard. Cyberpunk 2077 and the Bright Memory Infinite Benchmark barely clear 20 fps, while the other games are all in the low to mid 30s. But all six of these games support DLSS upscaling, which can get you 40 fps or more using Quality mode. DLSS 3 Frame Generation can even break the 60 fps mark in Cyberpunk 2077 and Spider-Man: Miles Morales.

Whether or not you think Frame Generation and DLSS upscaling are useful is another matter. I personally don't have any real issues with DLSS upscaling — in some cases, the AI upscaling and anti-aliasing can actually look better than native rendering, at least in games where the TAA anti-aliasing tends to be overly blurry and aggressive. Frame Generation is a different matter.

It's fine, but as we've noted in the past, the relatively large 40–50 percent performance gains (compared to just upscaling) don't really represent the real-world feel of the games. Cyberpunk 2077 might send 62 frames to the monitor every second, but half of those are "generated," which means the base input rate is only equal to 31 fps. In other words, the game will feel like it's running at half the Frame Gen rate, and if that's 30 fps or lower, it can feel very sluggish.
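To put numbers on that, here's a minimal sketch in Python. It assumes Frame Generation inserts one generated frame for every rendered frame (the "half of those are generated" case described above). The function name and the `generated_fraction` parameter are our own illustration, not anything from Nvidia's SDK.

```python
def base_input_rate(displayed_fps: float, generated_fraction: float = 0.5) -> float:
    """Return the rate of frames the game actually renders (and samples input for).

    generated_fraction is the share of displayed frames that were generated
    rather than rendered. 0.5 is an assumption matching the one-generated-
    frame-per-rendered-frame case discussed in the text.
    """
    if not 0.0 <= generated_fraction < 1.0:
        raise ValueError("generated_fraction must be in [0, 1)")
    return displayed_fps * (1.0 - generated_fraction)


if __name__ == "__main__":
    # Cyberpunk 2077 example from the text: 62 frames sent to the monitor.
    print(base_input_rate(62))  # 31.0
```

The displayed number goes up, but the responsiveness tracks the value this function returns, which is why a Frame Generation result near 60 fps can still feel like a 30 fps game.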

Looking at other GPUs, the competing AMD cards as usual tend to fall well behind in ray tracing performance. Where the RX 6700 XT is 15% faster in rasterization performance, with DXR it's 16% slower overall, and the more demanding the ray tracing rendering, the further behind it falls — so it's still tied in Metro Exodus Enhanced, holds a slight lead in Spider-Man: Miles Morales, but trails by 28% in Cyberpunk 2077 and by 38% in Minecraft. And that's without factoring DLSS.

Nvidia RTX 4060: 4K Gaming Performance

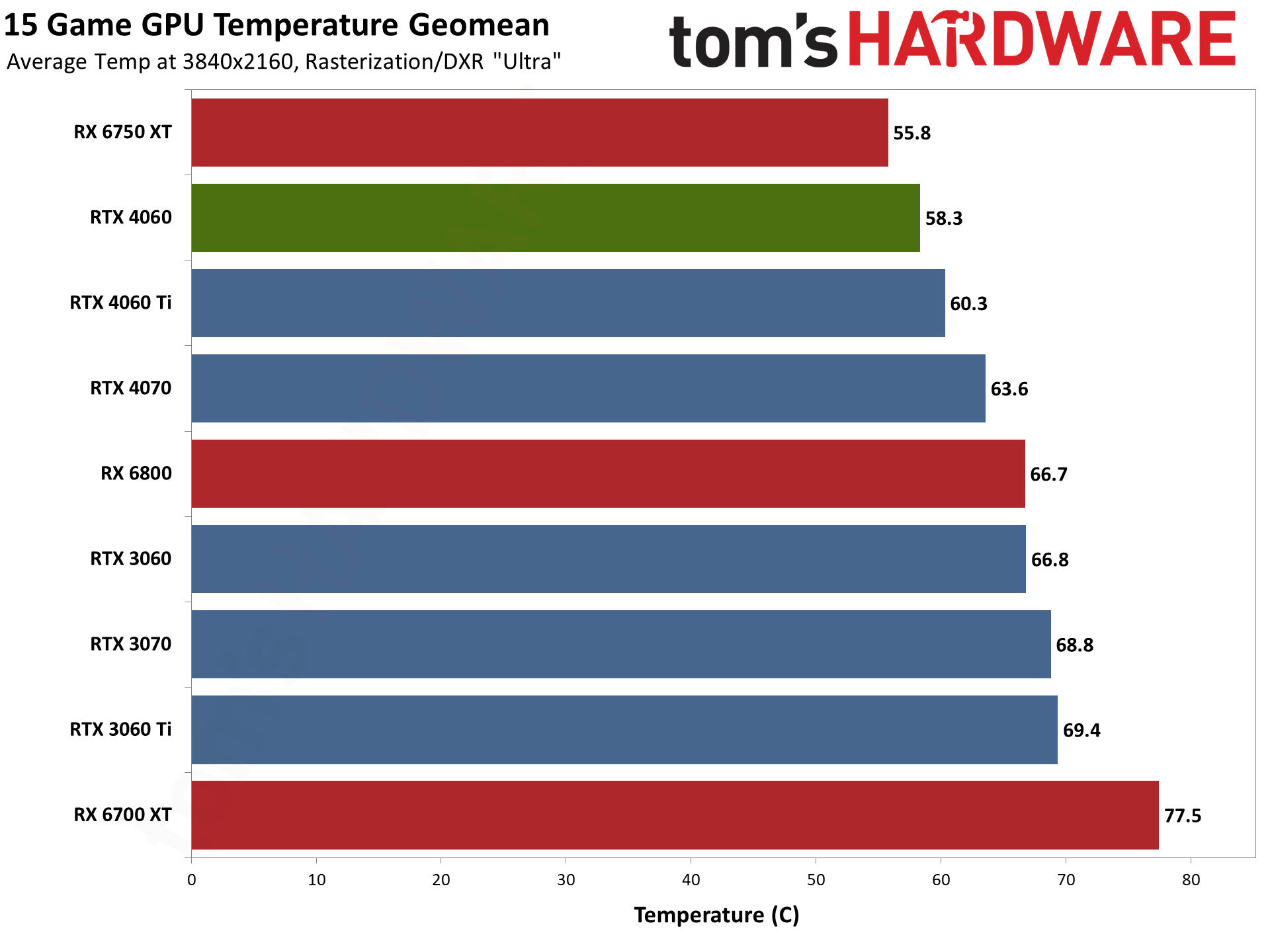

As you can already guess, the RTX 4060 really struggles at 4K in our relatively demanding test suite. Ray tracing at 4K in particular doesn't come anywhere near playable levels, and you'd need DLSS Performance mode upscaling and potentially Frame Generation to hit reasonable framerates.

The 8GB VRAM also definitely affects performance at 4K, more than at lower resolutions. Again, see our article on why 4K requires so much VRAM for further details on the situation. But we like to be complete in our testing, so we're including these results for the curious. We won't bother with additional commentary on the charts.

Nvidia RTX 4060 AI Performance

GPUs are also used with professional applications, AI training and inferencing, and more. Along with our usual professional tests, we've added Stable Diffusion benchmarks on the various GPUs. AI is a fast-moving sector, and it seems like 95% or more of the publicly available projects are designed for Nvidia GPUs. Those Tensor cores aren't just for DLSS, in other words. Let's start with our AI testing and then hit the professional apps.

We're using Automatic1111's Stable Diffusion version for the Nvidia cards, while for AMD we're using Nod.ai's Shark variant — we used the automatic build version 20230521.737 for these results, which is now a month out of date, launched with "--iree_vulkan_target_triple=rdna3-7900-windows" as recommended by AMD, or "rdna2-unknown-windows" for the RX 6000-series (that's the default).

For Intel GPUs, we used a tweaked version of Stable Diffusion OpenVINO. That hasn't been updated in a few months, and we couldn't get 768x768 image generation working, so we'll revisit the Arc results at some point in the future. (When we do, we'll update our main Stable Diffusion benchmarks page... which is also currently outdated.)

Tensor and matrix cores in modern GPUs were created specifically for this type of workload, so it's no surprise that Nvidia and Intel GPUs do quite a bit better than their AMD counterparts. The RTX 4060 can't quite match the RX 7900 XT and XTX at 512x512 image generation (those aren't shown here), but it's quite a bit faster than any other current AMD GPUs, and the (untuned on AMD) 768x768 results favor Nvidia even more.

There are some other interesting things to note. Raw memory bandwidth appears to be a bigger factor here as well, and the 4060 only leads the previous generation 3060 by 6% at 512x512, and 9% for 768x768 images. Along with having less memory, the RTX 4060 clearly isn't going to be an AI powerhouse. It's okay for basic stuff, but that's about it.

Intel's Arc GPUs seem to do decently as well, and perhaps a different library could further narrow the gap. The A770 16GB and A750 both deliver higher output rates than the RX 7600 and RX 6700 XT, for example.

There are other AI workloads, particularly those that use LLMs (Large Language Models), where VRAM capacity can be more important than computational performance. Running a local chatbot, as an example, required 10GB or even 24GB of VRAM for some of the models, and there are even larger GPT-3 based models for Nvidia's A100/H100 data center GPUs and DGX servers.

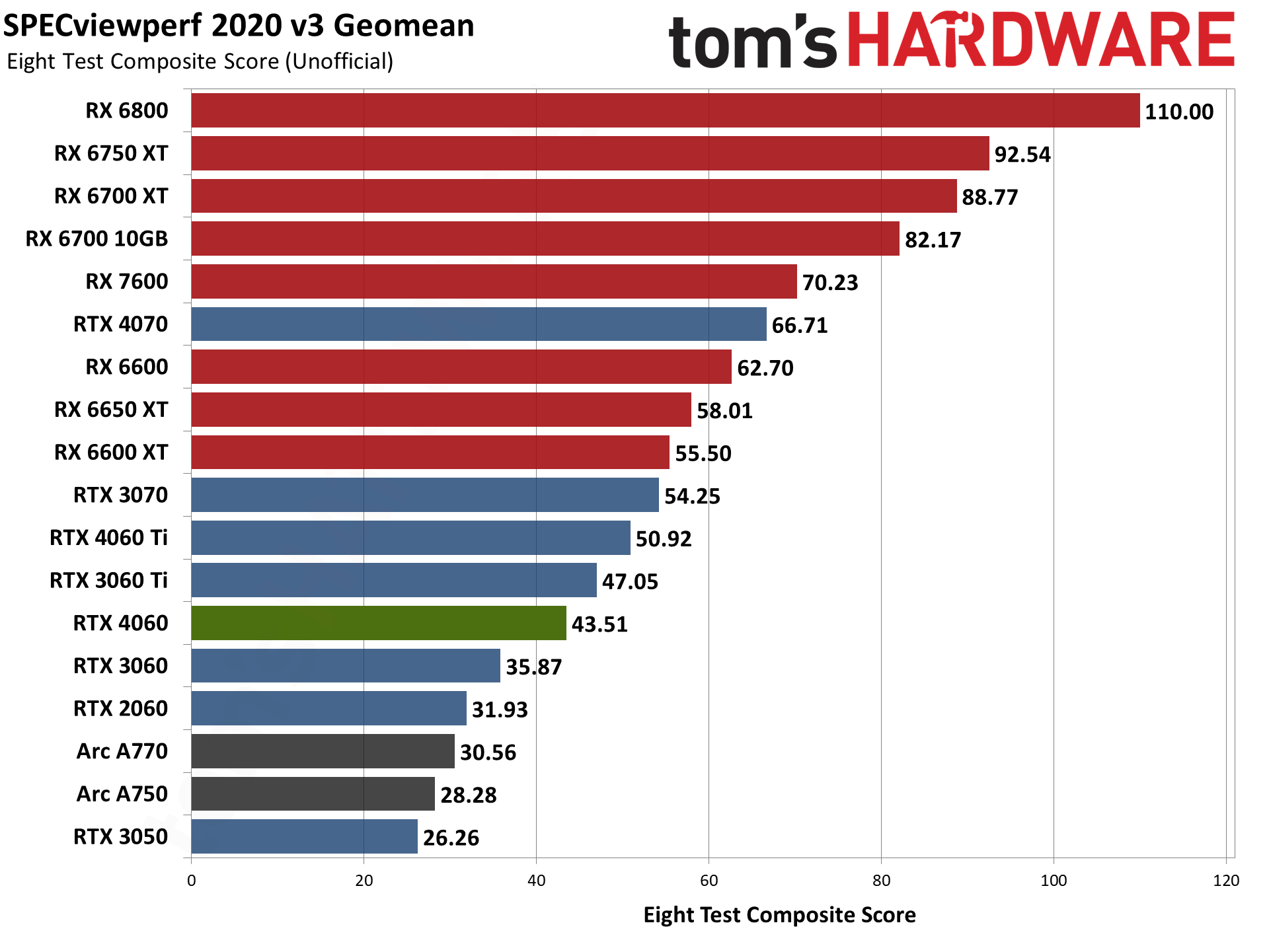

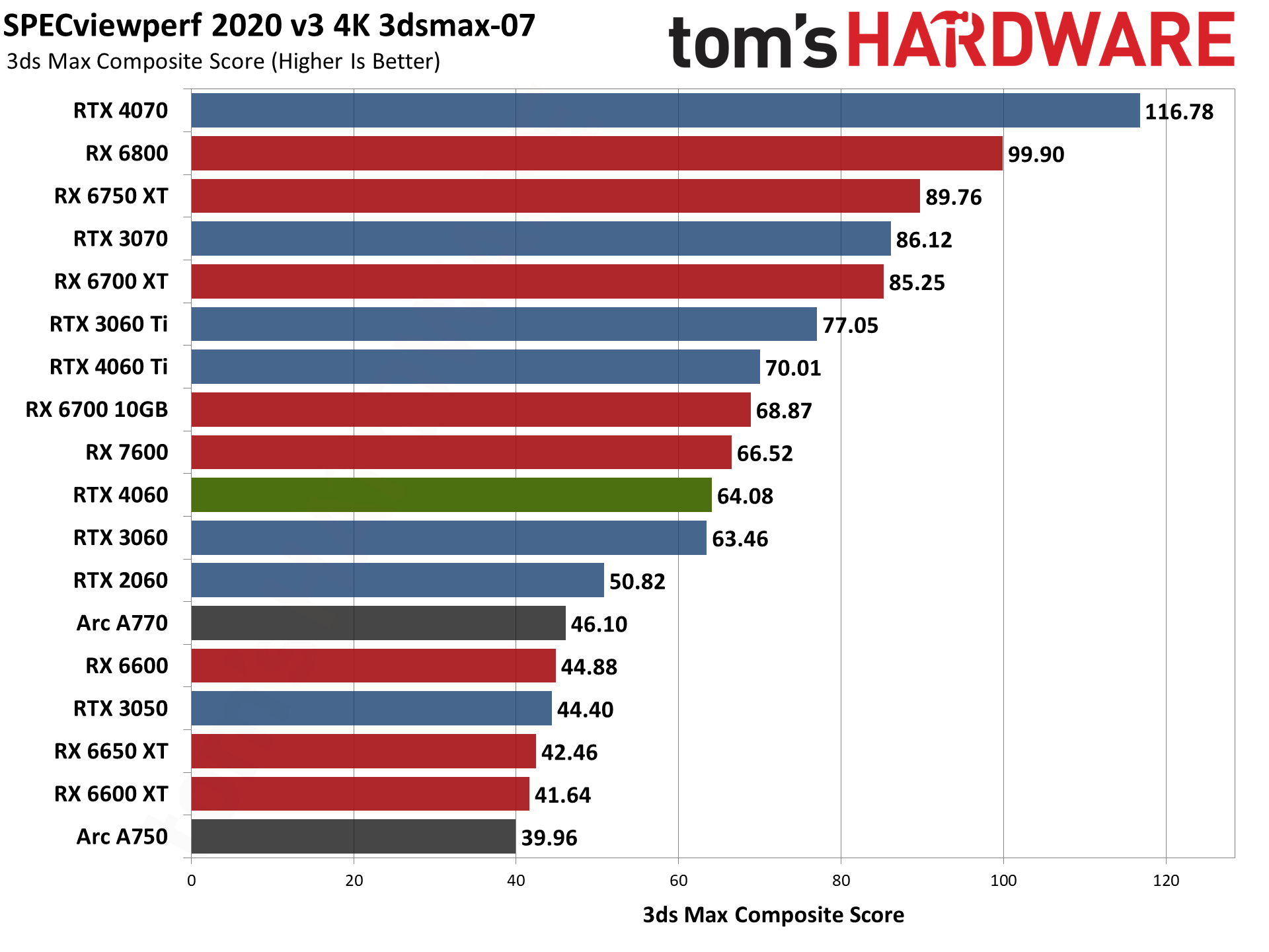

Nvidia RTX 4060 Professional Workloads

Most true professional graphics cards cost a lot more than the RTX 4060. They come with drivers that are better tuned for professional applications, at least in some cases, as well as improved support for those applications. Still, you can get by with a consumer GPU like the RTX 4060 in a pinch. Also note that some of our professional tests only run on Nvidia GPUs, so those charts won't have the AMD or Intel cards.

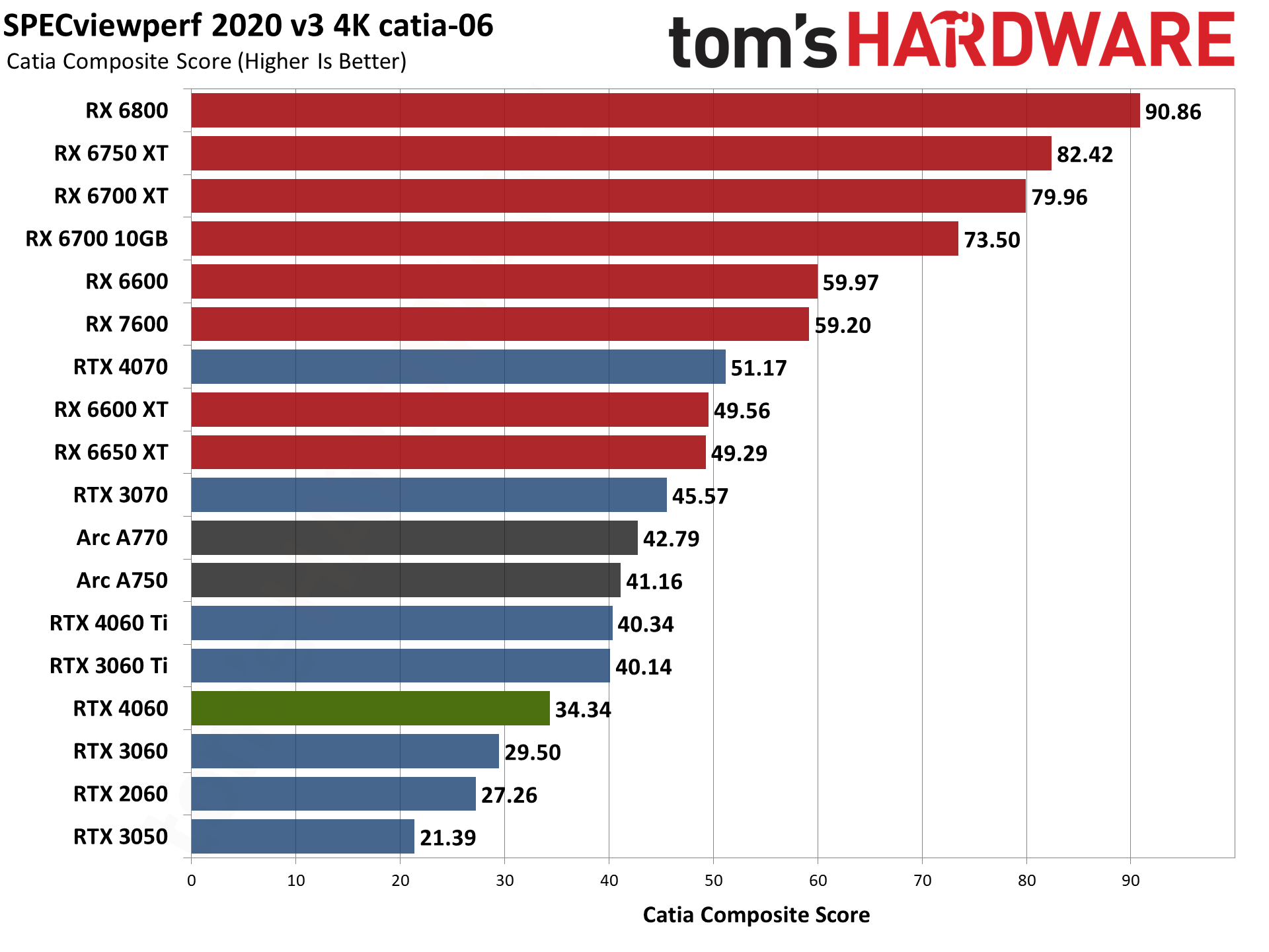

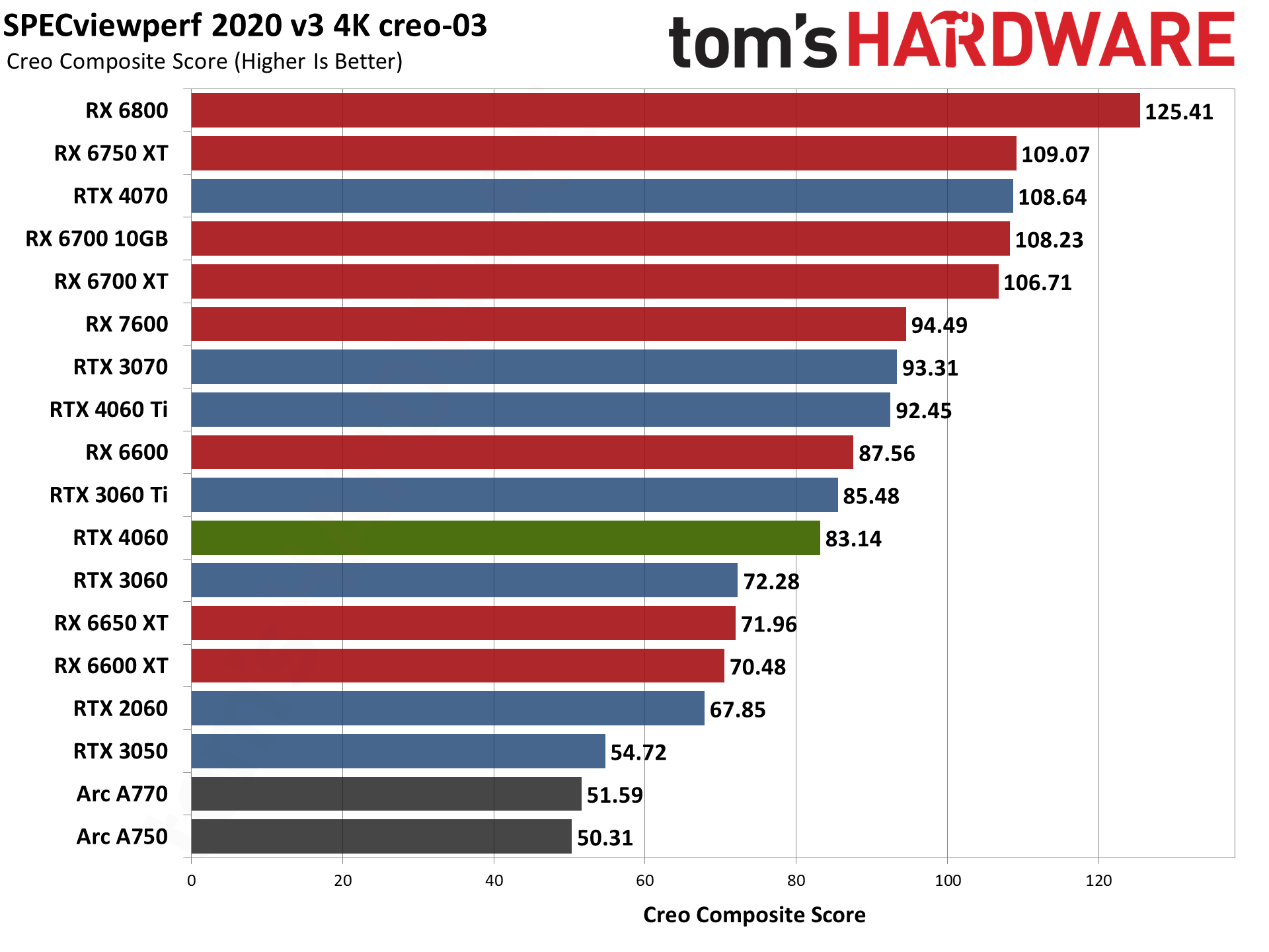

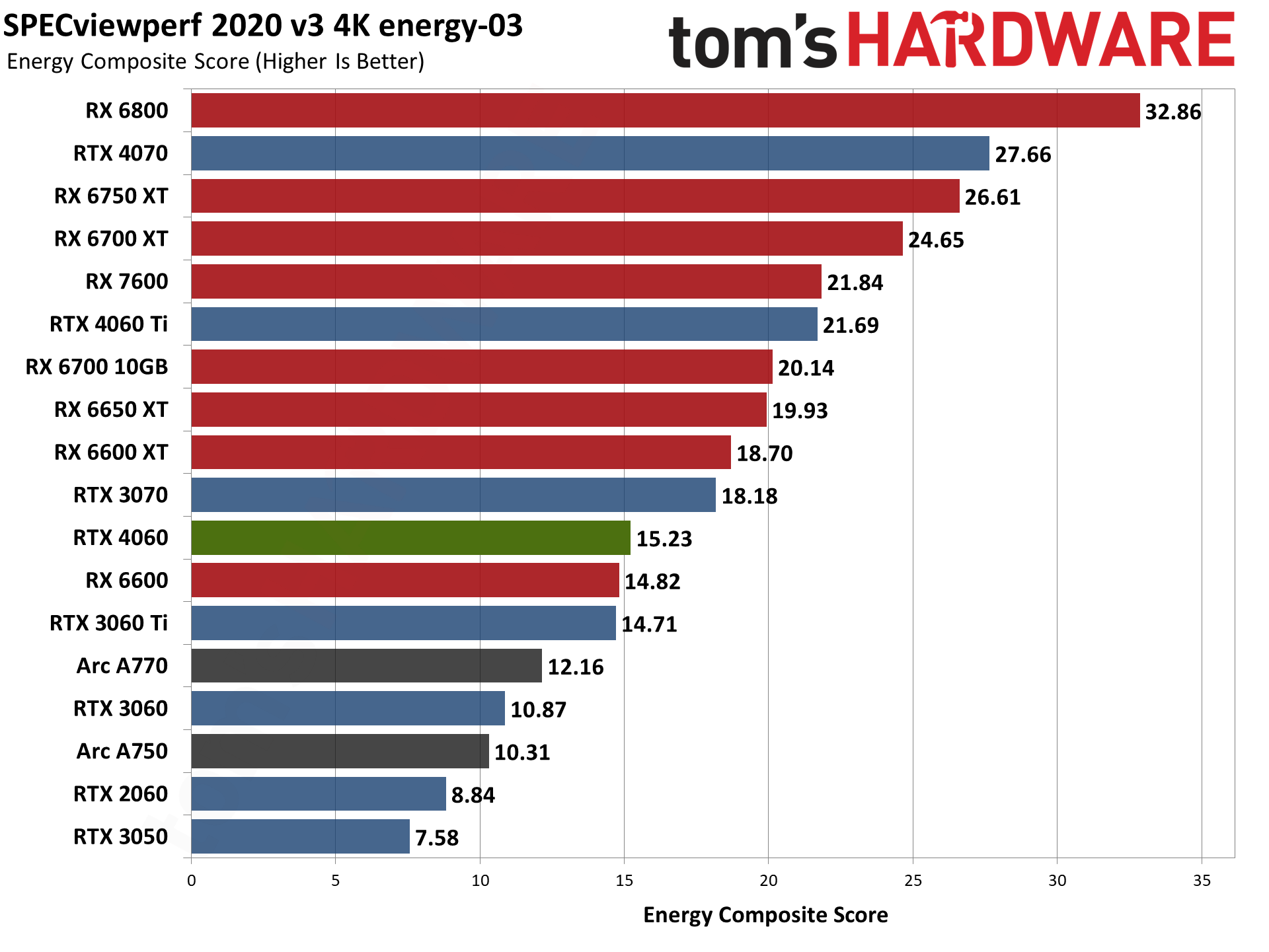

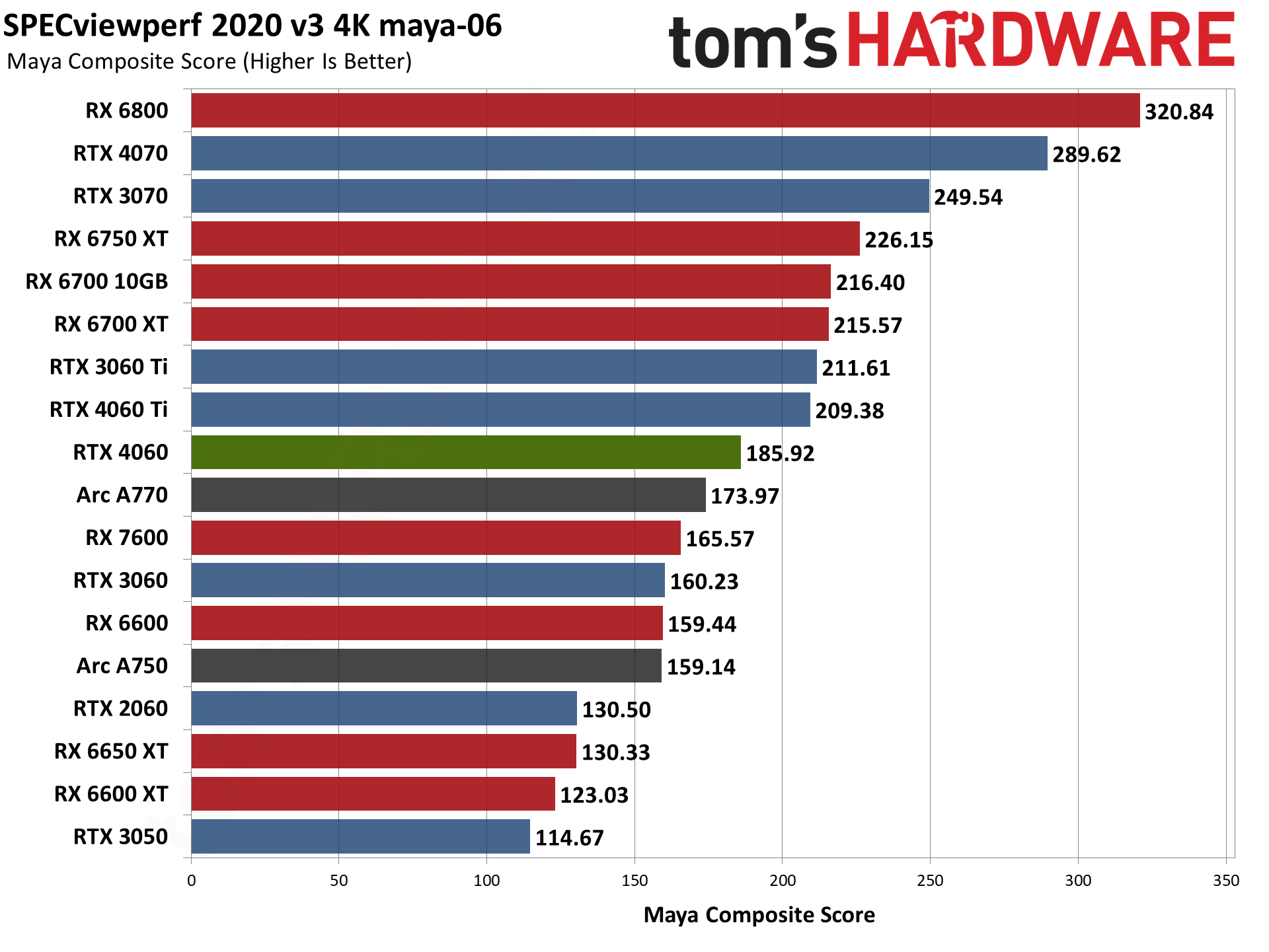

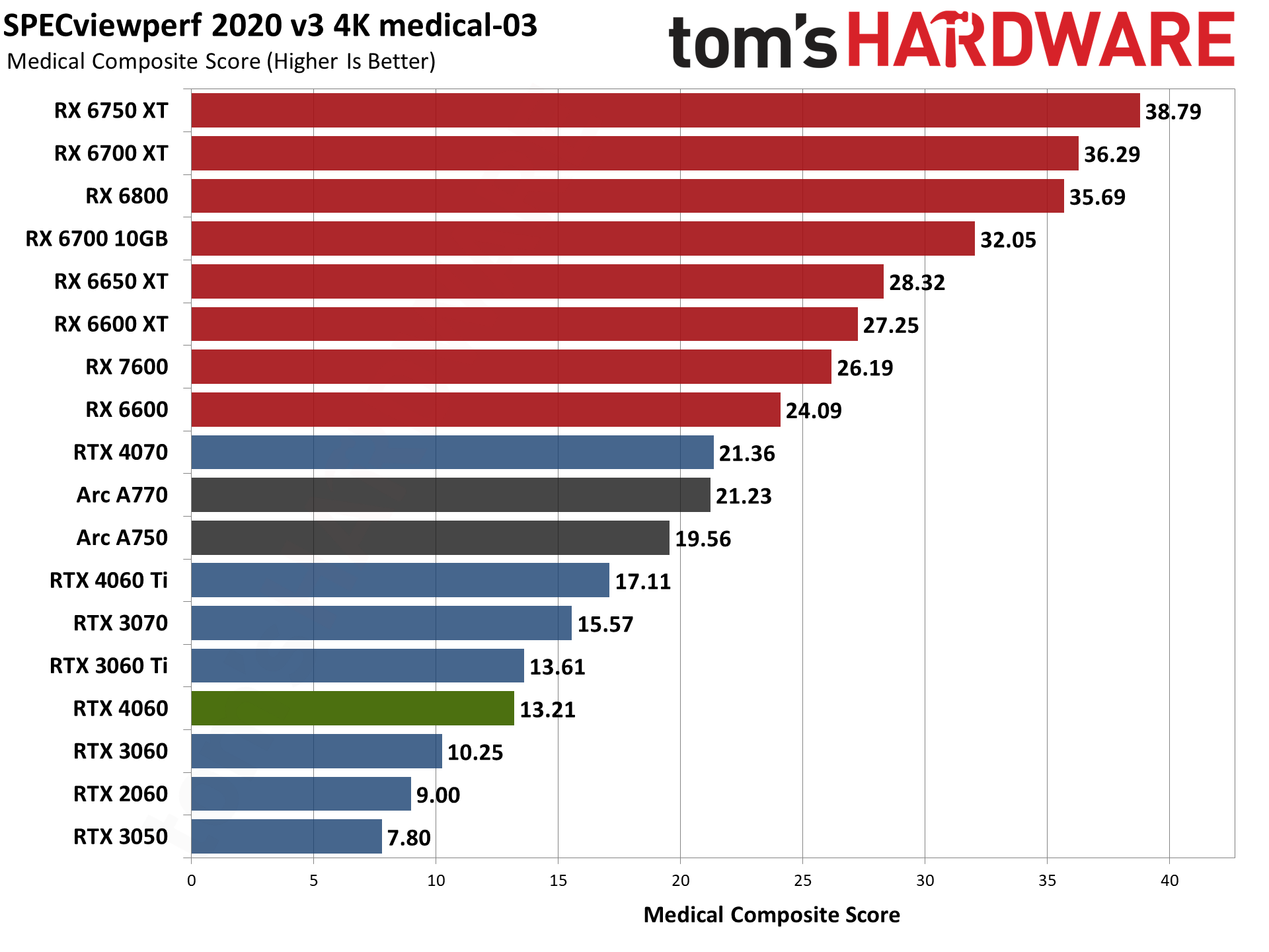

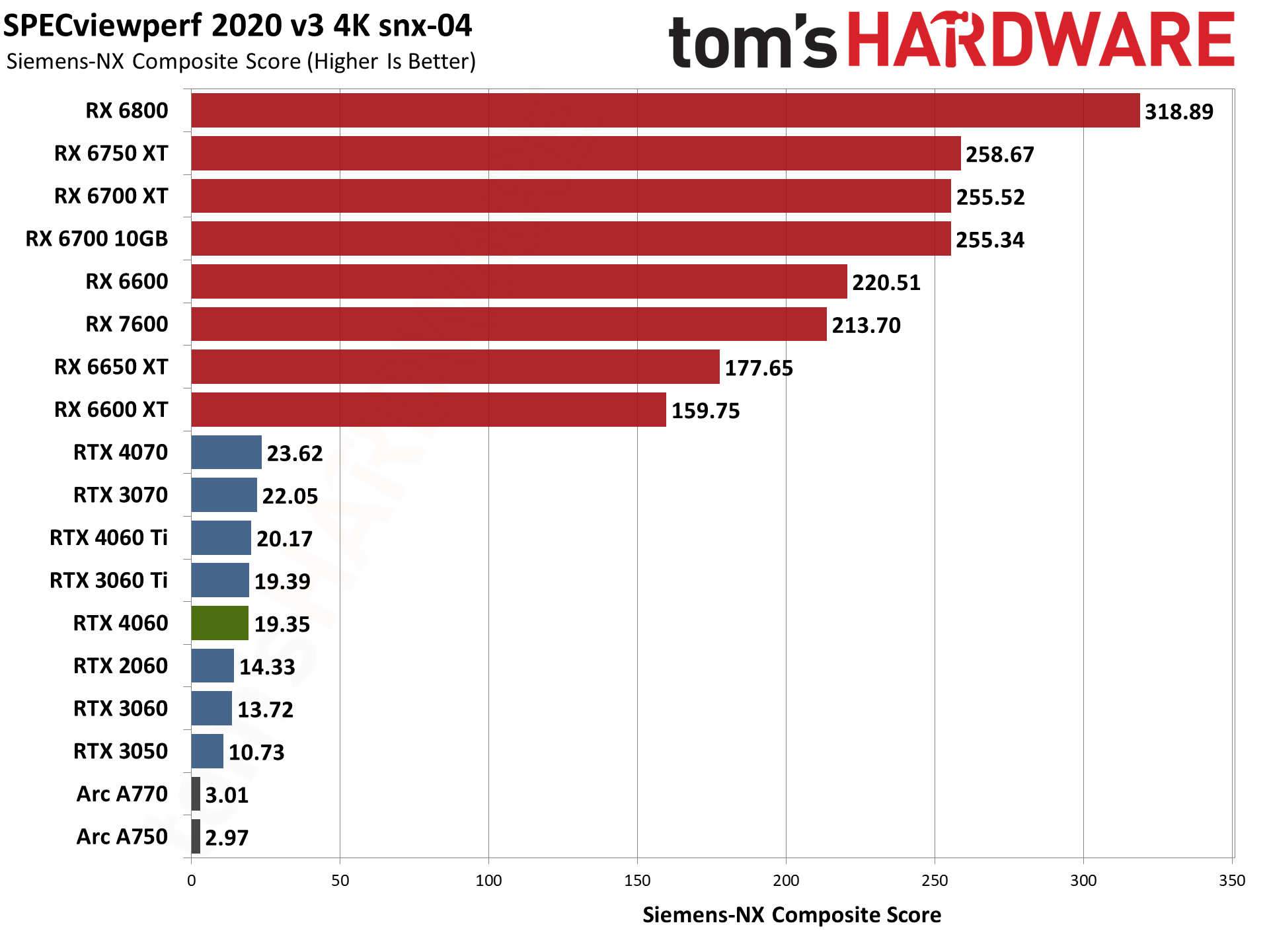

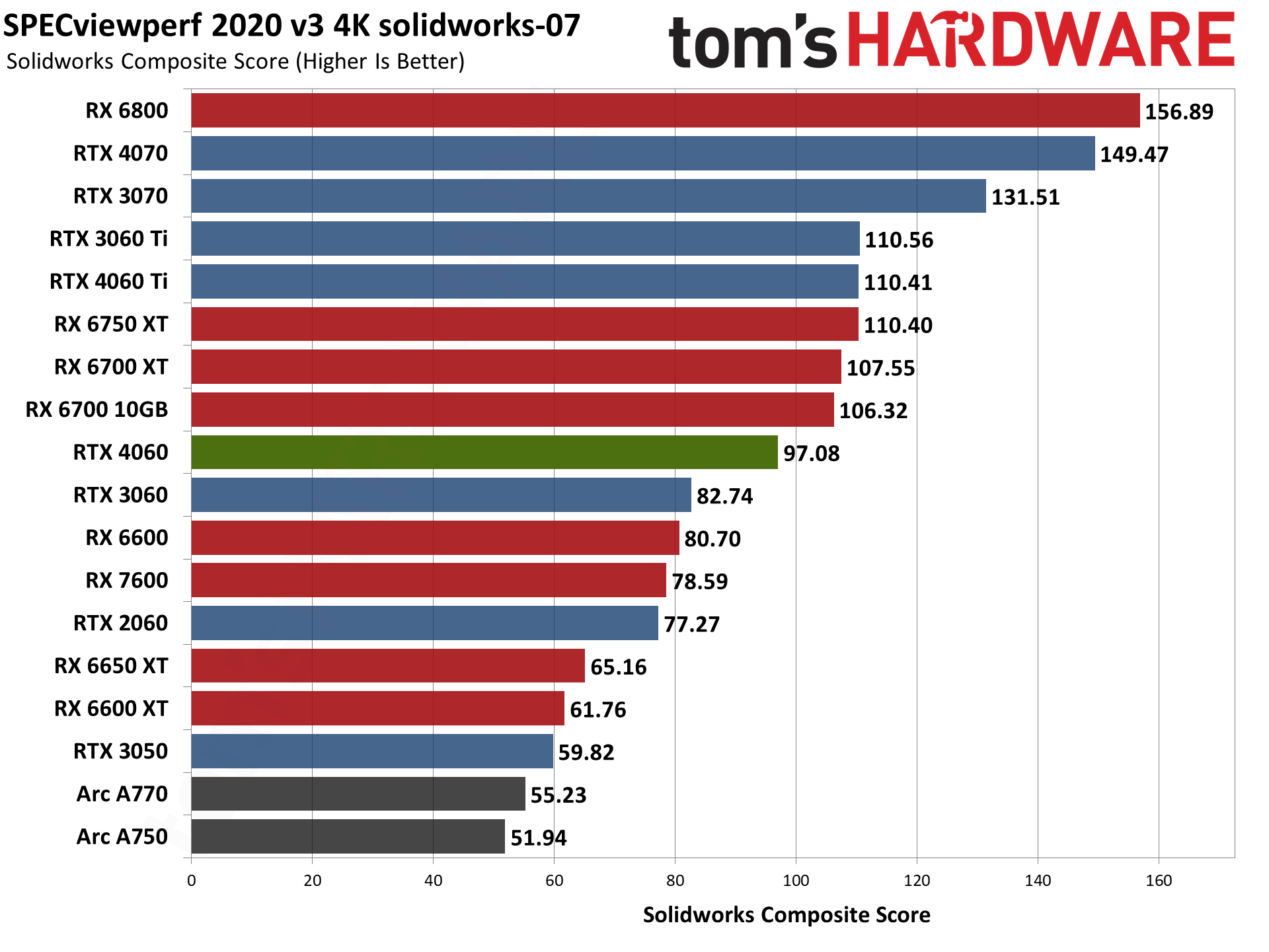

SPECviewperf 2020 consists of eight different benchmarks, and we use the geometric mean from those to generate an aggregate "overall" score. Note that this is not an official score, but it gives equal weight to the individual tests and provides a nice high-level overview of performance. Few professionals use all of these programs, however, so it's typically more important to look at the results for the application(s) you plan to use.
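For the curious, the aggregate can be sketched in a few lines of Python. The scores below are made-up placeholders rather than measured results, and `overall_score` is simply a name we picked for the illustration. It uses the standard library's `statistics.geometric_mean`.

```python
from statistics import geometric_mean


def overall_score(scores: list[float]) -> float:
    """Geometric mean of per-test scores; every test gets equal weight."""
    return geometric_mean(scores)


if __name__ == "__main__":
    # Hypothetical scores for eight tests (NOT real SPECviewperf data).
    baseline = [50.0] * 8
    # Same card, but one test is 10x faster (loosely like an outlier result).
    outlier = [50.0] * 7 + [500.0]

    print(f"baseline: {overall_score(baseline):.1f}")
    print(f"with one 10x outlier: {overall_score(outlier):.1f}")
```

Compared with an arithmetic average, a geometric mean keeps one very large result from dominating the aggregate, though a 10x outlier in a single test still moves the overall score noticeably (by a factor of 10 to the power 1/8, or about 1.33).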

Nvidia's RTX 4060 as expected takes up its now traditional spot behind the RTX 3060 Ti but ahead of the RTX 3060. It manages to (barely) beat the 3060 Ti in one test, energy-03, but overall it's 21% faster than the 3060 and 8% slower than the 3060 Ti.

AMD's RX 7600 — and AMD GPUs in general — do much better in SPECviewperf than the Nvidia consumer GPUs, mostly thanks to the snx-04 test where they're about an order of magnitude faster than their Nvidia counterparts. Intel's Arc GPUs incidentally do okay in a couple of the tests, but generally rank near the bottom of the charts.

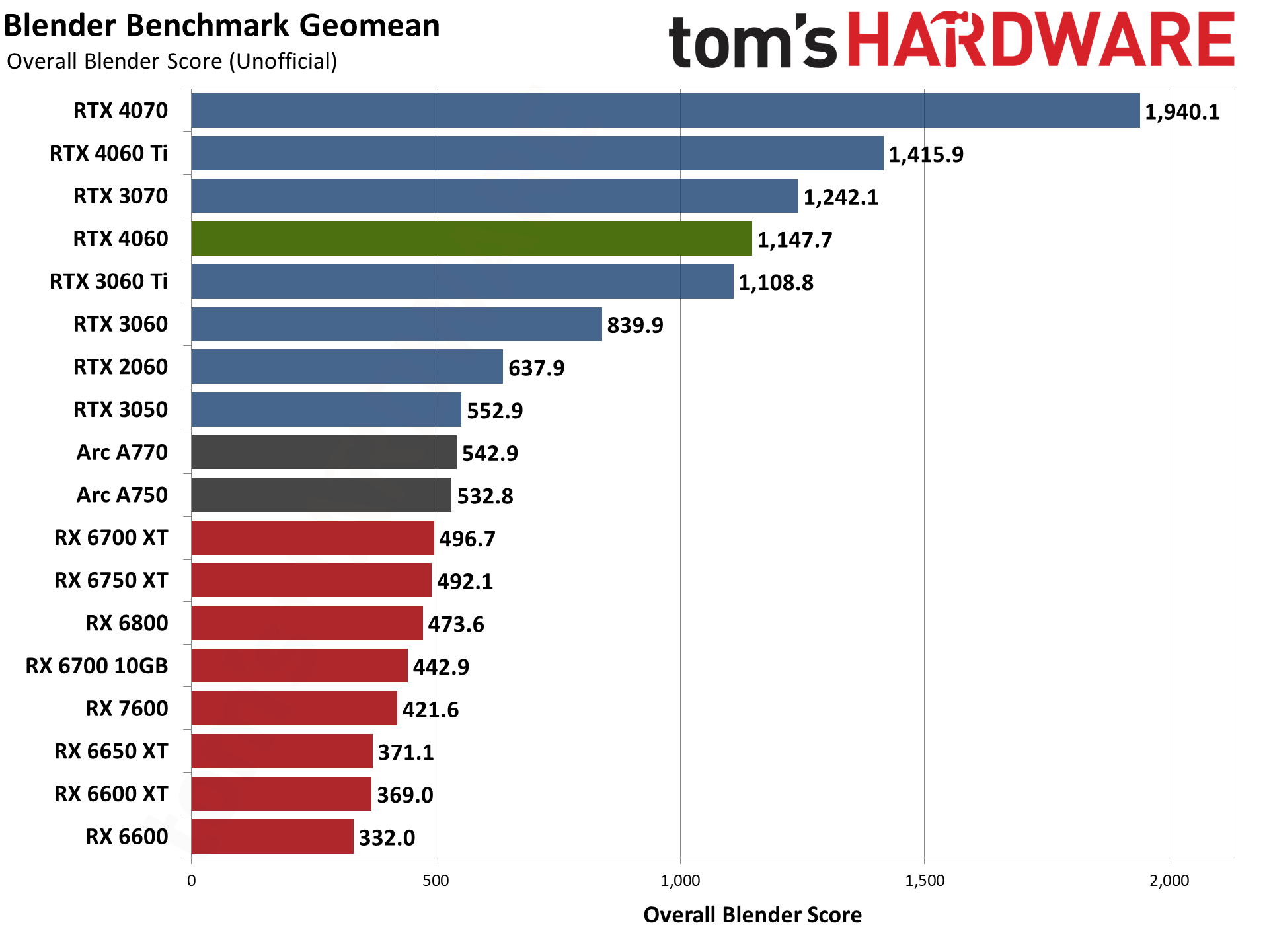

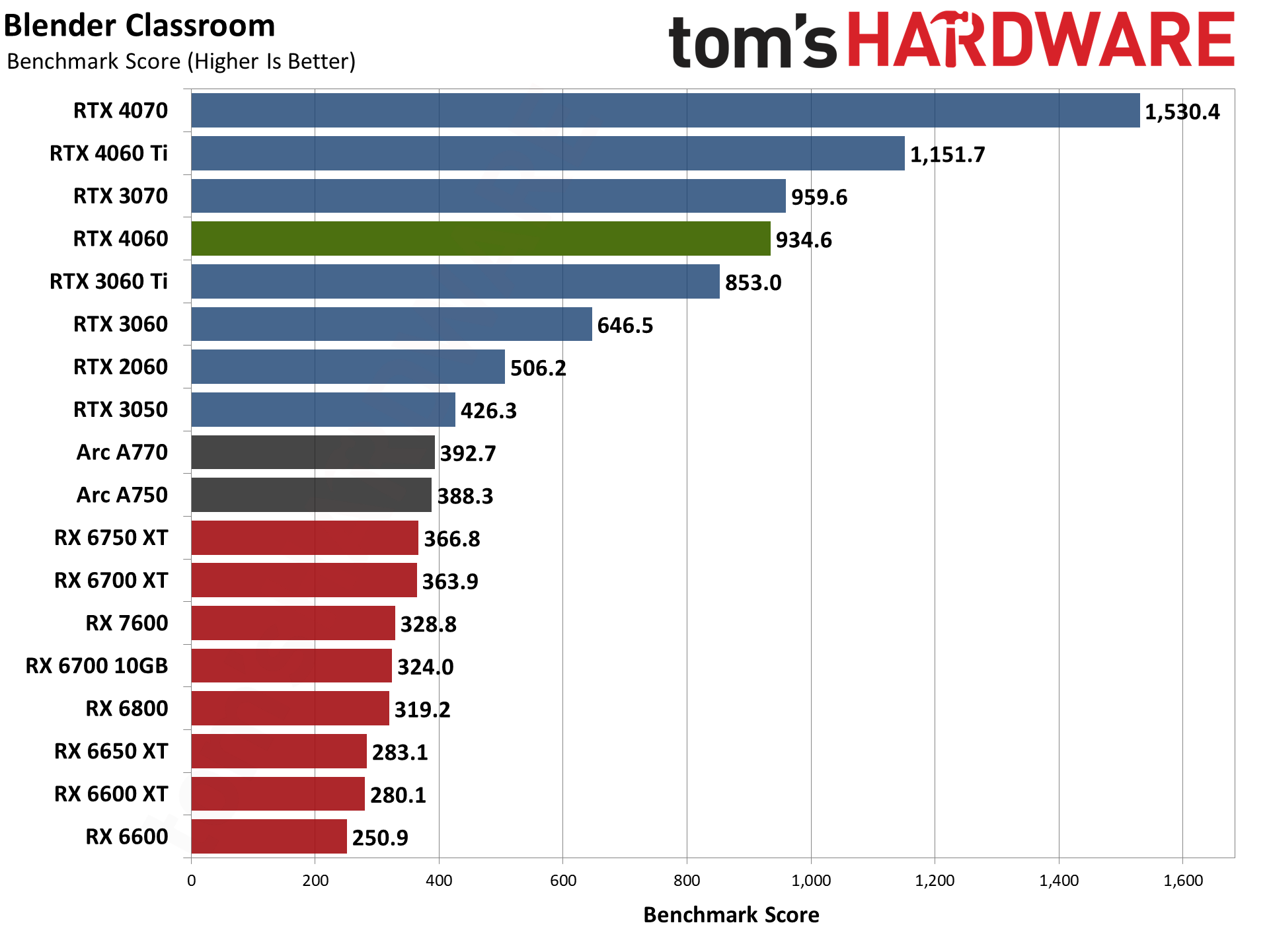

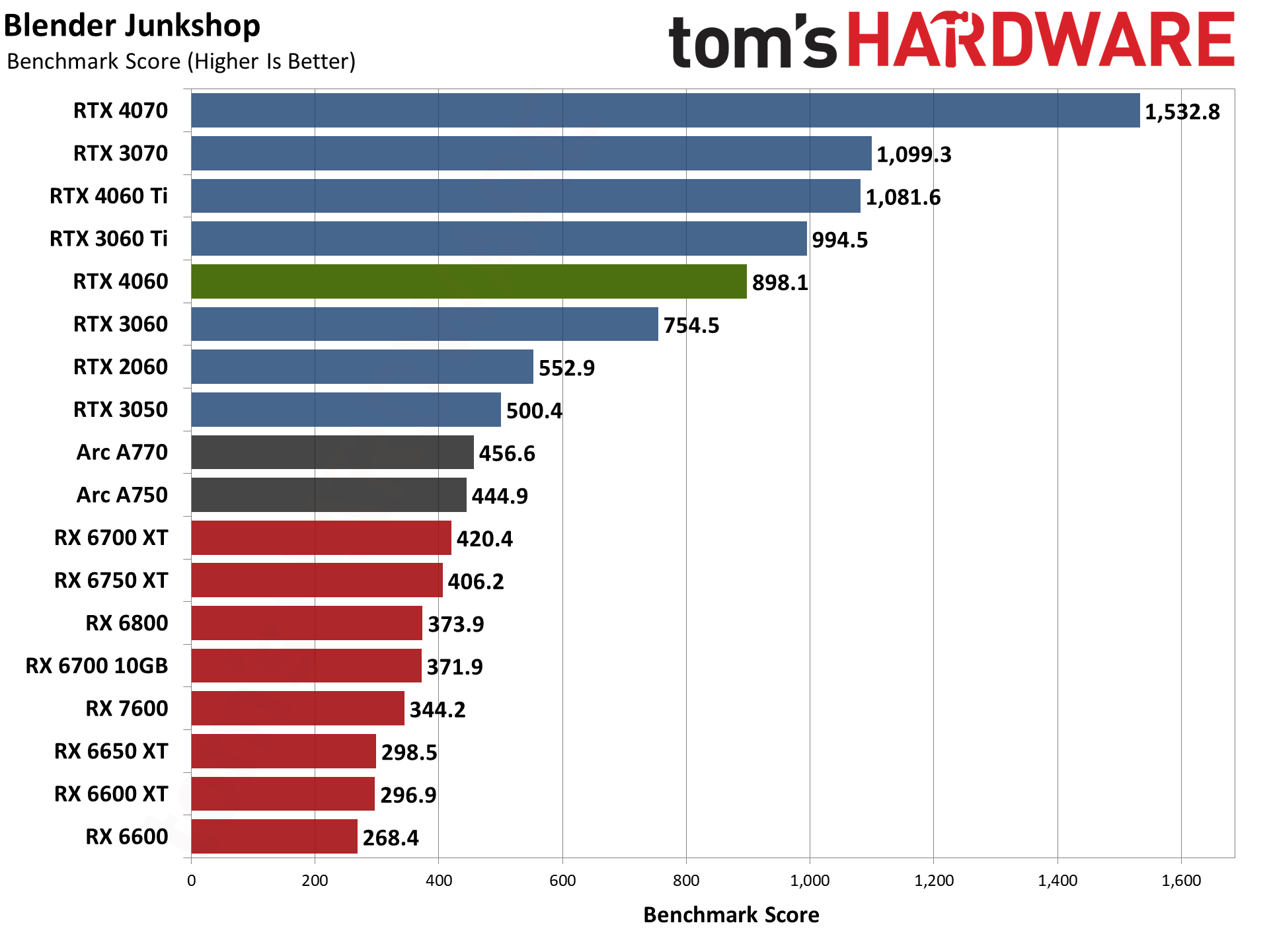

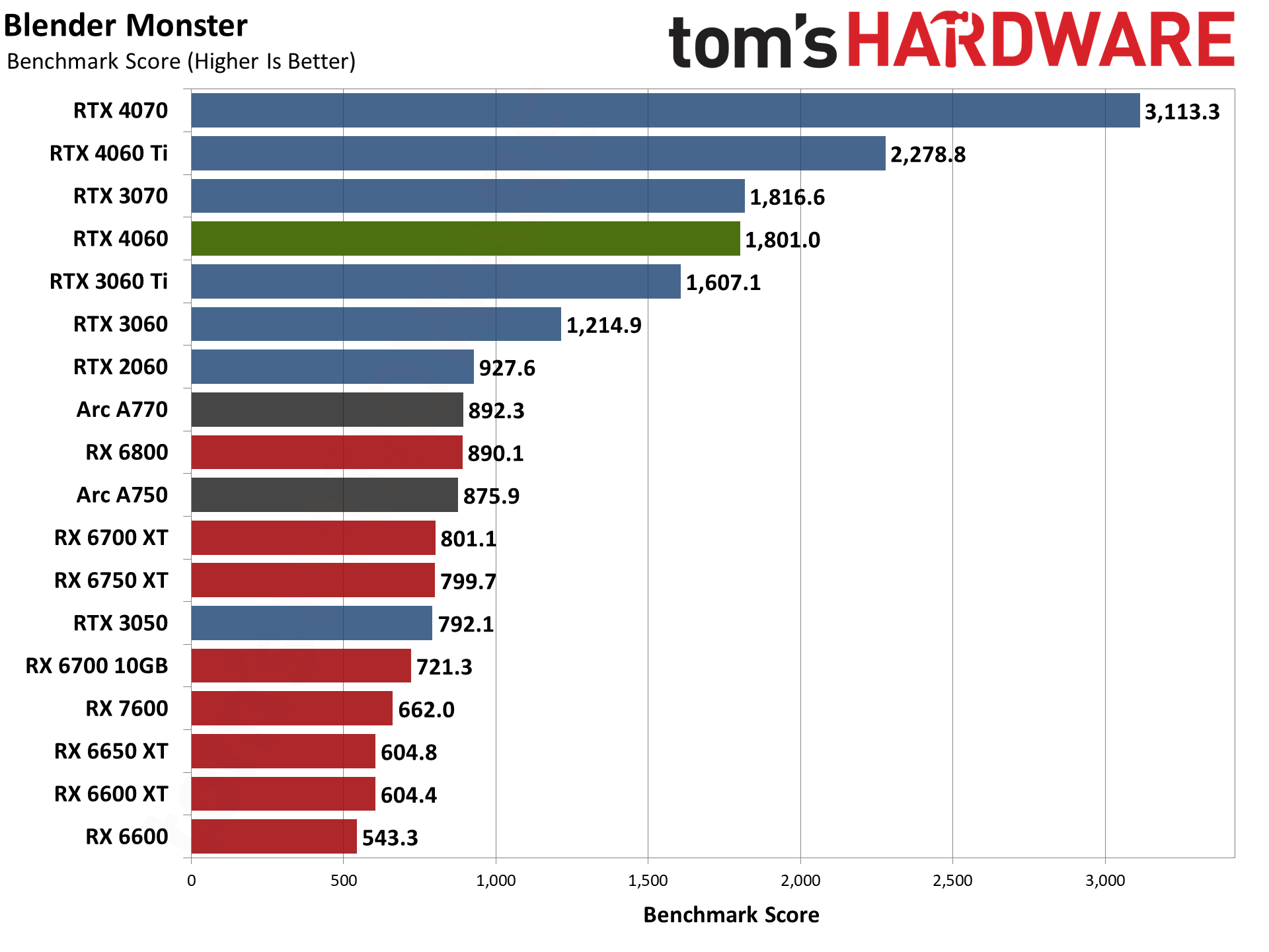

For 3D rendering, Blender is a popular open-source rendering application, and we're using the latest Blender Benchmark, which uses Blender 3.50 and three tests. Blender 3.50 includes the Cycles X engine that leverages ray tracing hardware on AMD, Nvidia, and even Intel Arc GPUs. It does so via AMD's HIP interface (Heterogeneous-computing Interface for Portability), Nvidia's OptiX API, and Intel's OneAPI — which means Nvidia GPUs have some performance advantages due to the OptiX API.

This time, the RTX 4060 actually ranks ahead of the RTX 3060 Ti in overall performance. It falls behind in the Junkshop scene, but performs quite a bit better in the Monster and Classroom scenes. AMD GPUs aren't as fast in Blender, or really in any heavy ray tracing apps, and even the RTX 3050 manages to deliver better overall performance.

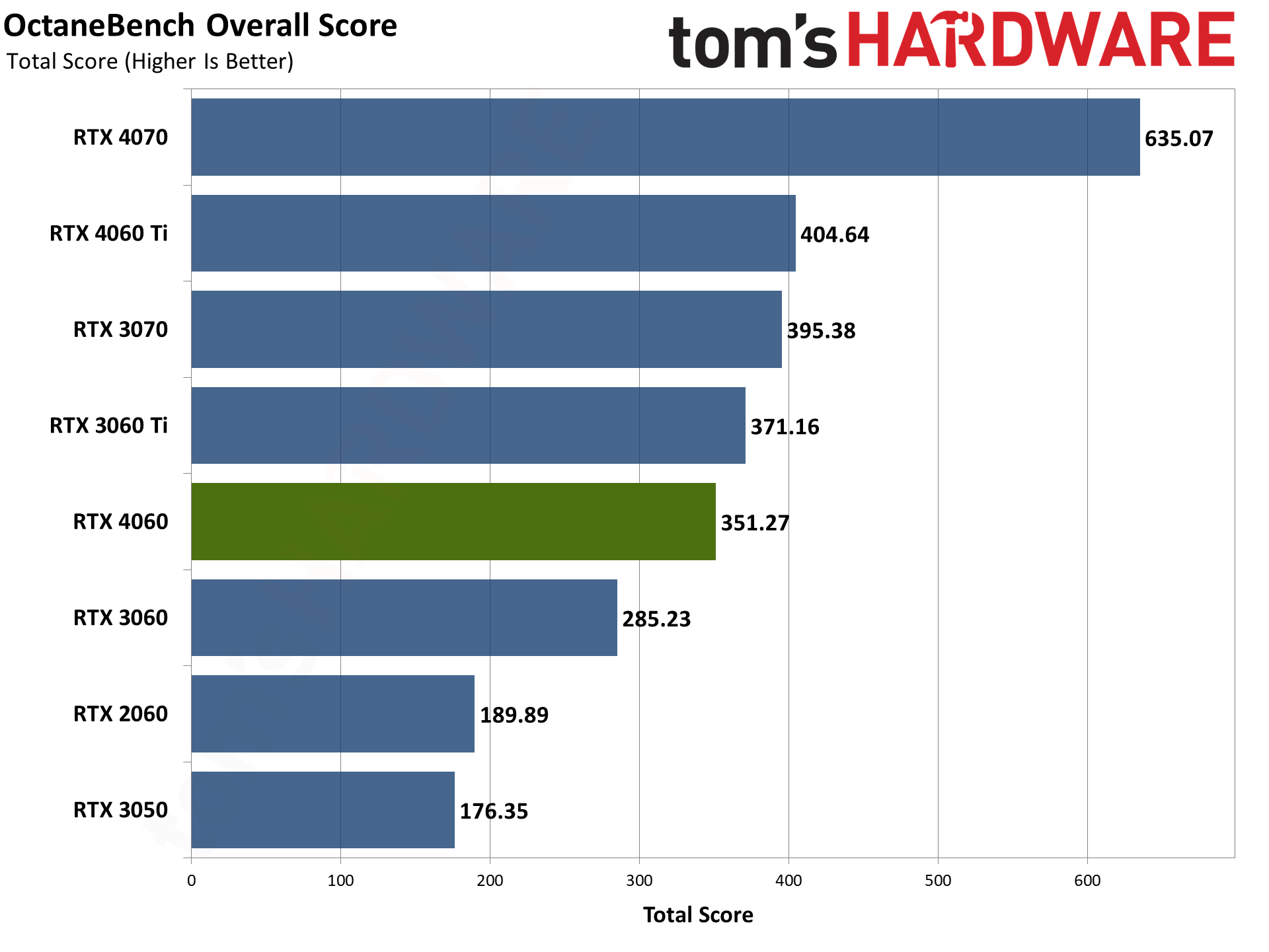

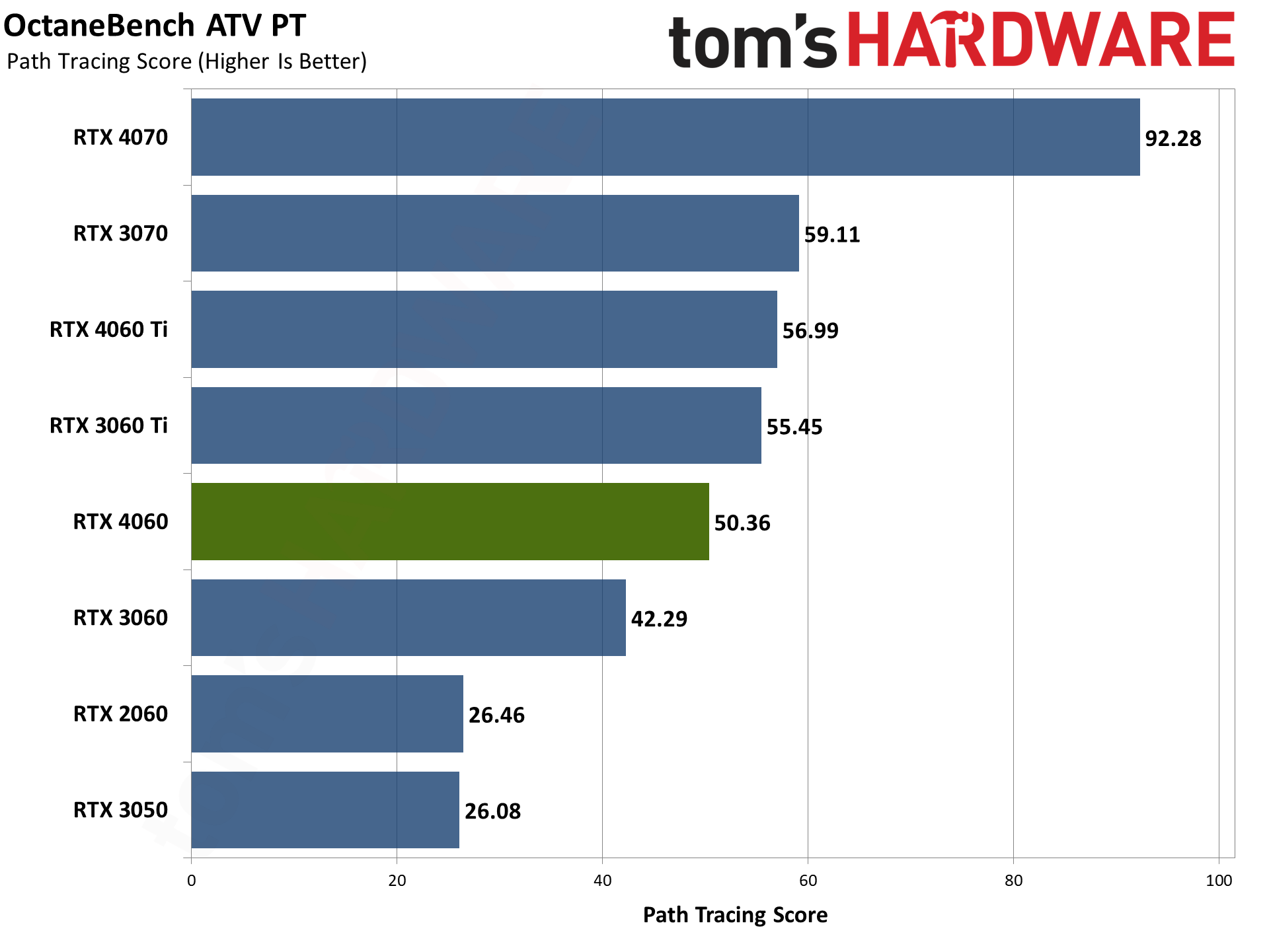

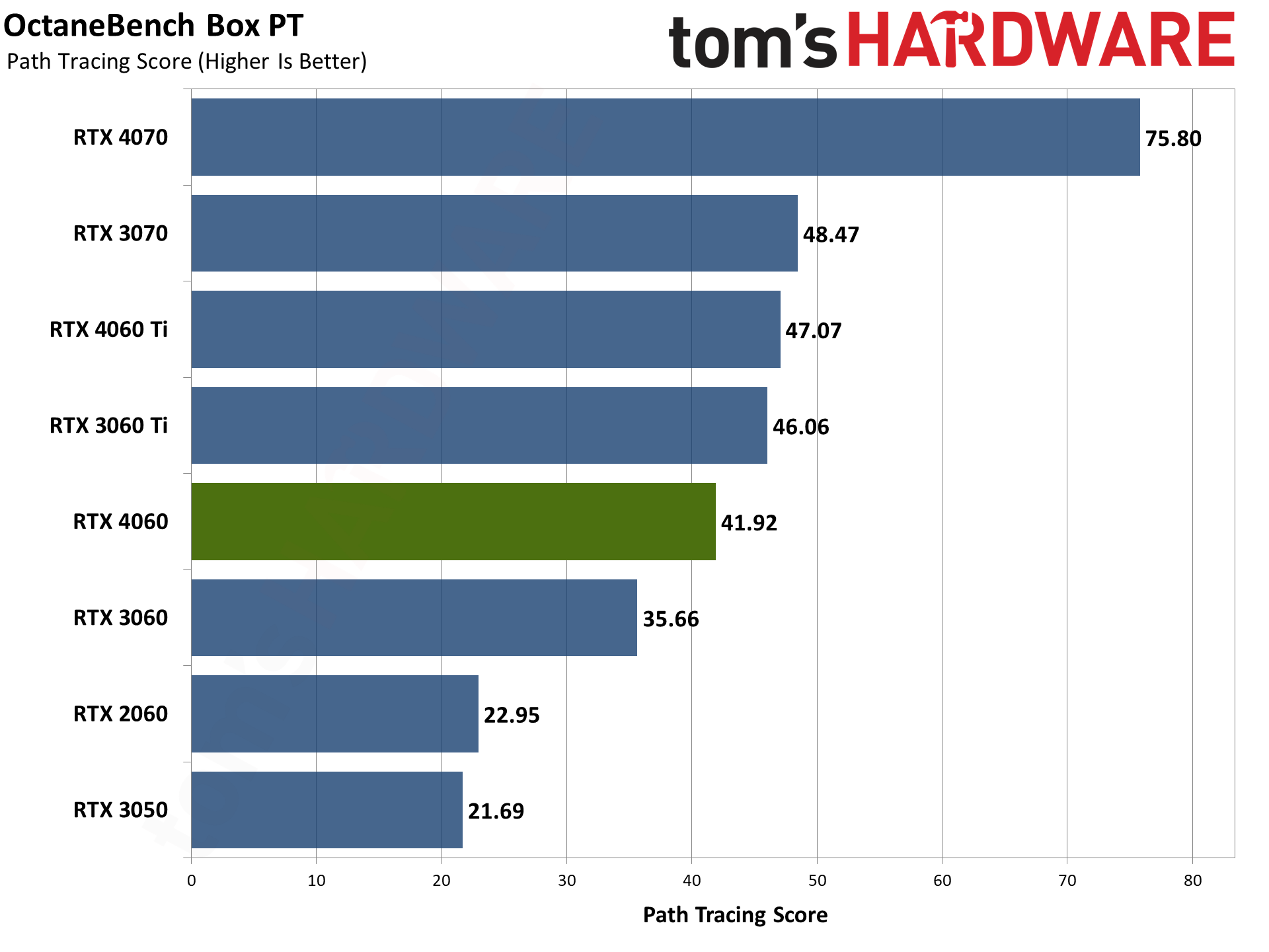

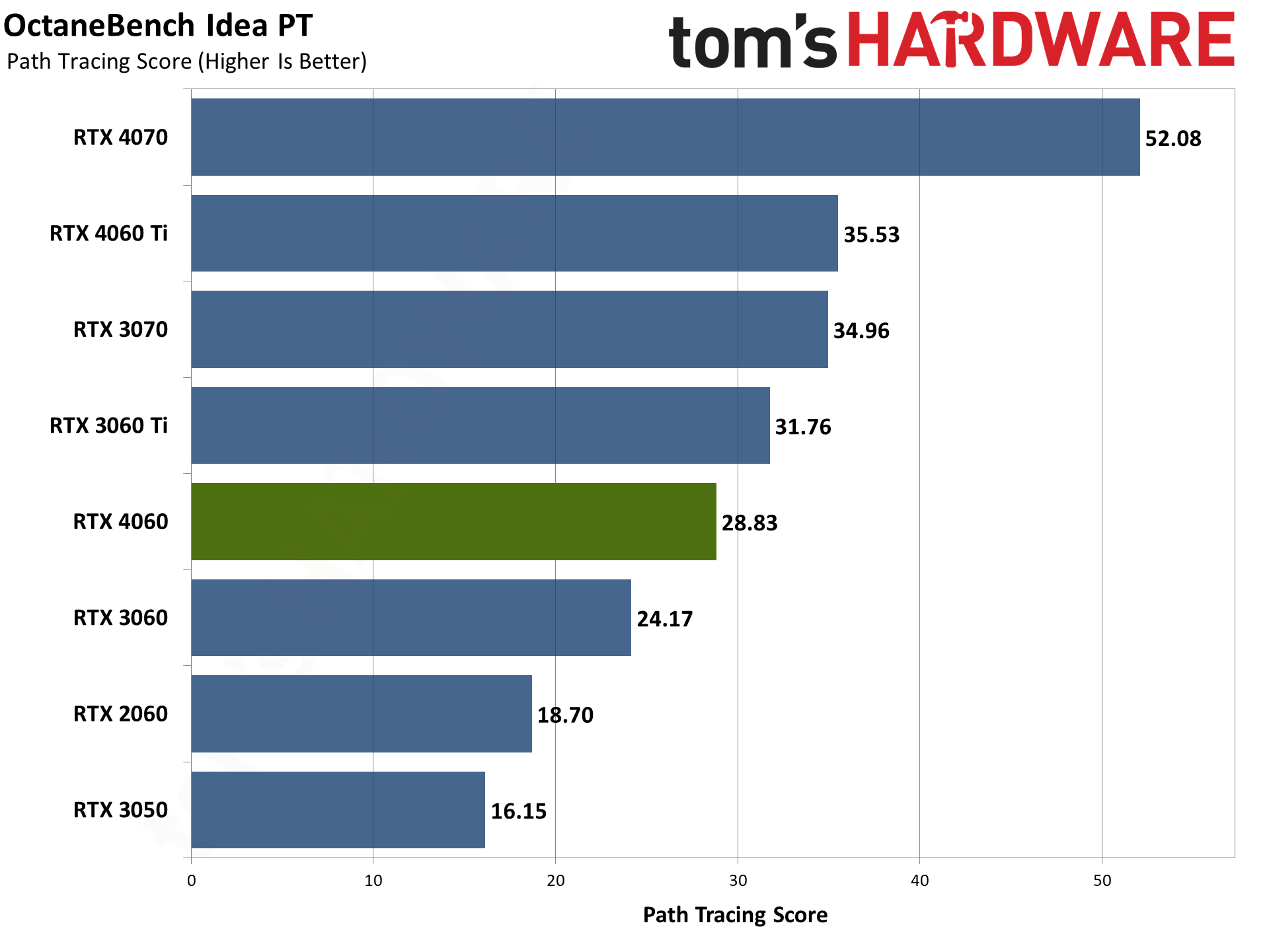

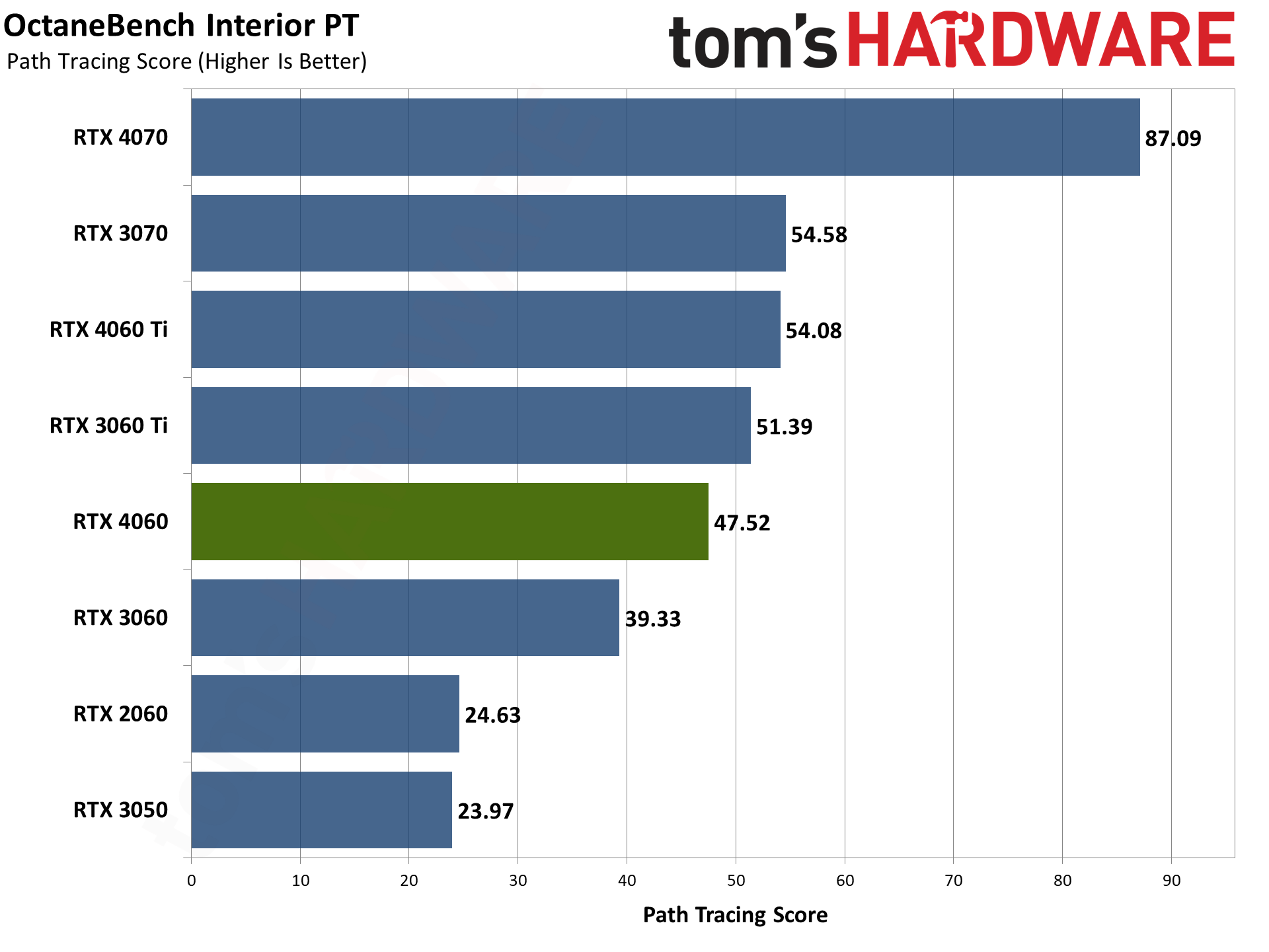

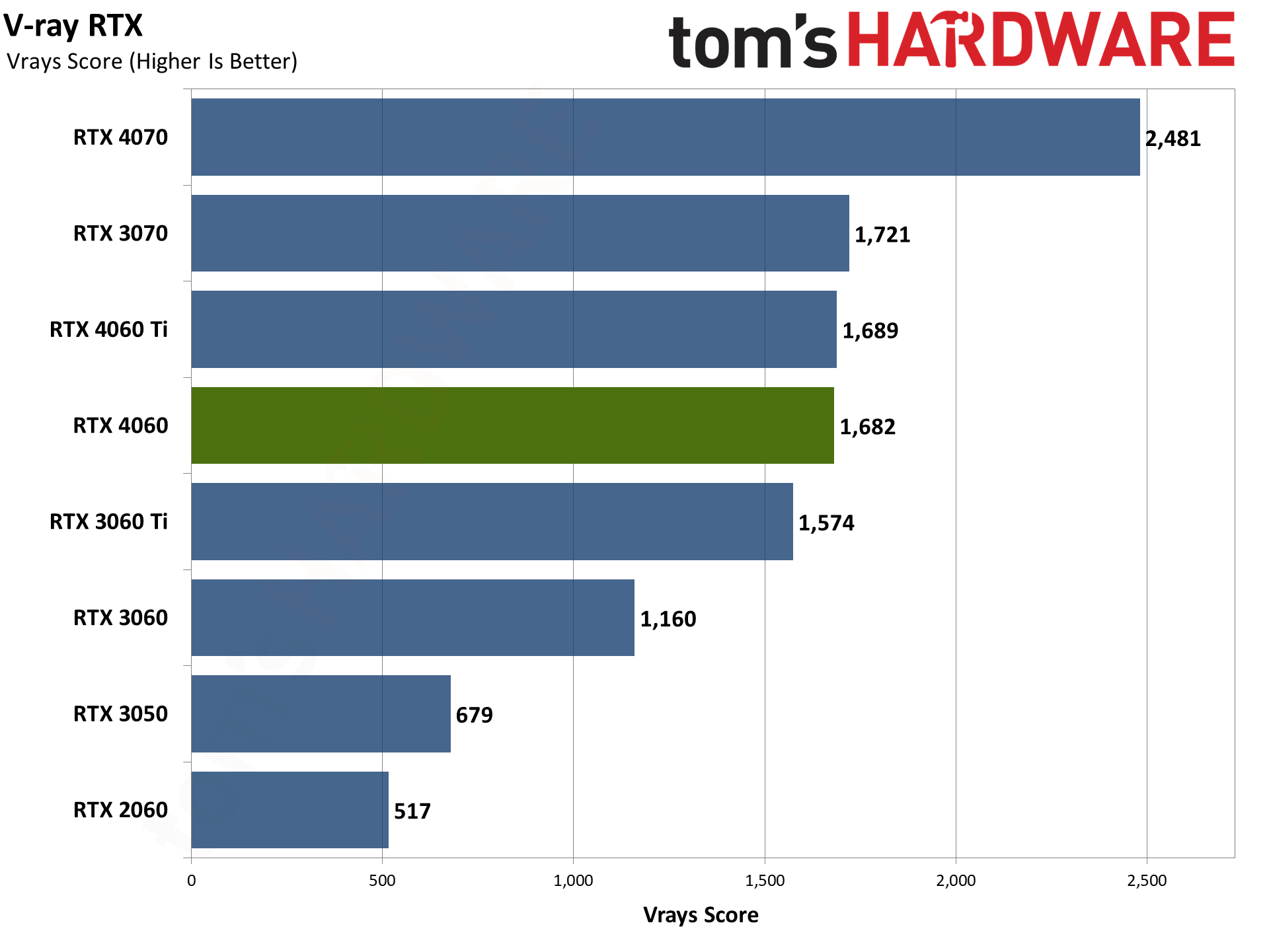

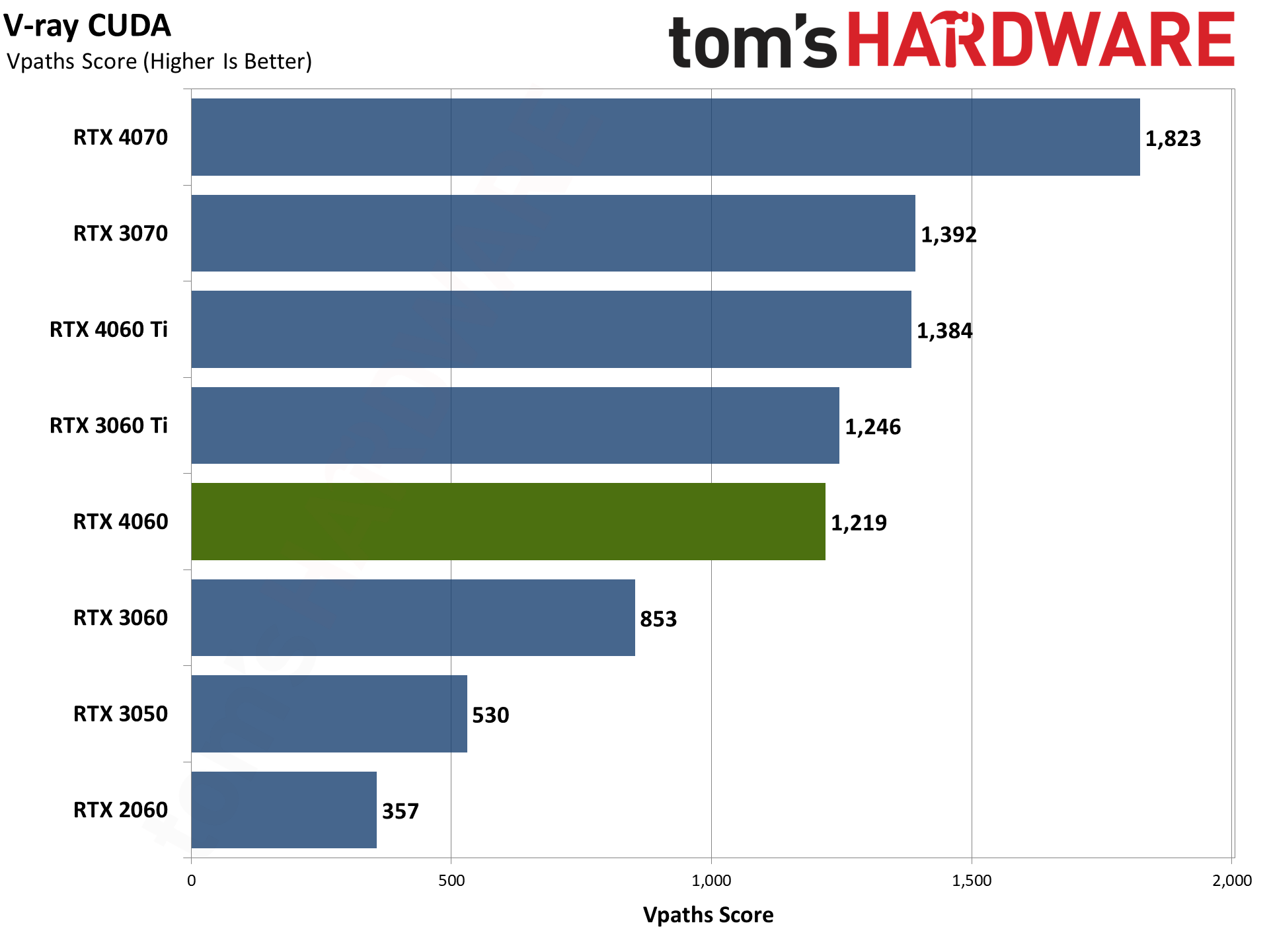

Our final two professional applications only have ray tracing hardware support for Nvidia's GPUs. OctaneBench puts the RTX 4060 just behind the 3060 Ti again, while V-Ray has the 4060 nearly tied in CUDA mode, and slightly ahead when using OptiX rendering. The RTX 3060 trails by a decent margin in either case.

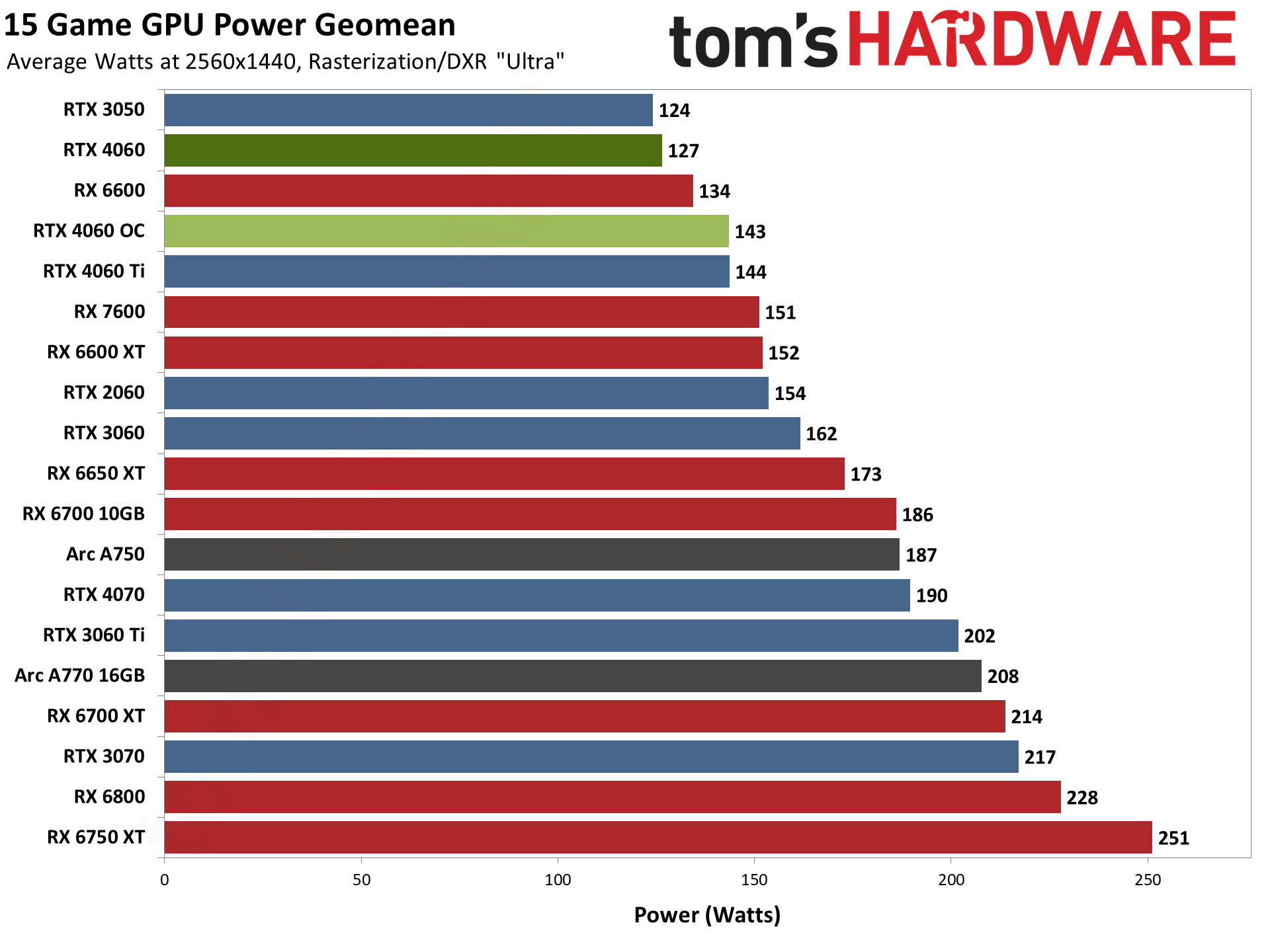

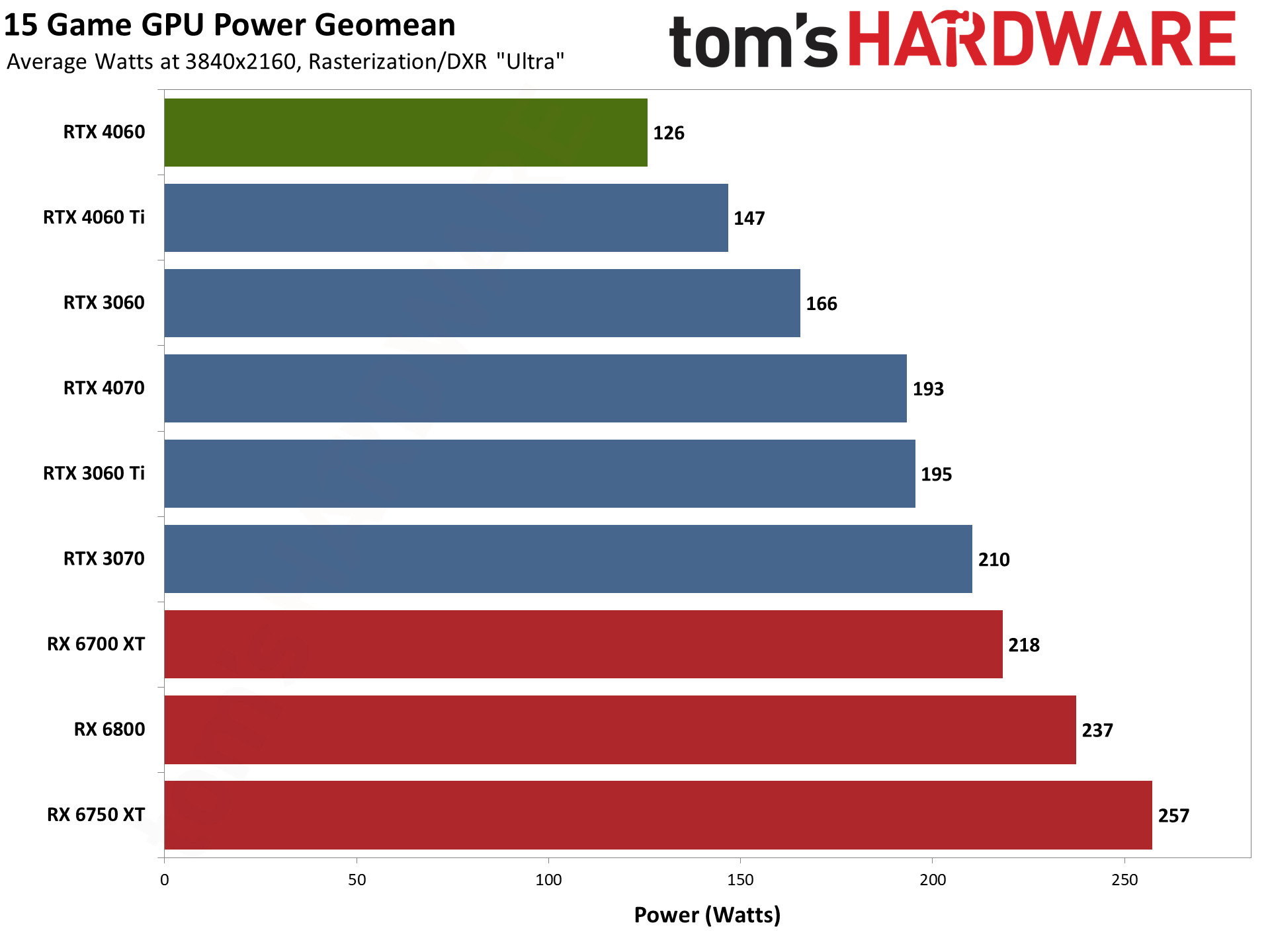

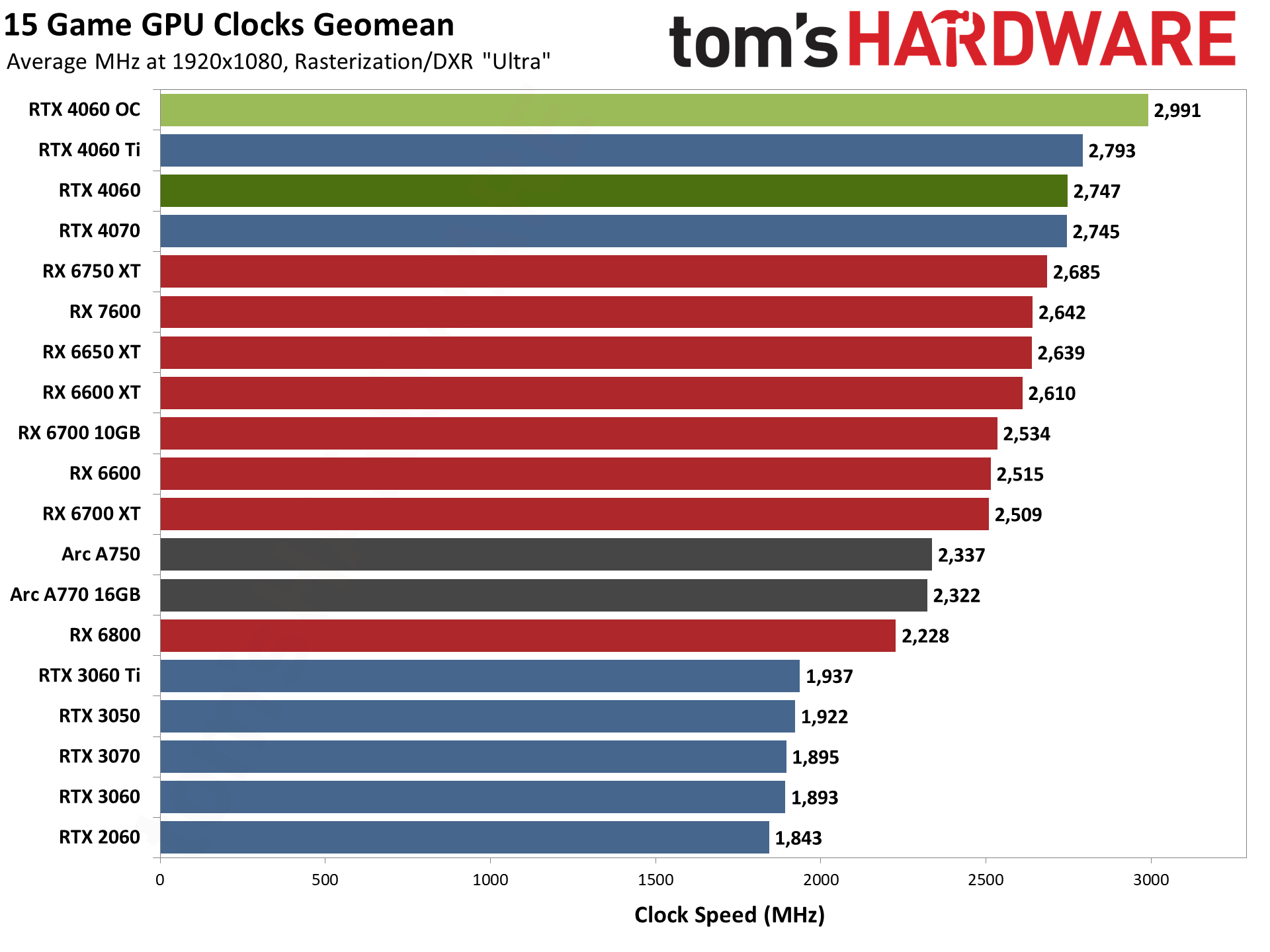

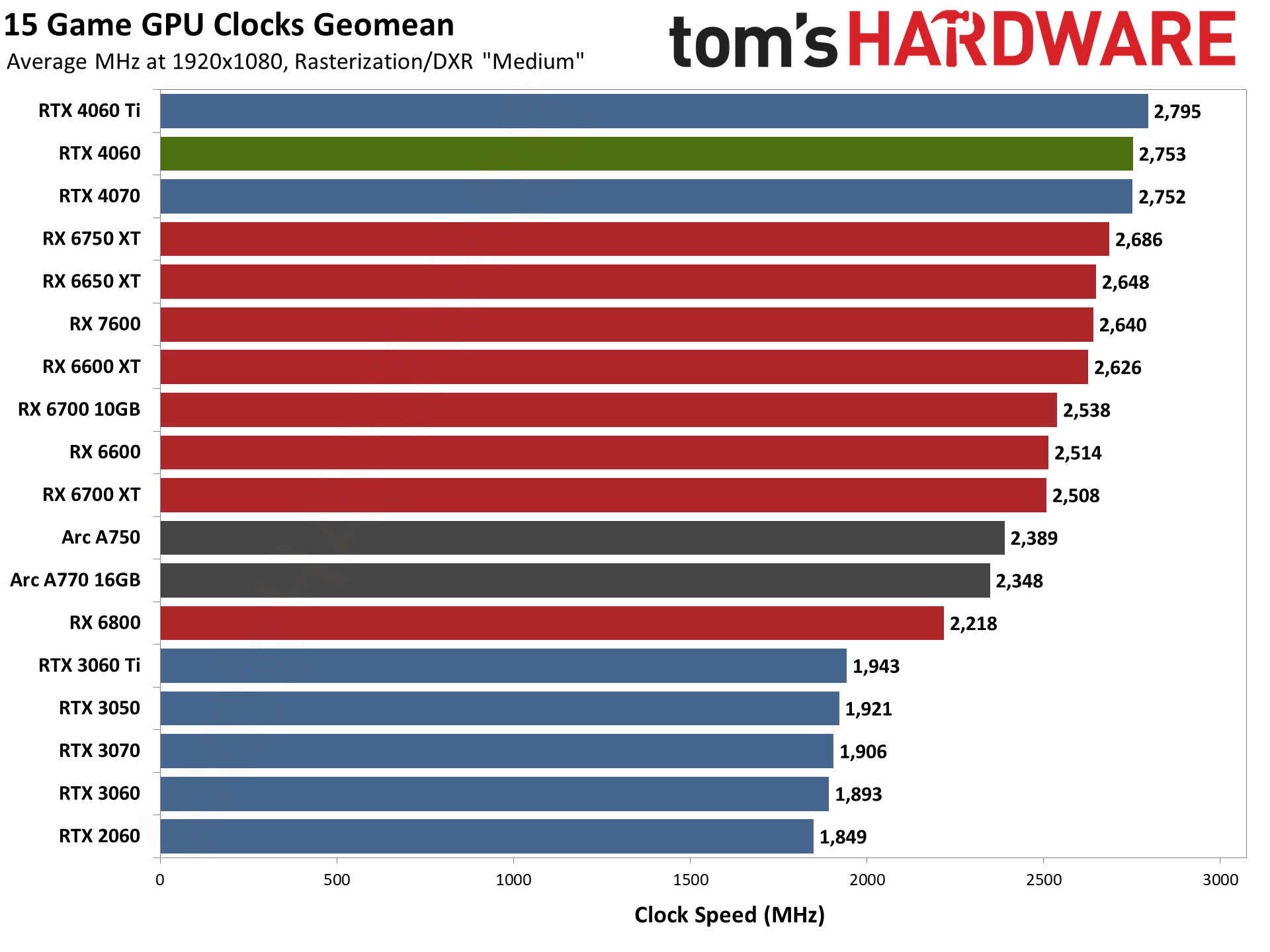

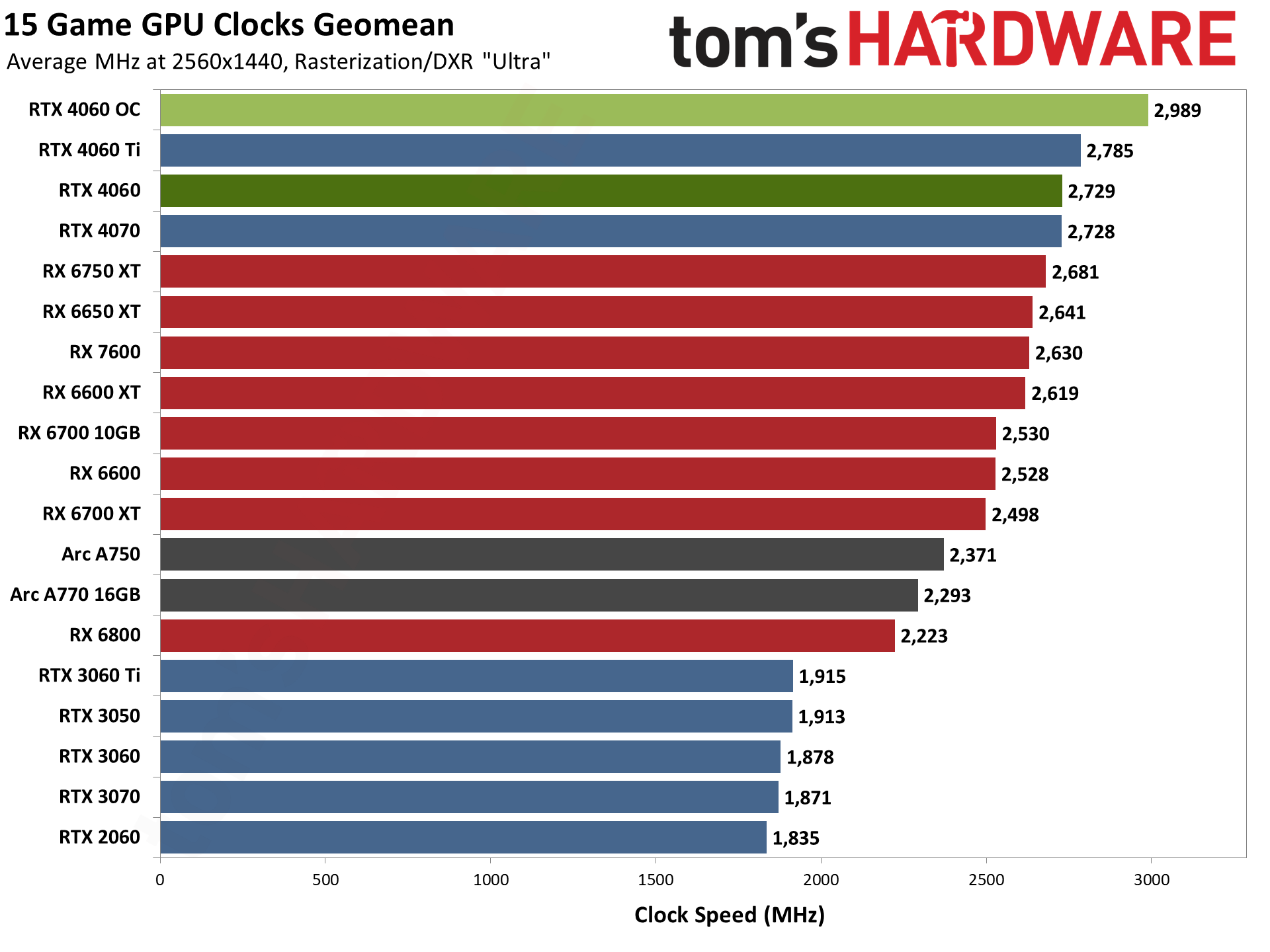

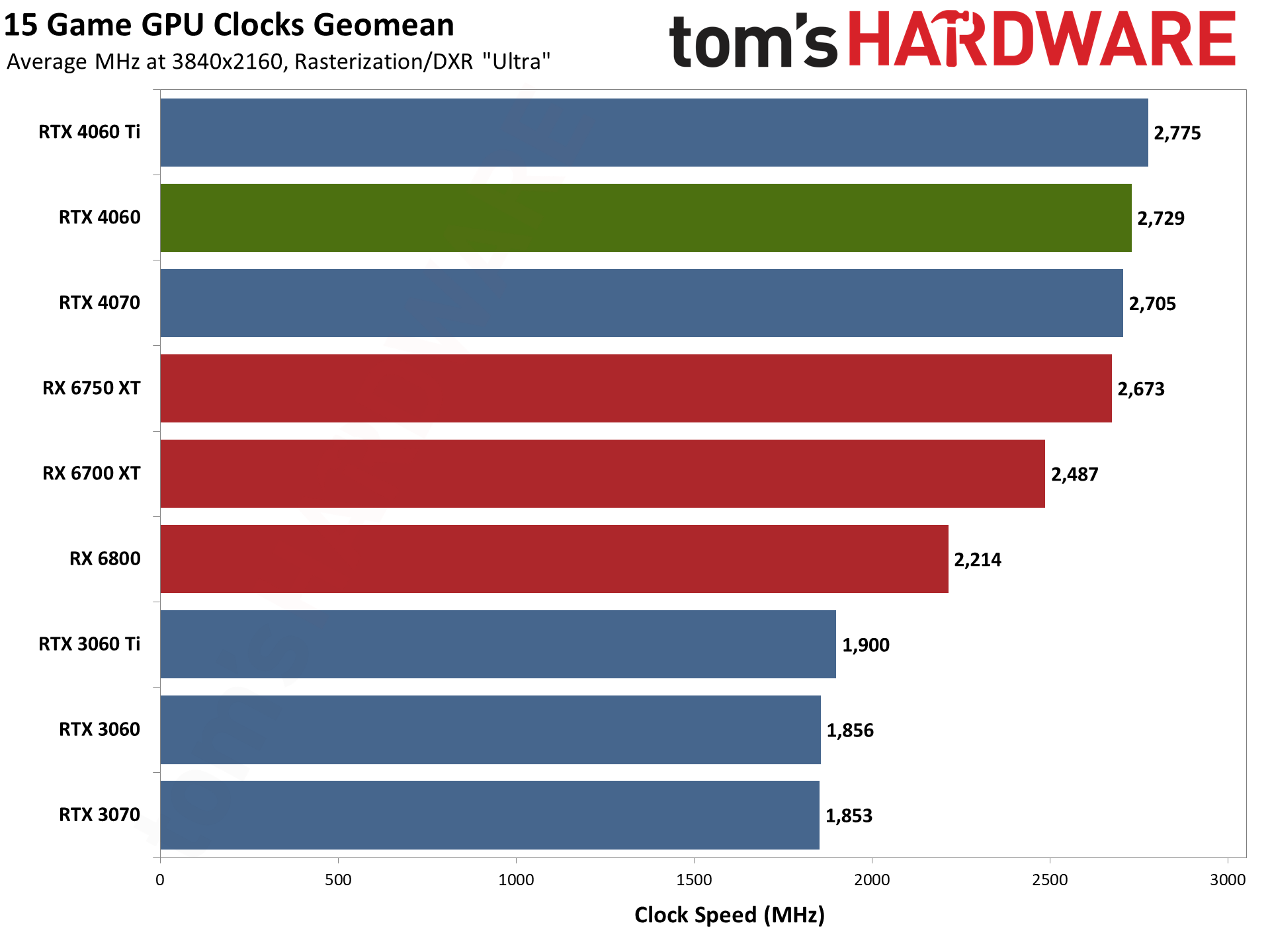

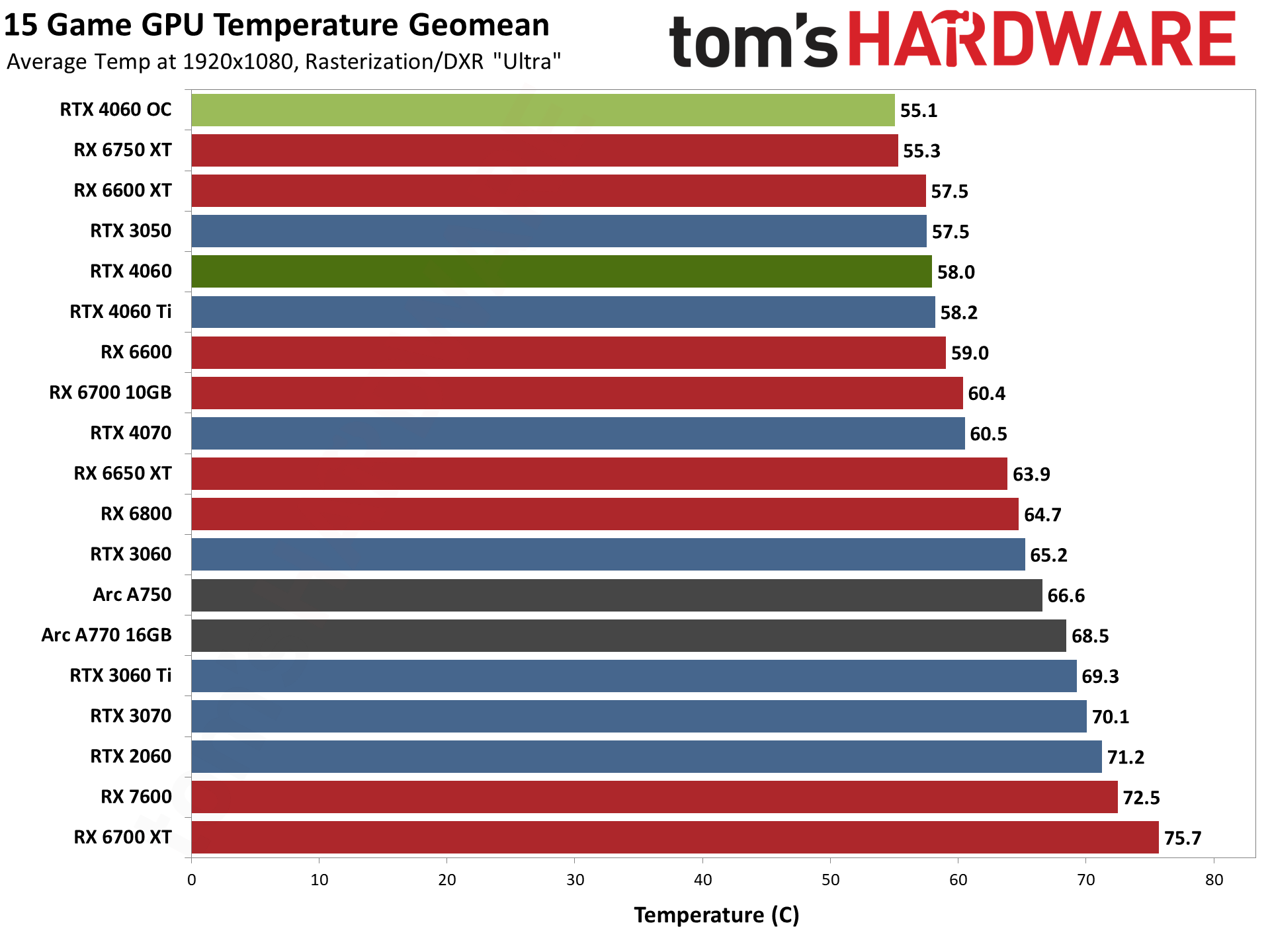

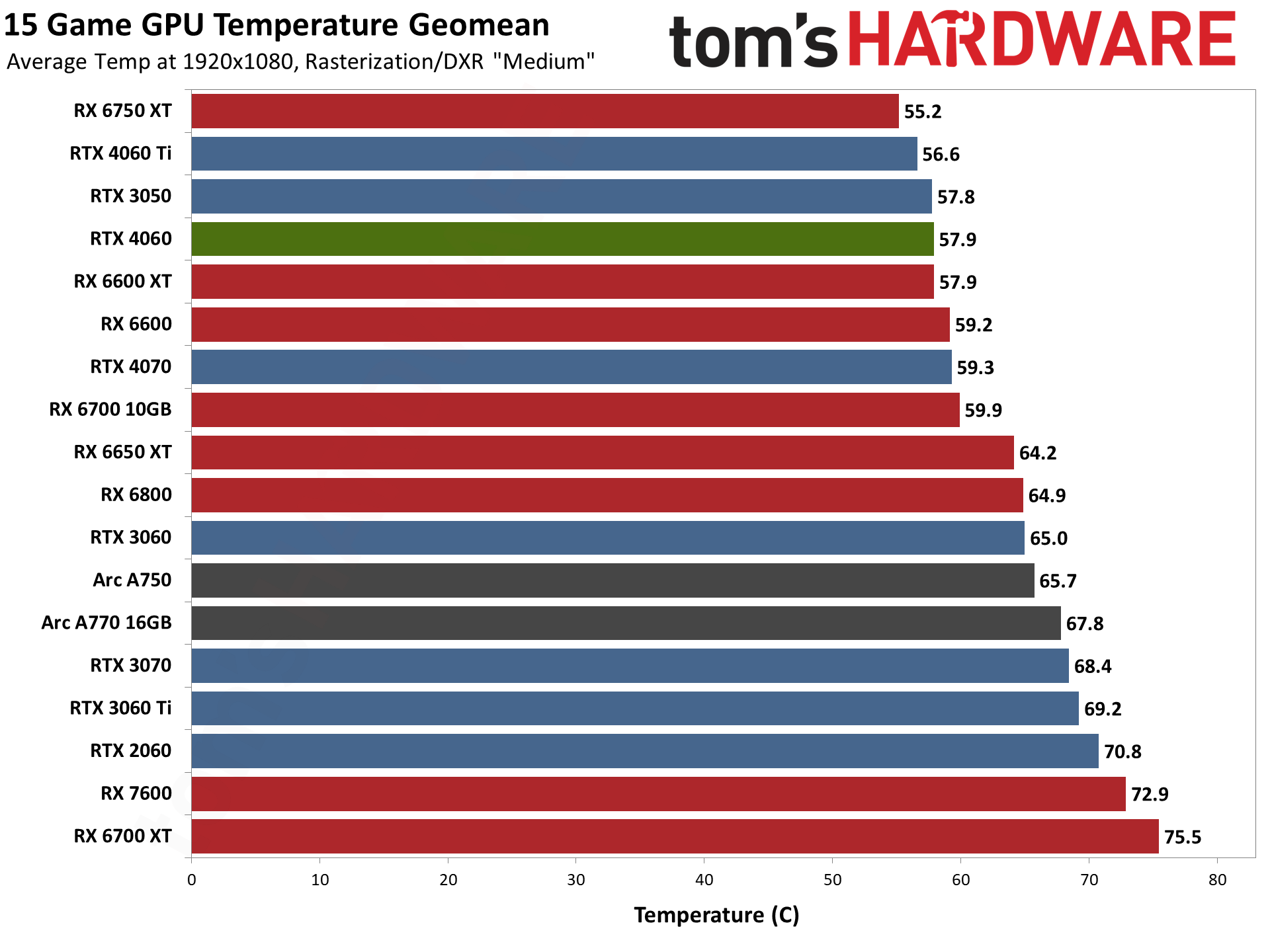

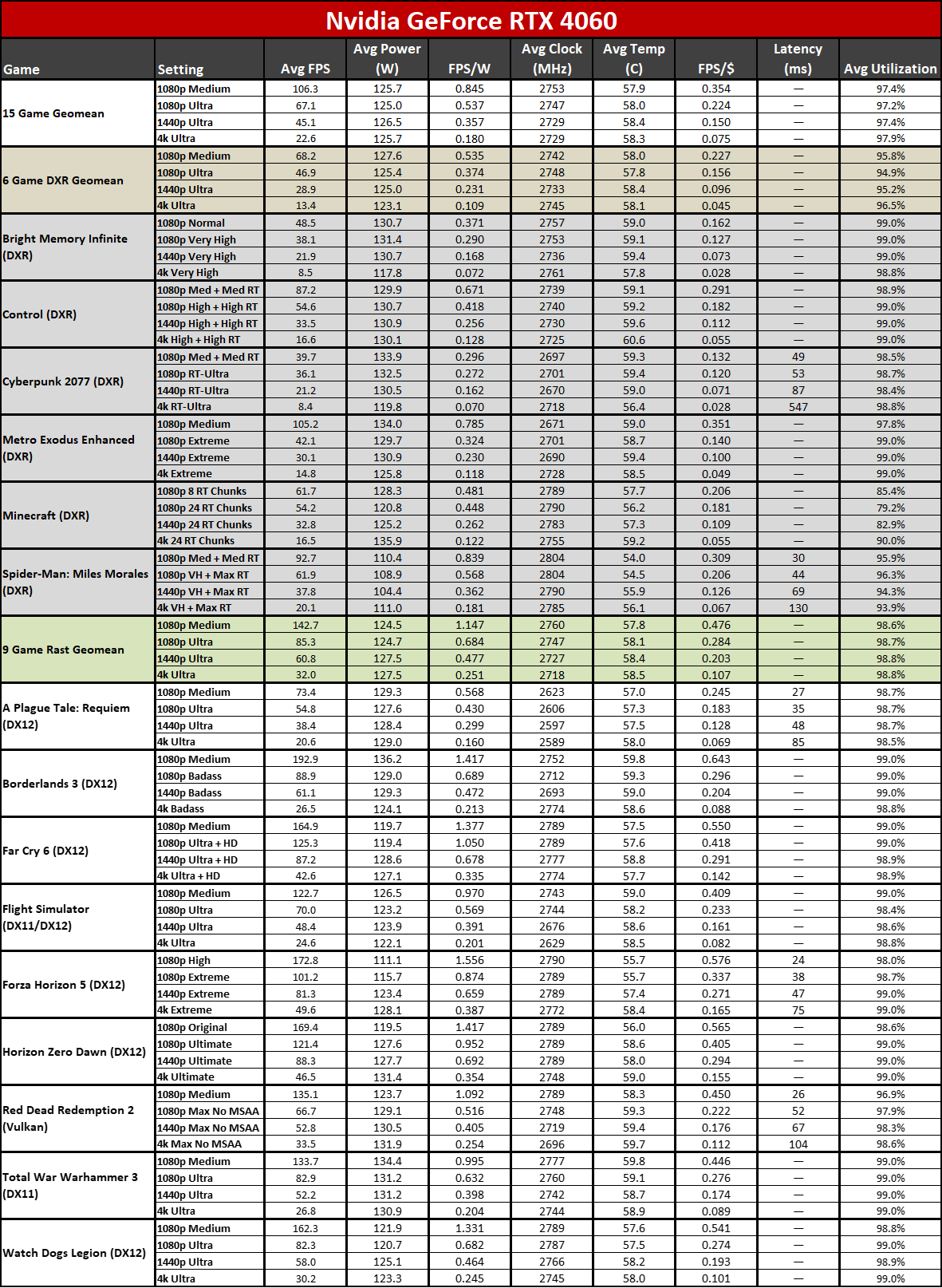

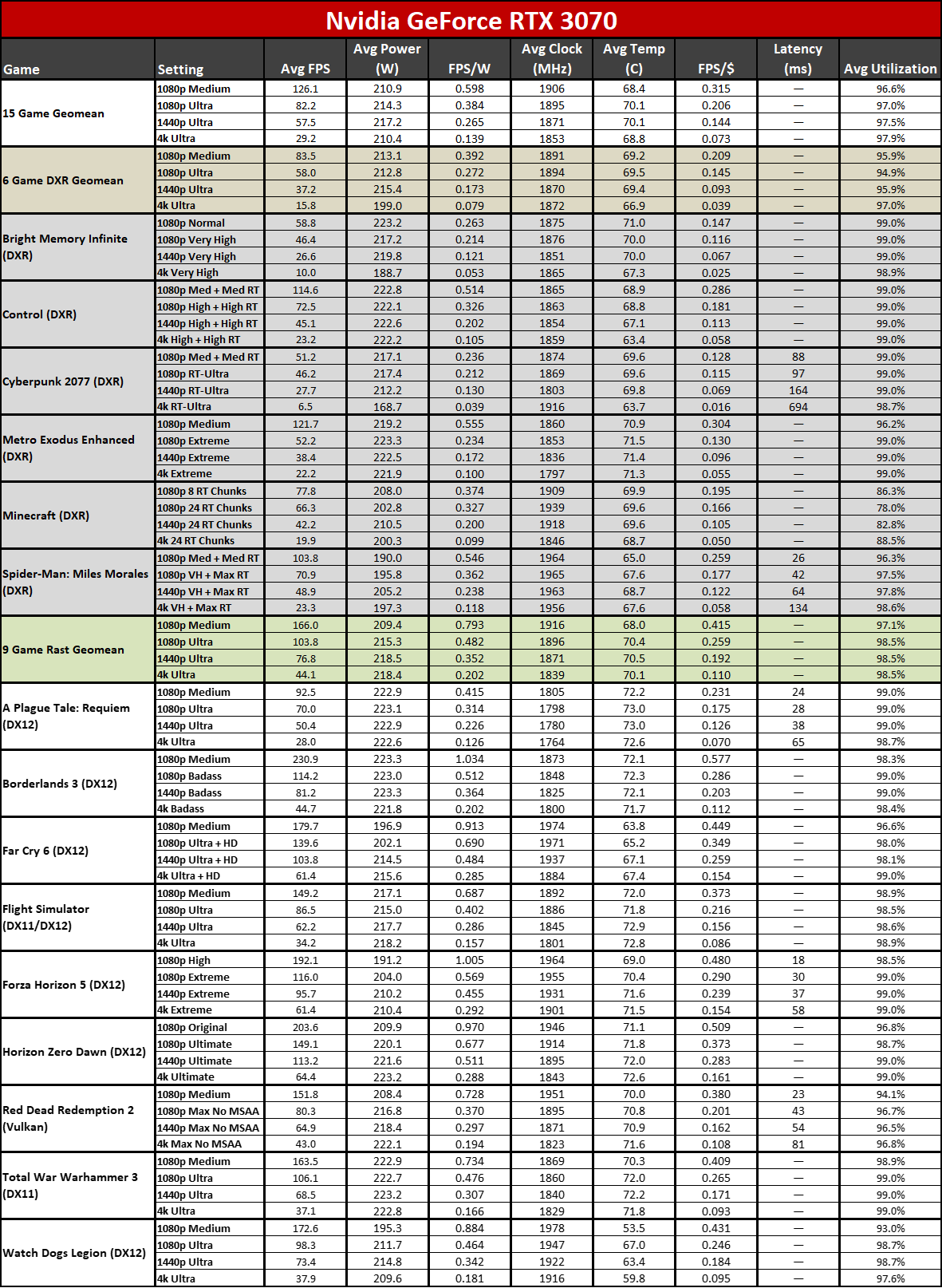

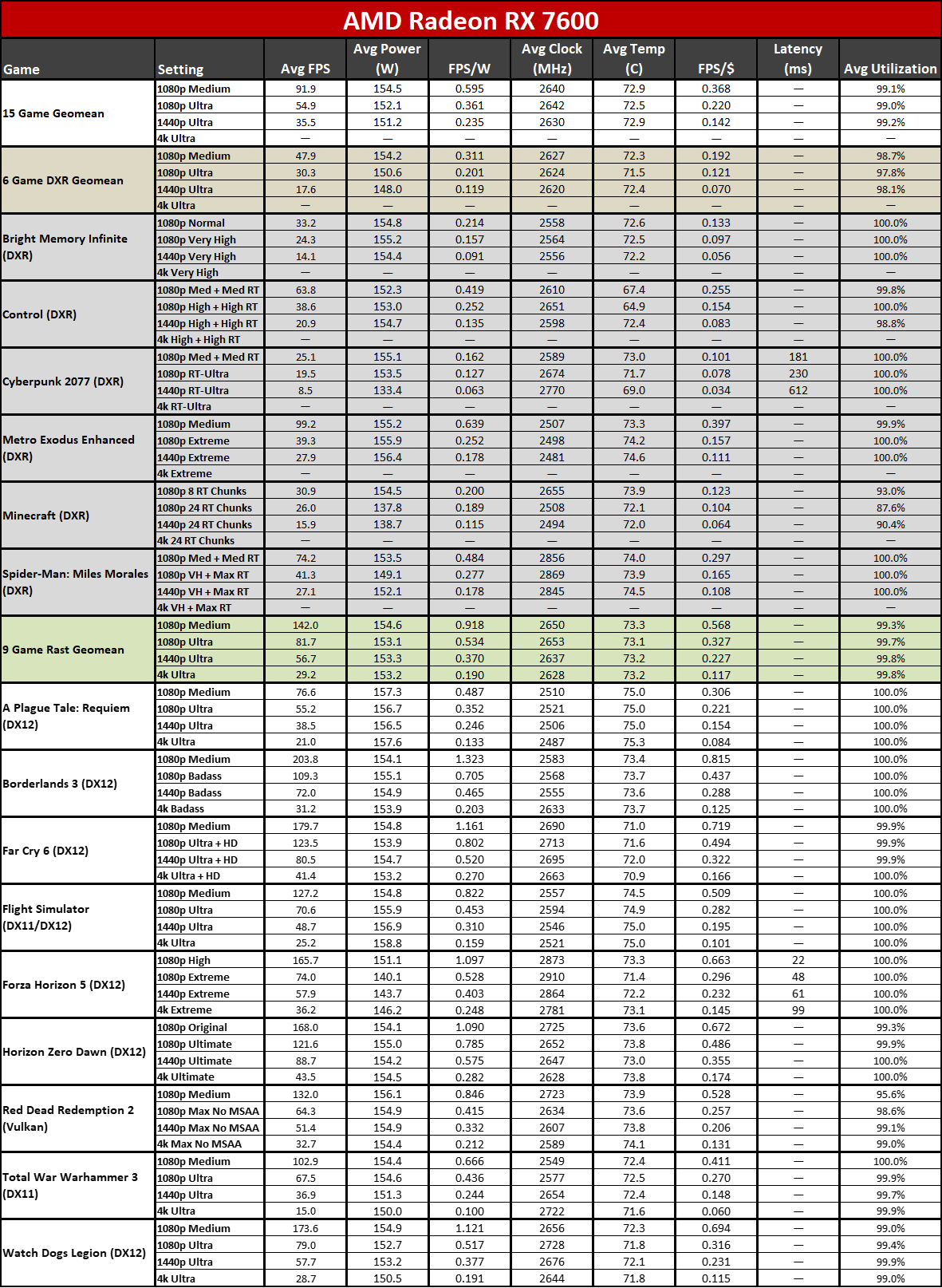

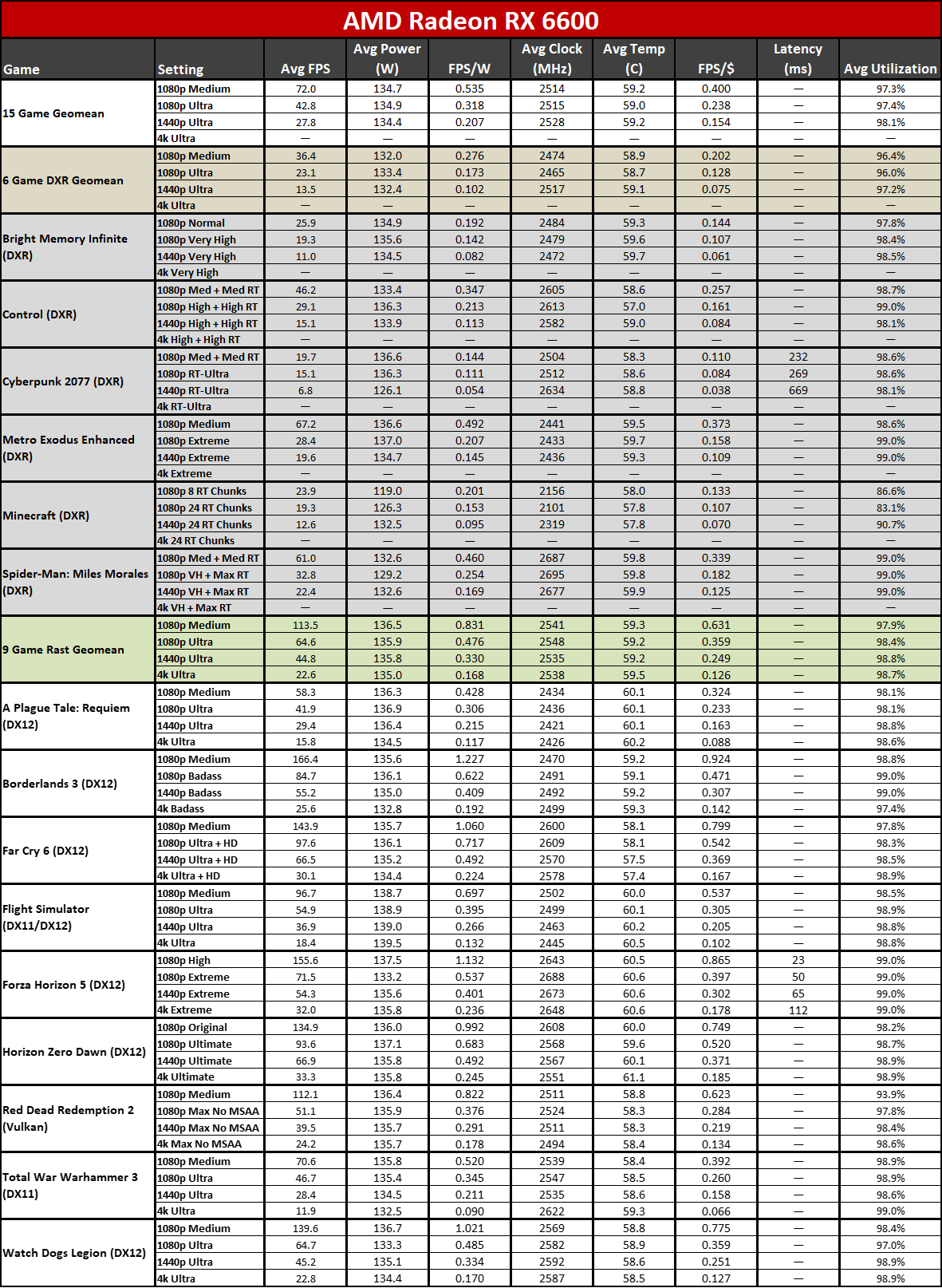

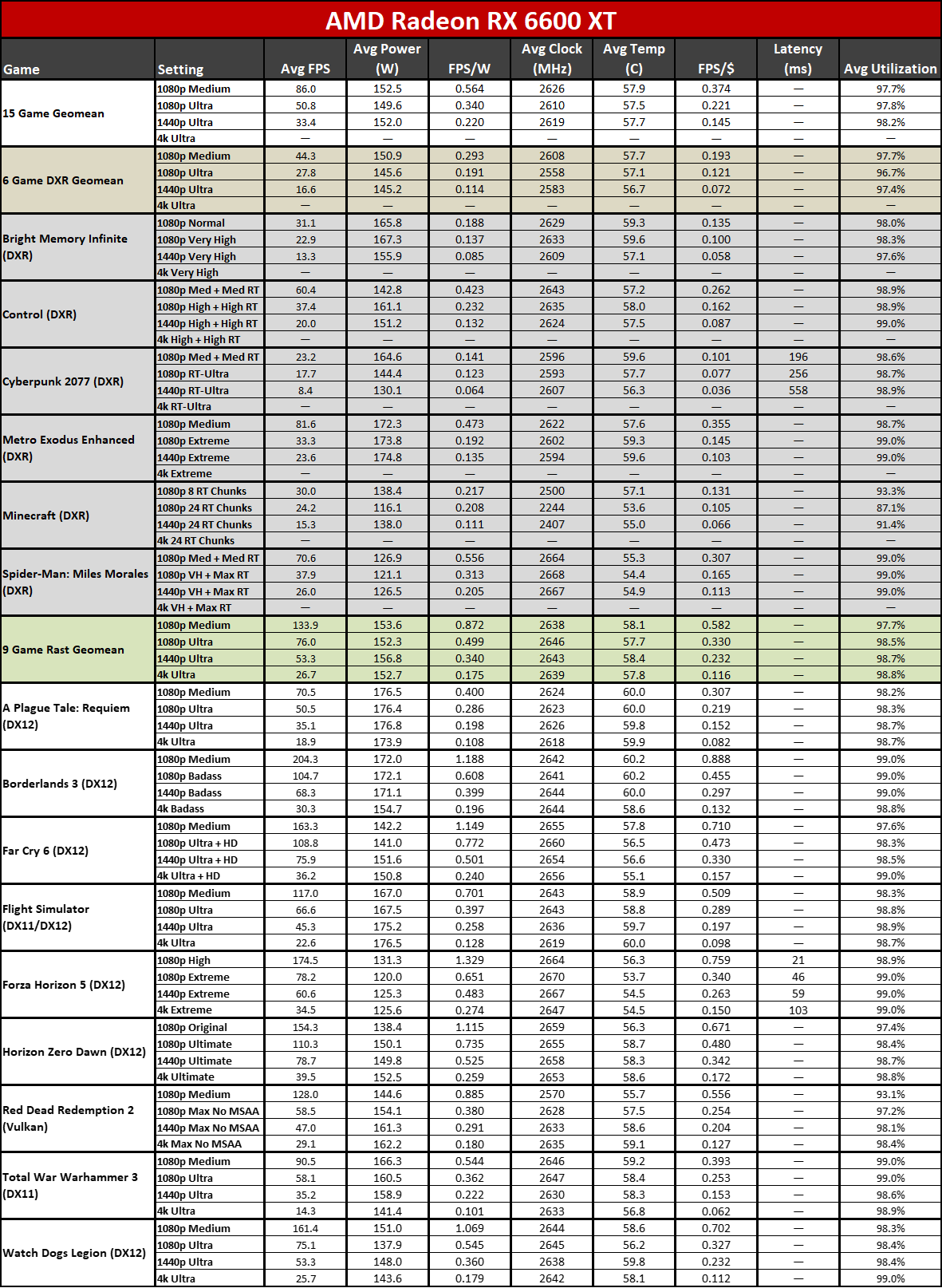

We've been working on this for a while, retesting all the GPUs on our new test PC using an Nvidia PCAT v2 device, and we've finally made the switch from the Powenetics hardware and software we've previously used. The benefit is that we're now using data from our full gaming suite, rather than just a couple of specific tests. The charts are the geometric mean across all 15 games, though we'll also have full tables showing the individual results — some games are more taxing than others, as you'd expect.

Our new testing approach also provides data on GPU clock speeds and temperatures (but not fan speeds), and we'll have separate charts for 1080p medium, 1080p ultra, 1440p ultra, and 4K ultra. Besides the power testing, we also check noise levels using an SPL meter. This is done at a distance of 10cm from the card in order to minimize noise pollution from other sources (like the CPU cooler).

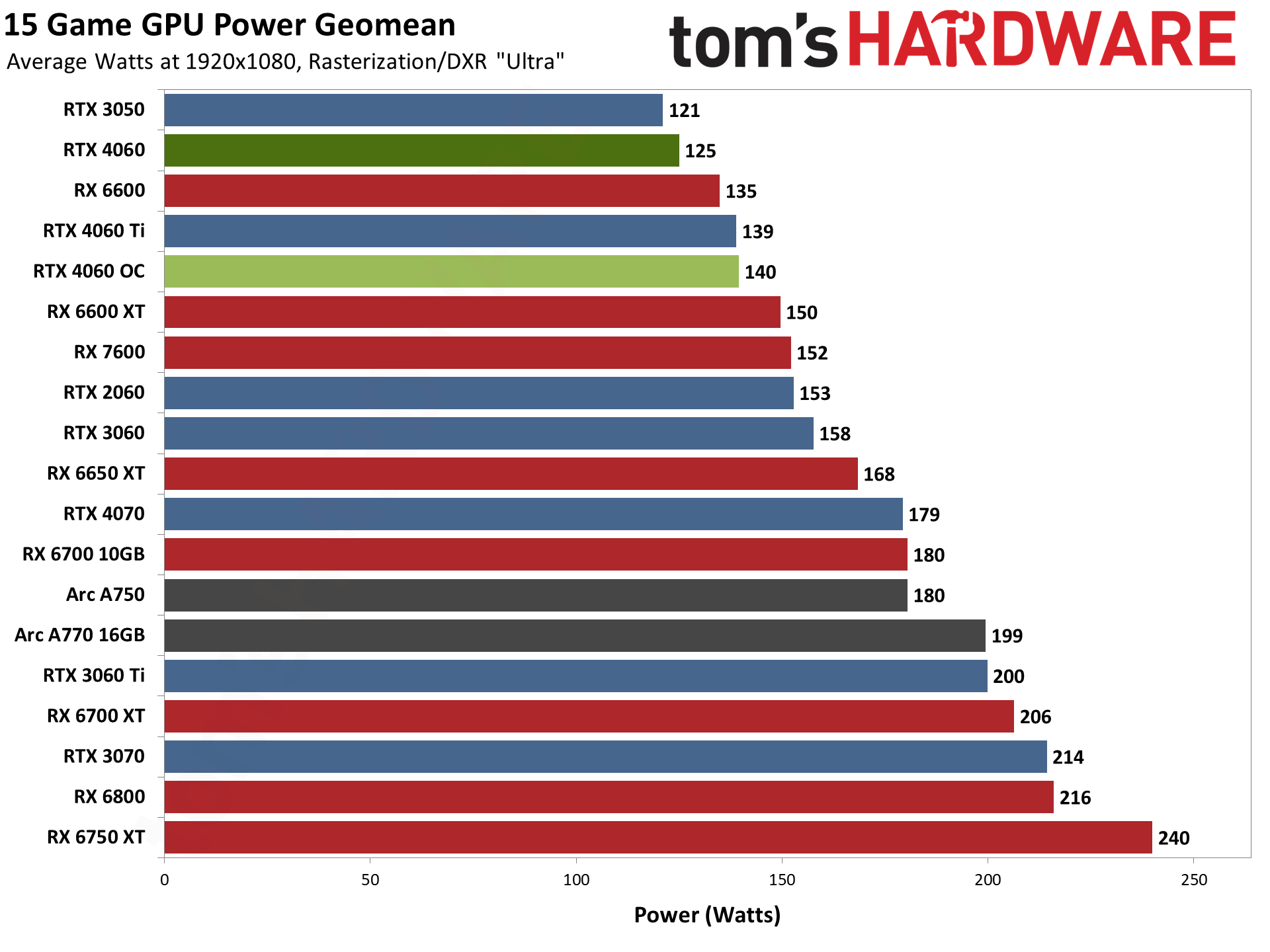

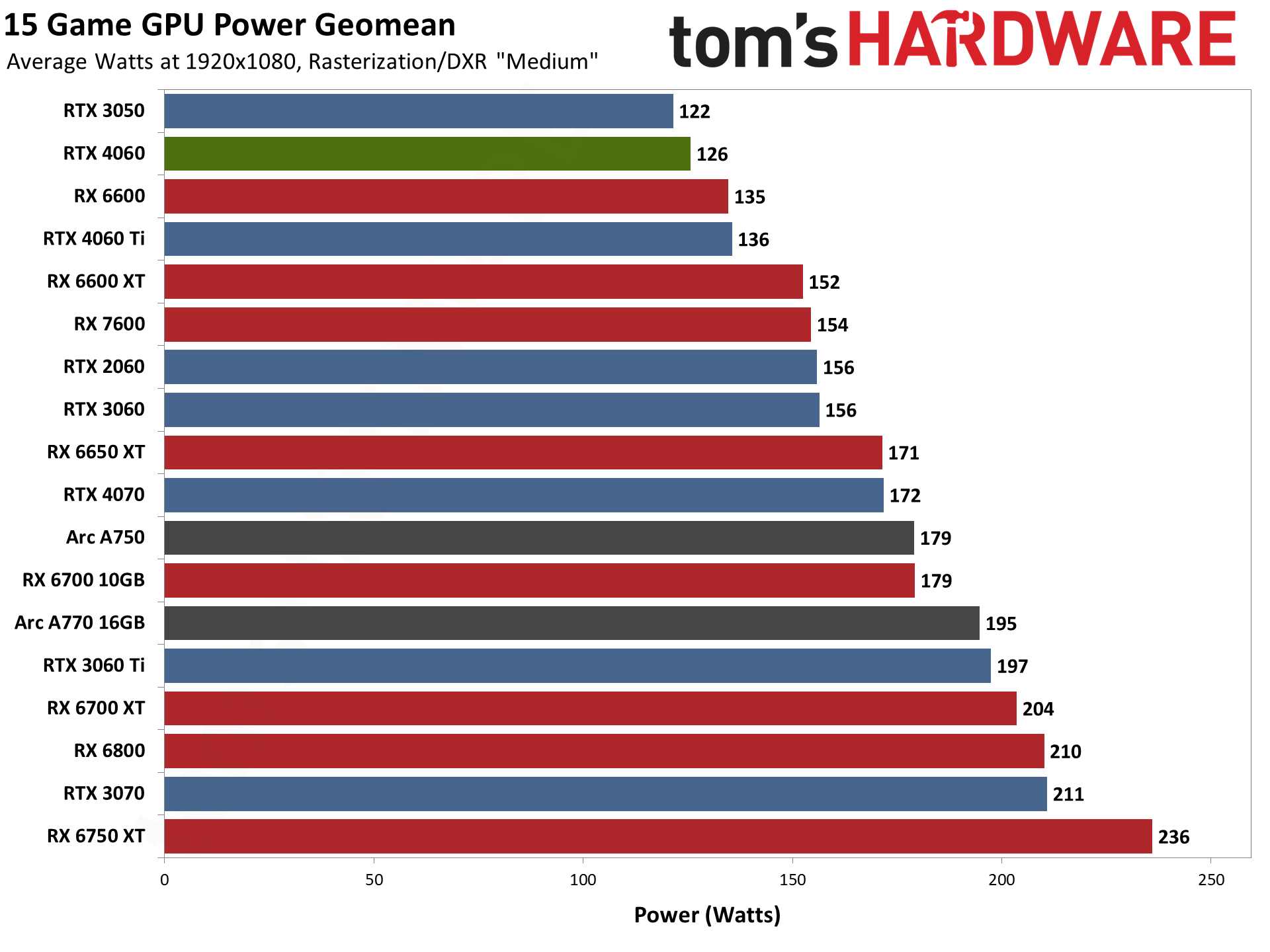

Starting with power consumption, the RTX 4060 has a nominal TGP (Total Graphics Power) of 115W. However, the Asus RTX 4060 Dual OC appears to have a slightly higher power limit of around 130W, based on our testing. On average, at 1080p ultra the Asus card consumed 125W. The other resolutions and settings don't change that too much, with average power use of 126–127 watts.

The result is a good overall efficiency score of 0.537 FPS/W at 1080p ultra, but that's not as good as the RTX 4060 Ti's 0.597 FPS/W. AMD's RX 7600 meanwhile lands at 0.361 FPS/W, while the RX 6700 XT only achieves 0.320 FPS/W. Overclocking doesn't change the efficiency too much either, with the 4060 OC still getting 0.525 FPS/W. Anyway, depending on where you live and how much you pay for power, these metrics can be an important factor.
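If you want to compare those efficiency figures directly, a quick ratio does the job. This is just a sketch using the 1080p ultra FPS/W numbers quoted above. The dictionary and function names are ours, chosen for illustration.

```python
# 1080p ultra efficiency figures quoted in the text, in FPS per watt.
EFFICIENCY_FPS_PER_WATT = {
    "RTX 4060": 0.537,
    "RTX 4060 OC": 0.525,
    "RTX 4060 Ti": 0.597,
    "RX 7600": 0.361,
    "RX 6700 XT": 0.320,
}


def relative_efficiency(card: str, baseline: str) -> float:
    """Return how many times more efficient `card` is than `baseline`."""
    return EFFICIENCY_FPS_PER_WATT[card] / EFFICIENCY_FPS_PER_WATT[baseline]


if __name__ == "__main__":
    for other in ("RX 7600", "RX 6700 XT", "RTX 4060 Ti"):
        ratio = relative_efficiency("RTX 4060", other)
        print(f"RTX 4060 vs {other}: {ratio:.2f}x")
```

By these figures, the RTX 4060 delivers roughly 1.5x the frames per watt of the RX 7600 and about 1.7x that of the RX 6700 XT, while landing at around 0.9x the RTX 4060 Ti.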

Consider a moderate 15 hours per week of gaming (an hour or two on weekdays and then some longer sessions on the weekend). AMD's RX 6700 XT might be generally faster, but it consumes 80W more power. Over time, that adds up to 62 kWh per year. If you're lucky and only pay $0.10 per kWh, that's only $6, but some places like California can pay up to $0.30 per kWh, or $18 per year, and various places in Europe are in the $0.50 per kWh range — $30 per year.
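The arithmetic above is easy to rerun for your own habits and electricity rates. Here's a minimal Python sketch, assuming 52 weeks of gaming per year and a constant 80W difference while gaming. The function names are hypothetical, and the figures in the text are rounded down slightly from what this prints.

```python
def annual_energy_kwh(extra_watts: float, hours_per_week: float,
                      weeks_per_year: int = 52) -> float:
    """Extra energy used per year, in kWh, for a given extra power draw."""
    return extra_watts / 1000.0 * hours_per_week * weeks_per_year


def annual_cost(extra_watts: float, hours_per_week: float,
                price_per_kwh: float) -> float:
    """Extra electricity cost per year at the given price per kWh."""
    return annual_energy_kwh(extra_watts, hours_per_week) * price_per_kwh


if __name__ == "__main__":
    extra_watts = 80       # RX 6700 XT vs. RTX 4060, per the text
    hours_per_week = 15    # "moderate" gaming, per the text

    kwh = annual_energy_kwh(extra_watts, hours_per_week)
    print(f"Extra energy: {kwh:.1f} kWh per year")

    for price in (0.10, 0.30, 0.50):
        cost = annual_cost(extra_watts, hours_per_week, price)
        print(f"At ${price:.2f}/kWh: ${cost:.2f} per year")
```

Swap in your own hours and local rate to see whether the power difference matters over the few years you'd typically keep a card.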

Sure, that's spread out over twelve months, but it's something to think about. You could potentially buy a more efficient GPU and end up paying less in power costs. If you play a lot of games, which we know some people do, the difference in electricity costs could be even wider. It's food for thought, anyway.

GPU clocks aren't super meaningful, in and of themselves, but the Asus RTX 4060 averages about 2750 MHz across our test suite. That's roughly 200 MHz higher than the rated boost clock, which is typical of what we've seen with Nvidia's RTX 40-series GPUs. Our +200 MHz overclock, with a 120% power limit, boosts average GPU clocks to just under 3GHz, a rather impressive result.

It's nice to finally see Nvidia GPUs breaking the 2GHz barrier with ease. Higher clocks can reduce overall efficiency in some cases, but the combination of the Ada Lovelace architecture and TSMC's 4N process node proves potent. You have to wonder if we could start seeing 3GHz and even 4GHz GPUs with the next generation parts.

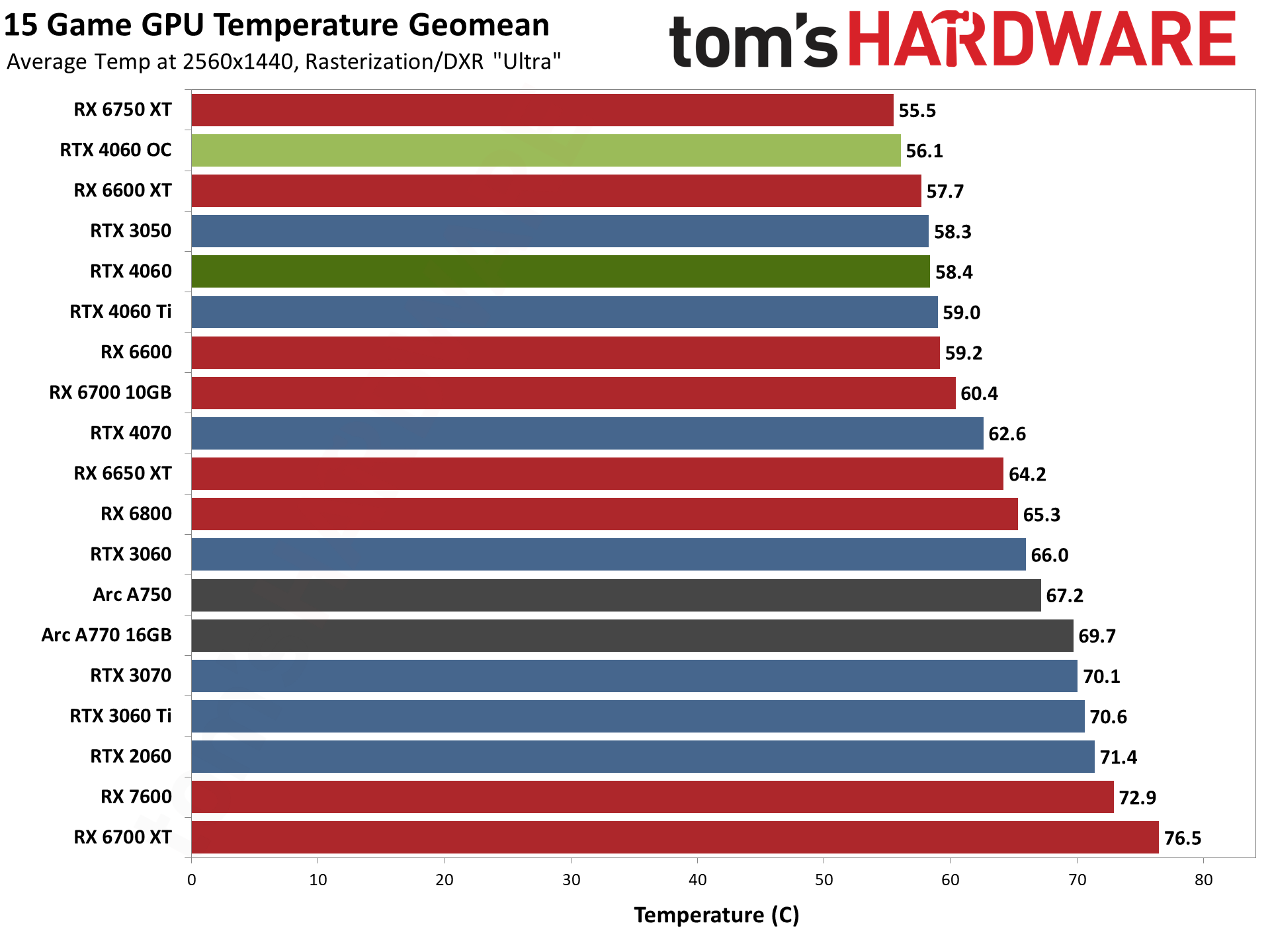

Temperatures are very good on the Asus RTX 4060 Dual OC. We expected as much, considering the relatively large heatsink, quality fans, and low TGP. On average across our test suite, the Asus card landed just under 60C, with the worst result being 61C in Control.

Overclocking didn't make things worse, thanks to our aggressive fan speed curve, with temperatures still staying under 60C. But temperatures don't exist in a vacuum; we also have to look at noise levels.

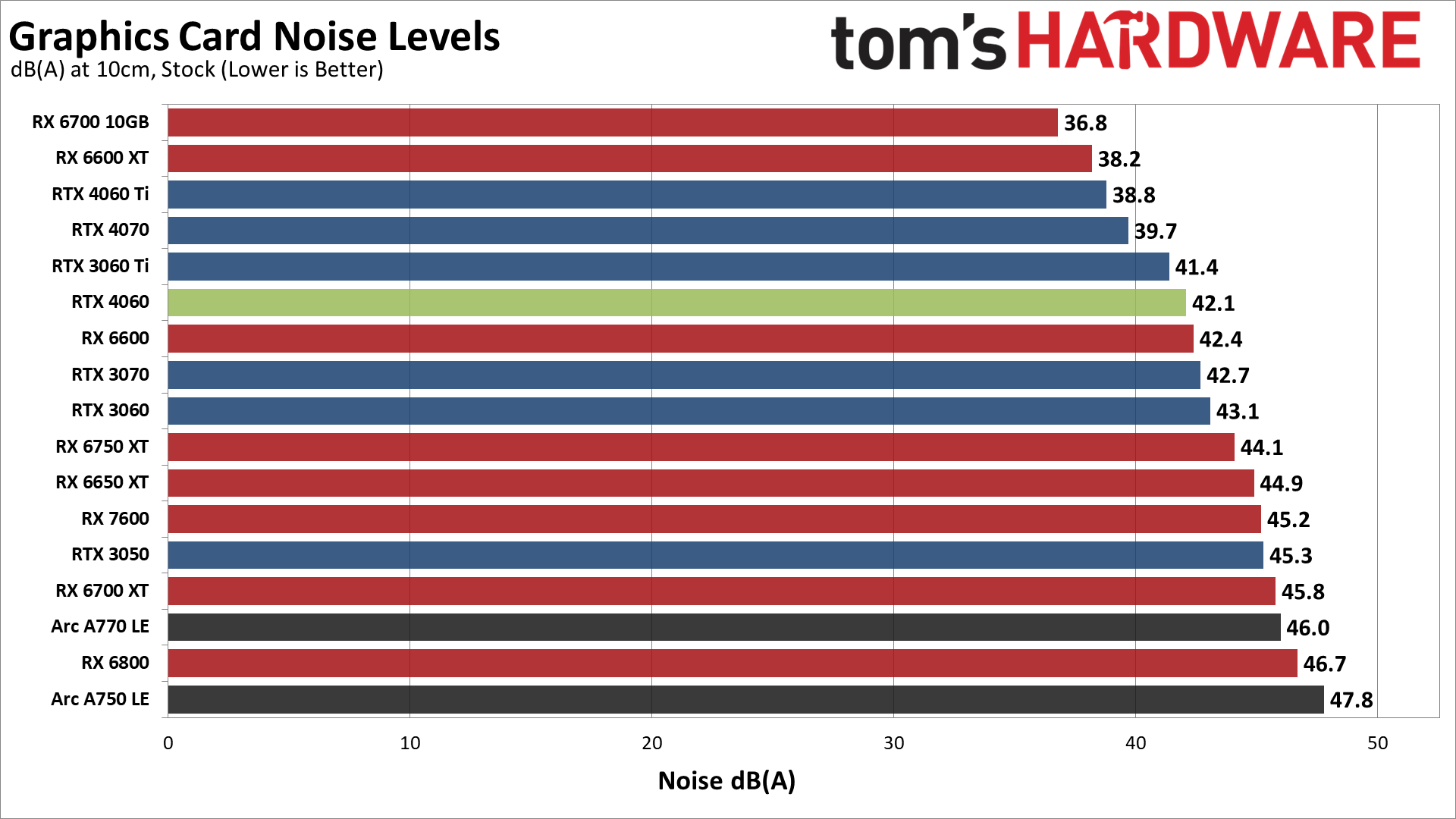

With an average power draw of 125W, the Asus card shouldn't have to work too hard to keep temperatures in check. For our noise testing, we run Metro Exodus for over 15 minutes and let things settle down. It's typically one of the most demanding games in our test suite, as far as GPU power draw goes, which means this should be a worst-case scenario for fan speed and noise.

We use an SPL (sound pressure level) meter placed 10cm from the card, with the mic aimed at the center of the right fan on the Asus card. This helps minimize the impact of other noise sources like the fans on the CPU cooler. The noise floor of our test environment and equipment is around 31–32 dB(A).

The Asus RTX 4060 Dual OC settled in at a fan speed of 47% after a few minutes. As expected, it doesn't make too much noise, registering 42.1 dB(A) on our meter. It's not the quietest card around, and we do have to wonder if some company might be daring enough to try a passively cooled RTX 4060. We've seen such cards in the past, and a 125W TGP ought to be manageable, assuming you have a bit of airflow from case fans.

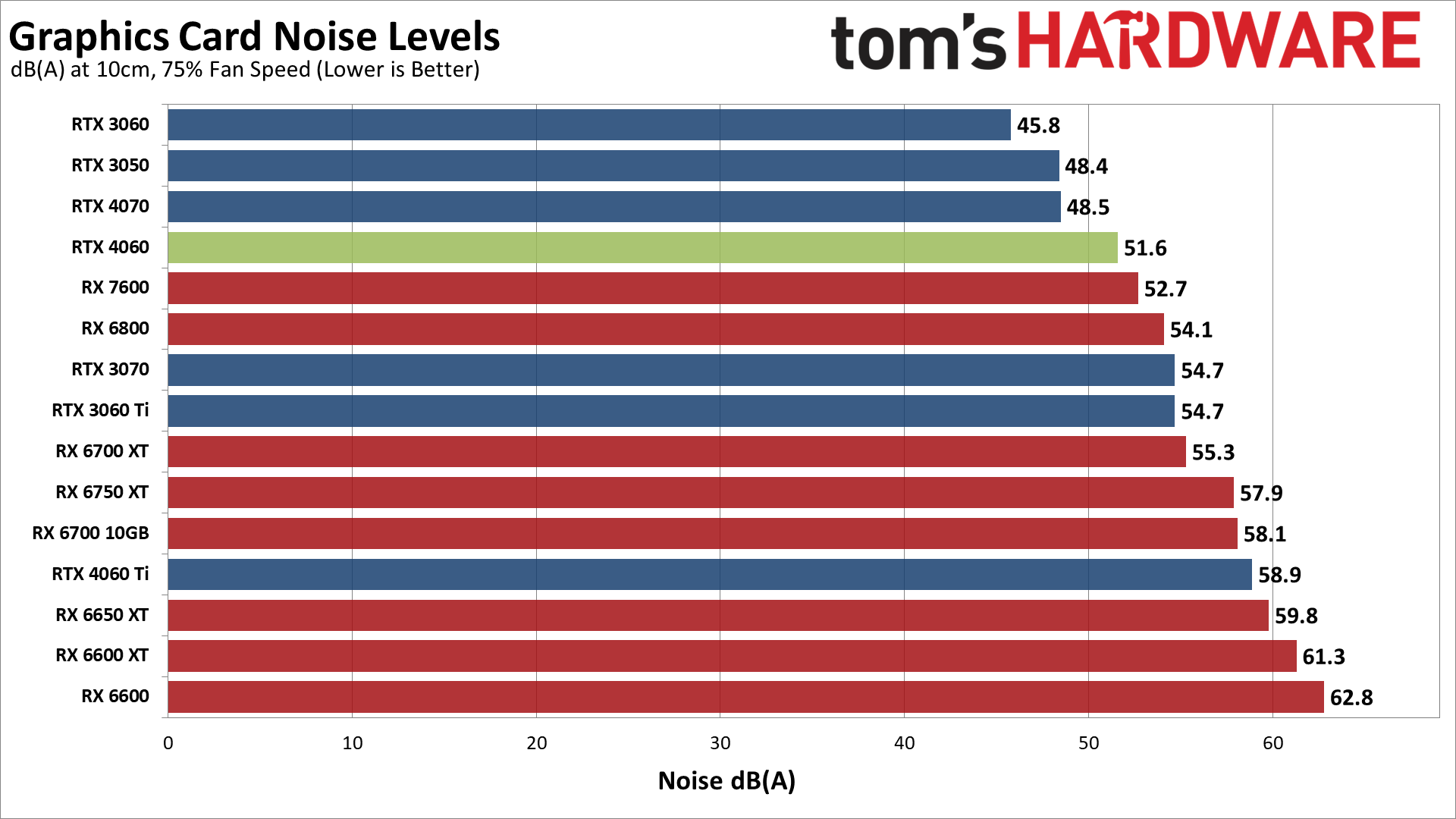

We also tested with a static fan speed of 75%, and the Asus RTX 4060 generated 51.6 dB(A) of noise. The card shouldn't need to hit such a high fan speed under normal use, or even with overclocking, but it does give you some idea of what you can expect in warmer environments.

Here's the full rundown of all of our test results, including performance per watt and performance per dollar columns. We've covered most of this information elsewhere, though the value aspect isn't something we've addressed directly.

As you'd expect, lower priced cards like the RTX 4060 rank higher on the value scale than more expensive cards, though that's only looking at the cost of the GPU and not the full system. Based on current online prices, it's the best value Nvidia GPU, just edging out the RTX 4060 Ti... and the RTX 3060 Ti and RTX 3060. Those last two are basically on clearance now, though, and any sales are unlikely to last long.

That doesn't mean the RTX 4060 is the best value overall, however. AMD's RX 6600, 6600 XT, and 6650 XT all have slightly better value rankings, and the RX 6700 XT is only a hair behind the 4060. Prices on previous generation graphics cards have also been fluctuating quite a bit, so a good sale could easily push some other card to the top for a bit. Still, the 4060 does offer a good combination of features, price, and efficiency.

The Nvidia RTX 4060 brings Ada Lovelace down to a truly mainstream price point of $299. That's the same as the launch price of the GTX 1060 6GB Founders Edition back in 2016, $50 cheaper than the RTX 2060 Founders Edition, and $30 cheaper than the RTX 3060 launch price. That's great for mainstream gamers, particularly those with a graphics card from several generations back who are looking to upgrade. However, the RTX 4060 definitely makes some compromises, chief among them being the 128-bit memory interface and 8GB of VRAM. Let's put that in perspective.

Last generation's RTX 3060 had a 192-bit interface and 12GB of memory. Prior to that, Nvidia used 192-bit interfaces with 6GB or even 3GB of memory for several generations. The RTX 2060 was a 192-bit interface with 6GB (and later 12GB, though those models came late in the cycle and were rarely seen). GTX 1660-series GPUs were also 192-bit with 6GB of memory. The GTX 1060 had a 192-bit interface, with either 3GB or 6GB. In fact, you have to go all the way back to the GTX 960 from 2015 before you can find an xx60-class Nvidia card that used less than a 192-bit interface.

Viewed in that light, Nvidia has rolled back the clock eight years. Yes, the large L2 cache helps mitigate the reduced memory bandwidth of the 128-bit interface, but it really, really sucks that we even need to have this conversation. When Nvidia designed the various Ada Lovelace GPUs, it skimped on memory interface width and thus memory capacity on virtually every model, other than the top-tier RTX 4090. After the RTX 3060 moved to 12GB, it's a slap in the face to go back to 8GB on the mainstream model.

Is it the end of the world? No. Many games will still run fine with 8GB. All games that are coded in an intelligent fashion should have an option for texture settings that won't exceed 8GB at 1440p or 1080p, at least for the next several years. But there will invariably be games that push beyond 8GB, and it's definitely a step back from the 3060's 12GB. We thought we were done with 8GB on cards priced above $300 after the previous generation, but Nvidia giveth, and Nvidia taketh away.

Like the RTX 4060 Ti, we'd feel so much better about the RTX 4060 if it had 12GB of memory and a 192-bit memory interface. We also need to look at generational improvements to see how much Nvidia is counting on "neural rendering" and specifically DLSS 3 Frame Generation to try and tell a story about improved performance.

Based on our GPU benchmarks hierarchy testing, the RTX 2060 delivered 75% higher performance than the prior generation GTX 1060 6GB. There was also the GTX 1660 Super that dropped the ray tracing and DLSS aspects of the Turing architecture and got the price down to $229, while still delivering 40% higher performance than the 1060 6GB. Last generation's RTX 3060 meanwhile was about 30% faster than the RTX 2060 — not a huge improvement in performance, but still pretty reasonable, especially since it came with twice as much VRAM.

The new RTX 4060? It's 22% faster than the RTX 3060 on average, at 1080p, but it also comes with 33% less memory. It's one of the smallest generational improvements we've seen from Nvidia for an xx60-class GPU going back to at least the Maxwell era, and probably further than that.

Nvidia will trot out performance charts like the above showing performance with DLSS upscaling and frame generation to make things look better. If you buy into the marketing, with frame generation (aka, neural rendering), the RTX 4060 offers up to a 70% generational improvement over the RTX 3060. That's a seriously skewed view of how Frame Generation works and how it feels, unfortunately.

In practice, we'd credit Frame Generation with maybe a 15% boost to the overall performance you get from the RTX 4060 and other RTX 40-series GPUs. It's not bad in and of itself, but it's also not ideal. You're trading off lower latency and increased responsiveness for smoother framerates. It's definitely something I can feel when playing games.

There are other concerns as well. For one, DLSS 3 support is far more limited than DLSS 2 support right now. It's in something like 38 games at present, compared to over 300 games with DLSS support in general. But another issue is that we've encountered several DLSS 3 titles where the feature basically broke because of a patch.

When we tested Diablo IV, we found that enabling Frame Generation reduced performance on all of the RTX 40-series GPUs. We haven't confirmed whether the latest game updates have fixed the problem, but even so, it wasn't really needed. For this review, Frame Generation also broke in Forza Horizon 5, though Nvidia's own numbers suggest that it would only have improved performance by about 25% — the reviewer's guide for the 4060 listed 136 fps at 1080p, compared to the 116 fps that we measured.

But again, half of those frames are interpolated, so the base fps (the rate at which user input is sampled) would be 68 compared to the DLSS upscaled without Frame Generation result of 108 fps. Does a real 108 fps feel better than an interpolated 136 fps? Yes, we think it does. Does it look better to the eyes? That's a bit more debatable, though Frame Generation does at least allow people to make better use of high refresh rate monitors.

The RTX 4060 isn't a terrible card by any means. Some people will probably say it is, but across our benchmark suite, it was universally faster than the previous generation RTX 3060 at every setting that mattered (meaning, not counting 4K ultra performance, where neither card delivered acceptable performance). There will be edge cases where it falls behind, like Spider-Man: Miles Morales running 1440p ultra, where minimum fps was clearly worse than on the 3060. But overall? Yes, it's faster than the previous generation, and it even cuts the price by $30 — not that the RTX 3060 was available for $329 during most of its shelf life.

There are other benefits, like the power efficiency. The RTX 3060 consumes about 35W more power than the Asus RTX 4060, for example. Better performance while using less power is a good thing. Other architectural benefits include the AV1 encoding support, and features like Shader Execution Reordering, Opacity Micro-Maps, and Displaced Micro-Meshes might prove beneficial in the future — we wouldn't bet heavily on those, however, as they're all exclusive to the RTX 40-series GPUs right now and require the use of API extensions.

The saving grace for the RTX 4060 is undoubtedly its price tag. AMD already has cards that deliver generally similar performance at the same price, but there are a lot of gamers that stick with Nvidia, regardless of what other GPU vendors might have to offer. Now you can get a latest generation RTX 4060 for $299, with better performance and features than the RTX 3060. Just don't expect it to behave like an RTX 4070 that costs twice as much.

A more benevolent Nvidia, flush with the cryptocurrency and AI profits of the past two years, would have made this an RTX 3050 replacement. With the same $249 price, it would have been an awesome generational improvement, as it's 66% faster than the 3050. That's basically what Nvidia did with the RTX 4090, which is up to 60% faster than the RTX 3090 for nearly the same price. But every step down the RTX 40-series has been a tough pill to swallow.

The RTX 4080 is about 50% faster than the 3080, yet it costs 70% more. The 4070 is 30% faster than the 3070 and costs 20% more. The 4060 Ti has the same launch price as the 3060 Ti, but it's only 12% faster. Now we have the RTX 4060 that undercuts the price of the 3060 by 10% while delivering 20% better performance. That's better than most of its siblings, and maybe there's still hope for an RTX 4050... but probably not.
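One way to line up those comparisons is to fold the performance and price changes into a single performance-per-dollar figure. The sketch below uses only the rounded percentages from the paragraph above. The function and dictionary names are our own, and treating value as a simple ratio of performance to launch price is an assumption made for illustration.

```python
def perf_per_dollar_change(perf_gain: float, price_change: float) -> float:
    """Fractional change in performance per dollar between two generations.

    perf_gain and price_change are fractions, e.g. 0.50 for +50% and
    -0.10 for a 10% price cut.
    """
    return (1.0 + perf_gain) / (1.0 + price_change) - 1.0


# (performance gain, price change) as quoted in the text.
GENERATIONAL_CHANGES = {
    "RTX 4080 vs 3080": (0.50, 0.70),
    "RTX 4070 vs 3070": (0.30, 0.20),
    "RTX 4060 Ti vs 3060 Ti": (0.12, 0.00),
    "RTX 4060 vs 3060": (0.20, -0.10),
}

if __name__ == "__main__":
    for name, (perf, price) in GENERATIONAL_CHANGES.items():
        change = perf_per_dollar_change(perf, price)
        print(f"{name}: {change:+.0%} performance per dollar")
```

By this rough measure the RTX 4060 improves performance per dollar by about a third over the RTX 3060 at launch pricing, while the RTX 4080 actually goes backward by around 12%.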

As with the last several graphics card launches, including the Radeon RX 7600, GeForce RTX 4060 Ti, and GeForce RTX 4070, we end up with similar feelings. It's good that the generational pricing didn't go up with the RTX 4060. For anyone running a GPU that's two or more generations old, the RTX 4060 represents a good upgrade option. But we're still disappointed that Nvidia chose to use a 128-bit interface and 8GB of VRAM for this generation's xx60-class models.
