Nvidia shares ended lower last week, giving back around $150 billion in market value amid a broader selloff in rate-sensitive tech stocks ahead of the group's hotly anticipated third-quarter earnings on Wednesday.

Nvidia (NVDA), which commands a nearly 80% share of the market for high-end AI-powering chips and processors, is discovering that its biggest challenge isn't the technological advances of its rivals. Instead, it's whether its supply-chain partners can meet what CEO Jensen Huang calls "insane" demand for its new Blackwell AI chips.

On their own, Blackwell chips are said to be around two and a half times faster than Nvidia's previous-generation H100 chips, which are built on its Hopper architecture, when used to train large language models like the ones behind OpenAI's ChatGPT. They're also around five times faster when running those models in real applications, a process called inference.

Of course, that performance comes at a price: Blackwell GPUs reportedly cost around twice as much as their H100 predecessors, between $60,000 and $70,000 per unit, with prices reaching as high as $3 million for a fully loaded server with 72 chips stacked inside.

Nvidia's broader chip architecture makes this possible, as chips can be stacked and interlocked, almost Lego-like, based on specific client needs.

Blackwell is also backward-compatible with the H100, enabling customers — if they're lucky enough to get their hands on them — to replace legacy chips with the newer, faster and more efficient models.
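The reported price gap can be sanity-checked with simple division. This back-of-envelope sketch treats "around twice as much" as an exact 2x multiple across the whole $60,000 to $70,000 range; the implied H100 prices it prints are derived from that assumption, not separately reported figures, and the variable names are illustrative.

```python
# Back-of-envelope check of the reported Blackwell pricing.
# Assumption: "around twice as much" is treated as an exact 2x multiple.
BLACKWELL_LOW_USD = 60_000
BLACKWELL_HIGH_USD = 70_000
PRICE_MULTIPLE = 2.0  # Blackwell price relative to H100 (approximate)

# Implied H100 price range if Blackwell costs twice as much
h100_low = BLACKWELL_LOW_USD / PRICE_MULTIPLE
h100_high = BLACKWELL_HIGH_USD / PRICE_MULTIPLE

print(f"Implied H100 price: ${h100_low:,.0f} to ${h100_high:,.0f} per unit")
```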

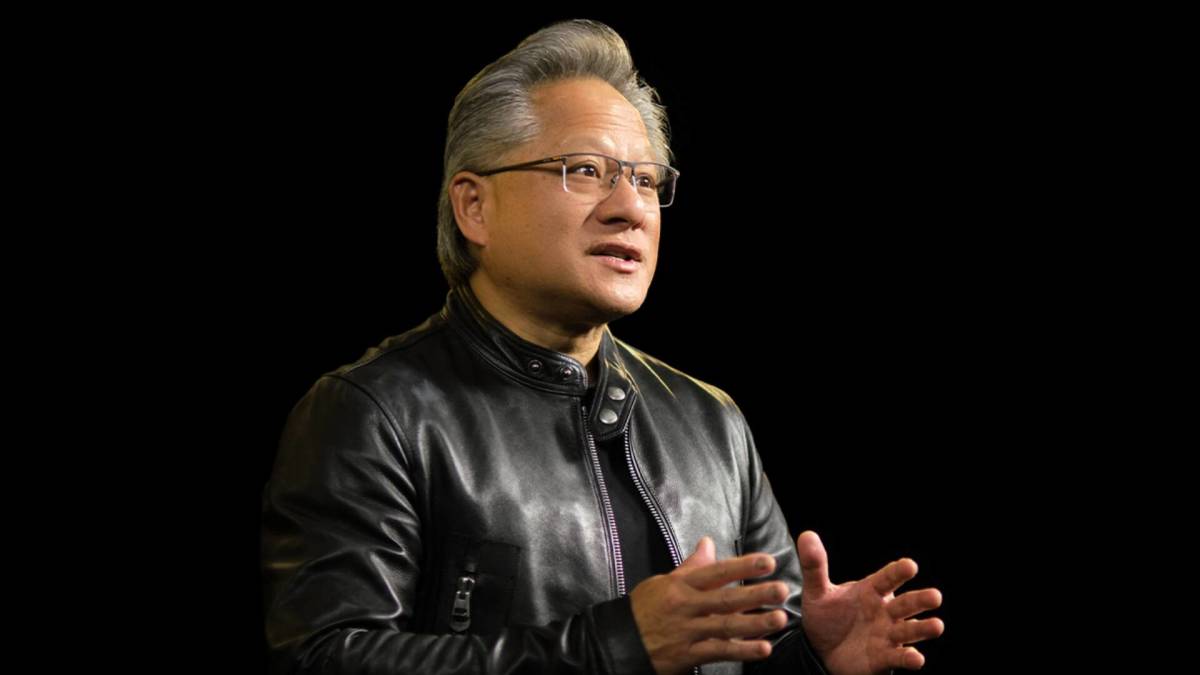

Shutterstock

Lucky for Nvidia and, ultimately, its investors, there's no lack of willingness among its biggest customers to spend.

Nvidia GPUs: Harder to buy than drugs?

Elon Musk, who runs a host of businesses alongside his obligations as Tesla (TSLA) CEO and President-elect Donald Trump's budget-slasher-in-chief, could be one of Nvidia's biggest customers.

Musk's xAI startup, which aims to challenge OpenAI's ChatGPT, is looking to raise around $6 billion in fresh capital, CNBC reported last week. Such funding would value xAI at around $50 billion.

The report indicated that part of that funding will go toward buying around 100,000 of Nvidia's H100 chips next year. That's on top of the 300,000 chips Musk wants to buy for Tesla to replace its existing cluster of H100 chips.

"[Nvidia] GPUs at this point are considerably harder to get than drugs," Musk told a Wall Street Journal CEO Council Summit last spring.

He's not far wrong.

Mark Zuckerberg's AI ambitions for Meta Platforms (META), centered on training and running its Llama large language models, reportedly require around 350,000 H100 chips. Upgrading those to the faster Blackwell line, which is sold out for all of next year, won't be cheap.

Meta and hyperscaler peers Amazon (AMZN), Microsoft (MSFT) and Google parent Alphabet (GOOGL) are poised to spend around $200 billion this year alone on capital projects tied to new AI technologies.

Once-in-a-lifetime opportunity in AI

UBS analysts see that tally rising to $267 billion next year as the companies chase what Amazon CEO Andy Jassy has called a "once-in-a-lifetime" opportunity in generative AI.

Total AI spending, which includes software, hardware and services, is likely to more than double to around $632 billion by 2028 from around $235 billion in 2023, according to IDC estimates.
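The growth rates behind these spending figures are easy to work out. The sketch below assumes the $200 billion and $267 billion capex figures cover the same four companies on the same basis; the annual rate for AI spending is a compound annual growth rate computed only from the two IDC endpoints quoted here, not a rate IDC itself publishes, and the variable names are illustrative.

```python
# Growth rates implied by the spending figures quoted above.
# Assumption: both capex figures cover the same four companies on the same basis.
capex_this_year_bn = 200.0  # Meta, Amazon, Microsoft, Alphabet (approx.)
capex_next_year_bn = 267.0  # UBS estimate for next year

capex_growth = capex_next_year_bn / capex_this_year_bn - 1

# IDC's total AI spending estimates (software, hardware and services)
ai_spend_2023_bn = 235.0
ai_spend_2028_bn = 632.0
years = 2028 - 2023

ai_multiple = ai_spend_2028_bn / ai_spend_2023_bn
# Compound annual growth rate between the two endpoints
ai_cagr = ai_multiple ** (1 / years) - 1

print(f"Hyperscaler capex growth: {capex_growth:.1%}")
print(f"AI spending: {ai_multiple:.2f}x overall, ~{ai_cagr:.1%} a year")
```

On these inputs, capex rises about 33.5% next year, and the IDC forecast works out to roughly 2.7 times the 2023 level, or about 22% a year.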

Supply challenges, however, might temper some of that demand.

Taiwan Semiconductor Manufacturing (TSM), known as TSMC, the world's biggest contract chipmaker and a key Nvidia partner, is spending $65 billion on three new facilities in Arizona as it looks to expand its global footprint and reduce its reliance on Asia-based production.

It's also reportedly cutting off customers in China to free up capacity ahead of the export restrictions on high-end tech expected from the Trump administration early next year.

Interestingly, CFRA analyst Angelo Zino thinks Nvidia, which wins at almost everything, will likely benefit from Trump's expected trade policies.

"The Biden/Harris approach has been to limit China to certain advanced chips and equipment, like GPUs and" ASML-made extreme ultraviolet lithography systems, he said.

"According to our Washington Analysis team, Trump may be willing to offer more advanced AI chips to other nations, even China, if the price is right while maintaining a certain technology lead."

Some of Nvidia's biggest customers are also looking for ways to hedge against the group's dominant market position and any trade, tariff, or supply issues that could temper their ability to build, train, and ultimately monetize their AI investments.

Amazon aims to produce AI chips

Amazon told investors last month that some of its cloud customers want "better price performance on their AI workloads" as they scale their operations and look to reduce costs.

The e-retail and technology group is investing in its own high-performance chips, called Trainium, which it can offer directly to cloud clients who may not wish to wait for, or pay for, Nvidia's sought-after products.

Microsoft is also developing a new line of AI accelerators, which it calls the Maia 100, to train large language models. These could both help wean it off its reliance on Nvidia and offer a lower-priced alternative to its Azure cloud customers.

That seems like a longer-term play, however, as both Microsoft and Amazon would need contract chipmakers to produce their chips at the scale required to grow their client bases, and there simply isn't a great deal of capacity beyond TSMC, Samsung, and New York-based GlobalFoundries.

Nvidia, meanwhile, looks set to go from strength to strength, with analysts forecasting October-quarter revenue of $33.12 billion, nearly double the year-earlier tally, when it reports after the close of trading on Wednesday.

Looking ahead to the fourth quarter of Nvidia's financial year, which ends in January, Wall Street sees revenue of around $37 billion as Blackwell sales start to hit the group's top line.

For financial year 2026, which ends in January 2026, investors see Nvidia generating $185.4 billion in sales, a staggering 205% increase from 2024 levels.
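A 205% increase means sales roughly triple rather than double. The sketch below backs out the 2024 revenue base implied by the two quoted figures; the result is derived, not a reported number, though it sits close to the roughly $60.9 billion Nvidia reported for financial year 2024. Variable names are illustrative.

```python
# Reverse out the base-year revenue implied by the forecast above.
fy2026_revenue_bn = 185.4  # forecast for financial year 2026
increase_pct = 205.0       # quoted increase from 2024 levels

growth_multiple = 1 + increase_pct / 100  # a 205% increase is a 3.05x multiple
implied_fy2024_bn = fy2026_revenue_bn / growth_multiple

print(f"{growth_multiple:.2f}x implies FY2024 revenue of about ${implied_fy2024_bn:.1f} billion")
```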

"We’re now in this computer revolution," Huang told a Goldman Sachs Talks event in September. "Now, what’s amazing is the first trillion dollars of data centers is going to get accelerated and invent this new type of software called generative AI."

"If you look at the whole IT industry up until now, we’ve been making instruments and tools that people use," he added. "For the very first time, we’re going to create skills that augment people. And so that’s why people think that AI is going to expand beyond the trillion dollars of data centers and IT and into the world of skills."

Nvidia shares closed Friday at $141.98 each, falling 3.26% on the session and extending their one-week decline to around 4.45%. That still leaves the stock up more than 50% over the past six months, nearly five times the gain for the Nasdaq, with a market value of $3.48 trillion.
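Friday's closing figures also imply a few numbers the market data doesn't state outright. This sketch derives an approximate share count and the earlier closing prices from the quoted price, percentage moves and market value; because those inputs are rounded (the weekly decline is "around" 4.45%), the outputs are approximations, and the variable names are illustrative.

```python
# Figures implied by Friday's closing data quoted above.
close_usd = 141.98
day_change = -0.0326           # Friday's session move
week_change = -0.0445          # one-week move (approximate)
market_value_usd_bn = 3_480.0  # $3.48 trillion, expressed in billions

# Approximate shares outstanding implied by market value / price
implied_shares_bn = market_value_usd_bn / close_usd

# Back out the earlier closing prices from the percentage moves
prior_session_close = close_usd / (1 + day_change)
prior_week_close = close_usd / (1 + week_change)

print(f"Implied shares outstanding: ~{implied_shares_bn:.2f} billion")
print(f"Prior session close: ~${prior_session_close:.2f}")
print(f"Close a week earlier: ~${prior_week_close:.2f}")
```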
