Companies adapt to change or go out of business. Nvidia Corp. (NVDA) is no exception.

Founded as a graphics processing company, Nvidia has pivoted toward designing and building chips for accelerated computing that can process vast amounts of data quickly for generative AI (artificial intelligence).

Nvidia vaulted from a niche player in its early days to the forefront of the technology industry a few decades later. Its market capitalization, now in the trillions of dollars, dwarfs that of many established companies, and as its name implies, Nvidia has become the envy of its rivals.

Nowadays, virtually every company associated with the development of AI uses Nvidia’s hardware and software, which the company calls “the AI factories of the future.” Generative AI, or AI that produces text, images, or video using vast amounts of data, has essentially become a new type of computing platform. Nvidia played a major part in the development of OpenAI’s chatbot, ChatGPT, one of the best-known and most-used generative AI engines in existence.

Here’s the full story of how Nvidia grew from a small graphics processing company into the multitrillion-dollar tech and AI powerhouse it is today.

Nvidia’s origins, founding & early years

Like many well-known tech companies, such as Apple (AAPL) and Hewlett-Packard (HPE), Nvidia traces its roots to Silicon Valley, and the company has its own lore.

1990s to 2000s

Nvidia was founded by Jensen Huang and fellow engineers Chris Malachowsky and Curtis Priem. The three discussed the company’s formation over a meal at a Denny’s restaurant — the same diner chain Huang once worked at as a teenager in Portland — near San Jose, California. Their goal was to design a chip that would enable realistic 3D graphics on personal computers.

The co-founders envisioned better graphics that could be powered by a specialized electronic circuit known as a graphics processing unit (GPU). While a general-purpose central processing unit (CPU) can handle a wide range of tasks, it would be burdened by the multitude of calculations and processes needed to render complex graphics. Thus, a GPU could be added to a slot in a personal computer via a card to handle those graphics. Their GPU came into the market in 1999.

By the 2000s, GPUs were in high demand among gamers for their ability to accelerate graphics and image processing. Nvidia forged partnerships with Sega, which developed games and had its own gaming console, and Silicon Graphics, which produced hardware and software for graphics.

Eventually, GPUs evolved for use in non-graphical applications and paved the way for accelerated computing. In 2006, Nvidia developed the CUDA (Compute Unified Device Architecture) platform, which allowed GPUs to be used for non-graphical computing tasks, including AI and machine learning.

2010s to present

In the 2010s, Nvidia shifted toward developing hardware and software for next-level computing, which would be focused on AI. It bought some companies along the way to aid in its vision.

Nvidia started defining its GPU architectures (the underlying designs and technologies that define the capabilities and performance of its GPUs) in 2006 with the Tesla Architecture (each of its designs takes the name of a notable figure in technology, science, or mathematics).

It gained traction in the 2010s with further advancements and improvements with the Fermi Architecture in 2010, followed by the Kepler Architecture (2012), the Maxwell Architecture (2014), the Pascal Architecture (2016), and the Volta Architecture (2017).

With the Turing Architecture (2018), Nvidia focused on making enhancements with AI in mind, and others followed: Ampere Architecture (2020), Ada Lovelace Architecture (2022), Hopper Architecture (2022), and Grace Architecture (2023–2024).

In 2012, deep learning started to gain traction, and GPUs were being used in deep neural networks. These networks process complex patterns and relationships using vast amounts of data for tasks such as image and speech recognition and natural language processing.

Nvidia’s AI platform encompasses hardware and software that enable any organization to develop AI tools for its own use. The platform powers leading cloud services such as Amazon’s (AMZN) AWS, Alphabet’s (GOOGL) Google Cloud, and Microsoft’s (MSFT) Azure.

Nvidia’s bet on AI wasn’t a sure thing, but Huang was prepared to guide the company at the moment when the opportunity presented itself. As Alphabet and other companies started to delve into machine learning and developing the complex algorithms that would power generative AI, demand for Nvidia’s AI-focused products and services picked up.

Nvidia says that millions of developers create thousands of applications for accelerated computing, and tens of thousands of companies use its AI technologies.

How did Nvidia get its name?

The capital N at the beginning of Nvidia reportedly refers to the words “new,” “next-generation,” and “number” to symbolize performance, while vidia is derived from the Latin word "videre," which means to see. The name in its entirety can be taken to mean “new vision,” a motto reflected in the company’s logo — an all-seeing eye.

A timeline of Nvidia’s milestones over the years

1993: Nvidia is founded by Jensen Huang, Chris Malachowsky, and Curtis Priem.

1994: Nvidia forms a partnership with semiconductor supplier SGS-Thomson (now STMicroelectronics). Other key partnerships would follow in subsequent years, including Sega and Silicon Graphics.

1995: Nvidia releases the NV1, a multimedia PCI single-slot card manufactured by SGS-Thomson. It was a microprocessor used in rendering 3D images that could also connect to a joystick. Nvidia also gets its first round of outside funding from tech investment firms Sequoia and Sierra.

1996: Nvidia focuses on creating graphics for the desktop PC market. A year later, it forges relationships with key PC makers, such as Dell, Gateway, and Micron, to include Nvidia processors in their units.

1997: Nvidia launches RIVA 128, the first high-performance, 128-bit Direct3D processor to render 2D and 3D images. It becomes a hit with customers, and Nvidia wins awards. Over the next year, it sells more than 1 million units.

1998: Nvidia launches RIVA TNT, the industry's first multi-texturing 3D processor. The company moves its headquarters from Sunnyvale to Santa Clara, its present location.

1999: Nvidia goes public at $12 a share, and its stock begins trading on the Nasdaq Stock Exchange on Jan. 22. Nvidia releases the GeForce 256, the industry's first GPU, and a new way of computing is ushered in. The company also enters the commercial desktop PC market with its Nvidia Vanta 3D graphics processor.

2000: Nvidia becomes the supplier of the graphics processor for Microsoft's Xbox gaming console.

2001: Nvidia supplies GPUs for Apple’s “personal supercomputer,” the Power Mac G4. The partnership to supply GPUs for Mac computers lasts for years, even as Apple eventually designs its own integrated GPUs, starting with its M1 line of chips in 2020.

Nvidia is added to the Nasdaq 100 Index in May and then to the S&P 500 Index in November. At the end of 2001, its revenue exceeds $1 billion.

2006: Nvidia releases CUDA, which provides open parallel processing capabilities of GPUs to science and research. It allows software developers to use Nvidia’s GPUs for general-purpose processing, in what’s known as GPGPU. Its Tesla Architecture improves programmability and efficiency.

2007: Nvidia launches Tesla products (no relation to Elon Musk’s Tesla (TSLA)) for use in scientific and engineering computing. A Tesla could provide the performance of as many as 100 CPUs in a single GPU.

2010: Fermi Architecture makes enhancements in parallel computing capabilities.

2012: AlexNet, a neural network trained on Nvidia GPUs by University of Toronto researchers, wins the ImageNet image-recognition competition, which the company says leads to a breakthrough in the era of modern AI. The Kepler Architecture brings significant improvements in power efficiency.

2014: The company’s Maxwell Architecture further improves power performance and efficiency.

2016: Nvidia donates a DGX-1 supercomputer to startup OpenAI for work on AI's "toughest problems." That proves to be a useful gift. In 2022, OpenAI gives open access to its virtual assistant chatbot, ChatGPT, which helps to popularize AI among the public.

The Pascal Architecture brings major improvements in performance and memory bandwidth.

2017: The Volta Architecture powers Nvidia’s Tesla V100 Tensor Core, its data center GPU specifically designed for deep learning, high-performance computing (HPC), data science, and graphics.

2018: The Turing Architecture makes further AI-based enhancements with the Tensor Cores and makes significant improvements in graphics rendering and AI integration.

2020: The Ampere Architecture further enhances AI capabilities and improves power efficiency and performance.

2022: The Ada Lovelace Architecture advances computational efficiency and AI-driven graphics rendering for GPUs. The Hopper Architecture, geared toward Tensor Cores, focuses on AI and HPC, and Nvidia says the design speeds up large language models (LLMs) by as much as 30 times compared to the previous generation.

2023–2024: The Grace Architecture (announced in 2021) is designed for AI and HPC and targets data centers to improve performance for complex computational tasks.

June 7, 2024: Nvidia conducts its sixth stock split, turning each existing share into 10 shares worth 1/10th of the price.

June 18, 2024: Nvidia, with a market capitalization of $3.3 trillion, moves past Microsoft and Apple to become the world’s most valuable publicly traded company. Huang’s 3.8% stake puts his net worth at a minimum of $126 billion and within reach of the ranks of the 10 wealthiest people.

How has Nvidia’s stock price performed over the years?

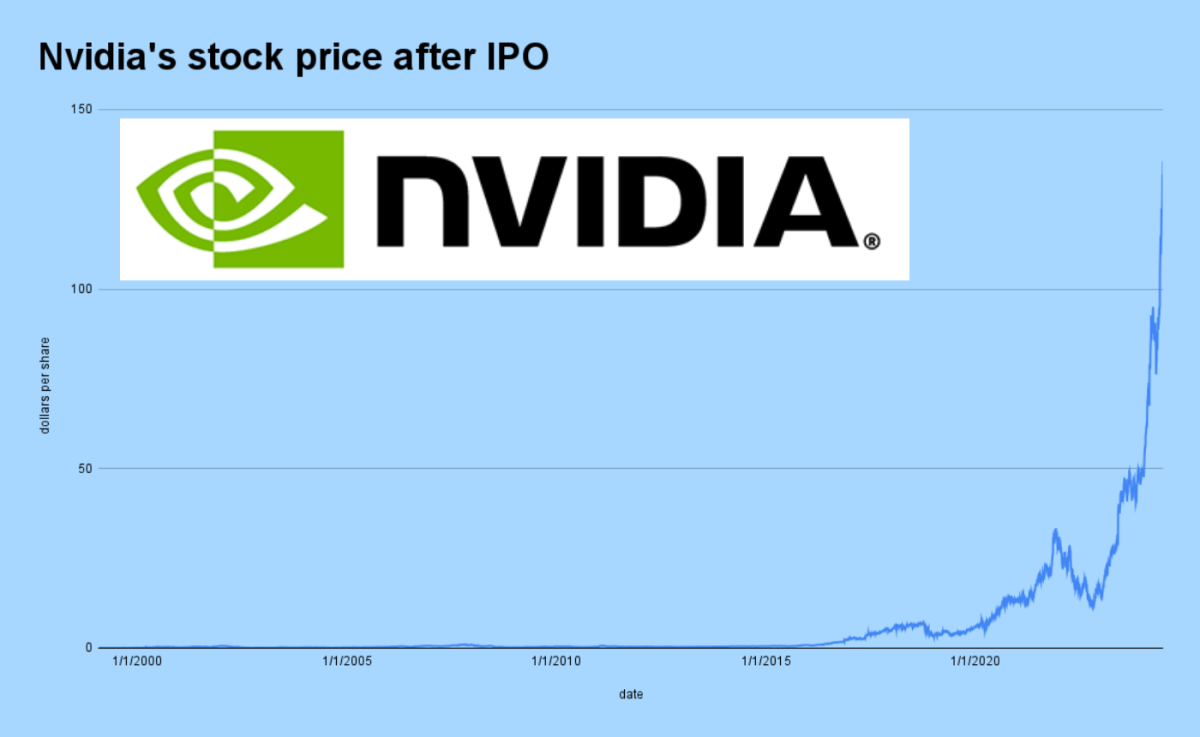

Nvidia’s stock price has performed better than most of its peers in the tech sector. A $10,000 investment in the company on its first day of trading in 1999 would be worth $33.9 million in mid-June 2024.
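
To put that figure in perspective, the growth multiple and the implied annualized return can be worked out from the two dollar amounts and the holding period. The sketch below is only an illustration: the function name `growth_summary` is hypothetical, the end date of June 18, 2024 is an assumption standing in for "mid-June 2024," and the calculation treats the $33.9 million figure as given rather than reconstructing it from prices, splits, or dividends.

```python
from datetime import date


def growth_summary(initial, final, start, end):
    """Return (growth multiple, annualized rate) for a holding period.

    Uses the standard compound annual growth rate formula:
    (final / initial) ** (1 / years) - 1
    """
    years = (end - start).days / 365.25  # average year length, including leap years
    multiple = final / initial
    annualized = multiple ** (1 / years) - 1
    return multiple, annualized


# Dollar figures and the Jan. 22, 1999 listing date come from the article.
# The end date is an assumption for "mid-June 2024".
multiple, rate = growth_summary(
    initial=10_000,
    final=33_900_000,
    start=date(1999, 1, 22),
    end=date(2024, 6, 18),
)

print(f"Growth multiple: {multiple:,.0f}x")
print(f"Implied annualized return: {rate:.1%}")
```

Under these assumptions, the result is a gain of roughly 3,390 times the original stake, which works out to an annualized return in the high-30% range sustained over about 25 years.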

Nvidia’s meteoric rise in share price has occurred largely during the post-pandemic era starting in late 2022, as investment in the AI sector picked up tremendous momentum.
