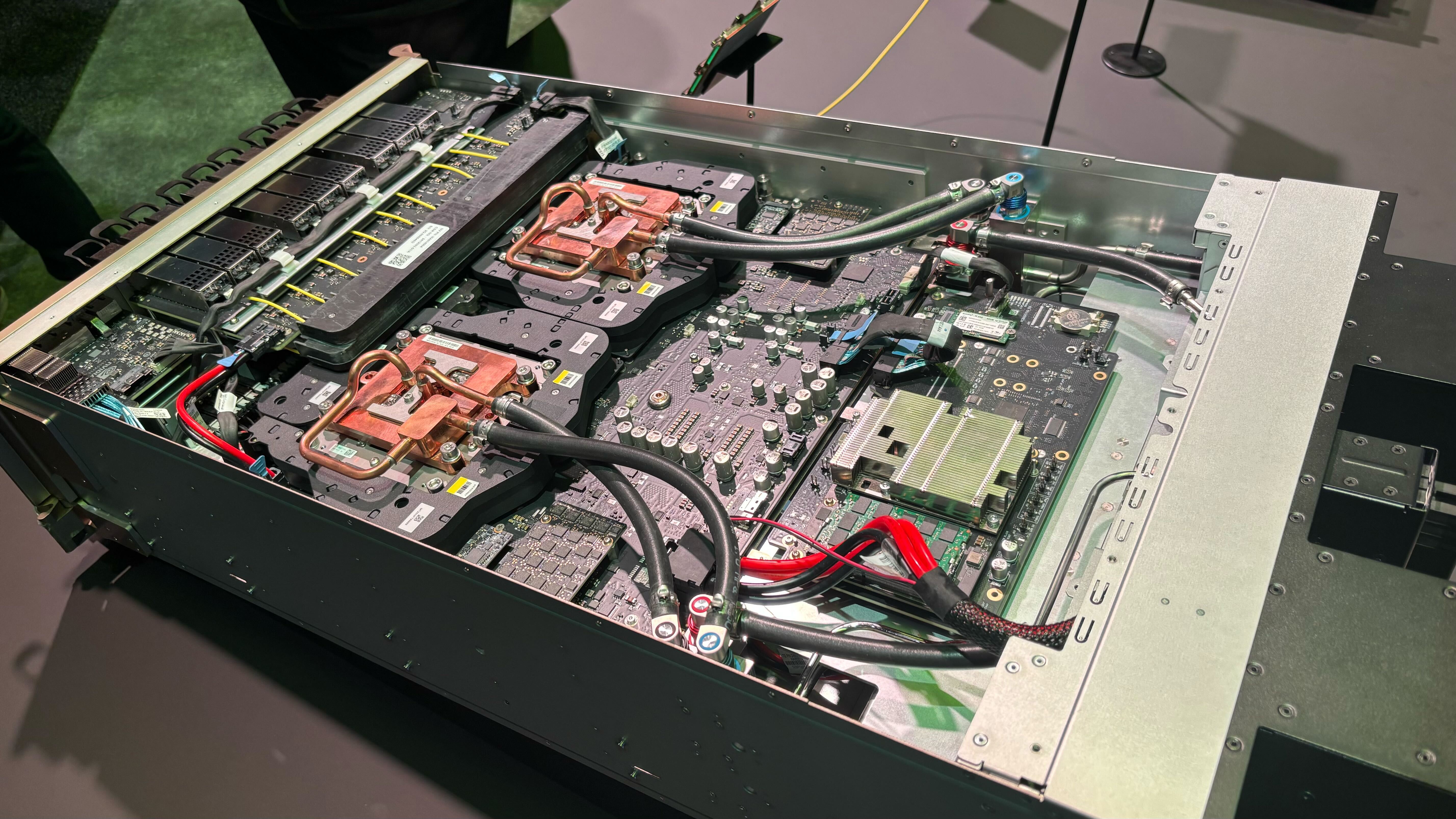

The Nvidia Blackwell Ultra B300 data center GPU was announced today during CEO Jensen Huang's keynote at GTC 2025 in San Jose, CA. Offering 50% more memory and FP4 compute than the existing B200 solution, it raises the stakes in the race to faster and more capable AI models yet again. Nvidia says it's "built for the age of reasoning," referencing more sophisticated AI LLMs like DeepSeek R1 that do more than just regurgitate previously digested information.

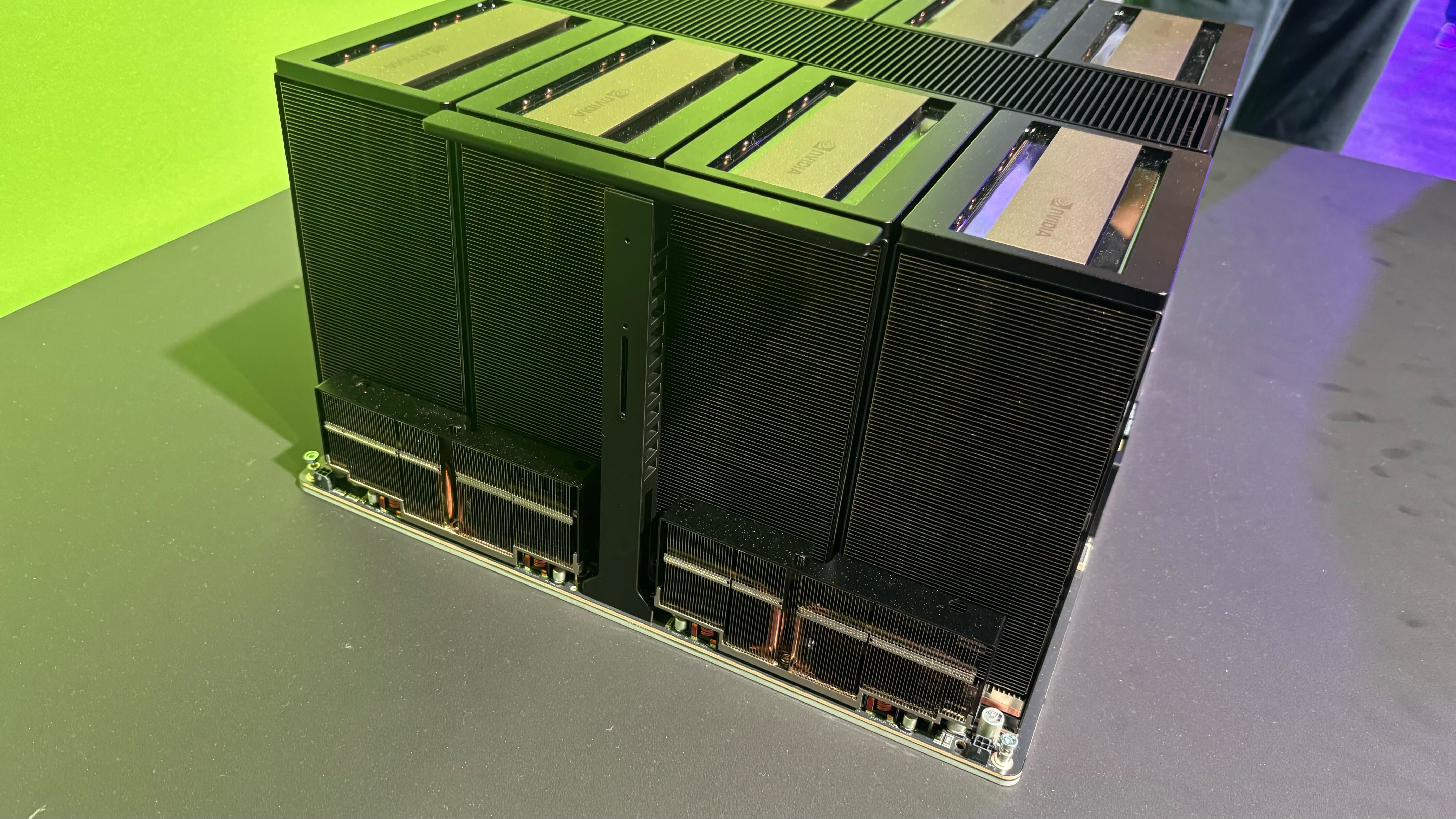

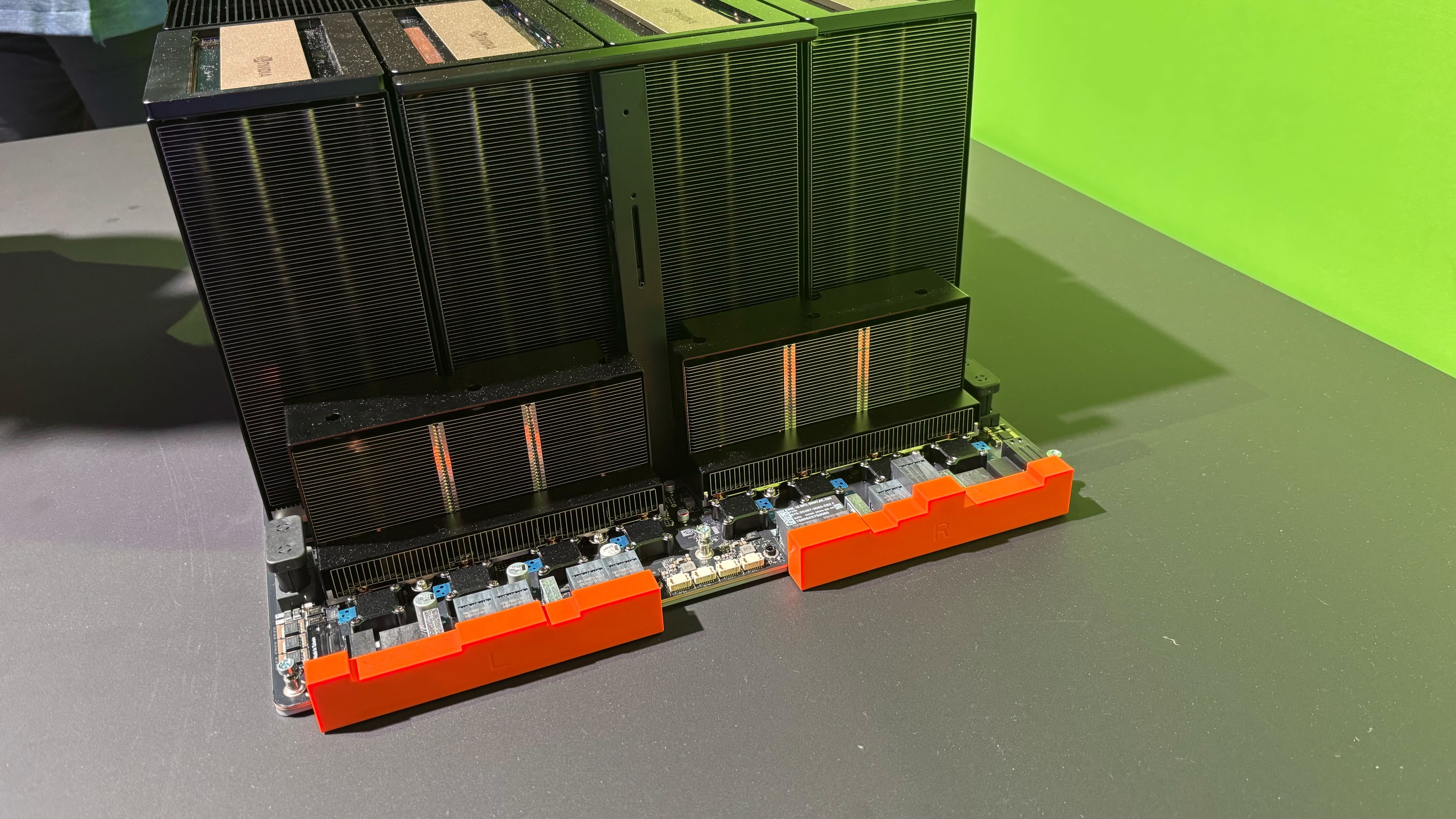

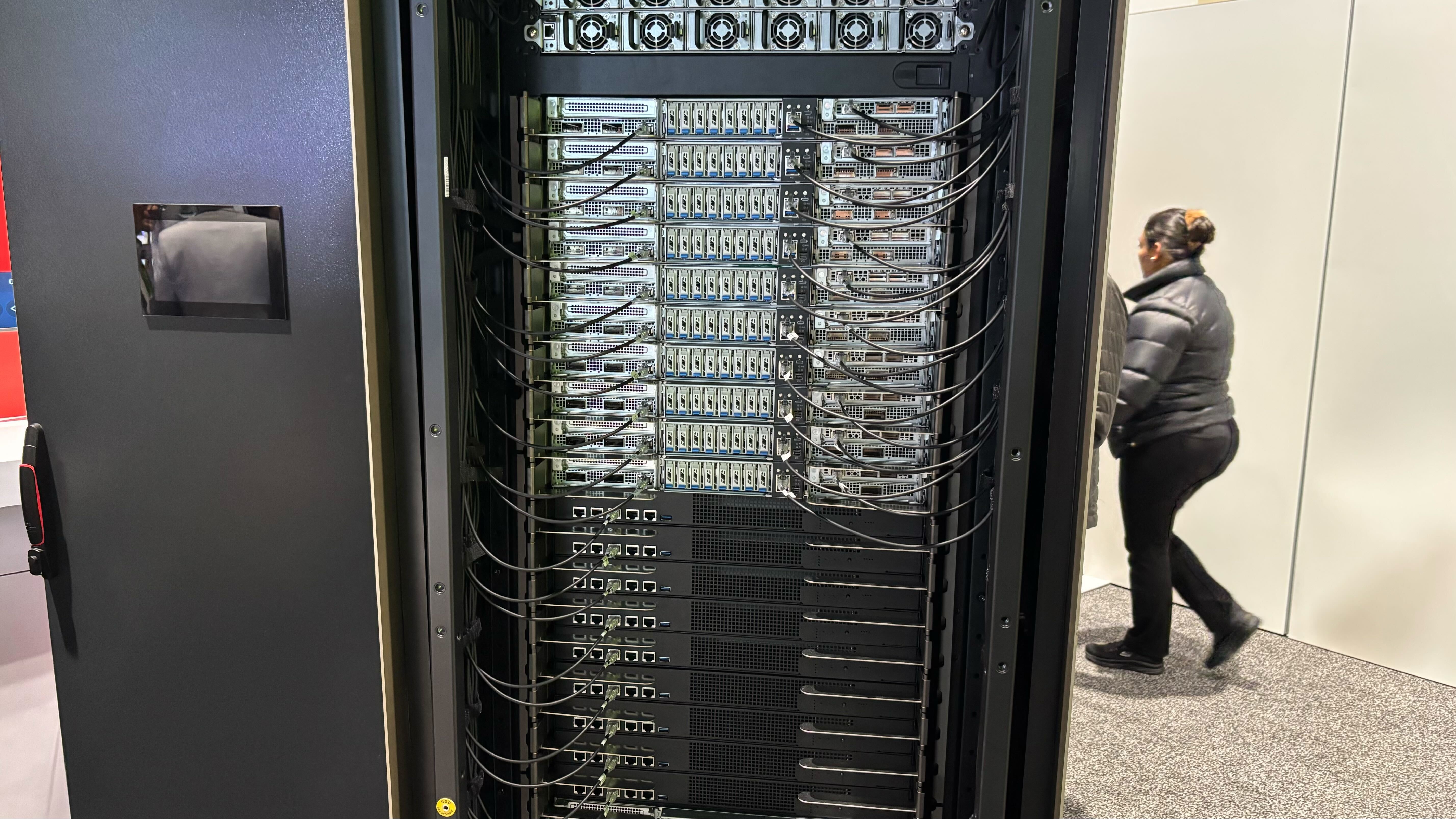

Naturally, Blackwell Ultra B300 isn't just about a single GPU. Along with the base B300 building block, there will be new B300 NVL16 server rack solutions, a GB300 DGX Station, and GB300 NVL72 full rack solutions. Put eight NVL72 racks together, and you get the full Blackwell Ultra DGX SuperPOD: 288 Grace CPUs, 576 Blackwell Ultra GPUs, 300TB of fast memory, and 11.5 ExaFLOPS of FP4. These can be linked together in supercomputer solutions that Nvidia classifies as "AI factories."
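To put the SuperPOD totals in perspective, here is a minimal sketch that divides the quoted figures back down to a single rack and a single GPU. It uses only the numbers quoted above; the variable names are ours, and the per-GPU FP4 result is simple division, not an official Nvidia spec.

```python
# Totals quoted for the full Blackwell Ultra DGX SuperPOD (eight racks).
RACKS = 8
GRACE_CPUS = 288
GPUS = 576
MEMORY_TB = 300
FP4_EXAFLOPS = 11.5

# Per-rack breakdown.
gpus_per_rack = GPUS // RACKS          # GPUs in one rack
cpus_per_rack = GRACE_CPUS // RACKS    # Grace CPUs in one rack
gpus_per_cpu = GPUS // GRACE_CPUS      # GPUs paired with each Grace CPU
memory_per_rack_tb = MEMORY_TB / RACKS

# Per-GPU share of the quoted FP4 figure (1 ExaFLOPS = 1,000 PetaFLOPS).
fp4_per_gpu_pflops = FP4_EXAFLOPS * 1000 / GPUS

print(f"GPUs per rack:      {gpus_per_rack}")
print(f"CPUs per rack:      {cpus_per_rack}")
print(f"GPUs per Grace CPU: {gpus_per_cpu}")
print(f"Memory per rack:    {memory_per_rack_tb:.1f} TB")
print(f"FP4 per GPU:        {fp4_per_gpu_pflops:.2f} PFLOPS")
```

The 72 GPUs per rack lines up with the "72" in the rack's name, and each Grace CPU is paired with two GPUs.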

While Nvidia says that Blackwell Ultra will have 1.5X more dense FP4 compute, what isn't clear is whether other compute formats have scaled similarly. We would expect that to be the case, but it's possible Nvidia has done more than simply enabling more SMs, boosting clocks, and increasing the capacity of the HBM3e stacks. Clocks may be slightly slower in FP8 or FP16 modes, for example. But here are the core specs that we have, with some inference of other data (indicated by question marks).

We asked for some clarification on the performance and details for Blackwell Ultra B300 and were told: "Blackwell Ultra GPUs (in GB300 and B300) are different chips than Blackwell GPUs (GB200 and B200). Blackwell Ultra GPUs are designed to meet the demand for test-time scaling inference with a 1.5X increase in the FP4 compute." Does that mean B300 is a physically larger chip to fit more tensor cores into the package? That seems to be the case, but we're awaiting further details.

What's clear is that the new B300 GPUs will offer significantly more computational throughput than the B200. Having 50% more on-package memory will enable even larger AI models with more parameters, and the accompanying compute will certainly help.
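As a rough illustration of why capacity matters, the sketch below estimates how many parameters fit in a single GPU's memory at different precisions. It rests on assumptions not stated in this article: that B200 carries 192GB of HBM3e, and that memory holds weights only. Real deployments also need room for the KV cache and activations, so treat these as upper bounds.

```python
# Assumption (not from the article): B200 ships with 192 GB of HBM3e.
B200_MEMORY_GB = 192
# "50% more" memory, per the article.
B300_MEMORY_GB = B200_MEMORY_GB * 1.5

# Storage cost of one parameter in each number format.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def max_params_billions(memory_gb, bytes_per_param):
    """Upper bound on parameters (in billions) if memory held weights only.

    GB divided by bytes-per-parameter gives billions of parameters,
    because 1 GB is taken as 1e9 bytes.
    """
    return memory_gb / bytes_per_param


for fmt, size in BYTES_PER_PARAM.items():
    b200 = max_params_billions(B200_MEMORY_GB, size)
    b300 = max_params_billions(B300_MEMORY_GB, size)
    print(f"{fmt}: B200 ~{b200:.0f}B params, B300 ~{b300:.0f}B params")
```

Under these assumptions, the extra memory and the move to FP4 compound: halving the bytes per parameter doubles the ceiling, and the 50% capacity bump raises it again.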

Nvidia gave some examples of the potential performance, though these were compared to Hopper, so that muddies the waters. We'd like to see comparisons between B200 and B300 in similar configurations — with the same number of GPUs, specifically. But that's not what we have.

By leveraging FP4 instructions and pairing B300 with its new Dynamo software library, which helps with serving reasoning models like DeepSeek, Nvidia says an NVL72 rack can deliver 30X more inference performance than a similar Hopper configuration. That figure naturally derives from improvements to multiple areas of the product stack, so the faster NVLink, increased memory, added compute, and FP4 all factor into the equation.

In a related example, Blackwell Ultra can deliver up to 1,000 tokens/second with the DeepSeek R1-671B model, while Hopper only offers up to 100 tokens/second. That's a 10X increase in throughput, cutting the time to service a larger query from 1.5 minutes down to 10 seconds.
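The throughput and latency figures are worth checking against each other. This is a quick sketch using only the numbers quoted above; the "implied tokens" values assume each GPU sustains its peak rate for the entire query, which is our simplification and not something Nvidia stated.

```python
# Figures quoted for DeepSeek R1-671B.
HOPPER_TOKENS_PER_S = 100
ULTRA_TOKENS_PER_S = 1000
HOPPER_QUERY_S = 90   # 1.5 minutes
ULTRA_QUERY_S = 10

# Ratio of peak token rates.
throughput_ratio = ULTRA_TOKENS_PER_S / HOPPER_TOKENS_PER_S

# Ratio of quoted query times.
latency_ratio = HOPPER_QUERY_S / ULTRA_QUERY_S

# Response length implied if each GPU ran at its peak rate throughout.
hopper_implied_tokens = HOPPER_TOKENS_PER_S * HOPPER_QUERY_S
ultra_implied_tokens = ULTRA_TOKENS_PER_S * ULTRA_QUERY_S

print(f"Throughput ratio: {throughput_ratio:.0f}X")
print(f"Query-time ratio: {latency_ratio:.0f}X")
print(f"Implied tokens (Hopper): {hopper_implied_tokens}")
print(f"Implied tokens (Blackwell Ultra): {ultra_implied_tokens}")
```

The two ratios are close but not identical (10X versus 9X), which suggests the quoted times are rounded or that the "up to" token rates are peaks that aren't held for the full query.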

The B300 products should begin shipping in the second half of the year. Presumably, there won't be any packaging snafus this time, and things won't be delayed, though Nvidia does note that it made $11 billion in revenue from Blackwell B200/B100 last fiscal year. It's a safe bet that it expects to dramatically increase that figure in the coming year.