According to a report from Morgan Stanley cited by United Daily News, Nvidia and its partners will charge roughly $2 million to $3 million per AI server cabinet equipped with Nvidia's upcoming Blackwell GPUs. The industry will need tens of thousands of AI servers in 2025, and their aggregate cost will exceed $200 billion.

So far, Nvidia has introduced two 'reference' AI server cabinets based on its Blackwell architecture: the NVL36, equipped with 36 B200 GPUs, which is expected to start at $2 million (previous reports put it at $1.8 million), and the NVL72, with 72 B200 GPUs, which is projected to start at $3 million.

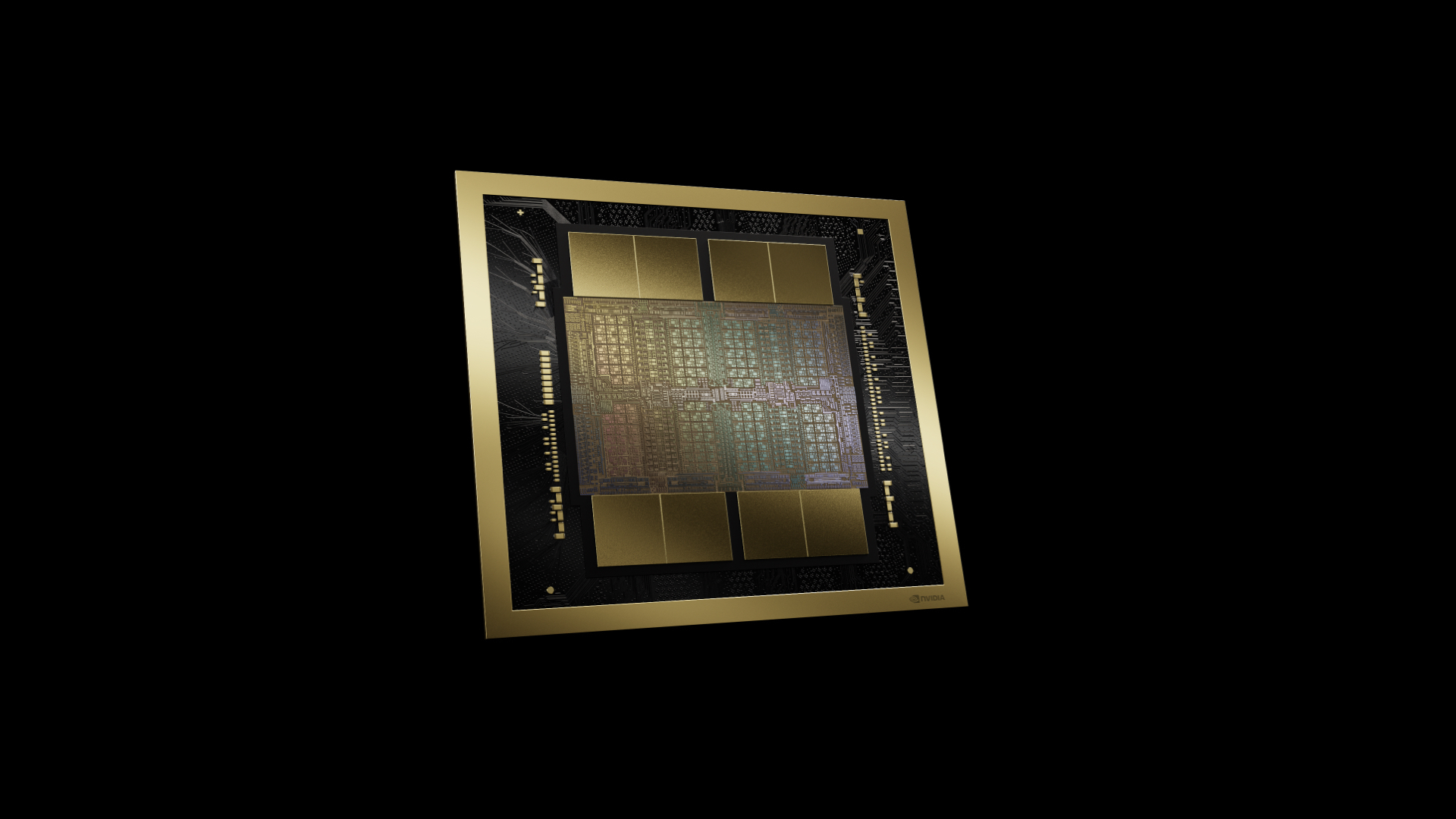

NVL36 and NVL72 server cabinets (or PODs) will be available not only from Nvidia itself and its traditional partners, such as Foxconn (the world's largest supplier of AI servers), Quanta, and Wistron, but also from newcomers, such as Asus. Assuming that Nvidia's partner TSMC can produce enough B100 and B200 GPUs on its 4nm-class process technology and package them using its chip-on-wafer-on-substrate (CoWoS) technology, availability from newcomers should ease the tight supply of actual Blackwell-based machines.

Based on the UDN report citing Morgan Stanley, Nvidia anticipates shipping between 60,000 and 70,000 B200 server cabinets next year, each priced between $2 million and $3 million. At the top of both ranges (70,000 cabinets at $3 million each), that translates to an estimated annual revenue of as much as $210 billion from these machines, which means that companies like AWS and Microsoft will spend even more. This brings us to the math by Sequoia Capital partner David Cahn, who believes that the AI industry has to earn around $600 billion to pay off machines and data centers.
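The arithmetic behind the revenue estimate can be checked with a short sketch. It uses only the shipment and price ranges quoted from the report; the function name `revenue_range` is made up for illustration, and the calculation assumes every cabinet sells at a single flat price, which is a simplification.

```python
def revenue_range(units_low, units_high, price_low, price_high):
    """Return (lowest, highest) total revenue in dollars for the given ranges."""
    # Lowest case: fewest cabinets at the cheapest price.
    # Highest case: most cabinets at the highest price.
    return units_low * price_low, units_high * price_high


# Figures quoted from the report: 60,000 to 70,000 cabinets at $2M to $3M each.
low, high = revenue_range(60_000, 70_000, 2_000_000, 3_000_000)

print(f"${low / 1e9:.0f}B to ${high / 1e9:.0f}B")  # prints "$120B to $210B"
```

Under these assumptions, the $210 billion figure corresponds to the top of both ranges, while the bottom of both ranges gives about $120 billion.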

Demand for AI servers is setting records and will not slow down any time soon, which will benefit both makers of AI servers and developers of AI GPUs. Despite the influx of competitors, Nvidia's GPUs are set to remain the de facto standard for training and many inference workloads.

Based on talks with industry sources, the same Morgan Stanley report says that international giants such as Amazon Web Services, Dell, Google, Meta, and Microsoft will all adopt Nvidia's Blackwell GPUs for AI servers. As a result, demand is now projected to exceed expectations, prompting Nvidia to increase its orders with TSMC by approximately 25%, the report claims.