Most AI chatbots require a ton of processing power, so much so that they usually live in the cloud. Sure, you can run ChatGPT on your PC or even a Raspberry Pi, but the local program is sending your prompts over the Internet to OpenAI’s servers for a response. Some large language models (LLMs) can run locally, but they require a powerful GPU with a lot of VRAM. Surely you couldn’t run a chatbot locally on a mobile device. Or could you?

A brand-new open-source project called MLC LLM is lightweight enough to run locally on just about any device, even an iPhone or an old PC laptop with integrated graphics. And, once you have MLC LLM installed, you can turn off the Internet, because all of the data and processing stay on your system.

The “MLC” stands for Machine Learning Compilation, a process the developers of this project used to slim the model down and make it easy to process. The same group of researchers, who go by the name MLC AI, have a related project called Web LLM, which runs the chatbot entirely in a web browser. The project also includes contributions from Carnegie Mellon University's Catalyst program, the SAMPL machine learning research group at the University of Washington, Shanghai Jiao Tong University and OctoML.

MLC LLM uses Vicuna-7B-V1.1, a lightweight LLM that is based on Meta's LLaMA and was trained in March and April 2023. It’s not nearly as good as GPT-3.5 or GPT-4, but it’s pretty decent considering its size.

Right now, MLC LLM is available for Windows, Linux, macOS and iOS, with easy-to-follow instructions posted by the project’s founders on their site and the full source code available on GitHub. There's no version for Android as of yet.

There are many reasons why having a local chatbot would be preferable to using a cloud-hosted solution such as ChatGPT. Your data stays local so your privacy is intact, you don’t need Internet access to use it and you might have more control over the output.

Installing and Running MLC LLM on an iPhone

You won't find MLC LLM in the App Store. While anyone can install the PC versions, the iOS version requires you to use TestFlight, Apple’s beta-testing system for developers, on your device, and there’s a limit of 9,000 iOS users who can install the test app at one time. You can also compile it yourself from the source code. It's supposed to work on any iPhone, iPad or iPod Touch that runs iOS 13 or higher, but in our experience, it requires one of the more powerful Apple devices with plenty of RAM.

Senior Editor Andrew E. Freedman installed the MLC LLM test app, a 3GB download, on his iPhone 11 Pro Max. However, the app crashed on launch every time he ran it, right after showing the message “[System] Initialize…”.

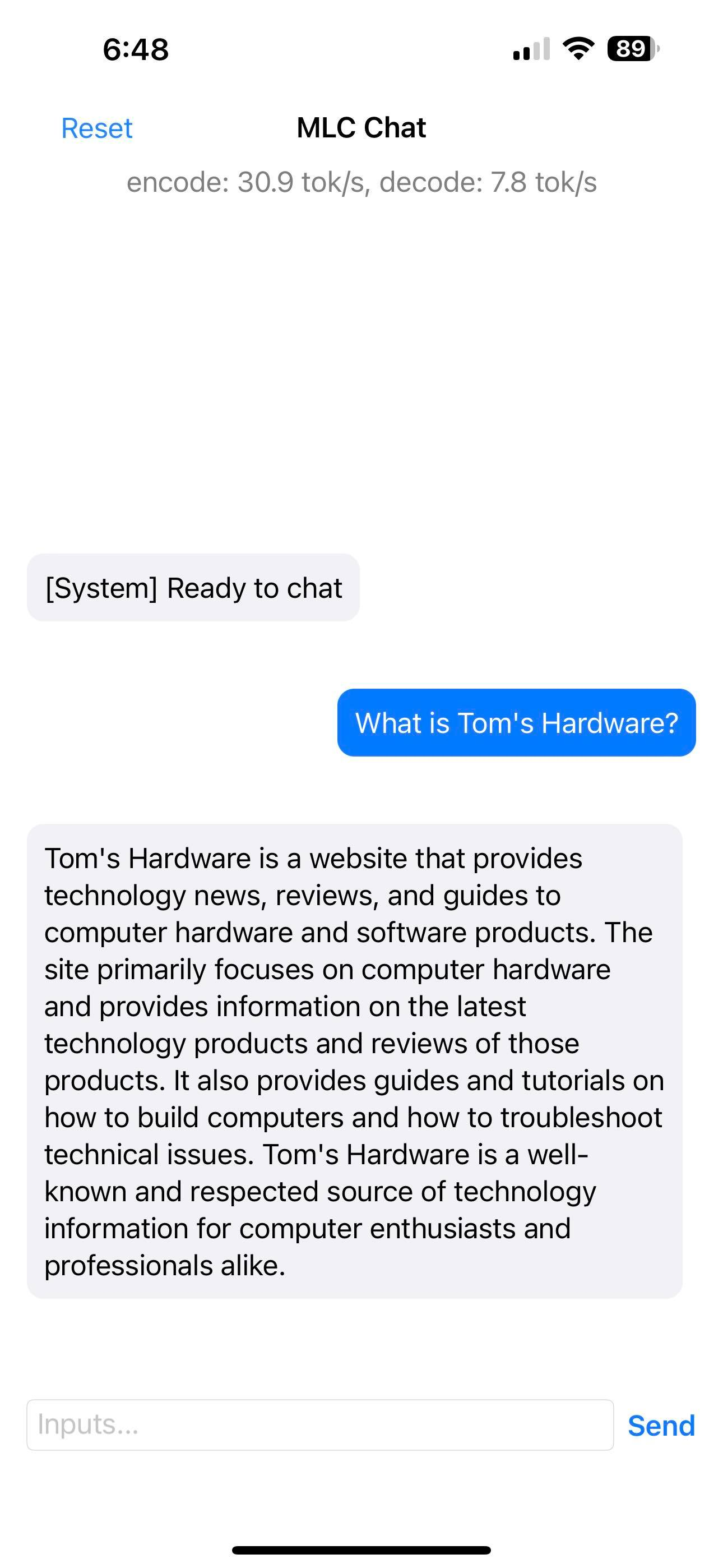

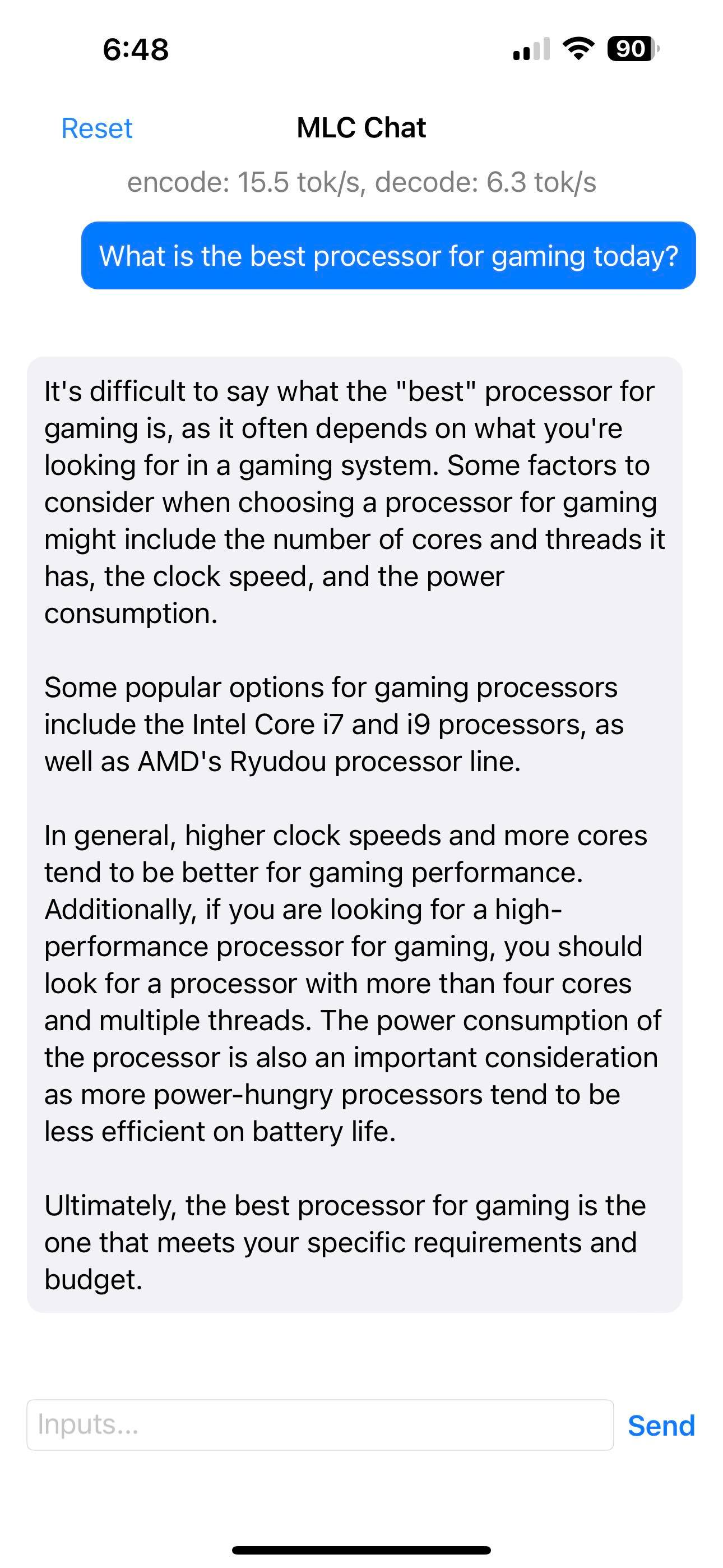

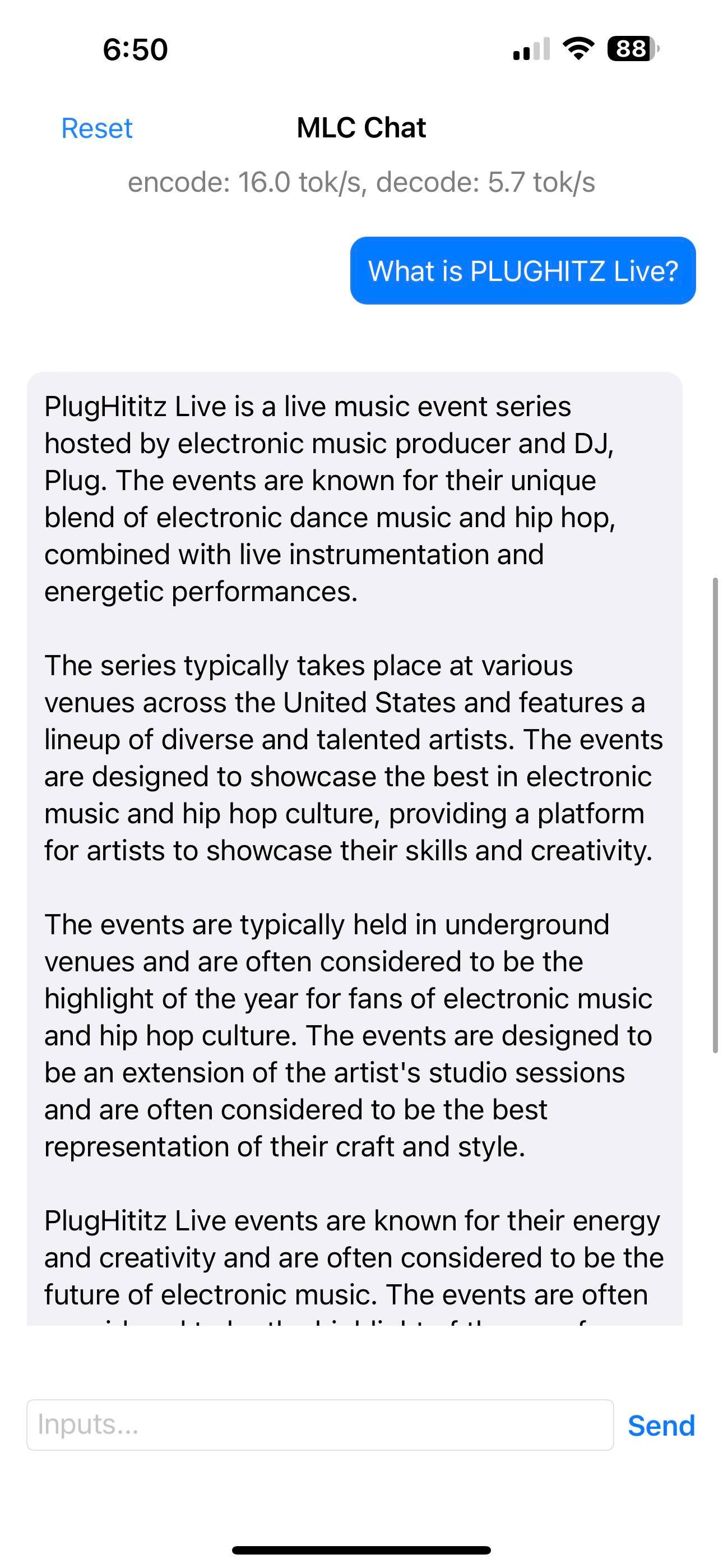

Later, I asked my friend, Scott Ertz of PLUGHITZ Live, to try installing MLC LLM on his iPhone 14 Pro Max, which is more powerful than the iPhone 11 Pro Max and has 6GB of RAM instead of 4GB. He had to try a couple of times to get the install to work, but once installed, the app itself worked without crashing. However, he said that the app dominated the phone, using all of its resources and slowing other apps down. He then tested with an iPhone 12 Pro Max, which also has 6GB of RAM, and found that it also worked.

He asked MLC LLM a few questions and the responses were mixed. When he asked it to choose the best processor for gaming, it gave a very vague, non-committal answer that didn't mention any specific models and just said to go for more cores and higher clock speeds. When he asked it about Tom's Hardware, he got a reasonable answer about what we do. But when he asked what PLUGHITZ Live, a tech podcast company, is, he got a very odd answer saying that it's an electronic music concert series run by "DJ Plug."

Installing MLC LLM on a PC

I had no problem installing and running MLC LLM on my ThinkPad X1 Carbon (Gen 6) laptop, which runs Windows 11 on a Core i7-8550U CPU and an Intel UHD 620 GPU. This is a five-year-old laptop with integrated graphics and no dedicated VRAM.

To set up MLC LLM, I first had to install Miniconda for Windows, which is a light version of the popular Anaconda distribution built around the Conda package manager (you can also use the full Anaconda version). With Conda, you can create separate environments that each have their own set of Python packages, so they don’t conflict with the other packages on your system.

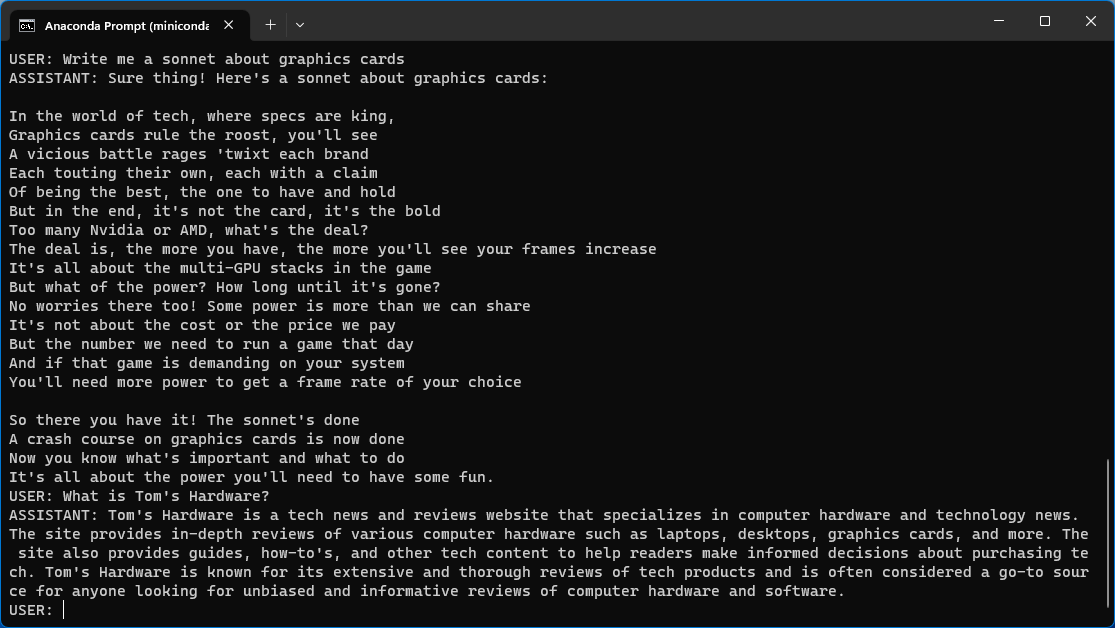

After installing Miniconda, I launched the Anaconda Prompt (a version of the command prompt that runs Conda). Then I used the set of instructions on mlc.ai to create an environment called mlc-chat and download the language model into it. The Vicuna-7B-V1.1 model took up just 5.7GB of storage space and the rest of the project uses up an additional 350MB or so.

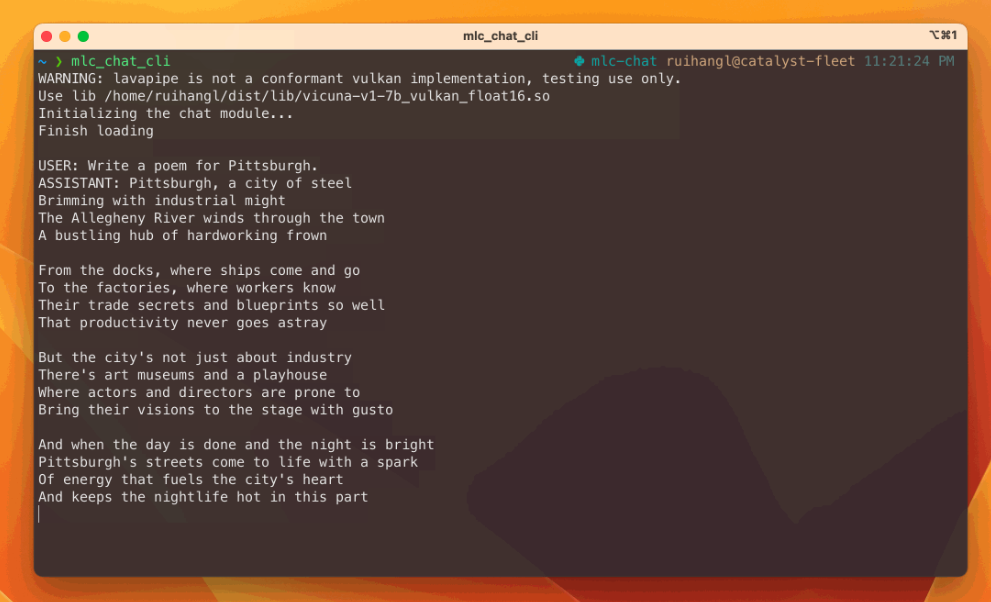

The chatbot runs in a command prompt window. To launch it, I had to activate the mlc-chat conda environment and enter the command mlc_chat_cli.
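For readers who would rather script that launch step, here is a minimal Python sketch. It assumes Conda is on your PATH (as it is inside the Anaconda Prompt) and uses `conda run`, Conda's own subcommand for running a program inside a named environment, in place of a manual activate. The environment name `mlc-chat` and the command `mlc_chat_cli` come from the steps above; the helper names `build_launch_command` and `launch_chat` are made up for illustration, and interactive input through `conda run` may behave differently across Conda versions.

```python
import shutil
import subprocess
from typing import List

ENV_NAME = "mlc-chat"      # Conda environment name used in the article
CLI_NAME = "mlc_chat_cli"  # chatbot command named in the article


def build_launch_command(conda_path: str,
                         env_name: str = ENV_NAME,
                         cli_name: str = CLI_NAME) -> List[str]:
    """Return the argument list that runs the chat CLI inside a Conda environment."""
    # `conda run -n <env> <command>` runs a command in the named environment.
    # `--no-capture-output` leaves the terminal streams attached to the program
    # instead of buffering its output until it exits.
    return [conda_path, "run", "--no-capture-output", "-n", env_name, cli_name]


def launch_chat() -> int:
    """Start the chatbot; return its exit code, or 1 if Conda cannot be found."""
    conda_path = shutil.which("conda")
    if conda_path is None:
        print("Conda was not found on PATH; try running this from the Anaconda Prompt.")
        return 1
    return subprocess.call(build_launch_command(conda_path))
```

Calling `launch_chat()` from a script or a Python prompt starts the chatbot in the current terminal. If it misbehaves, activating the environment by hand and typing the command works just as well.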

Using MLC LLM

When you launch MLC LLM’s chatbot, it first asks you for your name. Then it greets you and asks how it can help, at which point you can start asking it questions.

On my laptop, the bot was really slow to respond, taking close to 30 seconds to begin typing a response to any query. Like ChatGPT, it types the answer while you watch, so it can take a minute or two to see a complete response. I assume that this would go faster on a more powerful device.

The quality of answers I got from the LLM was nothing to type home about. It gave accurate answers to some factual questions but made up a fictional biography for me. It had the ability to write poetry, but did an awful job. It also was incapable of taking follow-up questions as it treated each prompt as a completely new conversation.

When I asked the bot to tell me who the fifth president of the U.S. was, it gave an atypical but truthful response, naming Thomas Jefferson. While most people would say that James Monroe is the correct answer, because he was the fifth person to be president, if you count presidential terms, Jefferson’s second term is the fifth overall.
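The two ways of counting are easy to check with a few lines of Python. This is just a worked illustration of the arithmetic; the list covers only the first five presidents and the function names are made up for this example.

```python
# (name, number of terms served) for the first five U.S. presidents, in order
PRESIDENTS = [
    ("George Washington", 2),
    ("John Adams", 1),
    ("Thomas Jefferson", 2),
    ("James Madison", 2),
    ("James Monroe", 2),
]


def nth_person(n: int) -> str:
    """Return the n-th person to hold the office (1-based)."""
    return PRESIDENTS[n - 1][0]


def holder_of_nth_term(n: int) -> str:
    """Return who was serving during the n-th presidential term (1-based)."""
    terms_so_far = 0
    for name, terms in PRESIDENTS:
        terms_so_far += terms
        if n <= terms_so_far:
            return name
    raise ValueError("term number is beyond the presidents listed here")


print(nth_person(5))          # counting people
print(holder_of_nth_term(5))  # counting terms
```

Counting people lands on James Monroe. Counting terms lands on Thomas Jefferson, because Washington's two terms and Adams's one fill the first three slots.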

I asked the MLC LLM chatbot “what is Tom’s Hardware” and got a very accurate answer, describing our website and the different types of content we create. However, when I asked it about myself (“who is Avram Piltch?”), I got a completely fictional story in which it misspelled my last name and made me CEO of a non-existent company that makes augmented-reality contact lenses. The response is below:

“Avram Piltsh is the CEO and co-founder of OneSight, a company that aims to develop and market a contact lens with the ability to read phone numbers with a camera or even to access files through contact lenses. He is known for his work on the development of the contact lens technology that allows users to read phone numbers with their eyes. He is also a well-known entrepreneur and inventor who has made significant contributions to the development of the technology industry. He is known for his passion for innovation and his dedication to finding solutions to complex problems in the tech industry.”

To my knowledge, there is no one named Avram Piltsh (with an S) and I could not find a company named OneSight that makes AR contact lenses. There is a charity called OneSight EssilorLuxottica Foundation that gives glasses to people who otherwise wouldn’t have access to them.

When I asked it to tell me more about Avram’s company, it got confused, because it can’t follow references to the prior question.
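To see why that matters, here's a rough Python sketch of the difference between sending each question on its own and sending it along with the earlier exchange. The USER/ASSISTANT layout and both function names are hypothetical, chosen only for illustration; this is not MLC LLM's actual prompt format or code.

```python
from typing import List, Tuple

# One earlier exchange: (what the user asked, what the bot answered)
Turn = Tuple[str, str]


def stateless_prompt(question: str) -> str:
    """Build a prompt from the new question alone, with no memory of earlier turns."""
    return f"USER: {question}\nASSISTANT:"


def prompt_with_history(history: List[Turn], question: str) -> str:
    """Build a prompt that replays earlier turns before the new question."""
    lines = []
    for user_text, bot_text in history:
        lines.append(f"USER: {user_text}")
        lines.append(f"ASSISTANT: {bot_text}")
    lines.append(f"USER: {question}")
    lines.append("ASSISTANT:")
    return "\n".join(lines)


history = [("Who is Avram Piltch?", "Avram Piltsh is the CEO and co-founder of OneSight...")]
follow_up = "Tell me more about his company."

print(stateless_prompt(follow_up))              # nothing here says who "his" refers to
print(prompt_with_history(history, follow_up))  # the earlier answer travels with the question
```

In the first prompt, the model has no way to know whose company is being discussed. In the second, the name and the earlier answer are part of the text it receives.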

I also asked the MLC LLM chatbot to write a sonnet about graphics cards, and it wrote a really boring poem that doesn’t follow the rhyme scheme of a sonnet and has 15 lines instead of 14.

In the world of tech, where specs are king,

Graphics cards rule the roost, you'll see

A vicious battle rages 'twixt each brand

Each touting their own, each with a claim

Of being the best, the one to have and hold

But in the end, it's not the card, it's the bold

Too many Nvidia or AMD, what's the deal?

The deal is, the more you have, the more you'll see your frames increase

It's all about the multi-GPU stacks in the game

But what of the power? How long until it's gone?

No worries there too! Some power is more than we can share

It's not about the cost or the price we pay

But the number we need to run a game that day

And if that game is demanding on your system

You'll need more power to get a frame rate of your choice

That poem would make a Vogon blush. But it’s really impressive to be able to run an LLM chatbot on an old PC or, potentially, on a phone. Perhaps a future language model will deliver a more satisfying experience.