Scientists have designed a transistor that stores and processes information like the human brain and can perform cognitive tasks that most artificial intelligence (AI) systems today struggle with.

This technology, known as a "synaptic transistor," mimics the architecture of the human brain, in which processing power and memory are fully integrated in the same place. Conventional computing architecture differs: there, the processor and memory are physically separate components.

"The brain has a fundamentally different architecture than a digital computer," Mark Hersam, research co-leader and professor of materials science, engineering and computing at Northwestern University, said in a statement. "In a digital computer, data move back and forth between a microprocessor and memory, which consumes a lot of energy and creates a bottleneck when attempting to perform multiple tasks at the same time."

Because computing power and memory are fully integrated, the synaptic transistor can achieve significantly higher energy efficiency and move data extremely fast, the researchers wrote in the study, published Dec. 20 in the journal Nature. This new form of computing architecture is needed, the scientists said, because relying on conventional electronics in the age of big data, with demand for AI computing workloads still growing, will lead to unprecedented energy consumption.


Scientists have built synaptic transistors before, the researchers said, but those devices only operated at extremely cold temperatures. The new transistor, by contrast, uses materials that work at room temperature.

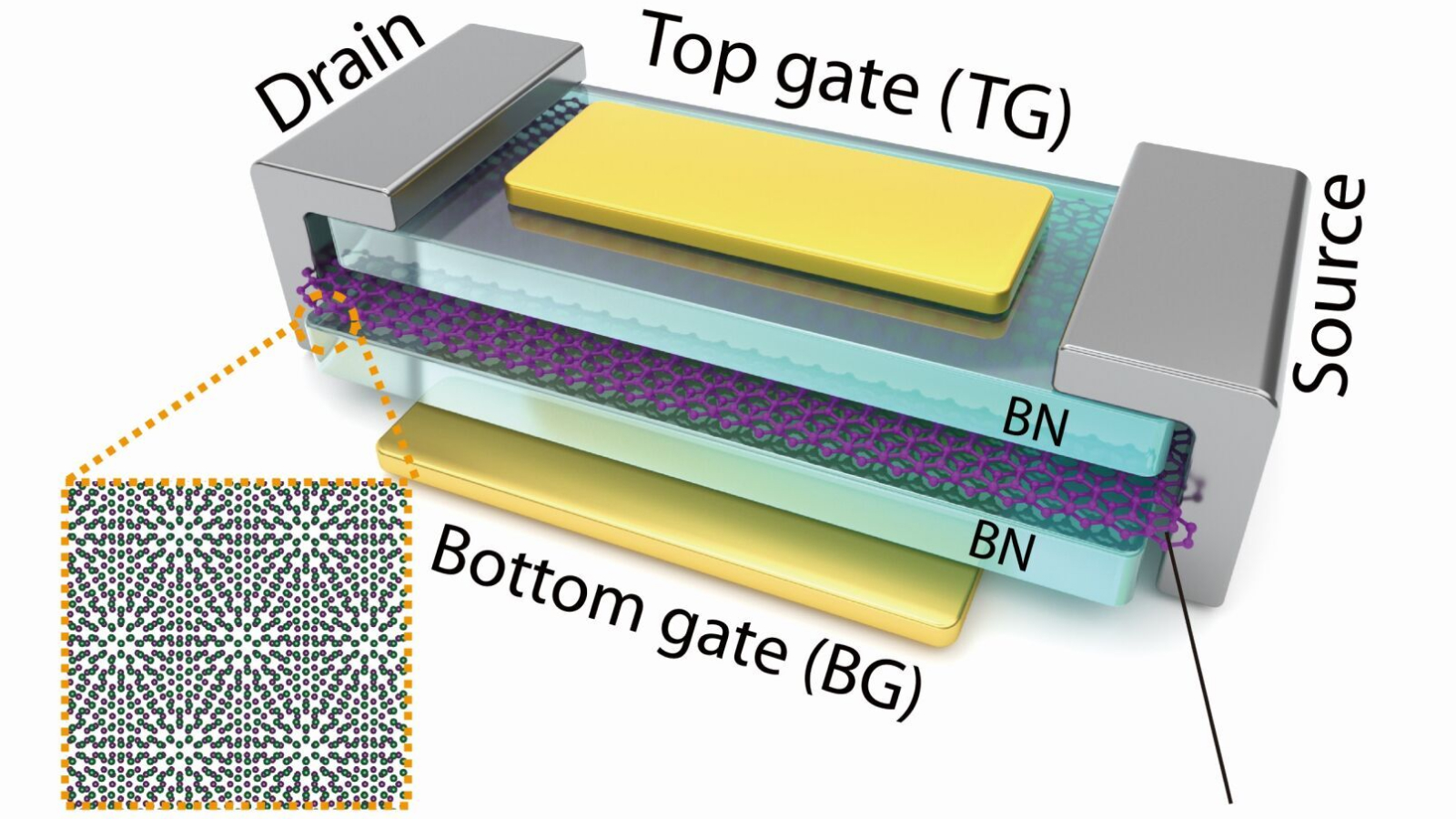

Conventional electronics pack transistors onto a silicon wafer, but in the new synaptic transistor, the researchers stacked bilayer graphene (BLG) and hexagonal boron nitride (hBN) and purposefully twisted them to form what's known as a moiré pattern.

When they rotated one layer relative to the other, new electronic properties emerged that didn't exist in either layer on its own. Getting the transistor to work at room temperature required a specific twist angle, with the hBN and BLG layers in near-perfect alignment.

The researchers tested the chip by first training it on data so it could learn to recognize patterns. Then they showed the chip new sequences that were similar to the training data but not identical. Recognizing how such new inputs relate to previously learned ones is known as associative learning, and it is something most machine learning systems can't do well.

"If AI is meant to mimic human thought, one of the lowest-level tasks would be to classify data, which is simply sorting into bins," Hersam said. "Our goal is to advance AI technology in the direction of higher-level thinking. Real-world conditions are often more complicated than current AI algorithms can handle, so we tested our new devices under more complicated conditions to verify their advanced capabilities."

In one exercise, the researchers trained the AI to detect the sequence 000. They then asked the AI to identify similar patterns, for example by presenting it with 111 and 101. The AI judged 111 to be more similar to 000 than 101 was: the sequences 000 and 111 aren't the same, but both consist of three identical digits in a row, a pattern that 101 breaks.

This seems simple enough, but today's AI tools struggle with this type of cognitive reasoning. In further experiments, the researchers threw "curveballs" at the AI by giving it incomplete patterns. Even so, the AI using the chip still demonstrated associative learning, the researchers said.

"Thus far, we have only implemented the moiré synaptic transistor with hBN and BLG," Hersam told Live Science in an email. "However, there are many other two-dimensional materials that can be stacked into other moiré heterostructures. Therefore, we believe that we have only just begun to scratch the surface of what is possible in the emerging field of moiré neuromorphic computing."

The features the scientists observed in this experimental transistor could pave the way for future generations of the technology to be used in highly energy-efficient chips that power advanced AI and machine learning systems, Hersam added.