In the tech world, you can't walk far without hearing about NVIDIA. This tech company, which spans both hardware and software, is a true behemoth that seems to be at the forefront of technological innovation lately. NVIDIA's investments in emerging markets and continued dominance in established ones have resulted in NVIDIA becoming one of the most valuable companies on the planet, so it's no surprise that NVIDIA was a common presence at GDC 2024 in San Francisco.

I didn't just see NVIDIA everywhere as I explored GDC; I also met with team members in a one-on-one briefing to demo the various technologies NVIDIA is showcasing at this year's Game Developers Conference. Most of it you can go see for yourself right now, but I'll give you all the details alongside what I learned at GDC. It shouldn't surprise anyone, but NVIDIA's theme of the year at GDC was AI, AI, AI.

NVIDIA at GDC — Using AI to enhance your games

A long-standing part of NVIDIA's business has been combining its impressive RTX graphics processing units (GPUs) with AI-driven software to improve not only the performance of PC games but also their visuals and detail. NVIDIA is continuing to invest in this area, and I was able to see a variety of tools in action, including NVIDIA RTX Remix, NVIDIA Freestyle, and NVIDIA DLSS 3.5.

DLSS 3.5

NVIDIA DLSS isn't a new technology. It has been around since NVIDIA's first GeForce RTX GPUs, which replaced the aging GTX brand with more powerful, ray-tracing-capable graphics cards.

DLSS aims to massively improve performance in supported games without sacrificing visual fidelity, and it does so through a combination of techniques: DLSS Super Resolution (an AI-powered resolution upscaler), DLSS Frame Generation (which uses AI to insert generated frames between rendered ones and boost your frames per second), and NVIDIA Reflex Low Latency, which keeps your CPU and GPU in sync to cut system latency as low as possible.

DLSS support in video games is now extremely common, and the next generation of NVIDIA's performance-enhancing technology was announced back in Aug. 2023. DLSS 3.5 adds AI-powered Ray Reconstruction on top of DLSS 3 for noticeably better image quality (and, in some games, better performance) when using ray tracing. Namely, it replaces hand-tuned denoisers with a new AI model that produces more responsive, detailed, and natural results. We've already seen the effects in games like Cyberpunk 2077.

During GDC, NVIDIA announced that more games, like Black Myth: Wukong and Naraka: Bladepoint, are gaining DLSS 3.5 support, and that Portal with RTX is getting a free update adding DLSS 3.5 Ray Reconstruction and RTX IO support (RTX IO uses your RTX GPU to speed up loading game assets from storage). You can go play Portal with RTX for free on Steam right now; NVIDIA demoed the improvements to me side by side, and I have to admit they're extremely impressive. DLSS 3.5 doesn't just improve performance; it dramatically improves visual fidelity and shadow detail, with less latency, when ray-traced lighting is enabled.

It was awesome to talk to the people at NVIDIA who helped make DLSS 3.5 a reality. Pairing these AI-powered technologies with the powerful Tensor cores of RTX 40-series GPUs does lead to dramatic performance gains in the best PC games, even if you opt not to use DLSS Frame Generation (which does increase latency). Ray tracing especially sees noticeable gains in both performance and quality with NVIDIA's technology, which was extremely obvious in games like Alan Wake 2.

Freestyle filters & auto-HDR

NVIDIA Freestyle isn't a new feature, but it is new to me. Introduced in 2018, Freestyle is part of NVIDIA GeForce Experience, NVIDIA's flagship app for optimizing games, installing new drivers, and more. With Freestyle, you can apply a variety of post-processing filters to supported games (NVIDIA's site lists many) to dramatically change the look and feel of the game you're playing.

From color-grading filters to video effects that make your game look like found footage, NVIDIA Freestyle filters use the power of your RTX GPU to seamlessly change your games. I've seen it in action, and it works well. Each filter has settings to customize the intensity and effect, and you can even layer filters to achieve entirely unique looks! Freestyle also recently gained a new filter, and it's the most impressive of the bunch.

High dynamic range, or HDR, can improve the contrast, lighting, and vibrancy of supported games, but you need a premium monitor (or laptop) that supports it. Partly because of this, many PC games on Windows don't support HDR, so Microsoft brought its Auto HDR feature from Xbox over to PC. Now, you can achieve the same result with NVIDIA Freestyle. Just like Microsoft's feature, NVIDIA Freestyle can apply a convincing HDR effect to supported games, and it looks awesome.

NVIDIA's implementation actually bests Microsoft's built-in option in a few ways, though. For one, you can customize the HDR filter to achieve the desired effect, just like other NVIDIA Freestyle filters; with Auto HDR, it's either on or off. You can also stack other filters on top of it! NVIDIA demoed the new filter for me with Remnant 2, and I came away extremely impressed. If you love HDR gaming, you should check this one out.

RTX Remix

One of the most impressive parts of my time with NVIDIA at GDC was seeing the power of NVIDIA RTX Remix. This is a comprehensive modding platform built using all the technologies NVIDIA has at its disposal, and what you can accomplish with it is astounding. Remix lets you mod classic games in real time with a huge arsenal of tools, all supported by RTX hardware and NVIDIA's AI prowess.

RTX Remix can dynamically and instantly replace game assets with modded alternatives, massively upscale and enhance textures, and even inject NVIDIA features like DLSS 3, real-time ray tracing, and Reflex Low Latency. You can edit games and tune your mods in real time using the Remix overlay, transforming classic titles with enhanced visuals, lighting, and performance. It's open source, too, meaning developers on GitHub can contribute to the project and help it improve over time.

NVIDIA showed me how easy it was to significantly alter classic games like Half-Life 2, staying faithful to the original while adding new 3D assets, increasing detail and visual fidelity, and introducing realistic lighting. NVIDIA also showed me how Remix can add entirely new content to games, such as dropping a functional disco ball into Portal. It's a powerful, dynamic modding platform built on NVIDIA Omniverse, NVIDIA's hub for 3D applications and services. It's powered by AI and RTX GPUs, and it can bring DLSS, RTX, and Reflex features to decades-old titles.

I was shocked by how capable this platform seems to be and that modders can actually play with it right now. The NVIDIA RTX Remix open beta was released earlier this year, and it's absolutely worth exploring if you're interested in modding games.

NVIDIA at GDC — Creating AI gaming experiences

AI has grown increasingly prevalent over the last few years and shows no signs of slowing down any time soon. Countless companies are investing heavily in artificial intelligence, aiming for the top of an expanding market that threatens to upend the entire tech industry. NVIDIA's recent rise, for example, is built almost entirely on AI and servers, not gaming, although gamers may also benefit from new AI tech.

Companies like NVIDIA, Ubisoft, and even Xbox are working with newcomers like Inworld (whom I also spoke to at GDC) to explore how AI can create new gaming experiences. NVIDIA showed me its contribution to the effort with a demo called Covert Protocol. This slick detective game uses an underlying AI framework built by Inworld and enhanced by several NVIDIA technologies. NVIDIA's Avatar Cloud Engine (ACE) creates realistic digital humans based on generative AI models. Audio2Face (A2F) convincingly animates speech for those digital humans. Automatic Speech Recognition (ASR) lets players talk to these AI characters.

That may sound like a lot, but the results are easy to understand. Game developers can use Inworld to define a character's personality, traits, and in-world knowledge, and provide prompts to guide story progression and set scenes. Using language models, these generated characters can respond to almost any player input while remaining true to the universe they inhabit, creating a believable, dynamic world for players to explore. NVIDIA's tech translates the underlying AI into visible animations and expressions and lets players talk to these AI characters by voice.

Covert Protocol sees players take on the role of a detective tasked with discovering the hotel room number of a target. You'll have to ingratiate yourself with a handful of NPCs, talking with them to uncover clues and slowly unravel the mystery. It's fascinating being able to talk directly to in-game characters and have them respond to you in turn, and it was interesting to see how those characters' responses differed based on personality, circumstance, and even what the player was or had been doing. Because of the AI language models, these NPCs were even capable of having entirely off-topic conversations while staying in character.

After playing through the demo, I returned to a character (Sophia, who manned the hotel's front desk) and struck up a conversation about her hobbies. Eventually, I delved into nonsense by relentlessly talking about my interest in bullfighting, and Sophia took it in stride, even going so far as to explain how the hotel could potentially host bullfighting events in its facilities. It was goofy, but it also gave me an experience you just don't get in other games, which deliver carefully tailored, focused lines of dialogue.

What I found interesting, though, is that this actually requires more work than writing straight dialogue. Rather than having writers put themselves in the shoes of imaginary characters to write their dialogue, you have to build those characters' brains. You still need writers to build those brains using in-game lore, in-depth personality and trait information, and scene prompts. Of course, because their voices also need to be AI-generated, these characters don't use full-time voice actors, so you lose a lot of the emotion, nuance, and power of great voice acting.

While you can create AI voice models from real human voices, that's still less work for the voice actor (and less of a job), and it results in a less convincing performance. AI-generated lines can be convincing, too, but they lack the quality and concision of brilliant video game writing. As such, I don't ever expect (and hope I never see) these AI characters in every game. For certain genres like RPGs, detective games, puzzle games, and more, though... NVIDIA and companies like Inworld can open up a world of new possibilities.

I'll cover it more in the next section, but there are also ways to use this technology without taking jobs away from writers or voice actors.

NVIDIA at GDC — Digital humans in digital worlds

Related to the previous section, NVIDIA is also flexing its strengths with investments in digital humans. That means using AI to create believable, natural-looking digital humans in games and applications, either as interactive characters or as avatars for the user. Results in this space have been mixed, to say the least, but NVIDIA does seem to be at the forefront with the combination of NVIDIA ACE (discussed above) and NVIDIA NeMo, an end-to-end platform that helps developers build generative AI models.

One example of this is Covert Protocol, of course, but NVIDIA is working with other companies to explore other uses for this digital human tech. Ubisoft also partnered with Inworld, for example, leveraging NVIDIA products to demo "NEO NPCs" at GDC. I saw these NPCs myself; similar to Covert Protocol, Ubisoft's NEO NPCs are capable of communicating with the player directly, using Inworld's AI character models to remain a faithful part of the in-game world. However, Ubisoft's research adds another element to this.

The two NEO NPCs Ubisoft demoed at GDC use NVIDIA's A2F technology for more natural facial and character animations, but they also have an in-depth understanding of the game world. The idea is that NPCs won't just be able to converse with the player using their provided knowledge; they'll be able to comment on and interact with the game world directly, in real time. Players might watch an in-progress mission with one NPC and get dynamic commentary and tips. They might also sit through a mission briefing and work out a detailed plan of action with another NPC.

The idea is to blend scripted scenes and story beats with the dynamism of generative AI characters. Dialogue was a little rough around the edges, and Ubisoft's implementation has the same weaknesses as NVIDIA's Covert Protocol demo, but it still points toward a fascinating future of games that are more interactive and responsive to player input than ever before.

NVIDIA's AI technology for digital humans doesn't have to be used only with AI NPCs, however. The Chinese MMORPG World of Jade Dynasty demoed how NVIDIA's Audio2Face technology can easily animate characters in different languages, making it far simpler to add multi-language support to a game. Developers only need to handle the scripted writing and voice acting, and NVIDIA A2F handles all the lip-syncing. Another game demo built in Unreal Engine 5, Unawake, showed how Unreal Engine's MetaHuman tools can be paired with NVIDIA's AI modules.

It's not just about gaming, though. NVIDIA worked with the medical-focused company Hippocratic AI to create a safe, task-focused health assistant that handles mundane medical tasks. These include calling patients to schedule appointments, conducting follow-up check-ins, and delivering post-operative or post-discharge care instructions. Hippocratic AI's digital assistants need to be fast, accurate, and protected from abuse and mishandling, but they also need to be comfortable for patients of all ages and backgrounds. The company uses NVIDIA's entire range of digital human tools and products to make that happen.

In a similar vein, AI company UneeQ demoed how NVIDIA's AI tools can help it build more believable and interactive digital avatars across a wide range of industries and applications, such as customer service. Underlying AI language models are rapidly evolving in their own way, but companies like NVIDIA are bridging the gaps between those brains and the interfaces through which we access them. You can read more about NVIDIA's digital human technologies on NVIDIA's website.

NVIDIA at GDC — Local AI to help you stay productive

One of the biggest areas of improvement we've seen in AI is large language models, or LLMs. There are plenty out there now. Microsoft's Copilot, for example, is built on the same OpenAI GPT models behind ChatGPT. Generally, though, applications using these LLMs rely on an online connection, often streaming responses from the cloud to deliver personalized answers and information. NVIDIA's addition to the market goes against the tide in that respect.

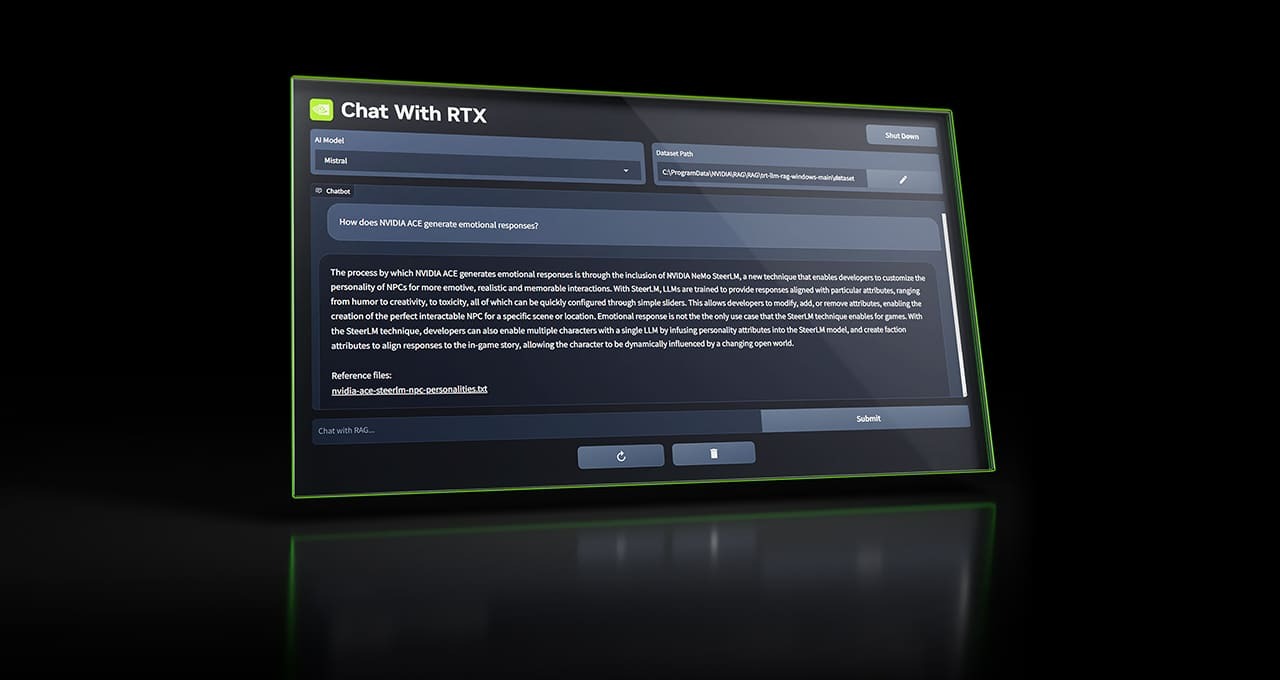

ChatRTX, which is now available to download from NVIDIA's website, is a unique AI app that lets you run a customized large language model entirely on your device. Yes, using the power of your NVIDIA RTX GPU, ChatRTX is 100% local, meaning it works offline and is designed to tie in with your local files, programs, and media. Users can choose which underlying AI model to use. For example, if you have limited VRAM, you can use a smaller model, or you can use one that specializes in images rather than text.

What's important is that it's up to you to provide the data ChatRTX works from. You start with an underlying language model, and then you point ChatRTX at a dedicated folder on your computer that acts as its dataset. The app can only access files and data within that dataset, meaning it doesn't have free rein to roam around your computer. You can even keep various workloads or datasets within that master set and switch between them within seconds. That gives ChatRTX different sets of data for different tasks so its answers stay focused and accurate. (When I asked whether ChatRTX could still return dataset-specific answers while accessing its entire master dataset, NVIDIA tested it with me to prove that yes, yes it can.)

It's as easy as moving files around your computer, but why would you want this? Well, ChatRTX uses language models to understand the context of a conversation, which makes it effective at certain tasks. If you download an article, PDF, or document, you can have ChatRTX provide succinct summaries based solely on that information, reducing the risk of the made-up or inaccurate information you often get from online AI models.

Let's say you're working on a massive project that requires a large number of notes — ChatRTX can near-instantaneously pull specific information from those files and even answer abstract questions based on that information. Working on a game? Give ChatRTX access to your game notes and files as its dataset, and it can pull from the in-game lore to provide guidance on names, ideas, and more. I got to see ChatRTX do this all myself, and it's honestly impressive how quickly and effortlessly it works without the privacy or misinformation concerns associated with other AI apps.

NVIDIA told me that ChatRTX is also open source. That means developers on GitHub are already working on plug-ins to expand its capabilities. These include pulling information from specific websites you add to its directory and parsing images, unusual file types, or massive datasets more effectively. I haven't been terribly interested in the wave of AI assistants and applications, but ChatRTX actually seems useful. It can be a pain to stay organized when I'm working with years of images, notes, and files, but ChatRTX can parse through them for me, entirely locally.

NVIDIA at GDC — The rise to dominance with AI

NVIDIA wasted no time highlighting how it uses AI in 2024, and I had the privilege of seeing a lot of it... and talking to some of the people behind it. Artificial intelligence is a complex, nuanced topic with limitless potential for both greatness and harm, and NVIDIA is already one of the biggest players in the space. Many consumers may know NVIDIA for its gaming-focused GPUs, but it's actually a combination of AI and server hardware that has made it one of the most valuable companies on the planet.

There are so many applications for AI, with more emerging every day, and NVIDIA seems to be dabbling in them all. It runs advanced local processing with large language models and RTX GPUs, and it builds believable digital humans in virtual spaces. NVIDIA seems to be part of every AI-related conversation lately. I still see AI's potential to free people from an oppressive labor cycle so they can focus more on creativity, but I also see the opposite, where AI is used to replace labor and creativity. What I've seen from NVIDIA makes me hopeful the company is investing in the right areas, but only time will tell if it made the right choices.

At GDC 2024, NVIDIA made it clearer than any other company how prevalent AI is, not just as a tech-industry buzzword but as a real-world product that's making a difference in people's lives right now. I'm glad NVIDIA invited me to demo many of these products during my time in San Francisco, and I look forward to seeing what comes next.