What you need to know

- LinkedIn quietly shipped a new feature called Data for Generative AI Improvement. It uses your data on the employment-focused social network to train AI models belonging to Microsoft and its affiliates.

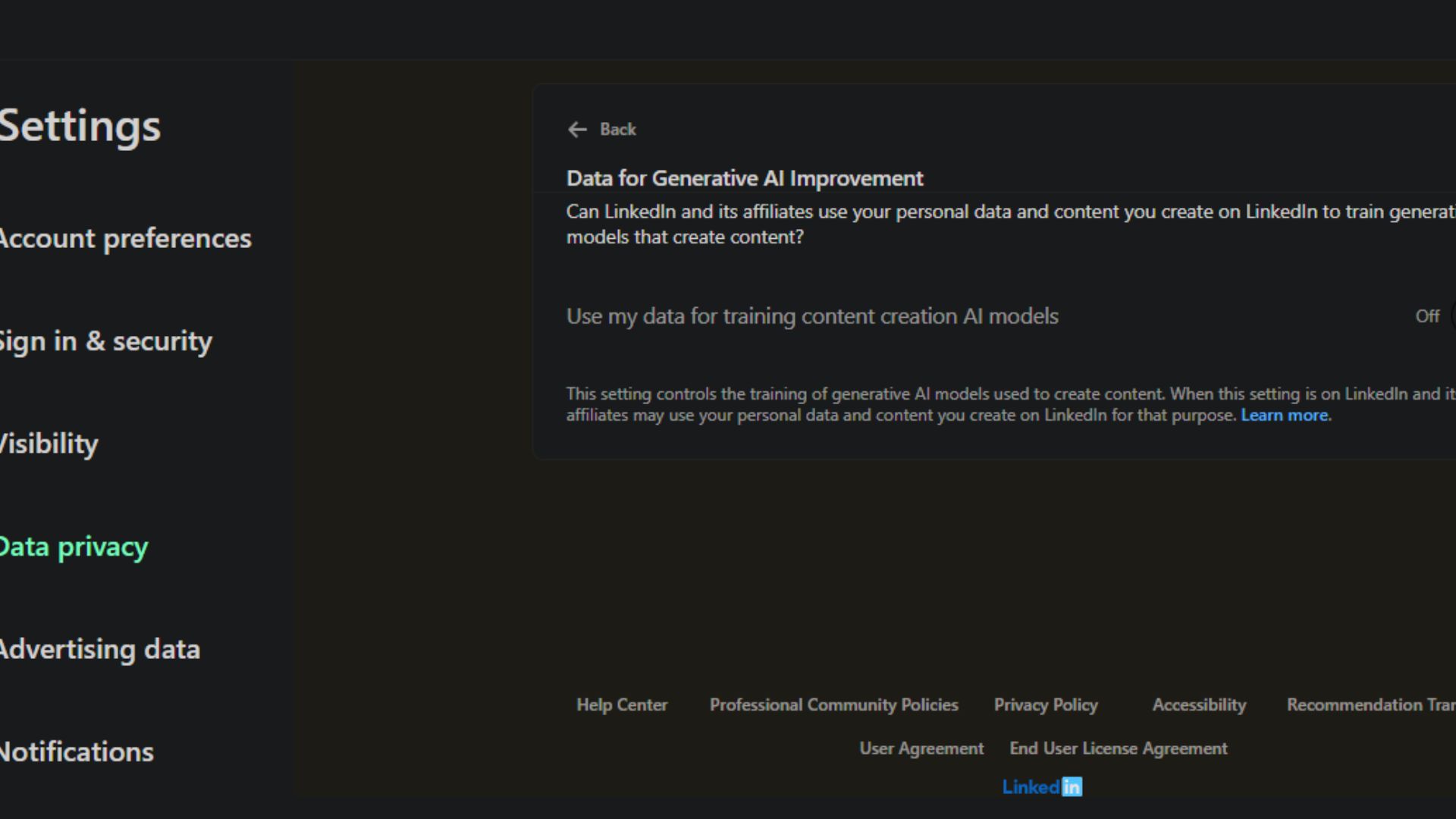

- The feature is enabled by default, but you can turn it off via the Data Privacy settings on the web and mobile.

- EU, EEA, and Switzerland users have been exempted from the controversial feature, presumably because of the EU AI Act's stringent rules.

Microsoft and OpenAI have been involved in multiple court battles over copyright infringement. AI models rely on information from the web for training, which doesn't sit well with authors and publications that have openly expressed reservations about the approach.

While commenting on one of the many copyright infringement suits filed against OpenAI, CEO Sam Altman admitted that developing tools like ChatGPT without copyrighted content is impossible. Altman further argued that copyright law doesn't prohibit using copyrighted content to train AI models.

Anthropic is the latest AI firm to be sued for copyright infringement after a group of authors filed a lawsuit citing the use of their work to train Claude AI without consent or compensation. As it happens, Microsoft's employment-focused social network LinkedIn is reportedly leveraging users' data to train its AI models.

LinkedIn quietly rolled out a new feature, Data for Generative AI Improvement, that allows AI models to be trained on your data. Worse, it's enabled by default. As a result, Microsoft and its "affiliates" have access to data from LinkedIn's massive 134.5 million daily active users for AI training.

Speaking to PCMag, a LinkedIn spokesman indicated that "affiliates" refers to any Microsoft-owned company. The spokesman also clarified that LinkedIn wasn't sharing the collected data with OpenAI.

Interestingly, users in the EU, EEA, and Switzerland appear to be exempt. The likely explanation is the stringent rules introduced in the region by the EU AI Act, which imposes strict requirements on the data used for the training, validation, and testing of high-risk AI systems.

LinkedIn notes in its terms and conditions:

"Where LinkedIn trains generative AI models, we seek to minimize personal data in the data sets used to train the models, including by using privacy-enhancing technologies to redact or remove personal data from the training dataset."

It's unclear whether the new feature is limited to posts shared on LinkedIn or applies across the board, including DMs. "As with most features on LinkedIn, when you engage with our platform, we collect and use (or process) data about your use of the platform, including personal data," LinkedIn added. "This could include your use of the generative AI (AI models used to create content) or other AI features, your posts and articles, how frequently you use LinkedIn, your language preference, and any feedback you may have provided to our teams."

How to disable LinkedIn's Data for Generative AI Improvement feature

To disable LinkedIn's Data for Generative AI Improvement feature and prevent the use of your data for AI training, navigate to Settings > Data Privacy > Data for Generative AI Improvement and toggle the button off.

Elon Musk's X faced similar criticism in July for quietly training its Grok AI model on user data without consent. Like LinkedIn's controversial new feature, Grok's AI training setting was enabled by default. Worse, the option to turn it off was limited to the web, making it difficult for mobile users to deactivate it.

X's quest to make Grok "the most powerful AI by every metric by December this year" opened the social network to regulatory scrutiny. Under the General Data Protection Regulation (GDPR), regulators could fine X up to 4% of its global annual turnover if the platform can't establish a legal basis for using users' data to train its chatbot without their consent.
