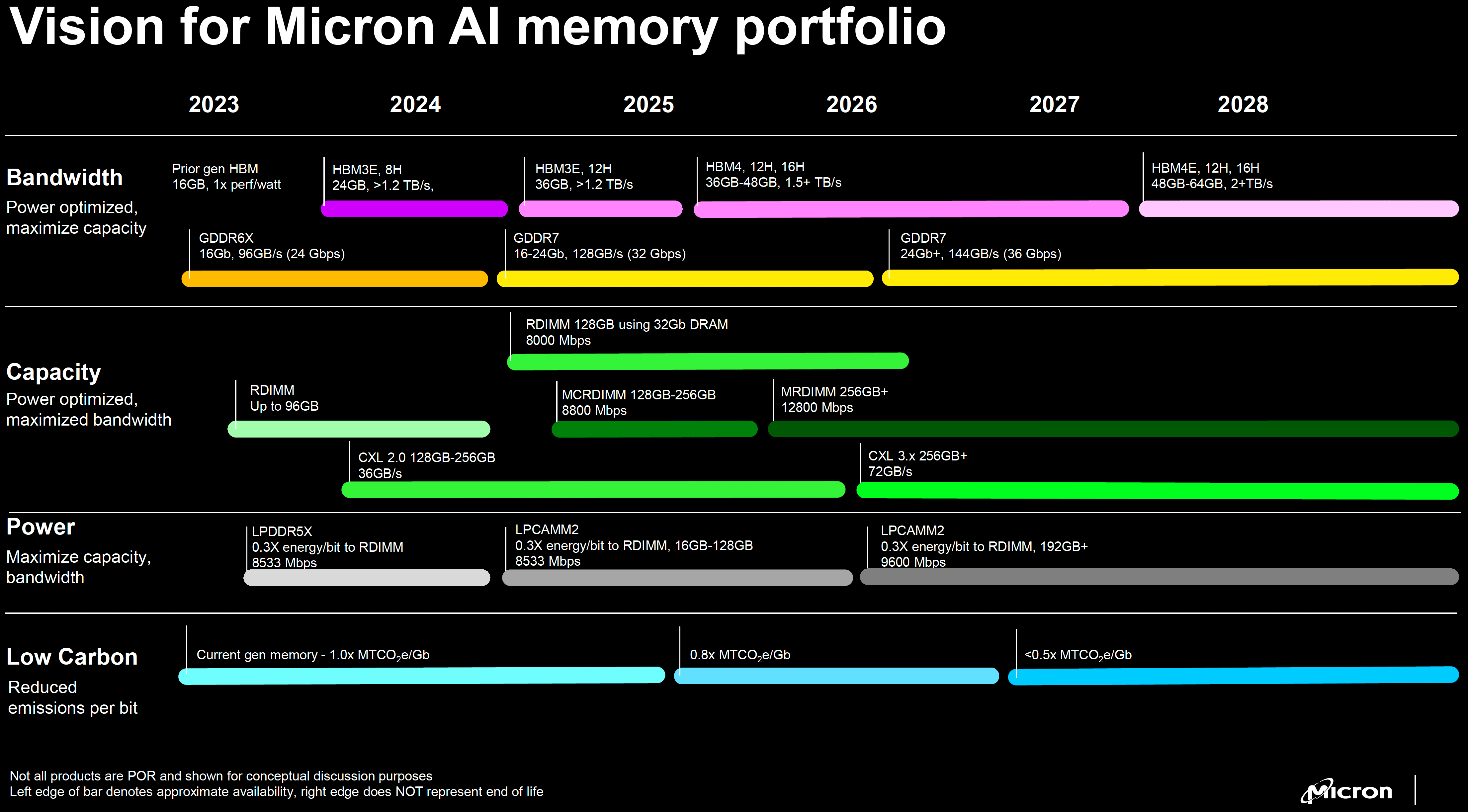

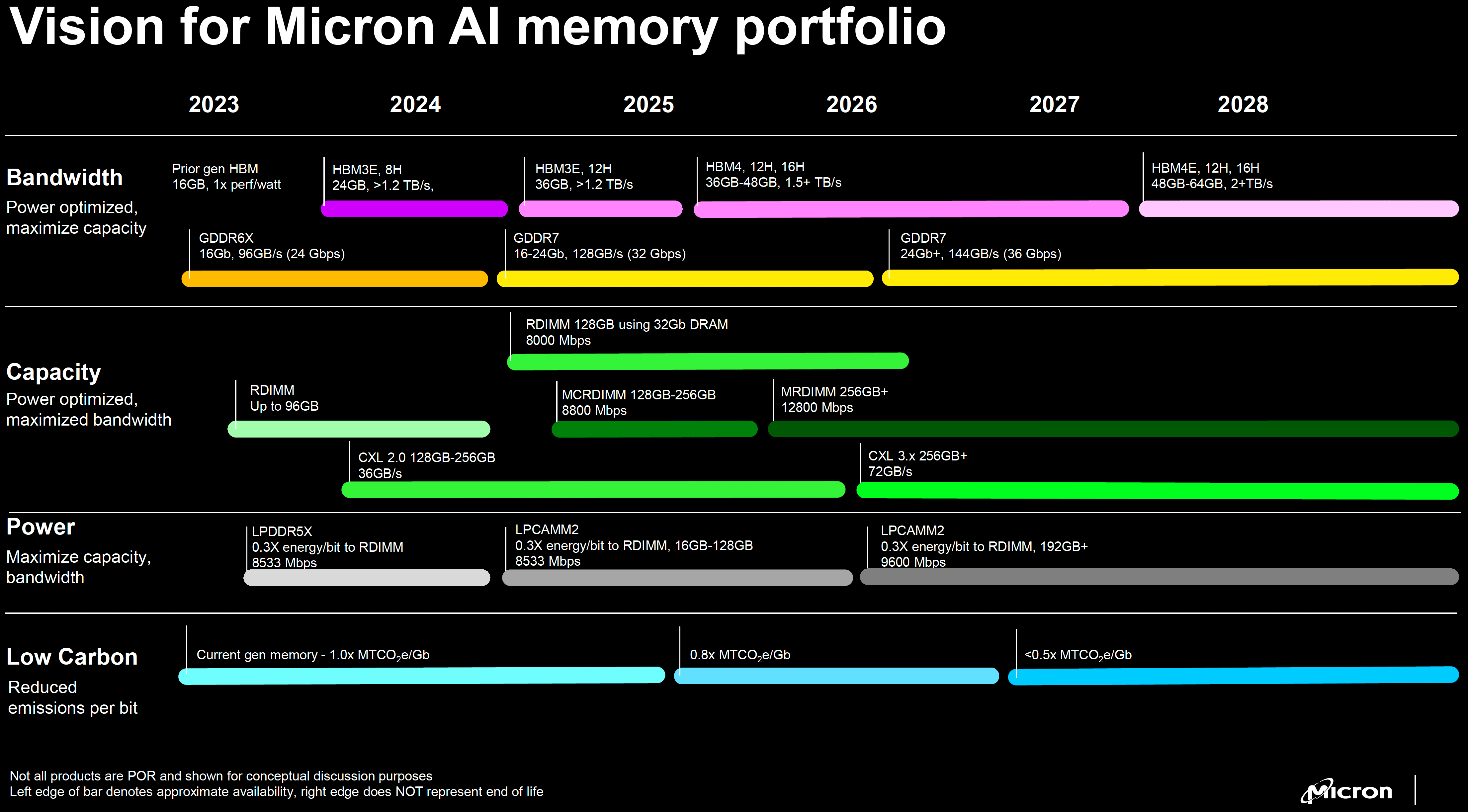

Micron on Thursday introduced its new 128GB DDR5-8000 RDIMM memory modules for servers, which are based on monolithic 32Gb DRAM ICs, and shared its vision for the high-performance and high-capacity memory technologies set to arrive over the next five years. The roadmap includes several technologies not previously discussed publicly, including 256GB DDR5-12800 modules, HBM4E, CXL 2.0, and LPCAMM2.

Bandwidth-Hungry Applications

Micron expects bandwidth-hungry applications to keep using HBM and GDDR memory in the coming years. 24GB 8-Hi HBM3E stacks are set to arrive in early 2024 and offer over 1.2 TB/s of bandwidth per stack, which should tangibly increase AI training and inference performance. The company plans to extend its HBM3E lineup with 36GB 12-Hi stacks by 2025, which will raise memory capacity for HBM-equipped processors but will not boost bandwidth. For example, Nvidia's H100 could carry 216GB of HBM3E memory if Nvidia had access to these stacks. In 2025, such stacks would be used by a post-Blackwell GPU for AI and HPC compute.
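The 216GB figure follows from simple multiplication of stack capacity by the number of stacks on the package. The sketch below reproduces it, assuming six HBM sites per package; that count is not stated in the article and is chosen here because it matches the 216GB example. The function name is purely illustrative.

```python
# Capacity arithmetic for an HBM-equipped processor.
# ASSUMPTION: six HBM sites per package (not stated in the article).
HBM_SITES = 6


def package_capacity_gb(stack_gb: int, sites: int = HBM_SITES) -> int:
    """Total HBM capacity in GB for a package with `sites` identical stacks."""
    return stack_gb * sites


capacities = {
    "24GB 8-Hi": package_capacity_gb(24),
    "36GB 12-Hi": package_capacity_gb(36),
}

for name, total in capacities.items():
    print(f"{name} stacks: {total} GB per package")
```

Under that assumption, moving from 24GB to 36GB stacks raises package capacity by half without touching the interface, which is consistent with capacity growing while bandwidth stays flat.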

The true revolution for HBM will be HBM4, which is set to arrive by 2026. These stacks will feature a 2048-bit interface, which will require memory, processor, and packaging makers to collaborate closely to get it working properly. The reward promises to be tangible, as each stack is expected to offer over 1.5 TB/s of bandwidth. As for capacity, Micron envisions 12-Hi and 16-Hi stacks of 36GB to 48GB in the 2026 to 2027 timeframe. According to Micron, HBM4 will be followed by HBM4E in 2028. The extended version of HBM4 is projected to gain clocks, raising bandwidth to over 2 TB/s and capacity to 48GB to 64GB per stack.
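To see what the wider interface buys, it helps to work backwards from the per-stack bandwidth targets to the per-pin data rate each one implies. The sketch below does that. It assumes a 1024-bit interface for HBM3E and assumes HBM4E keeps HBM4's 2048-bit interface; neither width is given in the article, and the bandwidth values are the "over X" floor figures quoted above, so the results are lower bounds rather than specified pin speeds.

```python
def pin_rate_gtps(bandwidth_tbps: float, bus_width_bits: int) -> float:
    """Per-pin data rate (GT/s) needed to reach a given per-stack bandwidth."""
    bytes_per_second = bandwidth_tbps * 1e12
    bits_per_second = bytes_per_second * 8
    return bits_per_second / bus_width_bits / 1e9


# (bandwidth floor in TB/s from the roadmap, interface width in bits)
# ASSUMPTION: 1024-bit HBM3E interface; HBM4E reusing the 2048-bit interface.
generations = {
    "HBM3E": (1.2, 1024),
    "HBM4": (1.5, 2048),
    "HBM4E": (2.0, 2048),
}

for name, (bandwidth, width) in generations.items():
    rate = pin_rate_gtps(bandwidth, width)
    print(f"{name}: {bandwidth} TB/s over {width} bits needs about {rate:.2f} GT/s per pin")
```

Under these assumptions, HBM4 would reach its higher bandwidth at a lower per-pin rate than HBM3E, with the doubled interface width doing most of the work.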

HBM will remain the preserve of the most expensive and bandwidth-demanding applications. Cheaper products, such as AI accelerators for inference and graphics cards, will keep using GDDR. Based on Micron's roadmap, the next version of GDDR, GDDR7, is set to arrive by late 2024 with a data transfer rate of 32 GT/s (128 GB/s of bandwidth per device) and capacities of 16Gb to 24Gb. As GDDR7 matures, it will speed up to 36 GT/s (144 GB/s of bandwidth per device) and gain per-IC capacities higher than 24Gb (think 32Gb or 48Gb) sometime in late 2026.
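The per-device bandwidth figures can be checked with a short calculation. The sketch below assumes a 32-bit interface per GDDR7 device, which the article does not state but which reproduces both quoted numbers. It also converts the quoted densities from gigabits to gigabytes.

```python
DEVICE_WIDTH_BITS = 32  # ASSUMPTION: 32-bit interface per GDDR7 device


def device_bandwidth_gbs(rate_gtps: float, width_bits: int = DEVICE_WIDTH_BITS) -> float:
    """Peak bandwidth of one memory device in GB/s."""
    return rate_gtps * width_bits / 8


def density_gbytes(density_gbit: int) -> float:
    """Convert an IC density in gigabits to gigabytes."""
    return density_gbit / 8


for rate in (32, 36):
    print(f"{rate} GT/s -> {device_bandwidth_gbs(rate):.0f} GB/s per device")

for gbit in (16, 24, 32, 48):
    print(f"{gbit}Gb IC -> {density_gbytes(gbit):.0f} GB")
```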

Capacity

Servers need ever more memory capacity, and Micron's 128GB DDR5-8000 RDIMM is just the first product built on the company's 32Gb DRAM ICs. Expect more products based on these devices to follow.

To combine capacity and performance, Micron expects to offer 128GB to 256GB MCRDIMM modules with a data transfer rate of 8800 MT/s in 2025, followed by MRDIMMs with capacities of over 256GB and a data transfer rate of 12800 MT/s in 2026 or 2027.
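For a rough sense of what these transfer rates mean per module, the sketch below converts them to peak bandwidth and compares each step with the DDR5-8000 RDIMM. It assumes 64 data bits per module with ECC bits excluded, a width the article does not state, and it reports theoretical peaks rather than sustained throughput.

```python
DATA_WIDTH_BITS = 64  # ASSUMPTION: 64 data bits per module, ECC bits excluded
BASELINE_MTPS = 8000  # the DDR5-8000 RDIMM used as the reference point


def module_bandwidth_gbs(rate_mtps: int, width_bits: int = DATA_WIDTH_BITS) -> float:
    """Theoretical peak bandwidth of one module in GB/s."""
    return rate_mtps * width_bits / 8 / 1000


roadmap = {
    "DDR5-8000 RDIMM": 8000,
    "MCRDIMM (2025)": 8800,
    "MRDIMM (2026 or 2027)": 12800,
}

for name, rate in roadmap.items():
    uplift = rate / BASELINE_MTPS - 1
    print(f"{name}: {module_bandwidth_gbs(rate):.1f} GB/s peak, {uplift:+.0%} vs. DDR5-8000")
```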

For machines that need further memory expansion, Micron is poised to ship CXL 2.0 expanders with capacities of 128GB to 256GB and up to 36 GB/s of bandwidth over a PCIe interface. These will be followed by CXL 3.x-compliant expanders with over 72 GB/s of bandwidth and capacities of over 256GB.

Low Power

For low-power applications, the industry will keep using LPDDR memory. Based on Micron's roadmap, LPDDR5X with data transfer rates of 8533 MT/s or 9600 MT/s will stay with us for a while. Meanwhile, for laptops and other applications that benefit from modular memory, Micron is set to offer 16GB to 128GB LPDDR5X-8533 LPCAMM2 modules starting in 2025, followed by LPDDR5X-9600 LPCAMM2 modules with capacities of 192GB and higher starting in mid-2026.