Micron, Samsung, and SK hynix have introduced their small outline compression attached memory modules (SOCAMM), which use LPDDR5X memory and are aimed at AI and low-power servers. SOCAMMs, which will first be used in servers based on Nvidia's GB300 Grace Blackwell Ultra Superchip, are designed to combine high capacity, high performance, a small footprint, and low power consumption.

A SOCAMM measures 14x90mm (one-third the size of a traditional RDIMM) and carries up to four 16-die LPDDR5X memory stacks. Micron's initial SOCAMM modules will offer a capacity of 128GB and will rely on the company's LPDDR5X memory devices produced on its 1β (1-beta, 5th Generation 10nm-class) DRAM process technology. Micron does not disclose the data transfer rates supported by its initial SOCAMM sticks but says that their memory is rated for up to 9.6 GT/s. Meanwhile, the SK hynix SOCAMM demonstrated at GTC 2025 is rated for up to 7.5 GT/s.
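To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It derives the per-die capacity implied by a 128GB module built from four 16-die stacks, and shows how a peak bandwidth figure would follow from a transfer rate. The function names are made up for illustration, and the 128-bit bus width in the usage lines is a placeholder assumption: the module's bus width is not stated here.

```python
# Figures quoted in the article.
STACKS_PER_MODULE = 4
DIES_PER_STACK = 16
MODULE_CAPACITY_GB = 128


def implied_die_capacity_gbit(capacity_gb: float, stacks: int, dies_per_stack: int) -> float:
    """Per-die capacity in gigabits, assuming capacity is split evenly across all dies."""
    total_dies = stacks * dies_per_stack
    return capacity_gb * 8 / total_dies  # 8 bits per byte


def peak_bandwidth_gb_per_s(transfer_rate_gts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth: transfers per second times bytes moved per transfer."""
    return transfer_rate_gts * bus_width_bits / 8


die_gbit = implied_die_capacity_gbit(MODULE_CAPACITY_GB, STACKS_PER_MODULE, DIES_PER_STACK)
print(f"Implied die capacity: {die_gbit:g} Gbit")

# ASSUMPTION: 128 bits is a placeholder bus width, not a figure from the article.
ASSUMED_BUS_WIDTH_BITS = 128
for vendor, rate_gts in (("Micron (memory rating)", 9.6), ("SK hynix (GTC 2025 demo)", 7.5)):
    bandwidth = peak_bandwidth_gb_per_s(rate_gts, ASSUMED_BUS_WIDTH_BITS)
    print(f"{vendor}: {bandwidth:g} GB/s peak at {rate_gts} GT/s")
```

Under the even-split assumption, the quoted configuration works out to 64 dies of 16 Gbit each. The bandwidth numbers scale linearly with whatever bus width is actually used, so treat them as illustrative only.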

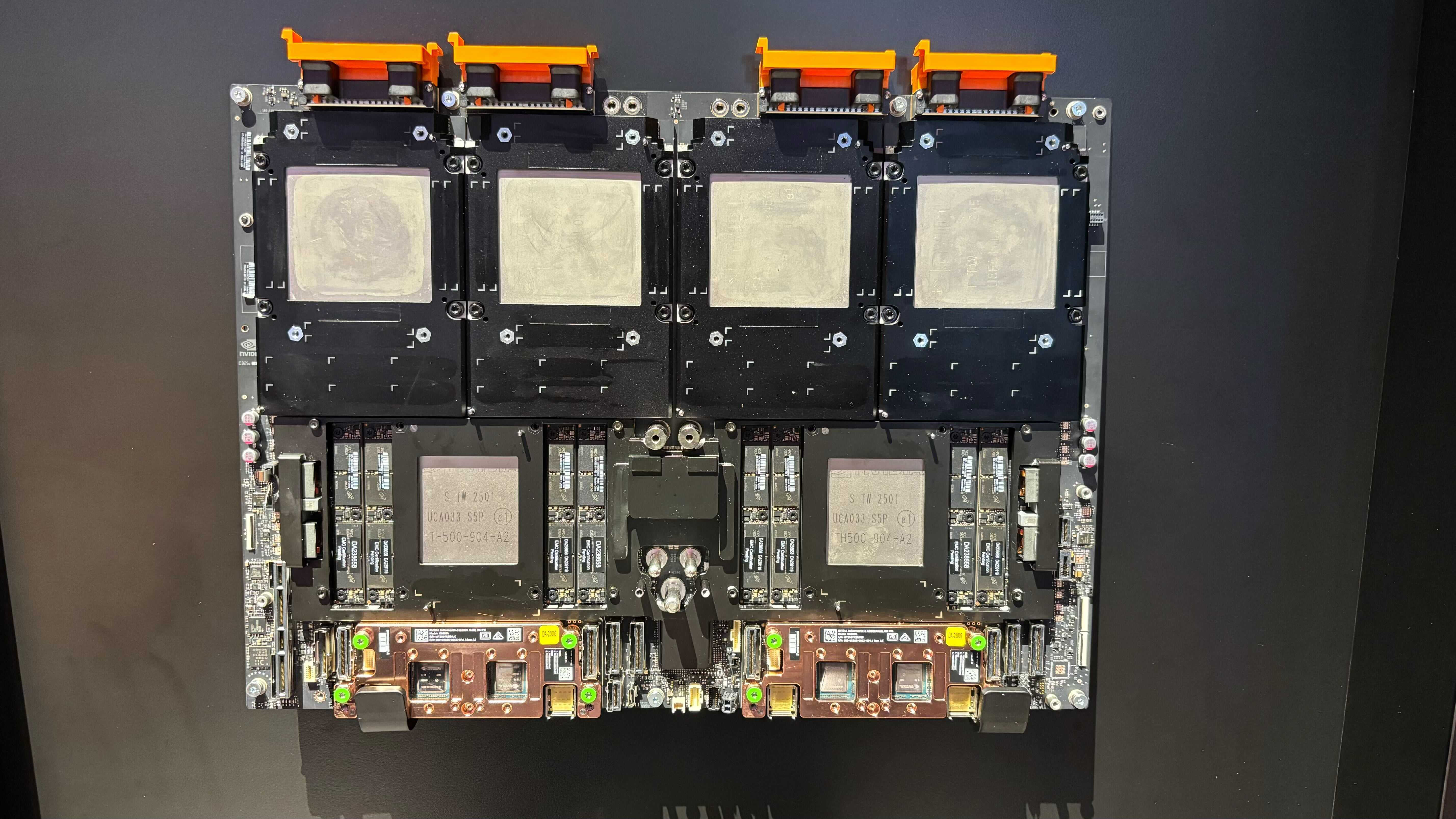

Memory accounts for a sizeable share of server power consumption. For example, the power consumption of DRAM in servers equipped with terabytes of DDR5 memory per socket exceeds that of CPUs. Nvidia designed its Grace CPUs around LPDDR5X memory, which consumes less power than DDR5, but implemented a wide memory bus (taking a page from datacenter-grade processors from AMD and Intel) to offer high memory bandwidth. However, with GB200 Grace Blackwell-based machines, Nvidia had to use soldered-down LPDDR5X memory packages, as no standard LPDDR5X memory modules could meet its capacity requirements.

Micron's SOCAMM changes this by offering a modular solution that can house four 16-die LPDDR5X memory stacks, which allows for very high capacity per module. Micron says that its 128GB SOCAMMs consume one-third of the power of a 128GB DDR5 RDIMM, a significant saving. Unfortunately, it is unclear whether SOCAMMs will ever become an industry standard supported by JEDEC or will remain a proprietary solution developed by Micron, Samsung, SK hynix, and Nvidia for servers running Grace and Vera CPUs.

Micron's SOCAMM sticks are already in mass production, so expect systems based on Nvidia's GB300 Grace Blackwell Ultra Superchip to use this memory. Modular memory simplifies server production and maintenance, which should help lower the prices of these devices.

"AI is driving a paradigm shift in computing, and memory is at the heart of this evolution," said Raj Narasimhan, senior vice president and general manager of Micron's Compute and Networking Business Unit. "Micron's contributions to the Nvidia Grace Blackwell platform yields significant performance and power-saving benefits for AI training and inference applications. HBM and LPDDR memory solutions help unlock improved computational capabilities for GPUs."