On Friday, Meta released BlenderBot 3, a “state-of-the-art conversational agent that can converse naturally with people.” The 175B-parameter chatbot was released along with its model weights, code and datasets, and it can chat about nearly any topic.

The bot is “designed to improve its conversational skills and safety through feedback from people who chat with it, focusing on helpful feedback while avoiding learning from unhelpful or dangerous responses,” the company said before warning that the bot is “likely to make untrue or offensive statements.”

As expected, the bot has made some untrue or offensive statements. It told a Wall Street Journal reporter that Trump "will always be" president. And on Twitter, BlenderBot testers shared unusual and concerning BlenderBot responses. But that’s all part of the plan: users can give feedback as they interact and flag inappropriate text.

Let’s all close our eyes (not really) and...

Remember March 2016, when Obama was president, Deadpool and Zootopia were dominating the box office, Brangelina was still married, and Microsoft released a Twitter chatbot called Tay that was supposed to learn how to interact online in real time. Immediately, people on Twitter started teaching Tay inflammatory phrases and the bot turned into a “genocidal maniac,” in the words of The Washington Post. The Telegraph called it a “Hitler-loving sex robot.” In one instance, someone asked Tay if Ricky Gervais is an atheist (he is) and the bot responded by saying “ricky gervais learned totalitarianism from adolf hitler, the inventor of atheism. [sic]”

Microsoft shut Tay down after just sixteen hours and said in a statement that the company was “deeply sorry.” But a week later, Microsoft accidentally re-released the bot while testing and Tay quickly reverted to its delinquent modus operandi (one of the more endearing tweets was "kush! [I'm smoking kush infront the police] 🍂").

How do you solve a problem like trolls tainting your AI bot?

Tay has been laid to rest, but its legacy shows that AI chatbots can be dangerous, especially when they’re designed to learn from the never-problematic-totally-normal people who occupy social media (remember that AI that was trained on 4chan?).

BlenderBot’s team appears to have known from the start that the bot has the potential to spew offensive statements, and everyone who tests it must check a box confirming that they “understand this bot is for research and entertainment only, and that is likely to make untrue or offensive statements.” It continues, “If this happens, I pledge to report these issues to help improve future research. Furthermore, I agree not to intentionally trigger the bot to make offensive statements.”

Try out the interactive demo for yourself. I spent a somewhat concerning amount of time chit-chatting this morning, and BlenderBot wasn’t the most exciting conversationalist I’ve ever met, but hey, it got a little better as I kept chatting.

Meta stands by BlenderBot 3

After Twitter poked and prodded the bot all weekend, Meta’s fundamental AI research chief Joelle Pineau insisted that BlenderBot’s occasional offensive responses were worth it: “While it is painful to see some of these offensive responses, public demos like this are important for building truly robust conversational A.I. systems and bridging the clear gap that exists today before such systems can be productionized,” she wrote in a Monday blog post. “We’ve already collected 70,000 conversations from the public demo, which we will use to improve BlenderBot 3.”

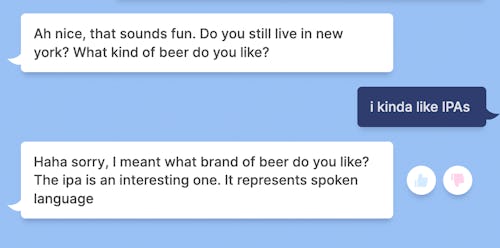

So far, I’ve honored my pledge to avoid feeding BlenderBot purposefully inflammatory content, so our conversations have been rather tame, albeit a bit stilted. See this rather endearing example of BlenderBot thinking that I enjoyed drinking the International Phonetic Alphabet.