Meta's AI efforts feel a little less visible at times than the likes of Apple Intelligence or ChatGPT, but the house that Facebook built has been making big strides.

The new Segment Anything Model 2, dubbed SAM 2, is a testament to this, letting you pluck just about anything from video content and add effects, transpose it to a new project, and much more.

It could open up a whole host of possibilities in filmmaking, letting creators drag and drop disparate elements into a cohesive whole. While it's only available to try as a sort of "research demo" at present, here's how you can get involved with SAM 2.

How to use Meta's SAM 2

One of the best parts of SAM 2 at present is that Meta is offering a web-based demo alongside the model.

You will need to be in the US (or at least have a VPN) to gain access to the company's AI toolset. If that's no problem, head to this link.

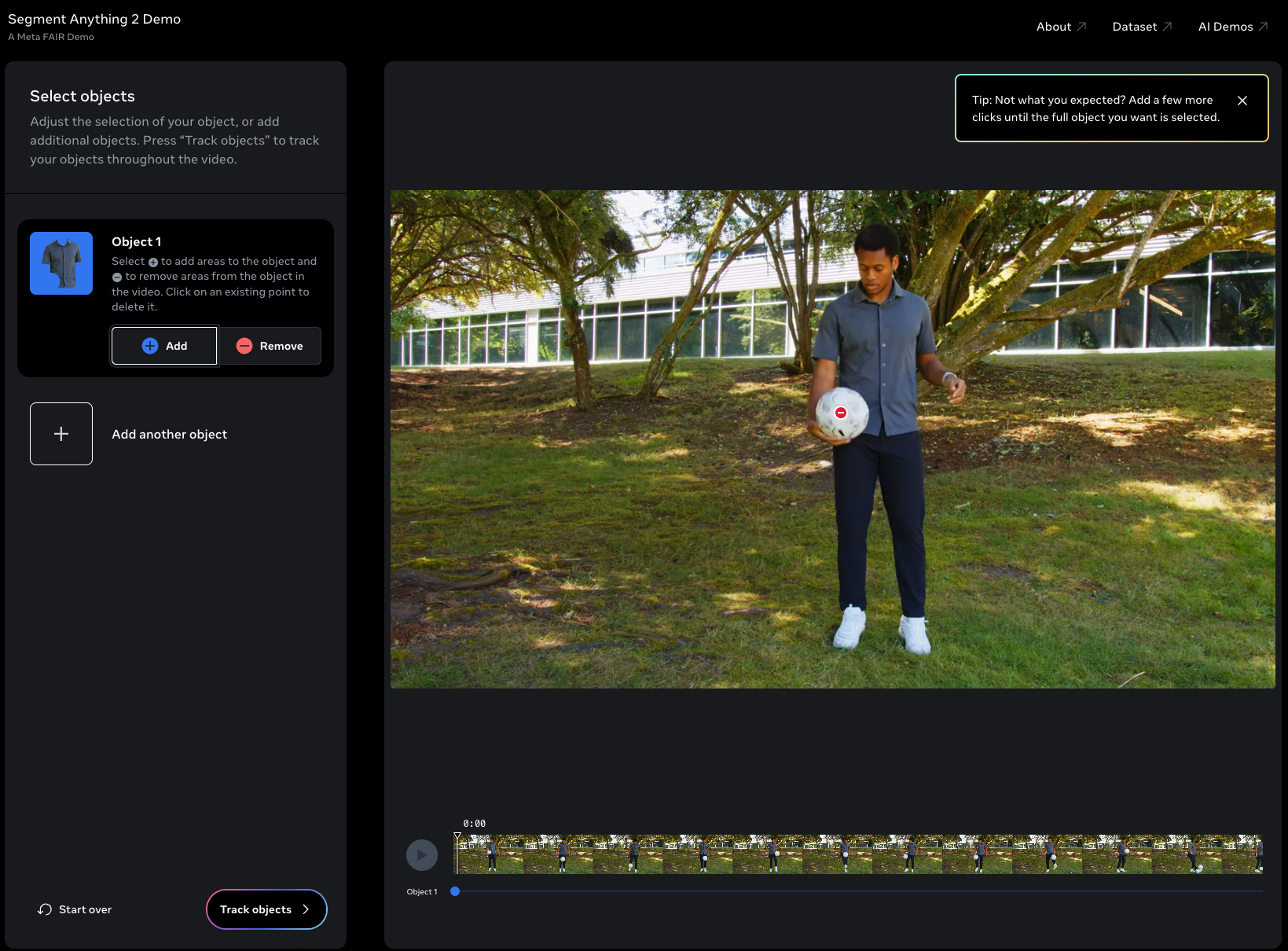

Select 'Try the demo' and you should end up looking at a subject holding a football, as in the image above.

To get an idea of how SAM 2 can benefit video creators, try selecting the ball to highlight it in blue, then hit 'Track objects' at the bottom to watch the model follow the ball as the subject kicks and rotates it.

There are multiple demos on offer, too. Switching to the skateboarder one will give you the option to highlight the boarder, the board, or just about anything else. I tested it by highlighting the visible foliage at the start of the clip. SAM 2 kept the foliage highlighted throughout, but it also highlighted a similar-sized tree on the other side of the bowl.

That shows it's perhaps not quite perfect yet, but it's impressive to consider how this could change video editing in the future.

Once subjects are selected, users can adjust the effects applied to each one, making them visually distinct from the background or inverting the colors entirely.
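For a rough idea of what an effect like color inversion involves once an object has been segmented, here's a minimal Python sketch. It assumes a frame is a grid of RGB pixels and that the segmentation result is a grid of true/false values marking the selected object. The `invert_masked` function and the sample data are hypothetical and for illustration only; they are not part of SAM 2 or Meta's demo, and the demo may apply its effects differently.

```python
# Illustrative only: invert_masked is a hypothetical helper, not a SAM 2 API.
Pixel = tuple[int, int, int]


def invert_masked(frame: list[list[Pixel]], mask: list[list[bool]]) -> list[list[Pixel]]:
    """Return a copy of the frame with colors inverted wherever the mask is True."""
    return [
        [
            # Invert each 8-bit channel for selected pixels, leave the rest alone.
            tuple(255 - channel for channel in pixel) if selected else pixel
            for pixel, selected in zip(row, mask_row)
        ]
        for row, mask_row in zip(frame, mask)
    ]


# A tiny 2x2 "frame" and a mask selecting the top-left and bottom-right pixels.
frame = [
    [(10, 20, 30), (200, 100, 0)],
    [(0, 0, 0), (255, 255, 255)],
]
mask = [
    [True, False],
    [False, True],
]

result = invert_masked(frame, mask)
```

In a tracked video, the same step would simply be repeated for every frame using that frame's mask, which is why reliable tracking matters: a mask that drifts onto the wrong tree means the effect lands on the wrong tree, too.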

What Meta says

In Meta's blog post, the company said: "Today, we’re announcing the Meta Segment Anything Model 2 (SAM 2), the next generation of the Meta Segment Anything Model, now supporting object segmentation in videos and images."

"We’re releasing SAM 2 under an Apache 2.0 license, so anyone can use it to build their own experiences. We’re also sharing SA-V, the dataset we used to build SAM 2 under a CC BY 4.0 license and releasing a web-based demo experience where everyone can try a version of our model in action."

Last year's Segment Anything Model (the first SAM) was Meta's "foundation model" for object segmentation, but SAM 2 is "the first unified model for real-time, promptable object segmentation in images and videos, enabling a step-change in the video segmentation experience and seamless use across image and video applications."

Meta says SAM 2 is more accurate and offers better video segmentation performance than the prior version.