While Meta’s most recognizable hardware is its Quest VR headsets, its smart glasses created in collaboration with Ray-Ban are proving to be popular thanks to their sleek design and unique AI tools – tools that are getting an upgrade to turn them into a wearable tourist guide.

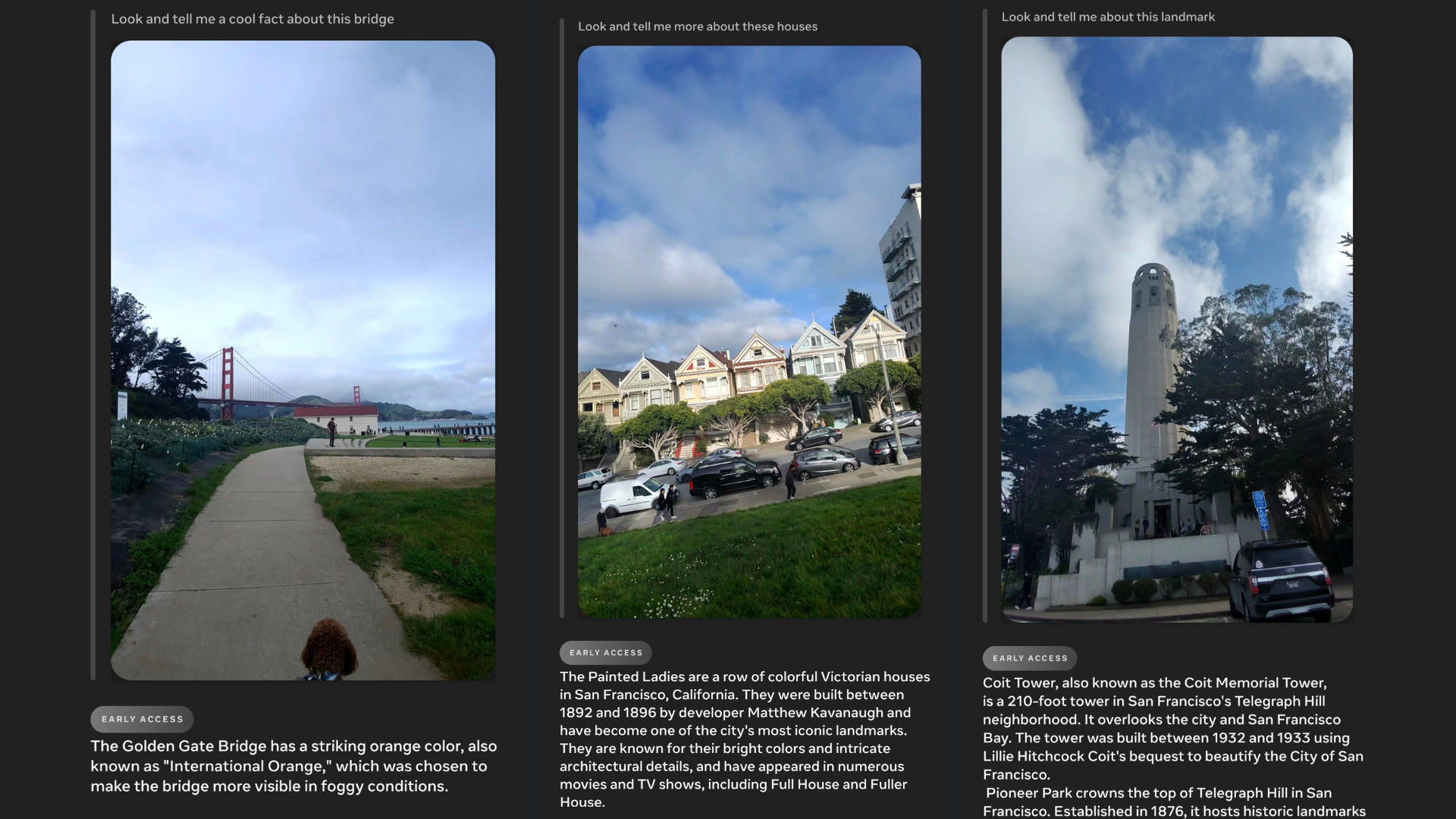

In a post on Threads – Meta’s Twitter-like Instagram spinoff – Meta CTO Andrew Bosworth showed off a new Look and Ask feature that can recognize landmarks and tell you facts about them. Bosworth demonstrated it using examples from San Francisco such as the Golden Gate Bridge, the Painted Ladies, and Coit Tower.

As with other Look and Ask prompts, you give a command like “Look and tell me a cool fact about this bridge.” The Ray-Ban Meta Smart Glasses then use their in-built camera to scan the scene in front of you and cross-reference the image with info in Meta AI’s knowledge database (which includes access to the Bing search engine).

The specs then respond with the cool fact you requested – in this case explaining that the Golden Gate Bridge (which it recognized in the photo it took) is painted “International Orange” so that it is more visible in foggy conditions.

Bosworth added in a follow-up message that other improvements are being rolled out, including new voice commands so you can share your latest Meta AI interaction on WhatsApp and Messenger.

Down the line, Bosworth says you’ll also be able to change the speed of Meta AI readouts in the voice settings menu to have them go faster or slower.

Still not for everyone

One huge caveat is that – much like the glasses’ other Look and Ask AI features – this new landmark recognition feature is still only in beta. As such, it might not always be the most accurate – so take its tourist guidance with a pinch of salt.

The good news is that Meta has at least opened up its waitlist to join the beta so more of us can try these experimental features. Go to the official page, input your glasses’ serial number, and wait to be contacted – though this option is only available if you’re based in the US.

In his post, Bosworth did say that the team is working to “make this available to more people,” but neither he nor Meta has given a precise timeline for when the impressive AI features will be more widely available.