I didn’t want to love it. My plan for my first Meta Orion AR glasses experience was to dispassionately try them and quickly identify the highlights, shortcomings, and pitfalls of this not-ready-for-primetime mixed reality product. But I can’t do that because I enjoyed every bit of it, almost to the point of giddiness.

Let’s back up for a minute.

Meta Orion AR glasses made their global debut just weeks ago at the Meta Connect Conference on the face of company founder and CEO Mark Zuckerberg. The live demo raised eyebrows for its build, image quality, eye and gesture tracking, and smart Meta AI integration.

Orion looked like everything Google once hoped Google Glass could be and, honestly, everything Apple Vision Pro should be today.

I read some of the early hands-on reports with interest and some jealousy. I’ve been trying AR, VR, and mixed-reality headsets and smart glasses for well over a decade, and I sensed a tipping point in Orion. Even so, I assumed it couldn’t be that good. If Meta wasn’t ready to release it to the public, Orion had to be more experiment than product, and the utility would be limited at best.

Meta invited me to its New York headquarters this week to wear and try Orion for myself. It has felt like a long road to this moment since I first heard of the glasses, but to be fair, Meta has traveled much farther.

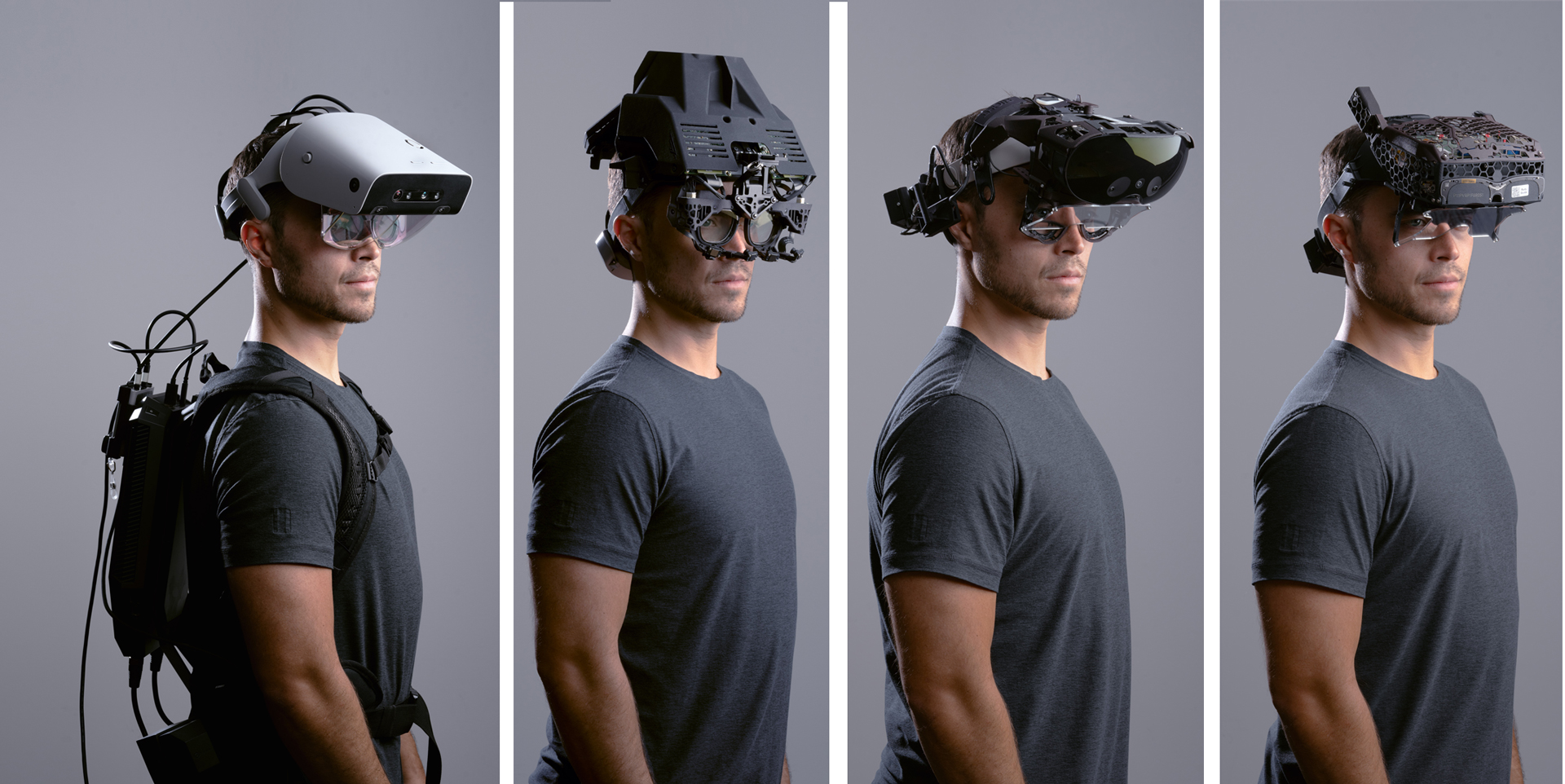

The Orion project started inauspiciously five years ago with a massive headset that was attached to a heavy backpack (see the progression below). Meta VP of Wearable Devices Ming Hua, who is also leading the Orion team, told me the joke at the time was that you had to be very strong to work on AI glasses.

Meta worked quickly in the intervening years to shrink the system. By 2022, the project, then known as Balboa, was a torn-down Quest Pro. It was only marginally smaller in 2023. It is almost miraculous that Orion made the leap from this massive and unwieldy headgear to a pair of almost fashionable magnesium glasses that weigh just under 100 grams.

Figuring out how to shrink components, which often seems to involve building custom silicon and then spreading it throughout the body of Orion, is an achievement in and of itself. I saw an image of a see-through version of Orion, which showed virtually every square millimeter filled with circuitry, silicon, and components. Even so, Meta has yet to fit everything inside the frames.

Some supporting custom silicon lives in the wireless puck. While Meta has experimented with additional puck features like a six-degrees-of-freedom thumb control and some cameras, during my demo, the puck sits nearby like a silent partner.

There are enough sensors built into the glasses to track eye and head movement, but Meta went a different route for Orion input. It developed an electromyography (EMG) band that reads subtle electrical signals produced in the wrist when you perform various hand gestures. I’ve used EMG bands in the past, and they were bulky and uncomfortable. Orion’s companion band is not much thicker than a sweatband and no less comfortable.

Orion’s defining feature, though, may be its display system. The lenses appear mostly clear, but there’s a complex interplay of tiny micro-LED projectors, silicon carbide, and waveguide etching that creates a remarkably seamless AR experience.

The projectors live in the frame, sitting in the stems right next to the lenses, which have a thin coating of silicon carbide, chosen for its high refractive index and low image ghosting. The waveguide technology involves fine etchings on the silicon carbide surface that guide the light and imagery across the lens surfaces for a wider-than-it-sounds 70-degree field of view. The resolution ends up being 13 pixels per degree (ppd).

The specs are fascinating, but the experience is what convinced me. I just got a glimpse of our AR glasses' future.

Wearing tomorrow

The first thing I noticed when they handed me the black Orion frames was that they were much lighter than expected. Yes, they are a lot thicker than, say, my glasses, but they’re not actually much bigger.

When I put them on for the first time, they don’t feel much different than when I’m trying to pick out a new pair of glasses. The only adjustment Meta’s team insists on is finding the right nose piece. I think this has a lot to do with making sure the cameras looking at my eyes for eye tracking are in the right place. I recall Ming Hua mentioning to me that the frames use magnesium for its lightness, but also for rigidity because even a hair-width shift in the frame could set the dual displays off-axis and result in double AR vision.

Owing to this being a work in progress, some of my demos are driven and managed by a guy behind a computer. He mostly troubleshoots and reboots from afar; otherwise, I get to drive Orion.

In addition to the smart glasses, I’m fitted with an EMG band. It’s positioned high on my wrist and tight enough that it can pick up my finger movements and that I can feel the haptic feedback confirming I’ve successfully transmitted a gesture instruction.

The setup and calibration are quite similar to Apple Vision Pro in that the system asks me to, using only my eyes, look at a series of floating dots that appear in a circle in front of me. I notice right away that it doesn’t seem to matter where I look, I can always see Orion’s AR display. Later, I learn how to find the edges of the 70-degree FOV, but it takes effort, and in normal wear and activity, the visuals fill my view (without ever blocking it).

The system also trains me on the few gestures I’ll use to access and control menus and engage with apps. Mostly, it’s a pinch of the index finger and thumb or a pinch with my middle finger and thumb. I had less success with a flicking gesture that was supposed to let me scroll. Throughout my lengthy demo, the EMG band slowly slips down my wrist and eventually hangs like a bracelet. Naturally, this means it can't read any of my gestures. If I keep it high and tight on the wrist and use slightly assertive gestures, it works consistently.

Home base

The home menu also reminds me of Vision Pro, and I reflexively pinch and grab a bar below it to bring the interface closer to me. Throughout the demo, I can't wear my glasses, so some of the visuals are a little blurry, which is why I pull them closer. I wonder how much better the experience would be if I owned or had ever worn a pair of contacts.

I have several core Orion experiences, and each one is impressive in its own way.

When Ming Hua calls, and after I look at the call button and pinch to accept, her video call pops up in front of my face, looking much like a floating 42-inch screen. When I get up and move around, the screen stays in place. I can even look at it from the side. It really looks like part of my real world. I can use the home gesture to pull the interface in front of me. Not only is the video of decent quality, but the spatial audio, which sits near my ears, is clear. The six microphones mean I can talk in a normal tone of voice, and Hua can hear me perfectly.

I stand up and walk over to a collection of ingredients, including a pineapple, cacao, bananas, whey, dates, and coconut flakes. There is a gesture to enable Meta AI, but it never works for me, so I look at the Meta AI icon and tap my fingers to access it that way.

Then I say, “Give me a recipe.” Meta AI understands this to mean that it should look at the ingredients in front of it and come up with a recipe. It’s something we do all the time without the assistance of AI.

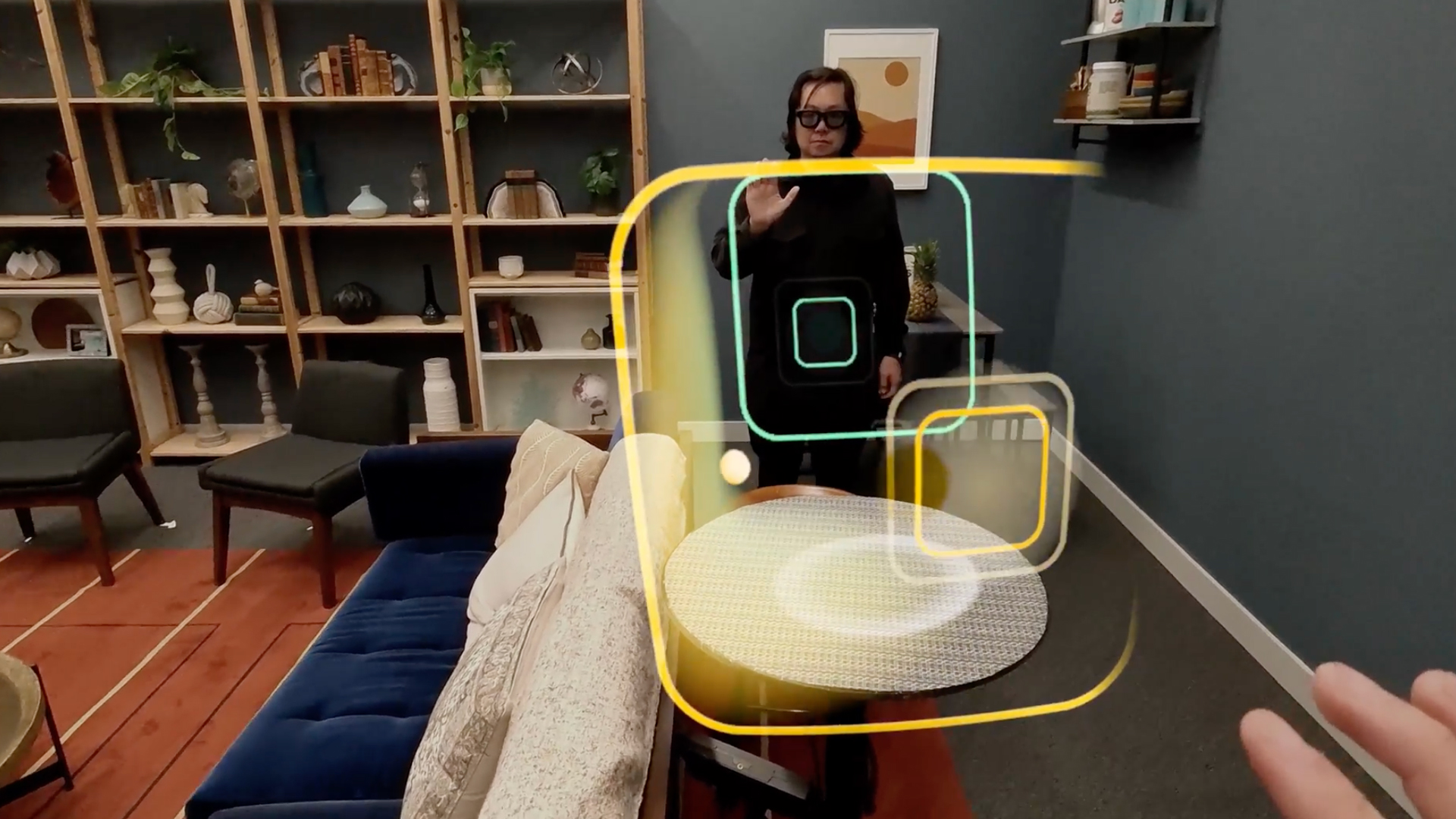

In this instance, though, I watch as Orion identifies all the ingredients, putting little labels on each one. The 3D mapping here is spot on. Within a few moments, I have a usable recipe that I can page through. When I remove an ingredient from the table and ask for another recipe, Meta AI adjusts and gives me a coconut-encrusted banana. Yummy.

I open Instagram, which is naturally part of the main menu, and then use a pinch and sweep-up gesture to scroll. The flick gesture doesn't work here, either. Someone messages me in another window, and when a call comes in, I look up at the call answer option, pinch my fingers, and a third window appears with a slightly unsettling-looking VR avatar of the caller. He is wearing a Quest 3S headset on his end but says that, in the future, it will be two people wearing Orion glasses talking to each other through their VR avatars.

As we talk, I look around at the three screens. It’s hard to assess the image quality at the edges because, well, I’m not looking there. When I do, everything is in focus. Hua told me the system not only tracks your gaze but uses something called pupil replication, which ensures that wherever you’re looking, you still see the same image.

Put another way, the image doesn’t distort if you look at it incorrectly. I think this is what helps with the casually immersive feel of the Orion experience. I have none-too-fond memories of using Google Glass and always having to glance up and to the right to see the tiny screen. If the headset wasn’t on right, things looked weird.

Orion can play games. My first experience is a space game where I steer a ship with my gaze and head movements and shoot by tapping my thumb and index finger. The game fills my field of view and is so entertaining I play all five levels and end up with a respectable 6,000+ score.

My only complaint is that I keep doing something to dump myself out of the game and into the Meta AI. I don’t know why it's happening, but I quickly return to gameplay by using the home gesture.

After that, we play a multiplayer game. My opponent wears a pair of Orion glasses and stands 8 feet away from me as we play virtual ping pong. I move my virtual paddle with my open palm and bounce the translucent green ball off the walls and into his space. I don't fare as well here, though, and lose both rounds. After we play, they encourage me to step around the play area to see it from the side. My losing playfield is aglow; I reach out to touch…nothing.

I’ve had some concerns about the viability of Orion, especially after I heard that Meta thought they might not be able to source and scale up the manufacture of enough silicon carbide to support mass distribution. Hua, though, seemed less sure that is the case, telling me they’d had many people in the silicon carbide manufacturing industry reach out after the reveal. The material remains very much on the list of display technology possibilities.

That’s good news because I have a sense that silicon carbide plays a significant role in the wide and relatively immersive field of view. Interestingly, the base experience I just had turns out to be only one step on the way to even better Orion smart glasses in the future.

The screen resolution of the set I wore is 13ppd, but Meta already has a pair of Orion prototypes running at 26ppd, double the resolution of the first frames. They had the pair in the room and offered to let me try them out.
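For a rough sense of what that jump means, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that the 70-degree field of view is measured along a single axis and that pixel density is uniform across it; Meta did not share panel dimensions with me, and the function names are my own.

```python
def pixels_across(fov_degrees: float, ppd: float) -> int:
    """Approximate pixel count along one axis: field of view times pixels per degree."""
    return round(fov_degrees * ppd)


def area_density_ratio(new_ppd: float, old_ppd: float) -> float:
    """How many times more pixels cover the same patch of view (density scales in two dimensions)."""
    return (new_ppd / old_ppd) ** 2


FOV_DEGREES = 70  # figure quoted for Orion; treated here as a single-axis value (assumption)

for ppd in (13, 26):
    print(f"{ppd} ppd -> roughly {pixels_across(FOV_DEGREES, ppd)} pixels across {FOV_DEGREES} degrees")

print(f"Pixels per patch of view: {area_density_ratio(26, 13):.0f}x")
```

Under those assumptions, doubling the ppd doubles the pixels along each axis, which means about four times as many pixels covering the same slice of my view.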

In one of my earlier demos, I’d opened a browser window and watched a clip from the recent Mets versus Phillies MLB division series. It looked pretty good. Now, though, on the 26ppd Orion smart glasses, there was a noticeable difference in image quality. The players’ faces and the ballpark were all clearly sharper.

I realized that while everyone is talking about wearing the future, Meta is moving apace, quickly swapping and trying new components and features. Orion smart glasses that are thinner, lighter, more efficient, with a better resolution, and that cost as much (or as little) as a high-end smartphone might arrive much sooner than we think.

When I finally took off the Orion AR smart glasses after almost an hour of wearing them (they finally asked to recharge them), I was struck by how no part of my face ached. I loved my Vision Pro and Quest 3S experiences, but after 15 minutes, you know you’re wearing a pound of silicon and plastic on your face. Orion felt like glasses, very, very smart and AR-capable glasses.

What if the future is almost now?
