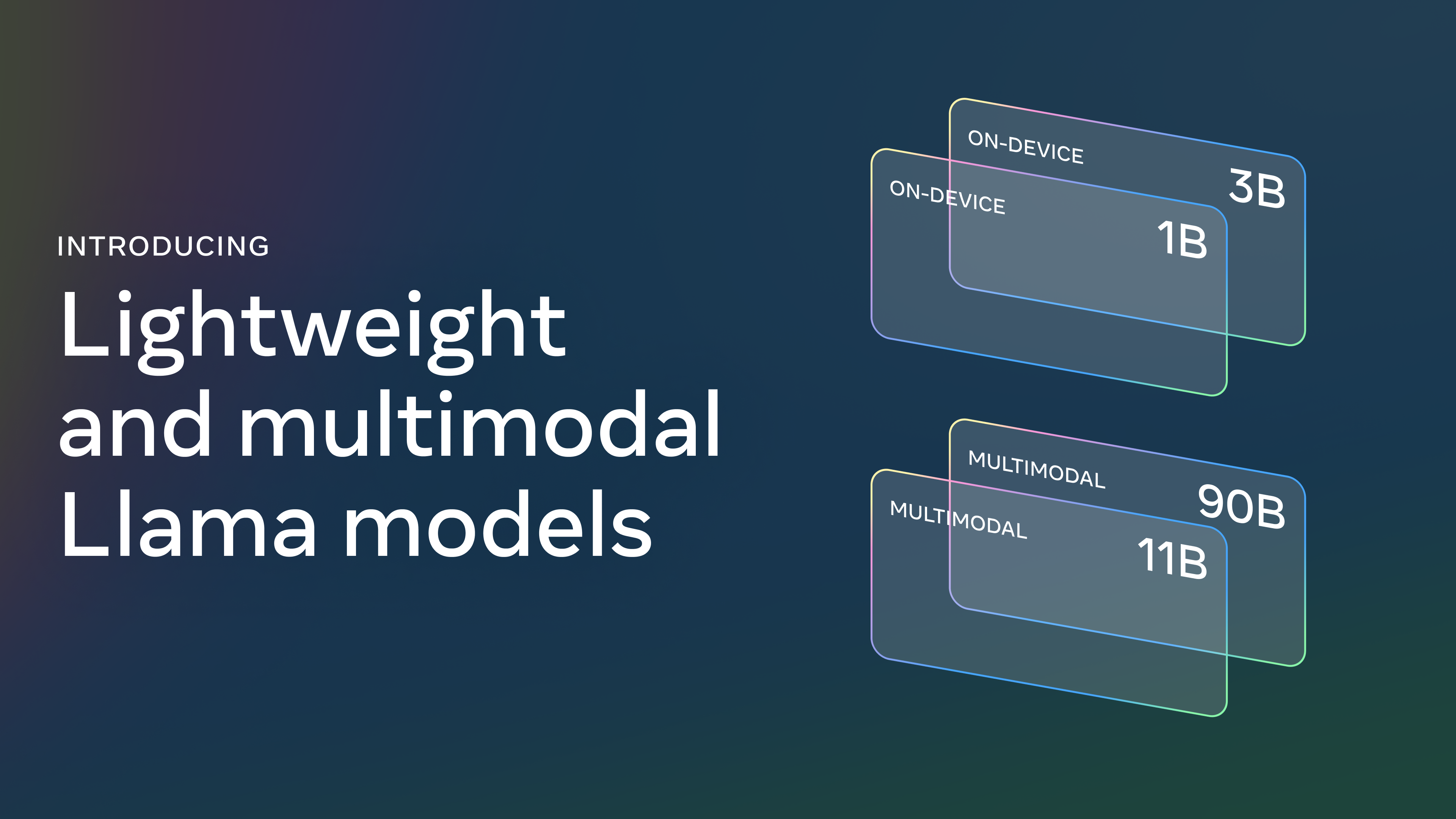

Meta has just dropped a new version of its Llama family of large language models. The updated Llama 3.2 introduces multimodality, enabling it to understand images in addition to text. It also brings two new ‘tiny’ models into the family.

Llama is significant not necessarily because it's more powerful than models from OpenAI or Google, although it does give them a run for their money, but because it's open source and relatively easy for nearly anyone to access.

The update introduces four different model sizes. The 1-billion-parameter model runs comfortably on an M3 MacBook Air with 8GB of RAM, while the 3-billion-parameter model also works, but just barely. Both are text-only, but they can run offline and on a wider range of devices.

The real breakthrough, though, is with the 11b and 90b parameter versions of Llama 3.2. These are the first true multimodal Llama models, optimized for hardware and privacy and far more efficient than their 3.1 predecessors. The 11b model could even run on a good gaming laptop.
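A rough back-of-the-envelope calculation shows why the smaller models fit on a laptop and the larger ones need more serious hardware. The sketch below estimates the memory needed for the weights alone, using the nominal parameter counts in the model names. The function name `weight_memory_gb` and the choice of 16-bit and 4-bit precision are illustrative assumptions, and real usage is higher once the context cache and runtime overhead are included.

```python
# Nominal parameter counts taken from the model names (an assumption;
# the actual checkpoints differ slightly from these round numbers).
NOMINAL_PARAMS = {"1b": 1e9, "3b": 3e9, "11b": 11e9, "90b": 90e9}


def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def footprint_table(bits_per_param):
    """Map each model size to its estimated weight memory at a given precision."""
    return {
        name: weight_memory_gb(count, bits_per_param)
        for name, count in NOMINAL_PARAMS.items()
    }


for bits in (16, 4):
    for name, gigabytes in footprint_table(bits).items():
        print(f"{name:>3} at {bits:>2}-bit: ~{gigabytes:g} GB")
```

At 16 bits per parameter the 1b model's weights come to about 2 GB and the 3b model's to about 6 GB, which is consistent with the 3b model only just fitting in 8GB of RAM. Lower-precision (quantized) weights shrink those figures proportionally.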

What makes Llama such a big deal?

Llama's wide availability, state-of-the-art capability, and adaptability set it apart. It powers Meta’s AI chatbot across Instagram, WhatsApp, Facebook, Ray-Ban smart glasses, and Quest headsets, but it is also available on public cloud services, and users can download and run it locally or even integrate it into third-party products.

Groq, the ultra-fast cloud inference service, is one example of why having an open-source model is a powerful choice. I built a simple tool to summarize an AI research paper using Llama 3.1 70b running on Groq, and it completed the summary faster than I could read the title.

Some open-source libraries let you create a ChatGPT-like interface on your Mac powered by Llama 3.2 or other models, including the image analysis capabilities if you have enough RAM. However, I took it a step further and built my own Python chatbot that queries the Ollama API, enabling me to run these models directly in the terminal.
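For readers who want to try something similar, here is a minimal sketch of a terminal chatbot that talks to a local Ollama server. It is not the script described above. It assumes Ollama is running on its default port (11434), that a model tagged `llama3.2` has already been pulled, and that the non-streaming `/api/chat` endpoint is used. The helper names (`build_payload`, `post_json`, `chat_turn`, `repl`) are hypothetical.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default port, with the model
# already pulled (for example via `ollama pull llama3.2`).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL = "llama3.2"


def build_payload(model, messages):
    """Build the JSON body for a non-streaming /api/chat request."""
    return {"model": model, "messages": messages, "stream": False}


def post_json(url, payload):
    """POST a JSON payload and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def chat_turn(history, user_text, send=post_json):
    """Append the user's message, query the model, and record its reply."""
    history.append({"role": "user", "content": user_text})
    reply = send(OLLAMA_CHAT_URL, build_payload(MODEL, history))
    content = reply["message"]["content"]
    history.append({"role": "assistant", "content": content})
    return content


def repl():
    """Minimal terminal loop; an empty line or Ctrl-D exits."""
    history = []
    while True:
        try:
            user_text = input("you> ").strip()
        except EOFError:
            break
        if not user_text:
            break
        print("llama>", chat_turn(history, user_text))
```

Calling `repl()` starts the chat loop. Keeping the whole `history` list in each request is what gives the model memory of earlier turns, and the `send` parameter makes it easy to swap in a different transport or a stub for testing.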

Use cases for Llama 3.2

A big part of Llama 3.2's appeal is its potential to transform how AI interacts with its environment, especially in areas like gaming and augmented reality. The multimodal capabilities mean Llama 3.2 can both "see" and "understand" visual inputs alongside text, opening up possibilities like dynamic, AI-powered NPCs in video games.

Imagine a game where NPCs aren't just following pre-scripted dialogue but can perceive the game world in real-time, responding intelligently to player actions and the environment. For example, a guard NPC could "see" the player holding a specific weapon and comment on it, or an AI companion might react to a change in the game's surroundings, such as the sudden appearance of a threat, in a nuanced and conversational way.

Beyond gaming, this technology can be used in smart devices like the Ray-Ban smart glasses and Quest headsets. Imagine pointing your glasses at a building and asking the AI for architectural history or details about a restaurant’s menu just by looking at it.

These use cases are exciting because Llama’s open-source nature means developers can customize and scale these models for countless innovative applications, from education to healthcare, where AI could assist visually impaired users by describing their environment.

Outside of using the models as built by Meta, being open-source means companies, organizations, and even governments can create their own customized and fine-tuned versions of the models. This is already happening in India to save near-extinct languages.

How Meta Llama 3.2 compares

Llama 3.2 11b and 90b are competitive with smaller models such as Anthropic's Claude 3 Haiku and OpenAI's GPT-4o-mini on image recognition and similar visual tasks. The 3b version is competitive with similar-sized models from Google and Microsoft, including Gemini and Phi 3.5-mini, across 150 benchmarks.

While not a direct benchmark, in my own tests the 1b model's analysis of my writing and its suggested improvements were roughly on par with Apple Intelligence writing tools, just without the handy context menu access.

The two vision models, 11b and 90b, can perform many of the same functions I've seen from ChatGPT and Gemini. For example, you can give them a photograph of your garden, and they can suggest improvements or even a planting schedule.

As I've said before, the performance, while good, isn't the most significant selling point for Llama 3.2; the real draw is how accessible and customizable it is for a range of use cases.