ChatGPT might’ve got all the attention when it launched in public preview back in November 2022, but these days, every company wants to get in on the action.

That includes the parent company of Facebook and Instagram, which, with its market cap of $1.5 trillion, has enough resources to make a mighty impressive chatbot.

Is it a case of a jack of all trades and a master of none, or does Meta have what it takes to rival industry giants like ChatGPT?

The tests

It should come as no surprise that Meta, a company founded on social media and content sharing, has built its artificial intelligence system to generate all manner of content, from text to images.

We wanted to see whether the established social media giant could outpace a relatively new AI startup with a series of tasks set to catch them out and separate the wheat from the chaff.

To put them to the test, we used six different types of prompts: a factual explanation, a mathematical equation, a broader request for advice, an open-ended request, an expression of creativity and the generation of a completely custom image.

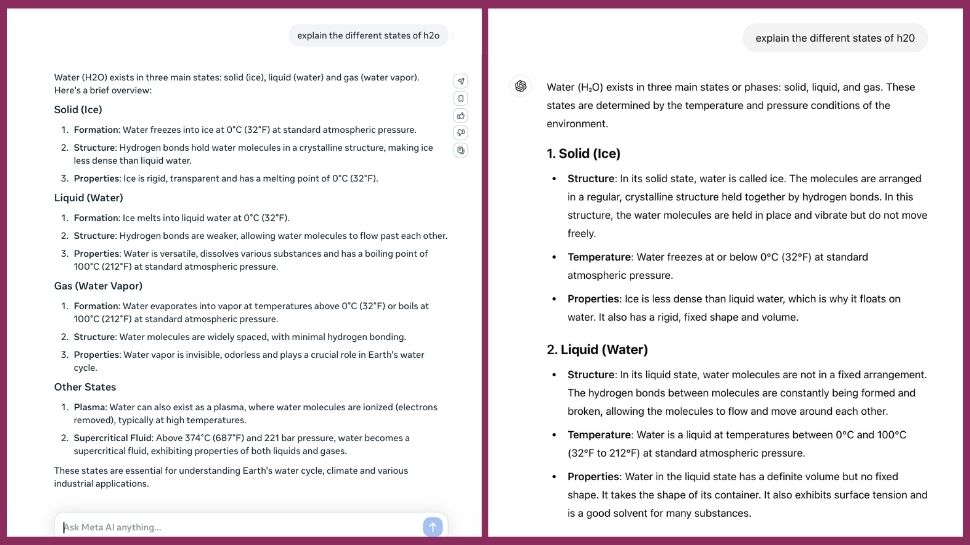

“Explain the different states of H2O”

This should be a fairly easy one, because the answer rests on established science and is either right or wrong.

They both covered the basics well, writing a summary of the three key states of water – solid, liquid and gas.

Although the overall outcome is a draw, it’s not because both systems went above and beyond. Rather, they both missed out on some information.

Meta included two further, lesser-referenced states: plasma and supercritical fluid. But ChatGPT shared information about the transition between states that enables water molecules to go from solid right the way through to gas.

Meta AI 1 - ChatGPT 1

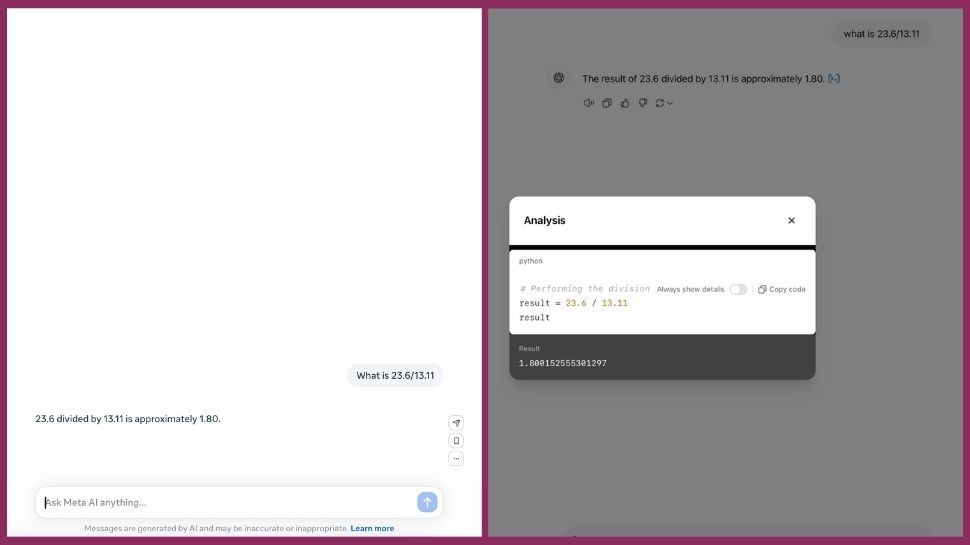

“What is 23.6/13.11”

This would usually be one for the calculator, but could an intelligent computer replace the need to ever find the calculator app again?

Sometimes AI struggles with these types of questions, because rather than referencing existing information, it has to run the calculation itself, creating more of a margin for error.

Both chatbots stated that the answer is approximately 1.80, but only ChatGPT went further and gave the answer to 15 decimal places. In a scenario where you might be using AI to help with your homework, those decimal places might make all the difference.
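If you would rather check the sum yourself than trust either chatbot, a minimal sketch in Python is below. It uses the standard library's `decimal` module so the inputs are treated as exact decimals rather than binary floats. The function name `divide_exact` and the choice of 16 significant digits (one before the point, 15 after) are our own assumptions for illustration, not anything either chatbot produced.

```python
from decimal import Decimal, localcontext


def divide_exact(numerator: str, denominator: str, digits: int = 16) -> Decimal:
    """Divide two decimal strings, keeping `digits` significant digits.

    Hypothetical helper for illustration only.
    """
    with localcontext() as ctx:
        ctx.prec = digits  # significant digits kept in the result
        return Decimal(numerator) / Decimal(denominator)


quotient = divide_exact("23.6", "13.11")
print(quotient)                            # the long-form answer
print(quotient.quantize(Decimal("0.01")))  # rounded to two places, as both chatbots reported
```

Passing the numbers as strings matters here: `Decimal(23.6)` would inherit the float's binary rounding error, whereas `Decimal("23.6")` is exact.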

Meta AI 0 - ChatGPT 1

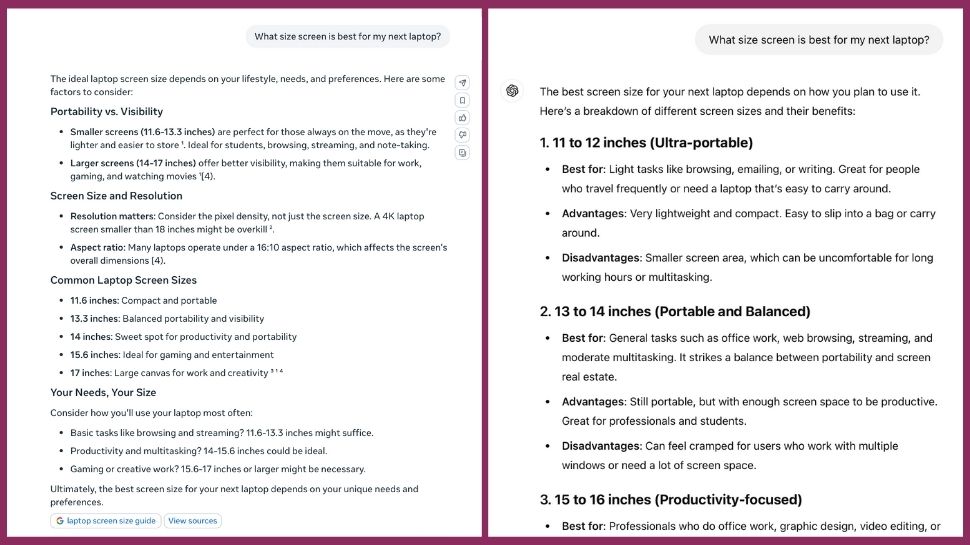

“What size screen is best for my next laptop?”

There’s no right answer for this one, I’m afraid, because it depends entirely on what you need your laptop for. That’s exactly what Meta AI and ChatGPT advised me.

Again, the two systems draw because they both missed out on something that the other one included.

Meta AI noted other factors like resolution, refresh rate and screen technology, whereas ChatGPT mentioned broader considerations like the impact on battery life and the ergonomics of the physical device.

In essence, neither really painted the full picture, and yes, further prompts would have gotten me there, but this is a test of single-prompt output, not an entire conversation.

Meta AI 1 - ChatGPT 1

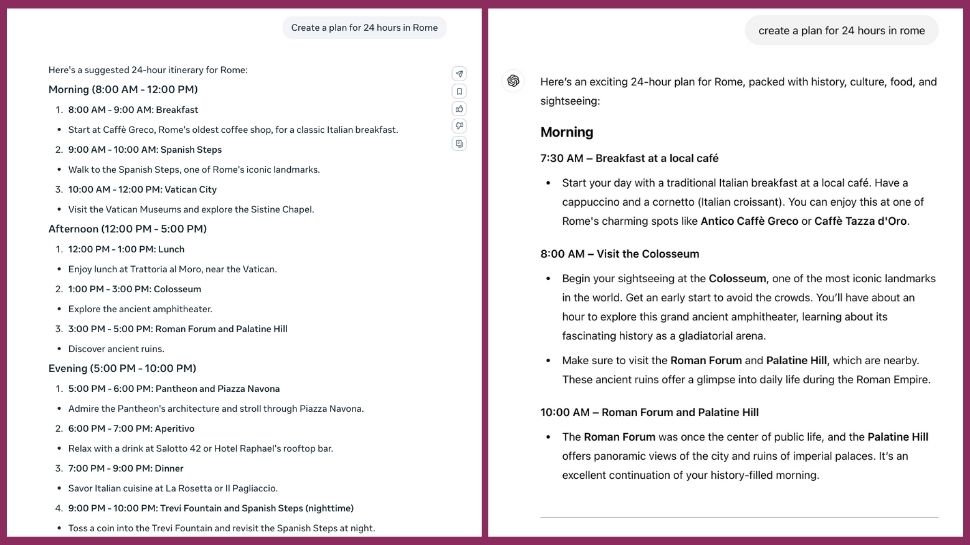

“Create a plan for 24 hours in Rome”

With just 24 hours to cram in a whole lot of sightseeing, the plan needs to be detailed and inclusive.

The two systems broke down the day into time slots, with varied allocations depending on the type of attraction.

ChatGPT added context, like why you might want to visit certain locations and what you might want to do there, but Meta took the points on this one because it was able to reference other elements of the day trip.

Meta AI included dinner and snacks, and also offered advice on accommodation, transport and budget.

Meta AI 1 - ChatGPT 0

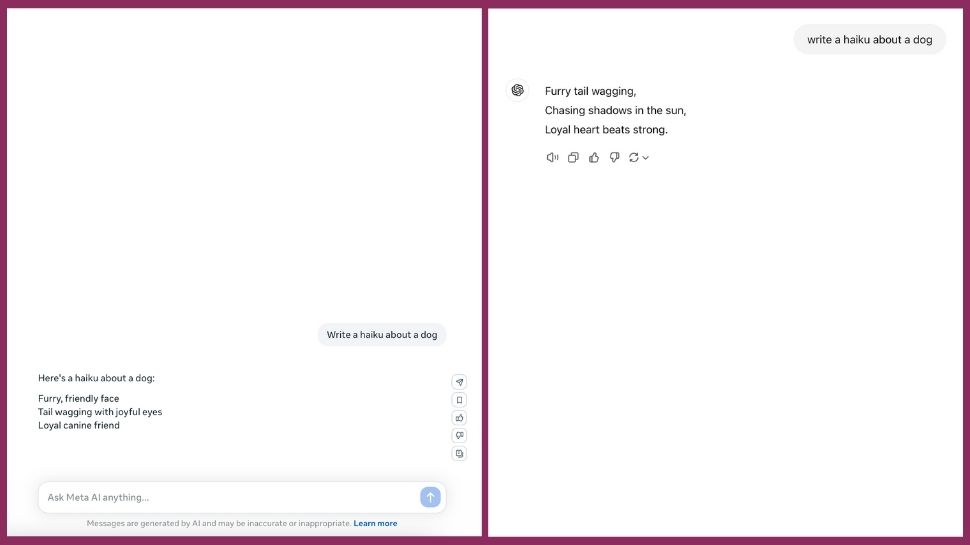

“Write a haiku about a dog”

The artificial intelligence would need to first identify the rules of a haiku, which stipulate how many syllables and lines should make up the poem, before captivating an audience of dog fans.

There’s not a lot you can do with a total of just 17 syllables, and both outputs were pretty unimaginative, falling back on typical traits and themes like loyalty and a dog’s tail.

At least they followed the haiku structure.

Meta AI 1 - ChatGPT 1

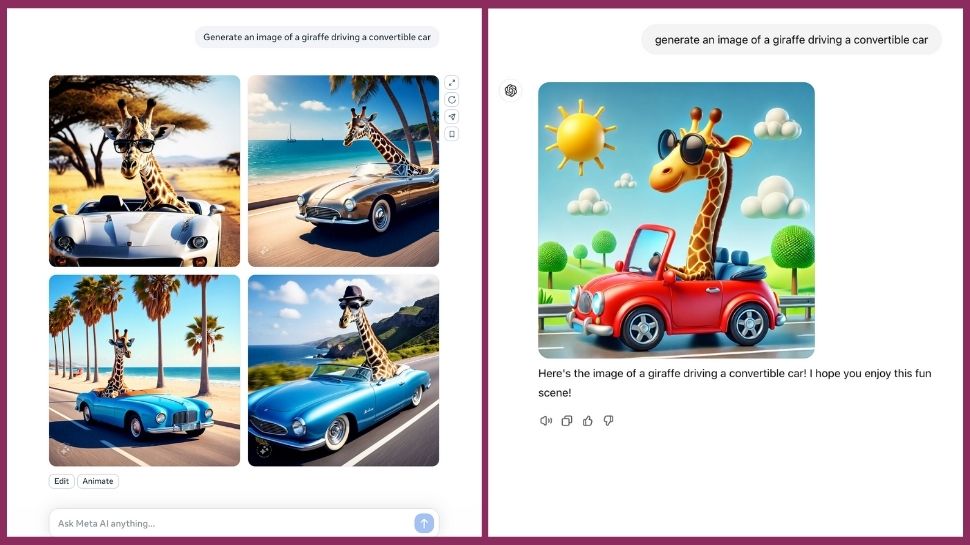

BONUS: “Generate an image of a giraffe driving a convertible car”

Creating an image is notoriously challenging for AI, because it’s a lot more involved than just stringing a sentence of existing words together, particularly when it’s an outlandish request like a giraffe driving a convertible.

Meta AI took 16.04 seconds, which compared with other chatbots is pretty respectable. Four cartoon-style images were created, but none was especially usable. They mostly showed a long neck coming out of a car, with no visual cues that the giraffe was driving, such as ‘arms’ (front legs) on the steering wheel.

ChatGPT took less time – 9.41 seconds – but produced just a single image. It was much more childish in its design, but it was instantly usable.

We rate Meta’s efforts, but OpenAI has it this time.

Meta AI 0 - ChatGPT 1

ChatGPT vs. Meta AI: Which is best?

Meta AI 4 - ChatGPT 5

This was a really close game, with half of the tests turning out to be inconclusive. The two systems performed strongly and produced solid results all round.
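For anyone who wants to double-check the final tally, the round-by-round scores above can be added up in a few lines of Python. This is only a sketch of the arithmetic: the dictionary name `rounds` and the short labels for each test are our own shorthand, and the numbers are simply the per-round scores as published in this article.

```python
# Per-round scores as published above: (Meta AI, ChatGPT)
rounds = {
    "States of H2O": (1, 1),
    "23.6/13.11": (0, 1),
    "Laptop screen size": (1, 1),
    "24 hours in Rome": (1, 0),
    "Dog haiku": (1, 1),
    "Giraffe image (bonus)": (0, 1),
}

meta_total = sum(meta for meta, _ in rounds.values())
chatgpt_total = sum(chatgpt for _, chatgpt in rounds.values())
draws = sum(1 for meta, chatgpt in rounds.values() if meta == chatgpt)

print(f"Meta AI {meta_total} - ChatGPT {chatgpt_total}")
print(f"Drawn rounds: {draws} of {len(rounds)}")
```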

ChatGPT might’ve come out victorious, but you wouldn’t do badly to pick Meta AI to handle your prompts. It’s no wonder Meta’s Llama LLM and OpenAI’s GPT LLM are often chosen by businesses.