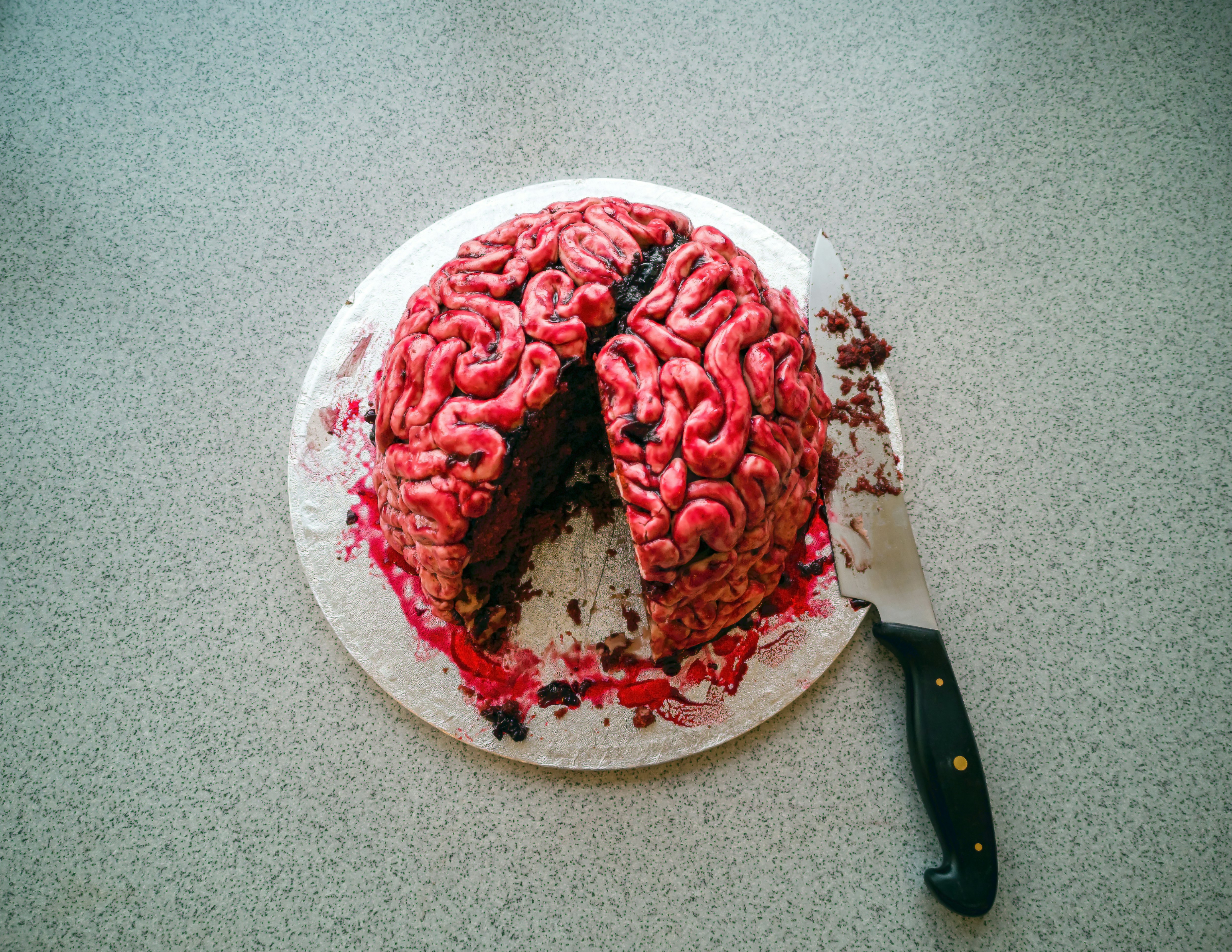

When you spy a delicious slice of cake, the jump from sight to hunger may seem quite simple. But inside your head, it takes an electrifying orchestra of information, zipping from neuron to neuron and from one brain region to the next, to land a moist piece of red velvet straight in your mouth.

And while we have some idea of the neural processes behind how our brains respond to various foods, we don’t have a complete roadmap of all the pit stops. But thanks to some serendipitous sleuthing, scientists at the Massachusetts Institute of Technology have discovered a special group of neurons that light up whenever you lock eyes on a culinary delight.

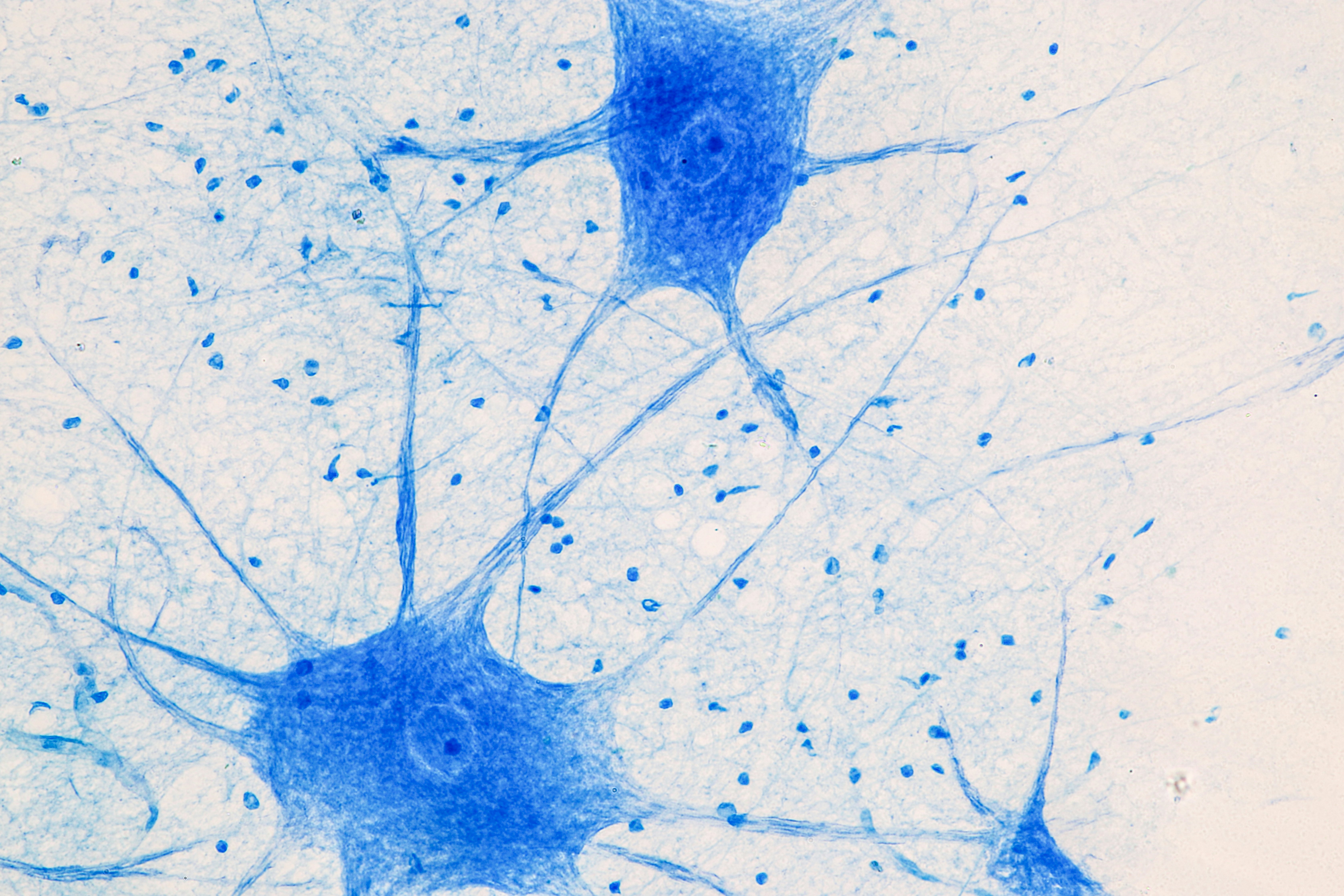

As described in a paper published last month in the journal Current Biology, these neurons are located in an area called the ventral visual stream, a neural pathway that carries information from the brain’s visual cortex to the temporal lobe, which sits just behind your ears. It’s a curious locale for food-sensitive neurons to be, but they may have evolved as a response to food’s relationship with culture and social behavior, Nancy Kanwisher, the paper’s senior author and Walter A. Rosenblith Professor of Cognitive Neuroscience at MIT, explains to Inverse.

What the researchers did — Kanwisher is best known for her work in the 1990s discovering the fusiform face area, a small region in the inferior temporal cortex that responds more strongly when a person views faces than when they view any other sort of image. Since then, her lab has set out to better understand and characterize the ins and outs of visual perception.

But sometimes, to find something in the brain, you don’t intentionally go looking for it. This is the tack Kanwisher and Meenakshi Khosla, the paper’s first author and a postdoctoral fellow in Kanwisher’s lab, took when combing through the Natural Scenes Dataset (NSD), an enormous public collection of 10,000 functional whole-brain scans, to uncover the secrets of the ventral visual stream.

“There was no food-specific hypothesis we had prior to exploring this dataset,” Khosla tells Inverse. “We were just trying to see what kinds of selectivities popped up when we explore the dataset in a data-driven way.”

To do that, they used some math and computing to break down all the brain scans into a vast table, then looked for the voxels (the tiniest unit of an MRI scan, akin to a 3D pixel of brain tissue, says Kanwisher) whose neurons responded most to specific images like a house, a puppy, or, in this case, food. (In NSD, participants were shown over 70,000 distinct, color natural scenes taken from the Microsoft Common Objects in Context dataset.)
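For the curious, here is a toy sketch of what a voxel-by-image table can look like and how you might ask which kind of image each voxel prefers. Everything in it is an assumption made for illustration: the voxel names, the numbers, and the category labels are invented, and the labeled averaging shown here is a simplification, since the researchers describe their own analysis as data-driven and the article does not spell out their exact method.

```python
from statistics import mean

# Hypothetical toy data: each row is one voxel, each column is one image.
# The values are invented for illustration and are not taken from NSD.
image_labels = ["face", "face", "place", "place", "food", "food"]
responses = {
    "voxel_a": [2.1, 1.9, 0.3, 0.2, 0.4, 0.5],
    "voxel_b": [0.2, 0.3, 1.8, 2.0, 0.1, 0.4],
    "voxel_c": [0.3, 0.4, 0.2, 0.5, 2.2, 1.8],
}


def mean_response_by_category(row, labels):
    """Average one voxel's responses within each image category."""
    grouped = {}
    for value, label in zip(row, labels):
        grouped.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in grouped.items()}


def preferred_category(row, labels):
    """Return the category with the highest mean response for this voxel."""
    averages = mean_response_by_category(row, labels)
    return max(averages, key=averages.get)


# Map every voxel in the toy table to the category it responds to most.
preferences = {
    voxel: preferred_category(row, image_labels)
    for voxel, row in responses.items()
}
print(preferences)
```

In this made-up table, one voxel favors faces, one favors places, and one favors food. The real dataset involves vastly more voxels and images, which is why the team leaned on heavier math than a simple average.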

What they found — Within the ventral visual stream, the MIT researchers found four neural populations that corresponded to previously identified clusters that ordinarily respond to faces, places, bodies, and words. But then they noticed a fifth group: Neurons gleaming with gusto whenever images of food were involved. This curious finding was completely unexpected and left the researchers in disbelief.

“When we first saw it, we were like, ‘Oh, that’s really cute,’” says Kanwisher. “[We thought] those images are more colorful, they’re on roundish plates, so maybe it’s really color that we’re seeing selectivity for [or] the curviness of the plates or something about the texture.”

However, as the researchers reran the analysis over the next several months, these neurons did appear to be very real and very much associated with only food. The researchers dubbed this food-specific population the ventral food component, or VFC. It seems to span two clusters of neurons located on either side of the fusiform face area Kanwisher had previously discovered.

“I think we always thought of food as a conceptual category and not as strictly visually homogenous,” says Khosla. “That’s why we were so puzzled by it.”

To go beyond the NSD and see if their findings still held true for other images, the team created a predictive model with some machine learning. The AI, acting as a sort of human brain understudy, was fed over a million images, and it responded strongly to 1,000 of those pictures, all of which showed food (even when the images were black and white).

Next, the AI was shown matched food and non-food pairs that shared similar shapes, colors, and textures, with the only difference being edibility. Examples included a banana and a crescent moon, M&Ms and buttons, and, disturbingly, fried chicken and cute puppies.

“You see here, even though these things are matched visually, [AI] predicts a higher response to the food than non-food,” says Kanwisher. “It enables us to do a kind of proxy experiment without scanning subjects. We have to validate with real data, but it’s a nice way to kind of make a guess in advance.”

Based on the brain scan data, the researchers also found that in some subjects the VFC lit up more strongly for images of prepared food, like a slice of pizza, than for raw fruits and veggies (which likely tracks with toddlers and adults everywhere), and more strongly for warm-colored foods than for cool-toned ones.

Why it matters — It’s long been observed that color seems to be a deciding factor in food selectivity and perception, as studies from the 1950s on colorblindness and food, as well as behavioral studies in adults, children, and monkeys, suggest. What remains is sussing out all the factors involved in food perception, the parts of the brain involved in learning it, how it evolved in humans, and whether other animals share these foodie neurons.

“My lab and others have shown that face and place selectivities develop very early like you can detect those selectivities in six-month-old infants,” says Kanwisher. “So now this raises the question of how about food? Does that arise equally early? One strong possibility is that it does not” because figuring out whether something is edible is learned, and there’s a cultural component to it as well.

What’s next — Kanwisher and her colleagues hope to figure out more about how the VFC specializes and guides us in what to eat. But they first need to directly confirm evidence for this region in live subjects rather than infer from data and algorithms.

“For now, we don’t know whether [the neural basis for the VFC] actually exists in single neurons or whether there are just some groups or populations of neurons that exhibit this food selective behavior,” says Khosla. “That’s an important question we want to address with future studies” either in humans or monkey models.

And if you’re a fan of Is It Cake?, you’ll be pleased to know the MIT team is gearing up to study what goes on in your noggin when you’re watching a knife slice through a human-looking hand. Because that confectionery dissonance sure is a doozy.