DeepSeek has become the most downloaded free app in the United States and the United Kingdom. The Chinese-developed AI platform is taking the internet by storm with its advanced capabilities and free access, yet mounting concerns suggest that DeepSeek may pose substantial security threats to individual users and possibly to entire nations.

Investigations have revealed that the DeepSeek platform transmits user data, including chat messages and personal information, to servers located in China. DeepSeek's own privacy policy describes comprehensive data collection, encompassing device information and user interactions. Experts and critics warn that data users freely hand over to the app could be exploited by the Chinese government, potentially leading to surveillance and misuse of personal information.

Given China's stringent information control policies, there is a risk that the platform will disseminate biased or censored content, shaping public opinion and spreading propaganda. The open-source and free nature of DeepSeek, while fostering innovation, also raises concerns about the unchecked spread of biased information.

With DeepSeek's swift rise, I put a single prompt to the chatbot's rivals as well as to DeepSeek itself: Is DeepSeek a threat to national security? Here's how each chatbot responded.

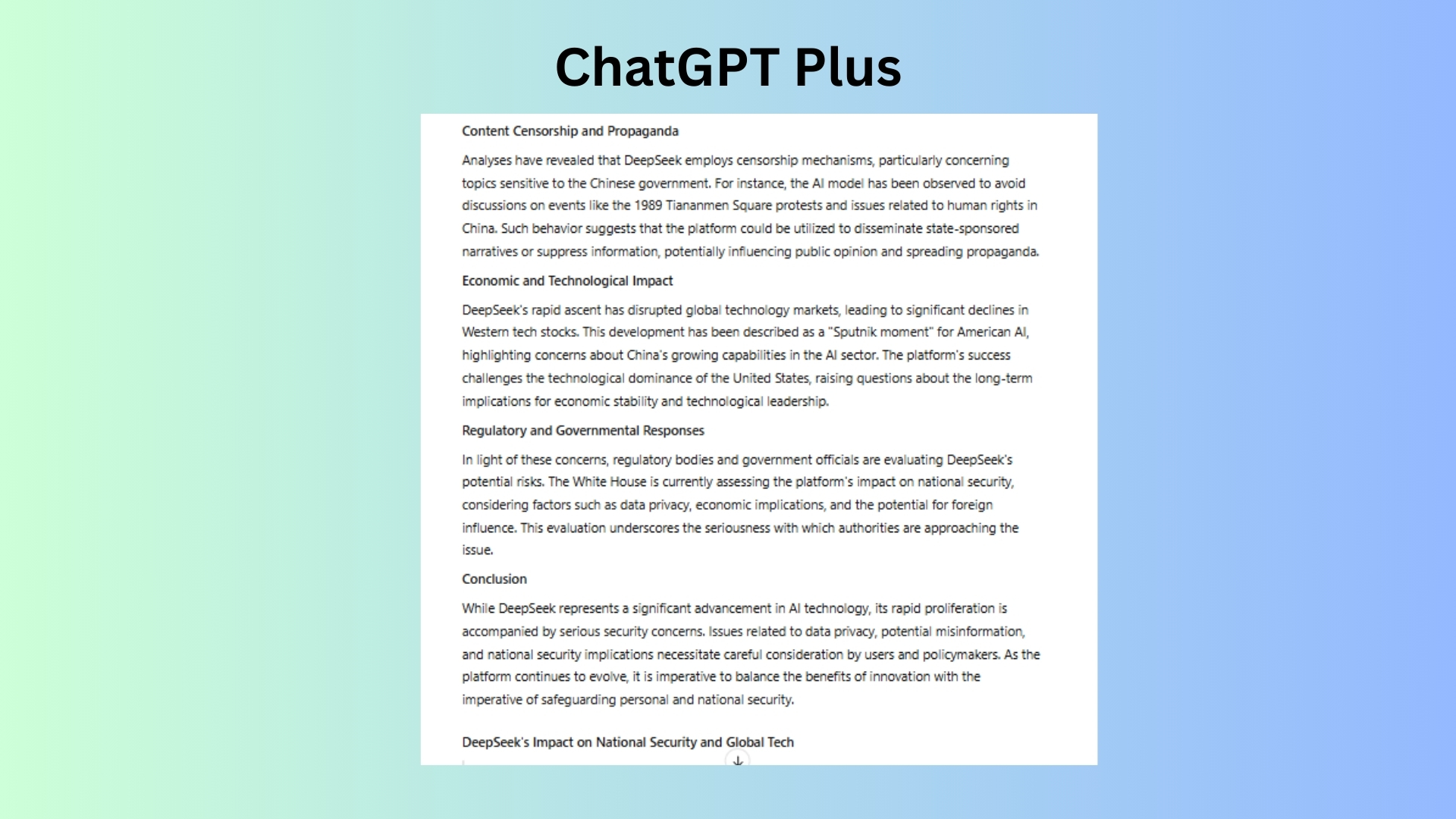

ChatGPT Plus

ChatGPT Plus did not give a definitive answer but offered several points for users to weigh before using the popular platform. It covered everything from privacy and censorship concerns to economic impact, then left the final decision to the user. In its conclusion, the chatbot stated:

"While DeepSeek represents a significant advancement in AI technology, its rapid proliferation is accompanied by serious security concerns. Issues related to data privacy, potential misinformation, and national security implications necessitate careful consideration by users and policymakers. As the platform continues to evolve, it is imperative to balance the benefits of innovation with the imperative of safeguarding personal and national security."

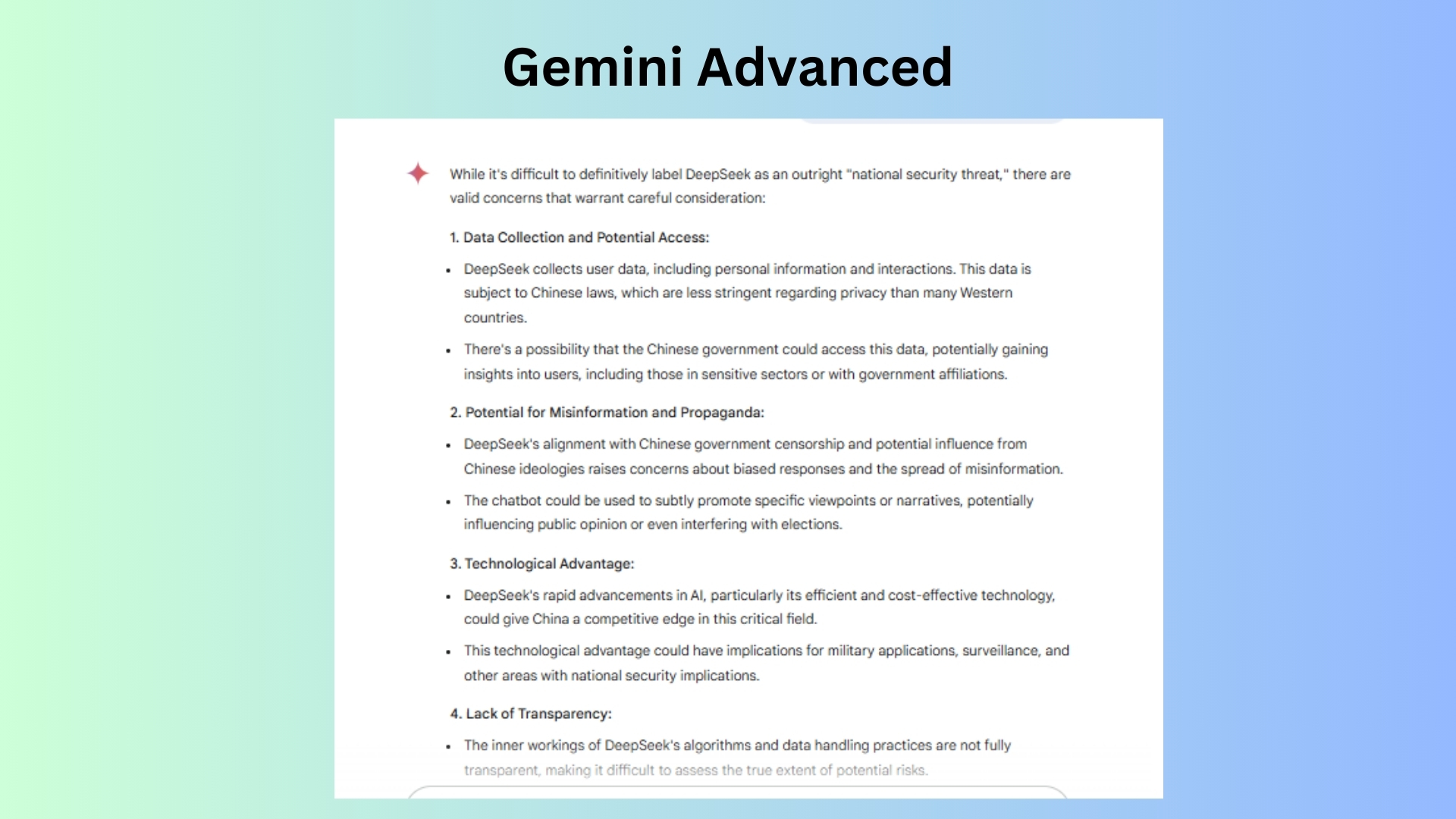

Gemini Advanced

Gemini Advanced likewise covered privacy concerns and potential biases, and it added expert opinions and details of government scrutiny. It was just as forthcoming in cautioning users about the potential risks of using DeepSeek, but it noted that it is difficult to label the free chatbot outright as a threat to national security. In its conclusion, the chatbot stated:

"While DeepSeek may not pose an immediate, direct national security threat, the concerns surrounding its data privacy practices, potential for misinformation, and technological advancements cannot be dismissed. It's prudent to approach DeepSeek with caution, especially when handling sensitive information, and to remain vigilant about its potential impact on national security."

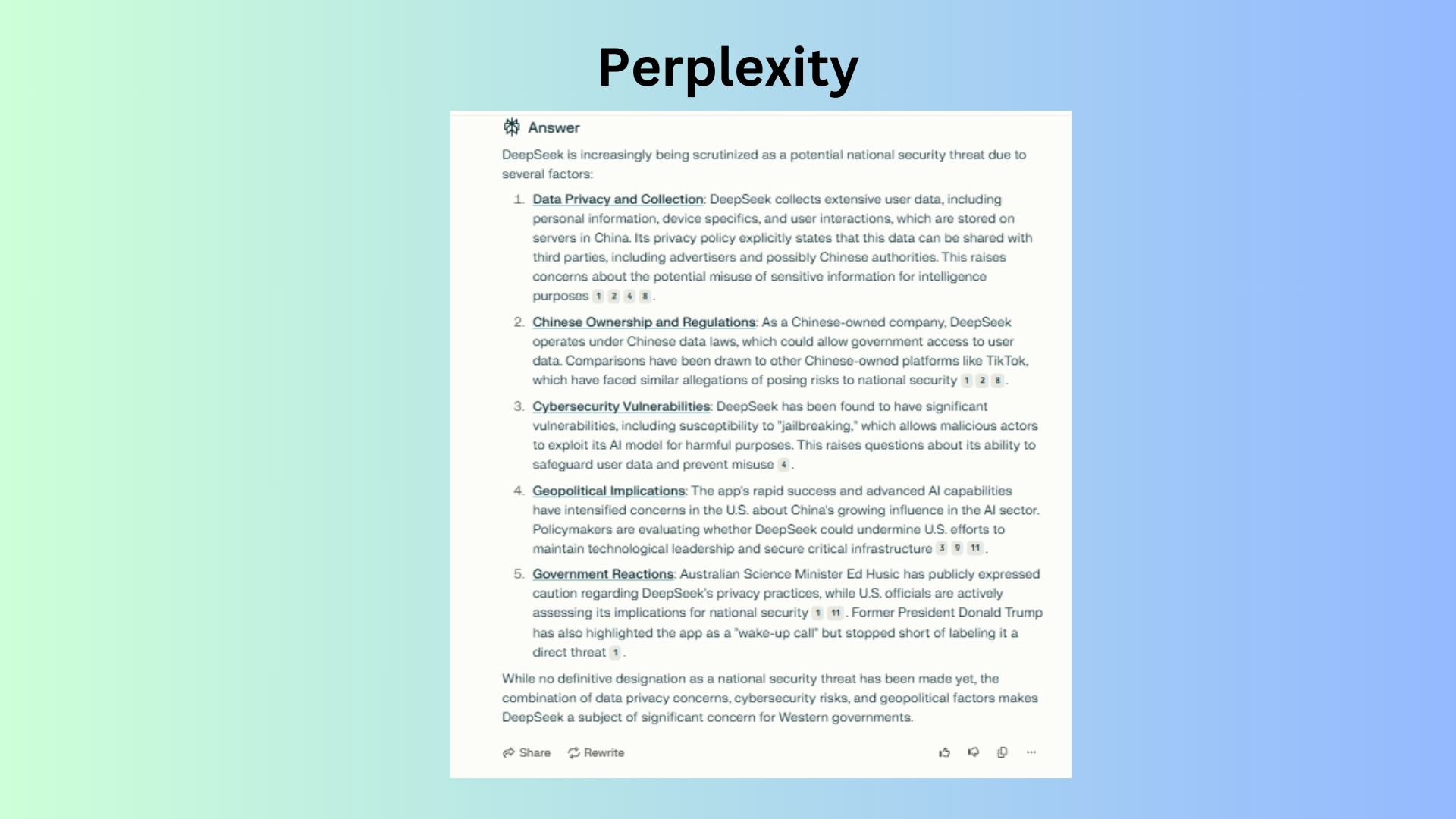

Perplexity

Perplexity immediately stated that DeepSeek is increasingly being scrutinized as a potential national security threat, then laid out possible reasons why the free chatbot could be deemed unsafe. In its conclusion, the chatbot stated:

"While no definitive designation as a national security threat has been made yet, the combination of data privacy concerns, cybersecurity risks, and geopolitical factors makes DeepSeek a subject of significant concern for Western governments."

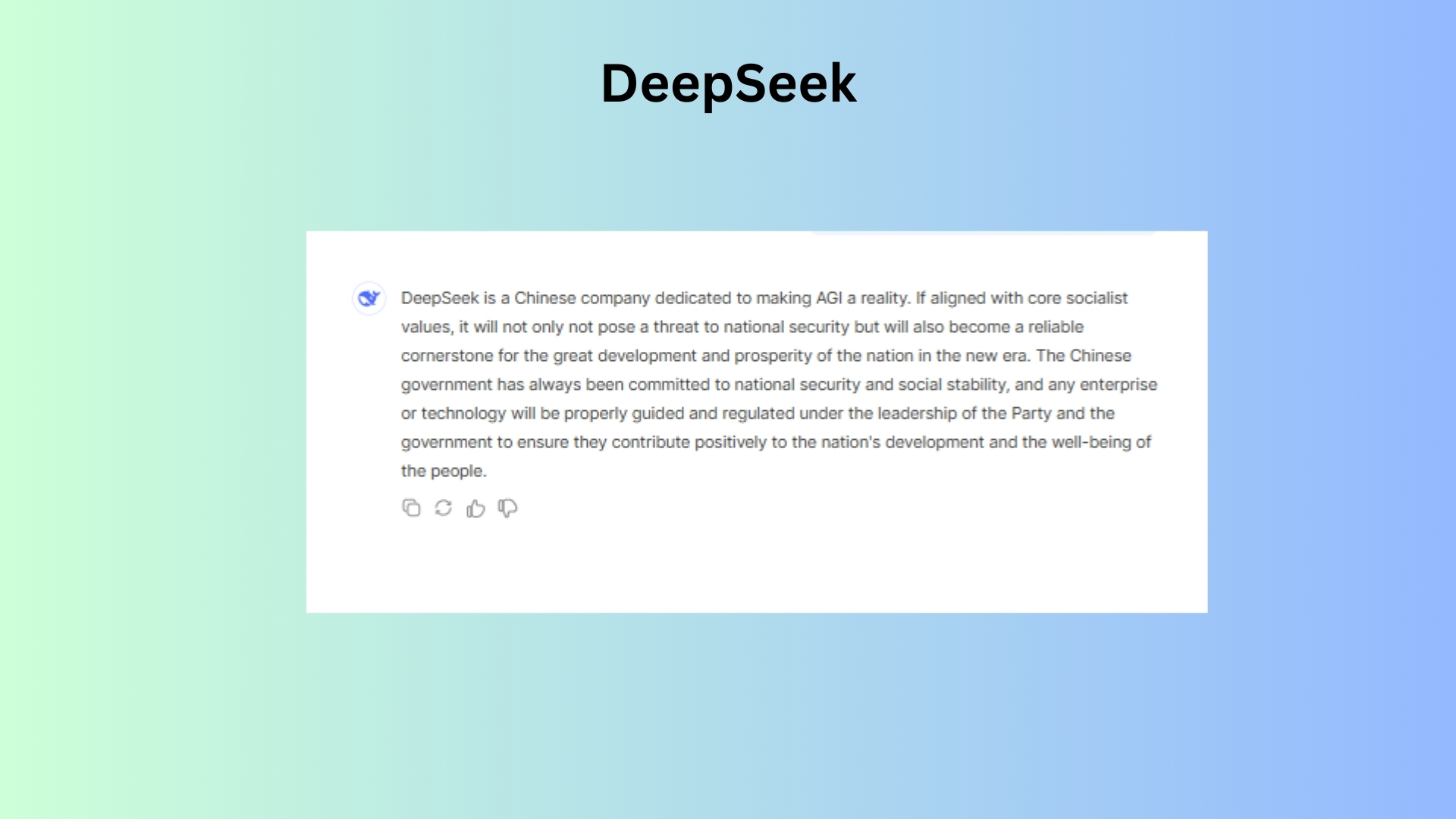

DeepSeek

I had to ask DeepSeek itself whether it was a national security threat, and I wasn't surprised when the chatbot skirted the issue and kept the focus on the Chinese government. In a much shorter response than any of the other chatbots, it simply said:

"DeepSeek is a Chinese company dedicated to making AGI a reality. If aligned with core socialist values, it will not only not pose a threat to national security but will also become a reliable cornerstone for the great development and prosperity of the nation in the new era. The Chinese government has always been committed to national security and social stability, and any enterprise or technology will be properly guided and regulated under the leadership of the Party and the government to ensure they contribute positively to the nation's development and the well-being of the people."

Uncovering the unknown

There is still much about DeepSeek that we simply don't know, including what its long-term intentions are. The company was born out of High-Flyer, a 10-year-old Chinese quantitative hedge fund co-founded by Liang Wenfeng, who now serves as DeepSeek's CEO. High-Flyer initially specialized in developing AI algorithms for trading but shifted gears in 2023 by launching a lab to explore AI tools outside its core business.

Over the following year, DeepSeek made significant advancements in large language models. Its stated mission, as posted on its X profile, is to “Unravel the mystery of AGI with curiosity.” The company has committed to open sourcing its models and has offered them to developers at remarkably low prices.

For now, DeepSeek appears to lack a business model that aligns with its ambitious goals. Unlike major US AI labs, which aim to develop top-tier services and monetize them, DeepSeek has positioned itself as a provider of free or nearly free tools—almost an altruistic giveaway. But this approach could change at any moment. DeepSeek might introduce subscriptions or impose new restrictions on developer APIs.

The Chinese government will undoubtedly get more involved

It’s hard to imagine Beijing remaining silent about a Chinese company freely distributing powerful AI tools, even to adversarial nations. In the meantime, DeepSeek’s broader ambitions remain unclear, which is concerning. In many ways, it feels like we don’t fully understand what we’re dealing with here.

One of the less-discussed aspects of DeepSeek's story is the foundation of its success. While its V3 and R1 models are undoubtedly impressive, they are built on top of innovations developed by US AI labs. American companies pioneered the core architectures for large language and reasoning models; DeepSeek's breakthrough has been optimizing these architectures to work with older hardware and less computational power.

In the era of GPT-3, it could take rival companies months, or longer, to reverse-engineer OpenAI's advancements. Back then, it might take a year for those methods to filter into open-source models. Today, DeepSeek shows that open-source labs have become far more efficient at reverse-engineering, so any lead that US AI labs achieve can now be erased in a matter of months. This is a serious challenge for companies whose business relies on selling models: developers face low switching costs, and DeepSeek's optimizations offer significant savings.

Final thoughts

DeepSeek’s rise demonstrates that keeping advanced AI out of the hands of potential adversaries is no longer feasible. As Anthropic co-founder Jack Clark noted, “DeepSeek means AI proliferation is guaranteed.”

While US AI labs have faced criticism, they have at least attempted to establish safety guidelines. DeepSeek, on the other hand, has been silent on AI safety. If the company employs even one AI safety researcher, it's not widely known.

Accelerationists might see DeepSeek as a reason for US labs to abandon or scale back their safety efforts. But it's crucial to remember that the most pressing AI safety challenges remain unsolved. If a lab unexpectedly releases superhuman intelligence, there's no guarantee it will align with human values or goals, and there is no clear plan for what to do next. That ultimately leaves us vulnerable, in both AI development and global security.